Many Common Problems are NP-Hard, and Why that Matters for AI

post by Andrew Keenan Richardson (qemqemqem) · 2025-03-26T21:51:17.960Z · LW · GW · 9 commentsContents

Background: P vs NP Surprisingly, there are many NP-Hard Problems in Your Daily Life Heuristics and Partial Solutions Easy Problems “Reasoning” Hard Problems are All Around Us Appendix — More Examples None 9 comments

Crosspost from my blog: https://mechanisticmind.substack.com/p/many-common-problems-are-np-hard

What problems will be left for superintelligence? AI models are quickly getting better, but certain important and very ordinary challenges are surprisingly, stubbornly hard. While large language models have made some previously difficult computational tasks trivial, a fundamental class of problems that challenge classical computers will likely remain inherently difficult even for advanced AI.

No matter how smart AI gets, there are some problems that defy efficient solutions. Even superhuman intelligences will struggle with them.

Formally, these challenges are called NP-hard, meaning they fundamentally resist efficient solutions. What makes this significant is how many familiar, everyday situations fall into this category - from planning vacations to organizing events.

When humans tackle these challenges, we typically dedicate some effort, then accept a 'good enough' outcome. Additional contemplation might improve our solution, but we can never be certain we've found the optimal answer - unlike other tasks where perfect solutions are verifiably achievable.

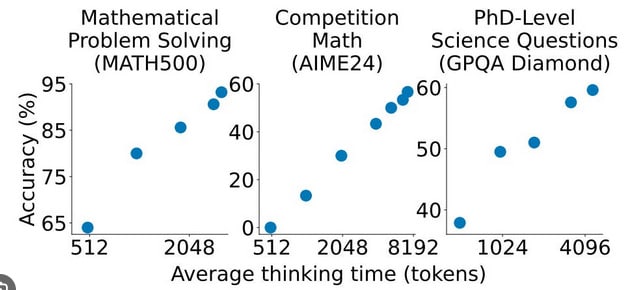

Fortunately, effective heuristics (like greedy algorithms) often yield good-enough results. LLMs excel at applying these heuristics, allowing them to perform reasonably well on such problems. However, heuristics have fundamental limits; finding truly optimal solutions requires exponentially more computational effort. That’s why we’re seeing inference-time compute scaling:

It pays to think. Spending more tokens on thinking yields better answers.

But notice that the x-axis is a log scale! A user can only double compute so many times before they run out of money.

This distinction between problems has a lot of significance for designing AI systems. Notice what you need to solve, and you can have clearer expectations and better understanding of where to allocate resources.

Background: P vs NP

For readers already familiar with computational complexity, you'll recall that solving NP-hard problems requires computation time that grows exponentially with size. Unlike easier problems that yield to clever optimizations, these are fundamentally difficult.

Unless P=NP (considered highly unlikely by most computer scientists), optimal solutions to NP-hard tasks will always be computationally intensive. This isn't merely an engineering limitation but stems from their mathematical structure.

NP-hard problems have no known efficient algorithms, strong evidence suggests none exist, and their computational requirements grow astronomically with size. This exponential growth means even classical computers might require years to solve certain instances, with large language models adding significant computational overhead.

But, while it’s very hard to get a perfect solution, it’s often possible to get a good-enough solution quickly. Unfortunately, when your bar for “good enough” gets higher, the amount of work required can grow exponentially, too. Sometimes it’s fast and easy to get a decent solution, but it requires incredible effort to get past that.

Surprisingly, there are many NP-Hard Problems in Your Daily Life

Intuitively it might seem like the hardest problems would only come up in specialized work like rocketry, but no, they commonly appear in everyday life, as in these examples:

Meeting Schedules: If you’ve ever tried organizing a meeting for a dozen people, then you've dealt with the classic Scheduling Problem. During planning, each constraint narrows down the space of possibilities — block off Tuesday afternoon for the CFO, and suddenly the VP of Operations can't attend, forcing you to reschedule, which then conflicts with the CEO's availability. This same mathematical challenge appears when planning family reunions or coordinating shift work. Tradeoffs become necessary, and the final schedule often requires human judgment calls.

System Architecture: Designing a software architecture that balances scalability, maintainability, and performance tackles a complex Graph Partitioning Problem. Each decision about how to divide functionality into services, modules, or classes creates cascading effects throughout the system. What makes this an NP-hard challenge is that each component interacts with others in ways that can't be evaluated in isolation—a microservice architecture that improves deployment flexibility might increase network latency, while a monolithic approach that optimizes performance might reduce maintainability. The dependencies between components grow exponentially with system size, which explains why architecture patterns emerge as approximation strategies rather than optimal solutions, and why even AI-powered design tools can only suggest approaches rather than compute the mathematically perfect architecture.

Wedding Seating: The wedding seating chart that gave you headaches is a complex Graph Partitioning Problem. What makes this uniquely difficult is that it combines both hard constraints (table size limits, family groupings) with soft constraints (maximizing conversation potential, managing interpersonal dynamics).

(more examples in the appendix below)

Heuristics and Partial Solutions

In the meeting scheduling problem above, we can use a greedy algorithm to find a good solution. First schedule a time that work with the hardest-to-schedule person, then a time with the next person, and so on. There are other good heuristics too, such as constraint propagation. These approaches often work in practice, but not always.

Sometimes you’ll apply the greedy algorithm to scheduling and eventually run into a blocker, and you’ll need to backtrack. Sometimes the scheduling will be especially thorny, such as if people have packed schedules, or you’re scheduling for a lot of people. In those cases, you’ll likely have to do a lot of backtracking indeed.

In fact in some cases, it won’t even be clear whether a solution is possible. Say you’re the registrar for a college, and you need to schedule classes so that every student can make it to every class they’re signed up for with no conflicts. You might stay up all night banging your head against partial solutions before you finally find a way to make it all work.

But sometimes it’s not clear whether any improvement can be found. Let’s say you’re planning errands around town, and you need to 8 things all over the city in the afternoon. That’s the traveling salesman problem. You might get a route in Google Maps that requires 3 hours of driving. Can you do better? It’s NP-hard to know if it’s even possible to improve — it requires exponential time!

Easy Problems

Not all problems are like this! Some can be solved relatively quickly, in polynomial time. For example, alphabetizing books on a shelf, creating a household budget, or assigning tasks to fungible workers are all easy tasks in that way.

These are requests that we expect LLMs to do a generally pretty good job on! You can give ChatGPT a list of 200 books and ask it to alphabetize them, for example, and it will succeed. That’s directly a consequence of Big-O computational analysis. Sorting a list requires O(n*logn) compute, but a transformer model spends O(n^3) compute, which is larger.

“Reasoning”

Fundamentally, when we talk about human reasoning, we’re talking about our ability to solve these hard problems. When things are easy — when they can be solved quickly — then they just don’t require a person to sit and think for a long time. But there’s this whole class of problems where we can show theoretically that if you want to get really good results you need to spend a lot of processing power on them — an exponential amount.

Human life is full of these difficulties, and we care a lot about the results. We care so much that we aren’t satisfied with the first solution we think of, we want to keep thinking. If you’re planning carefully for a trip, you don’t just throw stuff in a backpack until it gets too heavy. No, you stop and think about your options. If you’re writing a computer program, you don’t add features one at a time until it’s done, you have to stop and weigh tradeoffs between speed and accuracy, or customizability and ease-of-use.

Our ability as humans to think through thorny situations with lots of constraints is part of what makes us so smart. And our ability to do that is something that gives us an edge over LLMs, at least for now.

Hard Problems are All Around Us

Life is full of challenges. Not just hard in the colloqial sense, but in a formal mathematical sense. They’re hard in the sense that they require a lot of computing to find good answers — an exponential amount of computing. They require tenacity to keep thinking. They require long memories to guide us to new possibilities to consider.

Right now LLMs can struggle with these tasks. They may come up with a solution, but unless they think for a long time, it’s not possible to believe that their answer is the best one, or even a good one. Reasoning models are getting better, and they’re showing test time compute scaling improvements from thinking. But a linear increase in thinking won’t defeat challenges that require an exponential amount of computing.

There are some problems where you have to expect that an LLM is incapable of giving the best answer. These are quite common, but don’t lose hope, because additional thinking might continue to yield improvements for a long time!

Appendix — More Examples

Packing for Vacation: That struggle to decide which items to fit in your suitcase under the airline's weight limit? You've faced the fundamental Knapsack Problem. You’re trying to maximize the utility of items you bring under constraints on weight and volume. Each item creates a binary choice—pack it or leave it—creating 2^n possible combinations for n items.

Planning Errands: If you’ve ever struggled to find the shortest route for your weekend errands? You've encountered the infamous Traveling Salesman Problem. Each new stop multiplies the complexity factorially. With just 10 destinations, there are over 3 million possible routes to compare. At 15 stops, there are trillions. This explains why navigation apps can optimize routes with a few destinations but resort to approximations when you add more stops.

Exam Schedules: Remember those college final exam schedules with multiple tests on the same day? The registrar faced the challenging Graph Coloring Problem, where courses are vertices, shared students create edges, and time slots are "colors." This problem becomes particularly difficult when resources are constrained—limited rooms, preferred testing windows, and faculty availability all add constraints.

Sudoku: Is actually an NP-complete problem. Interestingly, this puzzle demonstrates the difference in approach between humans and algorithms. Computers might solve Sudoku using backtracking with constraint propagation, a depth-first search technique with pruning. But human solvers use pattern recognition and elimination strategies that often bypass brute-force searching entirely.

9 comments

Comments sorted by top scores.

comment by niplav · 2025-03-27T00:43:38.377Z · LW(p) · GW(p)

See also the counter-arguments by Gwern.

Replies from: osten, qemqemqem↑ comment by Andrew Keenan Richardson (qemqemqem) · 2025-03-27T04:28:34.123Z · LW(p) · GW(p)

I mostly agree with Gwern; they're very right that there are ways around complexity classes through cleverness and solving a different problem, or else by scaling hardware and writing programs more efficiently.

They conclude their essay to say:

at most, they demonstrate that neither humans nor AIs are omnipotent

and I think that's basically what I was trying to get at. Complexity provides a soft limit in some circumstances, and it's helpful to understand which points in the world impose that limit and which don't.

Replies from: Mo Nastri↑ comment by Mo Putera (Mo Nastri) · 2025-03-27T11:58:00.285Z · LW(p) · GW(p)

Just to clarify, your post's bottomline is that AIs won't be omnipotent, and this matters for AI because a lot of common real-life problems are NP-hard, but also that this doesn't really matter (for us?) because there are ways around NP-hardness through cleverness and solving a different problem, or else by scaling hardware and writing programs more efficiently, or (referencing James [LW(p) · GW(p)]) by just finding a good-enough solution instead of an optimal one?

comment by James Diacoumis (james-diacoumis) · 2025-03-27T08:23:30.855Z · LW(p) · GW(p)

I think this post misses a few really crucial points:

1. LLM’s don’t need to solve the knapsack problem. Thinking through the calculation using natural language is certainly not the most efficient way to do this. It just needs to know enough to say “this is the type of problem where I’d need to call a MIP solver” and call it.

2. The MIP solver is not guaranteed to give the most optimal solution but… do we mind? As long as the solution is “good enough” the LLM will be able to pack your luggage.

3. The thing which humans can do which allows us to pack luggage without solving NP hard problems is something like executive decision making. For example, a human will reason “I like both of these items but they don’t both fit, so I’ll either need to make some more space, leave one behind or buy more luggage.” Similarly Claude currently can’t play Pokémon very well because it can’t make good executive decisions about e.g. when to stay in an area to level up Pokémon, when to proceed to the next area, when to go back to revive etc..

↑ comment by Andrew Keenan Richardson (qemqemqem) · 2025-03-27T14:26:31.828Z · LW(p) · GW(p)

Maybe I didn't articulate my point very well. These problems contain a mix of NP-hard compute requirements and subjective judgements.

Packing is sometimes a matter of listing everything in a spreadsheet and then executing a simple algorithm on it, but sometimes the spreadsheet is difficult to fully specify.

Playing Pokemon well does not strike me as an NP-hard problem. It contains pathfinding, for which there are efficient solutions, and then mostly it is well solved with a greedy approach.

Replies from: james-diacoumis↑ comment by James Diacoumis (james-diacoumis) · 2025-03-27T20:43:27.400Z · LW(p) · GW(p)

If I understand your position - you’re essentially specifying an upper bound for the types of problems future AI systems could possibly solve. No amount of intelligence will break through the NP-hard requirements of computing power.

I agree with that point, and it’s worth emphasising but I think you’re potentially overestimating how much of a practical limit this upper bound will affect generally intelligent systems. Practical AI capabilities will continue to improve substantially in ways that matter for real-world problems, even if the optimal solution for these problems is computationally intractable.

Packing is sometimes a matter of listing everything in a spreadsheet and then executing a simple algorithm on it, but sometimes the spreadsheet is difficult to fully specify.

I agree that problem specification is hard, but it’s not NP-hard - humans do it all the time. This is the type of problem I’d expect future AI systems to be really good at. You seem to be implying that to pack a bag for a holiday we need to write a big spreadsheet with the weight and utility of each item and solve the problem optimally but no-one does this. In practice, we start packing the bag and make a few executive decisions along the way about which items need to be left out. It’s slightly suboptimal but much more computationally efficient.

Playing Pokemon well does not strike me as an NP-hard problem. It contains pathfinding, for which there are efficient solutions, and then mostly it is well solved with a greedy approach.

I agree that Pokémon is probably not NP-hard, this is exactly the point. The reason Pokemon is hard is because it requires a lot of executive decisions to be made.

Humans don’t beat Pokémon by laying out all possible routes and applying a pathfinding algorithm, they start going through the game and make adjustments to their approach as new information comes in. This is the type of problem I’d expect future LLM’s to be really good at (much better than humans.)

comment by osten · 2025-03-27T07:31:02.170Z · LW(p) · GW(p)

NP-hard problems have no known efficient algorithms, strong evidence suggests none exist

Can you back up this claim? I find the evidence I have seen pretty weak in a mathematical sense.

Replies from: qemqemqem↑ comment by Andrew Keenan Richardson (qemqemqem) · 2025-03-27T14:31:57.748Z · LW(p) · GW(p)

That's outside the scope of this post, and also it's an open research question. I agree with you mathematically that there's considerable uncertainty. I used the word "evidence" to simply mean that we haven't found general polynomial time algorithms yet.