We don't want to post again "This might be the last AI Safety Camp"

post by Remmelt (remmelt-ellen), Linda Linsefors, Robert Kralisch (nonmali-1) · 2025-01-21T12:03:33.171Z · 17 comments

This is a link post for https://manifund.org/projects/11th-edition-of-ai-safety-camp


We still need more funding to be able to run another edition. Our fundraiser has raised $6k so far, and it will end on February 1st if it doesn't reach the $15k minimum. We need proactive donors.

If we don't get funded this time, there is a good chance we will move on to different work in AI Safety and new commitments. This would make it much harder to reassemble the team to run future AISCs, even if the funding situation improves.

You can take a look at the track record section and see if it's worth it:

- ≥ $1.4 million granted to projects started at AI Safety Camp

- ≥ 43 jobs in AI Safety taken by alumni

- ≥ 10 organisations started by alumni

Edit to add: Linda just wrote a new post about AISC's theory of change.

You can donate through our Manifund page

You can also read more about our plans there.

If you prefer to donate anonymously, this is possible on Manifund.

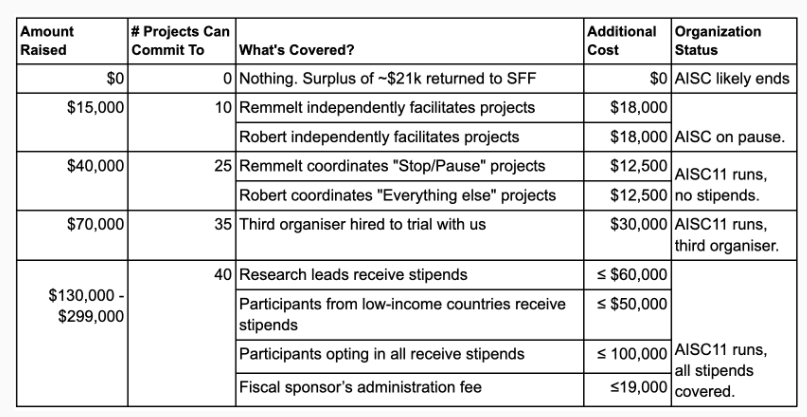

Suggested budget for the next AISC

If you're a large donor (>$15k), we're open to letting you choose what to fund.

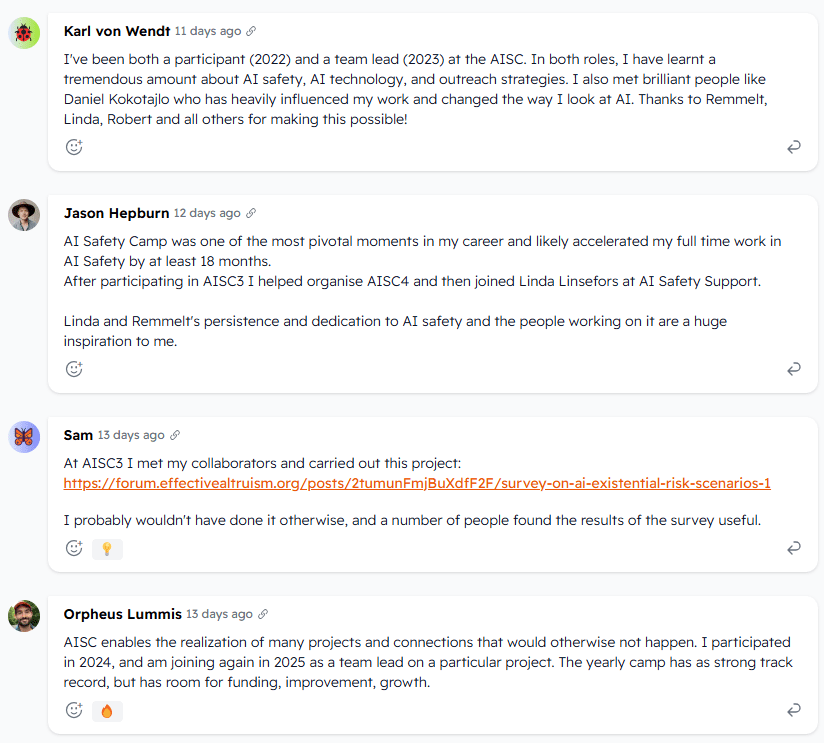

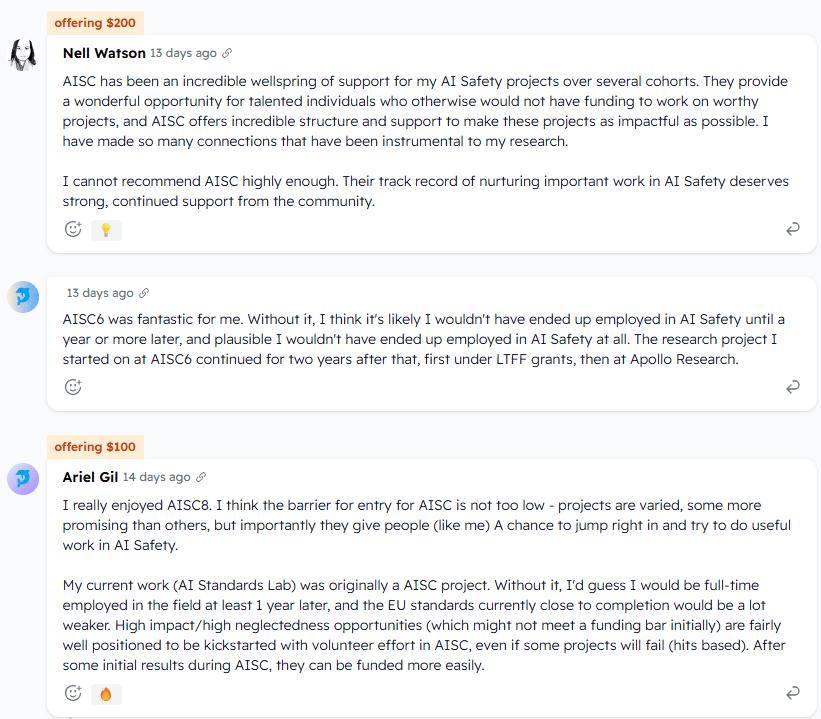

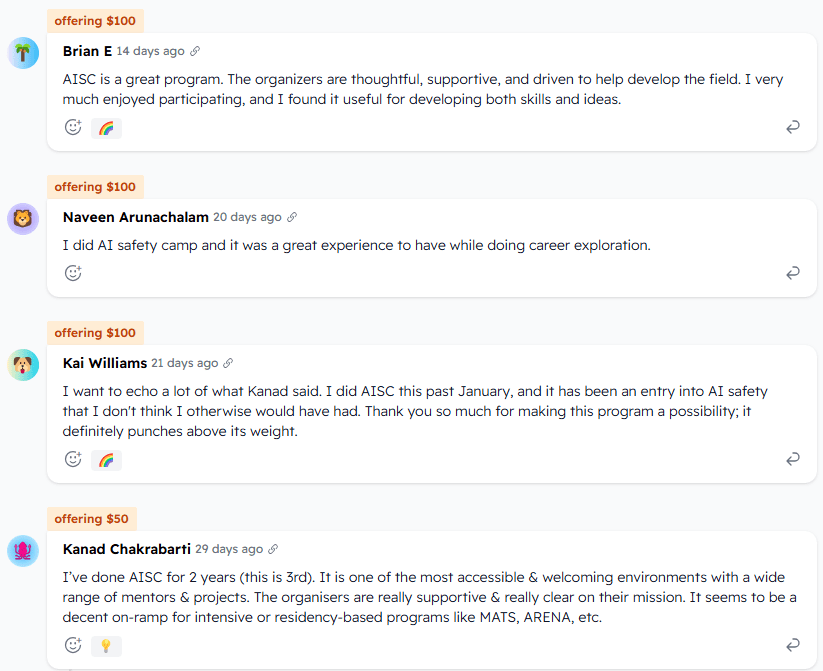

Testimonials (screenshots from Manifund page)

17 comments


comment by kwiat.dev (ariel-kwiatkowski) · 2025-01-21T21:26:40.351Z

While I participated in a previous edition, and somewhat enjoyed it, I couldn't bring myself to support it now considering Remmelt is the organizer, between his anti-AI-art crusades and an overall "stop AI" activism. It's unfortunate, since technical AI safety research is very valuable, but promoting those anti-AI initiatives makes it a probable net negative in my eyes.

Maybe it's better to let AISC die a hero.

↑ comment by habryka (habryka4) · 2025-01-21T22:35:33.945Z

I think "stop AI" is pretty reasonable and good, but I agree that Remmelt seems kind of like he has gone off the deep end and that is really the primary reason why I am not supporting AI Safety camp. I would consider filling the funding gap myself if I hadn't seen this happen.

My best guess is AISC dying is marginally good, and someone else will hopefully pick up a similar mantle.

↑ comment by Garrett Baker (D0TheMath) · 2025-01-21T22:46:57.775Z

I don't understand why Remmelt going "off the deep end" should affect AI safety camp's funding. That seems reasonable for speculative bets, but not when there's a strong track-record available.

↑ comment by habryka (habryka4) · 2025-01-22T00:22:53.349Z

I have heard from many people near AI Safety camp that they also have judged AI safety camp to have gotten worse as a result of this. I think there was just a distribution shift, and now it makes sense to judge the new distribution.

Separately, it matters who is in a position to shape the culture and trajectory of a community.

I think there is a track record for the last few safety camps since Remmelt went off the deep end, and it is negative (not purely so, and not with great confidence, I am just trying to explain why I don't think there is a historical track record thing that screens off the personalities of the people involved).

↑ comment by Linda Linsefors · 2025-01-22T19:53:52.947Z

Each organiser on the team is allowed to accept projects independently. So far Remmelt hasn't accepted any projects that I would have rejected, so I'm not sure how his unorthodox views could have affected project quality.

Do you think people are avoiding AISC because of Remmelt? I'd be surprised if that was a significant effect.

After we accept projects, each project is pretty much in the hands of its research lead, with very light involvement from the organisers.

I'd be interested to learn more about in what way you think, or have heard, that the program has gotten worse.

↑ comment by habryka (habryka4) · 2025-01-22T23:25:53.696Z

> Do you think people are avoiding AISC because of Remmelt? I'd be surprised if that was a significant effect.

Absolutely, I have heard at least 3-4 conversations where I've seen people consider AISC, or talked about other people considering AISC, but had substantial hesitations related to Remmelt. I certainly would recommend someone not participate because of Remmelt, and my sense is this isn't a particularly rare opinion.

I currently would be surprised if I could find someone informed who I have an existing relationship with for whom it wouldn't be in their top 3 considerations on whether to attend or participate.

↑ comment by Lucius Bushnaq (Lblack) · 2025-01-23T10:03:49.189Z

> I have heard from many people near AI Safety camp that they also have judged AI safety camp to have gotten worse as a result of this.

Hm. This does give me serious pause. I think I'm pretty close to the camps but I haven't heard this. If you'd be willing to share some of what's been relayed to you here or privately, that might change my decision. But what I've seen of the recent camps still just seemed very obviously good to me?

I don't think Remmelt has gone more crank on the margin since I interacted with him in AISC6. I thought AISC6 was fantastic and everything I've heard about the camps since then still seemed pretty great.

I am somewhat worried about how it'll do without Linda. But I think there's a good shot Robert can fill the gap. I know he has good technical knowledge, and from what I hear integrating him as an organiser seems to have worked well. My edition didn't have Linda as organiser either.

I think I'd rather support this again than hope something even better will come along to replace it when it dies. Value is fragile.

↑ comment by Linda Linsefors · 2025-01-23T10:26:06.625Z

I vouch for Robert as a good replacement for me.

Hopefully there is enough funding to onboard a third person for next camp. Running AISC at the current scale is a three person job. But I need to take a break from organising.

↑ comment by Remmelt (remmelt-ellen) · 2025-01-24T10:51:42.020Z

Lucius, the text exchanges I remember us having during AISC6 were about the question of whether 'ASI' could comprehensively control for the evolutionary pressures it would be subjected to. You and I were commenting on a GDoc with Forrest. I was taking your counterarguments against his arguments seriously – continuing to investigate those counterarguments after you had bowed out.

You held the notion that ASI would be so powerful that it could control for any of its downstream effects that evolution could select for. This is a common opinion held in the community. But I've looked into this opinion and people's justifications for it enough to consider it an unsound opinion.[1]

I respect you as a thinker, and generally think you're a nice person. It's disappointing that you wrote me off as a crank in one sentence. I expect more care, including that you also question your own assumptions.

[1] A shortcut way of thinking about this:

The more you increase 'intelligence' (as a capacity in transforming patterns in data), the more you have to increase the number of underlying information-processing components. But the degrees of freedom those components have in their interactions with each other and with their larger surroundings grow even faster.

This results in a strict inequality between:

1. the space of possible downstream effects that evolution can select across; and
2. the subspace of effects that the 'ASI' (or any control system connected with/in ASI) could detect, model, simulate, evaluate, and correct for.

The hashiness model is a toy model for demonstrating this inequality (incl. how the mismatch between 1. and 2. grows over time). Anders Sandberg and two mathematicians are working on formalising that model at AISC.

There's more that can be discussed in terms of why and how this fully autonomous machinery is subjected to evolutionary pressures. But that's a longer discussion, and often the researchers I talked with lacked the bandwidth.

↑ comment by Lucius Bushnaq (Lblack) · 2025-01-24T12:54:19.632Z

> It's disappointing that you wrote me off as a crank in one sentence. I expect more care, including that you also question your own assumptions.

I think it is very fair that you are disappointed. But I don't think I can take it back. I probably wouldn’t have introduced the word crank myself here. But I do think there’s a sense in which Oliver’s use of it was accurate, if maybe needlessly harsh. It does vaguely point at the right sort of cluster in thing-space.

It is true that we discussed this and you engaged with a lot of energy and in good faith. But I did not think Forrest’s arguments were convincing at all, and I couldn’t seem to manage to communicate to you why I thought that. Eventually, I felt like I wasn’t getting through to you, Quintin Pope also wasn’t getting through to you, and continuing started to feel draining and pointless to me.

I emerged from this still liking you and respecting you, but thinking that you are wrong about this particular technical matter in a way that does seem like the kind of thing people imagine when they hear ‘crank’.

↑ comment by Remmelt (remmelt-ellen) · 2025-01-24T17:31:23.408Z

I kinda appreciate you being honest here.

Your response is also emblematic of what I find concerning here, which is that you are not offering a clear argument of why something does not make sense to you before writing ‘crank’.

Writing that you do not find something convincing is not an argument – it’s a statement of conviction, which could as much be a reflection of a poor understanding of an argument or of not taking the time to question one’s own premises. Because it’s not transparent about one’s thinking, but still comes across like there must be legit thinking underneath, this can be used as a deflection tactic (I don’t think you are, but others who did not engage much ended the discussion on that note). Frankly, I can’t convince someone if they’re not open to the possibility of being convinced.

I explained above why your opinion was flawed – that ASI would be so powerful that it could cancel all of evolution across its constituent components (or at least anything that through some pathway could build up to lethality).

I similarly found Quintin’s counter-arguments (e.g. hinging on modelling AGI as trackable internal agents) to be premised on assumptions that, considered comprehensively, looked very shaky.

I understand why discussing this feels draining for you. But it does not justify you writing ‘crank’ when you have not had the time to examine the actual argumentation (note: you introduced the word ‘crank’ in this thread; Oliver wrote something else).

Overall, this is bad for community epistemics. It’s better if you can write what you thought was unsound about my thinking, and I can write what I found unsound about yours. Barring that exchange, some humility that you might be missing stuff is well-placed.

Besides this point, the respect is mutual.

↑ comment by Linda Linsefors · 2025-01-23T10:22:26.713Z

Is this because they think it would hurt their reputation, or because they think Remmelt would make the program a bad experience for them?

↑ comment by Remmelt (remmelt-ellen) · 2025-01-24T09:35:37.875Z

> I agree that Remmelt seems kind of like he has gone off the deep end

Could you be specific here?

You are sharing a negative impression ("gone off the deep end"), but not what it is based on. This puts me and others in a position of not knowing whether you are e.g. reacting with a quick broad strokes impression, and/or pointing to specific instances of dialogue that I handled poorly and could improve on, and/or revealing a fundamental disagreement between us.

For example, is it because on Twitter I spoke up against generative AI models that harm communities, and this seems somehow strategically bad? Do you not like the intensity of my messaging? Or do you intuitively disagree with my arguments about AGI being insufficiently controllable?

As is, this is dissatisfying. On this forum, I'd hope[1] there is a willingness to discuss differences in views first, before moving to broadcasting subjective judgements[2] about someone.

[1] Even though that would be my hope, it's no longer my expectation. There's an unhealthy dynamic on this forum, where 3+ times I noticed people moving to sideline someone with unpopular ideas, without much care.

To give a clear example, someone else listed vaguely dismissive claims about research I support. Their comment lacked factual grounding but still got upvotes. When I replied to point out things they were missing, my reply got downvoted into the negative.

I guess this is a normal social response on most forums. It is naive of me to hope that on LessWrong it would be different.

[2] This particularly needs to be done with care if the judgement is given by someone seen as having authority (because others will take it at face value), and if the judgement is guarding default notions held in the community (because that supports an ideological filter bubble).

↑ comment by habryka (habryka4) · 2025-01-24T18:50:57.596Z

I think many people have given you feedback. It is definitely not because of "strategic messaging". It's because you keep making incomprehensible arguments that don't make any sense and then get triggered when anyone tries to explain why they don't make sense, while making statements that are wrong with great confidence.

> As is, this is dissatisfying. On this forum, I'd hope[1] there is a willingness to discuss differences in views first, before moving to broadcasting subjective judgements[2] about someone.

People have already spent many hours giving you object-level feedback on your views. If this still doesn't meet the relevant threshold for then moving on and discussing judgements, then basically no one can ever be judged (and as such our community would succumb to Eternal September and die).

↑ comment by Remmelt (remmelt-ellen) · 2025-01-24T23:55:46.060Z

> It's because you keep making incomprehensible arguments that don't make any sense

Good to know that this is why you think AI Safety Camp is not worth funding.

Once a core part of the AGI non-safety argument is put into maths to be comprehensible for people in your circle, it’d be interesting to see how you respond then.

comment by Linda Linsefors · 2025-01-23T12:59:37.661Z

Why AISC needs Remmelt

For AISC to survive long term, it needs at least one person who is committed long term to running the program. Without such a person, I estimate the half-life of AISC to be ~1 year, i.e., there would be a ~50% chance of AISC dying out every year, simply because there isn't an organiser team to run it.

Since the start, this person has been Remmelt. Because of Remmelt, AISC has continued to exist. Other organisers have come and gone, but Remmelt has stayed and held things together. I don't know if there is anyone to replace Remmelt in this role. Maybe Robert? But I think it's too early to say. I'm definitely not available for this role; I'm too restless.

Hiring for long term commitment is very hard.

Why AISC would currently have had even less funding without Remmelt

For a while AISC was just me and Remmelt. During this time Remmelt took care of all the fundraising, and still mostly does, because Robert is still new, and I don't do grant applications.

I had several bad experiences around grant applications in the past. The last one was in 2021, when JJ and I applied for money for AI Safety Support. The LTFF committee decided that they didn’t like me personally and agreed to fund JJ’s salary but not mine. This is a decision they were allowed to make, of course. But on my side, it was more than I could take emotionally, and it led me to quit EA and AI Safety entirely for a year, and I’m still not willing to do grant applications. Maybe someday, but not yet.

I’m very grateful to Remmelt for being willing to take on this responsibility, and for hiring me at a time when I was the one who was toxic to grant makers.

I have fewer triggers for crowdfunding and private donations than for grant applications, but I still find it personally very stressful. I'm not saying my trauma around this is anyone's fault, or anyone else's problem. But I do think it's relevant context for understanding AISC's funding situation. Organisations are made of people, and these people may have constraints that are invisible to you.

Some other things about Remmelt and his role in AISC

I know Remmelt gets into arguments on Twitter, but I'm not on Twitter, so I'm not paying attention to that. I know Remmelt as a friend and as a great co-organiser. Remmelt is one of the rare people I work really well with.

Within AISC, Remmelt is overseeing the Stop/Pause AI projects. For all the other projects, Remmelt is only involved in a logistical capacity.

For the current AISC there are

- 4 Pause/Stop AI projects. Remmelt is in charge of accepting or rejecting projects in this category, and also of supporting them if they need any help.

- 1 project which Remmelt is running personally as the research lead. (Not counted as one of the 4 above)

- 26 other projects where Remmelt has only purely logistical involvement. E.g. Remmelt is in charge of stipends and compute reimbursement, but his personal opinions about AI Safety are not a factor in this. I see most of the requests, and I've never seen Remmelt discriminate based on what he thinks of the project.

Each of us organisers (Remmelt, Robert, me) can unilaterally decide to accept any project we like, and once a project is accepted to AISC, we all support it in our roles as organisers. We have all agreed to this, because we all think that having this diversity is worth it, even if not all of us like every single project the others accept.

comment by Linda Linsefors · 2025-01-23T13:20:14.116Z

New related post:

Theory of Change for AI Safety Camp