The Epsilon Fallacy

post by johnswentworth · 2018-03-17T00:08:01.203Z · 21 comments

This is a link post for https://medium.com/@johnwentworth/the-epsilon-fallacy-94184386b1b1

Contents

- Program Optimization

- Carbon Emissions

- The 80/20 Rule

- Conclusion: Profile Your Code

- Footnotes

Program Optimization

One of the earlier lessons in every programmer’s education is how to speed up slow code. Here’s an example. (If this is all Greek to you, just note that there are three different steps and then skip to the next paragraph.)

```
// Step 1: import
import foobarlib.*

// Step 2: initialize random Foo array
foo_field = Foo[1000]

// Step 3: smooth foo field
for x in [1...998]:
    foo_field[x] = (foo_field[x+1] + foo_field[x-1])/2
```

Our greenhorn programmers jump in and start optimizing. Maybe they decide to start at the top, at step 1, and think “hmm, maybe I can make this import more efficient by only importing Foo, rather than all of foobarlib”. Maybe that will make step 1 ten times faster. So they do that, and they run it, and lo and behold, the program’s run time goes from 100 seconds to 99.7 seconds.

In practice, most slow computer programs spend the vast majority of their time in one small part of the code. Such slow pieces are called bottlenecks. If 95% of the program’s runtime is spent in step 2, then even the best possible speedup in steps 1 and 3 combined will only improve the total runtime by 5%. Conversely, even a small improvement to the bottleneck can make a big difference in the runtime.
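The arithmetic here is Amdahl's law. A minimal Python sketch, assuming the post's hypothetical 100-second program; the roughly 1/3 s for step 1 is inferred from the 100 s to 99.7 s figure above and is not stated in the post:

```python
def new_runtime(total, fraction, local_speedup):
    """Amdahl's law: runtime after making `fraction` of the program
    `local_speedup` times faster while leaving the rest untouched."""
    return total * ((1.0 - fraction) + fraction / local_speedup)

# Step 1 (assumed ~1/3 s of 100 s) made 10x faster: the greenhorn fix.
print(round(new_runtime(100, 1 / 300, 10), 1))          # 99.7
# Steps 1 and 3 together (5% of runtime) made infinitely fast.
print(round(new_runtime(100, 0.05, float("inf")), 1))   # 95.0
# Step 2, the bottleneck (95%), made merely 2x faster.
print(round(new_runtime(100, 0.95, 2), 1))              # 52.5
```

No number of tweaks to steps 1 and 3 can push the runtime below 95 s, while a modest 2x on the bottleneck nearly halves it.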

Back to our greenhorn programmers. Having improved the run time by 0.3%, they can respond one of two ways:

- “Great, it sped up! Now we just need a bunch more improvements like that.”

- “That was basically useless! We should figure out which part is slow, and then focus on that part.”

The first response is what I’m calling the epsilon fallacy. (If you know of an existing and/or better name for this, let me know!)

The epsilon fallacy comes in several reasonable-sounding flavors:

- The sign(epsilon) fallacy: this tiny change was an improvement, so it’s good!

- The integral(epsilon) fallacy: another 100 tiny changes like that, and we’ll have a major improvement!

- The infinity*epsilon fallacy: this thing is really expensive, so this tiny change will save lots of money!

The epsilon fallacy is tricky because these all sound completely reasonable. They’re even technically true. So why is it a fallacy?

The mistake, in all cases, is a failure to consider opportunity cost. The question is not whether our greenhorn programmers’ 0.3% improvement is good or bad in and of itself. The question is whether our greenhorn programmers’ 0.3% improvement is better or worse than spending that same amount of effort finding and improving the main bottleneck.

Even if the 0.3% improvement was really easy - even if it only took two minutes - it can still be a mistake. Our programmers would likely be better off if they had spent the same two minutes timing each section of the code to figure out where the bottleneck is. Indeed, if they just identify the bottleneck and speed it up, and don’t bother optimizing any other parts at all, then that will probably be a big win. Conversely, no matter how much they optimize everything besides the bottleneck, it won’t make much difference. Any time spent optimizing non-bottlenecks could have been better spent identifying and optimizing the bottleneck.

This is the key idea: time spent optimizing non-bottlenecks could have been better spent identifying and optimizing the bottleneck. In that sense, time spent optimizing non-bottlenecks is time wasted.

In programming, this all seems fairly simple. What’s more surprising is that almost everything in the rest of the world also works like this. Unfortunately, in the real world, social motivations make the epsilon fallacy more insidious.

Carbon Emissions

Back in college, I remember watching a video about this project. The video overviews many different approaches to carbon emissions reduction: solar, wind, nuclear, and bio power sources, grid improvements, engine/motor efficiency, etc. It argues that none of these will be sufficient, on its own, to cut carbon emissions enough to make a big difference. But each piece can make a small difference, and if we put them all together, we get a viable carbon reduction strategy.

This is called the “wedge approach”.

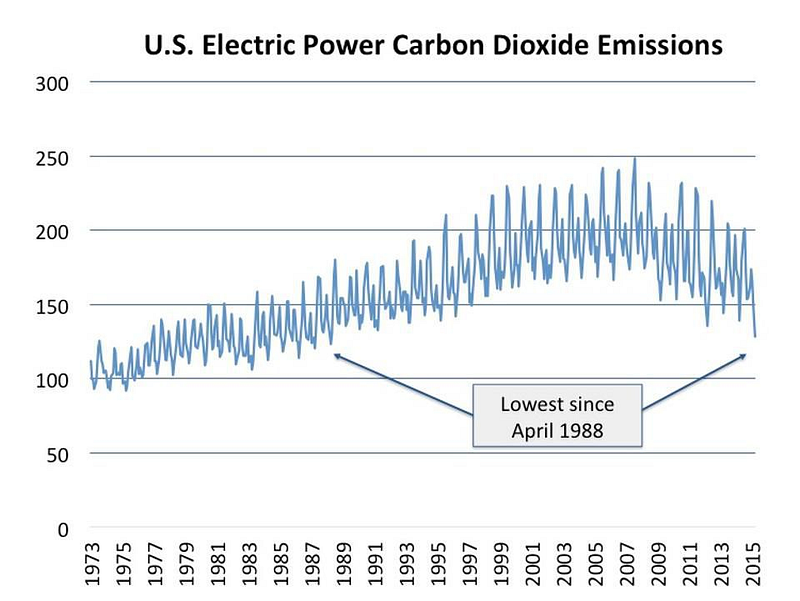

Here’s a chart of US carbon emissions from electricity generation by year, shamelessly cribbed from a Forbes article.

Note that emissions have dropped considerably in recent years, and are still going down. Want to guess what that’s from? Hint: it ain’t a bunch of small things adding together.

In the early 00’s, US oil drilling moved toward horizontal drilling and fracking. One side effect of these new technologies was a big boost in natural gas production - US natgas output has been growing rapidly over the past decade. As a result, natgas prices became competitive with coal prices in the mid-00’s, and electricity production began to switch from coal to natgas. The shift is already large: electricity from coal has fallen by 25%, while natgas has increased 35%.

The upshot: natgas emits about half as much carbon per BTU as coal, and electricity production is switching from coal to natgas en masse. Practically all of the reduction in US carbon emissions over the past 10 years has come from that shift.

Now, back to the wedge approach. One major appeal of the wedge narrative is that it’s inclusive: we have all these well-meaning people working on all sorts of different approaches to carbon reduction. The wedge approach says “hey, all these approaches are valuable and important pieces of the effort, let’s all work together on this”. Kumbaya.

But then we look at the data. Practically all the carbon reduction over the past decade has come from the natgas transition. Everything else - the collective effort of hundreds of thousands of researchers and environmentalists on everything from solar to wind to ad campaigns telling people to turn off their lights when not in the room - all of that adds up to barely anything so far, compared to the impact of the natgas transition.

Now, if you’re friends with some of those researchers and environmentalists, or if you did some of that work yourself, then this will all sound like a status attack. We’re saying that all these well-meaning, hard-working people were basically useless. They were the 0.3% improvement to run time. So there’s a natural instinct to defend our friends/ourselves, an instinct to say “no, it’s not useless, that 0.3% improvement was valuable and meaningful and important!” And we reach into our brains for a reason why our friends are not useless-

And that’s when the epsilon fallacy gets us.

“It’s still a positive change, so it’s worthwhile!”

“If we keep generating these small changes, it will add up to something even bigger than natgas!”

“Carbon emissions are huge, so even a small percent change matters a lot!”

This is the appeal of the wedge approach: the wedge approach says all that effort is valuable and important. It sounds a lot nicer than calling everyone useless. It is nicer. But niceness does not reduce carbon emissions.

Remember why the epsilon fallacy is wrong: opportunity cost.

Take solar photovoltaics as an example: PV has been an active research field for thousands of academics for several decades. They’ve had barely any effect on carbon emissions to date. What would the world look like today if all that effort had instead been invested in accelerating the natgas transition? Or in extending the natgas transition to China? Or in solar thermal or thorium for that matter?

Now, maybe someday solar PV actually will be a major energy source. There are legitimate arguments in favor.¹ Even then, we need to ask: would the long-term result be better if our efforts right now were focused elsewhere? I honestly don’t know. But I will make one prediction: one wedge will end up a lot more effective than all others combined. Carbon emission reductions will not come from a little bit of natgas, a little bit of PV, a little bit of many other things. That’s not how the world works.

The 80/20 Rule

Suppose you’re a genetic engineer, and you want to design a genome for a very tall person.

Our current understanding is that height is driven by lots of different genes, each of which has a small impact. If that’s true, then integral(epsilon) isn’t a fallacy. A large number of small changes really is the way to make a tall person.

On the other hand, this definitely is not the case if we’re optimizing a computer program for speed. In computer programs, one small piece usually accounts for the vast majority of the run time. If we want to make a significant improvement, then we need to focus on the bottleneck, and any improvement to the bottleneck will likely be significant on its own. “Lots of small changes” won’t work.

So… are things usually more like height, or more like computer programs?

A useful heuristic: the vast majority of real-world cases are less like height, and more like computer programs. Indeed, this heuristic is already well-known in a different context: it’s just the 80/20 rule. 20% of causes account for 80% of effects.

If 80% of any given effect is accounted for by 20% of causes, then those 20% of causes are the bottleneck. Those 20% of causes are where effort needs to be focused to have a significant impact on the effect. For example, here’s Wikipedia on the 80/20 rule:

- 20% of program code contains 80% of the bugs

- 20% of workplace hazards account for 80% of injuries

- 20% of patients consume 80% of healthcare

- 20% of criminals commit 80% of crimes

- 20% of people own 80% of the land

- 20% of clients account for 80% of sales

You can go beyond Wikipedia to find whole books full of these things, and not just for people-driven effects. In the physical sciences, it usually goes under the name “power law”.

(As the examples suggest, the 80/20 rule is pretty loose in terms of quantitative precision. But for our purposes, qualitative is fine.)
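To see how a power law produces the 80/20 pattern while a normal distribution does not, here is a small Python sketch. The Pareto index log(5)/log(4) ≈ 1.16 is the value that gives an exact 80/20 split for an idealized Pareto distribution; the sample sizes, seed, and the height-like normal (mean 170, SD 7) are arbitrary assumptions for illustration:

```python
import math
import random

def top_share(values, fraction=0.2):
    """Share of the total contributed by the largest `fraction` of the values."""
    ordered = sorted(values, reverse=True)
    k = max(1, int(len(ordered) * fraction))
    return sum(ordered[:k]) / sum(ordered)

# For a Pareto distribution, the top fraction p holds p ** (1 - 1/alpha) of the
# total; at p = 0.2 this equals exactly 0.8 when alpha = log(5) / log(4).
ALPHA = math.log(5) / math.log(4)  # about 1.161

def pareto_top_share(p, alpha=ALPHA):
    return p ** (1 - 1 / alpha)

rng = random.Random(0)
heavy = [rng.paretovariate(ALPHA) for _ in range(100_000)]  # power law
thin = [rng.gauss(170, 7) for _ in range(100_000)]          # normal, height-like

print(f"{pareto_top_share(0.2):.3f}")  # 0.800
print(f"{top_share(heavy):.2f}")       # sample estimate, noisy because the tail is heavy
print(f"{top_share(thin):.2f}")        # only slightly above 0.2: no bottleneck
```

The heavy-tailed estimate moves around from seed to seed because so much of the total sits in a handful of extreme draws, which is characteristic of heavy tails.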

So we have a heuristic. Most of the time, the epsilon fallacy will indeed be a fallacy. But how can we notice the exceptions to this rule?

One strong hint is a normal distribution. If an effect results from adding up many small causes, then the effect will (typically) be normally distributed. Height is a good example. Short-term stock price movements are another good example. They might not be exactly normal, or there might be a transformation involved (stock price movements are roughly log-normal). If there’s an approximate normal distribution hiding somewhere in there, that’s a strong hint.
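The normal-distribution hint is the central limit theorem at work: a sum of many small independent contributions is approximately normal. A toy Python check, assuming a purely additive model in which each of 200 hypothetical “genes” adds an independent Uniform(0, 1) amount to the trait:

```python
import random
import statistics

def simulate_heights(people=5000, genes=200, seed=0):
    """Toy additive model (an illustrative assumption): the trait is the
    sum of many small, independent per-gene contributions."""
    rng = random.Random(seed)
    return [sum(rng.random() for _ in range(genes)) for _ in range(people)]

def fraction_within_one_sd(values):
    """Fraction of values within one standard deviation of the mean."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(abs(v - mu) <= sd for v in values) / len(values)

heights = simulate_heights()
print(f"{fraction_within_one_sd(heights):.3f}")
```

About 68% of a normal distribution lies within one standard deviation of the mean, so a result near 0.68 is the signature to look for. For multiplicative effects, such as stock price movements, the same check applies after taking logs, since the log of a product is a sum.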

But in general, absent some obvious normal distribution, our prior assumption should be that most things are more like computer programs than like height. The epsilon fallacy is usually fallacious.

Conclusion: Profile Your Code

Most programmers, at some point in their education/career, are given an assignment to speed up a program. Typically, they start out by trying things, looking for parts of the code which are obviously suboptimal. They improve those parts, and it does not seem to have any impact whatsoever on the runtime.

After wasting a few hours of effort on such changes, they finally “profile” the code - the technical name for timing each part, to figure out how much time is spent in each section. They find out that 98% of the runtime is in one section which they hadn’t even thought to look at. Of course all the other changes were useless; they didn’t touch the part where 98% of the time is spent!
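A bare-bones version of “timing each part” in Python, using `time.perf_counter`. The three sections are hypothetical stand-ins for the post’s steps (`foobarlib` is fictional, so a standard-library import takes its place), and the array size is arbitrary. Which section comes out on top depends on the machine, which is exactly why you measure instead of guessing:

```python
import random
import time
from contextlib import contextmanager

timings = {}  # section name -> accumulated seconds

@contextmanager
def timed(section):
    """Add the wall-clock time spent inside the `with` block to timings[section]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[section] = timings.get(section, 0.0) + time.perf_counter() - start

def run_program(n=200_000):
    # Hypothetical stand-ins for the post's three steps.
    with timed("import"):
        import statistics  # placeholder for the fictional foobarlib
    with timed("initialize"):
        field = [random.random() for _ in range(n)]
    with timed("smooth"):
        smoothed = field[:]
        for x in range(1, n - 1):
            smoothed[x] = (field[x - 1] + field[x + 1]) / 2
    return smoothed

def report():
    """Print each section's share of total runtime; return the largest one."""
    total = sum(timings.values())
    shares = {name: t / total for name, t in timings.items()}
    for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:>10}: {share:6.1%}")
    return max(shares, key=shares.get), shares

run_program()
bottleneck, shares = report()
print("optimize first:", bottleneck)
```

For real programs, Python’s built-in profiler does this per function without hand-placed timers, e.g. `python -m cProfile -s cumulative your_script.py`.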

The intended lesson of the experience is: ALWAYS profile your code FIRST. Do not attempt to optimize any particular piece until you know where the runtime is spent.

As in programming, so in life: ALWAYS identify the bottleneck FIRST. Do not waste time on any particular small piece of a problem until you know which piece actually matters.

Footnotes

¹The Taleb argument provides an interesting counterweight to the epsilon fallacy. If we’re bad at predicting which approach will be big, then it makes sense to invest a little in many different approaches. We expect most of them to be useless, but a few will have major results - similar to venture capital. That said, it’s amazing how often people who make this argument just happen to end up working on the same things as everyone else.

21 comments


comment by gjm · 2019-12-06T16:13:40.345Z

According to this webpage from the US Energy Information Administration, CO2 emissions from US energy generation went down 28% between 2005 and 2017, and they split that up as follows:

- 329 MMmt reduction from switching between fossil fuels

- 316 MMmt reduction from introducing noncarbon energy sources

along with

- 654 MMmt difference between actual energy demand in 2018 and what it would have been if demand had grown at the previously-expected ~2% level between 2005 and 2018 (instead it remained roughly unchanged)

If this is correct, I think it demolishes the thesis of this article:

- The change from coal to natural gas obtained by fracking does not dominate the reductions in CO2 emissions.

- There have been substantial reductions as a result of introducing new "sustainable" energy sources like solar and wind.

- There have also been substantial reductions as a result of reduced energy demand; presumably this is the result of a combination of factors like more efficient electronic devices, more of industry being in less-energy-hungry sectors (shifting from hardware to software? from manufacturing to services?), changing social norms that reduce energy consumption, etc.

- So it doesn't, after all, seem as if people wanting to have a positive environmental impact who chose to do it by working on solar power, political change, etc., were wasting their time and should have gone into fracking instead. Not even if they had been able to predict magically that fracking would be both effective and politically feasible.
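The shares implied by the EIA figures quoted above can be checked in a few lines of Python, treating the three numbers as additive, as the comment does:

```python
# EIA figures quoted above, in million metric tons (MMmt) of CO2.
fuel_switching = 329  # switching between fossil fuels
noncarbon = 316       # introducing noncarbon energy sources
demand_gap = 654      # counterfactual: flat demand vs the expected ~2% growth

actual = fuel_switching + noncarbon
everything = actual + demand_gap
print(f"fuel switching, share of actual cuts: {fuel_switching / actual:.1%}")    # 51.0%
print(f"demand gap, share incl. counterfactual: {demand_gap / everything:.1%}")  # 50.3%
```

Both readings in the thread below hold up on these numbers: fuel switching is just over half of the actual cuts, and the counterfactual demand effect is just over half of the total.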

↑ comment by johnswentworth · 2019-12-06T18:09:24.827Z

Those numbers say:

- The counterfactual decrease in emissions from low demand growth was larger than all other factors combined.

- Just looking at actual decreases (not counterfactual), the decrease in emissions from switching coal to natgas was larger than the decrease in emissions from everything else combined (even with subsidies on everything else and no subsidies on natgas).

I agree that, based on these numbers, the largest factor is not "a lot more effective than all others combined" as predicted in the post. But I wouldn't say it "demolishes the thesis" - however you slice it, the largest factor is still larger than everything else combined (and for actual decreases, that largest factor is still natgas).

Have there been substantial reductions in emissions from solar and wind? Yes. But remember the key point of the post: we need to consider opportunity costs. Would emissions be lower today if all the subsidies supporting solar/wind had gone to natgas instead? If all those people campaigning for solar/wind subsidies had instead campaigned for natgas subsidies? And that wouldn't have taken magical predictive powers ten years ago - at that time, the shift was already beginning.

↑ comment by gjm · 2019-12-07T15:38:27.911Z

It says that shifts between fossil fuels are about half the decrease (ignoring the counterfactual one, which obviously is highly dependent on the rather arbitrary choice of expected growth rate). I don't know whether that's all fracking, and perhaps it's hard to unpick all the possible reasons for growth of natural gas at the expense of coal. My guess is that even without fracking there'd have been some shift from coal to gas.

The thesis here -- which seems to be very wrong -- was: "Practically all the carbon reduction over the past decade has come from the natgas transition". And it needed to be that, rather than something weaker-and-truer like "Substantially the biggest single element in the carbon reduction has been the natgas transition", because the more general thesis is that here, and in many other places, one approach so dominates the others that working on anything else is a waste of time.

I appreciate that you wrote the OP and I didn't, so readers may be inclined to think I must be wrong. Here are some quotations to make it clear how consistently the message of the OP is "only one thing turned out to matter and we should expect that to be true in the future too".

- "it ain’t a bunch of small things adding together"

- "Practically all of the reduction in US carbon emissions over the past 10 years has come from that shift"

- "all these well-meaning, hard-working people were basically useless"

- "PV has been an active research field for thousands of academics for several decades. They’ve had barely any effect on carbon emissions to date"

- "one wedge will end up a lot more effective than all others combined. Carbon emission reductions will not come from a little bit of natgas, a little bit of PV, a little bit of many other things"

All of those appear to be wrong. (Maybe they were right when the OP was written, but if so then they became wrong shortly after, which may actually be worse for the more general thesis of the OP since it indicates how badly wrong one can be in evaluating what measures are going to be effective in the near future.)

Now, of course you could instead make the very different argument that if Thing A is more valuable per unit effort than Thing B then we should pour all our resources into Thing A. But that is, in fact, a completely different argument; I think it's wrong for several reasons, but in any case it isn't the argument in the OP and the arguments in the OP don't support it much.

The questions you ask at the end seem like their answers are supposed to be obvious, but they aren't at all obvious to me. Would natural gas subsidies have had the same sort of effect as solar and wind subsidies? Maaaaybe, but also maybe not: I assume most of the move from coal to gas was because gas became genuinely cheaper, and the point of solar and wind subsidies was mostly that those weren't (yet?) cheaper but governments wanted to encourage them (1) to get the work done that would make them cheaper and (2) for the sake of the environmental benefits. Would campaigning for natural gas subsidies have had the same sort of effect as campaigning for solar and wind? Maaaaybe, but also maybe not: campaigning works best when people can be inspired by your campaigning; "energy production with emissions close to zero" is a more inspiring thing than "energy production with a ton of emissions, but substantially less than what we've had before", and the most likely people to be inspired by this sort of thing are environmentalists, who are generally unlikely to be inspired by fracking.

↑ comment by johnswentworth · 2019-12-07T18:11:40.132Z

> All of those appear to be wrong.

Let's go through them one by one:

- "it ain’t a bunch of small things adding together" -> Still 100% true. Eyeballing the EIA's data, wind + natgas account for ~80% of the decrease in carbon emissions. That's 2 things added together.

- "Practically all of the reduction in US carbon emissions over the past 10 years has come from that shift" -> False, based on EIA 2005-2017 data. More than half of the reduction came from the natgas shift (majority, not just plurality), but not practically all.

- "all these well-meaning, hard-working people were basically useless" -> False for the wind people.

- "PV has been an active research field for thousands of academics for several decades. They’ve had barely any effect on carbon emissions to date" -> Still true. Eyeballing the numbers, solar is maybe 10% of the reduction to date. That's pretty small to start with, and on top of that, little of the academic research has actually translated to the market, much less addressed the major bottlenecks of solar PV (e.g. installation).

- "one wedge will end up a lot more effective than all others combined. Carbon emission reductions will not come from a little bit of natgas, a little bit of PV, a little bit of many other things" -> Originally intended as a prediction further into the future, and I still expect this to be the case. That said, as of today, "one wedge will end up a lot more effective" looks false, but "Carbon emission reductions will not come from a little bit of natgas, a little bit of PV, a little bit of many other things" looks true.

... So a couple of them are wrong, though none without at least some kernel of truth in there. And a couple of them are still completely true.

> And it needed to be that, rather than something weaker-and-truer like "Substantially the biggest single element in the carbon reduction has been the natgas transition", because the more general thesis is that here, and in many other places, one approach so dominates the others that working on anything else is a waste of time.

No, as the next section makes clear, it does not need to be one approach dominating everything else; that just makes for memorable examples. 80/20 is the rule, and 20% of causes can still be more than one cause. 80/20 is still plenty strong for working on the other 80% of causes to be a waste of time.

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-03-27T17:51:59.227Z

I'm not sure I'm ready to adopt the 80/20 rule as a heuristic, although I use it all the time as a hypothesis.

The 80/20 rule is too compatible with both availability bias and cherry-picking.

- "X is mainly bottlenecked by Y" is interesting to talk about, and gets shared, because the insight is valuable information.

- "No single cause explains more than 1% of the variation in X" is uninteresting, and gets shared less, because it's not very actionable. It's also harder to understand, because there are so many small variables to keep track of.

- As a result, we accumulate many more examples of the 80/20 "rule" in action than of the accumulation of many small contributing factors. Not only do we recall them more easily, but we coordinate human action around them more easily, which also helps generate vivid real-world examples.

The way I'd put it is that it's worth examining the hypothesis that the 80/20 rule is operating, because if it is, then you have a big opportunity to make improvements with minimal effort. If it turns out that you can't find a bottleneck, move on to something else and forget about it...

... Unless the local problem you're trying to solve itself is the bottleneck for a larger problem. For example, let's say that fracking was the most important driver of carbon emissions reductions. Even if there weren't single large bottlenecks available to improve fracking efficiency, it might still be worth looking for all the available small wins.

↑ comment by RamblinDash · 2023-03-27T18:03:55.708Z

The other problem with the Natural Gas argument is right there in the premise. If burning natural gas is twice as carbon efficient as burning coal (X emissions -> X/2 emissions), you can never do better than halving your emissions by switching everything to natural gas. So even though it's good that gas has led to lower emissions so far, we must necessarily work on these other technologies if we ever want to do better than X/2.
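The bound can be written out under the comment's simplifying assumption that gas emits exactly half as much CO2 as coal per unit of electricity (real plants vary). Let X be all-coal emissions and f the fraction of generation switched to gas:

$$
E(f) = (1 - f)\,X + f\,\frac{X}{2} = X\left(1 - \frac{f}{2}\right) \ge \frac{X}{2} \qquad \text{for } 0 \le f \le 1.
$$

Even a complete switch (f = 1) stops at X/2; getting below that requires something other than gas.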

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-03-27T18:22:58.324Z

Another issue is that, if your ultimate goal is to keep total CO2 content in the atmosphere below a certain level, natural gas buys you time, but it is not, on its own, a solution. It is still an exhaustible resource that puts carbon permanently into the atmosphere.

That said, timelines count for a lot. If natgas gives us time to accumulate truly renewable green energy infrastructure, without reducing the urgency around installing renewables so much that the benefits cancel out, then it's to the good.

↑ comment by RamblinDash · 2023-03-28T11:50:00.170Z

Right, but I think part of the argument being made above is that we shouldn't bother with all this pie-in-the-sky stuff because the gas transition has caused almost all the actual emission reduction. It's boneheaded as a statement on climate policy, but in a way I think is constructive to explore and apply more broadly.

If you have achieved big gains doing some thing X, but you are very confident that ultimately X has fundamental limitations such that it can't possibly solve your ultimate problem... then it's not good to put all your resources into X. (cf. RLHF, I guess)

comment by gjm · 2018-03-17T23:03:09.273Z

I'm willing to defend the wedge argument a bit. Let's consider those thousands of scientists who worked on solar electricity generation. Clearly, as you say, what they did wasn't useless -- they did produce a technology that does a useful thing. So the question, again as you say, is opportunity costs. What should those scientists have done instead of working on solar electricity generation?

Perhaps they should have gone into hydraulic fracking. But: 1. Presumably these are mostly experts in things like semiconductor physics, the material-science properties of silicon, etc. They'd not be that much use to the frackers. 2. A lot of their work happened before (so far as I know) there was good reason to think that horizontal drilling and fracking would be both effective and politically acceptable. So what's the actual principle these people could and should have followed, that would have led them to do something more effective? I suspect there isn't one.

(Also ... I have the impression, though it's far from an expert one and may be mostly a product of dishonest propaganda, that fracking has a bunch of bad environmental consequences that aren't captured by that graph showing carbon emissions. Unless the only thing we care about is carbon emissions, you can't just go from "biggest reduction in carbon emissions is from fracking" to "fracking should dominate our attempts to reduce carbon emissions" without some consideration of the other effects of fracking and other carbon-emissions-reducing activities.)

↑ comment by johnswentworth · 2018-03-18T14:27:03.683Z

The first objection is particularly interesting, and I've been mulling another post on it. As a general question: if you want to have high impact on something, how much decision-making weight should you put on leveraging your existing skill set, versus targeting whatever the main bottleneck is regardless of your current skills? I would guess that very-near-zero weight on current skillset is optimal, because people generally aren't very strategic about which skills they acquire. So e.g. people in semiconductor physics etc probably didn't do much research in clean energy bottlenecks before choosing that field - their skillset is mostly just a sunk cost, and trying to stick to it is mostly sunk cost fallacy (to the extent that they're actually interested in reducing carbon emissions). Anyway, still mulling this.

Totally agree with the second objection. That said, there are technologies which have been around as long as PV which look at-least-as-promising-and-probably-more-so but receive far less research attention - solar thermal and thorium were the two which sprang to mind, but I'm sure there's more. From an outside view, we should expect this to be the case, because academics usually don't choose their research to maximize impact - they choose it based on what they know how to study. Which brings us back to the first point.

comment by gjm · 2018-03-17T22:03:55.732Z

This has nothing at all to do with the point actually under discussion, but my reaction on looking at those three lines of code was: hmmmm, that third line almost certainly isn't doing what its author intends it to do. It replaces entries in order, left to right, and replaces each entry by the average of the new entry on the left and the old entry on the right. But if someone wrote code like that, without a comment saying otherwise, I would bet they meant it just to replace each entry by the average of its two neighbours.

Also, it's a weird sort of smoothing; e.g., if the input is +1, -1, +1, -1, +1, -1, etc., then it won't smooth it at all, just invert it. It would likely be better to convolve with something like [1,2,1]/4 instead of [1,0,1]/2.

↑ comment by johnswentworth · 2018-03-18T14:07:51.784Z

Oh lol I totally missed that. Apparently I've been using numpy for everything so long that I've forgotten how to do it C-style.
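The two fixes gjm describes, sketched in Python on a plain list: read from the original values and write to a copy, so each entry gets the mean of its original neighbors, or use the [1, 2, 1]/4 kernel. The function names are hypothetical, and like the post's loop they leave the two endpoints unchanged. The in-place version reproduces the original loop for comparison:

```python
def smooth_in_place(field):
    """The post's loop as written: each update sees the already-updated left neighbor."""
    for x in range(1, len(field) - 1):
        field[x] = (field[x + 1] + field[x - 1]) / 2
    return field

def smooth_neighbors(field):
    """Each interior entry becomes the mean of its two *original* neighbors."""
    out = list(field)
    for x in range(1, len(field) - 1):
        out[x] = (field[x - 1] + field[x + 1]) / 2
    return out

def smooth_121(field):
    """Interior entries convolved with the [1, 2, 1] / 4 kernel."""
    out = list(field)
    for x in range(1, len(field) - 1):
        out[x] = (field[x - 1] + 2 * field[x] + field[x + 1]) / 4
    return out

alternating = [1, -1, 1, -1, 1, -1]
print(smooth_in_place(list(alternating)))  # [1, 1.0, 0.0, 0.5, -0.25, -1]
print(smooth_neighbors(alternating))       # [1, 1.0, -1.0, 1.0, -1.0, -1]  interior just flips sign
print(smooth_121(alternating))             # [1, 0.0, 0.0, 0.0, 0.0, -1]    interior flattened
```

In NumPy, `a[1:-1] = (a[:-2] + a[2:]) / 2` evaluates the right-hand side into a new array before assigning, so it behaves like the copying version.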

comment by tailcalled · 2024-06-26T11:45:28.781Z

Recently I've been thinking that a significant cause of the epsilon fallacy is that perception is by-default logarithmic (which in turn I think is because measurement error tends to be proportional to the size of the measured object, so if you scale things by the amount of evidence you have, you get a logarithmic transformation). Certain kinds of experience(?) can give a person an ability to deal with the long-tailed quantities inherent to each area of activity, but an important problem in the context of formalizing rationality and studying AIs is figuring out what kinds of experiences those are. (Interventions seem like one potential solution, but they're expensive. More cheaply it seems like one could model it observationally with the right statistical model applied to a collider variable... Idk.)

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-03-28T16:42:18.158Z

After thinking about this more, I think that the 80/20 rule is a good heuristic before optimization. The whole point of optimizing is to pluck the low hanging fruit, exploit the 80/20 rule, eliminate the alpha, and end up with a system where remaining variation is the result of small contributing factors that aren’t worth optimizing anymore.

When we find systems in the wild where the 80/20 rule doesn’t seem to apply, we are often considering a system that’s been optimized for the result. Most phenotypes are polygenic, and this is because evolution is optimizing for advantageous phenotypes. The premise of “atomic habits” is that the accumulation of small habit wins compounds over time, and again, this is because we already do a lot of optimizing of our habits and routines.

It is in domains where there’s less pressure or ability to optimize for a specific outcome that the 80/20 rule will be most in force.

It’s interesting to consider how this jibes with “you can only have one top priority.” OpenAI clearly has capabilities enhancement as its top priority. How do we know this? Because there are clearly huge wins available to it if it was optimizing for safety, and no obvious huge wins to improve capabilities. That means they’re optimizing for capabilities.

↑ comment by johnswentworth · 2023-03-28T17:11:23.258Z

This is also my current heuristic, and the main way that I now disagree with the post.

↑ comment by Noosphere89 (sharmake-farah) · 2023-03-28T17:42:58.468Z

> When we find systems in the wild where the 80/20 rule doesn’t seem to apply, we are often considering a system that’s been optimized for the result.

That doesn't always mean that you can't make big improvements, because the goal it was optimized for may not fit your goals.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-03-28T18:11:47.316Z

Agreed. And even after you've plucked all the low-hanging fruit, the high-hanging fruit may still offer the greatest marginal gains, justifying putting effort into small-improvement optimizations. This is particularly true if there are high switching costs to transition between top priorities/values in a large organization. Even if OpenAI is sincere in its "capabilities and alignment go hand in hand" thesis, they may find that their association with Microsoft imposes huge or insurmountable switching costs, even when they think the time is right to stop prioritizing capabilities and start directly prioritizing alignment.

And of course, the fact that they've associated with a business that cares for nothing but profit is another sign OpenAI's priority was capabilities pure and simple, all along. It would have been relatively easy to preserve their option to switch to an alignment priority if they'd remained independent, and I predict they will not be able to do so, that they could foresee this, and that they didn't care as much as they cared about impressive technology and making money.

comment by benjamincosman · 2023-03-27T22:39:33.431Z

Seems to me like avoiding this fallacy is also basically the entire starting premise of Effective Altruism. For most broad goals like "reduce global poverty", there are charities out there that are thousands of times more effective than other ones, so the less effective ones are the epsilons. Yes they are still "good" - sign(epsilon) is positive - but the opportunity cost (that dollar could have done >1000x more elsewhere) is unusually clear.

comment by [deleted] · 2018-03-17T19:17:59.814Z

The carbon emissions example is a great one that I think people don't take into account that often. EX: *Even if* every recycling / energy reduction campaign worked, i.e. if residential emissions dropped to 0%, this is still only about 12% of the US's overall emissions.

comment by Teja Prabhu (0xpr) · 2018-03-17T05:01:01.518Z

> The first response is what I’m calling the epsilon fallacy. (If you know of an existing and/or better name for this, let me know!)

This reminds me of Amdahl's Law. You could call it Amdahl's fallacy, but I'm not sure if it is a better name.

↑ comment by mraxilus · 2018-03-17T17:01:06.019Z

As a fellow programmer, I think the epsilon fallacy is more memorable. If it were Amdahl's fallacy, it would be one of those fallacies I'd have to look up constantly, fifty times or so (terrible memory, and not enough slack/motivation for a fallacy memory palace).