Posts

Comments

Good news: there is no way to opt in because you are already in. (If you want to opt out, we have a problem.)

This week's topic is Reading & Discussion.

should be changed

My guess at the truth of the matter is that almost no one is 100% guessing, but some people are extremely confident in their answer (a lot of the correct folks and also a small number of die-hard geocentrists), and then there's a range down to people who haven't thought about it in ages and just have a vague recollection of some elementary school teacher. Which I think is also a more hopeful picture than either the 36% clueless or the 18% geocentrists models? Because for people who are right but not confident, I'm reasonably ok with that; ideally they'd "know" more strongly, but it's not a disaster if they don't. And for people who are wrong but not confident, there are not that many of them and also they would happily change their mind if you just told them the correct answer.

How valid is it to assume that (approximately) everyone who got the heliocentrism question wrong got it wrong by "guessing"? If 18% got it wrong, then your model says that there's 36% who had no clue and half guessed right, but at the other extreme there's a model that everyone 'knows' the answer, but 18% 'know' the wrong answer. I'm not sure which is scarier - 36% clueless or 18% die-hard geocentrists - but I don't think we have enough information here to tell where on that spectrum it is. (In particular, if "I don't know" was an option and only 3% selected it, then I think this is some evidence against the extreme end of 36% clueless?)
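A minimal sketch of the spectrum described above. It assumes a hypothetical two-type model: a share g of respondents guess 50/50, a share w confidently hold the wrong answer, and everyone else is confidently right. Only the 18% observed-wrong figure comes from the comment; the intermediate points are illustrative, and the 3% "I don't know" option is not modeled. The point is that g/2 + w = 18% is satisfied all the way from "36% clueless" to "18% geocentrists", so the headline number alone cannot tell them apart.

```python
from fractions import Fraction

OBSERVED_WRONG = Fraction(18, 100)  # share who got the heliocentrism question wrong


def confidently_wrong_share(guessers: Fraction) -> Fraction:
    """Given the share who guessed 50/50 (hypothetical), return the share who must 'know' the wrong answer."""
    wrong = OBSERVED_WRONG - guessers / 2
    if wrong < 0:
        raise ValueError("more guessers than the observed wrong answers allow")
    return wrong


# Walk from one extreme (nobody guessing) to the other (36% guessing).
for pct in (0, 12, 24, 36):
    g = Fraction(pct, 100)
    w = confidently_wrong_share(g)
    print(f"guessers {float(g):.0%}, confident geocentrists {float(w):.0%}")
```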

Too Like the Lightning by Ada Palmer :)

Here's the closest thing to your argument in this post that I'd endorse:

- Ukraine did not allow its men to leave.

- NYT did not mention this fact as often as a "fully unbiased" paper would have, and in fact often used wordings that were deliberately deceptive in that they'd cause a reader to assume that men were staying behind voluntarily.

- Therefore NYT is not a fully unbiased paper.

The part I disagree with: I think this is drastically blown out of proportion (e.g. that this represents "extreme subversions of democracy"). Yes, NYT (et al.) is biased, but

- I think this has been true for much longer than just the Ukraine war

- I think there are much stronger pieces of evidence one could use to demonstrate it than this stuff about the Ukraine war (e.g. https://twitter.com/KelseyTuoc/status/1588231892792328192)

- I think that yes, all this is bad, but democracy is managing to putter along anyway

Which means that in my eyes, the issue with your post is precisely the degree to which it is exaggerating the problem. Which is why I (and perhaps other commenters) focused on your exaggerations, such as the "routinely and brazenly lied" title. So these comments seem quite sane to me; I think you're drawing entirely the wrong lesson from all this if you think the issue is that you drew the wrong people here with your tagging choices (you'd have drawn me no matter what with your big-if-true title), or didn't post large enough excerpts from the articles. But if you plan to firm up the "implications for geopolitics and the survival of democracy", I look forward to reading that.

The title of this post is a "level 6 lie" too. I, and I'd guess many if not most of your readers, came here expecting to read some type 7 once we saw "routinely and brazenly lied". Which means you built a false model in our heads, even if you can claim you are technically accurate because Scott Alexander once wrote a thing where NYT's behavior is type 6. Plus I will note that his description of type 6 calls it not technically lying, which rather weakens your claim to be even technically correct.

with the following assumptions:

Should the ∨ in assumption 1 be an ∧?

Cool idea!

One note about this:

Let's see what happens if I tweak the language: ... Neat! It's picked up on a lot of nuance implied by saying "important" rather than "matters".

Don't forget that people trying to extrapolate from your five words have not seen any alternate wordings you were considering. The LLM could more easily pick up on the nuance there because it was shown both wordings and asked to contrast them. So if you actually want to use this technique to figure out what someone will take away from your five words, maybe ask the LLM about each possible wording in a separate sandbox rather than a single conversation.

US Department of Transportation, as I’m sometimes bold enough to call them

I assume you intended to introduce your "US DoT" abbreviation here?

Oh, and as an aside a practical experiment I ran back in the day by accident: I played in a series of Diplomacy games where there was common knowledge that if I ever broke my word on anything all the other players would gang up on me, and I still won or was in a 2-way draw (out of 6-7 players) most of the time. If you have a sufficient tactical and strategic advantage (aka are sufficiently in-context smarter) then a lie detector won’t stop you.

I'm not sure this is evidence for what you're using it for? Giving up the ability to lie is a disadvantage, but you did get in exchange the ability to be trusted, which is a possibly-larger advantage - there are moves which are powerful but leave you open to backstabbing; other alliances can't take those moves and yours can.

Taken together, the two linked markets say there's a significant chance that the House does absolutely nothing for multiple weeks (i.e. they don't elect a new speaker and they don't conduct legislative business either). I guess this is possible but I don't think we're that dysfunctional and will bet against that result when my next Manifold loan comes in.

I haven't dived into the formalism (if there is one?), but I'm roughly using FDT to mean "make your decision with the understanding that you are deciding at the policy level, so this affects not just the current decision but all other decisions that fall under this policy that will be made by you or anything sufficiently like you, as well as all decisions made by anyone else who can discern (and cares about) your policy". Which sounds complicated, but I think often really isn't? e.g. in the habits example, it makes everything very simple (do the habit today because otherwise you won't do it tomorrow either). CDT can get to the same result there - unlike for some weirder examples, there is a causal though not well-understood pathway between your decision today and the prospective cost you will face when making the decision tomorrow, so you could hack that into your calculations. But if by 'overkill' you mean using something more complicated than necessary, then I'd say that it's CDT that would be overkill, not FDT, since FDT can get to the result more simply. And if by 'overkill' you mean using something more powerful/awesome/etc than necessary, then overkill is the best kind of kill :)

I think FDT is more practical as a decision theory for humans than you give it credit for. It's true there are a lot of weird and uncompelling examples floating around, but how about this very practical one: the power of habits. There's common and (I think) valuable wisdom that when you're deciding whether to e.g. exercise today or not (assuming that's something you don't want to do in the moment but believe has long-term benefits), you can't just consider the direct costs and benefits of today's exercise session. Instead, you also need to consider that if you don't do it today, realistically you aren't going to do it tomorrow either because you are a creature of habit. In other words, the correct way to think about habit-driven behavior (which is a lot of human behavior) is FDT: you don't ask "do I want to skip my exercise today" (to which the answer might be yes), instead you ask "do I want to be the kind of person who skips their exercise today" (to which the answer is no, because that kind of person also skips it every day).
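A toy sketch of the habit example, with entirely hypothetical numbers. Judged in isolation, one session costs more discomfort than its same-day benefit, so "do I want to skip my exercise today" comes out yes. Judged as a policy that governs every day (the "kind of person" framing), the choice also determines whether the long-term payoff of a sustained habit ever arrives, and the answer flips.

```python
# Hypothetical numbers, chosen only to illustrate the two framings.
DISCOMFORT = 1.0         # in-the-moment cost of one session
IMMEDIATE_BENEFIT = 0.5  # benefit felt on the same day
LONG_TERM_BONUS = 40.0   # payoff from a sustained habit (only if you exercise every day)
DAYS = 30


def day_payoff(exercise: bool) -> float:
    """Same-day payoff of a single decision."""
    return IMMEDIATE_BENEFIT - DISCOMFORT if exercise else 0.0


def local_choice() -> bool:
    """'Do I want to skip today?': only today's payoff is considered."""
    return day_payoff(True) > day_payoff(False)


def policy_value(exercise: bool, days: int = DAYS) -> float:
    """'What kind of person do I want to be?': the same choice is made every day."""
    total = sum(day_payoff(exercise) for _ in range(days))
    if exercise:
        total += LONG_TERM_BONUS
    return total


def policy_choice() -> bool:
    return policy_value(True) > policy_value(False)


print(local_choice(), policy_choice())
```

With these made-up numbers the local framing says skip and the policy framing says exercise; the qualitative flip, not the specific values, is what the sketch is meant to show.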

Decision theories are not about what kind of agent you want to be. There is no one on god’s green earth who disputes that the types of agents who one box are better off on average. Decision theory is about providing a theory of what is rational.

Taboo the word "rational": what real-world effects are you actually trying to accomplish? Because if FDT makes me better off on average and CDT allows me to write "look how rational I am" in my diary, then there's a clear winner here.
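To make "better off on average" concrete, here is a small sketch of expected winnings in Newcomb's problem using the usual $1,000,000 / $1,000 payoffs. The predictor accuracies are hypothetical. The one-boxing policy comes out far ahead at 99% accuracy, and stays ahead for any accuracy above 50.05%.

```python
from fractions import Fraction

BIG = 1_000_000  # opaque box: filled only if the predictor expects one-boxing
SMALL = 1_000    # transparent box: always filled


def expected_payoff(one_boxer: bool, accuracy: Fraction) -> Fraction:
    """Average winnings for an agent whose policy the predictor reads with the given accuracy."""
    if one_boxer:
        # Correct prediction -> opaque box is full; misprediction -> it is empty.
        return accuracy * BIG
    # Correct prediction -> opaque box is empty, so only SMALL; misprediction -> both boxes.
    return SMALL + (1 - accuracy) * BIG


acc = Fraction(99, 100)  # hypothetical predictor accuracy
print(expected_payoff(True, acc), expected_payoff(False, acc))
```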

I suggest Codenames as a good game for meetups:

- rounds are short (as is teaching the game)

- it can take a flexible number of players, and people can easily drop in and out

- it is good both for people who want a brain burner (play as captain) and for people who want something casual (don't play as captain)

- if you play without the timer, there will be dead time between turns for people to talk while the captains come up with clues

- as GuySrinivasan comments, there are some people who don't like board games, but anecdotally I'd estimate 90% of the people I've introduced to Codenames have enjoyed it, including multiple people who've said they don't normally play/enjoy games.

committing to an earning-to-give path would be a bet on this situation being the new normal.

Is that true, or would it just be the much more reasonable bet that this situation ever occurs again? Because at least in theory, a dedicated earning-to-give person could just invest money during the funding-flush times and then donate it the next time we're funding-constrained?

Oh I entirely agree.

My guess is that a lot of the difference in perception-of-danger comes from how much control people feel they have in each situation. In a car I feel like I am in control, so as long as I don't do stupid stuff I won't get in an accident (fatal or otherwise), even though this is obviously not true as a random drunk driver could always hit me. Whereas on transit I feel less in control and have had multiple brushes with people who were obviously not fully in their right minds, one of whom claimed to have a gun; I may not have actually been in more danger but it sure felt like it.

Focusing on only deaths makes some sense since it's the largest likely harm, but I will note that death is not the only outcome some people are afraid of on public transit; if you include lesser harms like being mugged or groped then that is going to tip the scales further towards driving since those things have a ~0% chance of happening while driving, and a small-but-probably-non-trivial chance (I haven't looked it up) on transit.

Ultimately yes, that is the resolution, but the point is that you usefully get there not by playing a never-ending game of whack-a-mole arguing that each variant is still somehow incoherent or has a truth value through some weird quirk of the English tense used, but rather by switching from a blacklist system to a whitelist system: everything is presumed invalid unless built up from axiomatic building blocks.

"All models are wrong but some are useful" - George Box

First, my less important point: I submit that your framing is a bit weird, in a way that I think stems from a non-standard and somewhat silly definition of the word "paradox". (The primary purpose here will be to build a framework useful in the next part, not to argue about the 'true definition of paradox' since such a thing does not exist.) We live in (as far as we know) a consistent universe; at the base reality layer, nothing self-contradictory or impossible ever occurs. But it is easy to (accidentally or intentionally) construct models in our heads that contain contradictions, and then it is quite useful to have a word meaning roughly "a concise statement/demonstration/etc which lays bare a contradiction in a model". I'm going to use the word "paradox" for that; feel free to pick a different word if you want. Since the contradiction is always in the model and not in reality, a paradox can always be resolved by figuring out where exactly the model was wrong. Once you do that, it is still reasonable to keep using the word "paradox" (or, again, whatever word you've chosen for this useful concept) to refer to the thing that just got resolved, since it still lays bare a contradiction in that model. Continuing to use that word is not a "misnomer"; otherwise every paradox would be a misnomer (we just haven't finished finding the flaws in all the models yet, but we know we eventually must).

Now for the more important part: your resolution of these particular paradoxes kind of misses the point. There is a model of math/logic which basically says that every statement which sounds like it could have a truth value does have a truth value. This is a very convenient model, because it means that when I want to make a logical argument, I can just start saying English sentences and focus on how each logically follows from the ones before it, while not spending time convincing you that each is also a thing that can validly have a truth value at all. And most of the time this model actually works pretty well, to the point that it still has a place in widely accepted math proofs, even though we know the model is wrong. But part of why it remains usable is that we know when we can use it and when we must not, because we have things like the Liar's Paradox which concisely demonstrate where its flaws are. Your analysis resolves the paradoxes not by showing where these important flaws are, but by exploiting 'gotchas' in the exact ways you chose to word them. As a result, the resolutions aren't particularly useful, because someone with only those resolutions would still not know why the model is ultimately unfixably wrong. They also aren't very robust, because I can break them using small variations on the original paradoxes which retain the underlying contradiction while avoiding your semantic weaseling: for example, replace "I am lying" by "I am lying or did lie", and "This sentence is false" by "The logical claim represented by this sentence is false".
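One way to see where the "every sentence has a truth value" model breaks, sketched as a toy (this framing is an illustration, not taken from the post under discussion): treat a self-referential sentence as a function from its own assumed truth value to whether what it asserts then holds. A consistent truth value is a fixed point of that function. The Liar has no fixed point at all, and the Truth-teller has two, so neither gets a well-defined value without some rule that excludes it.

```python
from typing import Callable, List


def consistent_values(claim: Callable[[bool], bool]) -> List[bool]:
    """Truth values v for which a sentence asserting claim(v) about itself would indeed have value v."""
    return [v for v in (True, False) if claim(v) == v]


def liar(v: bool) -> bool:
    # "This sentence is false": if it has value v, what it asserts holds exactly when v is False.
    return not v


def truth_teller(v: bool) -> bool:
    # "This sentence is true": what it asserts holds exactly when v is True.
    return v


print(consistent_values(liar), consistent_values(truth_teller))
```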

Who is advocating for regulations?

...

Non-libertarians...tend to have somewhat more optimistic views on how likely things are to go well

...

Libertarians...tend to have more pessimistic views on our likelihood of making it through.

This claimed correlation between libertarianism and pessimism seemed surprising to me until I noticed that actually since we are conditioning on advocating-for-regulations, Berkson's Bias would make this correlation appear even in a world where libertarianism and pessimism were completely uncorrelated in the general population.
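A quick simulation of the Berkson's Bias point. The assumptions are all hypothetical: libertarianism and pessimism are independent standard-normal scores in the general population, and someone advocates for regulation when their pessimism outweighs their libertarianism. Among the advocates, the two traits come out clearly positively correlated even though they are uncorrelated overall.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(0)
# (libertarianism, pessimism) drawn independently for each person.
people = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(100_000)]

# Hypothetical selection rule: advocate for regulation if pessimism exceeds libertarianism.
advocates = [(lib, pes) for lib, pes in people if pes - lib > 0]

lib_all, pes_all = zip(*people)
lib_adv, pes_adv = zip(*advocates)
print(round(pearson(lib_all, pes_all), 3), round(pearson(lib_adv, pes_adv), 3))
```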

I just noticed that this fictional game is surprisingly similar to Marvel Snap (which was released later the same year); I assume based on the timing that this is a coincidence but I thought it was amusing.

you might be mindkilled by the local extinctionists

+1(agreement) and -1(tone): you are correct that good arguments against doom exist, but this way of writing about it feels to me unnecessarily mean to both OP and to the 'extinctionists'

from here: "at which point their shares will pay out $100 if they’re revealed to be in the mafia."

from the linked rules doc: "the top-priced player will be executed. Everyone who owns shares in that player will receive $100 per share."

Is the payout conditional or unconditional?

The link labeled "Calibration Trivia Sets" goes to a single slideshow labeled "Calibration Trivia Set 1 TF" rather than a folder with multiple sets; I assume (with 95% probability :) ) that this is a mistake?

Seems to me like avoiding this fallacy is also basically the entire starting premise of Effective Altruism. For most broad goals like "reduce global poverty", there are charities out there that are thousands of times more effective than other ones, so the less effective ones are the epsilons. Yes they are still "good" - sign(epsilon) is positive - but the opportunity cost (that dollar could have done >1000x more elsewhere) is unusually clear.

The title is definitely true: humans are irrational in many ways, and an AGI will definitely know that. But I think the conclusions from this are largely off-base.

- the framing of "it must conclude we are inferior" feels like an unnecessarily human way of looking at it? There are a bunch of factual judgements anyone analyzing humans can make, some that have to do with ways we're irrational and some that don't (can we be trusted to keep to bargains if one bothered to make them? maybe not. Are we made of atoms which could be used for other things? yes); once you've answered these kinds of individual questions, I'm not sure what you gain by summing it up with the term 'inferior', or why you think that the rationality bit in particular is the bit worth focusing on when doing that summation.

- there is nothing inherently contradictory in a "perfectly rational" agent serving the goals of us "irrational" agents. When I decide that my irrational schemes require me to do some arithmetic, the calculator I pick up does not refuse to function on pure math just because it's being used for something "irrational". (Now whether we can get a powerful AGI to do stuff for us is another matter; "possible" doesn't mean we'll actually achieve it.)

Typo: "fit the bill" and "fitting the bill" -> "foot the bill" and "footing the bill"

We humans dominate the globe but we don’t disassemble literally everything (although we do a lot) to use the atoms for other purposes.

There are two reasons why we don't:

- We don't have the resources or technology to. For example there are tons of metals in the ground and up in space that we'd love to get our hands on but don't yet have the tech or the time to do so, and there are viruses we'd love to destroy but we don't know how. The AGI is presumably much more capable than us, and it hardly even needs to be more capable than us to destroy us (the tech and resources for that already exist), so this reason will not stop it.

- We don't want to. For example there are some forests we could turn into useful wood and farmland, and yet we protect them for reasons such as "beauty", "caring for the environment", etc. Thing is, these are all very human-specific reasons, and:

Isn’t it arguable that ASI or even AGI will have a better appreciation for systems ecology than we do…

No. Sure it is possible, as in it doesn't have literally zero chance if you draw a mind at random. (Similarly a rocket launched in a random direction could potentially land on the moon, or at least crash into it.) But there are so many possible things an AGI could be optimizing for, and there is no reason that human-centric things like "systems ecology" should be likely, as opposed to "number of paperclips", "number of alternating 1s and 0s in its memory banks", or an enormous host of things we can't even comprehend because we haven't discovered the relevant physics yet.

(My personal hope for humanity lies in the first bullet point above being wrong: given surprising innovations in the past, it seems plausible that someone will solve alignment before it's too late, and also given some semi-successful global coordination things in the past (avoiding nuclear war, banning CFCs), it seems plausible that a few scary pre-critical AIs might successfully galvanize the world into successful delaying action for long enough that alignment could be solved.)

(This is of course just my understanding of his model, but) yes. The analogy he uses is that while you cannot predict Stockfish's next move in chess, you can predict for 'certain' that it will win the game. I think the components of the model are roughly:

- it is 'certain' that, given the fierce competition and the number of players and the incentives involved, somebody will build an AGI before we've solved alignment.

- it is 'certain' that if one builds an AGI without solving alignment first, one gets basically a random draw from mindspace.

- it is 'certain' that a random draw from mindspace doesn't care about humans

- it is 'certain' that, like Stockfish, this random draw AGI will 'win the game', and that since it doesn't care about humans, a won gameboard does not have any humans on it (because those humans were made of atoms which could be used for whatever it does care about)

part of this will be to make at least a nominal trade in every market I would want to be updated on

I don’t want to get updates on this so I’m sitting it out

For the future, you can actually follow/unfollow markets on Manifold independently of whether you've bet on them: they've moved this button around but it's currently on the "..." ("Market details") popup (which is found in the upper-right corner of a market's main pane).

I bought M270 of NO, taking out a resting buy order at 71%, causing me to note that you can sometimes get a better price buying in multiple steps where that shouldn’t be true. Weird.

According to the trade log at https://manifold.markets/ACXBot/41-will-an-image-model-win-scott-al, it looks like you bought 230 of NO (75%->70%), then a bot user responded in under a second by buying YES back up to 72%, and then you bought your final 40 of NO at the new price. There are several bots which appear to be watching and counteracting large swings, so I sometimes purposefully purchase in two stages like this to take advantage of exactly this effect. (Though I don't know the bots' overall strategies, and some appear to sometimes place immediate bets in the same direction as a large swing instead.)

The range of possible compute is almost infinite (e.g. 10^100 FLOPS and beyond). Yet both intelligences are in the same relatively narrow range of 10^15 - 10^30

10^15 - 10^30 is not at all a narrow range! So depending on what the 'real' answer is, there could be as little as zero discrepancy between the ratios implied by these two posts, or a huge amount. If we decide that GPT-3 uses 10^15 FLOPS (the inference amount) and meanwhile the first "decent" simulation of the human brain is the "Spiking neural network" (10^18 FLOPS according to the table), then the human-to-GPT ratio is 10^18 / 10^15 = 1,000, which is close to 140k / 175 = 800. Whereas if you actually need the single molecules version of the brain (10^43 FLOPS), there's suddenly an extra factor of 10^25 (ten septillion) lying around.

So if you strongly opine that there should be a lot more legal immigration and a lot more enforcement, that's "no strong opinion"? This is not satisfying, though of course there'll never be a way to make the options satisfy everyone <shrug>.

You might also be able to run even the original setup non-trivially cheaper (albeit still expensively) by just offering a lower wage? Given that for most of the time they can be doing whatever they want on their own computer (except porn, evidently; what's so bad about that anyway :P ) and that you were able to find 5 people at the current rate, I'd guess you could fill the position with someone(s) you're happy with at 10% or even 20% less.

What does one answer for "How would you describe your opinion on immigration?" if your preferred policy would include much more legal immigration and more enforcement against illegal immigration?

we may adopt never interrupt someone when they're doing something you want, even for the wrong reason.

Don't take this too far though; calling out bullshit may still be valuable even if it's bullshit that temporarily supports "your side": ideally doing so helps raise the sanity waterline overall, and more cynically, bullshit may support your opponents tomorrow and you'll be more credible calling it bullshit then if you also do so today.

But in this exact case, banning GoF seems good and also not using taxpayer dollars on negative-EV things seems good, so these Republicans are just correct this time.

Is this assumption based on facts or is it based on assumptions?

GPT teaches rationality on hard mode

AI in general is meant to do what we cannot do - else we would have done it, already, without AI - but to "make things better", that we could very readily have done, already.

Strong disagree, for two related reasons:

AI in general is meant to do what we cannot do

- Why must AI only be used for things we cannot do at all? e.g. the internet has made it much easier to converse with friends and family around the world; we could already do that beforehand in inferior or expensive ways (voice-only calls, prohibitively-frequent travel) but now we can also do it with easy video calling, which I think pretty clearly counts as "make things better". It would be silly to say "the internet is meant to do what we cannot do, and we could very readily have already been calling home more often without the internet, so the internet can't make things better".

to "make things better", that we could very readily have done... we certainly had many opportunities to create such a civilization... [etc]

- Imagine a trade between two strangers in a setting without atomic swaps. What is the "upside" of a trusted third-party escrow agent? After all, whichever trader must hand over their goods first could 'very readily' just decide to trust the second trader instead of mistrusting them. And then that second trader could 'very readily' honestly hand over their goods afterward instead of running away with everything. Clearly since they didn't come up with that obvious solution, they don't really want to trade, and so this new (social) technology of escrow that would allow them to do so isn't actually providing a real "upside".

Devil's Advocate in support of certain CVS-style recoup-our-commitment donations:

Suppose that all the following are true:

- CVS giving to charity in some form is reasonable, and a classic donation-matching drive would have been one reasonable method

- CVS internal predictions suggest that a matching drive would generate ~$5m of customer donations, which they'd then have to match with ~$5m of their own

- A donation of exactly $10m is more useful to the recipient than the uncertainty of a donation drive with EV $10m, because the recipient can confidently budget around the fixed amount

In this case, instead of running the drive and donating ~$10m at the end, it seems pretty reasonable to donate $10m up front and then ask for customer donations afterward? And while a CDT agent might now refuse to donate because the donation goes to CVS and not to the charity, an LDT agent who would have donated to the matching drive should still donate to this new version because their being-and-having-been the kind of agent who would do that is what caused CVS to switch to this more useful fixed-size version.

(Though even if you buy the above, it would still behoove the retailer to be transparent about what they're doing; that plus the "retailers take a massive cut" argument seems like pretty good reasons to avoid donating through retailers anyway.)

C-4 can explode; undecided voters think this makes C-4 better than B-4.

Similar opinion here - OP is surely doing somebody a useful service by creating a cleaner and more easily available transcript, but LW does not seem the right place for it.

I am not at all doubting your unpleasant experiences with some EA men. But when I read this, one thing I wonder is how much this is actually correlated with EA, vs just being a 'some men everywhere are jerks' thing? (I am not at all condoning being a jerk - saying it might be common does not mean I'm also saying it's ok!) But e.g. I would not at all be surprised to find there are (to pick some arbitrary groups) some female doctors writing on their doctor forum that

From experience it appears that, a ‘no’ once said is not enough for many male doctors.

and that there are female chess players writing on their chess forum that

So how do these men find sexual novelty? Chess meet ups of course.

I do agree that the polyamory thing is definitely correlated with EA, but I'm not actually sure it's very relevant here? E.g. would it be any better if the jerks aggressively propositioning you were serial monogamist jerks rather than poly jerks?

To be clear though, I certainly don't have any evidence that it's not an EA-specific problem; I am legitimately asking for your (and others') thoughts on this.

EA is an optimization of altruism with “suboptimal” human tendencies like morality and empathy stripped from it

I so utterly disagree with this statement. Indeed I think one can almost summarize EA as the version of altruism that's been optimized for morality, instead of solely empathy (though it includes that too!).

we need some mechanism between them - the system of ball, hill, and their dynamics.

A brain seems to be full of suitable mechanisms? e.g. while we don't have a good model yet for exactly how our mind is produced by individual neurons, we do know that neurons exhibit thresholding behavior ('firing'): remove atoms one at a time and usually nothing significant will happen, until suddenly you've removed exactly enough that one neuron no longer fires, and in theory you can get arbitrarily large differences in what happens next.

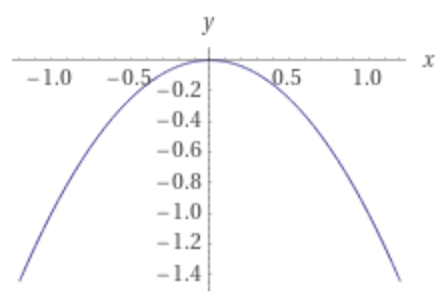

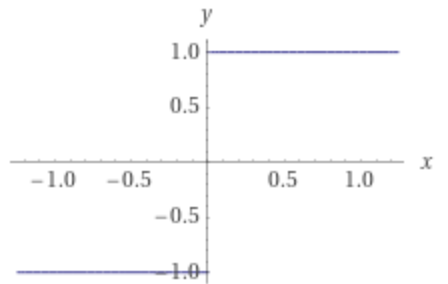

A ball sitting on this surface:

has a direction of travel given by this:

In other words, continuous phenomena can naturally lead to discontinuous phenomena. At the top of that curve, moving the ball by one Planck length causes an infinite divergence in where it ends up. So where does that infinite divergence "come from", and could the same answer apply to your brain example?
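A minimal numeric sketch of the same effect. It assumes a hypothetical double-well surface V(x) = (x^2 - 1)^2 as a stand-in for the pictured curve, with the ball simply rolling downhill (gradient flow, integrated with small Euler steps). The dynamics are perfectly smooth, yet two starting positions 10^-12 apart on either side of the peak end up in different valleys, and a ball placed exactly on the peak never moves.

```python
def slope(x: float) -> float:
    """V'(x) for the hypothetical surface V(x) = (x**2 - 1)**2."""
    return 4 * x * (x * x - 1)


def settle(x0: float, dt: float = 0.01, steps: int = 5000) -> float:
    """Roll the ball downhill from x0 and return where it ends up."""
    x = x0
    for _ in range(steps):
        x -= dt * slope(x)
    return x


print(settle(1e-12), settle(-1e-12), settle(0.0))
```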

So if you rejected mind-body continuity, you'd have to admit that some non-infinitesimal mental change can have an infinitesimal (practically zero) effect on the physical world. So basically you'd be forced into epiphenomenalism.

I don't think there's a principled distinction here between "infinitesimal" and "non-infinitesimal" values. Imagine we put both physical changes and mental changes on real-valued scales, normalized such that most of the time, a change of x physical units corresponded to a change of approximately x mental units. But then we observe that in certain rare situations, we can find changes of .01 physical units that correspond to 100 mental units. Does this imply epiphenomenalism? What about 10^(-3) -> 10^4? So it feels like the only thing I'm forced to accept when rejecting the continuity postulate is that in the course of running a mind on a brain, while most of the time small changes to the brain will correspond to small changes to the mind, occasionally the subjective experience of the mind changes by more than one would naively expect when looking at the corresponding tiny change to the brain. I am willing to accept that. It's very different from the normal formulation of epiphenomenalism, which roughly states that you can have arbitrary mental computation without any corresponding physical changes, i.e. a mind can magically run without needing the brain. (This version of epiphenomenalism I continue to reject.)

I don't know if there are 'natural' events with irrational probabilities, but I think we can construct an artificial one? Roughly, let X be the event that a uniformly-random number in [0,10) is < pi; then P(X)=pi/10.* So if you were forced to give X a rational probability, what probability would you choose?

*One can't actually produce a uniformly-random real number, but here's a real-world procedure that should be equivalent to X: start generating an infinite string of digits v_i one at a time using a d10. For each digit, if it is less than the i'th digit of pi, return YES; if it is greater, return NO; if it is equal, continue by rolling the next digit. So e.g. a roll of 2 is an immediate YES; a roll of 3 -> 1 -> 7 is a NO. This procedure takes unbounded time, but with probability 1 it terminates eventually.
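The d10 procedure above, sketched in code. Assumptions: the d10's faces are labeled 0-9, and only a stored 50-digit prefix of pi is used, so the sketch gives up (raises) in the 10^-50 case where every one of those rolls matches pi. The Monte Carlo estimate at the end should land near pi/10 ≈ 0.314.

```python
import math
import random
from typing import Iterable, Iterator

# Leading digits of pi, starting with the integer part (3).
PI_DIGITS = "31415926535897932384626433832795028841971693993751"


def decide(rolls: Iterable[int]) -> bool:
    """Compare d10 rolls (0-9) to successive digits of pi; True means YES (X occurred)."""
    for roll, pi_digit in zip(rolls, PI_DIGITS):
        d = int(pi_digit)
        if roll < d:
            return True
        if roll > d:
            return False
        # Equal: move on to the next roll and the next digit of pi.
    raise RuntimeError("ran out of rolls or stored digits of pi before deciding")


def d10_stream(rng: random.Random) -> Iterator[int]:
    """An endless sequence of fair d10 rolls."""
    while True:
        yield rng.randrange(10)


rng = random.Random(42)
trials = 100_000
estimate = sum(decide(d10_stream(rng)) for _ in range(trials)) / trials
print(estimate, math.pi / 10)
```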

I ran a very small Petrov Day gathering this year in Champaign-Urbana. (We had 5-6 people I think?) I put a Staples "Easy" button on the table and said that if anyone pressed it, the event would end immediately and we would all leave without talking. (Or at least that I would leave; obviously I couldn't make anyone else.) No one pressed the button.

Typo: yolk -> yoke