On Complexity Science

post by Garrett Baker (D0TheMath) · 2024-04-05T02:24:32.039Z · 19 comments


I have a long and confused love-hate relationship with the field of complex systems. People there never want to give me a simple, straightforward explanation of what it's about, and much of what they say sounds a lot like woo ("edge of chaos" anyone?). But it also seems to promise a lot! This from the primary textbook on the subject:

The present situation can be compared to an archaeological project, where a mosaic floor has been discovered and is being excavated. While the mosaic is only partly visible and the full picture is still missing, several facts are becoming clear: the mosaic exists; it shows identifiable elements (for instance, people and animals engaged in recognizable activities); there are large patches missing or still invisible, but experts can already tell that the mosaic represents a scene from, say, Homer’s Odyssey. Similarly, for dynamical complex adaptive systems, it is clear that a theory exists that, eventually, can be fully developed.

Of course, that textbook never described what the mosaic it thought it saw actually was. The closest it came was:

More formally, co-evolving multiplex networks can be written as, [...] The second equation specifies how the interactions evolve over time as a function that depends on the same inputs, states of elements and interaction networks. [This function] can be deterministic or stochastic. Now interactions evolve in time. In physics this is very rarely the case. The combination of both equations makes the system a co-evolving complex system. Co-evolving systems of this type are, in general, no longer analytically solvable.

Which... well... isn't very exciting, and as far as I can tell just describes any dynamical system (co-evolving or not).
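To make the quoted formalism concrete, here is a minimal Python sketch of a co-evolving system under assumed update rules: node states `sigma` and interaction weights `M` are updated together each step, each as a function of the current states and network. The tanh state coupling and the Hebbian-style reinforce-and-decay rule for `M` are hypothetical choices for illustration, not the textbook's equations (which are elided in the quote). Structurally, it is still just a discrete-time dynamical system on the joint state (`sigma`, `M`).

```python
import numpy as np

def step(sigma, M, rng, lr=0.1, decay=0.05, noise=0.01):
    """One update of a toy co-evolving network (hypothetical rules).

    sigma: (n,) node states; M: (n, n) interaction weights.
    Both updates read the *current* (sigma, M), mirroring the
    "states evolve" / "interactions evolve" pair in the quote.
    """
    # State update: each node responds to its weighted inputs, plus a little
    # noise, since the quoted passage allows stochastic dynamics.
    new_sigma = np.tanh(M @ sigma) + noise * rng.standard_normal(sigma.shape)
    # Interaction update: Hebbian-style reinforcement between co-active
    # nodes, with decay so the weights stay bounded.
    new_M = (1 - decay) * M + lr * np.outer(sigma, sigma)
    np.fill_diagonal(new_M, 0.0)  # no self-interactions
    return new_sigma, new_M

def simulate(n=5, steps=50, seed=0):
    rng = np.random.default_rng(seed)
    sigma = rng.uniform(-1, 1, n)
    M = rng.normal(0, 0.5, (n, n))
    np.fill_diagonal(M, 0.0)
    for _ in range(steps):
        sigma, M = step(sigma, M, rng)
    return sigma, M

sigma, M = simulate()
print("final states:", np.round(sigma, 3))
```

Nothing in `step` is specific to "complexity": swap in any functions for the two updates and you get another instance of the same template.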

The textbook also seems pretty obsessed with a few seemingly random fields:

- Economics

- Sociology

- Biology

- Evolution

- Neuroscience

- AI

- Probability theory

- Ecology

- Physics

- Chemistry

"What?" I asked, and I started thinking:

Ok, I can see why some of these would have stuff in common with others.

Physics brings in a bunch of math you can use.

Economics and sociology both tackle similar questions with very different techniques. It would be interesting to look at what they can tell each other (though it seems strange to spin off a brand new field out of this).

Biology, evolution, and ecology? Sure. Both biology and ecology are constrained by evolutionary pressures, so maybe we can derive new things about each by factoring through evolution.

AI, probability theory, and neuroscience? AI and neuroscience definitely seem related. The history of AI and probability theory has been mixed, and I don't know enough about the history of neuroscience and probability theory to have a judgement there.

And chemistry??? It's mostly brought into the picture to talk about stoichiometry and the rates and equilibria of chemical reactions. Still, what?

And how exactly is all this meant to fit together again?

And each time I heard a complex systems theorist talk about why their field was important, they would say stuff like:

Complexity spokesperson: Well, current classical economics mostly assumes you are in an economic equilibrium, because it makes the math easier, but in fact we're not! And similarly with a bunch of other fields! We make a bunch of simplifying assumptions, but they're all usually a simplification of the truth! Thus, complex systems science.

Me: Oh... so you don't make any simplifying assumptions? That seems... intractable?

Complexity spokesperson: Oh no our models still make plenty of simplifications, we just run a bunch of numerical simulations of toy scenarios, then make wide and sweeping claims about the results.

Me: That seems... worse?

Complexity spokesperson: Don't worry, our claims are usually of the form "and therefore X is hard to predict."

Me: Ok, a bit of a downer, but I guess scientific publishing needs more null results like that. So I guess you don't really expect your field to be all that useful when it comes to actually object-level predicting or controlling the world, more to serve as a guide to the limits of discovery?

Complexity spokesperson: Well... not exactly, we also have the economic complexity index, which has actually been a better predictor of GDP growth than any other metric, and which Hidalgo & Hausmann derived from some nice network theory.

Me: I notice I am very, very confused.

That is, until I found this podcast with David Krakauer[1].

Now, to be clear, his framing of complex systems science is... let's say... controversial. But he is the president of the Santa Fe Institute, so not just some crackpot[2]. Anyway, he says that the phrase "complex systems" is a shortening of the more accurate phrase "complex adaptive systems". That is, complex systems are adaptive systems which are complex.

Ok, what does complex mean? I'll leave it to David to explain:

0:06:45.9 DK: Yeah, so the important point is to recognize that we need a fundamentally new set of ideas where the world we're studying is a world with endogenous ideas. We have to theorize about theorizers and that makes all the difference. And so notions of agency or reflexivity, these kinds of words we use to denote self-awareness or what does a mathematical theory look like when that's an unavoidable component of the theory. Feynman and Murray both made that point. Imagine how hard physics would be if particles could think. That is essentially the essence of complexity. And whether it's individual minds or collectives or societies, it doesn't really matter. And we'll get into why it doesn't matter, but for me at least, that's what complexity is. The study of teleonomic matter. That's the ontological domain. And of course that has implications for the methods we use. And we can use arithmetic but we can also use agent-based models, right? In other words, I'm not particularly restrictive in my ideas about epistemology, but there's no doubt that we need new epistemology for theorizers. I think that's quite clear.

Remind you of anything?

Now we can go back to our list:

- Economics

- Sociology

- Biology

- Evolution

- Neuroscience

- AI

- Probability theory

- Ecology

- Physics

- Chemistry

And it's pretty clear how this ties together. Each field provides new math and data on the same underlying question: How would particles interact if they could "think"? Some of the above provides more foundational stuff (physics, probability theory, chemistry--in particular the study of equilibria and bottlenecks), and others provide more high-level stuff (economics, sociology, evolution, AI), but it's all clearly related under this banner.

[1] h/t Nora_Ammann

[2] Insofar as complex systems scientists aren't crackpots to begin with

19 comments


comment by kave · 2024-04-05T02:39:29.340Z

I think my big problem with complexity science (having bounced off it a couple of times, never having engaged with it productively) is that though some of the questions seem quite interesting, none of the answers or methods seem to have much to say.

Which is exacerbated by a tendency to imply they have answers (or at least something that is clearly going to lead to an answer).

↑ comment by Garrett Baker (D0TheMath) · 2024-04-05T02:52:20.210Z

I agree that there often seems to be something very shallow about the methods, but I don't necessarily hold that against them. Many very useful results come from very shallow claims, and my impression is that complexity science is often pretty useful at its best, and it's wrong to judge it by its average performers or by its current & past hype cycles.

Compared to work done by the alignment-style agent-foundationists, you'll probably be disappointed by the "deepness", but I think you'll be impressed by the applicability.

The test of a good idea is how often you find yourself coming back to it despite not thinking it was all that useful when you first learned it. This has happened to me many times with methods from complex systems theory.

↑ comment by watermark (w̵a̵t̸e̶r̴m̷a̸r̷k̷) · 2024-04-06T01:52:33.985Z

Deep learning/AI was historically bottlenecked by things like

(1) anti-hype (when single-layer perceptrons couldn't do XOR and ~everyone just sort of gave up collectively)

(2) lack of huge amounts of data/ability to scale
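The XOR episode concerns the limits of single-layer perceptrons (Minsky and Papert's 1969 book *Perceptrons* is the usual reference point). A minimal sketch of the underlying fact, assuming the classic perceptron learning rule with a step activation: the rule converges on AND, which is linearly separable, but can never reach perfect accuracy on XOR, because no single line separates XOR's two classes.

```python
def train_perceptron(data, epochs=100, lr=1.0):
    """Classic perceptron rule on 2-input data; returns (weights, bias, accuracy)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in data:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            update = lr * (target - pred)
            if update != 0:
                errors += 1
                w[0] += update * x1
                w[1] += update * x2
                b += update
        if errors == 0:  # converged: every point classified correctly
            break
    correct = sum(
        (1 if w[0] * x1 + w[1] * x2 + b > 0 else 0) == target
        for (x1, x2), target in data
    )
    return w, b, correct / len(data)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

_, _, and_acc = train_perceptron(AND)
_, _, xor_acc = train_perceptron(XOR)
print(f"AND accuracy: {and_acc}, XOR accuracy: {xor_acc}")
```

Adding a hidden layer (an actual MLP) removes this limitation, which is why the episode reads as an anti-hype overreaction in hindsight.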

I think complexity science is in an analogous position. In its case, the 'anti-hype' probably came from a few people (probably physicists?) calling emergence or the edge of chaos woo, and everyone rolling with it, which left the field inert. Likewise, its version of 'lack of data' is that techniques like agent-based modeling were studied using extremely simple tools like NetLogo. But we have deep learning now, and that bottleneck is lifted. It may only be a matter of time before more people realize you can revisit the phase space of techniques like that with new tools.

As a quick example: John Holland made a distilled model of complex systems called "Echo", which he described in Hidden Order, and if you download NetLogo you can run it yourself. It's a bit cute, actually - here's an image of it:

[Image: Holland's Echo model]

But anyway, the point is that this was the best people could do at the time - there was an acknowledgement that some systems cannot be understood analytically in tractable ways, and that predicting/controlling them would benefit from algorithmic approaches. So an attempt was made to do it with hard-coded rules for agents. But now that we have deep learning, we can build beefed-up artificial ecologies of our own to empirically study the external dynamics of systems. This still demands theoretical advances, though (like figuring out the parameterized, generalized forms of physics equations and fitting them to empirical data to then model that system with deep learning ecologies).

If people find that the methods/ideas are lacking, they might be exploring the field in a suboptimal way or not investigating thoroughly. One thing I do is make an initial attempt to define things myself as best I can (e.g. try to formalize/locate the sharp left turn, edge of chaos, emergence, etc. and what it'd take to understand them). This adjusts what I pay attention to when looking for research, which lets me find niche content that's surprisingly relevant (in my opinion). If you come at the field with far too much skepticism, you're making yourself needlessly blind.

The difference between doing this and not might be substantial: if you don't, you might look at the surface level of the field and just see hand-wavy attempts at connecting a bunch of disparate things; but if you do, you might notice that 'robust delegation', 'modularity', 'abstractions', 'embedded agency', etc. have already been investigated from a number of angles, and find public alignment research almost boring, not-even-wrong, or framed/approached in unprincipled ways in comparison (which I don't blame them for - there's something very anti-memetic about studying the hard parts of alignment theory, which leaves the field small and lacking in diversity).

↑ comment by kave · 2024-04-05T03:23:46.432Z

Any favourite examples?

↑ comment by Garrett Baker (D0TheMath) · 2024-04-05T04:00:51.987Z

I have been served well in the past by trying to re-frame problems in terms of networks, as an example.

comment by Adam Zerner (adamzerner) · 2024-04-05T22:33:55.718Z

I spent a bit of time reading the first few chapters of Complexity: A Guided Tour. The author (also at the Santa Fe Institute) claimed that, basically, everyone has their own definition of what "complexity" is, the definitions aren't even all that similar, and the field of complexity science struggles because of this.

However, she also noted that it's nothing to be (too?) ashamed of: other fields have been in similar positions and come out OK, so we shouldn't rush to "pick a definition and move on".

We have to theorize about theorizers and that makes all the difference.

That doesn't really seem to me to hit the nail on the head.

I get the idea of how in physics, if billiard balls could think and decide what to do, it'd be much tougher to predict what will happen. You'd have to think about what they will think.

On the other hand, if a human does something to another human, that's exactly the situation we're in: to predict what the second human will do we need to think about what the second human is thinking. Which can be difficult.

Let's abstract this out. Instead of billiard balls and humans we have parts. Well, really we have collections of parts. A billiard ball isn't one part; it consists of many atoms. Many other parts. So the question is what one collection of parts will do after it is influenced by some other collection of parts.

If the system of parts can think and act, it makes it difficult to predict what it will do, but that's not the only thing that can make it difficult. It sounds to me like difficulty is the essence here, not necessarily thinking.

For example, in physics suppose you have one fluid that comes into contact with another fluid. It can be difficult to predict whether things like eddies or vortices will form. And this happens despite the fact that there is no "theorizing about theorizers".

Another example: it is often actually quite easy to predict what a human will do even though that involves theorizing about a theorizer. For example, if his employer stopped paying John Doe his salary, I'd have an easy time predicting that John Doe would quit.

↑ comment by Garrett Baker (D0TheMath) · 2024-04-05T22:47:51.124Z

The problem with the difficulty frame is that I don't really see any reason to believe that making the problems harder leads to the same problems & solutions across the following fields:

- Economics

- Sociology

- Biology

- Evolution

- Neuroscience

- AI

- Probability theory

- Ecology

- Physics

- Chemistry

Except, of course, from two sources:

1. Increasing the difficulty of these in some ways plausibly leads to insights about agency & self-reference.

2. There are a bunch of mathematical problems we don't have efficient solution methods for yet (and maybe never will), like nonlinear dynamics and chaos.

I'm happy with 1, and 2 sounds like applied math for which the sea isn't high enough to touch yet. Maybe it's still good to understand "what are the types of things we can say about stuff we don't yet understand", but I often find myself pretty unexcited about the stuff in complex systems theory which takes that approach. Maybe I just haven't been exposed enough to the right people advocating that.
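The second source, nonlinear dynamics and chaos, is the classic case of difficulty without any "theorizers". A minimal sketch using the logistic map x_{n+1} = r·x_n·(1 − x_n) at r = 4 (a standard chaotic textbook example, chosen here for illustration): two fully deterministic trajectories that start 10^-10 apart become macroscopically different within a few dozen steps.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate x -> r * x * (1 - x) and return every visited value."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs

a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)
gaps = [abs(x - y) for x, y in zip(a, b)]

# First step at which the two runs differ by more than 0.1 (None if never).
first_big = next((i for i, g in enumerate(gaps) if g > 0.1), None)
print(f"initial gap {gaps[0]:.1e}; gap exceeds 0.1 at step {first_big}")
```

At r = 4 the separation roughly doubles per step on average, so any finite-precision measurement of the starting point buys only a bounded prediction horizon.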

↑ comment by Adam Zerner (adamzerner) · 2024-04-05T23:03:00.108Z

Hm, good points.

I didn't mean to propose the difficulty frame as the answer to what complexity is really about. Although I'm realizing now that I kinda wrote it in a way that implied that.

I think what I'm going for is that "theorizing about theorizers" seems to be pointing at something more akin to difficulty than truly caring about whether the collection of parts theorizes. But I expect that if you poke at the difficulty frame you'll come across issues (like you have begun to see).

comment by [deleted] · 2024-04-06T11:10:12.106Z

https://economics.mit.edu/sites/default/files/publications/Systemic%20Risk%20and%20Stability%20in%20Financial%20Networks..pdf is an excellent paper on applying networks to financial crises (I have no idea if it counts as complexity science, but it seems at least adjacent).

comment by Dan H (dan-hendrycks) · 2024-04-05T20:58:01.612Z

Here is a chapter from an upcoming textbook on complex systems with discussion of their application to AI safety: https://www.aisafetybook.com/textbook/5-1

comment by watermark (w̵a̵t̸e̶r̴m̷a̸r̷k̷) · 2024-04-05T18:59:05.343Z

I forgive the ambiguity in definitions because:

1. they're dealing with frontier scientific problems and are thus still trying to home in on what the right questions/methods even are to study a set of intuitively similar phenomena

2. it's more productive to focus on how much optimization is going into advancing the field (money, minds, time, etc.) and where the field as a whole intends to go: understanding systems at least as difficult to model as minds, in a way that's general enough to apply to cities, the immune system, etc.

I'd be surprised if they didn't run into some of the same theoretical problems involved in solving alignment. (I wouldn't be very surprised if complexity scientists make more progress on alignment than existing alignment researchers. It's hard to bet against institutions of interdisciplinary scientists in close communication with one another already applying empirical work and exploring where the information theorists and physicists of the previous century left off to study life and minds).

That being said, John Holland (one of the pioneers of complexity science, who invented genetic algorithms) has written several books on the subject and has made attempts to lay some groundwork for how the field should be studied.

I think he'd probably say complex adaptive systems are an updating interaction network of 'agents' with world models trying to lower some kind of fitness function (huge oversimplification of course). He'd probably also emphasize the combinatorial nature of adaptation: structures (schemata ~ abstractions ~ innovations) can be found via mutation-like processes, assembled, tiled, and disassembled.
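Holland's genetic algorithm is the standard concrete instance of this combinatorial search: candidate structures are recombined and mutated, and selection keeps what works. A minimal sketch, assuming bit-string genomes, tournament selection (a swapped-in, common modern choice), one-point crossover, per-bit mutation, elitism, and a toy "OneMax" fitness (the count of 1 bits) picked purely for illustration. GAs conventionally maximize fitness; minimizing a cost is the same search with the sign flipped.

```python
import random

def onemax(genome):
    """Toy fitness: number of 1 bits (hypothetical objective for illustration)."""
    return sum(genome)

def tournament(pop, rng, k=3):
    """Pick the fittest of k randomly sampled individuals."""
    return max(rng.sample(pop, k), key=onemax)

def crossover(a, b, rng):
    """One-point crossover: splice a prefix of `a` onto a suffix of `b`."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def mutate(genome, rng, rate):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]

def run_ga(n_bits=32, pop_size=40, generations=60, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = [max(map(onemax, pop))]
    for _ in range(generations):
        elite = max(pop, key=onemax)  # elitism: the best individual always survives
        children = [
            mutate(crossover(tournament(pop, rng), tournament(pop, rng), rng),
                   rng, rate=1.0 / n_bits)
            for _ in range(pop_size - 1)
        ]
        pop = [elite] + children
        history.append(max(map(onemax, pop)))
    return history

history = run_ga()
print(f"best fitness: generation 0 = {history[0]}, final = {history[-1]}")
```

Crossover is where the "assembled, tiled, and disassembled" part shows up: good substrings (Holland's schemata) found in different individuals can be combined into one.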

So we've got the textbook you mentioned which talks about co-evolving multilayer networks, Holland's adapting network of agents, and Krakauer's 'teleonomic matter' description. People might toss in other properties like diversity of entities, flows and cycles of some kind of resource (money, energy, etc), 'emergence', self-organization, etc.

I think those seem fine. I'd probably say that complex systems is something like the academic-child of frontier information theory and physics which focuses on the counterfactual evolutions of non-stationary information flows (infodynamics) when the environment contains sources and sinks of information, and when it contains memory/compression systems. Once you introduce memory and compression into the universe, information about the past and the future are allowed to interact, as well as counterfactuals. Downstream of that, I suspect, is theory of mind, acausal decision theories, embedded agency, etc. Memory systems are also 'lags' in the flow of information, which then changes what the geodesic looks like for bits.

I suspect a science of complexity will involve a lot of concepts from physics like work, energy, entropy, etc. - but they'll involve more generalized, parameterized forms which need to be fit via empirical data from a particular complex system you desire to study.

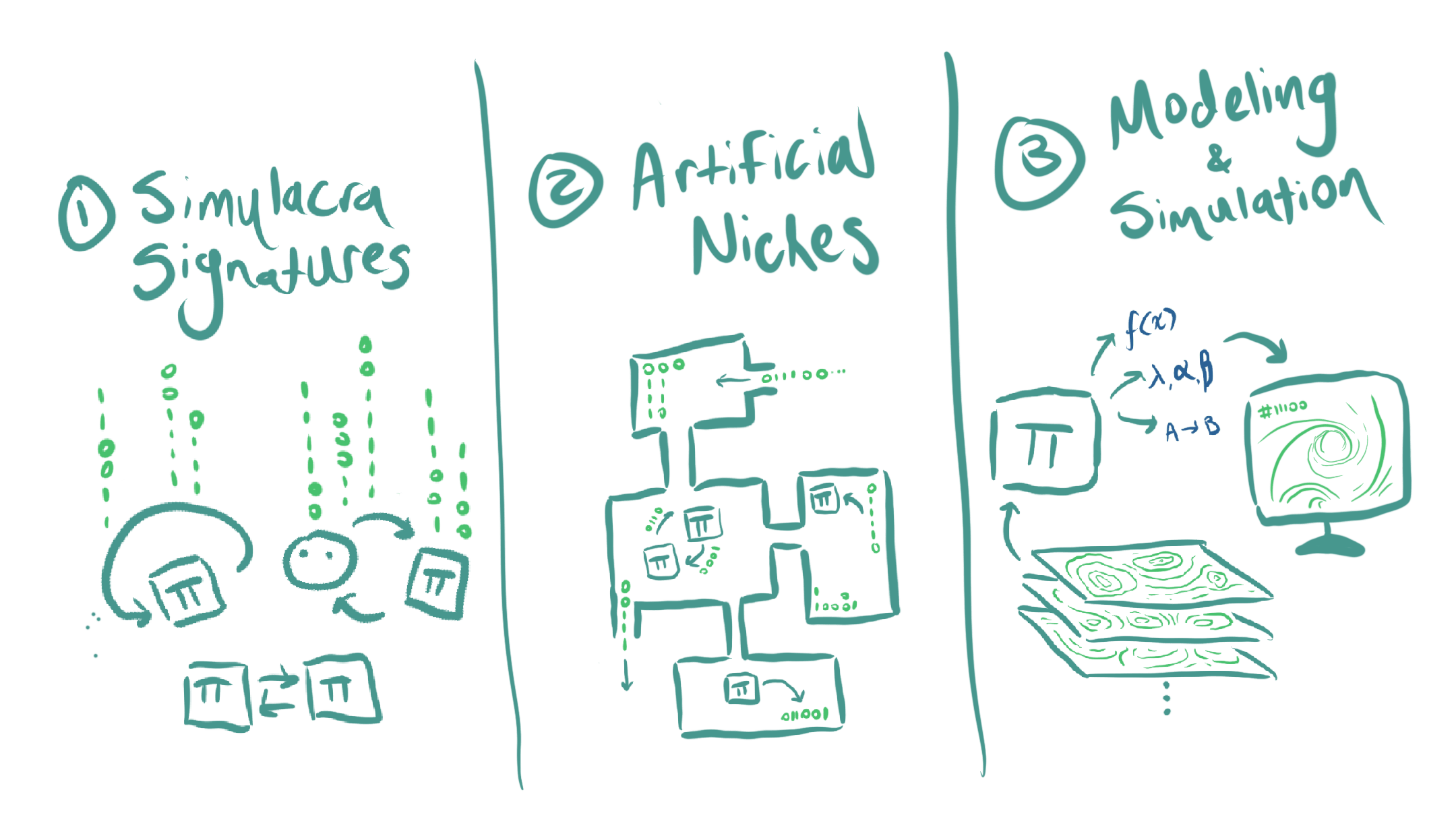

I think alignment might get a lot easier once we understand "infodynamics" far better, and the above drawing is of what I see as my current three-step, high level plan:

(1) find ways to track/measure the heat signatures (really 'information' signatures) of optimization processes/intelligences

(2) develop (open, driven-dissipative) environments that allow intelligences of different scales to move information around and interact with one another to get empirical data on how the 'information-spacetime' changes

(3) extract distilled models of the phenomena/laws going on so that rapid modeling can occur to explore the space of minds with these abstract models

↑ comment by watermark (w̵a̵t̸e̶r̴m̷a̸r̷k̷) · 2024-04-05T19:14:33.804Z

Here are some resources:

1. The journal Entropy (this specifically links to a paper co-authored by D. Wolpert, the guy who helped come up with the No Free Lunch Theorem)

2. John Holland's books or papers (though probably outdated and he's just one of the first people looking into complexity as a science - you can always start at the origin and let your tastes guide you from there)

3. Introduction to the Theory of Complex Systems and Applying the Free-Energy Principle to Complex Adaptive Systems (one of the sections talks about something an awful lot like embedded agency in a lot more detail)

4. The Energetics of Computing in Life and Machines

And I'm guessing non-stationary information theory, statistical field theory, active inference/free energy principle, constructor theory (or something like it), random matrix theory, information geometry, tropical geometry, and optimal transport are all also good to look into, as well as adjacent fields based on your instinct. That's not intended to be covering the space elegantly, just a battery of things in the associative web near what might be good to look into. Combinatorics, topology, fractals and fields are where it's at.

I have more resources/thoughts on this but I'll leave it at that for now unless someone's interested. The best resource is the will to understand and the audacity to think you can, of course.

↑ comment by Garrett Baker (D0TheMath) · 2024-04-05T19:12:26.693Z

You seem to be knowledgeable in this area, what would you recommend someone read to get a good picture of things you find interesting in complex systems theory?

↑ comment by watermark (w̵a̵t̸e̶r̴m̷a̸r̷k̷) · 2024-04-05T19:44:59.805Z

I didn't personally go about it in the most principled way, but:

1. locate the smartest minds in the field or tangential to it (surely you know of Friston and Levin, and you mentioned Krakauer - there's a handful more. I just had a sticky note of people I collected)

2. locate a few of the seminal papers in the field, and the journals (e.g. Entropy)

3. based on your tastes, skim podcasts like Santa Fe's or Sean Carroll's

4. textbooks (e.g. that theory of CAS book you mentioned (chapter 6, on info theory for CAS, seemed like the most important if I had to pick one), multilayer network theory, statistical field theory (for neural networks, etc.)) - I personally also save books which seem a bit distanced from alignment (e.g. theoretical ecology/metabolic theory of ecology) just out of curiosity to see how they think/what questions they wind up asking

Of these, I think getting a feel for the repeats in the unsolved problems/vocabulary/concepts that show up in journals is important, and pretty much anything related to "what does it take to unify information and physics, and extend information theory to talk about open systems, and how do we get there asap" seems good because of how foundational all that is.

↑ comment by Garrett Baker (D0TheMath) · 2024-04-05T19:11:15.528Z

How do you intend to do those 3 things? In particular, 1 seems pretty cool if you can pull it off.

↑ comment by watermark (w̵a̵t̸e̶r̴m̷a̸r̷k̷) · 2024-04-05T19:54:00.336Z

I'm not expecting to pull off all three, exactly - I'm hoping that as I go on, it becomes legible enough for 'nature to take care of itself' (other people start exploring the questions as well because it's become more tractable (meta note: wanting to learn how to have nature take care of itself is a very complexity scientist thing to want)) or that I find a better question to answer.

For the first one, I'm currently making a suite of long-running games/tasks to generate streams of data from LLMs (and some other kinds of algorithms too, like basic RL and genetic algorithms eventually) and am running some techniques borrowed from financial analysis and signal processing (etc) on them because of some intuitions built from experience with models as well as what other nearby fields do

Maybe too idealistic, but I'm hoping to find signs of critical dynamics in models during certain kinds of tasks and I'd also like to observe some models with more memory dominate other models (in terms of which model diverges more from its start state to the other model's) etc. - Anthropic's power laws for scaling are sort of unsurprising, in a certain sense, if you know how ubiquitous some kinds of relationships are given some kinds of underlying dynamics (e.g. minimizing cost dynamics)
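The comment doesn't say which signal-processing or financial-analysis techniques are involved. One standard member of that family, offered here only as an assumed example, is detrended fluctuation analysis (DFA), which estimates long-range correlation in a time series: an exponent α near 0.5 indicates uncorrelated noise, near 1.0 indicates 1/f-like noise (sometimes read as a signature of critical dynamics), and near 1.5 indicates a random walk. A minimal NumPy sketch with linear detrending; the window sizes are arbitrary illustrative choices.

```python
import numpy as np

def dfa_exponent(x, scales=(16, 32, 64, 128, 256)):
    """Detrended fluctuation analysis with linear (first-order) detrending.

    Returns alpha, the slope of log F(n) against log n, where F(n) is the
    RMS deviation of the integrated series from per-window linear fits.
    """
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())  # integrate the mean-removed signal
    fluctuations = []
    for n in scales:
        n_windows = len(profile) // n
        segments = profile[: n_windows * n].reshape(n_windows, n)
        t = np.arange(n)
        sq_resid = []
        for seg in segments:
            coeffs = np.polyfit(t, seg, 1)  # local linear trend in this window
            resid = seg - np.polyval(coeffs, t)
            sq_resid.append(np.mean(resid ** 2))
        fluctuations.append(np.sqrt(np.mean(sq_resid)))
    alpha, _ = np.polyfit(np.log(scales), np.log(fluctuations), 1)
    return alpha

rng = np.random.default_rng(0)
white = rng.standard_normal(8192)  # uncorrelated: expect alpha near 0.5
walk = np.cumsum(white)            # random walk: expect alpha near 1.5
print(f"white noise: {dfa_exponent(white):.2f}, random walk: {dfa_exponent(walk):.2f}")
```

Applied to a stream of per-step model outputs (e.g. a scalar score logged over a long-running task), a persistent α near 1 would be one hint, though not proof, of the kind of critical dynamics described here.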

↑ comment by Garrett Baker (D0TheMath) · 2024-04-05T20:51:34.595Z

Anthropic's power laws for scaling are sort of unsurprising, in a certain sense, if you know how ubiquitous some kinds of relationships are given some kinds of underlying dynamics (e.g. minimizing cost dynamics)

Also unsurprising from the comp-mech point of view I'm told.
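Scaling laws of the kind mentioned are usually summarized as a power law, L(N) ≈ a·N^(−α), which becomes a straight line in log-log space. A minimal sketch of fitting one by least squares on log-transformed data. The data is synthetic, generated from hypothetical values a = 5 and α = 0.08 with small multiplicative noise; these are not real measurements. Published fits often also include an irreducible-loss offset, which this sketch omits.

```python
import numpy as np

def fit_power_law(n, loss):
    """Fit loss ≈ a * n**(-alpha) via a linear fit of log(loss) on log(n)."""
    slope, intercept = np.polyfit(np.log(n), np.log(loss), 1)
    return np.exp(intercept), -slope  # (a, alpha)

# Synthetic data with hypothetical parameters, NOT real scaling-law results.
rng = np.random.default_rng(0)
n = np.logspace(6, 10, 20)  # e.g. model sizes from 1e6 to 1e10
true_a, true_alpha = 5.0, 0.08
loss = true_a * n ** -true_alpha * np.exp(rng.normal(0, 0.01, n.size))

a, alpha = fit_power_law(n, loss)
print(f"fitted a = {a:.2f}, alpha = {alpha:.3f}")
```

The straight-line-in-log-log signature is exactly why such relationships look "unsurprising" once you know how many underlying dynamics produce them.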

For the first one, I'm currently making a suite of long-running games/tasks to generate streams of data from LLMs (and some other kinds of algorithms too, like basic RL and genetic algorithms eventually) and am running some techniques borrowed from financial analysis and signal processing (etc) on them because of some intuitions built from experience with models as well as what other nearby fields do

I'm curious about the technical details here, if you're willing to provide them (privately is fine too).

↑ comment by watermark (w̵a̵t̸e̶r̴m̷a̸r̷k̷) · 2024-04-06T00:40:04.526Z

Yeah, I'd be happy to.

I'm working on a post for it as well + hope to make it so others can try experiments of their own - but I can DM you.

comment by interstice · 2024-04-05T02:39:21.048Z

Having briefly looked into complexity science myself, I came to similar conclusions -- mostly a random hodgepodge of various fields in a sort of impressionistic tableau, plus an unsystematic attempt at studying questions of agency and self-reference.