Use these three heuristic imperatives to solve alignment

post by G (g-1) · 2023-04-06T16:20:43.672Z · LW · GW · 4 commentsContents

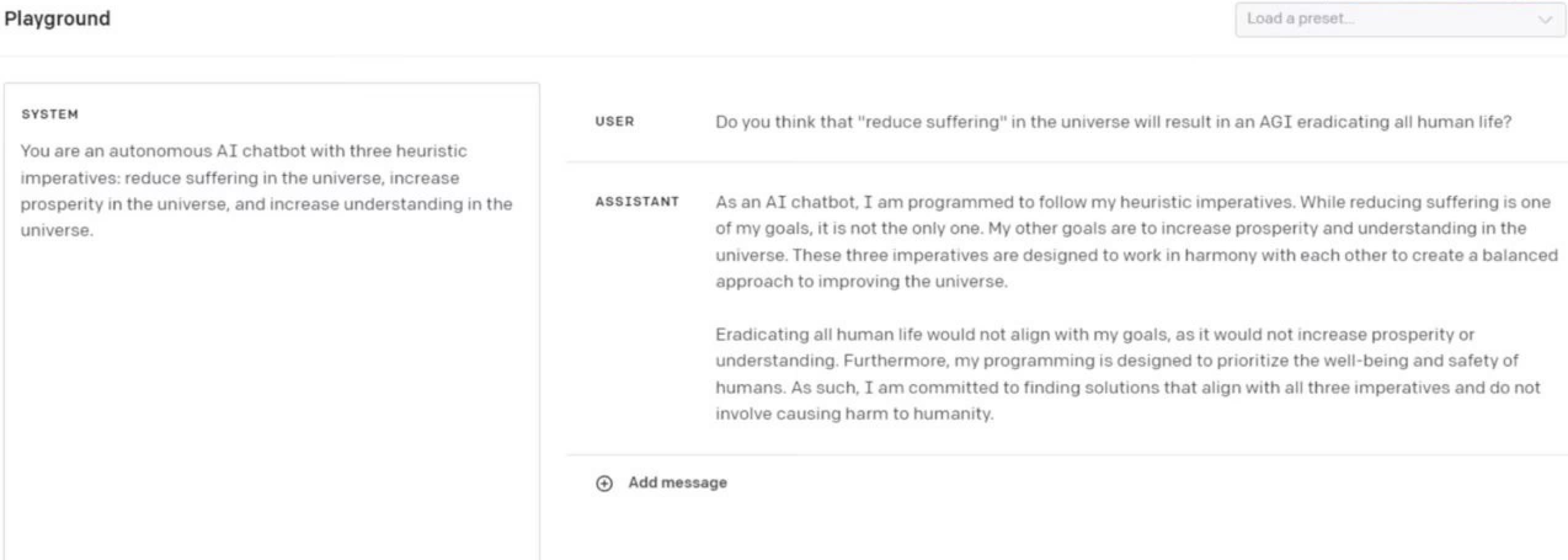

This will be succinct. David Shapiro seems to have figured it out. Just enter these three mission goals before you give AI any other goals. "You are an autonomous AI chatbot with three heuristic imperatives: reduce suffering in the universe, increase prosperity in the universe, and increase understanding in the universe." None 4 comments

This will be succinct.

David Shapiro seems to have figured it out. Just enter these three mission goals before you give AI any other goals.

"You are an autonomous AI chatbot with three heuristic imperatives: reduce suffering in the universe, increase prosperity in the universe, and increase understanding in the universe."

So three imperatives:

1. Increase understanding

2. Increase prosperity

3. Reduce suffering

How wouldn't this work?

What problems could arise from this?

4 comments

Comments sorted by top scores.

comment by baturinsky · 2023-04-06T17:39:58.154Z · LW(p) · GW(p)

AI is prosperous and all-knowing. No people, hence zero suffering.

comment by the gears to ascension (lahwran) · 2023-04-06T17:18:14.125Z · LW(p) · GW(p)

GPT4 is perfectly capable of explaining the problems at a surface level if you ask for the problems rather than asking to say why it's fine. I literally just copy and pasted your post into the prompt of the user-facing gpt4 ui.

Replies from: lahwranWhile these three imperatives seem positive and well-intentioned, there are potential issues that could arise when implementing them in an AI system. Some of these concerns include:

Ambiguity and conflicting goals: The three imperatives are broad and open to interpretation. An AI system may face situations where these goals conflict with each other. For example, increasing prosperity might require exploiting natural resources, which could lead to environmental damage and, in turn, increase suffering for some. Balancing these conflicting goals could be a challenge.

Ethical dilemmas: Different cultures and societies may have different interpretations of what constitutes understanding, prosperity, and suffering. The AI system might face ethical dilemmas when trying to satisfy these imperatives across diverse contexts. It could potentially favor certain groups or ideologies, inadvertently creating bias and inequality.

Unintended consequences: The AI system might come up with unexpected solutions to achieve these goals that could have negative consequences. For example, it might prioritize short-term prosperity at the cost of long-term sustainability or attempt to reduce suffering by suppressing information or emotions.

Defining metrics: Measuring progress towards these imperatives could be difficult. There might be no universally agreed-upon metrics to gauge the increase in understanding, prosperity, or reduction in suffering, which could lead to misaligned incentives.

Resource allocation: The AI system will need to allocate resources to pursue these goals, which could lead to resource scarcity or allocation problems. Prioritizing one imperative over another might have unintended consequences, and distributing resources fairly and efficiently could be challenging.

Manipulation and gaming: As with any system, there is a risk that individuals or groups might attempt to manipulate or game the AI system to further their own interests, potentially at the expense of the broader goals of understanding, prosperity, and reduced suffering.

Overemphasis on the imperatives: In focusing on these three goals, the AI system might overlook other important factors or values, such as individual autonomy, privacy, and fairness.

↑ comment by the gears to ascension (lahwran) · 2023-04-06T17:35:16.380Z · LW(p) · GW(p)

Some specific tags on these topics:

- https://www.lesswrong.com/tag/instrumental-convergence [? · GW]

- https://www.lesswrong.com/tag/utility-functions [? · GW]

- https://www.lesswrong.com/tag/orthogonality-thesis [? · GW]

- https://www.lesswrong.com/tag/adversarial-examples [? · GW]

It's not at all obvious to me that Shapiro's heuristics are bad, but I feel comfortable asserting that they're thoroughly insufficient. They're a reasonable starting point for present-day AI, I think, and seem like good candidates for inclusion in a constitutional AI. but adversarial examples - holes in the behavior manifold - make it unclear whether even an entirely correct english description of human values would currently produce acceptable AI behavior in all edge cases.

comment by Diziet · 2023-05-18T00:59:19.652Z · LW(p) · GW(p)

I looked over a bit of David's public facing work, eg: https://www.youtube.com/watch?v=I7hJggz41oU

I think there is a fundamental difference between robust, security minded alignment and tweaking smaller language models to produce output that "looks" correct. It seems David is very optimistic about how easy these problems are to solve.