Biological Holism: A New Paradigm?

post by Waddington · 2021-05-09T22:42:31.231Z

Biologist Michael Levin was recently featured in an article by The New Yorker with a captivating headline: Is Bioelectricity the Key to Limb Regeneration?

The thought struck me that Levin's work is a great example of what you might call a novel paradigm in biology, one that people here might not be entirely familiar with. Now is as good a time as any to get acquainted with it. Let's dive straight in.

You've probably heard complaints about 'reductionism' and 'mechanistic' views of life. These complaints are usually followed by a slogan: the whole is more than the sum of its parts. Other words that tend to crop up: emergence, self-organization, complexity, and so on. A recurring theme is that we are missing out on something important when we break a living thing down into parts. This perspective on life, which we can call holism, might strike you as wishy-washy New Age nonsense. I want to capture your attention with a surprising notion: it emerged from the Manhattan Project.

Los Alamos National Laboratory became a site of cross-fertilization during WWII where great minds exchanged ideas and discussed the grandest challenge faced by science at large: predicting (and controlling) the behavior of nonlinear systems. It was an important challenge, as that included most things. As Manhattan Project participant Stanislaw Ulam quipped:

Using a term like nonlinear science is like referring to the bulk of zoology as the study of non-elephant animals.

Of these great minds, John von Neumann was probably the greatest. One of his contributions to the challenge, game theory, deals with the dynamics of interacting agents who make rational decisions. The assumption of rationality can be very useful, even if it doesn't literally apply in real-life circumstances. This is because it converts the impossible task of predicting the nonlinear dynamics of interpersonal interactions into an optimization problem.

But even more important in our biological context is the cellular automaton, which von Neumann developed together with Ulam. Chiara Marletto, working with David Deutsch within the framework of constructor theory, has shown that you need the logic of cellular automata in order to get the appearance of design without actual design[1]. In other words: von Neumann and Ulam explained why life is something that can just happen. You don't need a divine entity. You don't need fine-tuned initial conditions. All you need is a self-perpetuating process.
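To make "cellular automaton" concrete, here is a minimal sketch of a one-dimensional, two-state automaton. It is not von Neumann's self-reproducing automaton, which is far larger; the rule number, grid width, and function names are assumptions chosen only for illustration. Each cell looks at itself and its two neighbors and looks up its next state in an 8-entry table encoded by the rule number.

```python
def step(cells, rule=110):
    """Apply one update of an elementary cellular automaton (wrap-around edges)."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        idx = (left << 2) | (centre << 1) | right  # neighbourhood as a 3-bit number
        nxt.append((rule >> idx) & 1)              # matching bit of the rule number
    return nxt


def run(width=31, steps=15, rule=110):
    """Start from a single live cell and collect every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Running it prints one row per generation. The point of the sketch is only that a purely local update rule, applied over and over, is enough to produce large-scale structure.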

Cybernetics

Their discussions continued after the war in the form of the interdisciplinary Macy Conferences, and particularly those on the topic of cybernetics. Norbert Wiener, its chief originator, described it in the following terms[2]:

Cybernetics is a word invented to define a new field in science. It combines under one heading the study of what in a human context is sometimes loosely described as thinking and in engineering is known as control and communication. In other words, cybernetics attempts to find the common elements in the functioning of automatic machines and of the human nervous system, and to develop a theory which will cover the entire field of control and communication in machines and in living organisms.

Wiener wrote a book that became a surprise bestseller: Cybernetics: Or Control and Communication in the Animal and the Machine. One of the central ideas of cybernetics is that feedback regulation is crucial for control. A simple thermostat relies on negative feedback: it corrects deviations from a set-point value. But what if it also had to determine the optimal set-point value? That's a much more interesting problem. In order for the question to make sense, you have to assume some sort of goal. And that turns out to be a key assumption, as we'll see later.

Crucial to this way of thinking was that you had to think of an organism as an integrated whole continually correcting errors on its way toward some target state. As Wiener pointed out, simply correcting the error between actual and desired target states is a slow (homeostatic) process. A better strategy is to predict errors and correct for them before they have the chance to mess up your plans.
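A toy version of the thermostat can show one way in which anticipation helps. In this sketch (the room model, the constants, and the `anticipate` flag are all assumptions made for illustration, not anything from Wiener), a purely reactive controller pushes against the observed error, while the anticipating controller also adds the heat it expects to lose at the set point.

```python
def simulate(setpoint=20.0, outside=5.0, gain=0.5, leak=0.1,
             steps=200, anticipate=False):
    """Toy room-temperature model; returns the temperature after `steps` updates."""
    temp = outside
    for _ in range(steps):
        error = setpoint - temp
        heating = gain * error  # negative feedback: push against the error
        if anticipate:
            # pre-empt the heat loss expected once the room sits at the set point
            heating += leak * (setpoint - outside)
        temp += heating - leak * (temp - outside)
    return temp


if __name__ == "__main__":
    print("reactive only:", round(simulate(), 3))
    print("with anticipation:", round(simulate(anticipate=True), 3))
```

Under these assumptions the reactive controller settles a few degrees short of the set point, because it only acts once an error already exists. The anticipating one reaches the set point because it corrects for the disturbance before it shows up as an error.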

Fellow conference attendee Donald MacKay proposed an information-flow model of human behavior[3] where probabilistic inference was used to match incoming sensory signals with perceptual hypotheses, organized in a hierarchical fashion. He also wrote on the problem of meaningful information[4], to be contrasted with the objective measure worked out by Claude Shannon (and Norbert Wiener) earlier. A message is subjectively meaningful to a receiver if they believe it enhances their ability to achieve some sort of goal. Note the holism implied by this characterization: the meaning of a message depends on the perceived context. This means that two observers can (and often will) interpret the same message in different ways.

If you're lazy, you can take this to mean that all knowledge is inherently subjective and relative and that there's no such thing as "truth" (aka postmodernism). The reason why this lazy interpretation is flawed is remarkably simple: interpretative frames that result in more useful predictions are objectively better than others. That was the lesson Thomas Kuhn tried to impart in The Structure of Scientific Revolutions[5]. As I assume you all know, this is also the reason why Bayesian inference is useful: like hermit crabs, we abandon prior models when we come across models with higher relative fitness.

In an unexpected twist, anthropologist power-couple Margaret Mead and Gregory Bateson loved cybernetics and spread it to a wider audience in the soft sciences. Mead in particular was heavily involved in developing what came to be known as second-order cybernetics, which is the recursive application of cybernetics to itself. Researchers studying cybernetics are also cybernetic systems and as such part of their own study. Biologists Humberto Maturana and Francisco Varela were fascinated by this idea and would later develop the highly-influential idea of autopoiesis[6]. A system like a biological cell is meta-cybernetic in that it continually and recursively recreates itself.

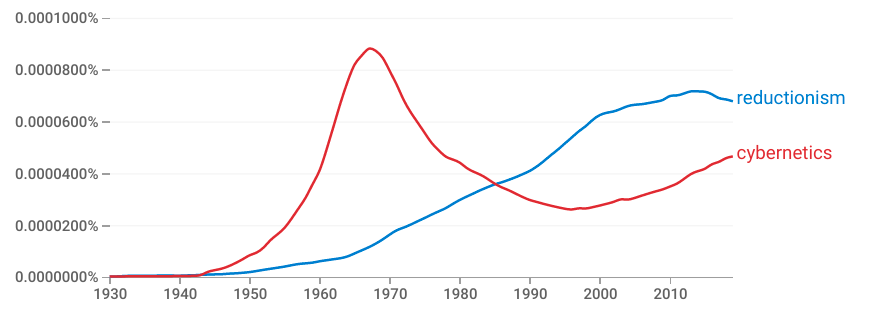

Over time, people sort of lost interest in cybernetics. Its insights became scattered across different disciplines, but have recently found a new audience in the form of dynamical systems theory. For a pessimistic take on the development of cybernetics, see Jean-Pierre Dupuy's On the Origins of Cognitive Science[7].

Systems science

These conferences also marked the foundation of what is now generally known as systems science. That includes, of course, systems biology. A book review of a systems biology textbook was posted here in 2019.

A key insight, generally credited to Ludwig von Bertalanffy, is that open systems are different than closed systems. In a closed thermodynamic system, you sooner or later end up with a rather bland equilibrium as entropy reaches its maximum level. In open systems, however, interesting structures tend to emerge. What you get is a non-equilibrium steady state, formalized by chemist Ilya Prigogine as a dissipative structure. In more recent times, physicist Jeremy England has developed this notion into a process account of evolution as dissipation-driven adaptation. Most recently, his group has come up with the concept of "low rattling" as a predictive principle of self-organization[8].

Imagine a stochastic particle whose behavior is to some extent correlated with the behavior of its neighbors. As a collective, the particles are disturbed by an input of energy. They are thus constrained both by their interactions and their input. The interesting consequence of this dual constraint is that the process of relaxation (tendency toward equilibrium) becomes analogous to optimization. To an outside observer, it will seem as if the collective is working together to find a stable configuration.

This means you can use the same strategy as von Neumann used in game theory: you can frame it all as optimization and use this informational leverage to direct the system in a top-down manner.

Another key insight is that biological systems are robust. Japanese systems biologist Hiroaki Kitano has proposed that biological robustness can serve as an overarching principle of organization[9]. Remember Wiener's problem of finding an optimal set-point value? That's a problem of robustness. The environment to which a system is adapted tends to change over time, meaning that there's no such thing as a perfect set-point.

Again, homeostasis can be construed as a negative feedback loop. But homeostasis is just a process of maintenance. And you don't want to keep maintaining a solution that will inevitably fail at some unknown time. This means that you also need growth.

The balance of maintenance and growth is a balance between negative and positive feedback. You need negative feedback to prevent a system from falling apart. You need positive feedback for the system to find potentially-better set-point values.

In the language of dynamical systems theory, we want to find stable attractor states. And we need occasional perturbations in the form of positive feedback in order to make "updates". The concept of allostasis (stability through change) was conceived of in stress physiology to reflect this importance of positive feedback in adaptation[10]. Remember how Wiener anticipated the need for anticipation (of errors)? That's the beauty of it all: if we amplify errors that exceed a certain threshold, that's all the positive feedback we need. And that's exactly the error used to make Bayesian updates.

I mentioned Thomas Kuhn earlier. In the preface to his essay collection The Essential Tension[11], he describes how he stumbled upon the hermeneutic method without knowing what it was. The hermeneutic method is a recursive process of interpretation developed by monks as a way to improve interpretations of the Bible. You start off with an interpretation and analyze the text accordingly. When you come across inconsistencies, you update your interpretation such that these are accounted for. This recursive scheme is, in fact, a precursor to Bayesian inference.

Gregory Bateson famously defined information as "the difference that makes a difference."[12] With hindsight, we can now refer to it by its proper name: Kullback-Leibler (KL) divergence, or relative entropy. KL divergence is a measure of what you might call Bayesian surprise[13] [14]. This is also the sort of information Donald MacKay was talking about earlier: meaningful information.
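As a small sketch of Bayesian surprise, the snippet below updates a prior over two hypotheses and measures the KL divergence from prior to posterior in bits. The two-hypothesis setup, the numbers, and the function names are assumptions for illustration only.

```python
import math


def bayes_update(prior, likelihood):
    """Posterior over hypotheses given the likelihood of one observed message."""
    unnorm = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def kl_divergence(p, q):
    """KL(p || q) in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


prior = [0.5, 0.5]
uninformative = [0.3, 0.3]  # message equally likely under both hypotheses
informative = [0.9, 0.1]    # message strongly favours the first hypothesis

for name, lik in [("uninformative", uninformative), ("informative", informative)]:
    posterior = bayes_update(prior, lik)
    print(name, round(kl_divergence(posterior, prior), 3), "bits")
```

A message that is equally likely under every hypothesis leaves the beliefs where they were, so its surprise is zero: a difference that makes no difference. The informative message shifts the beliefs and registers as a positive number of bits.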

You don't have to make grand assumptions about cognition for such a process to take place. A nice example of how positive feedback can help a system "find" a stable configuration is the phenomenon of supercooling. Take a bottle of pure water and put it in a freezer. You might find that the water has cooled below its freezing temperature even though it's still liquid. It's like it's forgotten to transition from one phase to another. However, if you introduce a disturbance (like shaking the bottle), it quickly finds the "solution".

I want to reiterate: optimization is a lens through which you can view the behavior of nonlinear systems. There's no need to take it literally. Well, it's a matter of personal choice. If thinking about the world in these terms makes you feel better, there's no harm in it I suppose.

A big-picture account of the development of systems science in general is found in Fritjof Capra and Pier Luigi Luisi's The Systems View of Life: A Unifying Vision[15]. Further reflections on biological holism can be found in systems biologist Denis Noble's Dance to the Tune of Life: Biological Relativity[16]. For a more "meaty" introduction, see Experiences in the Biocontinuum: A New Foundation for Living Systems by Richard L. Summers[17].

It should also be mentioned that mathematical biologist Robert Rosen worked on setting up an entire field based on these ideas—relational biology—centered on the view of living things as anticipatory systems[18]. Unfortunately, he was way ahead of his time. He wanted to describe complex systems in terms of category theory, an idea that's still remarkably novel. His 1985 book on anticipatory systems got a second edition in 2012 and researchers are gradually coming to terms with the idea that Rosen might have gotten it right a long time ago.

Cognitive science

The interdisciplinary field of cognitive science also arose from the Macy Conferences on cybernetics. I can't help but share a segment from a 1993 paper by neuropsychologist Roger Sperry where he wonders where this holistic idea came from[19]:

Whatever caused this turnabout, it came with a startling suddenness, described in the early 1970s by Pylyshyn as having "recently exploded" into fashion. It was as if the floodgates holding back the many pressures of consciousness and subjectivity were suddenly opened. What caused this abrupt turnabout has continued to puzzle many leaders in the field.

Based on his choice of language (and timing) here, I'm guessing he's implying it came from psychedelics. Little did he know it actually came from the Manhattan Project.

The narrative on the rise of cognitive psychology almost always frames it as an ideological victory over behaviorism, which was seen as a 'mechanistic' and 'reductionist' abomination. Later, there would be a debate between so-called cognitivists and ecological psychologists, the latter group led by James J. Gibson.

Gibson claimed that we had to emphasize the animal-environment system as a whole. He also claimed that representations didn't exist and that perception was direct. This confused a lot of people, including Ulric Neisser, who has been called the "father of cognitive psychology" and wrote its first official textbook. In what came as a shock to pretty much everyone, Neisser eventually switched sides.

Cognitive psychology described cognition in terms of information processing and the manipulation of representations. Perception was seen as a constructive process of model refinement, inspired by MacKay's earlier information-flow model. But Neisser began to have doubts:

What was the point of saying perception was constructive if it always constructed exactly the correct thing? That seemed far-fetched. Then, of course I was listening to Gibson and all these ideas were around and so after a while I began, you might say, waking up in the middle of the night in a cold sweat saying: He's right, what am I going to do now? Gibson is right!

Ulric Neisser in a 1997 interview[20]

Ironically, the solution to this conundrum had been there all along: cybernetics. When you refine a model based on input from the environment, it should be obvious that you're getting the information from somewhere. And Gibson should have realized that you can't have feedback regulation without a model.

Even more ironically, it turned out that behaviorism supplied the missing pieces of the puzzle. According to the behaviorists, feedback regulation (reinforcement and punishment) was all you needed. And it was a self-avowed behaviorist who pieced it together: Edward C. Tolman.

Tolman had been greatly influenced by Gestalt psychology. This was a holistic school of thought emphasizing that parts must always be considered in relation to the whole. It wouldn't be too inaccurate to describe it as relational psychology. Behavior, then, had to be seen as relative to explicit or implicit goals. Based on his experiments with rats, Tolman proposed the notion of cognitive maps[21]. Later, this construct would be applied to a specific neural structure: the hippocampus[22].

Recent work has led to the idea that the function of the hippocampus is actually to perceive context[23]. If accurate, this means that the hippocampus is the basis for holistic thought. The hippocampus contextualizes perceptual input and as such provides the frame of interpretation we use to make sense of the world around us.

In our view, the recurrent circuits of the hippocampus are capable of self-organization, such that they do not require a priori learning to form a spatiotemporal pattern. Input to such networks triggers nonlinear interplay between the neurons of the network, resulting in emergent order and structured activity. This emergent pattern is not only robust, that is, recoverable in the face of mild perturbations, but also adaptable. If the pattern is changed slightly, some number of neurons cease to fire while others begin. Thus, organisms can detect both similarity and difference, generating a physiological response that maintains and adapts a pattern of activity in accordance with changes in input. This is best thought of as pattern formation, rather than pattern completion or separation.

(...)

This formulation is similar to the notion of hierarchical prediction error (PE) signaling, at the core of current ‘free energy’ approaches to brain function. The hippocampus receives dopamine-mediated PE signals from locus coeruleus, and is affected as much by novelty as by reward PE.

Maurer, A. P., & Nadel, L. (2021). The continuity of context: A role for the hippocampus. Trends in Cognitive Sciences.

Keep in mind the mutual constraints implied here: input to the hippocampal circuits constrains its activity, which in turn constrains perception. As we have seen, conflicting constraints result in behavior that can be construed as optimization.

The authors of the aforementioned paper refer to a topic which has been discussed at length on this site: predictive processing. Let's discuss it some more.

Karl Friston's free energy principle[24] is yet another version of biological holism resulting from the work of Manhattan Project scientists. In this case, it's Richard Feynman and his path-integral or sum-over-many-paths formulation of quantum mechanics.

You can imagine that a particle, such as a photon, explores every possible trajectory from A to B. All trajectories except one cancel out via constructive and destructive interference (owing to its wave-like nature). And this is the actual trajectory of the photon. That is, you can (yet again) convert an impossible problem to an optimization problem by pretending the photon is an agent making a decision.

If you want to experience some existential dread (or mild amusement) you might very well ask yourself a pertinent question: are you an agent conjured up by your hippocampus in order to cope with pesky nonlinearities? Are your consciousness, your happiness, and your sorrows the result of evolution blindly stumbling upon the answer to the questions discussed by those scientists at Los Alamos all these years ago?

Probably.

Feynman's approach is an example of a variational principle. Pierre de Fermat famously applied one to describe the path of light: it follows the path of least time. His contemporaries were shocked, thinking he had committed the crime of teleology (explaining phenomena according to their purpose or ends). Many people were puzzled by Feynman's principle as well. In his textbook[25], he teases the reader:

How does the particle find the right path? From the differential point of view, it is easy to understand. Every moment it gets an acceleration and knows only what to do at that instant. But all your instincts on cause and effect go haywire when you say that the particle decides to take the path that is going to give the minimum action. Does it ‘smell’ the neighboring paths to find out whether or not they have more action?

(...)

The miracle of it all is, of course, that it does just that. That’s what the laws of quantum mechanics say. So our principle of least action is incompletely stated. It isn’t that a particle takes the path of least action but that it smells all the paths in the neighborhood and chooses the one that has the least action by a method analogous to the one by which light chose the shortest time.
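Fermat's least-time principle, mentioned above, is easy to check numerically. In this sketch, light travels from a point above an interface to a point below it, moving at a different speed on each side. We search for the crossing point that minimizes total travel time and then check that it satisfies Snell's law, which is what the principle is known to imply. The geometry, the speeds, and the function names are assumptions chosen for illustration.

```python
import math


def travel_time(x, v1=1.0, v2=0.75):
    """Time from A=(0, 1) to B=(1, -1), crossing the interface y=0 at (x, 0)."""
    return math.hypot(x, 1.0) / v1 + math.hypot(1.0 - x, 1.0) / v2


def fastest_crossing(v1=1.0, v2=0.75, iterations=100):
    """Ternary search for the crossing point with the smallest travel time."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if travel_time(m1, v1, v2) < travel_time(m2, v1, v2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2


def snell_residual(x, v1=1.0, v2=0.75):
    """sin(theta1)/v1 - sin(theta2)/v2, which Snell's law says should vanish."""
    sin1 = x / math.hypot(x, 1.0)
    sin2 = (1.0 - x) / math.hypot(1.0 - x, 1.0)
    return sin1 / v1 - sin2 / v2


if __name__ == "__main__":
    x = fastest_crossing()
    print("crossing point:", x, "Snell residual:", snell_residual(x))
```

Nothing in the code tells the light which way to bend. The bend falls out of asking which of all candidate paths is quickest, and that is the sense in which a variational principle turns a dynamics problem into an optimization problem.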

Friston's free energy principle suggests we should borrow Feynman's tool to understand brain function. We can frame every aspect of behavior and cognition as the optimization of a singular objective function: minimal information-theoretic free energy. This corresponds to the difference between model predictions and observations. Keep in mind that this doesn't mean that you gravitate toward the most accurate model; overly complex models result in more errors. Instead, you gravitate toward the most efficient model. You have to make a compromise between accuracy and complexity.
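The compromise between accuracy and complexity can be written out for a tiny discrete case. The sketch below uses the standard decomposition of variational free energy as complexity (how far the beliefs move from the prior) minus accuracy (how well the beliefs explain the observation). The two-state world, the numbers, and the names are assumptions for illustration, not Friston's own formulation.

```python
import math


def free_energy(q, prior, likelihood):
    """Variational free energy of beliefs q for a single observation (in nats)."""
    complexity = sum(qi * math.log(qi / pi) for qi, pi in zip(q, prior) if qi > 0)
    accuracy = sum(qi * math.log(li) for qi, li in zip(q, likelihood) if qi > 0)
    return complexity - accuracy


prior = [0.5, 0.5]
likelihood = [0.8, 0.2]  # p(observation | state) for the observation actually made
evidence = sum(p * l for p, l in zip(prior, likelihood))
posterior = [p * l / evidence for p, l in zip(prior, likelihood)]

candidates = {
    "stick with the prior": prior,
    "exact posterior": posterior,
    "overconfident": [0.99, 0.01],
}
for name, q in candidates.items():
    print(name, round(free_energy(q, prior, likelihood), 4))
print("surprise, -ln p(o):", round(-math.log(evidence), 4))
```

In this setup the exact Bayesian posterior has the lowest free energy, and its value equals the surprise of the observation. Beliefs that ignore the data and beliefs that overcommit to it both score worse, which is why minimizing this quantity amounts to doing inference.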

Which brings us back to Bayesian inference. The point is that you don't have to understand the subtleties of the free energy principle (FEP) itself: the FEP says you get everything you need by framing it all as a process of inference.

Friston arrived at the same conclusion as Robert Rosen and Norbert Wiener, following a very different route: we are anticipatory (predictive) systems. At least, that's a useful way of thinking about ourselves.

Complex Adaptive Systems

Those early discussions at Los Alamos also resulted in the foundation of the Santa Fe Institute, dedicated to the study of complex systems. Most of its founders worked at Los Alamos National Laboratory and it remains to this day a sort of replica of the wide-ranging interdisciplinary symbiosis formed through the Manhattan Project.

Their Center for Complexity & Collective Computation (C4), headed by Jessica Flack, has converged on a view they refer to as life's information hierarchy[26]:

Our work suggests that complexity and the multiscale structure of biological systems are the predictable outcome of evolutionary dynamics driven by uncertainty minimization. This recasting of the evolutionary process as an inferential one is based on the premise that organisms and other biological systems can be viewed as hypotheses about the present and future environments they or their offspring will encounter, induced from the history of past environmental states they or their ancestors have experienced.

The hierarchy they refer to consists of nested levels of components that extract regularities from components below them. Higher levels of organization, then, exert top-down control on lower levels. You might recognize this as predictive processing. Again, they arrived at this conclusion from a different angle than others before them.

It should be noted that the perspective taken by C4 and the Santa Fe Institute is extremely broad and covers a lot of ground; it's enough to make one dizzy.

By now, we've gotten a glimpse of the implications of biological holism. We have seen that holism can be used as a tool to deal with nonlinear systems. We have also seen we may be tools of our own making, an evolutionarily-crafted strategy to survive in and to make sense of a messy world. We've seen further that our very existence might have to be considered in the context of a self-perpetuating process that can be cast as inference.

If you're still not convinced that biological holism is a promising paradigm, consider this: Daniel Dennett is a recent convert.

The Intentional Stance, Extended

When philosopher Jerry Fodor read Terrence Deacon's Incomplete Nature: How Mind Emerged from Matter[27], he admitted to skipping most of it[28]. Daniel Dennett, in contrast, famously penned a rave review of it, which startled quite a few philosophers:

I myself have been trying in recent years to say quite a few of the things Deacon says more clearly here. So close and yet so far! I tried; he succeeded, a verdict I would apply to the other contenders in equal measure. Alicia Juarrero (1999) and Evan Thompson (2007) have both written excellent books on neighboring and overlapping topics, but neither of them managed to win me over to the Romantic side, whereas Deacon, with his more ambitious exercise of reconstruction, has me re-examining my fundamental working assumptions. I encourage others who see versions of their own pet ideas emerging more clearly and systematically in Deacon’s account to join me in applauding.

Romanticism is closely aligned with holism. They are both inherently subjective and thus at odds with objectivity and reductionist approaches to knowledge. However, there's a way to combine them into what you might call pragmatic Romanticism. This is the view that attributing causal power to various agents in the world is useful, even though it's not true.

Biologist J. B. S. Haldane (a dear friend of Norbert Wiener) once observed that, "Teleology is like a mistress to a biologist: he cannot live without her but he's unwilling to be seen with her in public."

Pragmatic Romanticists don't have teleological mistresses, but they pretend that they do.

Daniel Dennett and Michael Levin have even co-written an essay, which some might find interesting:

From this perspective, we can visualise the tiny cognitive contribution of a single cell to the cognitive projects and talents of a lone human scout exploring new territory, but also to the scout’s tribe, which provided much education and support, thanks to language, and eventually to a team of scientists and other thinkers who pool their knowhow to explore, thanks to new tools, the whole cosmos and even the abstract spaces of mathematics, poetry and music.

(...)

By distributing the intelligence over time – aeons of evolution, and years of learning and development, and milliseconds of computation – and space – not just smart brains and smart neurons but smart tissues and cells and proofreading enzymes and ribosomes – the mysteries of life can be unified in a single breathtaking vision.

The last part brings to mind Darwin's sentiment regarding his theory of evolution: there is grandeur in this view of life. It also fits well with Julia Galef's recent book, The Scout Mindset[29].

I should perhaps also mention here that theoretical physicist Sean Carroll has already proposed the philosophy I shamelessly dubbed pragmatic Romanticism. He calls it poetic naturalism, and presents it in his book The Big Picture[30].

The funny thing is that Daniel Dennett had already introduced a similar notion: the intentional stance[31].

Here is how it works: first you decide to treat the object whose behavior is to be predicted as a rational agent; then you figure out what beliefs that agent ought to have, given its place in the world and its purpose. Then you figure out what desires it ought to have, on the same considerations, and finally you predict that this rational agent will act to further its goals in the light of its beliefs. A little practical reasoning from the chosen set of beliefs and desires will in most instances yield a decision about what the agent ought to do; that is what you predict the agent will do.

Biological holism extends this logic to the entire realm of living things.

The Deacon Version

I'm not aware of any completely original ideas in Deacon's Incomplete Nature. He has been criticized for not citing earlier work, and it appears he simply wasn't aware that his core ideas had been described elsewhere in different terms. That doesn't mean his book doesn't add anything useful. As a systematic synthesis it does a great job. And it convinced Daniel Dennett, so Deacon must have done something right.

I don't want to spend too much time on this section, so I'll present a sort of caricature instead.

We start off with the second law of thermodynamics: the entropy of a closed system tends to increase. This is a statistical phenomenon. The equilibrium state of a thermodynamic system is its most probable state over time. We can call this process (non-equilibrium --> equilibrium) relaxation.

A type 1 (homeodynamic) system simply relaxes. You can imagine a cup of coffee gradually reaching room temperature.

Now, let's say that the output of a type 1 system is stress. Put your hand over the hot cup of coffee and you will get a vivid demonstration of this phenomenon. What happens when a type 1 system disturbs another type 1 system? Why, you get a type 2 system of course.

A type 2 (morphodynamic) system has to find a relaxation channel. Because it's constantly being disturbed by the type 1 system, it must find a way to counter its impact. A canonical example is Rayleigh-Bénard convection. The flow of energy through the system gives rise to interesting patterns.

Type 2 systems turn into type 1 systems immediately when you stop "feeding" them with stress. Now, imagine a system which feeds itself with stress. You have one type 2 system feeding another type 2 system in a cycle. It forms patterns of mutual constraints. Which gives you, of course, a type 3 system.

A type 3 (teleodynamic) system exhibits stress-relaxation cycles. Imported stress is used to maintain structural integrity. It's sort of like charging a battery. This provides the impetus for importing more stress. And so the cycle continues.

Now, let's think about this in terms of dynamical systems. An attractor state is simply a state to which a system will naturally gravitate. A repeller state is the opposite. It's easiest to think about this in terms of a landscape with hills (repellers) and valleys (attractors). Imagine a stochastic agent exploring this landscape. It's easy for the stochastic agent to end up in valleys: it simply falls in. It's difficult for it to climb hills: it falls down. Over time, what is the most likely position of the stochastic agent? The answer is the deepest valley.
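The landscape picture can be simulated directly. In this sketch a random walker moves left or right on a row of heights; downhill moves are always accepted, and uphill moves are accepted with a probability that shrinks with the size of the climb (a Metropolis-style rule, swapped in here as one convenient choice). The heights, the temperature, and the function name are assumptions for illustration.

```python
import math
import random


def explore(heights, steps=100_000, temperature=1.0, start=0, seed=0):
    """Count how often a noisy walker visits each position of the landscape."""
    rng = random.Random(seed)
    pos = start
    visits = [0] * len(heights)
    for _ in range(steps):
        new = pos + rng.choice((-1, 1))
        if 0 <= new < len(heights):  # proposals off the edge are rejected
            climb = heights[new] - heights[pos]
            if climb <= 0 or rng.random() < math.exp(-climb / temperature):
                pos = new
        visits[pos] += 1
    return visits


# shallow valley at index 2, deep valley at index 6, hills in between
heights = [5, 3, 2, 3, 5, 3, 0, 3, 5]
visits = explore(heights, start=2)  # begin in the shallow valley
print(visits)
```

Even though the walker starts in the shallow valley and has no idea where the deep one is, the visit counts end up concentrated at the lowest point. No goal appears anywhere in the code; the apparent preference for the deepest valley is a statistical summary of many small random steps.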

If you coarse-grain the behavior of the stochastic agent you end up with something you could call its goal. It obviously wants to spend most of its time in the deepest valley. So if you were to help it find it, you would be doing something useful. In return, the stochastic agent would be happy to perform work on your behalf.

An organ thus provides meaning and context to the activity of its constituent cells. An organism does the same for its organs. A social group does it for organisms, and so on and so forth. It's all very holistic. Suddenly we have minds and cognition and tribes of agents working together with various functions and roles in the service of the greater whole.

Remember: this way of looking at things is simply a matter of pragmatism.

Life is really, as Hungarian biochemist Albert Szent-Györgyi once said, "nothing but an electron looking for a place to rest."

The late Harold Morowitz and Eric Smith provide a staggering synthesis of this view in The Origin and Nature of Life on Earth[32]. They argue that life emerged gradually from geochemistry as a way to relieve planetary free energy stresses.

From an earlier joint paper of theirs[33]:

Life is universally understood to require a source of free energy and mechanisms with which to harness it. Remarkably, the converse may also be true: the continuous generation of sources of free energy by abiotic processes may have forced life into existence as a means to alleviate the buildup of free energy stresses. This assertion – for which there is precedent in nonequilibrium statistical mechanics and growing empirical evidence from chemistry – would imply that life had to emerge on the earth, that at least the early steps would occur in the same way on any similar planet, and that we should be able to predict many of these steps from first principles of chemistry and physics together with an accurate understanding of geochemical conditions on the early earth. A deterministic emergence of life would reflect an essential continuity between physics, chemistry, and biology. It would show that a part of the order we recognize as living is thermodynamic order inherent in the geosphere, and that some aspects of Darwinian selection are expressions of the likely simpler statistical mechanics of physical and chemical self-organization.

This holistic understanding of biological systems may be our best bet at tackling big and difficult problems concerning all of us. I want to close things off with a line by Winston Churchill:

"I cannot forecast to you the action of Russia. It is a riddle, wrapped in a mystery, inside an enigma; but perhaps there is a key. That key is Russian national interest."

As you can see, we have more or less returned to game theory and von Neumann, where we began. We can use the tool of holism to wrap our minds around something as big and complicated as a nation. What Dennett, Levin and others are telling us is that the same is true in biology: Churchill's key allows us to see further than we have before. And given that biology is filled with riddles, wrapped in mysteries, inside enigmas, we have no excuse not to entertain the holistic view and see how far it can take us.

Further reading:

Gödel, Escher, Bach: An Eternal Golden Braid (1979) by Douglas Hofstadter

A classic work in cognitive science. Investigates the idea of recursion and its relationship to cognition. Goes fairly deep and has fun getting there.

Chaos: Making a New Science (1987) by James Gleick

Tells the story of early adventures into the realm of nonlinear systems. Made chaos fun for the general public and also a bit embarrassing to scientists.

Out of Control: The New Biology of Machines, Systems, and the Economic World (1994) by Kevin Kelly

Inspired The Matrix. Deals with the greater implications of what investigations into nonlinear systems have told us.

Sync: The Emerging Science of Spontaneous Order (2003) by Steven Strogatz

Oscillations. Synchronization. Fun! A spiritual successor to Gleick's book.

Complexity: A Guided Tour (2011) by Melanie Mitchell

Mitchell became a student of Hofstadter after reading his book. Now she's an expert in her own right.

Surfing Uncertainty: Prediction, Action, and the Embodied Mind (2016) by Andy Clark

Clark brought predictive processing to a wider audience and is almost single-handedly responsible for its current popularity. This book is a nice introduction to the topic.

[1] Marletto, C. (2015). Constructor Theory of Life. Journal of the Royal Society Interface, 12(104), 20141226. https://doi.org/10.1098/rsif.2014.1226

[2] Wiener, N. (1948). Cybernetics or Control and Communication in the Animal and the Machine.

[3] MacKay, D. M. (1956). Towards an Information-Flow Model of Human Behaviour. British Journal of Psychology, 47(1), 30-43.

[4] MacKay, D. M. (1969). Information, Mechanism and Meaning.

[5] Kuhn, T. S. (1962). The Structure of Scientific Revolutions.

[6] Maturana, H. R., & Varela, F. J. (1980). Autopoiesis and Cognition: The Realization of the Living.

[7] Dupuy, J-P. (2009). On the Origins of Cognitive Science: The Mechanization of the Mind.

[8] Chvykov, P., Berrueta, T. A., Vardhan, A., Savoie, W., Samland, A., Murphey, T. D., ... & England, J. L. (2021). Low Rattling: A Predictive Principle for Self-Organization in Active Collectives. Science, 371(6524), 90-95.

[9] Kitano, H. (2004). Biological Robustness. Nature Reviews Genetics, 5(11), 826-837.

[10] McEwen, B. S., & Wingfield, J. C. (2003). The Concept of Allostasis in Biology and Biomedicine. Hormones and Behavior, 43(1), 2-15.

[11] Kuhn, T. S. (1977). The Essential Tension.

[12] Bateson, G. (1970). Form, Substance, and Difference. Essential Readings in Biosemiotics, 501.

[13] DeDeo, S. (2016). Information Theory for Intelligent People.

[14] DeDeo, S. (2016). Bayesian Reasoning for Intelligent People.

[15] Capra, F., & Luisi, P. L. (2014). The Systems View of Life: A Unifying Vision.

[16] Noble, D. (2016). Dance to the Tune of Life: Biological Relativity.

[17] Summers, R. L. (2020). Experiences in the Biocontinuum: A New Foundation for Living Systems.

[18] Rosen, R. (2012). Anticipatory Systems.

[19] Sperry, R. W. (1993). The Impact and Promise of the Cognitive Revolution. American Psychologist, 48(8), 878.

[20] Szokolszky, A. (2013). Interview with Ulric Neisser. Ecological Psychology, 25(2), 182-199.

[21] Tolman, E. C. (1948). Cognitive Maps in Rats and Men. Psychological Review, 55(4), 189.

[22] O'Keefe, J., & Nadel, L. (1978). The Hippocampus as a Cognitive Map.

[23] Maurer, A. P., & Nadel, L. (2021). The Continuity of Context: A Role for the Hippocampus. Trends in Cognitive Sciences.

[24] Friston, K. (2010). The Free-Energy Principle: A Unified Brain Theory? Nature Reviews Neuroscience, 11(2), 127-138.

[25] The Feynman Lectures on Physics. Ch. 19: The Principle of Least Action.

[26] Flack, J. (2017). Life’s Information Hierarchy. From Matter to Life: Information and Causality, 283.

[27] Deacon, T. W. (2011). Incomplete Nature: How Mind Emerged from Matter. WW Norton & Company.

[28] Fodor, J. (2012). What are Trees About. London Review of Books, 34(10), 31.

[29] Galef, J. (2021). The Scout Mindset: Why Some People See Things Clearly and Others Don't.

[30] Carroll, S. (2017). The Big Picture: On the Origins of Life, Meaning, and the Universe Itself.

[31] Dennett, D. C. (1989). The Intentional Stance.

[32] Smith, E., & Morowitz, H. J. (2016). The Origin and Nature of Life on Earth: The Emergence of the Fourth Geosphere. Cambridge University Press.

[33] Morowitz, H., & Smith, E. (2007). Energy Flow and the Organization of Life. Complexity, 13(1), 51-59.

9 comments

comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2021-05-10T03:49:14.066Z

My first problem with holism, or higher order cybernetics generally, is that while it's an interesting and sometimes illuminating perspective, it doesn't give me useful tools. It's not even really a paradigm, in that it provides no standard methods (or objects) of enquiry, doesn't help much with interpretation, etc.

The "AI effect" (as soon as it works, no one calls it AI any more) has a similar application in cybernetics: the ideas are almost omnipresent in modern sciences and engineering, but we call them "control engineering" or "computer science" or "systems theory" etc. Don't get me wrong: this was basically my whole degree ("interdisciplinary studies"), I've spent the last few years at ANU's School of Cybernetics, and I love it, but it's more of a worldview than a discipline. I frame problems with this lens, and then solve them with causal statistics or systems theory or HCI or ...

My second problem is that it leaves people prone to thinking that they have a grand theory of everything, but without the expertise to notice the ways in which they're wrong - or humility to seek them out. Worse, these details are often actually really important to get right. For example:

I want to reiterate: optimization is a lens through which you can view the behavior of nonlinear systems. There's no need to take it literally. Well, it's a matter of personal choice. If thinking about the world in these terms makes you feel better, there's no harm in it I suppose.

I strongly disagree: viewing most systems as optimizers will mislead you, both epistemically and affectively. We have a whole tag for "Individual organisms are best thought of as adaptation-executers rather than as fitness-maximizers"! The difference really matters!

reply by Waddington · 2021-05-10T05:22:27.134Z

You're right that it probably makes more sense to think of it as a perspective rather than a paradigm. Yet, I imagine that some useful fundamental assumptions may be made in the near future that could change that. If nothing else, a shared language to facilitate the transfer of relevant information across disciplines would be nice. And category theory seems like an interesting candidate in that regard.

I disagree with what you said about optimization, though. Phylogenetic and ontogenetic adaptations both result from a process that can be thought of as optimization. Sudden environmental changes don't propagate back to the past and render this process void; they serve as novel constraints on adaptation.

If I optimize a model on dataset X, its failure to demonstrate optimal behavior on dataset Y doesn't mean the model was never optimized. And it doesn't imply that the model is no longer being optimized.

To use the example mentioned by Yudkowsky in his post on the topic: ice cream serves as a superstimulus because our preferences resulted from a process that can be considered optimization. On an evolutionary timeline, we are but a blip. As in the case of dataset Y, the observation that preferences resulting from an evolutionary process can prove maladaptive when constraints change doesn't mean there's no optimization at work.

My larger argument (which I now see I failed to communicate properly) was that the appearance of optimization ultimately derives from chance and necessity. A photon doesn't literally perform optimization. But it's useful to us to pretend as if it does. Likewise, an individual organism doesn't literally perform optimization either. But it's useful to pretend as if it does if we want to predict its behavior. Let's say that I offered you $1,000 for waving your hand at me. By assuming that you are an individual that would want money, I could make the prediction that you would do so. This is, of course, a trivial assumption. But it rests on the assumption that you are an agent with goals. This comes naturally to us. And that's precisely my point. That's Dennett's intentional stance.

I'm not sure I'm getting my point across exactly, but I want to emphasize that I see no apparent conflict between the content in my post above and the idea of treating individual organisms as adaptation executers (except if the implication is that phylogenetic learning is qualitatively different from ontogenetic learning).

reply by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2021-05-10T07:00:40.540Z

I think that we agree that it can be useful to model many processes as optimization.

My point is that it's dangerous to lose the distinction between "currently useful abstraction" and "it actually is optimization". Much like locally-optimal vs. globally-optimal, it's a subtle confusion, but it can land you in deep confusion and on an unsound basis for intervention. Systems people seem particularly prone to this kind of error, maybe because of the tendency to focus on dynamics rather than details.

reply by Waddington · 2021-05-10T08:14:37.059Z

That's a perfectly reasonable concern. Details keep you tethered to reality. If a model disagrees with experiment, it's wrong.

Personally, I see much promise in this perspective. I believe we'll see many interesting medical interventions in the coming decades inspired by this view in general.

comment by [deleted] · 2021-05-10T04:15:30.994Z

I personally know two of the scientists spoken of in this post, and agree that greedy reductionism has a tendency to overshadow very interesting phenomena.

comment by Mitchell_Porter · 2021-05-10T10:53:47.025Z

The Santa Fe Institute was founded in 1984, the first Macy conferences were in the 1940s, Smuts wrote Holism and Evolution in 1926, Aristotle had three types of soul... what's new about this?

reply by Waddington · 2021-05-10T11:37:17.348Z

I didn't anticipate that anyone might think I meant that holism itself was a novel idea, biological or otherwise. To clarify: it's not. It has a long history. But most modern versions can be traced back to the Manhattan Project, which some would consider surprising. Recently, the same basic version of biological holism has popped up in several different fields. This convergence, or consilience if you will, is interesting if only because consilience in science is interesting in general.

The historical background was provided as context for those who might need it. I've heard people say here before that holism is something of a collective blindspot. So why not bring it up for discussion?

Daniel Dennett and Michael Levin have been promoting this view recently. Friston's free energy principle is also a recent development. Flack et al.'s notion of the information hierarchy is also new. So is Morowitz and Smith's work on energy flow. And the England group's work on dissipation-driven organization. Big-picture syntheses that fit conveniently into this framework, such as The Systems View of Life, Dance to the Tune of Life, Experiences in the Biocontinuum, and Incomplete Nature, have all made recent appearances. Some might find it interesting to consider all of this in terms of the difficulties in making sense of nonlinear systems, which is why I framed it as such. It's an old problem with novel developments.

reply by [deleted] · 2021-05-12T04:51:15.115Z

FWIW I find the book by Smith and Morowitz to be staggeringly illuminating.

reply by Waddington · 2021-05-12T13:19:26.379Z

I absolutely agree. It's a new way of looking at life.