Mapping downside risks and information hazards

post by MichaelA, JustinShovelain, David_Kristoffersson · 2020-02-20T14:46:30.259Z

This post was written for Convergence Analysis.

Many altruistic actions have downside risks; they might turn out to have negative effects, or to even be negative overall. Perhaps, for example, the biosecurity research you might do could pose information hazards, the article you might write could pose memetic downside risks, or that project you might start could divert resources (such as money or attention) from more valuable things.

This means that one way to be more effective in one's altruism is to prevent or mitigate downside risks and information hazards. This post is intended to aid with that goal by clarifying the concepts of downside risks and information hazards, and how they relate to each other and to other “types” of effects (i.e., impacts). This will be done through definitions, visuals, and examples.

I believe that the ideas covered in this post are fairly well-known, and should seem fairly intuitive even for those who haven’t come across them before. But I still think there’s value in showing visually how the concepts covered relate to each other (i.e., “mapping” them onto the space of possible types of effects), as this post does.

Positive vs negative

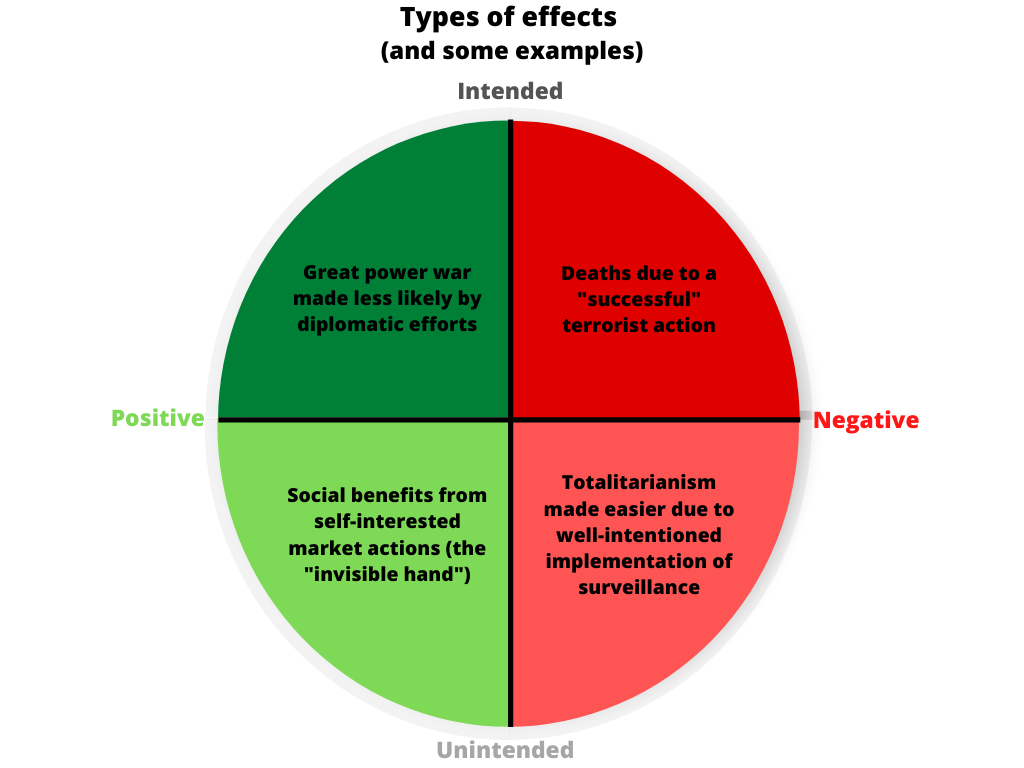

First I’ll present and explain the space in which I’ll later map downside risks and information hazards. This space is shown in the following figure (along with a handful of examples to illustrate the ideas[1]):

This space divides all possible effects into four types: positive and intended (top left), negative and intended (top right), negative and unintended (bottom right), and positive and unintended (bottom left).

In this model, an effect is judged as normatively or morally “positive” or “negative” from the perspective of the person using this model in analysing, observing, or considering one or more effects. We could call this person the “analyst”. This analyst may also be the “actor”; the person who is performing, or might perform, the actions that will lead to the effects in question. But the analyst will not necessarily be the actor.

For example, if I am using this model in deciding whether I should take up a diplomatic career, then I am both the analyst and the actor. From my perspective, making great power wars less likely is a positive effect, and so I will be more likely to take up a diplomatic career if I think it will lead to that effect.

However, if I am using this model in thinking about the potential effects of terrorist actions, I am the analyst but not the actor. From my perspective, deaths from such actions are negative effects. This is the case even if the terrorists (the actors) would view those deaths as positive effects.

In contrast, I mean “intended” and “unintended” to be from the perspective of the actor. This is why I call deaths from a terrorist action an intended effect, despite the fact that I (the analyst) would not intend it. I have in mind a relatively loose and “commonsense” definition of intend, along the lines of “to have as a plan or purpose” (Cambridge).

All effects that are unanticipated (by the actor) are also unintended (by the actor). This is because, if an actor doesn't anticipate that an action will cause a particular outcome, it can't have been the case that the actor chose to take that action in order to cause that outcome.

Arguably, some effects that were anticipated will also have been unintended. For example, an actor might realise that rolling out a mass surveillance system could result in totalitarianism, and believe that that outcome would be bad. But the actor might decide to take this action anyway, as they judge the action to be net beneficial in expectation (e.g., to help reduce certain existential risks). In this case, the effect was anticipated but not intended; the reason the actor took the action was not to bring about that outcome.[2]

But this can get quite debatable, especially in cases where the actor knew with certainty that the “unintended” effect would occur, or where the “unintended” effect was actually necessary in order for the “intended” effect to occur. We will ignore such complexities and edge cases here.

Three last things to note about this representation of the “space” of possible effects:

- I’m using simple, categorical distinctions; I’m merely classifying things as positive or negative, and as intended or unintended. One could also use a more continuous representation, for example placing effects that are (on balance) very positive far to the left, and those that are just a little positive close to the vertical dividing line. But that’s not what I’m doing here.

- Many effects might actually be basically neutral (i.e., of negligible positive or negative impact, as best we can assess), because they are extremely small, or because they have both negative and positive aspects that seem to roughly cancel each other out. These effects can’t be represented in this space. (In a more continuous representation of this space, such effects could be represented as points on the vertical dividing line.[3])

- The area of each component of this “space” is not meant to signify anything about the relative commonness or likelihood of each type of effect. For example, I would suspect unintended negative effects are much more common than intended negative effects, but I have kept each quadrant the same size regardless.
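As a rough illustration, the classification described in this section can be sketched in Python. This is only a sketch under assumptions: the names `Valence`, `Effect`, and `quadrant`, and the split of intention into the two flags `anticipated` and `aimed_at`, are hypothetical and are not part of the model as presented above. The sketch keeps the simple categorical distinctions and, like the figure, has no place for neutral effects.

```python
from dataclasses import dataclass
from enum import Enum


class Valence(Enum):
    """Judged from the analyst's perspective, not the actor's."""
    POSITIVE = "positive"
    NEGATIVE = "negative"


@dataclass(frozen=True)
class Effect:
    description: str
    valence: Valence   # the analyst's judgement
    anticipated: bool  # did the actor foresee this effect? (hypothetical flag)
    aimed_at: bool     # was causing it part of the actor's plan or purpose? (hypothetical flag)

    @property
    def intended(self) -> bool:
        # An unanticipated effect cannot have been intended by the actor,
        # and an anticipated effect may still be unintended.
        return self.anticipated and self.aimed_at


def quadrant(effect: Effect) -> str:
    """Return the position of the effect in the figure's two-by-two space."""
    row = "top" if effect.intended else "bottom"
    column = "left" if effect.valence is Valence.POSITIVE else "right"
    return f"{row} {column}"


# Examples taken from the text above.
fewer_wars = Effect("great power wars become less likely",
                    Valence.POSITIVE, anticipated=True, aimed_at=True)
terror_deaths = Effect("deaths from a terrorist action",
                       Valence.NEGATIVE, anticipated=True, aimed_at=True)
totalitarianism = Effect("mass surveillance results in totalitarianism",
                         Valence.NEGATIVE, anticipated=True, aimed_at=False)
```

Here `terror_deaths` is intended (from the actor's perspective) but negative (from the analyst's perspective), while `totalitarianism` is anticipated but not intended.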

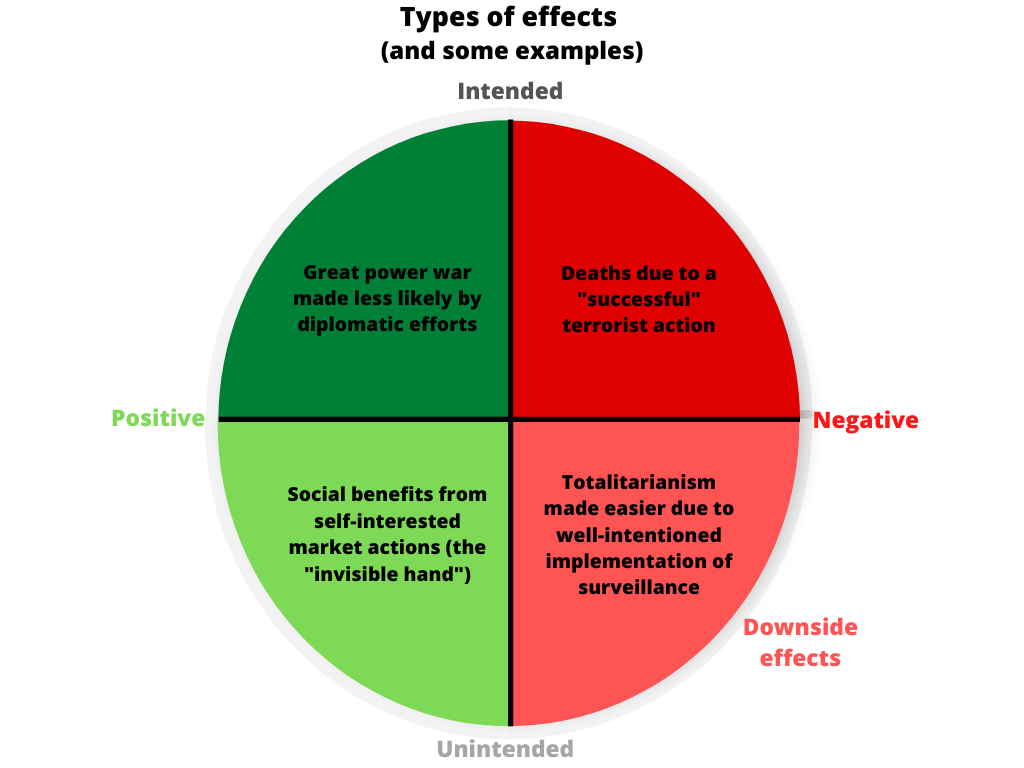

Downside risks and downside effects

One definition of a downside is “The negative aspect of something otherwise regarded as good or desirable” (Lexico). By extension, I would say that, roughly speaking, a downside risk is a risk (or possibility) that there may be a negative effect of something that is good overall, or that was expected or intended to be good overall. Note that a downside risk exists before the action is taken or the full effects are known (i.e., ex ante). (There’s also a more specific meaning of the term downside risk in finance, but that isn’t directly relevant here.)

It seems useful to also be able to refer to occasions when the negative effects actually did occur (i.e., ex post). It seems to me that the natural term to use would be downside effect, which we could roughly define as “a negative effect of something that is good overall, or was expected or intended to be good overall.”[4]

Thus, it seems to me that, roughly speaking, downside effects are unintended, negative effects, and correspond to the bottom right quadrant of our space of effects:[5]

We could also create an “ex ante” version of this figure, where effects are replaced with possibilities, or with effects to which one assigns some credence. In this version of the figure, we'd replace “downside effects” with “downside risks”.
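The ex ante versus ex post distinction can be sketched in the same spirit. The following is a minimal sketch, assuming a hypothetical `PossibleEffect` record with a `credence` field; the credence value used in the example is invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PossibleEffect:
    description: str
    negative: bool   # the analyst's judgement
    intended: bool   # from the actor's perspective
    credence: float  # ex ante probability that the effect occurs (hypothetical number)


def is_downside_risk(possible: PossibleEffect) -> bool:
    """Ex ante: an unintended, negative effect that might occur."""
    return possible.negative and not possible.intended and possible.credence > 0


def is_downside_effect(possible: PossibleEffect, occurred: bool) -> bool:
    """Ex post: the unintended, negative effect actually did occur."""
    return occurred and possible.negative and not possible.intended


# Example from the introduction; the credence of 0.2 is made up.
diverted_resources = PossibleEffect(
    "project diverts money or attention from more valuable things",
    negative=True, intended=False, credence=0.2,
)
```

The same possibility is a downside risk before the action is taken, and only becomes a downside effect if it actually materialises.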

Information hazards

An information hazard is “A risk that arises from the dissemination or the potential dissemination of (true) information that may cause harm or enable some agent to cause harm” (Bostrom). There are many types of information hazards, some of which are particularly important from the perspective of reducing catastrophic or existential risks. For example, there are “Data hazard[s]: Specific data, such as the genetic sequence of a lethal pathogen or a blueprint for making a thermonuclear weapon, if disseminated, create risk” (Bostrom).

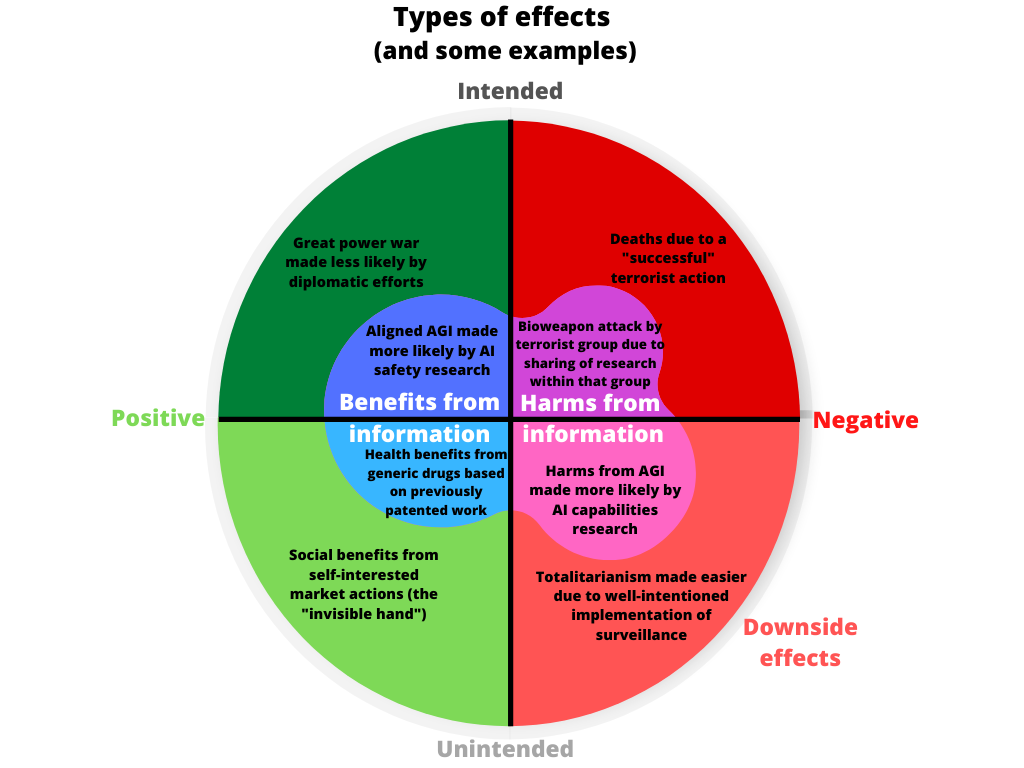

One thing to highlight is that an information hazard is a risk, not an actual effect. If we wish to refer specifically to the potential harms themselves (rather than to the risk that such harms occur), or to the harms that did end up occurring (ex post), we could say “harms from information” or “harms from information hazards”.

Harms from information hazards are a subset of all negative effects, covering the cases in which the cause of the negative effects would be the sharing of (true) information. (Information hazards are the risks that such harms occur.) Likewise, benefits from information is a subset of all positive effects, covering the cases in which the cause of the positive effects would be the sharing of (true) information.

We can thus map “effects from information” (both benefits and harms) as subsets of each of the four quadrants of our space of effects:

(Again, I’ve added a handful of examples to illustrate the concepts. Note that there’s no particular significance to the specific “splotchy” shape I’ve used; I just thought that a circle made the diagram look “too neat” and too much like a bullseye.)

Most obviously, there are many cases of intended positive effects of information. This is arguably the main premise justifying a great deal of expenditure on education, research, journalism, and similar.

But there can also be unintended positive effects of information: positive effects that were unexpected, or weren’t the primary reason why someone took the action they took. In fact, I would guess that a lot of education, research, and journalistic efforts that are intended to have positive effects also have additional positive effects that weren’t expected or intended. For example, research with a specific focus often provides additional, unexpected, useful discoveries.

The most obvious kinds of harm from information hazards are unintended negative effects. For example, in the above quote from Bostrom, it seems likely that the people who originally developed or shared “the genetic sequence of a lethal pathogen or a blueprint for making a thermonuclear weapon” did not intend for this to lead to a global or existential catastrophe. Thus, such an outcome, if it occurred, would be an unintended effect.

But it also seems possible for harms from information hazards to be intended. For example, the people who developed or shared “the genetic sequence of a lethal pathogen or a blueprint for making a thermonuclear weapon” may indeed have intended for this to lead to something that “we” (the “analysts”) would consider negative. For example, those people may have intended for their own militaries to use these technologies against others, in ways that “we” would see as net negative.

Thus, harms from information hazards are negative effects (whether intended or not) from the sharing of (true) information, corresponding to both the purple and pink sections of the above diagram.
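To make the subset relationships concrete, here is a minimal Python sketch. The `Effect` record and the three predicate functions are hypothetical names chosen for illustration; the two example effects are taken from the discussion of Bostrom's quote above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Effect:
    description: str
    positive: bool              # the analyst's judgement
    intended: bool              # from the actor's perspective
    via_true_information: bool  # caused by the sharing of (true) information?


def is_harm_from_information_hazard(effect: Effect) -> bool:
    # Negative effect from sharing true information, whether intended or not.
    return effect.via_true_information and not effect.positive


def is_benefit_from_information(effect: Effect) -> bool:
    # Positive effect from sharing true information, whether intended or not.
    return effect.via_true_information and effect.positive


def is_downside_effect(effect: Effect) -> bool:
    # Roughly: unintended negative effect, from any cause.
    return not effect.positive and not effect.intended


accidental_catastrophe = Effect(
    "shared pathogen sequence leads to a catastrophe nobody intended",
    positive=False, intended=False, via_true_information=True,
)
military_use = Effect(
    "shared weapon blueprint is used by the sharers' own military as they intended",
    positive=False, intended=True, via_true_information=True,
)
```

Both examples are harms from information hazards, but only `accidental_catastrophe` is also a downside effect, which matches the claim that these harms span both the intended and unintended negative quadrants.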

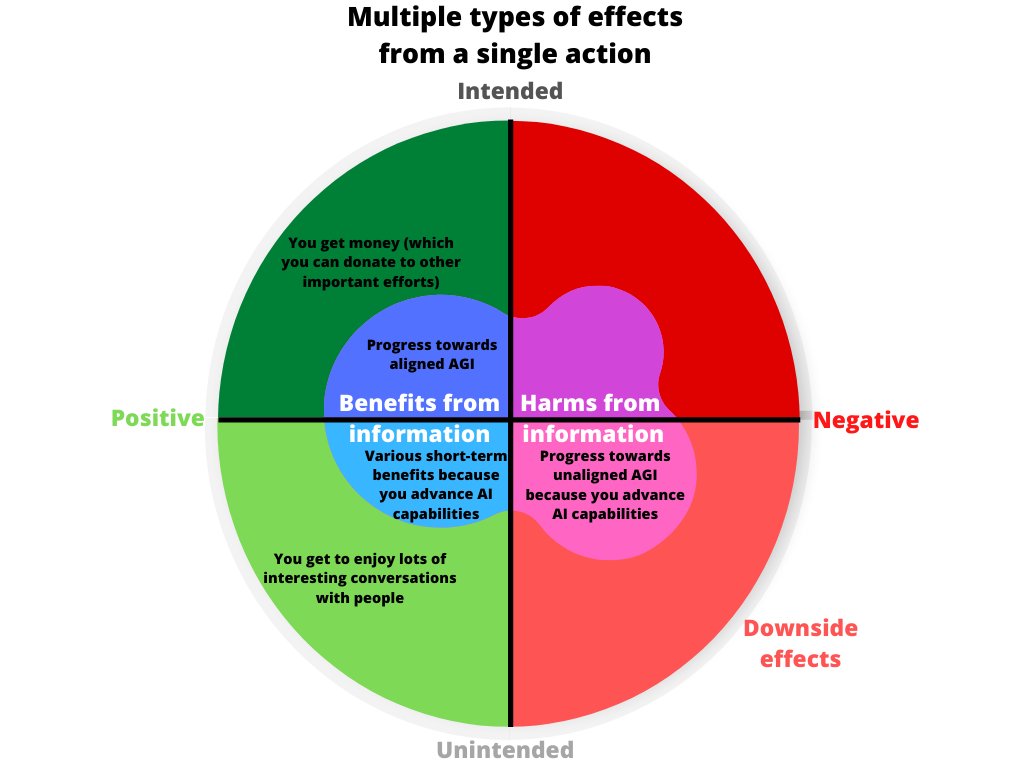

One action can have many effects

Part of why Convergence thinks that these concepts are important, and that it may useful to clarify and map them, is that well-intentioned actions can have (a chance of causing) a variety of effects. Thus, we think that an important way to ensure one’s actions have a more positive impact on the world is to attend to, predict, and mitigate the downside risks and information hazards those actions might pose.

We will illustrate this with an example. Imagine you’re studying computer science, and considering the action of becoming an AI safety researcher, with a focus on reducing the risks of particularly catastrophic outcomes. Some of the potential effects of this action can be shown in the diagram below:

Your rationale for taking this action might be that you could make progress towards safe, aligned AGI (which has various benefits, including reducing risks from unsafe, unaligned AGI), and that you could make a good salary, allowing you to donate more to efforts to mitigate other risks (e.g., work on alternative foods or on reducing biorisks). These are the intended effects of the action. Both are positive, and the first occurs via the sharing of (true) information.

Taking this action might also result in you having lots of interesting conversations with interesting people. This is something you’d enjoy, but it’s not something you specifically anticipated or had as a reason for taking this action. As such, it’s an unintended positive effect.

Finally, although your research would be intended to advance AI safety and alignment in particular, it might also result in insights that advance AI capabilities in general (see also this post). This could have various short-term benefits, such as leading to better diagnosis and treatment of medical conditions, earlier detection of potential pandemics, and faster progress of clean energy technologies. But it could also advance progress towards unaligned, unsafe AGI.

This last risk is an information hazard. It’s possible that that information hazard would be substantial enough to make the action as a whole net negative (in expectation), such that you should avoid it. At the least, it seems likely that considering that risk in advance would help you choose a specific version or execution of the action that minimises that risk, and would help you look out for signs that that downside effect is occurring. In either case, thinking in advance about potential downside effects and information hazards would help you to have a more positive effect on the world.

Note that this is just one example; we think that the ideas in this post also apply to non-researchers and to issues other than catastrophic and existential risks.
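One way to picture “net negative (in expectation)” for the AI safety example is a toy expected-value tally. This is a sketch only: every probability and value below is an invented, hypothetical number in arbitrary units, chosen to show the bookkeeping rather than to estimate anything, and the names `PossibleEffect` and `expected_value` are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PossibleEffect:
    description: str
    probability: float     # hypothetical credence that the effect occurs
    value: float           # hypothetical value in arbitrary units; negative means harm
    intended: bool         # from the actor's perspective
    via_information: bool  # occurs via the sharing of (true) information?


# Effects from the example above, with made-up numbers.
effects = [
    PossibleEffect("progress towards safe, aligned AGI", 0.3, 100.0, True, True),
    PossibleEffect("good salary, allowing more donations", 0.9, 10.0, True, False),
    PossibleEffect("interesting conversations with interesting people", 0.8, 1.0, False, False),
    PossibleEffect("short-term benefits from capabilities insights", 0.2, 5.0, False, True),
    PossibleEffect("progress towards unaligned, unsafe AGI", 0.1, -150.0, False, True),
]


def expected_value(possible_effects: list[PossibleEffect]) -> float:
    """Sum of probability-weighted values across all possible effects."""
    return sum(e.probability * e.value for e in possible_effects)


# Risks worth examining before acting.
downside_risks = [e for e in effects if e.value < 0 and not e.intended]
information_hazards = [e for e in effects if e.value < 0 and e.via_information]

# Whether the action as a whole looks net positive under these made-up numbers.
net_positive = expected_value(effects) > 0
```

With different (equally hypothetical) numbers for the last effect, the same tally could come out net negative, which is the situation in which the text suggests avoiding or reshaping the action.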

Closing remarks

In this post, I’ve attempted to map part of the space of potential effects, specifically focusing on positive vs negative effects, intended vs unintended effects, downside effects, and harms from information hazards. I’ve argued that, roughly speaking, downside effects are unintended negative effects, and information hazards are risks of (typically unintended) negative effects from the sharing of (true) information.

I hope that you’ve found the ideas and explanations in this post clear, and that they’ll be helpful to you in considering the range of potential effects your actions could have, and choosing actions so as to minimise the downsides and maximise the benefits.

In my next post, I’ll discuss in more detail why people should care about information hazards, and provide some suggestions for how to handle them.

Suggestion for future work

There are many other ways one could carve up the space of types of effects (or types of ex-ante possibilities), and other ways the space could be represented (e.g., with a third dimension, or with decision trees). For a handful of examples:

- I previously visually represented the relationship between positive effects, differential progress, differential intellectual progress, and differential technological development.

- One could try to also map subtypes of information hazards (e.g., data hazards, attention hazards; see Bostrom’s paper or my post), and/or come up with and map subtypes of downside risks.

- One could try to visually represent relationships between direct, indirect, short-term, long-term, local, and distant effects.

We hope to provide more of these sorts of analyses and mappings in future. But we would also be excited to see others do similar work.

Thanks to David Kristoffersson and Justin Shovelain for helping develop the ideas in this post, and for useful feedback.

See here and here for additional sources related to information hazards and downside risks, respectively.

[1] There is also a massive range of other possible examples for each type of effect. The examples I’ve given are not necessarily “typical”, nor necessarily the most extreme or most important.

[2] Note that our key aim in this post is to highlight the importance of, and provide tools for, considering the range of effects (including negative effects) which one’s actions could have. Our aim is not to make claims about which specific actions one should then take.

[3] Likewise, in a more continuous representation of the space, “edge cases” of effects with debatable intentionality could be placed on or near the horizontal dividing line.

[4] The term downside effect returns surprisingly few results on Google, and I couldn’t quickly find any general-purpose definition of the term. But the term and my rough definition seem to me like very natural and intuitive extensions of existing terms and concepts.

[5] I think that, “technically”, if we take my rough definition quite seriously, we could think of convoluted cases in which there are downside effects that aren’t unintended negative effects. For example, let’s say some terrorists perform a minor action intended to hurt some people, and do achieve this, but their action also gives the authorities information that results in the capture of these terrorists and various related groups. Let’s also say that this means that, overall, the action actually led to the world being better, on balance. In this example, the hurting of those people was indeed “a negative effect of something that is good overall”, and yet was also intended. But this sort of thing seems like an edge case that can mostly be ignored.
