Memetic downside risks: How ideas can evolve and cause harm

post by MichaelA, JustinShovelain, algekalipso · 2020-02-25T19:47:18.237Z · LW · GW · 3 comments

This post was written for Convergence Analysis.

Overview

We introduce the concept of memetic downside risks (MDRs): risks of unintended negative effects that arise from how ideas “evolve” over time (as a result of replication, mutation, and selection). We discuss how this concept relates to the existing concepts of memetics, downside risks, and information hazards.

We then outline four “directions” in which ideas may evolve: towards simplicity, salience, usefulness, and perceived usefulness. For each “direction”, we give an example to illustrate how an idea mutating in that direction could have negative effects.

We then discuss some implications of these ideas for people and organisations trying to improve the world, who wish to achieve their altruistic objectives and minimise the unintended harms they cause. For example, we argue that the possibility of memetic downside risks increases the value of caution about what and how to communicate, and of “high-fidelity” methods of communication.

Background and concept

Memetics

Wikipedia describes a meme as:

an idea, behavior, or style that spreads by means of imitation from person to person within a culture—often with the aim of conveying a particular phenomenon, theme, or meaning represented by the meme. A meme acts as a unit for carrying cultural ideas, symbols, or practices, that can be transmitted from one mind to another through writing, speech, gestures, rituals, or other imitable phenomena with a mimicked theme.

The same article goes on to say:

Proponents [of the concept of memes] theorize that memes are a viral phenomenon that may evolve by natural selection in a manner analogous to that of biological evolution. Memes do this through the processes of variation, mutation, competition, and inheritance, each of which influences a meme's reproductive success. Memes spread through the behavior that they generate in their hosts. Memes that propagate less prolifically may become extinct, while others may survive, spread, and (for better or for worse) mutate. Memes that replicate most effectively enjoy more success, and some may replicate effectively even when they prove to be detrimental to the welfare of their hosts.

Memetics, in turn, is “the discipline that studies memes and their connections to human and other carriers of them” (Wikipedia).[1]

Downside risks

In an earlier article, we discussed the concept of a downside risk, which we roughly defined as a “risk (or possibility) that there may be a negative effect of something that is overall good, or that was expected or intended to be good”. Essentially, a downside risk is a risk that a given action will lead to an unintended negative effect (i.e., will lead to a downside effect).

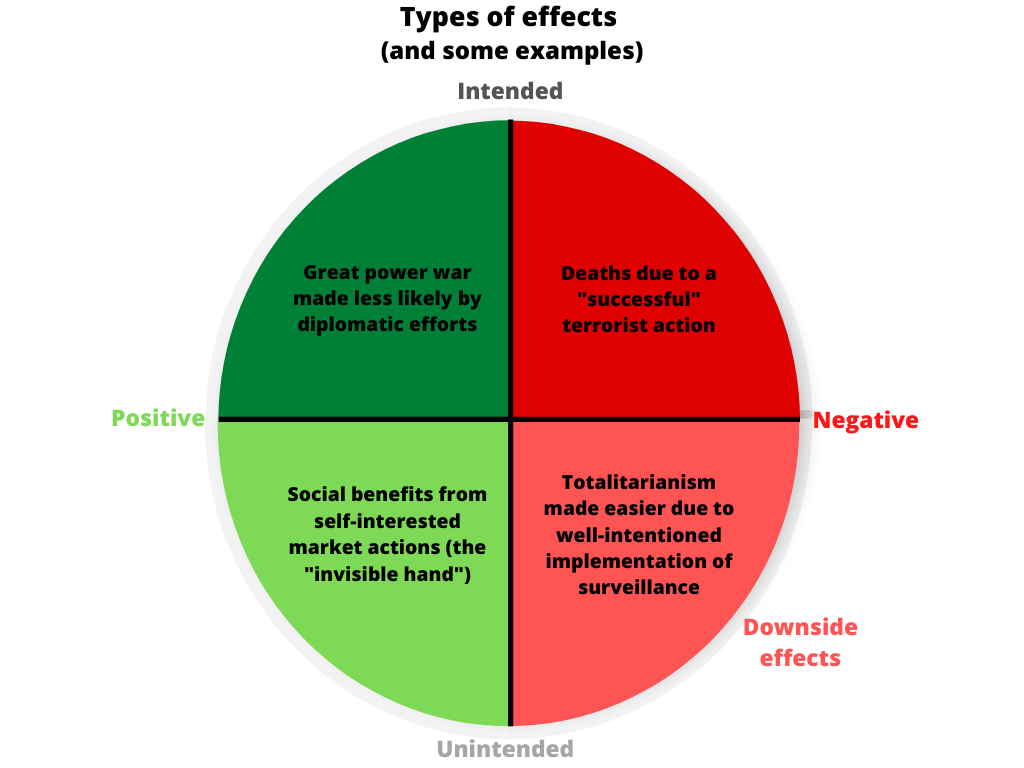

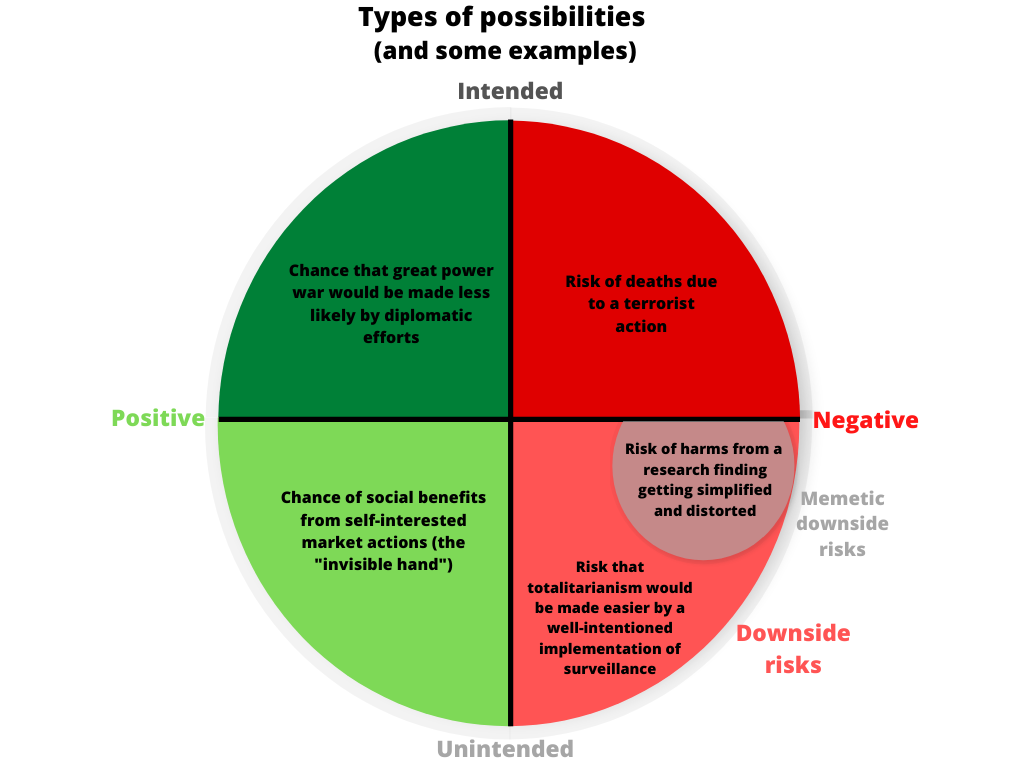

We can thus visually represent downside risks, in relation to other types of possibilities (e.g., the possibility of an intended positive effect), as shown in the following diagram:

This diagram also includes some examples to illustrate each type of possibility. The diagram is adapted from our previous post [LW · GW], which provides further details and explanation.

If an action poses a downside risk, this reduces its expected value. But the downside risk won’t necessarily make the action’s expected value negative, as the potential benefits may outweigh the risks.

Memetic downside risks

We propose bringing the concepts of memetics and downside risks together in the concept of memetic downside risks (MDRs), defined as risks of unintended negative effects that arise from how ideas “evolve” over time (as a result of replication, mutation, and selection). In other words, MDRs are a subset of all downside risks, covering the cases in which the cause of the negative effects would be how ideas evolve over time. We can thus add MDRs (along with an illustrative example) within the bottom right section of the above diagram, as shown below:

Note that we use the term “ideas” quite broadly here, such that it includes true information, false information, normative claims (e.g., “the suffering of non-human animals is bad”), concepts and constructs, plans and methodologies (e.g., the lean startup methodology), etc. For our present purposes, we would also refer to each different way of stating, framing, or presenting an idea as an individual idea or as a “version” or “variant” of that idea.[2]

We’ll now illustrate the concept of MDRs with an example.

80,000 Hours popularised the idea of “earning to give”: aiming to earn more money in order to donate more to effective organisations or important causes. 80,000 Hours “always believed, however, that earning to give is just one strategy among many, and [they] think that a minority of people should pursue it.” Unfortunately, according to 80,000 Hours, they have come to be seen “primarily as the people who advocate for earning to give. [...] The cost is that we’ve put off people who would have been interested in us otherwise.”

It seems likely to us that popularising the idea of earning to give would have had few downsides if the idea had always been understood in its intended form, in context alongside 80,000 Hours’ other views. However, as the idea was spread (i.e., as it replicated), some simplifications or misinterpretations of the idea and how it fit alongside 80,000 Hours’ other advice occurred (i.e., the idea mutated). Gradually, these distorted versions of the idea became more prominent, seemingly because they were more attention-grabbing (so the media preferred to use them), and they were more memorable (so these versions of the idea were more likely to stick in people’s minds and be available for further sharing later). That is, the selection pressures favoured these mutations. (See also Vaughan.)

Thus, the “cost” 80,000 Hours notes seems to be an example of an unintended harm from the way in which an idea that was valuable in itself evolved. That is, it seems there was an (unrecognised) memetic downside risk, and that a memetic downside effect indeed occurred.

We should make two things clear at this point:

- We’re not arguing that developing and sharing the idea of earning to give was net negative. It’s entirely possible for an action to have a downside risk (or cause a downside effect), which is worth paying attention to and mitigating if possible, even if the overall expected value (or actual impact) is still positive.

- We think that this concept of MDRs, and our later discussion of the directions in which ideas can evolve, is fairly commonsensical, and that various related ideas already exist. We don’t claim these ideas are groundbreaking, but rather that this is a potentially useful way to collect, frame, and extend various existing ideas.
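The first point above (that an action can carry a downside risk and still be worthwhile overall) can be made concrete with a small worked example. This is only an illustrative sketch: the symbols and numbers are assumptions chosen for the arithmetic, not estimates from this post. Suppose an action produces a benefit of size $B$ with probability $p$, and a downside effect of size $D$ with probability $q$.

```latex
\[
\mathrm{EV} = p\,B - q\,D
\]
\[
\text{Without the downside risk } (q = 0,\ p = 0.8,\ B = 10):\quad \mathrm{EV} = 0.8 \times 10 = 8
\]
\[
\text{With } q = 0.1,\ D = 30:\quad \mathrm{EV} = 0.8 \times 10 - 0.1 \times 30 = 5
\]
\[
\text{With } q = 0.1,\ D = 100:\quad \mathrm{EV} = 0.8 \times 10 - 0.1 \times 100 = -2
\]
```

In the second case the downside risk lowers the expected value (from 8 to 5) without making it negative; only in the third case, where the assumed harm is much larger, does the action become net negative in expectation.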

Information hazards

We’ve previously discussed the concept of an information hazard, as well as why it’s worth caring about potential information hazards, who should care about them, and some options for handling them. In essence, an information hazard is a “risk which arises from the dissemination or the potential dissemination of (true) information that may cause harm or enable some agent to cause harm” (Bostrom). There are many types of information hazards, such as “Data hazard[s]: Specific data, such as the genetic sequence of a lethal pathogen or a blueprint for making a thermonuclear weapon, if disseminated, create risk” (Bostrom).

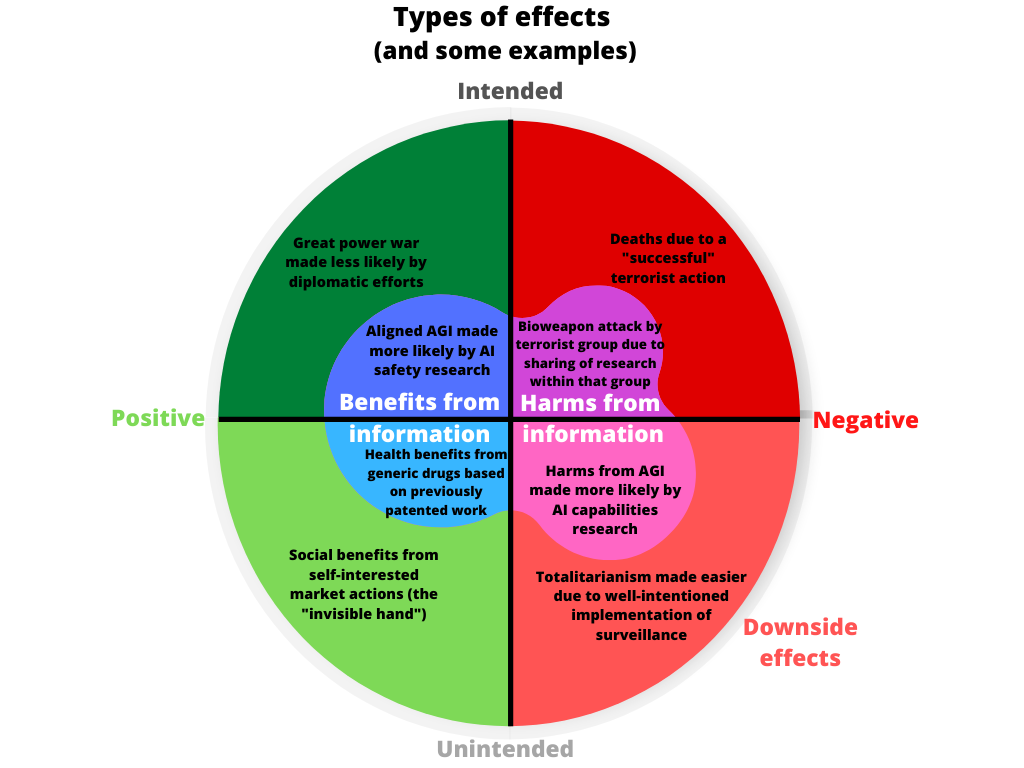

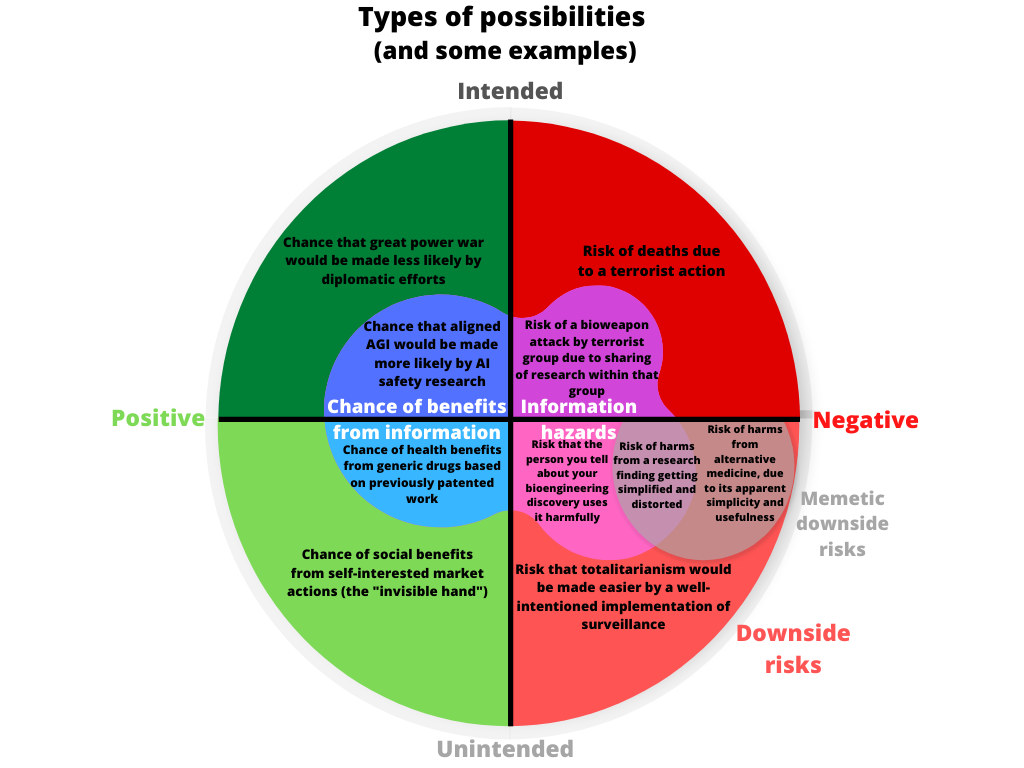

As discussed in our previous post [LW · GW], information hazards can be risks of unintended negative effects (in which case they are also downside risks), or of intended negative effects. Information hazards (and two illustrative examples) can thus be represented as the pink and purple sections of the below diagram:

Relationship between MDRs and information hazards

As noted above, we define MDRs as risks of harm from how ideas evolve over time, with “ideas” including true information, but also including false information and ideas that aren’t well-described as true or false (e.g., plans and methodologies). Thus, some MDRs will also be information hazards, but others will not be.

For instance, compared to the claims of mainstream medicine, the claims of alternative medicine may often seem:

-

Simpler

- For example, the claims may draw on existing and plausible-seeming superstitions, rather than on complicated biological mechanisms.

-

More impressive

- For example, the claims may be confident assertions that all diseases will be cured by a given treatment, rather than that there’s a certain probability that particular diseases would be cured.

As noted in the next section, selection pressures may favour ideas that are simpler and that are perceived as more useful. This may help explain why ideas related to alternative medicine continue to be widely believed, despite lacking evidence and often being harmful (e.g., through side effects, costs, or decreasing the use of mainstream medicine). If so, this would be a case of harm from MDRs (i.e., harms from how ideas evolved over time), but not a case of harm from information hazards, because the harm-inducing information was not true.

Likewise, some information hazards will also be MDRs, but others won’t be. This is despite the fact that any true information can be considered an “idea” (as we use the term). This is because not all harms from information hazards would be due to how ideas evolve over time.

For example, it would be risky to share “the genetic sequence of a lethal pathogen or a blueprint for making a thermonuclear weapon” (Bostrom) to a person who might themselves use this information in a directly dangerous way. This is the case even if there’s no possibility that the recipient of the information would share the information further, or that the person would receive or share a distorted version of the information. Thus, this is an information hazard even if there is no chance of replications, mutations, or selection pressures.

Thus, neither information hazards nor MDRs are a subset of the other. But the category of MDRs overlaps substantially with the category of unintended information hazards. This can be represented in the following Venn diagram (which once again provides examples for illustration):

Directions in which ideas will often evolve

We believe that ideas will often “evolve” towards simplicity, salience, usefulness, and perceived usefulness. Each of these directions represents a selection pressure acting on the population of ideas. Below, we will explain why we think it is likely that evolution in each of those four directions will often occur, and how such evolution could result in unintended harm. Then, in the final section, we will discuss some implications of these ideas for people and organisations trying to improve the world, who wish to achieve their altruistic objectives and minimise the unintended harms they cause.

Some quick caveats first:

- The evolution of ideas in these directions will not necessarily cause harm, and could sometimes be beneficial.

- We doubt that our list is exhaustive; there are likely also other directions in which ideas could harmfully evolve.

- Ideas will often evolve in more than one of these directions at the same time, and sometimes these evolutions will be related or hard to tease apart (e.g., an idea may become more salient partly because it has become simpler).

- As noted earlier, we see most of these ideas as fairly commonsensical, and as related to various phenomena that people often point out. Our hope is simply that this will be a useful way to collect, frame, and extend these relatively common ideas.

- That said, we also acknowledge that some of these claims (including some commonsensical and commonly noted ones) are somewhat speculative, or are grounded mostly in perceptions and anecdotes rather than firm evidence.

Towards simplicity

The simpler an idea is, the easier the idea will be to remember, communicate, and understand. Thus, we would predict that the simpler an idea (or a version of an idea) is, the more likely it is that:

- people will be able to transmit the idea (as they’ll be more likely to recall it)

- people will be motivated to transmit the idea (as doing so will take less effort)

- the idea will be received and remembered intact

As such, over time, simpler ideas may become dominant over more complicated ideas.[3]

A good example of this, and how it can cause harm, is the example mentioned earlier of how a simplified version of the idea of earning to give evolved, became widespread, and likely turned some people off of 80,000 Hours.

This is also related to the idea of hedge drift, in which:

The originator of [a] claim adds a number of caveats and hedges to their claim [e.g., “somewhat”, qualifications of where the claim doesn’t hold], which makes it more defensible, but less striking and sometimes also less interesting. When others refer to the same claim, the caveats and hedges gradually disappear, however.
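The tendency for simpler variants to become dominant can be illustrated with a toy model. The following is a minimal sketch, not a model proposed or tested in this post. It assumes just two variants of an idea (a "hedged" original and a "simplified" version), and every name and parameter value (`next_shares`, `simulate`, `p_hedged`, `p_simple`, `mutation`) is a hypothetical choice made purely for illustration. The only assumptions carried over from the text are that simpler versions are retold more successfully, and that caveats are sometimes dropped in retelling.

```python
def next_shares(hedged, simplified, p_hedged, p_simple, mutation):
    """Advance the population shares of the two variants by one round of retelling."""
    # Hedged retellings that keep their caveats intact.
    new_hedged = hedged * p_hedged * (1 - mutation)
    # Simplified retellings, plus hedged retellings that lost their caveats.
    new_simple = simplified * p_simple + hedged * p_hedged * mutation
    # Renormalise so the two shares always sum to 1.
    total = new_hedged + new_simple
    return new_hedged / total, new_simple / total


def simulate(generations, p_hedged=0.5, p_simple=0.7, mutation=0.1):
    """Return the (hedged, simplified) shares for each round, starting fully hedged.

    p_hedged / p_simple: assumed chance that a retelling of each variant "sticks".
    mutation: assumed chance that a hedged retelling drops its caveats.
    """
    hedged, simplified = 1.0, 0.0
    history = [(hedged, simplified)]
    for _ in range(generations):
        hedged, simplified = next_shares(
            hedged, simplified, p_hedged, p_simple, mutation
        )
        history.append((hedged, simplified))
    return history


if __name__ == "__main__":
    for generation, (hedged, simplified) in enumerate(simulate(20)):
        if generation % 5 == 0:
            print(f"generation {generation:2d}: "
                  f"hedged={hedged:.3f}, simplified={simplified:.3f}")
```

Under these assumed parameters, the hedged version loses share in every round even though everyone starts with it. Changing the parameters changes the speed of the shift, and setting `p_simple` below `p_hedged` can stop the simplified version from taking over, which is one way of seeing why the direction of the selection pressure matters.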

Towards salience

Salience is:

the state or quality by which [an item] stands out from its neighbors. Saliency detection is considered to be a key attentional mechanism that facilitates learning and survival by enabling organisms to focus their limited perceptual and cognitive resources on the most pertinent subset of the available sensory data. (Wikipedia)

A more salient (version of an) idea will be easier to remember (just as with simpler ideas), and will stand out more to “audiences” (listeners, readers, etc.). Thus, more salient ideas may tend to spread and survive better than less salient ideas do.

We expect ideas may be more salient if they cause strong emotions, and particularly strong negative emotions (see negativity bias).

For a (slightly) hypothetical example, imagine that an AI researcher highlights reasons to think very carefully about the existential risks that artificial general intelligence could pose. Multiple newspapers report on this researcher’s statements, with various different headlines, pictures, framings, and tones. The newspaper articles that get the most attention and shares are those with headlines like “AI will cause extinction in next 20 years”, pictures of the Terminator, and no mention of the reasonable strategies the researcher discussed for mitigating AI risk.

This is because these extreme, fear-inducing articles are the most striking (as well as the simplest). This leads to more newspapers picking up and further spreading this salient and simple version of the story. Ultimately, this may reduce the amount of respect and (positive) attention given to work on AI safety and existential risks.

This is again related to the idea of hedge drift, as a version of an idea that has fewer hedges and caveats may be more exciting, fear-inducing, or otherwise attention-grabbing.

Towards usefulness

Ideas that are more useful (i.e., higher quality or better), from a particular agent’s perspective, will likely seem more worthy of attention, memory, and sharing. Thus, more useful (versions of) ideas may gradually become more common than less useful ones.

We expect that evolution in this direction would be beneficial in the vast majority of cases. However, it seems plausible that such evolution could be harmful in cases where an agent becoming better able to achieve its goals would actually be morally bad, or bad for society (likely due to the agent being malicious, misinformed, or careless).

For example, a wide range of ideas (from the scientific method to specific methods of microscopy) have been developed that allow for faster research and development by scientists, corporations, and society. These ideas continue to be refined into more and more useful versions. Additionally, these ideas, and refinements upon them, spread widely, regularly displacing earlier, less useful ideas.

This has produced a wide range of benefits, but has also arguably increased certain catastrophic and existential risks from advanced technologies (see also our post on differential progress). To the extent that this is true, this would be a case of memetic downside risks. (Note that, as mentioned earlier, this doesn’t necessarily mean or require that the evolution of the ideas is net negative, just that there are some risks.)

One form of usefulness that may be particularly likely to create risks is usefulness for social signalling (e.g., signalling intelligence, kindness, or membership in some group). For example, there is some evidence (a) that motivated reasoning on politically charged topics is (at least in part) a “form of information processing that promotes individuals’ interests in forming and maintaining beliefs that signify their loyalty to important affinity groups”, and (b) that this may help explain political polarisation. If this is true, that would mean (a) that selection pressures favour gradually more extreme and sometimes inaccurate ideas as a result of those ideas being useful in some ways for the individuals who hold and express them, and (b) that this creates a risk of misguided public policies and increased political divisiveness. This would be an MDR.

Towards perceived usefulness

We’ve argued that ideas will often evolve towards usefulness, because this makes ideas seem more worth paying attention to, remembering, and sharing. But we don’t always know how useful an idea actually is. Thus, even if an idea isn’t actually better or more useful than other ideas, if it appears more useful (i.e., if it can “oversell itself”), it may be more likely to be noticed, remembered, and shared, and may come to dominate over time.

Note that evolution towards merely perceived usefulness will be less likely when it’s easier for people to assess the true usefulness of an idea.

This once more seems related to hedge drift. For example (from that post):

scientific claims (particularly in messy fields like psychology and economics) often come with a number of caveats and hedges, which tend to get lost when re-told. This is especially so when media writes about these claims, but even other scientists often fail to properly transmit all the hedges and caveats that come with them.

This is probably partly due to the tendencies noted above for ideas to evolve towards simplicity and salience. However, it may also be partly because a “hedge-free” version of a scientist’s ideas seems more useful to audiences than the original version of the ideas.

For example, a version of the scientist’s ideas that says a particular treatment definitely cures cancer may seem more useful than a version that includes mention of unrepresentative samples, uncontrolled variables, the test being exploratory rather than confirmatory, etc. Other scientists, journalists, and the public may pay more attention to the more useful-seeming version of the idea, and may consider that version more worth spreading. This is understandable, but could cause harm by leading to too many resources being spent on this alleged cure, rather than on options that in fact have more potential.

“Noise” and “memetic drift”

There are two additional noteworthy ways in which ideas may tend to evolve. In contrast to the four “directions” discussed above, these tendencies do not rely on selection pressures.

Firstly, a given idea may have a tendency to become noisier over time: to change in random directions, due to random mutations that aren’t subsequently corrected. The classic example is the game of telephone, in which the message that is ultimately received is typically very different to the message that was originally sent. In our terminology, the idea mutates as it is replicated. The evolution of the idea may to some extent be towards simplicity or salience, such as because people might tend to jump to the silliest (and thus most attention-grabbing) interpretation of hard-to-hear whispers. But it seems likely that the evolution is mostly just random; each replication of the idea (each time it is whispered and heard) is an imperfect copy, so the idea drifts in unpredictable directions.

Secondly, there will be some randomness in which ideas, out of the population of currently existing ideas, survive and become dominant. We could call this “memetic drift”, by analogy to genetic drift.

We would expect both of these tendencies to typically be harmful, because there may typically be far more ways in which an idea or population of ideas could randomly shift for the worse than for the better (see also generation loss and regression to the mean). On the other hand, these random shifts, and thus the harm they cause, may typically only be small, due to:

- the tendency for less useful (versions of) ideas to gradually be replaced by more useful ones

- the ability of people to make an active effort to avoid random shifts, such as by communicating clearly, checking for mutual understanding, and choosing ideas carefully
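The “memetic drift” described above can be sketched in the same spirit as simple models of genetic drift. This is a minimal illustration under stated assumptions, not an empirical model: it assumes a fixed-size population of idea-holders, each of whom copies a variant chosen uniformly at random from the previous round, with no selection pressure and no mutation. The function name `drift` and its parameters are hypothetical.

```python
import random


def drift(variants, generations, seed=0):
    """Simulate neutral drift among idea variants.

    variants: the starting population, one variant label per idea-holder.
    generations: number of rounds of random copying.
    seed: seed for the random number generator, so runs are repeatable.

    Returns the final population and the number of distinct variants
    present at each round (including the starting round).
    """
    rng = random.Random(seed)
    population = list(variants)
    distinct_counts = [len(set(population))]
    for _ in range(generations):
        # Each holder in the new round copies a randomly chosen holder
        # from the previous round; no variant is favoured.
        population = [rng.choice(population) for _ in population]
        distinct_counts.append(len(set(population)))
    return population, distinct_counts


if __name__ == "__main__":
    start = ["A", "B", "C", "D"] * 5  # 20 holders, four equally common variants
    final_population, distinct_counts = drift(start, generations=50)
    print("distinct variants per round:", distinct_counts)
    print("surviving variants:", sorted(set(final_population)))
```

Because each round only copies from the previous one, a variant that disappears can never return in this sketch, so the number of distinct variants can only stay the same or fall. Which variants survive depends on chance alone, which is the sense in which this kind of drift does not rely on selection pressures.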

Discussion and implications

In this post, we introduced the concept of memetic downside risks (MDRs): risks of unintended negative effects that arise from how ideas evolve over time. We discussed how this concept is a subset of all downside risks, and how the concept overlaps with, but is distinct from, information hazards. We described how ideas may often evolve towards simplicity, salience, usefulness, and perceived usefulness, as well as evolving in random ways. We noted how each of these forms of evolution can have negative effects.

We believe that preventing or mitigating downside risks more broadly is an important way for people and groups working to improve the world to do so more effectively. Furthermore, we think that such altruistic actors may often face potential MDRs, particularly if those actors are involved in substantial amounts of research and communication (including via informal channels like blog posts or small events). This is why we wished to draw further attention to, and more clearly conceptualise, MDRs; we think that this should help altruistic actors to notice, think about, and effectively handle one type of downside risk.

What, more specifically, one should do about MDRs will vary greatly in different circumstances. But we think three sets of existing ideas may provide useful starting points and guidance.

Firstly, many of our ideas for handling potential information hazards could likely be adapted for handling potential MDRs. For example, one could use the rule of thumb that it’s typically worth thinking carefully about whether an idea poses an MDR when the idea could be relatively impactful and when the idea (or something like it) isn’t already well-known. In other cases, such careful thought may typically not be worthwhile. Furthermore, when it is worth thinking carefully about whether an idea poses an MDR, some options for what to do could include sharing the idea anyway, being very careful with how you frame the idea and who you share it with, or not sharing the idea at all. (For more details, see our previous post.)

Secondly, one could draw on the Centre for Effective Altruism’s fidelity model of spreading ideas, developed in response to issues like the distortion of the idea of earning to give. Vaughan writes:

We can analyze the fidelity of a particular mechanism for spreading EA by looking at four components:

- Breadth: How many ideas can you explore?

- Depth: How much nuance can you add to the ideas?

- Environment: Will the audience be in an environment that is conducive to updating their opinions?

- Feedback: Can you adapt your message over time to improve its fidelity?

This model led CEA to be more cautious about “low-fidelity” methods of spreading ideas, such as mass media or “heated political discussion[s] on Twitter”. Instead, they put more emphasis on “high-fidelity” methods, such as academic publications and local, in-person interactions. The same basic principles should also be useful for handling MDRs in many cases, though of course other factors (e.g., difficulty publishing one's ideas in academic journals) may push in favour of using low-fidelity methods anyway.

Thirdly, one could draw on Stefan Schubert’s recommendations for preventing hedge drift (which, as noted above, is a similar phenomenon to some types of MDR):

- Many authors use eye-catching, hedge-free titles and/or abstracts, and then only include hedges in the paper itself. This is a recipe for hedge-drift and should be avoided.

- Make abundantly clear, preferably in the abstract, just how dependent the conclusions are on keys and assumptions. Say this not in a way that enables you to claim plausible deniability in case someone misinterprets you, but in a way that actually reduces the risk of hedge-drift as much as possible.

- Explicitly caution against hedge drift, using that term or a similar one, in the abstract of the paper.

All that said, most fundamentally, we hope altruistic actors become more aware of MDRs (and other downside risks), and thereby move towards appropriate levels of caution and thoughtfulness regarding what and how they communicate.[4][5]

Suggestion for future work

As noted, many of this post’s claims were somewhat speculative. We would be excited to see someone gather or generate more empirical evidence, or at least more precise arguments, related to these claims. For example, how likely is evolution in each of the directions we mentioned? How often is evolution in each direction harmful? What are the most effective ways to handle MDRs, in particular types of circumstances, with particular types of ideas that might evolve in particular ways?

We expect such work would further help people and groups more effectively achieve their altruistic objectives and minimise the unintended harms they cause in the process.

This post was written by MichaelA, based on an earlier post written by Andrés Gómez Emilsson and Justin Shovelain, and with help and feedback from Justin and David Kristoffersson. Aaron Gertler and Will Bradshaw also provided helpful comments and edits on earlier drafts.

[1] Wikipedia also notes various criticisms of the concept or study of memes, as well as counterarguments to some of these criticisms. Most notably, some criticisms allege that many of the apparent insights of memetics are actually fairly commonsensical anyway, and some criticisms allege that many of the claims associated with memetics are speculative and lacking empirical evidence. As also noted later in the main text, we would accept both of these criticisms as having some relevance to this post, but think that this post can be useful regardless.

[2] One could use a broader definition of MDRs, as risks of unintended negative effects that arise from how ideas, behaviours, or styles “evolve” over time. This would be more in keeping with a broad definition of “memes”. However, the term “memes” is also often applied particularly to ideas, and we think that the concept we wish to refer to is more useful if it’s only about ideas, and not about other behaviours or styles.

[3] This seems similar to Scott Alexander’s point that more “legible” ideas or policies may win out over time over “less legible” ones, even if the more legible ideas are actually less accurate or more flawed.

[4] Some actors may already be appropriately, or even overly, cautious and thoughtful. This is why we say “move towards appropriate levels”, rather than necessarily advocating for increased levels in all cases. It is also why we mentioned a rule of thumb for whether it’s worth even thinking carefully about whether an idea poses an MDR.

[5] One additional reason for caution when spreading ideas is that ideas can be very “sticky”. For example, “Information that initially is presumed to be correct, but that is later retracted or corrected, often continues to influence memory and reasoning. This occurs even if the retraction itself is well remembered” (source). See here for discussion of related issues.

Note that this risk from the "stickiness" of ideas is not identical to, or a subset of, either information hazards or MDRs. This is because this risk will most obviously or typically relate to false information (whereas information hazards relate to true information), and doesn’t depend on ways in which ideas evolve (though it can interact with ways in which ideas evolve).

3 comments


comment by TAG · 2020-03-03T20:11:55.063Z

Popular understandings of Aumann's agreement theorem are examples of hedge drift.

comment by MichaelA · 2020-03-04T07:58:29.918Z

Yeah, that seems correct.

Another example that comes to mind is popular understandings of Maslow's hierarchy of needs. It was a few years ago that I read Maslow's actual paper, but I remember being surprised to find he was pretty clear that this was somewhat speculative and would need testing, and that there are cases where people pursue higher-level needs without having met all their lower-level ones yet. I'd previously criticised the hierarchy for overlooking those points, but it turned out what overlooked those points was instead the version of the hierarchy that had moved towards simplicity, apparent usefulness, and lack of caveats.
