Unifying Bargaining Notions (2/2)

post by Diffractor · 2022-07-27T03:40:30.524Z · LW · GW · 19 commentsContents

Imaginary Prices, Tradeoffs, and Utilitarianism CoCo Equilibria CoCo Equilibrium Questions Scale-Shift Invariance Bargaining Games as Special Case N-Player Generalizations and Coalitions Harsanyi Equilibria and Generalizing the Nash Bargaining Solution But What if it Sucks, Tho (it Does) Appendix: Proof of Theorem 1 (you can skip this one) None 19 comments

Alright, time for the payoff, unifying everything discussed in the previous post [LW · GW]. This post is a lot more mathematically dense, you might want to digest it in more than one sitting.

Imaginary Prices, Tradeoffs, and Utilitarianism

Harsanyi's Utilitarianism Theorem can be summarized as "if a bunch of agents have their own personal utility functions , and you want to aggregate them into a collective utility function with the property that everyone agreeing that option x is better than option y (ie, for all i) implies , then that collective utility function must be of the form for some number and nonnegative numbers ."

Basically, if you want to aggregate utility functions, the only sane way to do so is to give everyone importance weights, and do a weighted sum of everyone's individual utility functions.

Closely related to this is a result that says that any point on the Pareto Frontier of a game can be post-hoc interpreted as the result of maximizing a collective utility function. This related result is one where it's very important for the reader to understand the actual proof, because the proof gives you a way of reverse-engineering "how much everyone matters to the social utility function" from the outcome alone.

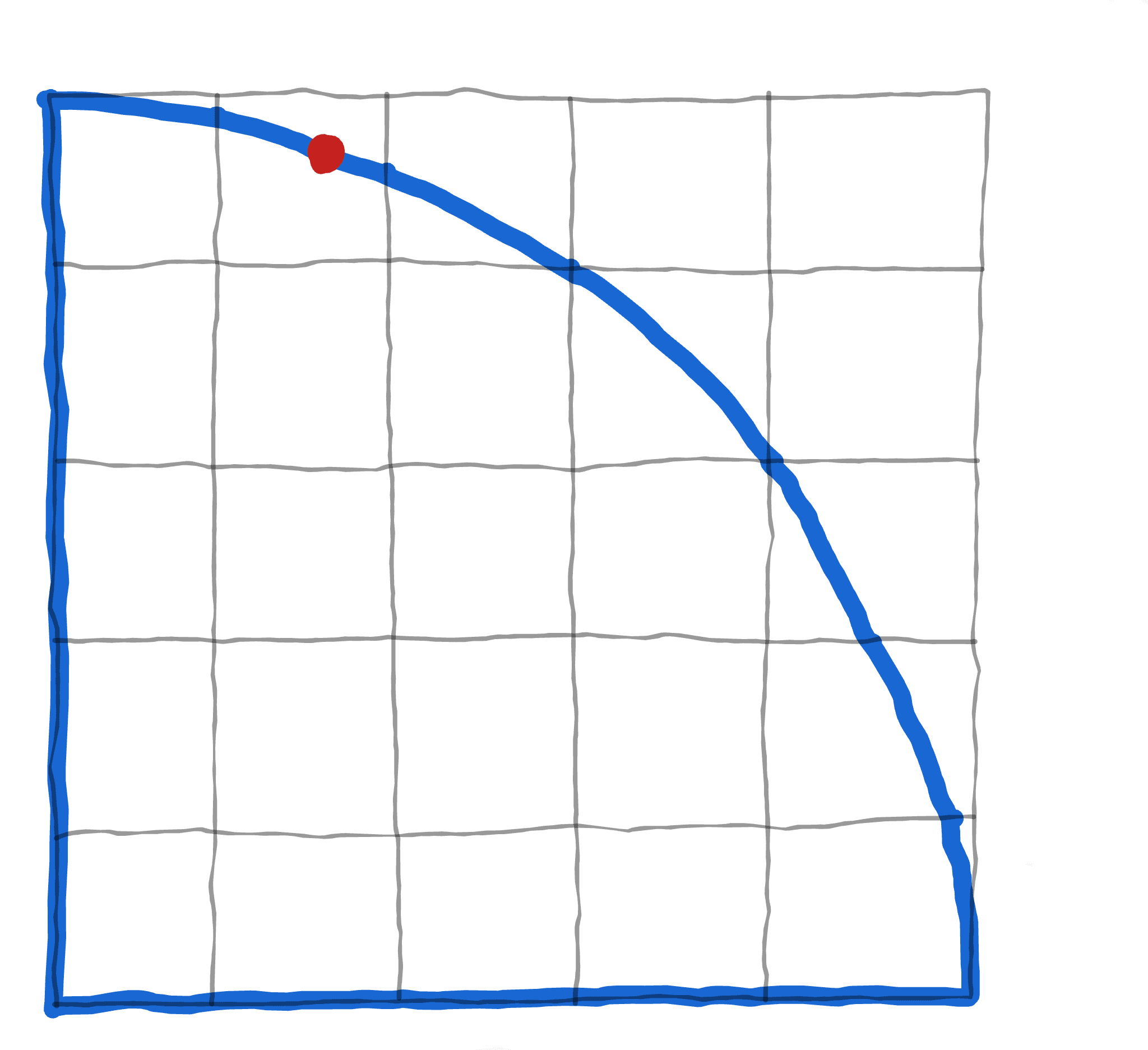

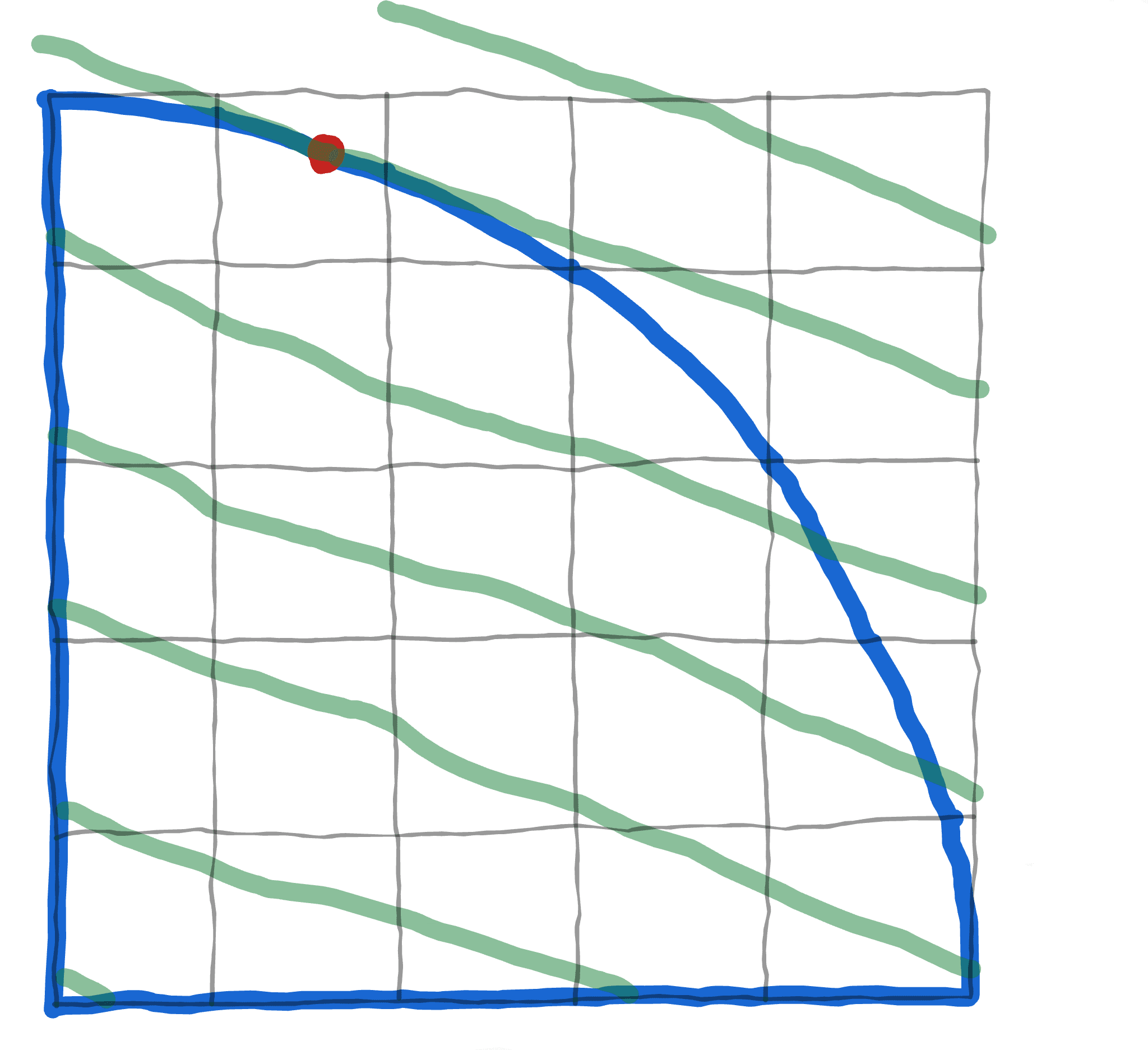

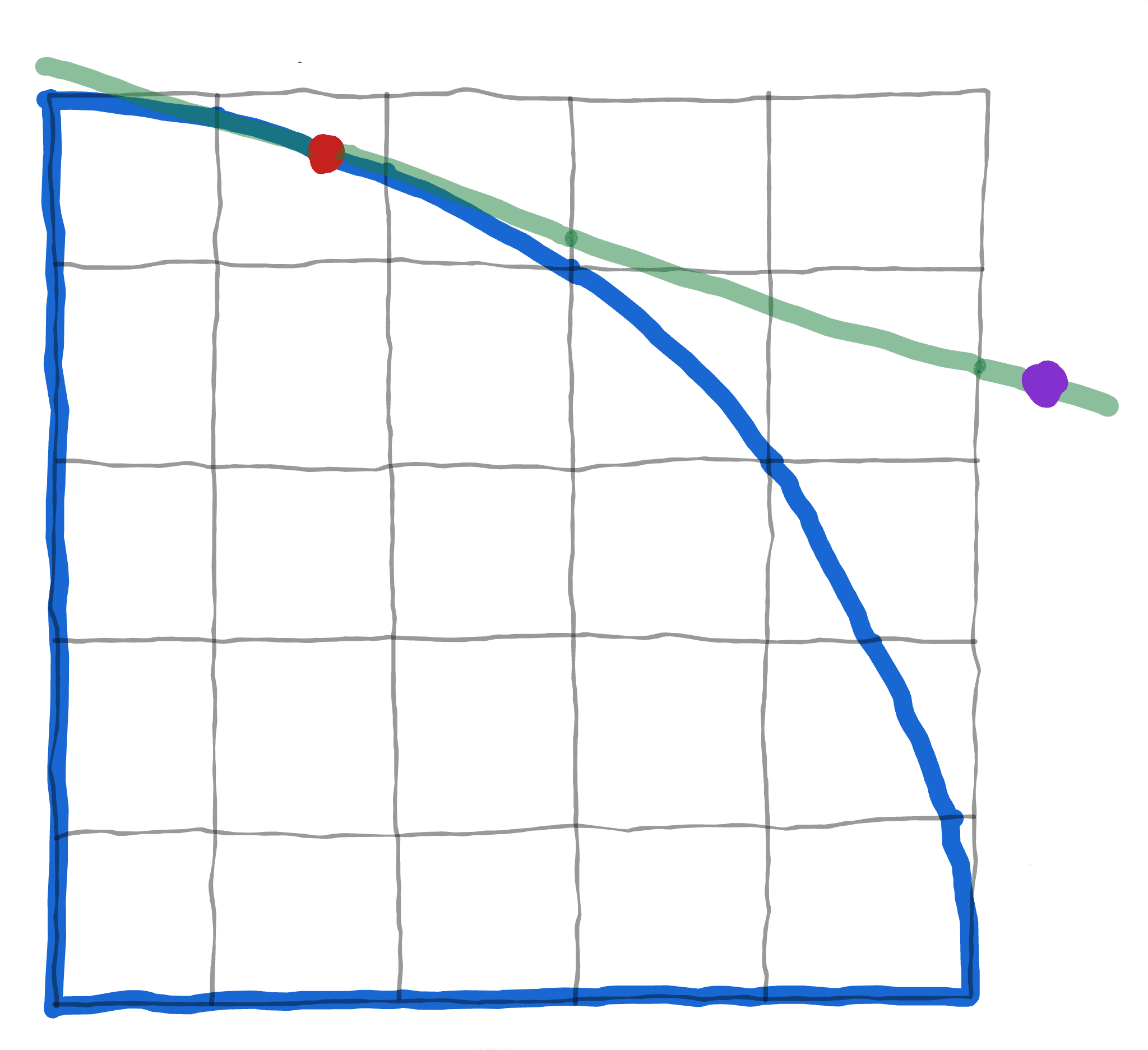

First up, draw all the outcomes, and the utilities that both players assign to them, and the convex hull will be the "feasible set" , since we have access to randomization. Pick some Pareto frontier point (although the drawn image is for only two players)

Use the Hahn-Banach separation theorem to create a linear function such that . (such that is abbreviated s.t. from here on out) Or put another way, is one of the points in the feasible set that maximizes the linear function you created. In the image, the lines are the level sets of the linear function, the set of all points where .

That linear function can be written as . Bam, those coefficients are the utility weights you need. is a point that maximizes the function , and the function is implementing "take this particular weighted sum of the utilities of the players", so we have rationalized our particular Pareto-optimal point as being produced by maximizing some weighted sum of how important everyone's utilities are.

And that's how to take any point on the Pareto frontier and reverse-engineer the weighted sum of everyone's utilities it's maximizing (though if there are corner points, there can be multiple possible weights that'd work, because the tangent plane is no longer unique).

But, there's another completely different way of viewing this process! If we take our Pareto-frontier point and zoom way way in...

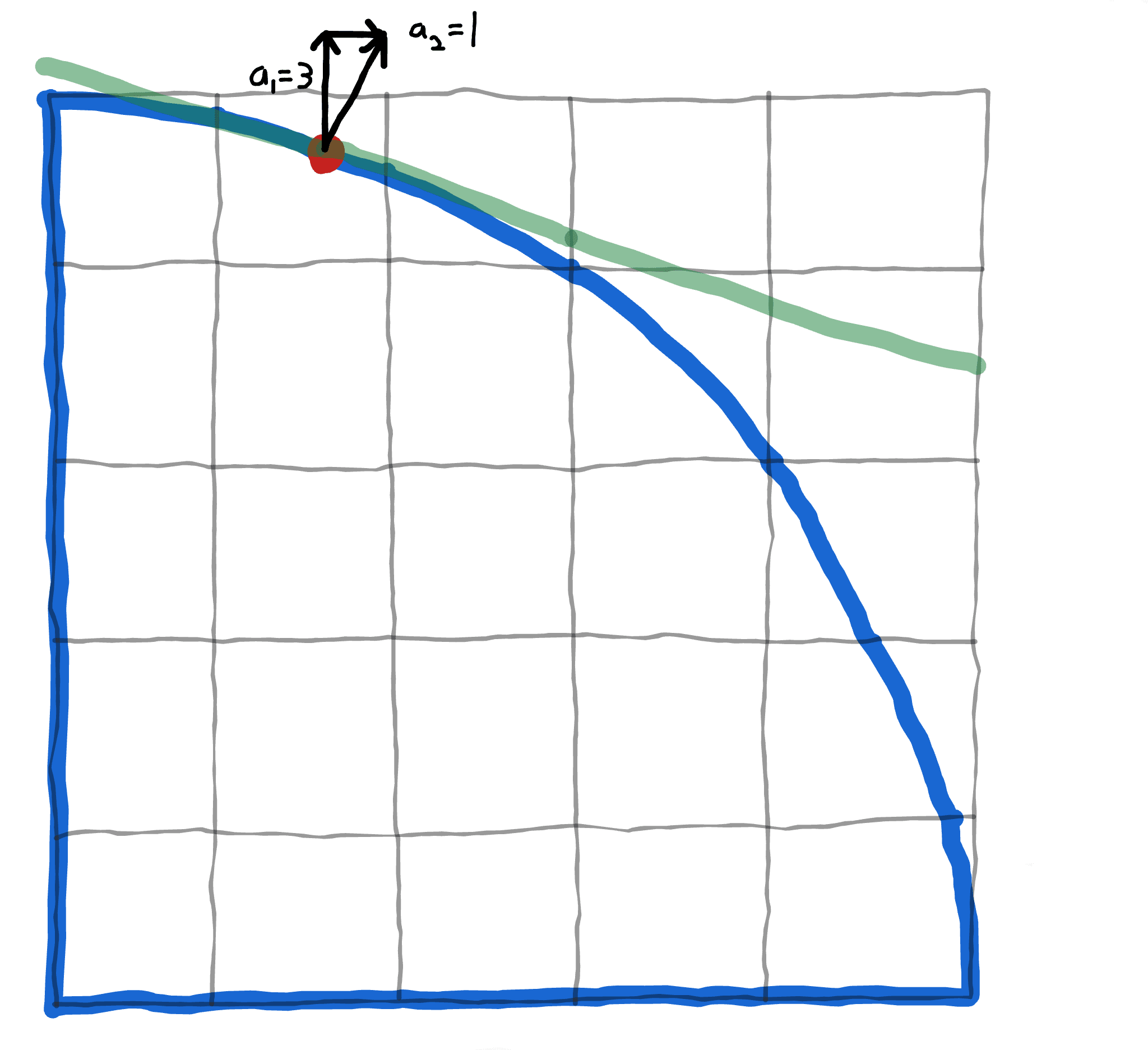

There's a locally linear tradeoff between the utilities of the various players. An increase in the utility of Alice corresponds to a decrease in the utility of Bob. One thing that we can do with this local linearity is invent an imaginary currency! It goes like this. One curnit (currency unit) can be redeemed for a tiny adjustment back and forth along this part of the Pareto frontier, and agents can trade curnits back and forth as needed to adjust exactly where they are on the Pareto frontier. And in particular, the fact that there's a 3 to 1 ratio between how the utility of Alice trades off against the utility of Bob corresponds to Alice needing to spend 3 curnits to get an AliceUtilion, while Bob only needs to spend 1 curnit to get a BobUtilon.

There's a few ways of thinking about this. The first way of thinking about it is that, in this little piece of the Pareto frontier, Alice is 3x harder to satisfy. Another way of thinking about it is that it's like Bob is poor and his marginal utility for a dollar (or a curnit) is a good deal higher than it is for Alice. And a third way of thinking about this is that if we find out this is the best collective point, we can go "huh, the only way that 3 curnits/AliceUtilon and 1 curnit/BobUtilon makes sense is if an AliceUtilon is worth 3x as much as a BobUtilon". Which, oh hey, is the exact same conclusion as we would have gotten from trying to figure out the weights for Alice vs Bob in the social utility function that says that this is the best point to be at.

So, combining these views, we can take any point on the Pareto frontier, and get a vector which can be interpreted either as "these are the importance weights for the players", or as "these are the curnits/utilon conversion factors for the various players".

CoCo Equilibria

So, the CoCo value [LW · GW]works really great for games with transferrable utility, some sort of currency that can be passed around amongst the players. But there are a lot of games without transferrable utility!

But remember our earlier discussion on how, in the local neighborhood of a Pareto-frontier point, we can invent an imaginary currency that reflects how hard it is to improve the utilities of the various players. This works fine in the vicinity of the point, but breaks down as you stray far away.

So, let's take our given example with Alice and Bob. If we introduce "curnits" as a currency in the local vicinity of the point they're at, then we can convert from utility functions (denoted in AliceUtilons and BobUtilons), to (both players' utilities are denoted in curnits now, and are commensurable), use the CoCo values to tell us what payoffs the players "should" get, and divide the result by and respectively to convert it back into AliceUtilons and BobUtilons. When we end up doing this with our example, we'll get a result that has the following reasoning behind it.

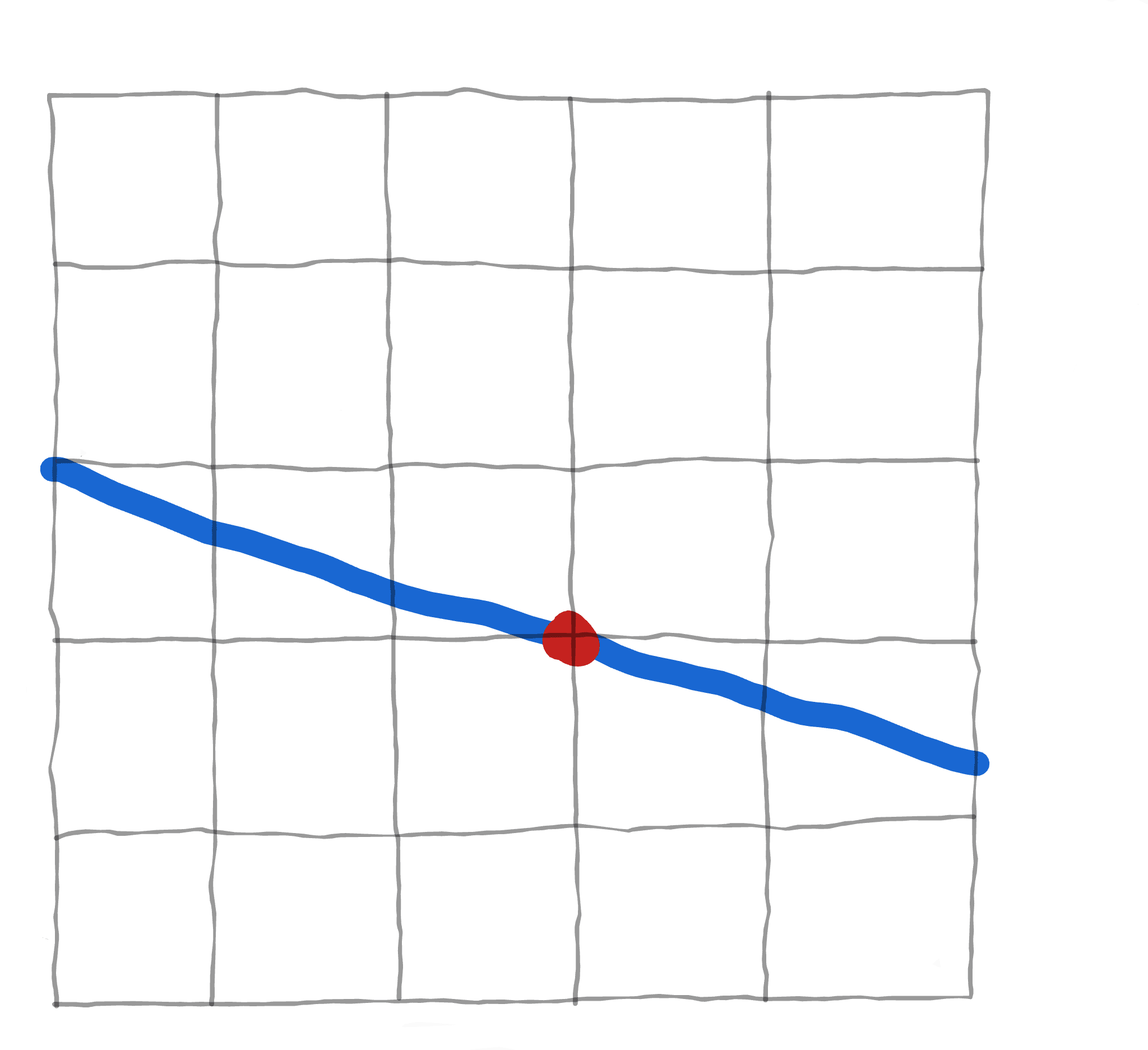

"Since curnits are much more valuable to Bob than they are to Alice, the CoCo games will advise that Alice give Bob some money to get Bob to go along with her plans, since the money is 3x less useful to Alice than it is to Bob. Converting the CoCo payoffs back to utilons, the net result would be "Alice gets a bit less utility than she did at the old Alice-favoring point, Bob gets a giant pile of utility from all those curnits", and it'd actually be an impossible pair of utility values, there's just no way for Bob to get that much utility.

Using the local currency at the red point for a CoCo transferrable-utility game means that Bob gets a small pile of currency in the CoCo game, which translates back into a big pile of BobUtilons, and we end up at the purple point, which is impossible to attain.

Generalizing past this particular example, in general, for Pareto-frontier points where some players lose out really hard (and so implicitly have low utility weights), then when you convert it to a game with transferrable utility, pick the CoCo value, and convert back, the players with really low utility weights will end up with giant piles of utility. This is because "low utility weights " correspond to "The curnits/utilon value is low, so it takes few curnits to help this player a lot", so they get a small pile of money which converts to a big pile of utility.

And so, the question to ask now is something like "is there a point on the Pareto frontier where we can get the curnits/utilon conversion numbers from that point, convert everyone's utility to curnits, work out the CoCo value of the resulting game, convert back to utilons, and end up at the exact same point we started at?"

Basically, a CoCo equilibrium would be a spot where, if the players squabbling over what direction to move on the Pareto frontier went "let's introduce a virtual currency redeemable for tiny perturbations on the Pareto frontier", and worked out the CoCo value for the game they're in (which is a very chaa solution when money is available), it'd return the answer "stay where you currently are, it's a good spot, nobody needs to pay anyone else anything". Which is a very fortunate result to get since this currency doesn't actually exist so nobody could pay each other anything anyways.

CoCo Equilibrium Questions

There are several questions we could ask about CoCo equilibria.

1: Is it scale-and-shift invariant, or does it depend on how everyone represents their utility functions?

2: If we try using it on bargaining games in particular, will it reproduce any well-known bargaining notions?

3: How do we generalize it to the n-person case? Is it straightforward, or are there hidden difficulties?

4: If we really just found a universal notion of how to split gains in games, how come nobody else came up with it first?

5: Do CoCo equilibria even exist, anyways? If so, are they unique?

Time to reveal the answers, which won't be proved yet because they'll follow as a consequence of one big theorem later on.

Scale-Shift Invariance

For the first question, yes, it is scale-and-shift invariant. It doesn't matter how you represent everyone's utility functions, you'll get the same answer. Intuitively, here's what happens. Let's say Alice multiplies all her utility numbers by 100. Now, at the point of interest, this means that we just went from 3 curnits/AliceUtilon to 3 curnits/100 AliceUtilons. And so, the coefficient we multiply Alice's utility function by, (the curnits/AliceUtilons number), went from 3 to , which will perfectly cancel out the fact that Alice multiplied all her numbers by 100. So, the CoCo value (as denominated in curnits) doesn't change one bit. Then we divide by to convert back to Alice's utility, which means that we multiply by , and get a big number for AliceUtilons, as expected (since Alice multiplied all her numbers by 100)

As for shifting, if Alice adds 10 utility to all her numbers, it doesn't alter the coefficient (3 curnits/AliceUtilon), so all of Alice's utility payoffs as denominated in curnits, are 10 higher than usual. But, CoCo value is shift-invariant. If Alice gets a guaranteed 10 extra curnits no matter what she does, her CoCo value will be 10 curnits higher than it'd be usually, and Bob's won't change at all. And so, when we divide by to convert back to Alice's utility, we get an extra 10 utility, as expected (since Alice added 10 to all her numbers)

Ok, we've got scale-and-shift invariance, which is a super-important property to have for something to maybe be "chaa" (a mathematically distinguished point in a negotiation game against a foe where you both have an interest in preventing destructive conflicts, that's neutral enough that aliens would probably come up with it).

Bargaining Games as Special Case

If we apply CoCo equilibrium concepts to bargaining games in particular (player 1 proposes an option or plays "reject", player 2 can accept or reject, anyone rejecting means that both sides get their disagreement payoffs), what do we get?

Well, though it won't be proved now (it'll be indirectly proved later on), CoCo equilibria in bargaining games will turn out to be equivalent to the Nash bargaining solution! The Nash solution can be derived as a special case of this generalization of the CoCo solution to when utility isn't transferrable!

N-Player Generalizations and Coalitions

For generalizing to the n-person case, there's the obvious generalization where, given a Pareto frontier point, we can get the imaginary prices , and the CoCo value makes sense for n-player games. But this doesn't fully take coalitions into account. It's possible that a coalition could conspire amongst themselves to guarantee a good payout. And we could add extra conditions regarding coalitions, like that within a coalition, they use some sort of CoCo-like or Shapley-like split of resources.

To formalize the stronger version of that equilibrium, let be the set of players, and be the utility function of player , and be player 's set of actions.

Formal Definition: Coalition-Perfect CoCo Equilibrium

A coalition-perfect CoCo equilibrium is a tuple (the joint strategies that all possible coalitions would play if they were against the opposing coalition, ), s.t, defining

1: is on the Pareto frontier.

2: There is an tuple (virtual prices) that makes both of the following conditions true.

3:

(ie, all the joint strategies are trying to maximize the money earned if up against the opposing coalition in a zero-sum game, and as a special case, when S=N, it says that what the entire group actually ends up doing maximizes surplus value, which is another way of stating that the are the appropriate virtual currencies to use at the point)

4:

Or, rephrasing this in terms of the more standard framing of Shapley Value...

So, that's a coalition-perfect CoCo equilibrium. You could interpret it as there being a "virtual currency" phrased in terms of how hard it is, relatively, to improve everyone's utility, and everyone getting their CoCo value, and even in the event of zero-sum conflicts between teams, everyone on a team will get their CoCo value. Or you could interpret it as everyone getting their Shapley payoffs, where the analogue of the marginal gain from a particular player is "their value when they join team , plus the improvements in everyone else's value from them no longer opposing team ". Or you could interpret the game, and all the zero-sum subgames, as just maximizing a weighted sum of utilities (like Harsanyi's utilitarianism theorem), and the cool part is the "weights" for how important everyone is will be the same for the full game as well as all the zero-sum subgames.

Harsanyi Equilibria and Generalizing the Nash Bargaining Solution

If this exists and it's nice, why has nobody found it before?

Actually, someone did find this before! That's a major occupational hazard of finding math that aliens would independently reinvent: there's a high chance that someone beat you to the punch. Specifically, John Harsanyi, back in 1963, found this first. He wound up with this same exact solution, though it's quite nontrivial to show the equivalence between our equilibrium notions.

CoCo equilibria were motivated via the nice properties of the CoCo value and generalizing it to the non-transferrable utility case, which turned out to be secretly equivalent to generalizing Shapley values. Harsanyi, as detailed in his lovely paper "A Simplified Bargaining Model for the n-Person Cooperative Game" (which I very highly recommend you read via your favorite paper-reading website!), found it from trying to generalize the Nash Bargaining Solution to games without well-defined disagreement points.

Harsanyi's basic insight was that, in a general two-player game, if it's known in advance that the Nash bargaining solution will be used, and the players are picking their "disagreement strategies" (what they'll fall back on if they can't cooperate on some joint distribution over actions) then Alice would try to pick a "disagreement strategy" that makes it so that, no matter what Bob's disagreement strategy is, the Nash bargaining solution would favor Alice as much as possible, and Bob is in a similar position. So, the two players will end up in a game where they're trying to disagree in a way that'll rig the Nash bargaining solution in their favor. I'm not sure whether or not this is zero-sum, but it is true that if one player wins, the other must lose, so it's zero-sum enough that there's a unique pair of disagreement utilities that you get from maximin strategies, that are mutually optimal against each other, and then you can just use the Nash bargaining solution from there.

In particular, if you're trying to make a threat that's suitable for rigging a bargaining game in your favor, what you need are not threats that hurt both of you equally, or threats that are worse for you than for the foe, or threats that the foe could possibly defuse. What you need is something that matters far more to the foe than you, which the foe can't evade by any action they can take. Or, rephrasing in terms of the CoCo value, to successfully rig the Nash bargaining solution in your favor, you'll need a good move in the zero-sum competition game of the cooperation/competition decomposition.

Generalized to the n-player case, between any two coalitions, they'll be doing the same sort of squabbling over what counts as the disagreement point, everyone within the coalition will agree on what disagreement point to go for in event of conflicts and within any coalition, they'll also be splitting things according to the Nash bargaining bolution as well. I don't fully understand the reasoning behind how that informal sort of description cashes out in the math (in particular, I still don't understand why the disagreement points are defined as they are in the paper), but I'll attempt a summary of Harsanyi's paper anyways. You're highly encouraged to read the paper yourself; it's got lots of goodies in it that I don't mention.

Harsanyi starts off by assuming that every coalition can guarantee some marginal gain to everyone making it up, which is denoted by , the marginal utility payoff to player received from the coalition .

Further, the payoff that a player gets in the event of a zero-sum conflict between and everyone else should just be the sum of the marginal payoffs from all the subsets of that is in, ie. (where is as previously defined, the utility that i gets in the event of a zero-sum conflict between and everyone else). The argument for this is that if the sum of marginal payoffs was more than (the payoff that i gets in the event of a zero-sum conflict), the coalitions collectively would be promising more utility to player i than can actually be guaranteed, and they're making unfulfillable promises. But player i should really be picking up all the utility promised to it from all the coalitions, and not leaving excess on the table, and so we get equality.

As it turns out, if that equation holds, then you can work out how all the must be defined: it must hold that . This is around where I have problems. I just can't quite manage to get myself to see how this quantity is the "slice of marginal utility that coalition promises to player i", so let me know in the comments if anyone manages to pull it off.

Then, we go "ah, if is the marginal gain from being part of coalition " (which, again, I can't quite see), then , where is the payoff to i from playing its part in S's minimax strategy, and is i's threat utility/disagreement point utility from being in coalition S. And so, the disagreement point utility of player i within coalition S must be .

Again, I can't see at all how this is true from the equation alone, but other people might be able to understand it. I can see how, in the special case of the coalition "Alice and Bob", it reproduces the intuitive result of Alice's disagreement-point-utility being "screw you Bob, I'll go off on my own and fight the rest of the world" (ie, ), but I can't see how it extends to larger coalitions than that.

Anyways, a few interesting results are derived and discussed from there. One is that if two players i and j (Ione and Jake) are arguing over their split of the gains in every coalition S that contains both of them, there's a well-defined fallback point for Ione which is (the sum of payoffs from every coalition that contains Ione but lacks Jake), and symmetrically for Jake, and if they do Nash bargaining from there, then it's possible to apply a result on Nash bargaining in subgames (intuitively, both players want to pick a point that doesn't worsen their bargaining position for the full game) to derive that Ione and Jake will agree to the same ratio for how to split coalition gains between them, in every coalition game. So, if Ione and Jake are splitting value 60/40 in one coalition, they're doing that same split in every coalition. This can be used to derive the general result that, in every coalition containing two or more players, they'll all play the Nash bargaining solution against each other.

And there's another interesting and highly nontrivial result from Harsanyi's paper, which effectively says that if the two coalitions of S and N/S (everyone who isn't in S) appoint an individual member to decide on the threat strategy that their coalition will follow, and the appointed representatives Ione and Jake only care about maximizing their own payoff in the overall game (ie, maximizing and ), (ie. they know that the zero-sum fight between S and N/S probably isn't happening, it's just a bargaining chip to get good overall payoffs, and they just care about their own overall payoff, not the interests of the rest of their coalition), then the threat strategies they'll pick will be minimax threat strategies for the S vs N/S zero-sum game. Correspondingly, it doesn't matter what players the coalitions S and N/S appoint as representatives, they'll end up picking a minimax threat strategy to maximize their payoff in the overall game.

What Harsanyi eventually ended up deciding on as the equilibrium conditions were as follows (slightly re-expressed for notational compliance). Let be the utility function of player i, and be their space of actions.

Formal Definition: Coalition-Perfect Harsanyi Equilibrium

A coalition-perfect Harsanyi equilibrium is a tuple (the joint strategies that each coalition would play if they were against the opposing coalition, ),s.t, defining (payoff to player if coalitions and fight), and (the fallback utility value for player in coalition ).

1: is on the Pareto frontier.

2: There is an tuple (virtual prices, though Harsanyi didn't use the term) that makes both of the following conditions true.

3:

(ie. all the joint strategies are trying to maximize the money earned if up against the opposing coalition in a zero-sum game and as a special case, when S=N, it says that what the entire group actually ends up doing maximizes surplus value, which is another way of stating that the are the appropriate virtual currencies to use at the point)

4:

(it's very nonobvious, but if you use Lagrange multipliers on the Nash bargaining problem, this is effectively saying that for all coalitions , the division of resources within , using the various as the disagreement payoffs, follows the Nash bargaining solution)

And now we get to the centerpiece theorem, that coalition-perfect CoCo equilibria are the same as coalition-perfect Harsanyi equilibria. Since Harsanyi already showed things like scale-and-shift invariance, and that these equilibria exist, and lots of other results about them, we just need to prove equivalence and then we can lift all of Harsanyi's work - no point in rederiving everything on our own. Since three of the four conditions for the equilibria are obviously identical, the whole proof focuses on showing that the "Every coalition uses the Nash bargaining solution internally to divide gains, with suitably defined threat points" condition of Harsanyi equilibria is equivalent to the "Every coalition uses the CoCo payoffs/modified Shapley payoffs internally to divide gains" condition of CoCo equilibria.

Theorem 1: A tuple of strategies for every coalition is a coalition-perfect Harsanyi equilibrium iff it's a coalition-perfect CoCo equilibrium.

Equilibria Existence

And now for the final question. Do these sorts of equilibria even exist at all? That's an awful lot of conditions to fulfill at once, you've got one equilibria condition for each coalition, and there's a whole lot of coalitions.

Well, fortunately, Harsanyi proved that these sorts of equilibria exist in his paper! So we can just copy off his work. Well, technically, he did it under some moderately restrictive assumptions which don't look essential, and can probably be removed, though it'll be annoying to do so. Pretty much, his proof works by setting up a game which is related to the bargaining game, and any Nash equilibrium of the auxiliary game can be converted into a coalition-perfect Harsanyi equilibrium.

The assumptions Harsanyi made were, in particular, that the space of all possible utility values in were compact (ie, nobody can get unbounded positive utility or unbounded negative utility, and this assumption is violated in full transferrable utility games), and its affine hull was of dimension , and that the Pareto frontier had no vertices in the sense that, for all points on it, there's a unique tangent hyperplane that touches that point (ie, you can uniquely read off the weights from all Pareto frontier points). Think of a sphere in 3d space: every point on the sphere surface has a unique tangent plane. But for a cube in 3d space, the edges or vertices of the cube can have multiple distinct tangent planes which touch the cube at that point.

With those assumptions, yes, there is a coalition-perfect Harsanyi equilibrium, as proven in his paper.

Harsanyi made the remark that if the Pareto frontier has vertices, it's possible to write any such game as a limit of games that don't have vertices (like, imagine a cube but all the corners and edges have been sanded down a bit, and take the limit of doing less and less sanding), in order to extend the results to games with vertices on their Pareto frontier.

Though he didn't comment on it, it seems like it's possible to also deal with the affine hull dimension issue in this way, in the sense that for any set of possible utility values whose affine hull is of dimension , it's possible to write it as a limit of games whose set of utility values has an affine hull of dimension (the analogue is that any 2-dimensional shape can be thought of as a limit of 3-dimensional shapes that keep getting thinner), and presumably extend his existence result to cases like that.

He didn't actually do these limiting-case proofs at any point, they just seem like the sort of argument that'd need to be done to generalize his proof.

There's another question which is, "are Harsanyi/CoCo equilibria unique"?

Harsanyi made the remark that they were unique for bargaining games (where they'd give the Nash bargaining solution), games with transferrable utility (where they'd give the CoCo value), and 2-player games, but weren't necessarily unique for general n-player games, and then completely refused to elaborate on this.

The problem is, although Harsanyi said there were counterexamples to uniqueness (he meant a counterexample in the stronger sense of "there's more than one tuple of utility values that's an equilibrium", not the obvious weak sense of "maybe there's different strategies for everyone that gives the same payoff"), at no point did he every actually give such a counterexample, even in the paper he cited to that effect. This is somewhat infuriating, and I fear that the non-uniqueness of these equilibria is one of those apocryphal results that nobody ever actually got around to double-checking at any point. I'd be extremely pleased if anyone could find a paper with an actual example of such.

So, yeah, that's about it. There's one good notion of equilibria, that gives Shapley values, CoCo values, and the Nash bargaining solution as special cases, which can variously be thought of as:

1: Maximizing a suitable weighted sum of everyone's utilities, where all the various coalitions agree on the weights of everyone's utilities (so if Alice is twice as important as Bob, then Alice will be twice as important as Bob in all coalitions containing the two of them).

2: Gives everyone their modified Shapley payoffs, and all the coalitions split their gains in a Shapley way.

3: Inventing a virtual currency reflecting how hard it is to improve the utilities of everyone relative to each other and splitting the game into a bunch of coalition vs coalition fights with perfect cooperation and competition, and paying everyone accordingly.

4: Every coalition jostles for a threat strategy that gives them the most payoff from the Nash bargaining solution, and then every coalition does Nash bargaining within itself to split gains.

But What if it Sucks, Tho (it Does)

So, there's one super-important aspect of this that makes it dramatically less appealing that I haven't seen anyone point out. The payoffs for everyone are determined by games of the form "coalition fights coalition not-, coalition is maximizing the quantity "utility of coalition - utility of opposite coalition", and vice-versa for the opposite coalition".

If you depart from nice comfy visualizations of games involving hot-dog selling, and ponder what that'd mean for humanity, you'll probably realize how exceptionally ugly those imaginary games would get.

Actually take one minute, by the clock, to think about what it means that the following equation determine people's payoffs:

This is why I was stressing that "chaa" and "fair" are very different concepts, and that this equilibrium notion is very much based on threats. They just need to be asymmetric threats that the opponent can't defuse in order to work (or ways of asymmetrically benefiting yourself that your opponent can't ruin, that'll work just as well).

I think it's a terrible idea to automatically adopt an equilibrium notion which incentivises the players to come up with increasingly nasty threats as fallback if they don't get their way. And so there seems to be a good chunk of remaining work to be done, involving poking more carefully at the CoCo value and seeing which assumptions going into it can be broken.

Also, next Thursday (June 28) at noon Pacific time is the Schelling time to meet in the Walled Garden and discuss the practical applications of this. Come one, come all, and bring your insights!

Appendix: Proof of Theorem 1 (you can skip this one)

Since conditions 1, 2, and 3 are all obviously equivalent to each other, that just leaves that showing that the condition 4's of both types of equilibria imply each other. First, we'll show a lemma.

Lemma 1:

Start off with

Unpack the definition of .

We can interchange this sum, and view it as picking the set T first, and the set R second.

And group

And we can ask what coefficient is paired with the various . Really, there's two possibilities. One possibility is that , the other is that , so let's split this up.

Clearly, for the former term, is the only possibility, and , so we get

We can reexpress picking a as picking an (the fragments not in T) and unioning it with T.

Try writing this as a sum over subset sizes, and you'll get a factorial term showing up from the many possible subsets of a given size.

And then, by plugging this into Wolfram Alpha, we get 0 and all that stuff cancels.

, and so the lemma has been proved.

Onto the full proof, starting off by assuming condition 4 of a coalition-perfect Harsanyi equilibria, and deriving condition 4 of a coalition-perfect CoCo equilibria. Start off with . Then, we use our lemma that .

Distribute the constant in

Use that , by definition of .

Now, use that, by condition 4 of coalition-perfect Harsanyi equilibria, every player has , so we can rewrite this as

Unpack what is

Distribute the negative in

Merge it into one big sum

Reshuffle the sum so we're summing over subsets first, and elements of that subset later. The available subsets T are all the subsets of R, and they can only be included in the sum for the j that lie in T.

We can reshuffle the negative 1 part outside of the innermost sum.

Abbreviate as , and reshuffle the sums a little bit.

Now, for a given T, we'll work out the coefficient in front of for the entire sum. The first possibility is that . Then the possible R that contribute to the coefficient of in the entire sum are exactly the . The second possibility is that , so then the possible R that contribute to the coefficient of in the entire sum are exactly the . So, breaking things up that way, we get

And we can rephrase supersets of T as subsets of , unioned with T. And rephrase supersets of T that contain i as subsets of , unioned with .

Reexpress

Cancel

Split into a sum over the various sizes of what could possibly be

Reexpress

Group into one big fraction.

Plug it into Wolfram Alpha, and use how the gamma function is defined to get it back into factorial form.

Reindex T to R for notational compliance later on, and we can rewrite this as a single sum over all R that contain i, because they're all paired off with a unique complement that lacks i.

Figure out what the cardinalities of the various sets are

Cancel out, realize that the fractions are the same, and get

And unpacking how was defined, we get, as intended, that this entire chain of equalities has proven

The exact condition 4 for a coalition-perfect CoCo equilibria, proving that all coalition-perfect Harsanyi equilibria are coalition-perfect CoCo equilibria.

Now it's time for the reverse derivation, showing that all coalition-perfect CoCo equilibria are coalition-perfect Harsanyi equilibria. The goal is to show that for all , and , that

So, let's start out with

Substitute in what is

Cancel out the negatives

Fold it into one big sum

Multiply the in

Now, we use condition 4 of a coalition-perfect CoCo equilibrium.

Abbreviate the sum as , to get

Now, we'll have to work out what the coefficent is for a given T, for the entire sum. Like, what number ends up being in front of when we sum everything up? There are two possibilities. The first possibility is that . Then the relevant R that we're summing over are the . If , then the relevant R that we're summing over are the , and we've got a negative 1 showing up from these Z terms being sutracted instead of added, which we can fold into the negative 1 power at the start. Using this grouping, we get

Reexpress it slightly

And cancel

And we can reexpress this as picking a subset of , and unioning it with T to make R, or as picking a subset of and unioning it with to make R.

Reexpress

Cancel

Reexpress as summing up over all possible sizes for , introducing a factorial term because of the many subsets of a given size.

Reexpress

Merge into one big fraction and cancel

And plug into Wolfram Alpha to get that both of these alternating sums over factorials are actually the same coefficient, so we can just write it as

Summing all this up, we've derived

And then we can do this whole line of reasoning again but swapping out i for j, and nothing at all changes, we still get the same quantity at the end, so we have

For all , the fourth condition for a coalition-perfect Harsanyi equilibrium, so all coalition-perfect CoCo equilibria are coalition-perfect Harsanyi equilibria.

Since we've proved both directions, something is a coalition-perfect Harsanyi equilibria iff it's a coalition-perfect CoCo equilibria.

19 comments

Comments sorted by top scores.

comment by Vanessa Kosoy (vanessa-kosoy) · 2022-08-17T05:47:43.633Z · LW(p) · GW(p)

I think it's a terrible idea to automatically adopt an equilibrium notion which incentivises the players to come up with increasingly nasty threats as fallback if they don't get their way. And so there seems to be a good chunk of remaining work to be done, involving poking more carefully at the CoCo value and seeing which assumptions going into it can be broken.

I'm not convinced there is any real problem here. The intuitive negative reaction we have to this "ugliness" is because of (i) empathy and (ii) morality. Empathy is just a part of the utility function which, when accounted for, already ameliorates some of the ugliness. Morality is a reflection of the fact we are already in some kind of bargaining equilibrium. Therefore, thinking about all the threats invokes a feeling of all existing agreements getting dissolved sending us back to the "square one" of the bargaining. And the latter is something that, reasonably, nobody wants to do. But none of this implies this is not the correct ideal notion of bargaining equilibrium.

comment by Martín Soto (martinsq) · 2022-07-29T19:38:10.849Z · LW(p) · GW(p)

You might have already talked about this in the meeting (I couldn't attend), but here goes

. This is around where I have problems. I just can't quite manage to get myself to see how this quantity is the "slice of marginal utility that coalition promises to player i", so let me know in the comments if anyone manages to pull it off.

Let's reason this out for a coalition of 3 members, the simplest case that is not readily understandable (as in your "Alice and Bob" example). We have . We can interpret as the strategic gain obtained (for 1) thanks to this 3 member coalition, that is a direct product of this exact coalition's capability for coordination and leverage, that is, that doesn't stem from the player's own potentials () neither was already present from subcoalitions (like ). The only way to calculate this exact strategic gain in terms of the is to subtract from all these other gains that were already present. In our case, when we rewrite , we're only saying that is the supplementary gain missing from the sum if we only took into account the gain from the 1-2 coalition plus the further marginal gain added by being in a coalition with 3 as well, and didn't consider the further strategic benefits that the 3 member coalition could offer. Or expressed otherwise, if we took into account the base potential and added the two marginal gains and .

Of course, this is really just saying that , which is justified by your (and Harsanyi's) previous reasoning, so this might seem like a trivial rearrangement which hasn't provided new explanatory power. One might hope, as you seem to imply, that we can get a different kind of justification for the formula, by for instance appealing to bargaining equilibria inside the coalition. But I feel like this is nowhere to be found. After all, you have just introduced/justified/defined , and this is completely equivalent to . It's an uneventful numerical-set-theoretic rearrangement. Not only that, but this last equality is only true in virtue of the "nice coherence properties" justification/definition you have provided for the previous one, and would not necessarily be true in general. So it is evident that any justification for it will be a completely equivalent reformulation of your previous argument. We will be treading water and ultimately need to resource back to your previous justification. We wouldn't expect a qualitatively different justification for than for , so we shouldn't either expect one in this situation (although here the trivial rearrangement is slightly less obvious than subtracting , because to prove the equivalence we need to know those equalities hold for every and ).

Of course, the same can be said of the expression for , which is an equivalent rearrangement of that for the : any of its justifications will ultimately stem from the same initial ideas, and applying definitions. It will be the disagreement point for a certain subgame because we have defined it/justified its expression just like that (and then trivially rearranged).

Please do let me know if I have misinterpreted your intentions in some way. After all, you probably weren't expecting the controversial LessWrong tradition of dissolving the question :-)

comment by mtaran · 2022-07-27T15:52:11.682Z · LW(p) · GW(p)

Please consider aggregating these into a sequence, so it's easier to find the 1/2 post from this one and vice versa.

Replies from: Diffractor↑ comment by Diffractor · 2022-07-27T20:13:24.898Z · LW(p) · GW(p)

Task completed.

comment by MSRayne · 2023-09-11T16:11:04.820Z · LW(p) · GW(p)

I really enjoy this sequence but there's a sticking point that's making me unable to continue until I figure it out. It seems to me rather obvious that... utility functions are not shift-invariant! If I denominate option A at 1 utilon and option B at 2 utilons, that means I am indifferent between a certain outcome of A and a 50% probability of B - and this is no longer the case if I shift my utility function even slightly. Ratios of utilities mean something concrete and are destroyed by translation. Since your entire argument seems to rest on that inexplicably not being the case, I can't see how any of this is useful.

Replies from: MinusGix↑ comment by MinusGix · 2023-09-12T16:58:29.719Z · LW(p) · GW(p)

Utility functions are shift/scale invariant.

If you have and , then if we shift it by some constant to get a new utility function: and then we can still get the same result.

If we look at the expected utility, then we get:

Certainty of :

50% chance of , 50% chance of nothing:

- (so you are indifferent between certainty of and a 50% chance of by )

I think this might be where you got confused? Now the expected values are different for any nonzero !

The issue is that it is ignoring the implicit zero. The real second equation is:

- + 0 = 1$

Which results in the same preference ordering.

comment by the gears to ascension (lahwran) · 2023-05-12T17:57:53.343Z · LW(p) · GW(p)

In both this and the previous post, this notation goes undefined:

What are delta and product doing in this definition?

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-05-12T18:04:49.726Z · LW(p) · GW(p)

In the given expression , the delta symbol () represents the simplex, and the product symbol () denotes the Cartesian product.

The simplex, denoted by , is a concept from geometry, but in this context, it's used to indicate the set of probability distributions over a finite set. In the context of game theory, the simplex is used to represent mixed strategies, which are probability distributions over the set of pure strategies.

The Cartesian product, denoted by , is a mathematical operation that returns a set from multiple sets, where the elements of the resulting set are all possible ordered pairs (or tuples) of elements from the input sets. In this case, the Cartesian product is taken over the set of actions for each player .

Combining these two concepts, the expression means that is a mixed strategy profile for the set of players , where each player chooses a probability distribution over their set of actions . The mixed strategy profile is an element of the simplex over the Cartesian product of the action sets of all the players in .

The use of mixed strategies in game theory allows for the analysis of situations where players randomize their actions according to a probability distribution rather than deterministically choosing a single action. This is useful when considering equilibria in games where players have incomplete information or when considering games with multiple Nash equilibria Source 1.

In the context of the bargaining equilibrium, the mixed strategy profile represents the probability distributions over the actions of each player in the game. This allows for the analysis of scenarios where players don't choose a single action with certainty but instead randomize their choices according to a probability distribution.

this doesn't seem quite right to me. probability? seems like an LLM confabulation, perhaps? but it argues convincingly.

comment by IrenicTruth · 2022-09-10T18:15:02.922Z · LW(p) · GW(p)

I haven't finished reading this; I read the first few paragraphs and scanned the rest of the article to see if it would be worth reading. But I want to point out that starting with Harsanyi's Utilitarianism Theorem (a.k.a. Harsanyi's Impartial Observer Theorem) implies that you assume "independence of irrelevant alternatives" because the theorem assumes that its agents obey [1] the Von Neumann–Morgenstern utility theorem. The fourth axiom of this theorem (as listed in Wikipedia) is the "independence of irrelevant alternatives.". Since from the previous article [? · GW],

The Nash Bargaining Solution is the only one that fulfills the usual three desiderata, and the axiom of Independence of Irrelevant Alternatives.

I am not surprised that this results in the Nash Bargaining solution as the solution to Bargaining Games [LW · GW]. The last article also points out that the independence of irrelevant alternatives is not an obvious axiom, so I do not find that the Nash Bargaining solution to be more plausible because it is a generalization of the CoCo Equilibria.[2]

From the abstract here: [https://www.researchgate.net/publication/228049690_Harsanyi' s_'Utilitarian_Theorem'_and_Utilitarianism] and the introduction to Generalized UtilitarianismandHarsanyi's Impartial Observer Theorem ↩︎

This is a bit inconsistent on my part because I usually make formal decisions according to Rule Utilitarianism, and most forms of utilitarianism assume Von Neumann–Morgenstern expected utility. However, in my defense, I'm not firmly attached to Rule Utilitarianism; it is just the current best I've found. ↩︎

comment by Nathaniel (nathaniel-sauerberg) · 2022-08-30T11:16:33.435Z · LW(p) · GW(p)

Thanks for the great post!

In the definition of Coalition-Perfect CoCo Equilibrium, it seems to me like part 1) is already implied by part 3).

1: is on the Pareto frontier.

1) means that the utility profile achieved by the joint strategy for the grand coalition is on the Pareto frontier.

3:

(ie. all the joint strategies are trying to maximize the money earned if up against the opposing coalition in a zero-sum game and as a special case, when S=N, it says that what the entire group actually ends up doing maximizes surplus value, which is another way of stating that the are the appropriate virtual currencies to use at the point)

If in the joint strategy for the special case of S=N the group maximizes surplus value according to some weight function, then utility profile resulting from this joint strategy should be on the Pareto frontier, so 1) should be automatically satisfied.

If it wasn't, then you could improve a player's utility without hurting anyone else. But that would improve the surplus value as well[1], which would mean that the S=N joint strategy didn't maximize surplus value (contradicting 3) ).

I think this is because your utilitarian characterization is an if and only if.

Closely related to this is a result that says that any point on the Pareto Frontier of a game can be post-hoc interpreted as the result of maximizing a collective utility function.

Could be: An outcome is on the Pareto frontier if and only if it can be post-hoc interpreted as the result of maximizing a collective utility function.

- ^

I guess I'm assuming the weights are strictly positive, whereas you only assumed them to be non-negative. Does this matter/Is this the reason why we need 1)?

comment by Lukas Finnveden (Lanrian) · 2022-07-28T19:56:22.506Z · LW(p) · GW(p)

Also, next Thursday (June 28) at noon Pacific time is the Schelling time to meet in the Walled Garden and discuss the practical applications of this.

Is the date wrong here?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-07-28T20:06:15.396Z · LW(p) · GW(p)

No, we just met & had a great time. Were you trying to join? Where were you?

Replies from: Lanrian↑ comment by Lukas Finnveden (Lanrian) · 2022-07-28T20:50:41.991Z · LW(p) · GW(p)

Ah, I see, it was today. Nope, wasn't trying to join. I first interpreted "next" thursday as thursday next week, and then "June 28" was >1 month off, which confused me. In retrospect, I could have deduced that it was meant to say July 28.

comment by Sonata Green · 2022-11-21T07:43:19.056Z · LW(p) · GW(p)

And so, the question to ask now is something like "is there a point on the Pareto frontier where we can get the curnits/utilon conversion numbers from that point, convert everyone's utility to curnits, work out the CoCo value of the resulting game, convert back to utilons, and end up at the exact same point we started at?"

I'm mostly not following the math, but at first glance this feels more like it's defining a stable point rather than an optimal point.

Replies from: Diffractor↑ comment by Diffractor · 2023-03-26T06:12:17.980Z · LW(p) · GW(p)

I'd strongly agree with that, mainly because, while points with this property exist, they are not necessarily unique. The non-uniqueness is a big issue.

comment by Chris van Merwijk (chrisvm) · 2022-11-07T20:48:12.235Z · LW(p) · GW(p)

Is the following a typo?

"So, the ( [LW · GW]works"

first sentence of "CoCo Equilbiria".

Replies from: Diffractor↑ comment by Diffractor · 2023-03-26T06:09:14.466Z · LW(p) · GW(p)

It was a typo! And it has been fixed.