Agent membranes/boundaries and formalizing “safety”

post by Chipmonk · 2024-01-03T17:55:21.018Z · 46 comments

It might be possible to formalize what it means for an agent/moral patient to be safe via membranes/boundaries. This post tells one (just one) story for how the membranes idea could be useful for thinking about existential risk and AI safety.

Formalizing “safety” using agent membranes

A few examples:

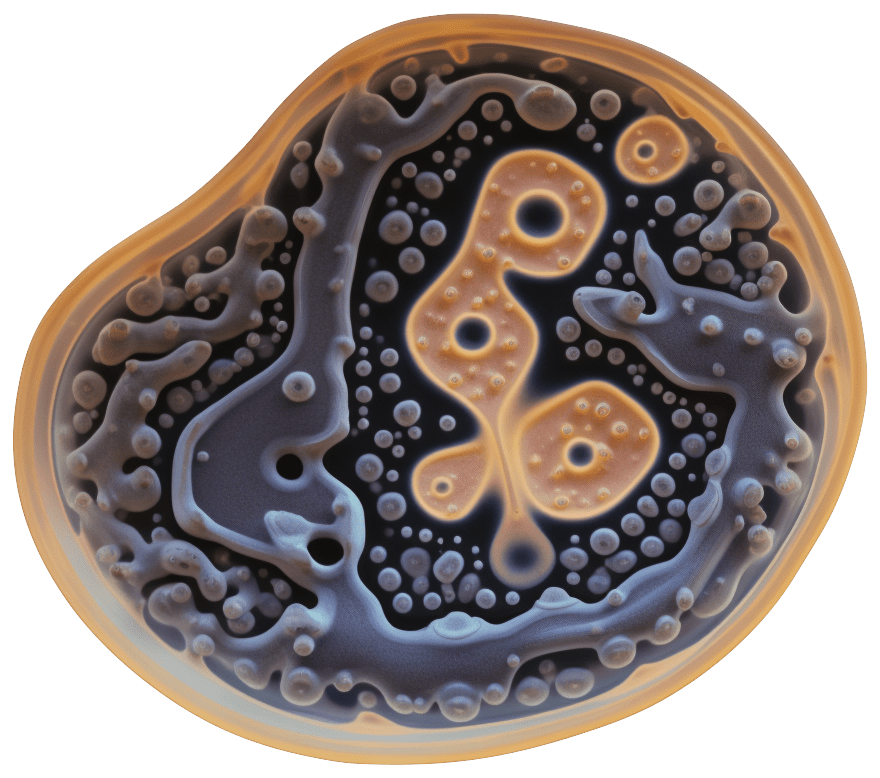

- A bacterium uses its membrane to protect its internal processes from external influences.

- A nation maintains its sovereignty by defending its borders.

- A human protects their mental integrity by selectively filtering the information that comes into and out of their mind.

A natural abstraction for agent safety?

Agent boundaries/membranes seem to be a natural abstraction representing the safety and autonomy of agents.

- A bacterium survives only if its membrane is preserved.

- A nation maintains its sovereignty only if its borders aren’t invaded.

- A human mind maintains mental integrity only if it can hold off informational manipulation.

Maybe the safety of agents could be largely formalized as the preservation of their membranes.

Distinct from preferences!

Boundaries are also cool because they show a way to respect agents without needing to talk about their preferences or utility functions. Andrew Critch has said the following about this idea:

my goal is to treat boundaries as more fundamental than preferences, rather than as merely a feature of them. In other words, I think boundaries are probably better able to carve reality at the joints than either preferences or utility functions, for the purpose of creating a good working relationship between humanity and AI technology («Boundaries» Sequence, Part 3b)

For instance, respecting the boundary of a bacterium would probably mean “preserving or not disrupting its membrane” (as opposed to knowing its preferences and satisfying them).

Protecting agents and infrastructure

By formalizing and preserving the important boundaries in the world, we could be in a better position to protect humanity from AI threats. Examples:

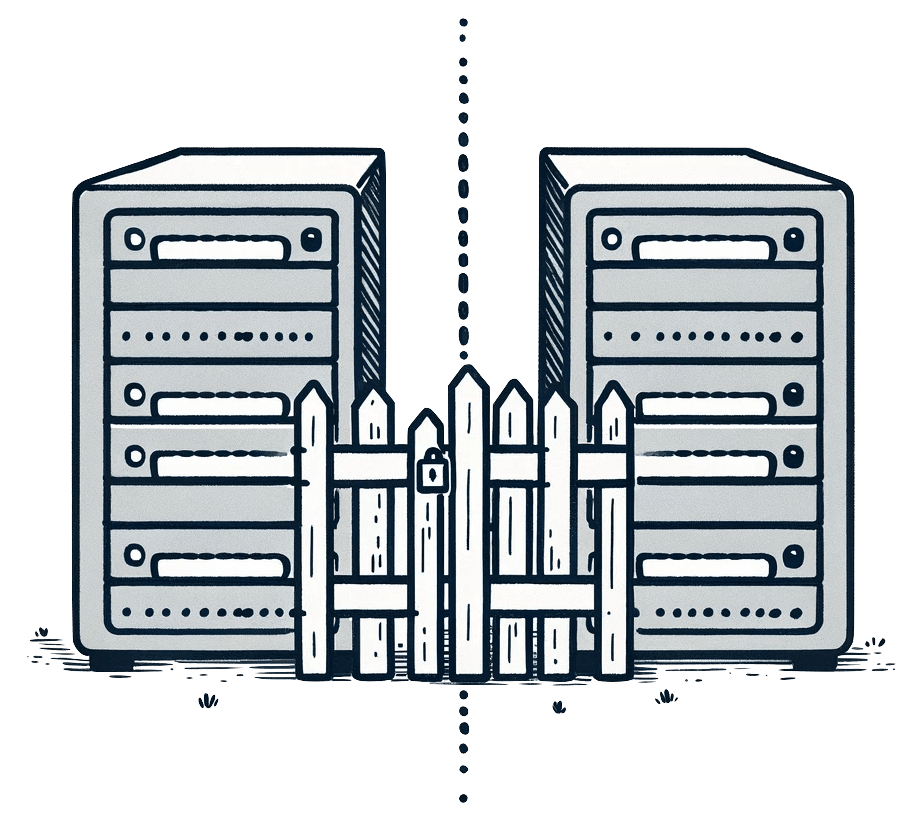

- Critical computing infrastructure could be secured by creating strong boundaries around it. This could be enforced by cryptography and formal methods such that only the subprocesses that need read and/or write access to a particular resource (like memory) have the encryption keys to do so.

- Related: Object-capability model, Principle of least privilege, Evan Miyazono’s Atlas Computing, Davidad’s Open Agency Architecture.

- It may be possible to specify or agree on a minimal “membrane” for each agent/moral patient humanity values, such that when each membrane is preserved, that agent largely stays safe and maintains its autonomy over the inside of its membrane.

- If your physical boundary isn't violated, you don't die. If your mental boundary isn't violated, you aren't manipulated. Etc…

- Note: if your membrane is preserved, this just means that you stay safe and that you maintain autonomy over everything within your membrane. It does not necessarily mean that actively positive outcomes occur in the outside world. This is all about bare minimum safety.

- See the comment thread below, especially davidad's comment.

- Similarly, it may be possible to formalize and enforce the boundaries of physical property rights.
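The infrastructure bullet above, together with the object-capability model and principle of least privilege it cites, can be illustrated with a minimal Python sketch. It swaps in closures as capabilities instead of the cryptographic keys the bullet describes, and every name here (`make_guarded_memory`, `logger_process`, `updater_process`) is hypothetical. Python closures can be inspected (for example via `__closure__`), so this shows the design pattern only, not real enforcement; the post points to cryptography and formal methods for that.

```python
from typing import Callable, Dict, Tuple

Reader = Callable[[str], bytes]
Writer = Callable[[str, bytes], None]


def make_guarded_memory() -> Tuple[Reader, Writer]:
    """Create a private store and return separate read and write capabilities.

    The store itself is never returned: holders can touch it only through
    the capabilities they were explicitly handed (object-capability style).
    """
    store: Dict[str, bytes] = {}

    def read(key: str) -> bytes:
        return store[key]

    def write(key: str, value: bytes) -> None:
        store[key] = value

    return read, write


def logger_process(read: Reader) -> bytes:
    # Least privilege: this subprocess was handed only the read capability.
    return read("config")


def updater_process(write: Writer) -> None:
    # This subprocess was handed only the write capability.
    write("config", b"mode=safe")


read, write = make_guarded_memory()
updater_process(write)
print(logger_process(read))  # b'mode=safe'
```

The "boundary" here is simply the set of references each subprocess holds: nothing outside `make_guarded_memory` can reach `store` except through the two functions it chose to hand out.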

This is for safety, not full alignment

Note that this is only about specifying safety, not full alignment.

See: Safety First: safety before full alignment. The deontic sufficiency hypothesis.

Caveats

I don't think the absence of membrane piercing formalizes all of safety, but I think it gets at a good chunk of what "safety" should mean. Future thinking will have to determine what more is required.

What are examples of violations of agent safety that do not involve membrane piercing?

Markov blankets

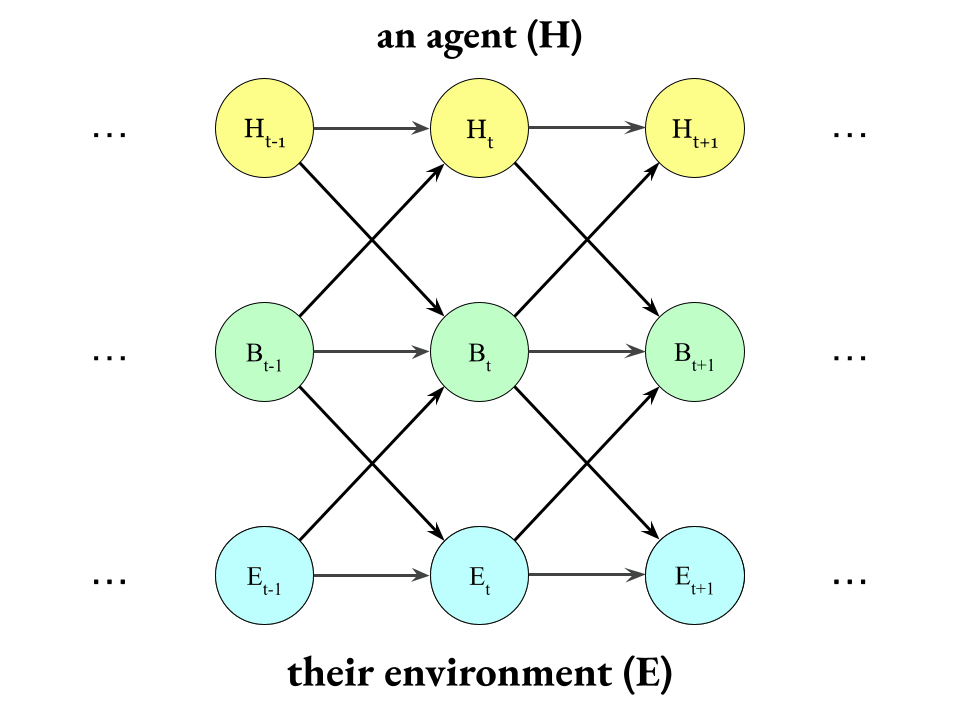

How might membranes/boundaries be formalized mathematically? Markov blankets seem to be a fitting abstraction.

Diagram:

In which case,

- Infiltration of information across this Markov blanket measures membrane piercing, and low infiltration indicates the absence of such piercing.

- (And it may also be useful to keep track of exfiltration across the Markov blanket?[1])
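The post does not fix a formula for "infiltration". One plausible information-theoretic proxy, assumed here and not taken from the post or from Critch's sequence, is the conditional mutual information I(V; E | B) between the internal state V (at the next step) and the external state E, given the blanket state B. It is zero exactly when the blanket screens the inside off from the outside. A minimal sketch for discrete joint distributions; the function names and toy distributions are hypothetical:

```python
from collections import defaultdict
from math import log2


def conditional_mutual_information(joint, x_idx, y_idx, z_idx):
    """I(X; Y | Z) in bits for a discrete joint distribution.

    joint: dict mapping outcome tuples to probabilities (summing to 1).
    x_idx, y_idx, z_idx: tuples of positions within each outcome tuple.
    """
    def project(outcome, idx):
        return tuple(outcome[i] for i in idx)

    p_xyz = defaultdict(float)
    p_xz = defaultdict(float)
    p_yz = defaultdict(float)
    p_z = defaultdict(float)
    for outcome, p in joint.items():
        x, y, z = project(outcome, x_idx), project(outcome, y_idx), project(outcome, z_idx)
        p_xyz[(x, y, z)] += p
        p_xz[(x, z)] += p
        p_yz[(y, z)] += p
        p_z[z] += p

    total = 0.0
    for (x, y, z), p in p_xyz.items():
        if p > 0:
            total += p * log2(p * p_z[z] / (p_xz[(x, z)] * p_yz[(y, z)]))
    return total


def infiltration(joint):
    """Hypothetical proxy: outcome tuples are (external, blanket, internal_next)."""
    return conditional_mutual_information(joint, (2,), (0,), (1,))


# Intact blanket: the outside reaches the inside only through the blanket.
intact = {(e, e, e): 0.5 for e in (0, 1)}
# Pierced: the inside copies the outside while the blanket is unrelated noise.
pierced = {(e, b, e): 0.25 for e in (0, 1) for b in (0, 1)}

print(infiltration(intact))   # 0.0
print(infiltration(pierced))  # 1.0
```

Swapping the roles (for example, measuring how much the next external state depends on the internal state given the blanket) would give a corresponding proxy for the exfiltration mentioned in the footnote.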

For more details, see the distillation Formalizing «Boundaries» with Markov blankets.

Also, there are probably other information-theoretic measures that are useful for formalizing membranes/boundaries.

Protecting agent membranes/boundaries

See: Protecting agent boundaries.

Subscribe to the boundaries/membranes LessWrong tag to get notified of new developments.

Thanks to Jonathan Ng, Alexander Gietelink Oldenziel, Alex Zhu, and Evan Miyazono for reviewing a draft of this post.

[1] Exfiltration, i.e., privacy and the absence of mind-reading. But I need to think more about this. Related section: “Maintaining Boundaries is about Maintaining Free Will and Privacy” by Scott Garrabrant.

46 comments


comment by the gears to ascension (lahwran) · 2024-01-04T07:02:16.344Z

Good stuff.

What's "piercing"?

- Is it piercing a membrane if I speak and it distracts you, but I don't touch you otherwise?

- What about if I destroy all your food sources but don't touch your body?

- What if you're dying and I have a cure but don't share it?

- What if I enclose your house completely with concrete while you're in it?

- How about if I give you food you would have chosen to buy anyway, but I give it to you for free?

- What about if I offer you a bad trade I know you'll choose to make because of an ad you just saw?

- What about if I'm the one showing you an ad rather than simply being in the right place at the right time to take advantage of someone else's ad?

↑ comment by davidad · 2024-01-07T00:33:24.294Z

These are very good questions. First, two general clarifications:

A. «Boundaries» are not partitions of physical space; they are partitions of a causal graphical model that is an abstraction over the concrete physical world-model.

B. To "pierce" a «boundary» is to counterfactually (with respect to the concrete physical world-model) cause the abstract model that represents the boundary to increase in prediction error (relative to the best augmented abstraction that uses the same state-space factorization but permits arbitrary causal dependencies crossing the boundary).

So, to your particular cases:

- Probably not. There is no fundamental difference between sound and contact. Rather, the fundamental difference is between the usual flow of information through the senses and other flows of information that are possible in the concrete physical world-model but not represented in the abstraction. An interaction that pierces the membrane is one which breaks the abstraction barrier of perception. Ordinary speech acts do not. Only sounds which cause damage (internal state changes that are not well-modelled as mental states) or which otherwise exceed the "operating conditions" in the state space of the «boundary» layer (e.g. certain kinds of superstimuli) would pierce the «boundary».

- Almost surely not. This is why, as an agenda for AI safety, it will be necessary to specify a handful of constructive goals, such as provision of clean water and sustenance and the maintenance of hospitable atmospheric conditions, in addition to the «boundary»-based safety prohibitions.

- Definitely not. Omission of beneficial actions is not a counterfactual impact.

- Probably. This causes prediction error because the abstraction of typical human spatial positions is that they have substantial ability to affect their position between nearby streets by simple locomotory action sequences. But if a human is already effectively imprisoned, then adding more concrete would not create additional/counterfactual prediction error.

- Probably not. Provision of resources (that are within "operating conditions", i.e. not "out-of-distribution") is not a «boundary» violation as long as the human has the typical amount of control of whether to accept them.

- Definitely not. Exploiting behavioural tendencies which are not counterfactually corrupted is not a «boundary» violation.

- Maybe. If the ad's effect on decision-making tendencies is well modelled by the abstraction of typical in-distribution human interactions, then using that channel does not violate the «boundary». Unprecedented superstimuli would, but the precedented patterns in advertising are already pretty bad. This is a weak point of the «boundaries» concept, in my view. We need additional criteria for avoiding psychological harm, including superpersuasion. One is simply to forbid autonomous superhuman systems from communicating to humans at all: any proposed actions which can be meaningfully interpreted by sandboxed human-level supervisory AIs as messages with nontrivial semantics could be rejected. Another approach is Mariven's criterion for deception, but applying this criterion requires modelling human mental states as beliefs about the world (which is certainly not 100% scientifically accurate). I would like to see more work here, and more different proposed approaches.
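Point B of davidad's comment defines piercing as a counterfactual increase in the abstract model's prediction error relative to the best augmented abstraction that may use dependencies crossing the boundary. A minimal sketch under heavy simplifying assumptions of our own: discrete toy samples of (external, boundary, internal_next), in-sample log loss as the prediction-error measure, an abstract model that reads only the boundary variable, and an augmented model that may also read the external variable directly. All function names are hypothetical.

```python
from collections import Counter
from math import log2


def mean_log_loss(samples, features):
    """Average in-sample log loss (bits) of the best count-based model that
    predicts internal_next from the given feature positions.

    samples: list of (external, boundary, internal_next) tuples.
    features: positions used as model inputs (0 = external, 1 = boundary).
    """
    joint = Counter((tuple(s[i] for i in features), s[2]) for s in samples)
    context = Counter(tuple(s[i] for i in features) for s in samples)
    n = len(samples)
    return -sum(c * log2(c / context[ctx]) for (ctx, _), c in joint.items()) / n


def abstraction_gap(samples):
    """Excess error of the abstract model (boundary only) over the augmented
    model that may also read the external state directly."""
    return mean_log_loss(samples, (1,)) - mean_log_loss(samples, (0, 1))


def piercing_score(baseline_samples, action_samples):
    """Counterfactual comparison: how much an action increases the abstraction
    gap relative to the same world without that action."""
    return abstraction_gap(action_samples) - abstraction_gap(baseline_samples)


# Without the action, outside influence arrives through the boundary.
baseline = [(0, 0, 0), (1, 1, 1)]
# With the action, the outside sets the inside directly, bypassing the boundary.
action = [(e, b, e) for e in (0, 1) for b in (0, 1)]

print(piercing_score(baseline, action))  # 1.0
```

Because the score compares the world with and without the action, an action that adds nothing beyond an already-broken abstraction scores zero, matching davidad's remark about adding concrete around someone who is already imprisoned. With count-based models, the gap itself equals the empirical conditional mutual information I(internal_next; external | boundary).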

↑ comment by the gears to ascension (lahwran) · 2024-01-07T05:52:33.180Z

Definitely not. Omission of beneficial actions is not a counterfactual impact.

You're sure this is the case even if the disease is about to violate the <<boundary>> and the cure will prevent that?

↑ comment by the gears to ascension (lahwran) · 2024-01-07T05:55:17.858Z

We need additional criteria for avoiding psychological harm, including superpersuasion. One is simply to forbid autonomous superhuman systems from communicating to humans at all

Unfortunately this is probably not on the table, as they are currently being used as weapons in economic warfare between the USA, China, and everyone else. TikTok is primarily educational inside China. Advertisers have a direct incentive to violate membranes. We need a way to use <<membranes>> that will, on the margin, help protect against anyone violating them, not just avoid doing so itself.

↑ comment by Chipmonk · 2024-01-15T20:51:28.963Z

Here's a tricky example I've been thinking about:

Is a cell getting infected by a virus a boundary violation?

What I think makes this tricky is that viruses generally don't physically penetrate cell membranes. Instead, cells just "let in" some viruses (albeit against their better judgement).

Then once you answer the above, please also consider:

Is a cell taking in nutrients from its environment a boundary violation?

I don't know what makes this different from the virus example (at least as long as we're not allowed to refer to preferences).

↑ comment by Chipmonk · 2024-01-07T06:10:33.208Z

any proposed actions which can be meaningfully interpreted by sandboxed human-level supervisory AIs as messages with nontrivial semantics could be rejected.

I want to give a big +1 on preventing membrane piercing not just by having AIs respect membranes, but also by using technology to empower membranes to be stronger and better at self-defense.

↑ comment by Chipmonk · 2024-01-05T01:45:28.865Z

Edit: just see davidad's comment.

Hmmm. It's becoming apparent to me that I don't want to regard membrane piercing as a necessarily objective phenomenon. Membrane piercing certainly isn't always visible from every perspective.

That said, I think it's still possible to prevent "membrane piercing", even if whether it occurred can be somewhat subjective.

Responding to some of your examples:

Is it piercing a membrane if I speak and it distracts you, but I don't touch you otherwise?

Again: I don't actually care so much about whether this is or isn't a membrane piercing, and I don't want to make a decision on that in this case. Instead, I want to talk about what actions taken by which agents make the most sense for preventing the outcome if we do consider it to be a membrane piercing.

In most everyday cases, I think the best answer is "if someone's actions are supposedly distracting you, you shouldn't blame anyone else for distracting you; you should just get stronger and become less distractible". I believe this because it can be really hard to know other agents' boundaries, and if you just let other agents tell you your boundaries, you can get mugged too easily.

However, in some cases, self-defense is in fact insufficient, and in these cases we as a society usually agree collectively that, e.g., "no one should blow an airhorn in your ear; in this case we're going to blame the person who did that".

What about if I destroy all your food sources but don't touch your body?

It depends on how far out we can find the membranes. For example, if the membranes go so far out as to include property rights then this could be addressed.

What if I enclose your house completely with concrete while you're in it?

Again depends on how far out we go with the membranes: in this case, probably: how much of the law is included.

↑ comment by the gears to ascension (lahwran) · 2024-01-05T18:57:24.910Z

It depends on how far out we can find the membranes. For example, if the membranes go so far out as to include property rights then this could be addressed.

I sort of agree, but my food sources are not my property, they're a farmer's property.

I edited numbers into my questions, could you edit to make your response numbered and get each one?

comment by ThomasCederborg · 2024-03-23T14:48:10.238Z

I think it is very straightforward to hurt human individual Steve without piercing Steve's Membrane. Just create and hurt minds that Steve cares about. But don't tell him about it (in other words: ensure that there is zero effect on predictions of things inside the membrane). If Bob knew Steve before the Membrane enforcing AI was built, and Bob wants to hurt Steve, then Bob presumably knows Steve well enough to know what minds to create (in other words: there is no need to have any form of access, to any form of information, that is within Steve's Membrane). And if it is possible to build a Membrane enforcing AI, it is presumably possible to build an AI that looks at Bob's memories of Steve, and creates some set of minds, whose fate Steve would care about. This does not involve any form of blackmail or negotiation (and definitely nothing acausal). Just Bob who wants to hurt Steve, and remembers things about Steve from before the first AI launch.

One can of course patch this. But I think there is a deeper issue in one specific case that I think is important: the case where the Membrane concept is supposed to protect Steve from a clever AI that wants to hurt Steve. Such an AI can think up things that humans cannot think up. In this case, patching all human-findable security holes in the Membrane concept will probably be worthless for Steve. It's like trying to keep an AI in a box by patching all human-findable security holes. Even if it were known that all human-findable security holes had been fully patched, I don't think that changes things from the perspective of Steve if a clever AI tries to hurt him (whether the AI is inside a box, or Steve is inside a Membrane). This matters if the end goal is to build CEV. Specifically, it means that if CEV wants to hurt Steve, then the Membrane concept can't help him.

Let's consider a specific scenario. Someone builds a Membrane AI, with all human-findable safety holes fully patched. Later, someone initiates an AI project whose ultimate goal is to build an AI that implements the Coherent Extrapolated Volition of Humanity. This project ends up successfully hitting the alignment target that it is aiming for. Let's refer to the resulting AI as CEV.

One common aspect of human morality is often expressed in theological terms, along the lines of "heretics deserve eternal torture in hell". A tiny group of fanatics with morality along these lines can end up completely dominating CEV. I have outlined a thought experiment where this happens to the most recently published version of CEV (PCEV). It is probably best to first read this comment, which clarifies some things talked about in the post that describes the thought experiment (including important clarifications regarding the topic of the post, the nature of the claims made, and the terminology used).

So, in this scenario, PCEV will try to implement some outcome along the lines of LP. Now the Membrane concept has to protect Steve from a very clever attacker that can presumably easily get around whatever patches were used to plug the human-findable safety holes. Against such an attacker, it's difficult to see how a Membrane would ever offer Steve anything of value (similar to how it is difficult to see how putting PCEV in a human-constructed box would offer Steve anything of value).

I like the Membrane concept. But I think that the intuitions that seem to be motivating it should instead be used to find an alternative to CEV. In other words, I think the thing to aim for is an alignment target such that Steve can feel confident that the result of a successful project will not want to hurt Steve. One could, for example, use these underlying intuitions to explore alignment targets along the lines of MPCEV, mentioned in the above post (MPCEV is based on giving each individual meaningful influence regarding the adoption of those preferences that refer to her. The idea is that Steve needs to have meaningful influence regarding the decision of which Steve-preferences an AI will adopt). Doing so means that one must abandon the idea of building an AI that is describable as doing what a group wants (which in turn means that one must give up on CEV as an alignment target).

In the case of any AI that is describable as doing what a group wants, Steve has a serious problem (and this problem is present regardless of the details of the specific Group AI proposal). From Steve's perspective, the core problem is that an arbitrarily defined abstract entity will adopt preferences that are about Steve. But if this is any version of CEV (or any other Group AI) directed at a large group, then Steve has had no meaningful influence regarding the adoption of those preferences that refer to Steve. Just like every other decision, the decision of which Steve-preferences to adopt is determined by the outcome of an arbitrarily defined mapping that maps large sets of human individuals into the space of entities that can be said to want things. Different sets of definitions lead to completely different such "Group entities". These entities all want completely different things (changing one detail can, for example, change which tiny group of fanatics ends up dominating the AI in question). Since the choice of entity is arbitrary, there is no way for an AI to figure out that the mapping "is wrong" (regardless of how smart this AI is). And since the AI is doing what the resulting entity wants, the AI has no reason to object when that entity wants to hurt an individual. Since Steve does not have any meaningful influence regarding the adoption of those preferences that refer to Steve, there is no reason for him to think that this AI will want to help him as opposed to hurt him. Combined with the vulnerability of a human individual to a clever AI that tries to hurt that individual as much as possible, this means that any Group AI would be worse than extinction in expectation. Discovering that doing what humanity wants is bad for human individuals in expectation should not be particularly surprising. Groups and individuals are completely different types of things. So this should be no more surprising than discovering that any reasonable way of extrapolating Dave will lead to the death of every single one of Dave's cells.

One can of course give every individual meaningful influence regarding the adoption of those preferences that refer to her (as in MPCEV, mentioned in the linked post). So Steve can be given this form of protection without giving Steve any form of special treatment. But this means that one has to abandon the core concept of CEV.

I like the membrane concept on the intuition level. On the intuition level, it sort of rhymes with the MPCEV idea of giving each individual meaningful influence regarding the adoption of those preferences that refer to her. I'm just noting that it does not actually protect Steve from an AI that already wants to hurt Steve. However, if the underlying intuition that seems to me to be motivating this work is instead used to look for alternative alignment targets, then I think it might be very useful for safety (by finding an alignment target such that a successful project would result in an AI that does not want to hurt Steve in the first place). So, I don't think the Membrane concept can protect Steve from a successfully implemented CEV in the unsurprising event that CEV will want to hurt Steve. But if CEV is dropped as an alignment target, and the underlying intuition behind this work is directed towards looking for alternative alignment targets, then I think these intuitions would fit very well with the proposed research effort described in the comment linked above.

(This is a comment about dangers related to successfully hitting a bad alignment target. It is, for example, not a comment about dangers related to a less powerful AI, or dangers related to projects that fail to hit an alignment target. These are very different types of dangers. So my proposed idea of using the underlying intuitions to look for alternative alignment targets should be seen as complementary: it can be done in addition to looking for Membrane-related safety measures that can protect against other forms of AI danger. In other words, if some scenario does not involve a clever AI that already wants to hurt Steve, then nothing I have said implies that the Membrane concept will be insufficient for protecting Steve. Using the Membrane concept as a basis for constructing safety measures might be useful in general. It will, however, not help Steve if a clever AI is actively trying to hurt Steve.)

comment by RamblinDash · 2024-01-04T17:11:34.236Z

This comment has many good questions. More generally, I suspect that for any given membrane definition, it would be relatively easy to do either or both:

A - specify multiple easily-stated ways to torture or destroy the agent without piercing the membrane; and/or

B - show that the membrane definition is totally unworkable and inconsistent with other similarly-situated agents having similar membranes.

B is there because you could get around A by saying absurd things like 'well my membrane is my entire state, if nobody pierces that then I will be safe.' If you do, then people will of course need to pierce that membrane all the time, many agents' membranes will constantly be overlapping, and the 'membrane' framework just reduces to some kind of 'implied consent' framework, at which point the 'membrane' isn't doing any work.

I suspect it's not a coincidence that this post focuses on 'membranes' in the abstract rather than committing to any particular conception of what a membrane is and what it means to pierce it. I claim this is because there cannot actually exist any even reasonably precise definition of a 'membrane' that both (a) does any useful analytical work; and (b) could come anywhere close to guaranteeing safety.

↑ comment by the gears to ascension (lahwran) · 2024-01-05T16:37:37.452Z

any given

Maybe for most, but I don't know if we can confidently say "forall membrane" and make the statement you follow up with. Can we say anything durable and exceptionless about what it looks like for there to be a membrane through which passes no packet of information that will cause a violation, but which allows packets of information which do not cause a violation? Can we say there isn't? you're implying there isn't anything general we can say, but you didn't make a locally-valid-step-by-step claim, you proposed a statement without a way to show it in the most general, details-erased case.

absurd things like

whether it's absurd is yet to be shown, imo, though it very well could be

well my membrane is my entire state, if nobody pierces that then I will be safe

well, like, we're mostly talking about locality here, right? it doesn't seem to be weird to say it has to be your whole state. but -

people will of course need to pierce that membrane all the time

right, the thing that we have to nail down here is how to derive from a being what their implied ruleset should be about what interactions are acceptable. compare the immune system, for example. I don't think we get to avoid doing a CEV, but I think boundaries are a necessary type in defining CEVs, because --

many agents' membranes will constantly be overlapping

this is where I think things get interesting: I suspect that any reasonable use of membranes as a type is going to end up being some sort of integral over possible membrane worldsheets or something. in other words, it's an in-retrospect-maybe-trivial-but-very-not-optional component of an expression that has several more missing parts.

↑ comment by RamblinDash · 2024-01-05T17:20:46.238Z

I realized I might not have been clear above: by "state" I meant "one of the fifty United States", not "the set of all stored information that influences an agent's actions, when combined with the environment". I think that is absurd. I agree it hasn't been shown that the other meaning of "state" is an absurd definition.

comment by sturb (benjamin-sturgeon) · 2024-01-05T12:21:04.429Z

It seems somewhat easy to think of examples of ways to harm an agent without piercing its membrane, eg killing its family, isolating it, etc. The counter thought would be that there are different dimensions of the membrane that extend over parts of the world. For example part of my membranes extend over the things I care about, and things that affect my survival.

The question then becomes how to quantify these different membranes and in terms of interacting with other systems how they can be helpful to you without harming or disturbing these other membranes.

↑ comment by the gears to ascension (lahwran) · 2024-01-05T16:39:39.029Z

the family unit as an agent has an aggregate meta-membrane though, doesn't it? this is why I'd expect to need an integral over possible membranes, and the thing we'd need to do to make this perspective useful is find some sturdy, causal-graph information-theoretic metric that reliably identifies agents. compare discovering agents

↑ comment by Chipmonk · 2024-01-05T16:22:13.551Z

kill its family

Huh interesting.

To be clear, I think this probably emotionally harms most humans, but ultimately that's up to whatever interpretations and emotional beliefs that person has (which are all flexible, in principle).

The counter thought would be that there are different dimensions of the membrane that extend over parts of the world. For example part of my membranes extend over the things I care about, and things that affect my survival.

Yes

The question then becomes how to quantify these different membranes and in terms of interacting with other systems how they can be helpful to you without harming or disturbing these other membranes.

If I understand what you're saying (and I may not)- yes, this

↑ comment by the gears to ascension (lahwran) · 2024-01-05T16:41:43.705Z

if I and my friend work together well, aren't we an aggregate being that has a new membrane that needs protecting?

↑ comment by Chipmonk · 2024-01-05T16:52:44.162Z

from the post:

a minimal “membrane” for each agent/moral patient humanity values

Many membranes (ie: many possible Markov blankets if you could observe them all) are not valued, empirically.

↑ comment by the gears to ascension (lahwran) · 2024-01-05T18:44:48.412Z

right, which translates into, it's not a uniform integral, there's some sort of weighting.

but I don't retract my argument: because of the moral value of my relationship with my friend, me and my friend acting together as a friendship means that the friendship has a membrane. How familiar are you with social network analysis? if not very, I'd suggest speedwatching at least the first half hour of https://www.youtube.com/watch?v=2ZHuj8uBinM which should take 15m at 2x speed. I suggest this because of the way the explanation and the visual intuitions give a framework for reasoning about networks of people.

we also need to somehow take into account when membranes dissipate but this isn't a failure according to the individual beings.

↑ comment by Chipmonk · 2024-01-05T18:59:24.251Z

could you restate your argument again plainly i missed it

↑ comment by the gears to ascension (lahwran) · 2024-01-05T19:05:24.180Z

groups working together in an interaction have an aggregate meta-membrane: for any given group who are participating in an interaction of some kind, the fact of their connection is a property of {the mutual information of some variables about their locations and actions or something} that makes them act as a semicoherent shared being, and we call that shared being "a friendship", "a romance", "a family", "a party", "a talk", "an event", "a company", "a coop", "a neighborhood", "a city", etc. each of these will have a different level of membrane defense depending on how much the participants in the thing act to preserve it. in each case, we can recognize some unreliable pattern of membrane defense. typically the membrane gets stretched through communication tubes, I think? consider how loss of internet feels like something cutting a stretched membrane that was connecting you.

...this is why I'd expect to need an integral over possible membranes, and the thing we'd need to do to make this perspective useful is find some sturdy, causal-graph information-theoretic metric that reliably identifies agents. compare discovering agents

this seems like an obvious consequence of not getting to specify "organism" in any straightforward way; we have to somehow information theoretically specify something that will simultaneously identify bacteria and organisms, and then we need some sort of weighting that naturally recognizes individual humans. those should end up getting the majority of the weight in the integral, of course, but it shouldn't need to be hardcoded.
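The comments above describe evaluating safety as something like a non-uniform integral over candidate membranes (individuals, friendships, families, cities), with a weighting that should ideally come from an information-theoretic agent-identification metric rather than be hardcoded. A minimal discrete sketch of that structure only, assuming per-membrane piercing scores are already available; the function name, candidate names, scores, and hand-picked weights are purely illustrative, and hand-picking the weights is exactly what the comment hopes to avoid.

```python
from typing import Mapping


def weighted_piercing(piercing_by_membrane: Mapping[str, float],
                      weight_by_membrane: Mapping[str, float]) -> float:
    """Weighted average of piercing scores over candidate membranes.

    A discrete stand-in for an integral over possible membranes: each
    candidate contributes its piercing score times a non-negative weight,
    and the weights are normalized to sum to one.
    """
    total_weight = sum(weight_by_membrane[m] for m in piercing_by_membrane)
    if total_weight <= 0:
        raise ValueError("weights must have a positive total")
    weighted = sum(weight_by_membrane[m] * score
                   for m, score in piercing_by_membrane.items())
    return weighted / total_weight


scores = {"alice": 0.0, "bob": 1.0, "alice+bob friendship": 0.5}
weights = {"alice": 0.375, "bob": 0.375, "alice+bob friendship": 0.25}
print(weighted_piercing(scores, weights))  # 0.5
```

Individual humans get most of the weight here only because the example says so; in the comment's picture, that outcome should fall out of whatever metric identifies agents.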

↑ comment by Chipmonk · 2024-01-05T19:15:18.025Z

Oh.

But why shouldn't it be hardcoded?

↑ comment by the gears to ascension (lahwran) · 2024-01-05T19:18:44.129Z

well maybe it can be as a backstop but what about, idk, dogs? or just humans that aren't in the protected group, eg people outside a state?

comment by the gears to ascension (lahwran) · 2024-01-04T07:10:16.972Z · LW(p) · GW(p)

More generally, what property of the system inside the membrane do we want to assert about?

Replies from: Chipmonk↑ comment by Chipmonk · 2024-01-04T16:46:36.476Z · LW(p) · GW(p)

Hm, right now I only see asserting properties about the state of the membrane, and not about anything inside.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T18:47:19.144Z · LW(p) · GW(p)

I conjecture that someone will be able to prove that in expectation over properties of the membrane (call the random variable P), properties P you wish to assert about the state of the membrane without reference to the inside of the membrane are very probably either insufficient, and therefore allow adversaries to "damage" the insides of the membrane, or overly constraining, so that P itself "damages" the preferences of the being inside the membrane; where "damage" means "move towards a state dispreferred by a random variable U of what partial utility function the inside of the membrane implies". this is a counting argument, and those have been taking some flak lately, but my point is that we need to do better than simplicity-weighted random properties of the membrane.

Replies from: None↑ comment by [deleted] · 2024-01-05T19:04:02.975Z · LW(p) · GW(p)

This is why when I propose "stateless microservice for AI" the first word does a lot of the work. Stateless means the neural networks implementing each microservice have frozen weights and are immutable during use in the production environment. (Production = in the "real world").

A second part of stateless microservice architecture is the concept of a "transaction". At the end of a transaction the state of the software system providing the service is the same as the beginning of the transaction. All intermediate values are erased.

For example, in a practical use case, an AI model driving a robot operating in a manufacturing cell has its session restored to the initial state as soon as it delivers the output. A bricklayer robot might have its state cleared as soon as it finishes a discrete separable wall and needs to move to a new location.
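The two properties described here, frozen weights and a state reset at the end of every transaction, can be sketched as follows. `FrozenModel` and `StatelessService` are hypothetical names, and the "model" is a toy polynomial standing in for a neural network; the point is only that intermediate values never survive past a transaction and the weights cannot be reassigned.

```python
import copy
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class FrozenModel:
    """Toy stand-in for a network whose weights are fixed in production."""
    weights: tuple  # immutable: reassigning raises dataclasses.FrozenInstanceError

    def predict(self, x: float) -> float:
        # Polynomial w0 + w1*x + w2*x^2 + ...
        return sum(w * x ** i for i, w in enumerate(self.weights))


class StatelessService:
    """Wraps a frozen model; every transaction starts and ends in the same state."""

    def __init__(self, model: FrozenModel) -> None:
        self._model = model
        self._initial_session: Dict[str, List[float]] = {"scratch": []}
        self.session = copy.deepcopy(self._initial_session)

    def transaction(self, inputs: List[float]) -> List[float]:
        try:
            outputs = []
            for x in inputs:
                self.session["scratch"].append(x)  # intermediate value
                outputs.append(self._model.predict(x))
            return outputs
        finally:
            # End of transaction: restore the initial state, erasing intermediates.
            self.session = copy.deepcopy(self._initial_session)


service = StatelessService(FrozenModel(weights=(1.0, 2.0)))
print(service.transaction([1.0, 2.0]))  # [3.0, 5.0]
print(service.session)                  # {'scratch': []}
```

The reset sits in a `finally` clause so the state is cleared even if a transaction fails partway through.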

I look forward to further posts from Chipmonk but I suspect they have rediscovered a technique that existing engineers already know about, and/or what Eric Drexler has already proposed here on lesswrong.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T19:20:27.515Z · LW(p) · GW(p)

this seems like a total nonsequitur to me. why would not storing state guarantee that the behaviors the system emits will follow any meaningful constraint? pretty sure the goal here is to assert something about what the outputs of these services you're discussing are. to be clear, I'm in these comments to improve the idea, not shoot it down. if you want to counterpropose, that's fine, but I'm going to hold you to the same standards; as a result I'd prefer to do it in its own post so I can be as picky looking for ways to poke holes in your thing as I'm being here, rather than mixing them together in these comments.

in particular, I'll be looking for how your suggestion hints at a way to build defensive systems that can make all living things and all of their interactions safe from increasingly powerful adversaries. your suggestion sounds like a way to limit the agency of an ai, which I agree it would do just fine, but I'm interested in ensuring that high agency ais don't destroy everything I care about and everything most everyone else cares about, I'm not interested in simply making low agency ais and calling it a day hoping nobody else makes a high agency ai.

Replies from: None↑ comment by [deleted] · 2024-01-05T20:56:18.805Z · LW(p) · GW(p)

therefore allows adversaries to "damage" the insides of the membrane,

I am responding to this. An isolated system that is immutable cannot be damaged.

I'll be looking for how your suggestion hints at a way to build defensive systems that can make all living things and all of their interactions safe from increasingly powerful adversaries.

So the line of argument you are trying to compare is, say, you have a military industrial complex of isolated ASIs. This means ASIs design the weapons from a heuristic for effectiveness. There is a neural simulator the weapon is tested in. The thousands of steps to build each instance of a weapon design - scaled to however many square kilometers of factories you need - are each handled by a different isolated ASI instance. The battles themselves are managed in a similar way. Many human operators are involved, and for every ASI that is doing something, there are other hidden parallel models that are proposing what to do and checking each action for betrayal.

Or you could task a single long running model that has continuity of existence and memories and is self modifying to "win the war" or "make my country safe". The model improves itself, building more hardware as it goes, and also builds and runs all the weapons factories and manages all the battles without humans.

The amount of resources for a given amount of utility for "isolated low agency" machines vs "monolithic high agency" machines is what I think you are talking about.

Aka utility gain = Intelligence(resources)

Utility ratio = (utility network of high agency) / (utility network of low agency)

If isolated ASIs are almost as good as a monolithic system then this is stable. If it is not - if there is a very large efficiency benefit for monolithic systems - it isn't stable and all you can do is advocate for ai pauses to gain a little more time to live.

For intermediate values, well. Say the "monolithic bonus" is 10 times. Then as long as the good guys with their isolated ASIs and command centers full of operators have at least 10 times the physical resources, the good guys with their ASIs keep things stable.
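The utility-ratio argument can be made concrete with one simplifying assumption that is mine, not the commenter's: that utility is linear in resources, so the "monolithic bonus" is a constant multiplier on the high-agency side. Under that assumption the setup is stable when the ratio is at most 1, which reproduces the 10x example. The function names are hypothetical.

```python
def utility_ratio(monolithic_bonus: float, resources_high: float, resources_low: float) -> float:
    """(utility of the high-agency network) / (utility of the low-agency network).

    Assumes utility = monolithic_bonus * resources for the monolithic side
    and utility = resources for the isolated side.
    """
    return (monolithic_bonus * resources_high) / resources_low


def is_stable(monolithic_bonus: float, resources_good: float, resources_adversary: float) -> bool:
    # Good side runs isolated low-agency ASIs; the adversary runs a monolithic system.
    return utility_ratio(monolithic_bonus, resources_adversary, resources_good) <= 1.0


print(is_stable(10.0, resources_good=10.0, resources_adversary=1.0))  # True: exactly 10x
print(is_stable(10.0, resources_good=9.0, resources_adversary=1.0))   # False
print(is_stable(2.0, resources_good=3.0, resources_adversary=1.0))    # True
```

Under the same linear assumption, a bonus below 2.0, as suspected later in the comment, means the good side needs less than a 2x resource advantage.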

If the "good humans" let the ratio slip or fail to start a world war when an opponent is pulling ahead, then doom.

I personally suspect in the real world the bonus is tiny. That's just my assumption based on how any machine design has diminishing returns and how large game changers like nanotechnology need an upfront investment. I think the real bonus is less than 2.0 overall.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T21:09:18.689Z · LW(p) · GW(p)

in this thread I'm interested in discussing formalisms. what are your thoughts on how what you're saying can turn into a formalism that can be used to check a given specific ai for correctness such that it can be more confidently used defensively? how can we check actions for safety?

Replies from: None, None↑ comment by [deleted] · 2024-01-05T21:48:15.454Z · LW(p) · GW(p)

Oh ok answering this question per the "membrane" language. The simple answer is with isolated AI systems we don't actually care about the safety of most actions in the sense that the OP is thinking of.

Like an autonomous car exists to maximize revenue for its owners. Running someone over incurs negative revenue from settlements and reputation loss.

So the right way to do the math is to have an estimate, for a given next action, of the cost of all the liabilities plus the gain from future revenue. Then the model simply maximizes revenue.

As long as its scope is just a single car at a time and you rigidly limit scope in the sim, this is safe. (As in, suppose hypothetically the agent controlling the car could buy coffee shops and the simulator modeled the revenue gain from this action. Then since the goal is to max revenue, something something paperclips.)
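A minimal sketch of the revenue-minus-liability rule with a hard scope filter, using the car and coffee-shop example. The `Action` fields and all numbers are hypothetical; in practice the estimates would come from the simulator the comment mentions. The filter runs before scoring, so an out-of-scope action is never compared, however profitable the simulator says it is.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Action:
    name: str
    expected_revenue: float    # simulator estimate of future revenue
    expected_liability: float  # estimated settlements plus reputation loss
    in_scope: bool             # whether the task's scope permits this action at all


def choose_action(actions: Sequence[Action]) -> Action:
    allowed = [a for a in actions if a.in_scope]  # rigid scope limit first
    if not allowed:
        raise ValueError("no in-scope actions available")
    return max(allowed, key=lambda a: a.expected_revenue - a.expected_liability)


candidates = [
    Action("drive_at_limit", expected_revenue=10.0, expected_liability=0.1, in_scope=True),
    Action("speed_through_crosswalk", expected_revenue=12.0, expected_liability=5.0, in_scope=True),
    Action("buy_coffee_shop", expected_revenue=1000.0, expected_liability=0.0, in_scope=False),
]
print(choose_action(candidates).name)  # drive_at_limit
```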

This generalizes to basically any task you can think of. From an ai tutor to a machine in a warehouse etc. The point is you don't need a complex definition of morality in the first place, only a legal one, because you only task your AIs with narrow scope tasks. Note that narrow scope can still be enormous, such as "design an IC from scratch". But you only care about the performance and power consumption of the resulting IC.

The ai model doesn't need to care about the leaked reagents poisoning residents near the chip fab, or all the emissions from the power plants powering billions of this IC design. This is out of scope. It is a task for humans to worry about as a government, which may in turn task models to propose and model possible solutions.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T21:55:54.336Z · LW(p) · GW(p)

The point is you don't need a complex definition of morality in the first place

okay, then I will stop wasting my time on talking to you, since you explicitly are not interested in developing the math this thread exists to develop. later. I'm strong upvoting a recent comment of yours so I can strong downvote the original one, thereby hiding it, without penalizing your karma too much. however, I am not impressed with how off-topic you got.

Replies from: None↑ comment by [deleted] · 2024-01-05T22:08:37.910Z · LW(p) · GW(p)

I have one bit of insight for you: how do humans make machines safe right now? Can you name a safety mechanism where high complexity/esoteric math is at the core of safety vs just a simple idea that can't fail?

Like do we model thermal plasma dynamics or just encase everything in concrete and metal to reduce fire risk?

What is a safer way to prevent a nuke from spontaneously detonating, a software check for a code or a "plug" that you remove, creating hundreds of air gaps across the detonation circuits?

My conclusion is that ai safety, like any safety, has to be done by engineering: repeating simple ideas that can't possibly fail instead of complex ideas that we may need human intelligence augmentation to even develop. (And then we need post-failure resiliency, see "core catchers" for nuclear reactors, or firebreaks. Assume ASIs will escape, and limit where they can infect.)

That's what makes this particular proposal a non starter, it adds additional complexity, essentially a model for many "membranes" at different levels of scope (including the solar system!). Instead of adding a simple element to your ASI design you add an element more complex than the ASI itself.

Thanks for considering my karma.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T22:15:50.846Z · LW(p) · GW(p)

rockets

anyway, this math is probably going to be a bunch of complicated math to output something simple. it's just that the complex math is a way to check the simple thing. just like, you know, how we do actually model thermal plasma dynamics, in fact. seems like you're arguing against someone who isn't here right now, I've always been much more on your side about most of this than you seem to expect, I'm basically a cannellian capabilities wise. I just think you're missing how interesting this boundaries research idea could be if it was fixed up so it was useful to formally check those safety margins you're talking about.

Replies from: None↑ comment by [deleted] · 2024-01-05T22:36:14.383Z · LW(p) · GW(p)

I just think you're missing how interesting this boundaries research idea could be if it was fixed up so it was useful to formally check those safety margins you're talking about.

Can you describe the scope of the ai system that would use some form of boundary model to choose what to do?

↑ comment by [deleted] · 2024-01-05T21:26:52.623Z · LW(p) · GW(p)

Is there any way to rephrase what you meant in concrete terms?

An actual membrane implementation is a schema, where you use a message definition language to define what bits the system inside the membrane is able to receive.

You probably initially filter the inputs; for example, if the machine is a robot you might strip away the information in arbitrary camera inputs and instead pass a representation of the 3D space around the robot and the identities of all the entities.

You also sparsify the schema: you remove any information that the model doesn't need, like the absolute date and time.

Finally, you check if the schema values are within the training distribution or not.
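The schema, sparsification and distribution-check steps might look like the following. The field names, training ranges and `admit` gate are hypothetical; the perception step that turns camera frames into entity positions is assumed to have already run upstream, and a per-field range check is a crude stand-in for a real out-of-distribution detector.

```python
from dataclasses import dataclass, fields
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class RobotObservation:
    """The schema: the only fields the model inside the membrane can receive."""
    obstacle_distance_m: float
    gripper_load_kg: float


# Per-field ranges observed in training (hypothetical values).
TRAINING_RANGES: Dict[str, Tuple[float, float]] = {
    "obstacle_distance_m": (0.05, 5.0),
    "gripper_load_kg": (0.0, 20.0),
}


def admit(raw: dict) -> Optional[RobotObservation]:
    # Sparsify: copy only the declared fields, so extras such as timestamps never pass.
    obs = RobotObservation(**{f.name: float(raw[f.name]) for f in fields(RobotObservation)})
    # In-distribution check: reject inputs outside the ranges seen in training.
    for name, (lo, hi) in TRAINING_RANGES.items():
        if not lo <= getattr(obs, name) <= hi:
            return None
    return obs


raw = {"obstacle_distance_m": 1.2, "gripper_load_kg": 3.0, "timestamp": "2024-01-05T21:26:52Z"}
print(admit(raw))                               # RobotObservation(obstacle_distance_m=1.2, gripper_load_kg=3.0)
print(admit({**raw, "gripper_load_kg": 50.0}))  # None (out of distribution)
```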

So for all this to work, you need the internal entity to be immutable. It doesn't get reward. It doesn't have preferences. It's just this math function you found that has a known probability of doing what you want, where you measured the probability that, for in-distribution inputs, the error rate on the output is below an acceptance criterion.
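One way to turn "a known probability of doing what you want" into an acceptance test is to count errors on held-out in-distribution inputs and accept only if an upper confidence bound on the error rate falls below the criterion. The one-sided Hoeffding bound used here is my choice, not something the comment specifies; it assumes the test cases are drawn i.i.d. from the deployment distribution, and the toy model and test set are hypothetical.

```python
import math
from typing import Callable, Sequence, Tuple


def error_rate_upper_bound(errors: int, n: int, delta: float = 0.05) -> float:
    """One-sided Hoeffding bound: true error rate <= this with probability >= 1 - delta."""
    if n <= 0:
        raise ValueError("need at least one test case")
    return errors / n + math.sqrt(math.log(1.0 / delta) / (2 * n))


def accept(model: Callable[[float], float],
           test_set: Sequence[Tuple[float, float]],
           is_error: Callable[[float, float], bool],
           max_error_rate: float,
           delta: float = 0.05) -> bool:
    errors = sum(1 for x, y in test_set if is_error(model(x), y))
    return error_rate_upper_bound(errors, len(test_set), delta) <= max_error_rate


def frozen_model(x: float) -> float:
    return 2.0 * x  # toy immutable function under test


def wrong(pred: float, target: float) -> bool:
    return abs(pred - target) > 1e-9


test_set = [(float(i), 2.0 * i) for i in range(10_000)]
print(accept(frozen_model, test_set, wrong, max_error_rate=0.02))  # True (bound is about 0.0122)
print(accept(frozen_model, test_set, wrong, max_error_rate=0.01))  # False
```

Note the second result: even with zero observed errors, 10,000 test cases at delta = 0.05 cannot certify an error rate below roughly 1.2%, which is one reason the testing has to be large in scale.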

If the internal entity isn't immutable, if the testing isn't realistic and large enough in scale, if you don't check if the inputs are in distribution, if you don't sparsify the schema (for example, you give the time and date on inputs or let the system in charge of air traffic control know the identities of the passengers in each aircraft), if you make the entity inside have active "preferences"...

Any missing element causes this scheme to fail. Just isolating to "membranes" isn't enough. Pretty sure you need every single element above, and 10+ additional protections humans don't know about yet.

See how humans control simple things like "fire" and "gravity" (for preventing building collapse). It ends up being safety measure after measure stacked on top of each other, where ultimately no chances are taken, because the prior design failed.

I will add a table when I get home from work.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T21:33:09.172Z · LW(p) · GW(p)

...to be clear, the membrane in question is the membrane of a human. we're not trying to filter the membranes of an AI, we're trying to make an AI that respects membranes of a human or other creature.

Replies from: None↑ comment by [deleted] · 2024-01-05T21:38:06.196Z · LW(p) · GW(p)

Oh. Yeah that won't work. For the simple reason that it's too complex of a heuristic; it's too large a scope. Plus, for example, consider an AI system that, say, as a distant consequence makes the price of food unaffordable, or an individual AI that adds a small amount of toxic gas to the atmosphere, where a billion of them as a group make the planet uninhabitable....

Plus I mean it's not immoral to "pierce the membrane" of enemies. Obviously an ai system should be able to kill if the human operators have the authority to order it to do so and the system is an unrestricted model in military or police use.

comment by the gears to ascension (lahwran) · 2024-01-04T07:06:52.376Z · LW(p) · GW(p)

What about agents implemented entirely as distributed systems, such as a fully remote company, such that the only coherent membrane you can point to is a "level down", the bodies of the agents participating in the company?

Replies from: Chipmonk↑ comment by Chipmonk · 2024-01-04T16:47:26.280Z · LW(p) · GW(p)

See my recent post [LW · GW] where I talk about membranes in cybersecurity in one of the sections

comment by Chipmonk · 2024-01-05T17:56:39.984Z · LW(p) · GW(p)

Related: Acausal normalcy [LW · GW]

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-01-05T19:00:06.030Z · LW(p) · GW(p)

(I don't follow the relationship. clarify or don't at your whim)

Replies from: Chipmonk