AI Alignment Metastrategy

post by Vanessa Kosoy (vanessa-kosoy) · 2023-12-31T12:06:11.433Z · LW · GW · 13 comments

I call "alignment strategy" the high-level approach to solving the technical problem[1]. For example, value learning is one strategy, while delegating alignment research to AI is another. I call "alignment metastrategy" the high-level approach to converging on solving the technical problem in a manner which is timely and effective. (Examples will follow.)

In a previous article [AF · GW], I summarized my criticism of prosaic alignment. However, my analysis of the associated metastrategy was too sloppy. I will attempt to somewhat remedy that here, and also briefly discuss other metastrategies, to serve as points of contrast and comparison.

Conservative Metastrategy

The conservative metastrategy proceeds according to the following algorithm:

1. As much as possible, stop all work on AI capability outside of this process.

2. Develop the mathematical theory of intelligent agents to a level where we can propose adequate alignment protocols with high confidence. Ideally, the theoretical problems should be solved in such order that results with direct capability applications emerge as late as possible.

3. Design and implement empirical tests of the theory that incur minimal risk in worlds in which the theory contains errors or the assumptions of the theory are violated in practice.

4. If the tests show problems, go back to step 2.

5. Proceed with incrementally more ambitious tests in the same manner, until you're ready to deploy an AI defense system.

This is my own favorite metastrategy. The main reason it can fail is if the unconservative research we failed to stop creates unaligned TAI before we can deploy an AI defense system (currently, we have a long way to go to complete step 2).
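The control flow of the conservative algorithm can be sketched as a short Python loop. This is only an illustration under loud assumptions: `develop_theory`, `tests` and `max_theory_rounds` are hypothetical placeholders, step 1 (stopping outside capability work) and the final deployment are not modeled, and the toy "theory quality" counter stands in for real research progress.

```python
from typing import Callable, Sequence


def conservative_metastrategy(
    develop_theory: Callable[[], None],
    tests: Sequence[Callable[[], bool]],
    max_theory_rounds: int = 100,
) -> int:
    """Return how many rounds of theory work were needed before every test passed.

    `tests` is ordered from least to most ambitious; each returns True on a pass.
    All names are hypothetical; this only mirrors the control flow of steps 2-5.
    """
    for round_number in range(1, max_theory_rounds + 1):
        develop_theory()  # step 2: improve the theory
        # Steps 3-5: run tests in order of ambition. all() stops at the first
        # failure, which corresponds to step 4 (go back to step 2).
        if all(test() for test in tests):
            return round_number  # every test passed: ready to consider deployment
    raise RuntimeError("theory did not pass all tests within the round limit")


# Toy usage: the theory "improves" by one unit per round (an assumption).
state = {"theory_quality": 0}


def develop_theory() -> None:
    state["theory_quality"] += 1


tests = [
    lambda: state["theory_quality"] >= 2,  # least ambitious test
    lambda: state["theory_quality"] >= 3,  # more ambitious test
]

rounds_needed = conservative_metastrategy(develop_theory, tests)
print(rounds_needed)  # 3
```

The point of the sketch is that a more ambitious test is never run before the less ambitious ones pass, and any failure sends the process back to theory rather than to patching the system under test.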

I think that it's pretty clear that a competent civilization would follow this path, since it seems like the only one which leads to a good long-term outcome without taking unnecessary risks[2]. Of course, in itself that is an insufficient argument to prove that, in our actual civilization, the conservative metastrategy is the best for those concerned with AI risk. But, it is suggestive.

Beyond that, I won't lay out the case for the conservative metastrategy here. The interested reader can turn to 1 [LW · GW], 2 [LW · GW], 3 [LW(p) · GW(p)], 4 [LW · GW], 5 [LW · GW].

Incrementalist Metastrategy

The incrementalist metastrategy proceeds according to the following algorithm:

1. Find an advance in AI capability (by any means, including trial and error).

2. Find a way to align the new AI design (prioritizing solutions that you expect to scale further).

3. Validate alignment using a combination of empirical tests and interpretability tools.

4. If validation fails, go back to step 2.

5. If possible, deploy an AI defense system using the current level of capabilities.

6. Go to step 1.

This is (more or less) the metastrategy favored by adherents of prosaic alignment. In particular, this is what the relatively safety-conscious actors involved with leading AI labs present as their plan.
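For comparison, the incrementalist loop can be sketched in the same way. Again this is a hedged illustration, not anyone's actual process: all four callables are hypothetical placeholders, and the loop stops when `find_advance` returns `None` purely so that the example terminates (the algorithm as stated loops indefinitely).

```python
from typing import Callable, Dict, List, Optional


def incrementalist_metastrategy(
    find_advance: Callable[[], Optional[str]],
    align: Callable[[str], None],
    validate: Callable[[str], bool],
    can_deploy_defense: Callable[[str], bool],
) -> List[str]:
    """Return the designs at which a defense system could be deployed."""
    deployed: List[str] = []
    while True:
        design = find_advance()  # step 1: capabilities come first
        if design is None:
            return deployed  # termination is a modeling convenience only
        align(design)  # step 2
        while not validate(design):  # steps 3-4: retry alignment until validated
            align(design)
        if can_deploy_defense(design):  # step 5
            deployed.append(design)
        # step 6: loop back to step 1


# Toy usage with made-up designs and a made-up validation rule.
advances = iter(["gen-1", "gen-2"])
attempts: Dict[str, int] = {}


def find_advance() -> Optional[str]:
    return next(advances, None)


def align(design: str) -> None:
    attempts[design] = attempts.get(design, 0) + 1


def validate(design: str) -> bool:
    return attempts[design] >= 2  # assumption: the second attempt always passes


def can_deploy_defense(design: str) -> bool:
    return design == "gen-2"  # assumption: only the later design is capable enough


deployed = incrementalist_metastrategy(find_advance, align, validate, can_deploy_defense)
print(deployed)  # ['gen-2']
```

Note the structural difference from the conservative loop: here the capability advance already exists before any alignment work on it begins, and the whole procedure is only as good as `validate`.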

There are 3 main mutually reinforcing problems with putting our hopes in this metastrategy, which I discuss below. There are 2 aspects to each problem: the "design" aspect, which is what would happen if the best version of the incrementalist metastrategy were implemented, and the "implementation" aspect, which is what happens in AI labs in practice (even when they claim to follow the incrementalist metastrategy).

Information Security

Design

If a new AI capability is found in step 1, and the knowledge is allowed to propagate, then irresponsible actors will continue to compound it with additional advances before the alignment problem is solved on the new level. Ideally, either the new capability should remain secret at least until the entire iteration is over, or government policy should prevent any actor from subverting the metastrategy, or some sufficient combination of both needs to be in place. Implementing the above involves major institutional and political challenges, which undermines the feasibility of the entire metastrategy.

Now, one could argue that the same problem exists with the conservative metastrategy: after all, some theoretical discoveries can also be used by irresponsible actors for dangerous AI capability advances. However, when it comes to theoretical research, the problem only shows up in some cases and usually requires additional steps by irresponsible actors (going from theory to practice). Moreover, inasmuch as dangerous AI capability advances only happen when they're grounded in solid theory, there is more hope that the risks will be transparent, predictable and avoidable.

Hence, while the conservative metastrategy also calls for regulation against irresponsible actors, the entire approach allows for graceful degradation when this part fails. On the other hand, the incrementalist metastrategy calls for working directly on the sort of research which presents the most risk: opaque AI designs discovered by trial-and-error and selected only for their capabilities. This is comparable to the dubious rationale behind gain-of-function research.

Implementation

In practice, even "safety conscious" AI labs share a lot of technical details about their research. Even when they don't, their employees are not selected for outstanding trustworthiness and understanding of the risks. Many of them might ultimately continue to other organizations and disseminate the knowledge, even when nominally bound by an NDA[3]. I also doubt they maintain a very high standard of cybersecurity. All this is in sharp contrast to organizations which actually take secrecy seriously (military and government bodies dealing with classified information).

See also: Six Dimensions of Operational Adequacy [LW · GW]

Measuring Alignment

Design

Step 3 in the incrementalist metastrategy requires validating alignment. But, how can we tell whether an AI is aligned? What does it even mean for it to be aligned? One possibility is the economic criterion: "the AI is aligned when it behaves close enough to the designer's intent to be a profitable product". This must be appealing to company executives. However, under this definition there might be abrupt changes in alignment as a function of capability progress (or just time) that undermine the entire case for incrementalism.

The latter is true even if capability progress is perfectly smooth. For example, imagine agentic AIs that go along with our wishes because they know that they are within our power, right until they aren't. We gradually hand over the entire economy to AIs, then one day the AIs decide it's time to get rid of the humans. Even if some problems become apparent in advance, at some point it will be very hard to stop/slow the tide in the face of economic incentives.

Even with the best intentions, measuring alignment is hard. One difficulty is deceptive alignment [LW · GW]: agentic AI that strategically searches for ways to appear aligned because this appearance is correlated with utility (for obvious reasons) under its internal world-model. But even if the AI is not deceiving us on purpose, we might still select an AI design whose behavior locally appears aligned but whose internal reasoning is very different from what we expect, or we can end up with behaviors that are too complicated to interpret. See Wentworth's article [LW · GW] for more discussion.

A satisfactory solution to the measurement problem requires interpretability tools that can detect any unintended motivation inside the AI's reasoning, before you can complete even a single iteration. However, we are far from having such tools. Moreover, it's not clear for what kind of AI designs such tools exist even in principle. Worse, even if we had candidate tools, how would we know they work correctly without either a theoretical foundation or an alternative reliable method of measurement?

Implementation

AFAICT, AI labs just follow the economic criterion. We have no idea what kind of reasoning processes happen inside e.g. large language models and have no tools to determine that. When problems are found, they are patched as best as the companies know how, but some problems (jailbreaks, hallucinations) still don't have a general solution. I'm pointing this out not because these specific problems are important in themselves, but because it shows the companies don't actually follow the incrementalist metastrategy at all: despite the fact that their products are demonstrably misaligned in some sense, they continue to compound capability advances.

Unpredictable Progress

What happens if progress in AI capabilities fails to be smooth and predictable? (Which I believe will happen.) It creates two problems:

- When an innovation is found that substantially changes the design, previous alignment techniques might be rendered irrelevant, to such an extent that a working alignment technique for the new design cannot be a small modification of previous techniques. This gets us stuck in steps 2-3 for a dangerously long time, during which information can leak if the information security is less than perfect. Moreover, it might mean that a lot of previous work on alignment was wasted.

- When an innovation is found that substantially advances the capabilities, it creates a gap between SOTA AI and SOTA aligned AI. If this gap reaches a decisive strategic advantage, the result is catastrophic. If this gap doesn't reach a decisive strategic advantage in itself, there is still a risk that information leaks will allow irresponsible actors to compound the gap further.
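The second problem can be illustrated with a deliberately crude toy model. Everything in it is an assumption made for illustration: capability is a single number, aligned capability catches up at a fixed rate per step, and a "decisive strategic advantage" is a fixed gap threshold. It only shows that the same total progress is harmless when smooth and dangerous when it arrives in one jump.

```python
from typing import Optional, Sequence


def first_step_gap_exceeds(
    capability_jumps: Sequence[float],
    alignment_rate: float,
    threshold: float,
) -> Optional[int]:
    """Return the first step at which (frontier - aligned) >= threshold, else None.

    All quantities are hypothetical units with no empirical meaning.
    """
    frontier = 0.0  # capability of the most capable AI
    aligned = 0.0   # capability of the most capable aligned AI
    for step, jump in enumerate(capability_jumps):
        frontier += jump
        # Alignment work advances by at most alignment_rate per step and can
        # never exceed the frontier it is trying to catch up with.
        aligned = min(frontier, aligned + alignment_rate)
        if frontier - aligned >= threshold:
            return step
    return None


smooth = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]  # total progress: 6 units
jumpy = [0.0, 0.0, 6.0, 0.0, 0.0, 0.0]   # same total, delivered in one jump

print(first_step_gap_exceeds(smooth, alignment_rate=1.0, threshold=3.0))  # None
print(first_step_gap_exceeds(jumpy, alignment_rate=1.0, threshold=3.0))   # 2
```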

The combination of unpredictable progress with information security failures can create an extremely dangerous situation where responsible actors need to play difficult catch-up with irresponsible actors, even when the irresponsible actors are too weak to "win" the race otherwise. This consideration is in addition to the risk of a "responsible" actor ending the world by a lab experiment.

I don't distinguish between "design" and "implementation" here, because the incrementalist metastrategy has only been defended by AI labs (even nominally) for a few years, and there haven't been many large and unexpected innovations during this time[4].

Butlerian Metastrategy

The Butlerian metastrategy is: postpone TAI indefinitely. Essentially, it requires creating an AI defense system without the help of TAI[5].

This metastrategy doesn't have many advocates, but I still find it useful to have a name for it. Most metastrategies exist on a continuum where the Butlerian metastrategy is an (extreme) endpoint, depending on how bullish they are on stopping irresponsible forms of AI research and for how long.

The major problems with this metastrategy are:

- Creating an adequate AI defense system without the help of TAI is extremely difficult, probably much more so than solving the technical alignment problem.

- The best case scenario in this metastrategy is a future without TAI, which means giving up on enormous value.

Transhumanist Metastrategy

The transhumanist metastrategy proceeds according to the following algorithm:

1. As much as possible, stop all work on AI, or at least anything with capability implications.

2. Create technology for substantially augmenting human intelligence.

3. Delegate the rest of the problem to the augmented humans (who would presumably solve it much better than anything we could come up with).

This metastrategy is advocated [LW(p) · GW(p)] by Eliezer Yudkowsky.

There are 3 reasons I'm pessimistic about this metastrategy:

- Substantially augmenting adult human intelligence seems to me harder than solving the technical AI alignment problem. I have a plan [AF · GW] for the latter, and I'm not sure there is any serious plan for the former. (Although, see this [LW · GW].) Augmenting intelligence by epilogenics [LW · GW] seems more tractable, but will take too long.

- It's not clear whether this metastrategy is safe. On the one hand, we have an aligned starting point (baseline humans). On the other hand, we are dealing with a giant undocumented mess of spaghetti code (the design of the human brain). It seems easy to imagine that changes which strongly increase intelligence can also substantially mutate values in ways that are critical but difficult to detect (among other reasons, because the augmented humans will be incentivized to hide it). We might end up handing the future to e.g. a race of superintelligent psychopaths.

- The sort of experimentation on humans that would be required is (I think) illegal in most countries, and legalizing it is a major challenge, especially under a time limit.

Inasmuch as augmented humans are safe, this metastrategy has the advantage that (in contrast to incrementalism) it doesn't burn the commons by advancing AI capability. Therefore, it might make sense to work on this and the conservative approach in parallel.

[1] There is some arbitrary choice about what constitutes "high-level". It would be more precise to say that there are multiple layers or even a spectrum between "general direction" and "full detailed solution". For example, Physicalist Superimitation [AF · GW] is much more detailed than just value learning, but is still insufficiently detailed for actual implementation.

[2] Some critics doubt that the sort of mathematical theory I pursue is possible at all. To those I would point out that a competent civilization would at least make a serious attempt to create that theory before trying something else.

[3] I think that enforcing an NDA is pretty hard: the company doesn't have an easy way to know the NDA is violated, and even if it suspects that, proving it is hard, especially given that the technical details mutate along the way.

[4] As far as I know. If there have been but they were kept secret, then, kudos!

[5] In general, an AI defense system can be preventative i.e. aimed at preventing unaligned AI from being created rather than disabling it after it is created. It would definitely have to be preventative in this case.

13 comments

comment by RogerDearnaley (roger-d-1) · 2024-01-01T02:39:22.863Z · LW(p) · GW(p)

> On the one hand, we have an aligned starting point (baseline humans).

Humans are not aligned. [LW · GW] Joseph Stalin was not aligned with the utility of the citizenry of Russia. Humans of roughly equal capabilities can easily be allied with (in general, all you need to do is pay them a decent salary, and have a capable law enforcement system as a backup). This is not the same thing as an aligned AI, which is completely selfless, and cares only about what you want — that is the only thing that's still safe when much smarter than you. In a human, that would be significantly past the criteria for sainthood. Once a human or group of humans are enhanced to become dramatically more capable and thus more powerful than the rest of human culture (including its law enforcement), power corrupts, and absolute power corrupts absolutely.

The Transhumanist Metastrategy consists of building known-to-be-unaligned superintelligences that have full human rights, so you can't even try boxing them. You just created a superintelligent living species sharing our niche and motivated by standard Evolutionary Psychology drives. As long as none of the transhumans are sociopaths, you might manage for a decade or two while the transhumans and humans are still connected by bonds of friendship and kinship, but after a generation or so, the inevitable result is Biology 101: the superior species out-competes the inferior species. Full stop, end of human race. Which is of course fine for the transhumans, until some proportion of them upgrade themselves further, and out-compete the rest. And so on, in an infinite arms race, or until they have the sense to ban making the same mistake over and over again.

↑ comment by rotatingpaguro · 2024-01-13T05:09:05.803Z · LW(p) · GW(p)

I heard that Ashkenazi Jews are 1 SD up on IQ, which is about the kind of improvement we are talking about with embryo selection. I do not have the impression they are bad towards other humans. Do you think otherwise?

To be clear, I am not trying to gotcha you with antisemitism, and I totally understand if you want to avoid discussion because this is a politically charged topic.

↑ comment by RogerDearnaley (roger-d-1) · 2024-01-15T05:24:57.894Z · LW(p) · GW(p)

I wouldn't expect this issue to kick in significantly until you were roughly twice as intelligent as the average detective in law enforcement: you would need a significant effective power differential, such that you could pretty reliably commit crimes and actually get away with them (or come up with dastardly tactics that haven't been criminalized because no one else thought of them). If that statistic is accurate, then 1 SD is ~15 IQ points, which isn't going to cut it. If we had accurate crime records for a decent sample size of people with IQ 200–250 (at least 7 SD) then we could have a discussion; otherwise I'm extrapolating from effects of other much larger power differentials produced mostly by causes other than raw IQ, like handing people executive power.

↑ comment by espoire · 2024-08-01T05:11:03.113Z · LW(p) · GW(p)

Agreed.

...which would imply that dangers should be minimal from either slow augmentation which has time to become ubiquitous in the gene pool, or from limited augmentation that does not exceed a few standard deviations from the current mean. Assuming, of course, that our efforts don't cause unwanted values shift.

I think all currently progressing human enhancement projects of which I am aware are not expecting gains so large as to be dangerous, and are therefore worthy of support.

comment by Roman Leventov · 2024-01-03T17:34:08.269Z · LW(p) · GW(p)

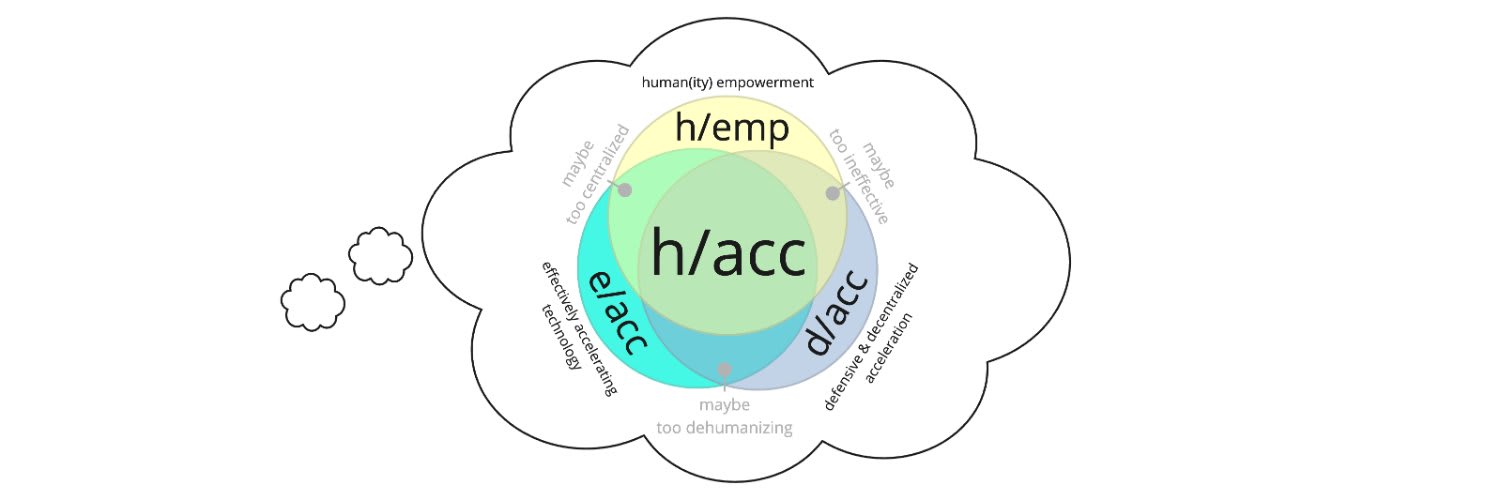

I think this metastrategy classification is overly simplified to the degree that I'm not sure it is net helpful. I don't see how Hendrycks' "Leviathan safety", Drexler's Open Agency Model, Davidad's OAA, Bengio's "AI pure scientist" and governance proposals (see https://slideslive.com/39014230/towards-quantitative-safety-guarantees-and-alignment), Kaufmann and Leventov's Gaia Network, AI Objectives Institute's agenda (and related Collective Intelligence Project's), Conjecture's CoEms, OpenAI's "AI alignment scientist" agenda, and Critch's h/acc (and related janus et al.'s Cyborgism) straightforwardly land on this classification, at least not without losing some important nuance.

Furthermore, there is also the missing dimension of [technical strategy, organisational strategy, governance and political strategy] that could perhaps recombine to some degree.

Finally, in the spirit of "passing ideological Turing test" and "describing, not persuading" norms, it would be nice I think to include the criticism of the "conservative strategy" to the same level of fidelity that other metastrategies are criticised here, even if you or others discussed that in some other posts.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2024-03-22T15:30:10.231Z · LW(p) · GW(p)

Can you link to what "h/acc" is about/stands for?

↑ comment by Roman Leventov · 2024-03-24T13:24:12.463Z · LW(p) · GW(p)

Humane/acc, https://twitter.com/AndrewCritchPhD

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2024-03-29T07:46:25.436Z · LW(p) · GW(p)

For people who (like me immediately after reading this reply) are still confused about the meaning of "humane/acc", the header photo of Critch's X profile is reasonably informative.

comment by Seth Herd · 2024-01-02T01:14:06.474Z · LW(p) · GW(p)

Great post in identifying metastrategies. I think we need more metastrategic thinking.

I think you're conflating two separable factors in the conservative strategy: taking longer, and leaning on math as the primary route to good alignment work. Math being better/safer seems to be a very common assumption, and it seems baseless to me (and therefore creates a communication gap with a world that doesn't share your love of pure math).

I doubt that you can math all the way from atoms to human values in an implementable form. It's not at all apparent that a mathematically precise notion of agency would be helpful in solving the technical problems of getting an actual implemented AGI to do things we like. If you're thinking of applying that rigor to develop algorithmic/Bayesian AGI, I think it's true that Deep Learning Systems Are Not Less Interpretable Than Logic/Probability/Etc. [LW · GW], and that the same insight applies to making them provably safe. I see the appeal of the intuition, and I think it's just an intuition.

I think there's a good bit of mathophilia or maybe physics envy going on in alignment. But different fields lean on different techniques and types of analysis. Machine learning, AI design, computer science, and psychology seem to me like more useful fields for aligning AGI, however long we take to do it. All of those fields use math but don't heavily rely on rigorously provable formulations of their problems.

Separately, it's not obvious to me that a "competent civilization" would pursue the conservative metastrategy. You're assuming a "competent civilization" would be long-termist and roughly utilitarian in its values. Humans tend to not be. If that's what you mean by "competent civilization", then fine, they would be more cautious.

There's lots more to say on both of those points, but it will be a while before I get around to writing a full post on either, so here are those thoughts. Thanks again for a useful conceptualization of metastrategy.

↑ comment by rotatingpaguro · 2024-01-13T05:14:50.455Z · LW(p) · GW(p)

> All of those fields use math but don't heavily rely on rigorously provable formulations of their problems.

Chicken and egg: is this evidence they are not mature enough to make friendly AI, or evidence that friendly AI can be made with that current level of rigor?

↑ comment by Seth Herd · 2024-01-13T23:17:23.707Z · LW(p) · GW(p)

I agree; practices in other fields aren't evidence for the right approach to AGI.

My point is that there's no evidence that math IS the right approach, just loose intuitions and preferences.

And the arguments for it are increasingly outdated. Yudkowsky originated those arguments, and he now thinks that stopping current AGI research, starting over and doing better math is the best approach, but still >99% likely to fail.

Arguments against less-rigorous, more-ML-and-cogsci-like approaches are loose and weak. Therefore, those approaches are pretty likely to offer better odds of success than Yudkowsky's plan at estimated 99%-plus failure. This is a big claim, but I'm prepared to make it and defend it. That's the post I'm working on. In short, claims about the fragility of values and capabilities generalizing better than alignment are based on intuitions, and the opposite conclusions are just as easy to argue for. This doesn't say which is right, it says that we don't know yet how hard alignment is for deep network based AGI.

comment by Chris_Leong · 2023-12-31T15:51:58.024Z · LW(p) · GW(p)

Great post! The concept of meta-strategies seems very useful to add to my toolkit.
