References & Resources for LessWrong

post by XiXiDu · 2010-10-10T14:54:13.514Z · 104 comments


A list of references and resources for LW

Updated: 2011-05-24

- F = Free

- E = Easy (adequate for a low educational background)

- M = Memetic Hazard (controversial ideas or works of fiction)

Summary

Do not flinch: most of LessWrong can be read and understood by people with less than a secondary-school education. (And Khan Academy followed by BetterExplained, plus the help of Google and Wikipedia, ought to be enough to let anyone read anything directed at the scientifically literate.) Most of these references aren't prerequisites, and only a small fraction are pertinent to any particular post on LessWrong. Do not be intimidated; if all this sounds too long, just go ahead and start reading the Sequences. LessWrong is much easier to understand than this list makes it look.

Nevertheless, as it says in the Twelve Virtues of Rationality, scholarship is a virtue, and in particular:

It is especially important to eat math and science which impinges upon rationality: Evolutionary psychology, heuristics and biases, social psychology, probability theory, decision theory.

Contents

LessWrong.com

This list is hosted on LessWrong.com, a community blog devoted to refining the art of human rationality: the art of thinking. If you follow the links below, you'll learn more about this community. If your aim is to get what you want, if you want to win, it is one of the most important resources you'll ever come across. It shows you that there is more to most things than meets the eye, but more often than not much less than you think. It shows you that even smart people can be completely wrong, but that most people are not even wrong. It teaches you to be careful in what you emit and skeptical of what you receive. It doesn't tell you what is right; it teaches you how to think and how to become less wrong. And doing so is in your own self-interest, because it helps you attain your goals and achieve what you want.

- About Less Wrong FE

- FAQ FE

- Less Wrong wiki (The wiki about rationality.) F

- Less Wrong discussion area F

- The Sequences (The most systematic way to approach the Less Wrong archives.) FE

- Sequences in Alternative Formats (HTML, Markdown, PDF, and ePub versions.) FE

- List of all articles from Less Wrong (In chronological order.) F

- Graphical Visualization of Major Dependencies (Dependencies between Eliezer Yudkowsky posts.) FE

- Eliezer's Posts Index (Autogenerated index of all Yudkowsky posts in chronological order.) FE

- Eliezer Yudkowsky's Homepage (Founder of LW and top contributor.) FE

- Less Wrong Q&A with Eliezer Yudkowsky: Video Answers FE

- An interview with Eliezer Yudkowsky (Parts 1, 2 and 3) FE

- Eliezer Yudkowsky on Bloggingheads.tv FE

- Best of Rationality Quotes 2009/2010 FE

- Less Wrong Rationality Quotes (Sorted by points. Created by DanielVarga.) FE

- Comment formatting FE

A few articles describing in detail what you can expect from reading Less Wrong, why it is important, what you can learn, and how it helps you.

- Yes, a blog. FE

- What I've learned from Less Wrong FE

- Goals for which Less Wrong does (and doesn't) help FE

- Rationality: Common Interest of Many Causes FE

- How to Save the World FE

- Reflections on rationality a year out FE

Artificial Intelligence

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultra-intelligent machine could design even better machines; there would then unquestionably be an "intelligence explosion," and the intelligence of man would be left far behind. — I. J. Good, "Speculations Concerning the First Ultraintelligent Machine"

- AI Foom Debate F

- Intelligence explosion FE

- Why an Intelligence Explosion is Probable F

- The Nature of Self-Improving Artificial Intelligence (Audio) F

- SIAI Reading List: Artificial Intelligence and Technology Acceleration Skeptics

- SIAI Reading List: Artificial General Intelligence and the Singularity

- So You Want To Be A Seed AI Programmer F

- Levels of Organization in General Intelligence F

- Artificial Intelligence: A History of Ideas and Achievements F

- Publications | Singularity Institute for Artificial Intelligence F

- Some Singularity, Superintelligence, and Friendly AI-Related Links F

- Introduction to Artificial Intelligence (index) FE

The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else. — Eliezer Yudkowsky, Artificial Intelligence as a Positive and Negative Factor in Global Risk

- Recommended Reading for Friendly AI Research F

- A review of proposals toward safe AI F

- Friendly AI: a bibliography F

- Creating Friendly AI 1.0 (The Analysis and Design of Benevolent Goal Architectures) F

- What is Friendly AI? FE

- Knowability Of FAI F

- Bostrom & Yudkowsky, "The Ethics of Artificial Intelligence" (2011) F

- Paperclip maximizer FE

- From mostly harmless to civilization-threatening: pathways to dangerous artificial general intelligences FE

- A compact list of Eliezer Yudkowsky's positions (Reasons to take friendly AI seriously.) FE

- The Basic AI Drives F

- Catastrophic risks from artificial intelligence F

- Super-intelligence does not imply benevolence (Videos) F

- Coherent Extrapolated Volition (CEV) FM

- Shaping the Intelligence Explosion (Anna Salamon at Singularity Summit 2009) FE

- Machine Ethics is the Future F

- Who’s Who in Machine Ethics F

- Mitigating the Risks of Artificial Superintelligence F

Not essential, but a valuable addition for anyone who's more than superficially interested in AI and machine learning.

- A Gentle Introduction to the Universal Algorithmic Agent AIXI F

- School in Logic, Language and Information (ESSLLI) F

- Good Freely Available Textbooks on Machine Learning F

- Learning About Statistical Learning

- Learning about Machine Learning, 2nd Ed.

- Bayesian Reasoning and Machine Learning F

The term “Singularity” had a much narrower meaning back when the Singularity Institute was founded. Since then the term has acquired all sorts of unsavory connotations. — Eliezer Yudkowsky

- Three Major Singularity Schools, Eliezer Yudkowsky FE

- The Singularity FAQ FE

- Brief History of Intellectual Discussion of Accelerating Change FE

- The Singularity: A Philosophical Analysis FE

- Special Report: The Singularity (IEEE Spectrum) FEM

- The Coming Technological Singularity (The original essay by Vernor Vinge.) FEM

- Technological singularity FEM

- The Singularity Is Near, Ray Kurzweil EM

- Robot: Mere Machine to Transcendent Mind E

- Mind Children: The Future of Robot and Human Intelligence E

- There’s More to Singularity Studies Than Kurzweil FE

- Tech Luminaries Address Singularity (IEEE Spectrum, June 2008.) FEM

- Economics Of The Singularity (R. Hanson, 2008.) FE

- What did you learn about the singularity today? F

- The Singularity Hypothesis: A Scientific and Philosophical Assessment (Bibliography) FE

- Yes, The Singularity is the Biggest Threat to Humanity FE

- What should a reasonable person believe about the Singularity? FE

- An overview of models of technological singularity F

- Hard Takeoff Sources F

Heuristics and Biases

One of the painful things about our time is that those who feel certainty are stupid, and those with any imagination and understanding are filled with doubt and indecision. — Bertrand Russell

Ignorance more frequently begets confidence than does knowledge. — Charles Darwin

The heuristics and biases program in cognitive psychology tries to work backward from biases (experimentally reproducible human errors) to heuristics (the underlying mechanisms at work in the brain).

- Cognitive biases, common misconceptions, and fallacies. FE

- Cognitive Biases Potentially Affecting Judgment of Global Risks F

- Ugh fields (The Ugh Field forms a self-shadowing blind spot) FE

- The Apologist and the Revolutionary (Not being aware of your own disabilities.) FE

- Generalizing From One Example FE

- Self-fulfilling correlations F

- The scourge of perverse-mindedness FE

- Dunning–Kruger effect FE

- Procrastination FE

Mathematics

Here's a phenomenon I was surprised to find: you'll go to talks, and hear various words, whose definitions you're not so sure about. At some point you'll be able to make a sentence using those words; you won't know what the words mean, but you'll know the sentence is correct. You'll also be able to ask a question using those words. You still won't know what the words mean, but you'll know the question is interesting, and you'll want to know the answer. Then later on, you'll learn what the words mean more precisely, and your sense of how they fit together will make that learning much easier. The reason for this phenomenon is that mathematics is so rich and infinite that it is impossible to learn it systematically, and if you wait to master one topic before moving on to the next, you'll never get anywhere. Instead, you'll have tendrils of knowledge extending far from your comfort zone. Then you can later backfill from these tendrils, and extend your comfort zone; this is much easier to do than learning "forwards". (Caution: this backfilling is necessary. There can be a temptation to learn lots of fancy words and to use them in fancy sentences without being able to say precisely what you mean. You should feel free to do that, but you should always feel a pang of guilt when you do.) — Ravi Vakil

- Habits of Mathematical Minds FE

- How to Develop a Mindset for Math FE

- How to learn math? FE

- How Do You Go About Learning Mathematics? (Here is another version.) FE

- How to Read Mathematics F

- A Learning Roadmap: From high-school to mid-undergraduate studies F

- The Khan Academy (World-class education for free (1800+ videos).) FE

- Just Math Tutorials (FREE math videos for the world!) F

- BetterExplained (There’s always a better way to explain a topic.) FE

- Steven Strogatz on the Elements of Math (A very basic introduction to mathematics.) FE

- Mathematics Illuminated F

- The Princeton Companion to Mathematics (Reference for anyone with a serious interest in mathematics.)

- Concrete Mathematics: A Foundation for Computer Science (A solid and relevant base of mathematical skills.)

- The Art and Craft of Problem Solving

- Mathematical Logic F

- Free Mathematics eBooks F

- Free Online Mathematics Textbooks F

- Interactive Mathematics Miscellany and Puzzles F

- math.stackexchange.com (Q&A for people studying math at any level.) F

- MathOverflow F

- wolframalpha.com (Check your math!) F

Probabilities express uncertainty, and it is only agents who can be uncertain. A blank map does not correspond to a blank territory. Ignorance is in the mind. — Eliezer Yudkowsky

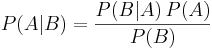

Math is fundamental, and not just for LessWrong. Bayes’ theorem in particular is essential for understanding the reasoning behind most of the writing on LW.
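As a quick taste of what the Bayes material below covers, here is a minimal sketch of the arithmetic, P(H | E) = P(E | H) P(H) / [P(E | H) P(H) + P(E | ¬H) P(¬H)], using the mammography numbers from "An Intuitive Explanation of Bayes' Theorem" (1% prior, 80% true-positive rate, 9.6% false-positive rate). The function name `posterior` is our own illustration, not part of any library.

```python
def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H | E) for a binary hypothesis H via Bayes' theorem."""
    # P(E and H): how often the evidence shows up when H is true.
    joint_h = p_e_given_h * prior
    # P(E and not-H): how often the evidence shows up anyway (false positives).
    joint_not_h = p_e_given_not_h * (1.0 - prior)
    # Law of total probability: P(E) = P(E and H) + P(E and not-H).
    return joint_h / (joint_h + joint_not_h)


# Mammography example: 1% prior, 80% true-positive rate, 9.6% false-positive rate.
p = posterior(prior=0.01, p_e_given_h=0.80, p_e_given_not_h=0.096)
print(f"P(cancer | positive) = {p:.3f}")  # prints P(cancer | positive) = 0.078
```

Even after a positive test the posterior stays under 8%, because false positives from the large healthy group (0.09504) outnumber true positives (0.008) roughly twelve to one.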

- Probability is in the Mind FE

- My Bayesian Enlightenment FE

- What is Bayesianism FE

- Bayes' Theorem Illustrated (My Way) FE

- An Intuitive (and Short) Explanation of Bayes’ Theorem FE

- An Intuitive Explanation of Eliezer Yudkowsky’s Intuitive Explanation of Bayes’ Theorem FE

- An Intuitive Explanation of Bayes' Theorem FE

- A Technical Explanation of Technical Explanation (More Bayes. Many writings rely on this page.) F

- Bayes' theorem FE

- You, A Bayesian FE

- Visualizing Bayes’ theorem FE

- The Nature of Probability (Video talk between Eliezer Yudkowsky and the statistician Andrew Gelman.) FE

- Probability Theory: The Logic of Science, E. T. Jaynes (Free draft available.)

- Probability Theory With Applications in Science and Engineering, E. T. Jaynes F

- Bayesian Probability Theory (Bayesian approach) vs. Frequentist Probability Theory (Frequentist approach) F

- Probability Theory As Extended Logic F

- Introduction to Bayesian Statistics, William M. Bolstad

- Bayesian statistics (Scholarpedia) F

- Bayesian probability (Wikipedia) F

- Bayes’ Theorem (A whole crowd on the blogs that seems to see more in Bayes’s theorem.) F

- Bayesian Epistemology (Stanford Encyclopedia of Philosophy) F

- Monty Hall problem formally proven using Bayes' theorem F

- Monty Hall, Monty Fall, Monty Crawl F

- The Bayesian revolution of the sciences F

- Bayesian data analysis F

- What to believe: Bayesian methods for data analysis F

- Probability Booklist

- An Introduction to Probability Theory and Its Applications

- Aumann's agreement theorem (Agreeing to Disagree) F

- Mr. Spock is Not Logical FE

- Logic FE

- Mathematical logic FE

- Introduction to Boolean algebra F

- Boolean algebra F

- Boolean logic F

- First-order logic F

- First-Order Logic, Raymond M. Smullyan

- Propositional calculus F

- Introduction to Mathematical Logic F

- Introduction to Logic, Alfred Tarski

- Introduction to Mathematical Logic, Alonzo Church

- Possible Worlds: An Introduction to Logic and Its Philosophy F

- Gödel Without Tears F

- Second-order logic F

- An Introduction to Non-Classical Logic

- Logical Labyrinths

- Stephen Cook's lecture notes in computability and logic F

- How to Prove It: A Structured Approach

- Proofs are Programs: 19th Century Logic and 21st Century Computing F

- Mathematics and Plausible Reasoning: Induction and analogy in mathematics

- The Cartoon Guide to Löb's Theorem F

- Symbolic Logic: An Accessible Introduction to Serious Mathematical Logic F

All the limitative theorems of metamathematics and the theory of computation suggest that once the ability to represent your own structure has reached a certain critical point, that is the kiss of death: it guarantees that you can never represent yourself totally. Gödel’s Incompleteness Theorem, Church’s Undecidability Theorem, Turing’s Halting Theorem, Tarski’s Truth Theorem — all have the flavour of some ancient fairy tale which warns you that “To seek self-knowledge is to embark on a journey which … will always be incomplete, cannot be charted on any map, will never halt, cannot be described.” — Douglas Hofstadter 1979

- Foundations of mathematics FE

- Mathematics: A Very Short Introduction E

- What Is Mathematics? An Elementary Approach to Ideas and Methods F

- The Mathematical Experience

- What is Mathematics: Gödel's Theorem and Around F

- Metamath (Constructs mathematics from scratch, starting from ZFC set theory axioms) F

- The Mathematical Atlas (Clickable Map of Mathematics) F

- Introductory Mathematics: Algebra and Analysis (Bridges the gap between school & university work.)

- Naive Set Theory

- Proofs from THE BOOK, Martin Aigner

- Principles of Mathematical Analysis, Walter Rudin

- A Classical Introduction to Modern Number Theory

- Reading List: Graph Isomorphism

- A Measure Theory Tutorial (Measure Theory for Dummies) F

- Topology Without Tears F

- Category Theory for Beginners F

- Category Theory for the Mathematically Impaired (A Short Reading List) F

- TheCatsters' Category Theory Videos F

- Foundations of Algebraic Geometry F

- Elements of Information Theory

- The “no self-defeating object” argument, and the vagueness paradox F

- Vanity and Ambition in Mathematics (A few posts by multifoliaterose.) F

Decision theory

It is precisely the notion that Nature does not care about our algorithm, which frees us up to pursue the winning Way - without attachment to any particular ritual of cognition, apart from our belief that it wins. Every rule is up for grabs, except the rule of winning. — Eliezer Yudkowsky

Remember that any heuristic is bound to certain circumstances. If you want X from agent Y, and Y only gives X to devoted irrationalists, then becoming a devoted irrationalist is not irrational. Under certain circumstances, what is irrational may be rational, and what is rational may be irrational. Paul K. Feyerabend said: "All methodologies have their limitations and the only ‘rule’ that survives is ‘anything goes’."

- Decision theory F

- Decision Theory (LW Wiki) F

- Timeless Decision Theory, by Eliezer Yudkowsky F

- What is Wei Dai's Updateless Decision Theory? F

- Good and Real (Rationality & Decision Theory)

- Newcomb's paradox F

- Newcomb's Problem and Regret of Rationality F

- The Meta-Newcomb Problem (A self-undermining variant.) F

- Pascal's Mugging (Finite version of Pascal's Wager.) F
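Newcomb's problem, listed above, is the standard test case for "every rule is up for grabs, except the rule of winning." Below is a minimal sketch of the naive expected-value comparison, assuming the usual payoffs ($1,000 in the transparent box; $1,000,000 in the opaque box only if the predictor foresaw one-boxing) and a predictor of accuracy `p`. The function names are hypothetical. This conditional-expectation framing is the one that favors one-boxing; causal decision theory rejects it and takes both boxes, a disagreement that is part of the motivation for the alternatives (TDT, UDT) listed above.

```python
SMALL = 1_000        # transparent box: always contains $1,000
BIG = 1_000_000      # opaque box: $1,000,000 only if one-boxing was predicted


def ev_one_box(p: float) -> float:
    """Expected payoff of taking only the opaque box, given predictor accuracy p."""
    # With probability p the predictor foresaw one-boxing and filled the box.
    return p * BIG


def ev_two_box(p: float) -> float:
    """Expected payoff of taking both boxes, given predictor accuracy p."""
    # With probability 1 - p the predictor wrongly expected one-boxing and filled it.
    return (1 - p) * BIG + SMALL


for p in (0.5, 0.9, 0.99):
    print(f"accuracy {p}: one-box {ev_one_box(p):,.0f}, two-box {ev_two_box(p):,.0f}")
```

Under this framing, one-boxing has the higher expected payoff whenever p > 0.5005, i.e. for any predictor even slightly better than a coin flip.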

Game theory is the study of the ways in which strategic interactions among economic agents produce outcomes with respect to the preferences (or utilities) of those agents, where the outcomes in question might have been intended by none of the agents. — Stanford Encyclopedia of Philosophy

- Game theory (Wikipedia) F

- Game Theory (Stanford Encyclopedia of Philosophy) F

- Strategy F

- Mixed strategy Nash equilibrium FE

- Nash equilibrium F

- Prisoner's dilemma F

- Gambit: Software Tools for Game Theory F

- Game Theory with Ben Polak F

- Game Theory — Open Yale Courses F

- Game Theory 101 F

- Von Neumann, Morgenstern, and the Creation of Game Theory: From Chess to Social Science F

- Game theory: mathematics as metaphor F

- The History of Combinatorial Game Theory F
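To make "Nash equilibrium" concrete, here is a minimal sketch that enumerates the pure-strategy profiles of a two-player game and keeps those where neither player can do strictly better by deviating alone. The payoff numbers are an assumed, conventional Prisoner's Dilemma (negative years in prison); any matrix with the same ordering of payoffs gives the same result. The helper name `pure_nash_equilibria` is hypothetical.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# PAYOFFS[(row_action, col_action)] = (row player's utility, column player's utility).
# Assumed illustrative values: negative years in prison.
PAYOFFS = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}


def pure_nash_equilibria(payoffs, actions):
    """Return every pure-strategy profile where no player gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, repeat=2):
        row_u, col_u = payoffs[(row, col)]
        # No alternative row action does strictly better against this column action.
        row_ok = all(payoffs[(alt, col)][0] <= row_u for alt in actions)
        # No alternative column action does strictly better against this row action.
        col_ok = all(payoffs[(row, alt)][1] <= col_u for alt in actions)
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria


print(pure_nash_equilibria(PAYOFFS, ACTIONS))  # [('defect', 'defect')]
```

Mutual defection is the only equilibrium even though mutual cooperation leaves both players better off, which is exactly what makes the dilemma a dilemma.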

Programming

With Release 33-9117, the SEC is considering substitution of Python or another programming language for legal English as a basis for some of its regulations. — Will Wall Street require Python?

Programming knowledge is not required for LessWrong, but you should be able to read the most basic pseudocode, since you will come across snippets of code in discussions and in top-level posts outside the main Sequences.

Python is a general-purpose high-level dynamic programming language.

- python.org F

- Dive Into Python (Python from novice to pro) F

- learnpythonthehardway.org F

- A Byte of Python F

- Python in a Nutshell, Second Edition

- Python for Software Design

- Python Cookbook

- Learning Python, 3rd Edition

- Free eBook Programming Tutorial for Python Games! F

- Probability and Statistics for Python programmers F

Haskell is a standardized, general-purpose purely functional programming language, with non-strict semantics and strong static typing.

- haskell.org F

- hackage.haskell.org/platform/ (All you need to get up and running.) F

- Learn Haskell in 10 minutes F

- Learn You a Haskell for Great Good! F

- Programming in Haskell

- Real World Haskell F

- The Haskell Road to Logic, Maths and Programming

- Pearls of Functional Algorithm Design (Techniques of reasoning about programs in an equational style.)

- Write Yourself a Scheme in 48 Hours F

- Haskell tutorial by Conrad Barski F

- Programming Language Pragmatics, Michael L. Scott

- Practical Foundations for Programming Languages F

- Structure and Interpretation of Computer Programs F

- How to Design Programs (An Introduction to Computing and Programming) F

- projecteuler.net (Learn programming and math by solving problems) F

- GitHub (Social Coding) F

- The FTP Site (Functional Programming) F

- Syntax and Semantics of Programming Languages

- Bootstrapping (compilers) F

- Low-level programming language F

- Assembly language F

- Quine (computing) (Self-producing program) F

- Probabilistic Programming FE

- A Field Guide to Genetic Programming F

Computer science

The introduction of suitable abstractions is our only mental aid to organize and master complexity. — Edsger W. Dijkstra

One of the fundamental premises on LessWrong is that a universal computing device can simulate every physical process, and therefore that the human brain, being a physical system, is computable and can in principle be reverse engineered. That is, intelligence and consciousness are substrate-neutral.
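The notion of a "universal computing device" can be made concrete with a Turing machine interpreter: one fixed program that runs any machine handed to it as a transition table. This is a minimal sketch only; the table format, the `run` function and the binary-increment machine `INCREMENT` are hypothetical illustrations, not taken from the resources below, and the sketch says nothing by itself about whether brains are computable.

```python
from typing import Dict, Tuple

# A transition table maps (state, symbol read) -> (symbol to write, move "L"/"R", next state).
Table = Dict[Tuple[str, str], Tuple[str, str, str]]

BLANK = "_"

# Hypothetical example machine: increment a binary number written on the tape.
INCREMENT: Table = {
    ("right", "0"): ("0", "R", "right"),      # scan right over the digits
    ("right", "1"): ("1", "R", "right"),
    ("right", BLANK): (BLANK, "L", "carry"),  # past the last digit: start adding 1
    ("carry", "1"): ("0", "L", "carry"),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "L", "halt"),       # 0 + carry = 1, done
    ("carry", BLANK): ("1", "L", "halt"),     # ran off the left end: new leading 1
}


def run(table: Table, tape: str, state: str = "right", max_steps: int = 10_000) -> str:
    """Simulate the machine described by `table` on `tape` until it reaches "halt"."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        # No general procedure can decide halting in advance, so cap the run.
        if steps >= max_steps:
            raise RuntimeError("step limit reached; machine may not halt")
        write, move, state = table[(state, cells.get(head, BLANK))]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


print(run(INCREMENT, "1011"))  # 1100
```

The interpreter never changes; only the table does. The step limit is there because, by Turing's halting theorem, there is no general way to know beforehand whether an arbitrary table will ever reach its halt state.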

- Computer science FE

- Introduction to Computer Science & Programming: Free Courses FE

- Exploring Computational Thinking FE

- What is computation? FE

- Complexity Explained: The Complete Series F

- Computation: Finite and Infinite Machines, Marvin Minsky

- Introduction to the Theory of Computation, Michael Sipser

- Code: The Hidden Language of Computer Hardware and Software, Charles Petzold E

- Programming Language Pragmatics, Michael L. Scott

- Introduction to Algorithms, Thomas H. Cormen

- Concrete Mathematics: A Foundation for Computer Science (The mathematics that supports advanced computer programming and the analysis of algorithms.)

- Computability, Complexity, and Languages: Fundamentals of Theoretical Computer Science

- An Introduction to Kolmogorov Complexity and Its Applications

- Theoretical Computer Science (Q&A site for theoretical computer scientists and researchers in related fields.) F

- The Original 'Lambda Papers' F

- The Design of Approximation Algorithms F

(Algorithmic) Information Theory

- An Introduction to Information Theory FE

- Information vs. Meaning FE

- Omega and why maths has no TOEs FE

- What is Solomonoff Induction? FE

- Occam's Razor F

- Decoherence is Simple F

- Kolmogorov complexity F

- An Introduction to Kolmogorov Complexity and Its Applications

- Solomonoff Induction (An introduction to Solomonoff's approach to inductive inference.) F

- Algorithmic information theory F

- Algorithmic probability F

- Solomonoff Induction (SIAI Blog) F

- Information theory F

- Entropy in thermodynamics and information theory F

- The Unknowable (Free book by Gregory Chaitin) F
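Two ideas from this section can be tried in a few lines. Shannon entropy of a string's empirical symbol frequencies measures average surprise per symbol. Kolmogorov complexity itself is uncomputable, but the length of a lossless compressed encoding gives an upper bound on it (up to a constant for the decompressor), which is the intuition behind "simple means compressible" in the Occam's Razor and Solomonoff induction material. A minimal sketch using Python's standard `math`, `collections`, `random` and `zlib` modules; treating zlib's output length as a complexity proxy is an illustrative assumption, and the helper names are our own.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Bits per symbol of the empirical byte distribution of `data` (ignores order)."""
    counts = Counter(data)
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def compressed_length(data: bytes) -> int:
    """Length in bytes of zlib's encoding: a crude upper bound on description length."""
    return len(zlib.compress(data, 9))


regular = b"01" * 500                                   # highly patterned, 1000 bytes
rng = random.Random(0)                                  # seeded so the run is reproducible
noisy = bytes(rng.choice(b"01") for _ in range(1000))   # same alphabet, no pattern

for name, data in (("regular", regular), ("noisy", noisy)):
    print(name, round(shannon_entropy(data), 3), compressed_length(data))
```

Both strings come out at roughly 1 bit per symbol, but only the patterned one compresses to a handful of bytes: symbol-frequency entropy ignores order, while compression exploits it.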

Physics

A poet once said, "The whole universe is in a glass of wine." We will probably never know in what sense he meant that, for poets do not write to be understood. But it is true that if we look at a glass of wine closely enough we see the entire universe. — Richard Feynman

- The Road to Reality

- The Feynman Lectures on Physics

- Usenet Physics FAQ F

- So You'd Like to Learn Some Physics... F

- 100 Videos for Teaching and Studying Physics F

- From Eternity to Here (The Quest for the Ultimate Theory of Time)

- Carl Sagan's Apple Pie FE

You do not really understand something unless you can explain it to your grandmother. ~ Albert Einstein

- Introduction to Differential Geometry and General Relativity F

- Lecture Notes on General Relativity F

- The General Relativity Tutorial F

- Modern Physics: General Relativity F

An electron is not a billiard ball, and it’s not a crest and trough moving through a pool of water. An electron is a mathematically different sort of entity, all the time and under all circumstances, and it has to be accepted on its own terms. The universe is not wavering between using particles and waves, unable to make up its mind. It’s only human intuitions about QM that swap back and forth. — Eliezer Yudkowsky

I am not going to tell you that quantum mechanics is weird, bizarre, confusing, or alien. QM is counterintuitive, but that is a problem with your intuitions, not a problem with quantum mechanics. Quantum mechanics has been around for billions of years before the Sun coalesced from interstellar hydrogen. Quantum mechanics was here before you were, and if you have a problem with that, you are the one who needs to change. QM sure won’t. There are no surprising facts, only models that are surprised by facts; and if a model is surprised by the facts, it is no credit to that model. — Eliezer Yudkowsky

- The Quantum Physics Sequence F

- And the Winner is... Many-Worlds! (MWI) F

- The Everett Interpretation F

- "Quantum Computing since Democritus" course notes F

- Consistent Quantum Theory F

- Lecture series on quantum mechanics from Oxford's undergraduate course. F

- Learning Material on Quantum Computing F

- Foundations of Physics (Journal Devoted to the Conceptual Bases and Fundamental Theories of Modern Physics) FEM

- FQXi (Foundational Questions Institute) FEM

- Theory of everything (TOE) FEM

- Theories of Everything and Godel's theorem FM

- List of unsolved problems in physics F

- The Born Probabilities FM

- Scott Aaronson on Born Probabilities FE

- Eliezer Yudkowsky and Scott Aaronson on Born Probabilities (Video talk.) FE

- Born rule (One of the key principles of quantum mechanics.) F

- Spin-statistics theorem F

- Why we need the spin-statistics theorem FE

- Entropy F

- Entropy (arrow of time) F

- Beyond the Reach of God FE

- Information and the Nature of Reality: From Physics to Metaphysics

- The Universes of Max Tegmark FEM

- The mathematical universe (Level IV Multiverse/Ultimate Ensemble/Mathematical Universe Hypothesis) FEM

Evolution

(Evolution) is a general postulate to which all theories, all hypotheses, all systems must henceforward bow and which they must satisfy in order to be thinkable and true. Evolution is a light which illuminates all facts, a trajectory which all lines of thought must follow — this is what evolution is. — Pierre Teilhard de Chardin

- Darwin's Dangerous Idea, Daniel Dennett

- The Greatest Show on Earth: The Evidence for Evolution, Richard Dawkins E

- 29+ Evidences for Macroevolution FE

- Evolution: 24 myths and misconceptions FE

- Micro- and macroevolution (Image) FE

- Evolutionary Theory: Mathematical and Conceptual Foundations

- Talk.origins (Discussion and debate of biological and physical origins.) FE

- Human Evolution Education Resources F

- Genetic Algorithms and Evolutionary Computation F

- Evolution of Adaptive Behaviour in Robots by Means of Darwinian Selection

- Your Inner Fish: A Journey into the 3.5-Billion-Year History of the Human Body

- The Making of the Fittest: DNA and the Ultimate Forensic Record of Evolution

- Evolution: What the Fossils Say and Why It Matters

- Why Evolution Is True

- Universal Darwinism F

- Bayesian Methods and Universal Darwinism F

- Evolutionary Psychology: A Primer FE

Philosophy

There is no such thing as philosophy-free science; there is only science whose philosophical baggage is taken on board without examination. — Daniel Dennett, Darwin's Dangerous Idea, 1995.

Philosophy is a battle against the bewitchment of our intelligence by means of language. — Wittgenstein

- Gödel, Escher, Bach: An Eternal Golden Braid

- Good and Real (Demystifying Paradoxes from Physics to Ethics)

- nickbostrom.com F

- Quantum Mechanics and Philosophy: An Introduction F

- Metaphilosophical Mysteries FE

Everything of beauty in the world has its ultimate origins in the human mind. Even a rainbow isn't beautiful in and of itself. — Eliezer Yudkowsky

- The Mind's I: Fantasies and Reflections on Self & Soul

- Sweet Dreams: Philosophical Obstacles to a Science of Consciousness

- The Ego Tunnel: The Science of the Mind and the Myth of the Self

- Consciousness (Stanford Encyclopedia of Philosophy) F

- 7681 free papers on consciousness in philosophy and in science. F

- Neuroscience of Ethics

- Intelligence (Definitions) FE

Levels of epistemic accuracy.

- The Simple Truth (This essay is meant to restore a naive view of truth.) FE

- Probability is in the Mind (Probabilities express ignorance, states of partial information.) F

- Not technically a lie FE

- Falsehood FE

- Bullshit (Not even wrong) FE

- Evidence (What is Evidence?) F

- Formal Epistemology F

- In Defense of Objective Bayesianism

- Bayesian Epistemology

- The “no self-defeating object” argument, and the vagueness paradox F

- Knowledge and Its Limits, Timothy Williamson M

- Being an Absolute Skeptic FE

- Ned Hall and L.A. Paul (On what contemporary philosophy thinks about causality.) F

Linguistics

- Language: the Basics, R. L. Trask E

- The Language Instinct, Steven Pinker E

- A Brief History of Grammar F

- babelsdawn.com (A blog about the origins of speech.) F

- Language Log (A collaborative language blog.) F

Neuroscience

- Neuroscience for Kids (For students and teachers who would like to learn about the nervous system.) FE

- Principles of Neural Science (All the details of how the neuron and brain work.)

- Essentials of Neural Science and Behavior (The fundamentals of biology in mental processes.)

- Reverse Engineering the Brain (Ideas regarding the sufficient “hardware” and information processing capabilities to build a human equivalent computational substrate.) F

- Bayesian brain F

- The Bayesian brain: the role of uncertainty in neural coding and computation F

General Education

- The Best Textbooks on Every Subject F

- 250 Free Online Courses from Top Universities F

- VideoLectures - exchange ideas & share knowledge F

- Online degrees and video courses from leading universities. F

- Khan Academy FE

- YouTube – EDU F

- iTunes U F

- The Harvard Extension School’s Open Learning Initiative F

- Free Electric Circuits Textbooks F

- Podcasts from the University of Oxford F

- Ask a Mathematician / Ask a Physicist F

Miscellaneous

Not essential, but good preparation for reading LessWrong and, in some cases, helpful for making valuable contributions in the comments. Many of the concepts in the following works are often mentioned on LessWrong or are the subject of frequent discussion.

- Good and Real (Rationality & Decision Theory)

- Reasons and Persons (Ethics, rationality and personal identity.)

- Predictably Irrational E

- Influence: The Psychology of Persuasion

- The Demon-Haunted World: Science as a Candle in the Dark E

- A New Kind of Science FM

- Conway's Game of Life F

- Anthropic principles agree on bigger future filters FM

- Cognitive Science in One Lesson FE

Elaboration of miscellaneous terms, concepts and fields of knowledge you might come across in some of the more advanced and technical posts and comments on LessWrong. The following concepts are frequently discussed but not necessarily endorsed by the LessWrong community. Controversial concepts are labeled M.

- Rationality FE

- The map is not the territory FE

- Utility theory F

- Utilitarianism FM

- Antiprediction F

- Cellular automaton F

- Paradise-engineering FEM

- Simulation Argument FM

- Anthropic Principle FM

- Boltzmann brain FEM

- Self-Indication Assumption FM

- Many-worlds interpretation (MWI) F

- Quantum suicide and immortality FEM

- Cryonics (We Agree: Get Froze) FE

- Prediction market FE

- Bootstrapping (compilers) F

- Pascal's mugging F

Websites

Relevant websites. News and otherwise. F

- yudkowsky.net (Eliezer S. Yudkowsky)

- theuncertainfuture.com (Visualizing "The Future According to You")

- overcomingbias.com

- singinst.org (The SIAI, Singularity Institute for Artificial Intelligence)

- acceleratingfuture.com

- nickbostrom.com

- Meteuphoric

- wrongbot.com

- Transhumanist Resources

- BLTC Research (Global technology project to abolish the biological substrates of suffering.) M

Fun & Fiction

The following are relevant works of fiction or playful treatments of fringe concepts, so do not take them at face value.

Accompanying text: The Logical Fallacy of Generalization from Fictional Evidence

- Harry Potter and the Methods of Rationality (A LessWrong Community Project) FE

- Luminosity (A Twilight Fanfiction Story by Alicorn) FE

- Three Worlds Collide (A story to illustrate some points on naturalistic metaethics and diverse other issues of rational conduct) FE

- The Finale of the Ultimate Meta Mega Crossover (Vernor Vinge x Greg Egan crackfic) FM

- Permutation City (Accompanying text) (The famous science fiction novel by Greg Egan.) M

- Diaspora (Accompanying text) (Another influential hard science fiction novel by Greg Egan.) M

- A Fire Upon the Deep (This novel by Vernor Vinge has set the stage for a new generation of SF.) M

- Neverness, David Zindell M

- Free Hard SF Novels & Short Stories FEM

- orionsarm.com (Hard science fiction collective worldbuilding project.) FEM

- The Strangest Thing An AI Could Tell You FEM

- The AI in a box boxes you FEM

- How Many LHC Failures Is Too Many? FEM

- Hamster in Tutu Shuts Down Large Hadron Collider FEM

- Eliezer Yudkowsky Facts FEM

- A Much Better Life? FEM

Go

A popular board game played and analysed by many people in the LessWrong and general AI crowd.

- What Is the Game of Go? FE

- The Interactive Way To Go FE

- Rationality Lessons in the Game of Go F

- An overview of online go servers F

- AITopics Go (If you want to understand intelligence, the game of Go is much more demanding.) F

- Computer Go F

- Go software (Extensive list of Go software) F

- Go Software (A commercial Go-playing program for PC, iPhone, iPad.)

Note:

This list is a work in progress. I will try to keep updating and refining it.

If you have anything to add or correct (e.g. a broken link), please comment below and I'll update the list accordingly.

104 comments


comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2010-10-10T18:20:14.248Z · LW(p) · GW(p)

Would you mind if I edited this post in order to express more strongly that the vast majority of this reading is not required to keep up with the vast majority of LW posts?

Replies from: XiXiDu

comment by AngryParsley · 2010-10-19T04:53:07.860Z · LW(p) · GW(p)

The dependency graphs of Eliezer's posts are an often-overlooked resource. I don't see them linked anywhere on the wiki either.

comment by Vladimir_Nesov · 2010-10-10T15:10:37.597Z · LW(p) · GW(p)

A list capturing all background knowledge you might ever need for LW

No it isn't.

Replies from: XiXiDu

comment by XFrequentist · 2010-10-10T17:08:24.155Z · LW(p) · GW(p)

Nice idea, thanks for taking the time to compile these resources!

A few thoughts:

This would be easier to follow if the links in each section were ordered roughly from easiest to most challenging.

The length of this list is going to intimidate some new readers. One could productively add to the LW conversation after understanding a small fraction of these references. You should make it clear that these aren't prerequisite.

Some of the entries seem only tangentially related to LW (e.g. Haskell, Go).

The "Key Concepts" section might be better near the beginning.

The "Key Resources" do not seem to me to be key resources.

I'm in the process of trying to get another LW project started, but I've long thought that a "LessWrong Syllabus" (laid out in the style of a university degree planner) would be a good idea. This post seems to be a step in that direction.

It could list assumed prerequisites, recommended or core topics, advanced topics, plus suggested learning materials (books, online courses, etc.), and means of testing progress at each stage. [ETA: Links above are just examples]

Methods of testing might be controversial, but it would be straightforward to capture most of the topics, particularly at the beginner stages.

I should note that this is meant to help guide self-study of the theoretical mathy-sciencey aspects of LessWrongian rationality; I think that this format might be less well suited to the study of applied rationality.

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-10T17:59:19.972Z · LW(p) · GW(p)

- "Key Resources" are now called Relevant Websites.

- "Key Concepts" are now at the very top.

- I added "Note: Don't be intimidated by the length of this list. Most of these references aren't prerequisite. Only a small fraction is necessary to follow most of the posts and discussions on LessWrong. Rather than giving up, just go ahead and read the Sequences; you'll see they're much easier to understand than this list makes them look. And if you have trouble understanding some concept, just ask in the comments or come back and see if you can find an explanation via this companion."

I know that some entries are only tangentially related to LW, but I wanted to compile a list that gives you as much background knowledge as possible to understand and participate in LW and integrate into the community. I believe that programming is an essential field of knowledge, and that Go is not just very popular with people interested in LW-related content but also one of the first hard AI problems people can learn about simply by playing a game.

As for ordering it from easiest to most challenging, I can't do that. First of all, it would likely mess up the categories, and it is not clear to me what is easy and what is not. This list is the culmination of feedback I received from asking, "What should I learn?" That is, although I'm reasonably sure that all of the items are of high quality, since they were recommended by highly educated people who have read them, I haven't actually read most of them myself yet.

Replies from: XFrequentist↑ comment by XFrequentist · 2010-10-13T03:26:48.178Z · LW(p) · GW(p)

Thanks. This is much improved, by the way!

comment by Mass_Driver · 2010-10-10T15:21:48.375Z · LW(p) · GW(p)

Very nice. My only suggestions are to (1) add a biology section, for people who haven't quite grok'd how the brain is an organ, and (2) tweak the physics section so that it doesn't lead off with quantum physics, if necessary by making two physics sections. The notion that the world's fiddly bits behave according to mathematical laws is neither obvious nor self-explanatory, and starting to explain this notion by reference to casually observable phenomena (rocks, light, magnets, water) rather than deeply confusing and occasionally controversial phenomena (quarks, neutrinos) is a really good idea.

Replies from: XiXiDu, XiXiDu↑ comment by XiXiDu · 2010-10-13T16:17:26.352Z · LW(p) · GW(p)

I added a neuroscience section now. Pretty empty so far. The first link is Neuroscience for Kids which I was given by a professional neuroscientist as a valuable and easy to understand resource. The second link is a list made by me on controversial ideas regarding the reverse engineering of the brain. It also includes a video by EY and Anders Sandberg.

I'll expand it over time and also add a category for biology in general.

↑ comment by XiXiDu · 2010-10-10T15:39:49.343Z · LW(p) · GW(p)

Thanks, the physics section is now subdivided into 3 categories. I'll refine it according to your suggestion over time. I'll try to come up with a biology section too and ask a neuroscientist for some easy-to-understand resources on neuroscience.

comment by Alex Flint (alexflint) · 2011-04-12T16:40:21.503Z · LW(p) · GW(p)

Excellent free lecture series on quantum mechanics from Oxford's undergraduate course. Consists of 24 one-hour lectures. Course material, solutions, and even the PDF of the textbook are free.

comment by hamnox · 2017-10-27T16:39:32.573Z · LW(p) · GW(p)

Want to note: I noticed the category "memetic hazard" and started immediately skimming the page to find everything labeled as such. Something is wrong with my reasoning here—

It wasn't the worst impulse to follow after all, since the category means something like controversial or fictional. Except... "memetic hazard" is a meaningful warning. I would prefer it keeps its value as a signal.

↑ comment by AgentME · 2018-09-09T07:29:53.749Z · LW(p) · GW(p)

Guilty, I also immediately scrolled through the page for all of the M links. "Memetic hazard" sounds a bit enticing like "forbidden knowledge".

Maybe EY was onto something with the ideas in the Bayesian Conspiracy that education should be dressed up a bit as "forbidden" but not actually restricted.

comment by XiXiDu · 2010-11-11T14:32:39.618Z · LW(p) · GW(p)

Update 2010-11-11

- I added the FAQ (What is Less Wrong and where did it come from?; When should I write a top-level article? etc.) and a List of all articles from Less Wrong (in chronological order) to the Overview (LessWrong) section.

- I added What is Wei Dai's Updateless Decision Theory? to the Decision Theory section.

- I added Omega and why maths has no TOEs to the (Algorithmic) Information Theory section. It is a free and simple introduction to many associated concepts.

comment by Ben Pace (Benito) · 2013-05-26T19:22:06.103Z · LW(p) · GW(p)

In information theory, the link to 'Information Vs. Meaning' is broken.

Please fix it, it sounds useful :)

Replies from: Curiouskid

comment by XiXiDu · 2010-12-02T11:07:12.501Z · LW(p) · GW(p)

Update 2010-12-02

- I added a new section to the Overview (LessWrong) category and grouped it into a general Overview section and one called Why read Less Wrong?.

- I added Eliezer Yudkowsky on Bloggingheads.tv to the Overview (Less Wrong) section.

- I added The map is not the territory and Rationality (LW Wiki entry) to the basic Concepts section.

- I added The Singularity: A Philosophical Analysis to the Singularity section.

- I added Mathematics Illuminated to the Basics section of Mathematics.

- I added What is computation? to the Computer Science category.

- I added The Unknowable (free book by Gregory Chaitin), Occam's Razor and Decoherence is Simple to the (Algorithmic) Information Theory category.

- I added A Brief History of Grammar to the Linguistics category.

comment by Vladimir_M · 2010-10-13T23:46:50.377Z · LW(p) · GW(p)

In the linguistics section, Trask's book should be marked as "easy." It's short and very readable, and assumes almost no background knowledge. (But despite that, provides an informative and well-balanced introduction to the field.)

Edit: Also, a draft of Jaynes's book is available for free online, but the list contains only an Amazon link.

comment by XiXiDu · 2010-10-13T12:35:59.009Z · LW(p) · GW(p)

Recent updates:

- I added a table of contents

- Key Concepts are now just Concepts

- I added a heuristics and biases category

- I introduced a new label M = Memetic Hazard

- I added a Fun section

- Various other changes and additions

↑ comment by NancyLebovitz · 2010-10-13T13:50:51.856Z · LW(p) · GW(p)

Thanks. That post is a spectacular piece of work.

comment by Vladimir_Nesov · 2010-10-10T15:14:14.454Z · LW(p) · GW(p)

Marks with similar-looking letters (F and E) in light colors look bad on white background (hard to notice). Use contrasting darker colors (if at all) and more distinct text labels, maybe also bolded.

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-10T15:27:25.787Z · LW(p) · GW(p)

I changed the colors. I will think about some other form of labeling.

Replies from: DSimon↑ comment by DSimon · 2010-10-12T14:11:44.149Z · LW(p) · GW(p)

I suggest small graphical icons (~16x16) with distinctive colors and silhouettes. Maybe:

- A gray X over a black dollar sign for Free

- A green exclamation mark for Easy

- A yellow yield sign with an exclamation mark for Memetic Hazard (like your lightbulb one, but probably a lightbulb shape would be too small to make out at reasonable icon size).

It would also be good to arrange the icons and entries in a table, so that a given icon always appears in the same column.

comment by [deleted] · 2012-06-16T06:04:37.980Z · LW(p) · GW(p)

Absolutely the best resource for learning computer programming that I've come across. Highly recommended for beginners.

comment by Document · 2011-01-07T05:02:16.299Z · LW(p) · GW(p)

All the M labels could use explanations, but in particular, why is A Fire Upon the Deep controversial?

Replies from: XiXiDu↑ comment by XiXiDu · 2011-01-07T11:09:47.260Z · LW(p) · GW(p)

It is a work of fiction that contains bogus ideas added to sidestep the problem of writing about a post-Singularity future (e.g. the Zones of Thought). A story like Three Worlds Collide, by contrast, does not deserve this label, since it explicitly mentions its deliberate shortcomings and explains that they were introduced to let the writer highlight and dissolve certain issues. If you think this labeling policy should be changed, please explain your reasons.

All the M labels could use explanations...

That would be nice but might go beyond the scope of this list. Take for example CEV (Coherent Extrapolated Volition). It got labeled 'controversial' because there seem to be many people, even on Less Wrong, who take a critical look at it. The various objections may not necessarily be sound, but the idea itself is popular enough to list here. The label is there to indicate that interested readers should search for a review of the idea if they are more than superficially interested.

comment by XiXiDu · 2010-10-29T14:39:49.964Z · LW(p) · GW(p)

Update 2010-10-29

- I added Less Wrong Q&A with Eliezer Yudkowsky: Video Answers to the Overview (LessWrong) section.

- I added Introduction to Computer Science & Programming: Free Courses, Exploring Computational Thinking, The Original 'Lambda Papers' and the Wiki page on Computer Science to the Computer Science section.

- I added A Gentle Introduction to the Universal Algorithmic Agent AIXI to the AI Machine Learning section.

- I added The Basic AI Drives to the Friendly AI section.

- I added Naive Set Theory, Elements of Information Theory, Category Theory for Beginners, Topology Without Tears and A Measure Theory Tutorial (Measure Theory for Dummies) to the Misc Math section.

- I added Proofs are Programs: 19th Century Logic and 21st Century Computing to the Logic section.

- I added Syntax and Semantics of Programming Languages and an introductory article to Probabilistic Programming to the General Programming section.

- I added The Art and Craft of Problem Solving to the General Mathematics section.

- I added The Demon-Haunted World: Science as a Candle in the Dark to the Misc. section.

- I added 250 Free Online Courses from Top Universities and VideoLectures - exchange ideas & share knowledge to the General Education section.

- I added Evolutionary Theory: Mathematical and Conceptual Foundations to the Evolution section.

comment by XiXiDu · 2010-10-21T19:23:16.216Z · LW(p) · GW(p)

Update 2010-10-21 #1

- I added A Learning Roadmap request: From high-school to mid-undergraduate studies to the Learning Mathematics section. It is a MathOverflow question with a lot of valuable answers that include resources such as this bibliography.

- I also added a few introductory Wikipedia pages to the Logic section.

- I added the book What Is Mathematics? An Elementary Approach to Ideas and Methods to the mathematical foundations section.

- I added a miscellaneous math section where I added various links like Reading List: Graph Isomorphism and a few books.

comment by XiXiDu · 2010-10-20T10:14:34.211Z · LW(p) · GW(p)

Update 2010-10-20 #1

I added the dependency graphs of Eliezer's posts as suggested by AngryParsley and in the course of it added a new category called LessWrong Overview.

comment by randallsquared · 2010-10-15T00:43:41.718Z · LW(p) · GW(p)

The Leading Go Software (PC, Mac, iPhone, iPad)

There is as of yet no Mac version, just a placeholder page.

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-16T14:13:07.543Z · LW(p) · GW(p)

Thanks, fixed. I also added the Wiki on Go software with lots of links to lists on free Go software of all kinds.

comment by Persol · 2010-10-14T00:55:57.721Z · LW(p) · GW(p)

The first 3 chapters of Jaynes' "Probability Theory: The Logic of Science" are available at: http://bayes.wustl.edu/etj/prob/book.pdf

Also, here's a copy of his unpublished book (pdf link at bottom): http://bayes.wustl.edu/etj/science.pdf.html

comment by chesh · 2010-10-11T18:58:42.945Z · LW(p) · GW(p)

Are you sure Diaspora should be marked Easy?

I tried to get a fairly intelligent friend who's interested in science (generally, not necessarily any specific domains covered in the book) to read it, and she gave up within about half an hour.

I (a layman, but well-acquainted with the set of singularitarian memes that the book draws from) found that trying to visualize the physics made my head hurt, even with the accompanying illustrative java applets at the author's website.

It also might be valuable to link to those (there are probably some for Permutation City as well, but I haven't checked since I haven't been able to track a copy of it down): http://www.gregegan.net/DIASPORA/DIASPORA.html

Replies from: NancyLebovitz, XiXiDu↑ comment by NancyLebovitz · 2010-10-12T18:47:27.825Z · LW(p) · GW(p)

In particular, the book starts with a description of how new minds are formed in the polis which is very abstract and technical. I wouldn't be surprised if people who could enjoy the rest of the book bounce off the beginning.

↑ comment by XiXiDu · 2010-10-12T08:16:18.025Z · LW(p) · GW(p)

You are right, the more technical aspects of the book are really hard. I took it as a whole, how it is portraying a society of uploads. I'm going to change it anyway, thanks.

ETA I also added the links to Egan's site as accompanying text.

comment by MartinB · 2010-10-10T18:23:26.753Z · LW(p) · GW(p)

Not sure how much it fits here, but http://docartemis.com/brainsciencepodcast/2010/09/bsp70-lillienfeld/ is a reasonable intro + reference collection on some mental blindspots

comment by arundelo · 2010-10-10T16:01:58.069Z · LW(p) · GW(p)

Permutation City (the infamous science fiction novel by Greg Egan)

Why "infamous"?

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-10T16:06:19.153Z · LW(p) · GW(p)

Whoops! Yes, that's clearly the wrong word. Thank you. In my defense, I never learned English formally; I basically taught myself over time :-)

Replies from: gwern↑ comment by gwern · 2010-10-10T16:17:49.253Z · LW(p) · GW(p)

I dunno, Permutation City is pretty infamous in my books because it presents disquieting ideas I don't know how to disprove. (Kind of like Boltzmann Brains or the Eternal Return.)

Replies from: rwallace↑ comment by rwallace · 2010-10-11T15:47:42.482Z · LW(p) · GW(p)

Thank you, I was about to comment on this; you've given me a needed data point.

Permutation City is the only work of fiction I've enjoyed that I do not go around recommending, because I'm wary that to a reader without the requisite specialized background to separate the parts based on real science from the parts that are pure fiction, it might actually be something of a memetic hazard.

If you are going to recommend it, I would suggest accompanying the recommendation with a link to the antidote

Replies from: gwern, XiXiDu, Document↑ comment by gwern · 2010-10-11T16:50:33.949Z · LW(p) · GW(p)

So your strategy is basically 'subjective anticipation is a useful but ultimately incoherent idea; Permutation City takes it to an absurdum'?

That's a good idea, but I don't think your antidote post is strong enough. Subjective anticipation is a deeply-held belief, after all.

Replies from: rwallace, XiXiDu↑ comment by XiXiDu · 2010-10-11T17:41:28.473Z · LW(p) · GW(p)

I haven't added the antidote post as accompanying reading yet, since I still have to read it, but I did add EY's post 'The Logical Fallacy of Generalization from Fictional Evidence'. Reload and see the fiction section. Maybe it's a bit drastic, but at least the warning is obvious now.

Replies from: gwern↑ comment by gwern · 2010-10-11T18:05:47.648Z · LW(p) · GW(p)

I don't think that Permutation City being fiction matters (if I understand your comment).

The nonfiction ideas stand on their own, though they were presented in (somewhat didactic) fiction: that computation can be sliced up arbitrarily in space and time, that it can be 'instantiated' on almost arbitrary arrangements of matter, and that this implies the computation of our consciousness can 'jump' from one correct random arrangement of matter (like space dust) to another, lasting forever, and hooking in something like quantum suicide so that it's even likely...

If it were simply pointing out that the fiction presupposes all sorts of arbitrary and unlikely hidden mechanisms like Skynet wanting to exterminate humanity, Permutation City would not be a problem. But it shows its work, and we LWers frequently accept the premises.

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-12T08:31:11.117Z · LW(p) · GW(p)

However, the book could also mislead people into believing those arbitrary and unlikely elements if they are linked to it on a list of resources for LessWrong. That's why I think a drastic warning is appropriate. Science fiction can give you a lot of ideas but can also seduce you into believing things that might be dangerous, like that there is no risk from AI.

↑ comment by XiXiDu · 2010-10-11T17:39:01.930Z · LW(p) · GW(p)

I introduced a new label M for Memetic Hazard and added a warning sign, including an accompanying text, to the fiction section.

Replies from: rwallace↑ comment by rwallace · 2010-10-11T21:13:45.571Z · LW(p) · GW(p)

And I see a number of other things that merited the memetic hazard label also now have it, good idea. I'd suggest that it also be added to the current links in the artificial intelligence section, and to the link on quantum suicide.

Maybe also add a link to Eliezer's Permutation City crossover story, now that we have the requisite memetic hazard label for such a link?

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-12T08:11:33.851Z · LW(p) · GW(p)

I thought quantum suicide is not controversial since MWI is obviously correct? And the AI section? Well, the list is supposed to reflect the opinions held in the LW community, especially by EY and the SIAI. I'm trying my best to do so, and by that standard, how controversial is AI going FOOM etc.?

Eliezer's Permutation City crossover story? It has been on the list for some time, if you are talking about 'The Finale of the Ultimate Meta Mega Crossover'.

Replies from: rwallace↑ comment by rwallace · 2010-10-12T16:37:57.568Z · LW(p) · GW(p)

I thought quantum suicide is not controversial since MWI is obviously correct?

I agree MWI is solid; I'm not suggesting that be flagged. But it does not in any way imply quantum suicide; the latter is somewhere between fringe and crackpot, and a proven memetic hazard with at least one recorded death to its credit.

And the AI section? Well, the list is supposed to reflect the opinions held in the LW community, especially by EY and the SIAI. I'm trying my best to do so, and by that standard, how controversial is AI going FOOM etc.?

Well, AI go FOOM etc. is again somewhere in the area between fringe and crackpot, as judged by people who actually know about the subject. If the list were specifically supposed to represent the opinions of the SIAI, then it would belong on the SIAI website, not on LW.

Eliezer's Permutation City crossover story? It has been on the list for some time, if you are talking about 'The Finale of the Ultimate Meta Mega Crossover'.

So it is, cool.

Replies from: Richard_Kennaway, XiXiDu↑ comment by Richard_Kennaway · 2010-10-13T09:40:59.659Z · LW(p) · GW(p)

[quantum suicide is] a proven memetic hazard with at least one recorded death to its credit.

I hadn't heard of this -- can you give more details?

Replies from: rwallace↑ comment by rwallace · 2010-10-13T16:53:02.858Z · LW(p) · GW(p)

Replies from: khafra↑ comment by khafra · 2010-10-13T19:10:23.379Z · LW(p) · GW(p)

Not even the most optimistic interpretations of quantum immortality/quantum suicide think it can bring other people back from the dead. Does it count as a memetic hazard if only a very mistaken version of it is hazardous?

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-14T13:37:29.344Z · LW(p) · GW(p)

Why not? If you kill yourself in any branch that lacks the structure that is your father, then the only copies of you that will be alive are those that don't care or those that live in the unlikely universes where your father is alive (even if it means life extension breakthroughs or that he applied for cryonics.)

ETA: I guess you don't need life extension. After all, it is physically possible to live to 1000 years old, if unlikely. Have I misunderstood something here?

Replies from: DanielVarga, khafra↑ comment by DanielVarga · 2010-10-16T17:24:05.249Z · LW(p) · GW(p)

Why not? If you kill yourself in any branch that lacks the structure that is your father, then the only copies of you that will be alive are those that don't care or those that live in the unlikely universes where your father is alive (even if it means life extension breakthroughs or that he applied for cryonics.)

No, that's not what would happen. Rather, being faithful to your commitment, you would go on a practically infinite suicide spree (*) searching for your father. A long and melancholic story with a surprise happy ending.

(*) I googled it and was sad to see that the phrase "suicide spree" is already taken for a different concept.

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-16T17:33:04.863Z · LW(p) · GW(p)

I'm not sure where you think we disagree. Personally, if I were going to take MWI and quantum suicide absolutely seriously, I'd still make the best of every branch. All you do by quantum suicide is cancel out the copies you deem to be having unworthy experiences. But why would I do that if I do not change anything about the positive branches?

Replies from: DanielVarga↑ comment by DanielVarga · 2010-10-16T18:20:51.773Z · LW(p) · GW(p)

My reply wasn't meant to be taken seriously, and I don't take the idea of quantum suicide seriously. But to answer your question, here is the disagreement, or really, me nitpicking for the sake of comedic effect:

In your scenario, most of the copies will NOT be in universes with your father. Most of them will be in the process of committing suicide. This is because -- at least the way I interpreted your wording -- your scenario differs from the classic quantum lottery scenario in that here it is you who evaluates whether you are in the right universe or not.

Replies from: XiXiDu↑ comment by XiXiDu · 2010-10-16T18:44:45.821Z · LW(p) · GW(p)

Yes, we agree. So how seriously do you take MWI? I'm not sure I understand how someone could take MWI seriously but not quantum suicide. I haven't read the sequence on it yet, though.

Replies from: jimrandomh, timtyler, wedrifid↑ comment by jimrandomh · 2010-10-16T19:09:56.889Z · LW(p) · GW(p)

Easy - if you believe in MWI, but your utility function assigns value to the amount of measure you exist in, then you don't believe in quantum suicide. This is the most rational position, IMO.

Replies from: DanielVarga↑ comment by DanielVarga · 2010-10-17T01:52:40.938Z · LW(p) · GW(p)

I am absolutely uninterested in the amount of measure I exist in, per se. (*) I am interested in the emotional pain a quantum suicide would inflict on measure 0.9999999 of my friends and relatives.

(*) If God builds a perfect copy of the whole universe, this will not increase my utility the slightest.

Replies from: wedrifid↑ comment by wedrifid · 2010-10-17T02:19:58.829Z · LW(p) · GW(p)

I am absolutely uninterested in the amount of measure I exist in, per se. (*) I am interested in the emotional pain a quantum suicide would inflict on measure 0.9999999 of my friends and relatives.

That is a potentially coherent value system, but I note that it contains a distinct hint of arbitrariness. You could, technically, like life, dislike death, like happy relatives and care about everything in the branches in which you live but only care about everything except yourself in branches in which you die. But that seems likely to be just a patch job on the intuitions.

Replies from: DanielVarga↑ comment by DanielVarga · 2010-10-17T22:05:01.467Z · LW(p) · GW(p)

Are you sure about this? Isn't my preference simply a result of a value system that values the happiness of living beings in every branch? (Possibly weighted with how similar / emotionally close they are to me, but that's not really necessary.) If I kill myself in every branch except in those where I win the lottery, then there will be many branches with (N-1) sad relatives, and a few branches with 1 happy me and (N-1) neutral relatives. So I don't do that. Is there really anything arbitrary about this?
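To make the branch accounting in this comment explicit, here is a toy sketch in Python. The welfare numbers (how much winning is worth to me, how much grief each relative suffers) and the function names are hypothetical, not from the discussion; only the structure follows the comment: each branch's total welfare is weighted by its measure, a dead person contributes nothing, and in losing branches under quantum suicide the N-1 relatives grieve.

```python
from fractions import Fraction


def branch_weighted_total(p_win, win_branch_value, lose_branch_value):
    """Total welfare over the two lottery branches, weighted by their measure."""
    return p_win * win_branch_value + (1 - p_win) * lose_branch_value


def compare_policies(p_win, n_people, my_joy_if_win, grief_per_relative):
    """Compare ordinary play with quantum suicide under the 'only living
    beings' welfare counts' value system (all numbers are hypothetical)."""
    relatives = n_people - 1
    # Winning branch (same under both policies): I am happy, relatives neutral (0).
    win_value = my_joy_if_win
    # Ordinary play, losing branch: everyone is alive and neutral.
    ordinary = branch_weighted_total(p_win, win_value, 0)
    # Quantum suicide, losing branch: I am dead and contribute nothing,
    # while each of the N-1 relatives grieves.
    suicide = branch_weighted_total(p_win, win_value, -relatives * grief_per_relative)
    return ordinary, suicide


if __name__ == "__main__":
    ordinary, suicide = compare_policies(
        p_win=Fraction(1, 10**7), n_people=5, my_joy_if_win=100, grief_per_relative=10
    )
    print("ordinary:", float(ordinary), "quantum suicide:", float(suicide))
```

The gap between the two policies is just the measure of the losing branches times the total grief in them, so for any lottery with a small chance of winning, quantum suicide comes out worse under this value system.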

Replies from: wedrifid↑ comment by wedrifid · 2010-10-18T08:06:12.872Z · LW(p) · GW(p)

The part that surprises me is that you do care about all the branches (relatives, etc) yet in those branches you don't care if you die. You'll note that I assumed you preferred death to life? In those worlds you seem to have a preference for happy vs sad relatives but have somehow (and here is where I would say 'arbitrarily') decided you don't care whether you live or die.

Say, for example, that you have a moderate aversion to having one of your little toes broken. You set up a quantum lottery where the 'lose' branches have your little toe broken instead of you being killed. Does that seem better or worse to you? I mean, there is suffering of someone near and dear to you, so I assume that seems bad to you. Yet it seems to me that if you care about the branch at all, then you would prefer 'sore toe' to 'death' when you lose!

Replies from: DanielVarga↑ comment by DanielVarga · 2010-10-18T13:21:37.564Z · LW(p) · GW(p)

You are right that my proposed value system does not incorporate survival instinct, and this makes it sound weird, as survival instinct is an important part of every actual human value system, including mine. Your broken toe example shows this nicely.

So why did I get rid of survival instinct? Because you argued that what I wrote "contains a distinct hint of arbitrariness". I think it doesn't. I care for everyone's preferences, and a dead body has no preferences. And to decide against quantum suicide, that is all that is needed. In place of survival instinct we basically have the disincentive of grieving relatives.

When we explicitly add survival instinct, the ingredient you rightfully miss, then yes, the result will indeed become somewhat messy. But the reason for this mess is the added ingredient in itself, not the other, clean part, nor the interrelation with the other part. I just don't think survival instinct can be turned into a coherent, formalized value. So the bug is not in my proposed idealized value system, the bug is in my actual messy human value system.

This approach, by the way, affects my views on cryonics, too.

Replies from: wedrifid↑ comment by wedrifid · 2010-10-18T18:43:45.547Z · LW(p) · GW(p)

I think it doesn't. I care for everyone's preferences, and a dead body has no preferences. And to decide against quantum suicide, that is all that is needed. In place of survival instinct we basically have the disincentive of grieving relatives.

This is a handy way to rationalise against quantum suicide. Until you consider quantum suicide on a global level. People who have been vaporised along with their entire planet have no preferences... Would you bite that bullet and commit quantum planetary suicide?

Replies from: DanielVarga↑ comment by DanielVarga · 2010-10-18T23:55:52.445Z · LW(p) · GW(p)

As I already wrote, the above is not my actual value system, but rather a streamlined version of it. My actual value system does incorporate survival instinct. You intend to show with quantum planetary suicide that the streamlined value system leads to nonsensical results. I don't really find the results nonsensical. In this sense, I would bite the bullet.

Actually, I wouldn't, but for a reason not directly related to our current discussion. I don't have too much faith in the literal truth of the MWI. I am quite confused about quantum mechanics, but I have a gut feeling that single-world is not totally out of the question, and not-every-world is quite likely. This is because as a compatibilist, I am willing to bite some bullets about free will most others will not bite. I believe that the full space-time continuum is very finely tuned in every direction (*), so it is totally plausible to me that some of those many worlds are simply locked from us by fine-tuning. There are already some crankish attempts in this direction under the name superdeterminism. I don't think these are successful so far, but I surely would not bet my whole planet against the possibility.

(*) This sentence might sound fuzzy or even pseudoscientific. All I have is an analogy to make it more concrete: our world is not a Gold Universe, but I am talking about the sort of fine-tuning found in a Gold Universe.

↑ comment by wedrifid · 2010-10-19T01:21:12.900Z

You intend to show with quantum planetary suicide that the streamlined value system leads to nonsensical results.

Not nonsensical, no. It would be not liking the idea of planetary suicide that would be nonsensical, given your other expressed preferences. I can even see a perverse logic behind your way of carving up which parts of the universal wavefunction you care about, given the understanding of QM you express.

Just... if you are ever exposed to accessible quantum randomness, then please stay away from anyone I care about. By my way of looking at things, these values are exactly as insane as those of parents who kill their children and spouse before offing themselves as well. I'm not saying you are evil or anything. It's not as if you are really going to act on any of this, so you fall under Mostly Harmless. But the step from mostly killing yourself to judging it preferable for other people to be dead too takes things from "none of my business" to "threat to human life".

Strange as it may seem, we are talking about the real world here!

↑ comment by DanielVarga · 2010-10-19T19:28:58.233Z

wedrifid, please don't use me as a straw-man. I already told you that my actual value system does contain survival instinct, and I already told you why I omitted it here anyway. Here it is, spelled out even more clearly:

You wanted a clean value system that decides against quantum suicide. (I use 'clean' as a synonym of nonarbitrary, low-complexity, aesthetically pleasing.) I proposed a clean value system that is already strong enough to decide against many forms of quantum suicide. You correctly point out that it is not immune to every form.

Incorporating any version of survival instinct makes the value system immune to quantum suicide by definition. I claimed that any value system incorporating survival instincts is necessarily not clean, at least if it has to deal consistently with issues such as quantum lotteries and mind uploads. I don't have a problem with that, and I choose survival over cleanness. And don't worry about my children and spouse. I will spell it out very explicitly, just in case: I don't value the wishes of dead people, because they don't have any. I value the wishes of living people, most importantly their wish to stay alive.

You completely ignored the physics angle to concentrate on the ethics angle. I think the former is more interesting, and frankly, I am more interested in your clever insights there. I already mentioned that I don't have too much faith in MWI. Let me add some more detail to this. I believe that if you want to find out the real reason why quantum suicide is a bad idea, you will have to look at physics rather than values. My common sense tells me that if I put a (quantum or other) gun in my mouth right now and pull the trigger many times, then the next thing I will feel is not that I am very lucky. Rather, I will not feel anything at all, because I will be dead. I am quite sure about this instinct, so let us assume for a minute that it is indeed correct. This can mean two things. One possible conclusion is that MWI must be wrong. Another possible conclusion is that MWI is right but we make some error when we try to apply it to this situation. I give high probability to both of these possibilities, and I am very interested in any new insights.

Let me now summarize my position on quantum suicide: I endorse it

IF MWI is literally correct. (I don't believe so.)

IF the interface between MWI and consciousness works as our naive interpretation suggests. (I don't believe so.)

IF the quantum suicide is planetary, more exactly, if it affects a system that is value-wise isolated from the rest of the universe. (Very hard or impossible to achieve.)

IF survival instinct as a preference of others is taken into account, more concretely, if your mental image of me, the Mad Scientist with the Doomsday Machine, gets the consent of the whole population of the planet. (Very hard or impossible to achieve.)

↑ comment by wedrifid · 2010-10-20T03:03:21.245Z

wedrifid, please don't use me as a straw-man.

End of conversation. I did not read beyond that sentence.

↑ comment by DanielVarga · 2010-10-20T10:13:03.004Z

I am sorry to hear this, and I don't really understand it.

↑ comment by khafra · 2010-10-14T20:12:22.298Z

The way I understand quantum suicide, it's supposed to force your future survival into the relatively scarce branches where an event goes the way you want it to, by making your survival dependent on that event. Killing yourself at some later time, after living in a branch where that event did not go the way you wanted, is just ordinary suicide; although there's certainly room for a new category along the lines of "counterfactual quantum suicide," or something.

edit: Although, to the extent that counterfactual quantum suicide would only occur to someone who'd heard of traditional, orthodox quantum suicide, the latter would be a memetic hazard.

↑ comment by XiXiDu · 2010-10-15T08:43:06.306Z

What difference does it make whether you kill yourself before event X, event X kills you, or you commit suicide after event X? In all cases the branches in which event X does not take place are selected for. That is, if agent Y always either commits suicide after event X or is killed by event X, then the only branches that include Y are those in which X does not happen.

↑ comment by khafra · 2010-10-17T03:00:52.208Z

The difference, to me, lies in how you distinguish quantum suicide from classical suicide. Everett's daughter killing herself in all universes where she outlived him only sounds like quantum suicide to me if her death was linked to his in a mechanical and immediate manner; otherwise, with her suffering in the non-preferred universe for a while, it just sounds like plain old suicide.

↑ comment by XiXiDu · 2010-10-12T17:47:25.200Z

I started a discussion: Help: Which concepts are controversial on LW

comment by XiXiDu · 2010-11-10T17:13:08.737Z

Update 2010-11-10

- I added (Algorithmic) Information Theory as a separate category because it encompasses, amongst other things, probability theory, computer science and decision theory. Its range of applications is even more extensive, including artificial intelligence, machine learning, physics (entropy/thermodynamics) and so on.

↑ comment by JGWeissman · 2010-11-10T17:47:13.737Z

If you are going to be regularly updating this post, it would make sense to convert it into a wiki article.

↑ comment by XiXiDu · 2010-12-06T12:36:42.274Z

I thought about doing that but feared that it would be edited mercilessly. Not that that would be a bad thing, but I compiled this list for someone like me who needs a lot of background knowledge, elementary introductions and the assurance that there are resources that will help them out if they get stuck. Many people on Less Wrong are highly skilled and educated. When I have time I will create a wiki entry to see what comes out of it.

This list would have helped me a great deal when I started my self-education. It took a lot of time just to figure out what there is to learn, where to start and the necessary resources to learn from. With this list you can just go ahead and start learning.

I also love links and the pleasant anticipation of lots of material to digest.

comment by XiXiDu · 2010-11-03T16:27:15.944Z

Update 2010-11-03

- I added Anthropic principles agree on bigger future filters to the Miscellaneous section.

More information here: http://lesswrong.com/r/discussion/lw/30t/anthropic_principles_agree_on_bigger_future/

comment by XiXiDu · 2010-11-01T11:06:35.006Z

Update 2010-11-01

- I added A compact list of Eliezer Yudkowsky's positions (Reasons to take friendly AI seriously.) to the Friendly AI section.

comment by XiXiDu · 2010-10-19T10:45:28.532Z

I added a lot of new books, e.g. Concrete Mathematics: A Foundation for Computer Science or Principles of Neural Science, and integrated the external list of links on Bayesian probability into the probability section.

For future updates I'll leave a comment giving the details of each update so everyone who has already read the whole list only has to read that particular comment for possible interesting new content.

comment by lukeprog · 2011-06-08T03:48:40.476Z

So glad to see you are continuing to keep this updated!

comment by NancyLebovitz · 2011-04-11T14:38:42.659Z

http://en.wikiquote.org/wiki/Main_Page

A source for quotes (presumably checked for accuracy) which includes their contexts.

comment by XiXiDu · 2010-12-06T12:04:52.277Z

Update 2010-12-06

- I added Luminosity - A Twilight Fanfiction Story by Alicorn - to the Fiction section.

- I added Sequences in Alternative Formats (HTML, Markdown, PDF, and ePub versions.) to the Overview (LessWrong) section.

- I updated the link to the book Real World Haskell (Now FREE) in the Programming/Haskell section.

- I updated the link to the book A New Kind of Science (Now FREE) in the Miscellaneous section.

- I added The Harvard Extension School’s Open Learning Initiative to the General Education section.

- I added the free book Possible Worlds: An Introduction to Logic and Its Philosophy to the Logic section.

- I added the free book Mathematical Logic to the General Mathematics section.

- I added the free book Practical Foundations for Programming Languages to the General Programming section.

- I added The “no self-defeating object” argument, and the vagueness paradox to the Epistemology and Miscellaneous Mathematics sections.

- I added Category Theory for the Mathematically Impaired (A Short Reading List) and TheCatsters' Category Theory Videos to the Miscellaneous Mathematics section.

comment by XiXiDu · 2010-11-09T13:38:16.029Z

Update 2010-11-09

- I added Pearls of Functional Algorithm Design to the Haskell subcategory of the Programming section.

Richard Bird takes a radically new approach to algorithm design, namely, design by calculation. These 30 short chapters each deal with a particular programming problem drawn from sources as diverse as games and puzzles, intriguing combinatorial tasks, and more familiar areas such as data compression and string matching. Each pearl starts with the statement of the problem expressed using the functional programming language Haskell, a powerful yet succinct language for capturing algorithmic ideas clearly and simply. The novel aspect of the book is that each solution is calculated from an initial formulation of the problem in Haskell by appealing to the laws of functional programming. Pearls of Functional Algorithm Design will appeal to the aspiring functional programmer, students and teachers interested in the principles of algorithm design, and anyone seeking to master the techniques of reasoning about programs in an equational style.

comment by XiXiDu · 2010-11-08T16:17:27.045Z

Update 2010-11-08

- I added the free book The Design of Approximation Algorithms to the Computer Science section.