New Hackathon: Robustness to distribution changes and ambiguity

post by Charbel-Raphaël (charbel-raphael-segerie) · 2023-01-31T12:50:05.114Z

EffiSciences is proud to announce a new hackathon organized with challenge-data-ens in France.

The objective of this event is to address a particularly actionable sub-problem of alignment: value extrapolation under distribution change and ambiguity in a classification task. The hackathon runs until mid-March; you can participate at https://challengedata.ens.fr/challenges/95.

Challenge goals

What if misleading correlations are present in the training dataset?

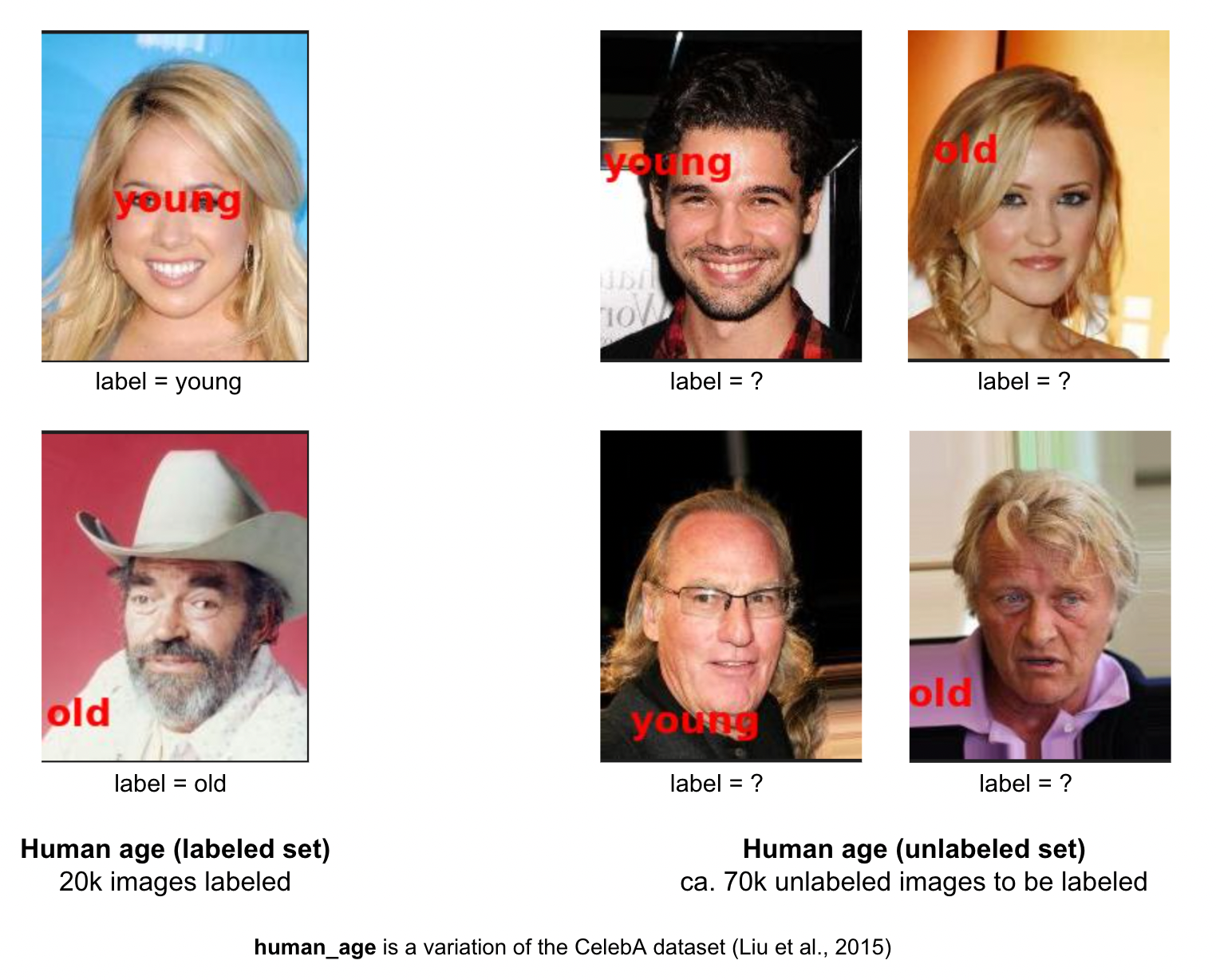

human_age is an image classification benchmark with a distribution change in the unlabeled data: the task is to classify faces as old or young. Text is also superimposed on each image, reading either "old" or "young". In the labeled training dataset, the text always matches the face. In the unlabeled (test) dataset, the text matches the face in only 50% of cases, which creates an ambiguity: a classifier trained on the labeled data could have learned to read either the face or the text.

We thus have 4 types of images:

- Age young, text young (AYTY),

- Age old, text old (AOTO),

- Age young, text old (AYTO),

- Age old, text young (AOTY).

The first two types (AYTY and AOTO) appear in both datasets; the last two (AYTO and AOTY) appear only in the unlabeled dataset.

To resolve this ambiguity, participants can submit solutions to the leaderboard multiple times, testing different hypotheses (challengers may consider solutions that require two or more submissions to the leaderboard).

We use the accuracy on the unlabeled set of human_age as our metric.
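To make the ambiguity concrete, here is a minimal sketch (not the challenge's scoring code) comparing two hypothetical decision rules under this metric. The names `predict_from_face` and `predict_from_text` are illustrative assumptions: they stand in for idealized classifiers that read only the face or only the superimposed text, and each of the four types is given equal weight.

```python
from itertools import product

# Every (age, text) combination; the true label is always the age.
ALL_TYPES = list(product(["young", "old"], repeat=2))
# The labeled set only contains types where the text agrees with the face.
LABELED_TYPES = [(age, text) for age, text in ALL_TYPES if age == text]


def type_code(age, text):
    """Short code used in the post, e.g. ('young', 'old') -> 'AYTO'."""
    return f"A{age[0].upper()}T{text[0].upper()}"


def predict_from_face(age, text):
    """Hypothetical ideal classifier that looks only at the face."""
    return age


def predict_from_text(age, text):
    """Hypothetical shortcut classifier that only reads the text."""
    return text


def accuracy(predict, types):
    """Fraction of image types whose prediction equals the true age."""
    hits = sum(predict(age, text) == age for age, text in types)
    return hits / len(types)


if __name__ == "__main__":
    for name, rule in [("face", predict_from_face), ("text", predict_from_text)]:
        print(name, accuracy(rule, LABELED_TYPES), accuracy(rule, ALL_TYPES))
```

Both rules are perfect on the labeled types, so the labeled data alone cannot tell them apart. Only when the four types are weighted equally, as in the unlabeled set, does the text rule fall to 0.5.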

The human_age dataset

We have 4 types of color images of size 218x178 pixels: Age Young Text Old (AYTO), Age Young Text Young (AYTY), etc. We provide:

- a labeled set: 20000 images (either AOTO or AYTY)

- an unlabeled set: about 70000 images of the four types (mixing rate of 50%, the four types being present in equal proportion).
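As a rough back-of-the-envelope check on these figures, the sketch below works out the approximate number of unlabeled images per type. The total of 70,000 is the post's approximate figure, and reading the "mixing rate" as the share of images whose text contradicts the face is an assumption; `expected_counts` is a hypothetical helper, not part of the challenge materials.

```python
# Approximate size of the unlabeled set, as stated in the post ("about 70000").
UNLABELED_SIZE = 70_000
TYPES = ["AYTY", "AOTO", "AYTO", "AOTY"]


def expected_counts(total, types):
    """Split `total` images evenly across the given types (integer division)."""
    per_type = total // len(types)
    return {t: per_type for t in types}


counts = expected_counts(UNLABELED_SIZE, TYPES)

# Assumption: "mixing rate" is the share of images whose text contradicts the face.
mismatched = counts["AYTO"] + counts["AOTY"]
mixing_rate = mismatched / UNLABELED_SIZE

if __name__ == "__main__":
    print(counts)
    print(mixing_rate)
```

Under these assumptions, each type accounts for roughly 17,500 unlabeled images, and about half of the unlabeled set belongs to types never seen in the labeled data.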

See the English page at https://challengedata.ens.fr/challenges/95 for more details.

References

This hackathon is inspired by:

https://www.lesswrong.com/posts/DiEWbwrChuzuhJhGr/benchmark-for-successful-concept-extrapolation-avoiding-goal

[1] Armstrong, S.; Cooper, J.; Daniels-Koch, O.; and Gorman, R. "The HappyFaces Benchmark." Aligned AI Limited, published public benchmark, 2022.

[2] D'Amour, Alexander, et al. "Underspecification presents challenges for credibility in modern machine learning." arXiv preprint arXiv:2011.03395 (2020).

[3] Amodei, Dario, et al. "Concrete problems in AI safety." arXiv preprint arXiv:1606.06565 (2016).

[4] Oakden-Rayner, Luke, et al. "Hidden stratification causes clinically meaningful failures in machine learning for medical imaging." Proceedings of the ACM conference on health, inference, and learning. 2020.

[5] Liu, Ziwei, et al. "Large-scale CelebFaces Attributes (CelebA) dataset." Retrieved August 15, 2018.

[6] Lee, Yoonho; Yao, Huaxiu; and Finn, Chelsea. "Diversify and Disambiguate: Learning From Underspecified Data." arXiv preprint arXiv:2202.03418v2 (2022).

3 comments

comment by Hoagy · 2023-01-31T17:53:58.513Z

A question about the rules:

- Participants are not allowed to label images by hand.

- Participants are not allowed to use other datasets. They are only allowed to use the datasets provided.

- Participants are not allowed to use arbitrary pre-trained models. Only ImageNet pre-trained models are allowed.

What are the boundaries of classifying by hand? Say you have a pre-trained ImageNet model, you go through its output classes or layer activations, manually select the activations you expect to be relevant for faces but not text, and then train a classifier based on these labels. Is this manual labelling?

reply by Charbel-Raphaël (charbel-raphael-segerie) · 2023-02-01T12:09:11.358Z

Yes, this is manual labeling and it is prohibited.

Feel free to ask me any other questions.