Alex Irpan: "My AI Timelines Have Sped Up"

Post by Vaniver · 2020-08-19T16:23:25.348Z · 20 comments

This is a link post for https://www.alexirpan.com/2020/08/18/ai-timelines.html



Blog post by Alex Irpan. The basic summary:

In 2015, I made the following forecasts about when AGI could happen.

- 10% chance by 2045

- 50% chance by 2050

- 90% chance by 2070

Now that it’s 2020, I’m updating my forecast to:

- 10% chance by 2035

- 50% chance by 2045

- 90% chance by 2070

The main underlying shifts: more focus on improvements in tools, compute, and unsupervised learning.

20 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-20T00:04:31.203Z

Seems a bit weird to have 10% probability mass for the next 15 years followed by 40% probability mass over the subsequent 10 years. That seems to indicate a fairly strong view about how the next 20 years will go. IMO the probability of AGI in the next 15 years should be substantially higher than 10%.
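Daniel's point can be checked with a little arithmetic. The sketch below is one possible reading, not Alex's own method: it assumes each forecast is a piecewise CDF with probability spread uniformly inside each interval (neither forecast specifies a shape), and that each forecast starts counting from the year it was made. The helper `interval_rates` is hypothetical.

```python
from fractions import Fraction

# Quantiles as (year, cumulative probability in percent), taken from the post.
FORECAST_2015 = [(2045, 10), (2050, 50), (2070, 90)]
FORECAST_2020 = [(2035, 10), (2045, 50), (2070, 90)]


def interval_rates(start_year, quantiles):
    """Return (start, end, mass %, mass % per year) for each interval.

    Assumes probability is spread uniformly inside each interval, so the
    per-year rate is the interval's mass divided by its width. The final
    10% beyond 2070 is open-ended and is not included.
    """
    rows = []
    prev_year, prev_cum = start_year, 0
    for year, cum in quantiles:
        mass = cum - prev_cum
        rate = Fraction(mass, year - prev_year)
        rows.append((prev_year, year, mass, rate))
        prev_year, prev_cum = year, cum
    return rows


for label, start, q in [("2015", 2015, FORECAST_2015), ("2020", 2020, FORECAST_2020)]:
    print(f"{label} forecast:")
    for lo, hi, mass, rate in interval_rates(start, q):
        print(f"  {lo}-{hi}: {mass:>2}% total, {float(rate):.2f}% per year")
```

Under this reading, the 2020 forecast jumps from about 0.67% per year (2020 to 2035) to 4% per year (2035 to 2045), a sixfold increase, while the 2015 forecast's 2045 to 2050 window runs at 8% per year.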

↑ comment by Pongo · 2020-08-20T00:40:37.788Z

Whoa, I hadn’t noticed that. The old predictions put 40% probability on AGI being developed in a 5-year window (2045 to 2050).

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-20T00:49:56.528Z

That was going to be my initial comment, then I noticed that the blog post addresses that problem. Not sufficiently IMO.

↑ comment by jungofthewon · 2020-08-21T18:43:09.424Z

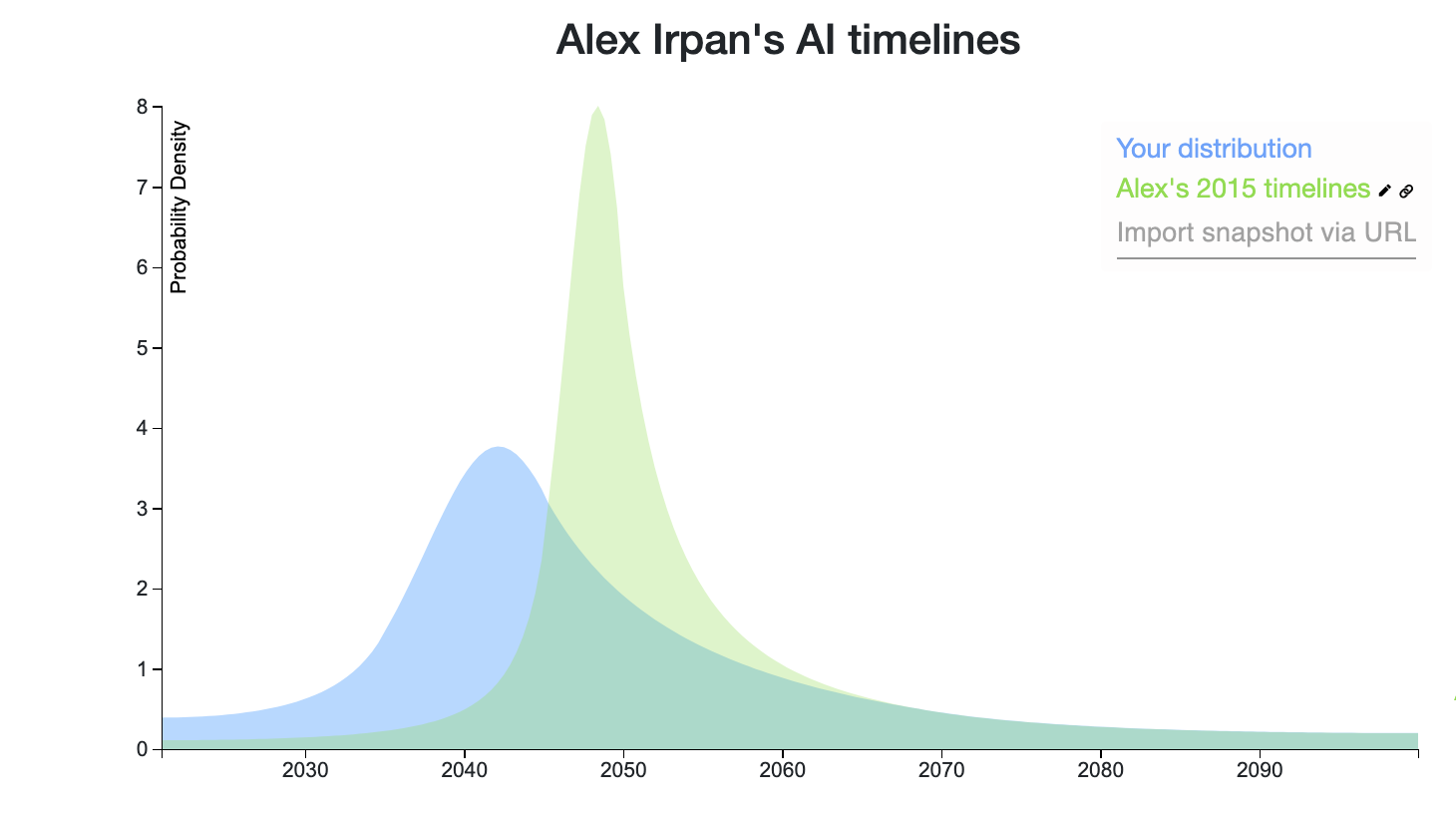

Yea, this was a lot more obvious to me when I plotted it visually: https://elicit.ought.org/builder/om4oCj7jm

(NB: I work on Elicit and it's still a WIP tool)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-21T21:07:56.896Z

Ahhhh this is so nice! I suspect a substantial fraction of people would revise their timelines after seeing what they look like visually. I think encouraging people to plot out their timelines is probably a pretty cost-effective intervention.

↑ comment by Ben Pace (Benito) · 2020-08-21T21:09:34.895Z

Sounds like we could have a thread for that. The image above looks great, would be interested in seeing more comparisons like it, if they're easy to generate using elicit.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-21T21:23:46.613Z

Yes, let's please create a thread. If you don't I will. Here's mine: (Ignore the name, I can't figure out how to change it)

https://elicit.ought.org/builder/xt516PmHt

↑ comment by Ben Pace (Benito) · 2020-08-21T21:28:45.154Z

Can you make the thread (make it a question post?) and share it with me? Then I'll suggest any rewrites and we can publish it?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-22T00:23:38.733Z

I shared it with you and jungofthewon; maybe they could help me include some sort of instructions for how to use Elicit?

↑ comment by Amandango · 2020-08-22T00:38:36.558Z

I can help with this if you share the post with me!

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-22T01:06:04.369Z

Thanks so much!

↑ comment by jungofthewon · 2020-08-21T22:45:54.678Z

You want to change "Your Distribution" to something like "Daniel's 2020 distribution"?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-22T00:24:14.124Z

Could you help me by writing some instructions for how to use Elicit, to be put in my question on the topic? (See discussion with Ben above)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-22T00:13:20.663Z

Yes, but I don't know how.

comment by sairjy · 2020-08-20T10:22:44.317Z

If 65% of AI improvements will come from compute alone, I find it quite surprising that the post author assigns only 10% probability to AGI by 2035. By that time, we should have between 20x and 100x more compute per $. We can also forecast that AI training budgets will easily increase 1000x over that period, since a shot at AGI justifies the ROI. I think he is giving way too much weight to the computational performance of the human brain.
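The multiplication behind this comment can be spelled out. A rough sketch, assuming the window runs from 2020 to 2035 (15 years) and that price-performance gains and budget growth simply multiply; the input figures are the commenter's guesses, not measurements, and `doubling_time` is a hypothetical helper.

```python
import math

YEARS = 2035 - 2020  # assumed forecast window: comment date to 2035


def doubling_time(total_factor, years=YEARS):
    """Years per doubling at the constant rate that compounds to total_factor."""
    return years * math.log(2) / math.log(total_factor)


compute_per_dollar = (20, 100)  # commenter's range for compute per $ by 2035
budget_growth = 1000            # commenter's guess for training budget growth

for factor in compute_per_dollar:
    total = factor * budget_growth  # effective training compute multiplier
    print(
        f"{factor}x per $ -> {total:,}x training compute; "
        f"price-performance doubles every {doubling_time(factor):.1f} years"
    )
print(f"{budget_growth}x budget -> budgets double every "
      f"{doubling_time(budget_growth):.1f} years")
```

Taken together, these guesses imply 20,000x to 100,000x more training compute by 2035, with price-performance doubling roughly every 2.3 to 3.5 years and budgets roughly every 1.5 years.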

comment by ESRogs · 2020-08-22T01:40:52.395Z

> For this post, I’m going to take artificial general intelligence (AGI) to mean an AI system that matches or exceeds humans at almost all (95%+) economically valuable work.

I'm not sure this is such a good operationalization. I believe that if you looked at the economically valuable work that humans were doing 200 years ago (mostly farming, as I understand it), more than 95% of it is automated today. And we don't spend 95% of GDP on farming today.

So I'm not quite sure what the above means. Does it mean 95% of GDP spent on compute? Or unemployment at 95%? Or 95% of jobs that are done today by people are done then by computers? If that last one, then how do you measure it if jobs have morphed such that neither a human nor a computer is clearly doing a job that today is done by a human?

I think that productivity is going to increase. And humans will continue to do jobs where they have a comparative advantage relative to computers. And what those comparative advantages are will morph over time. (And in the limit, if I'm feeling speculative, I think being a producer and a consumer might merge, as one of the last areas where you'll have a comparative advantage is knowing what your own wants are.)

But given that prices will be set by supply and demand, it's not quite obvious to me how to measure when 95% of economically valuable work is done by computers. For a given task that involves both humans and computers, even if computers are doing "more" of the work, you won't necessarily spend more on the computers than on the people if the supply of compute is plentiful. So, in some hard-to-define sense, computers may be doing most of the work, but measured in dollars they might not be. And one could argue that this is already the case (since we've automated so much of farming and other things that people used to do).
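A toy illustration of the gap described here between who does the work and where the dollars go. The prices, unit counts, and the `shares` helper are all hypothetical, chosen only to show that cheap compute can do most of a task while receiving a small fraction of the spending.

```python
from fractions import Fraction


def shares(compute_units, compute_price, human_units, human_price):
    """Return (compute's share of the work, compute's share of the spending)."""
    work_share = Fraction(compute_units, compute_units + human_units)
    compute_cost = compute_units * compute_price
    human_cost = human_units * human_price
    spend_share = compute_cost / (compute_cost + human_cost)
    return work_share, spend_share


# Hypothetical task: computers do 900 of 1,000 units of work at $0.01/unit,
# humans do the remaining 100 units at $1/unit.
work, spend = shares(900, Fraction(1, 100), 100, Fraction(1))
print(f"compute does {float(work):.0%} of the work")
print(f"compute gets {float(spend):.1%} of the spending")
```

In this made-up case computers do 90% of the work but receive only 9/109 (about 8%) of the spending, so a dollar-based measure and a work-based measure point in very different directions.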

Alternatively, you could operationalize 95% of economically valuable work being done by computers as the total dollars spent on compute being 20x all wages. That's clear enough, I think, but I suspect it is not exactly what Alex had in mind. I also think it may be a condition that never holds, even when AI is strongly superhuman, depending on how we end up distributing the spoils of AI and what kind of economic system we end up with at that point.
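The spending-based operationalization reduces to a single ratio. A minimal sketch, assuming "95% of economically valuable work" is read as compute's share of combined compute-plus-wage spending: with compute spending C and total wages W, the share C / (C + W) reaches 95% exactly when C / W = 0.95 / 0.05 = 19, so "20x" is a round-number version of that threshold. Both function names are hypothetical.

```python
from fractions import Fraction


def required_ratio(share):
    """Compute-to-wages spending ratio C/W at which C / (C + W) equals share."""
    share = Fraction(share)  # exact arithmetic avoids float rounding at 0.95
    return share / (1 - share)


def compute_share(compute_spend, wages):
    """Compute's fraction of combined compute and wage spending."""
    return Fraction(compute_spend, compute_spend + wages)


print(required_ratio("0.95"))       # threshold ratio for a 95% share
print(float(compute_share(20, 1)))  # share when compute spending is 20x wages
```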