Double Crux

post by CFAR!Duncan (CFAR 2017) · 2022-07-24T06:34:15.305Z · 9 comments

Contents

- Interlude: The Good Faith Principle
- Identifying cruxes
- In Search of More Productive Disagreement
- Playing Double Crux
- The Double Crux algorithm
- Double Crux—Further Resources

Author's note: Duncan Sabien also wrote a preexisting standalone essay on double crux, available on LessWrong. The version in the handbook is similar, but differs enough that it seemed worth including it rather than merely adding the standalone post to the sequence.

Missing from this sequence is a writeup of "Finding Cruxes," a CFAR class developed primarily by instructor Eli Tyre as a prerequisite to Double Crux, giving models and concrete suggestions on how to more effectively introspect into one's own belief structure. At the time of this post, no writeup existed; if one appears in the future, it will be added.

Epistemic status: Preliminary/tentative

The concepts underlying the Double Crux technique (such as Aumann’s Agreement Theorem and psychological defensiveness) are well-understood, but generally limited in scope. Our attempt to expand them to cover disagreements of all kinds is based on informal theories of social interactions and has met with some preliminary success, but is still being iterated and has yet to receive formal study.

There are many ways to categorize disagreements. We can organize them by content—religion, politics, relationships, work. We can divide them into questions of opinion and matters of fact. We can talk about disagreements that “matter” (in which we try very hard to convince others of our view) versus those where we can calmly agree to disagree.

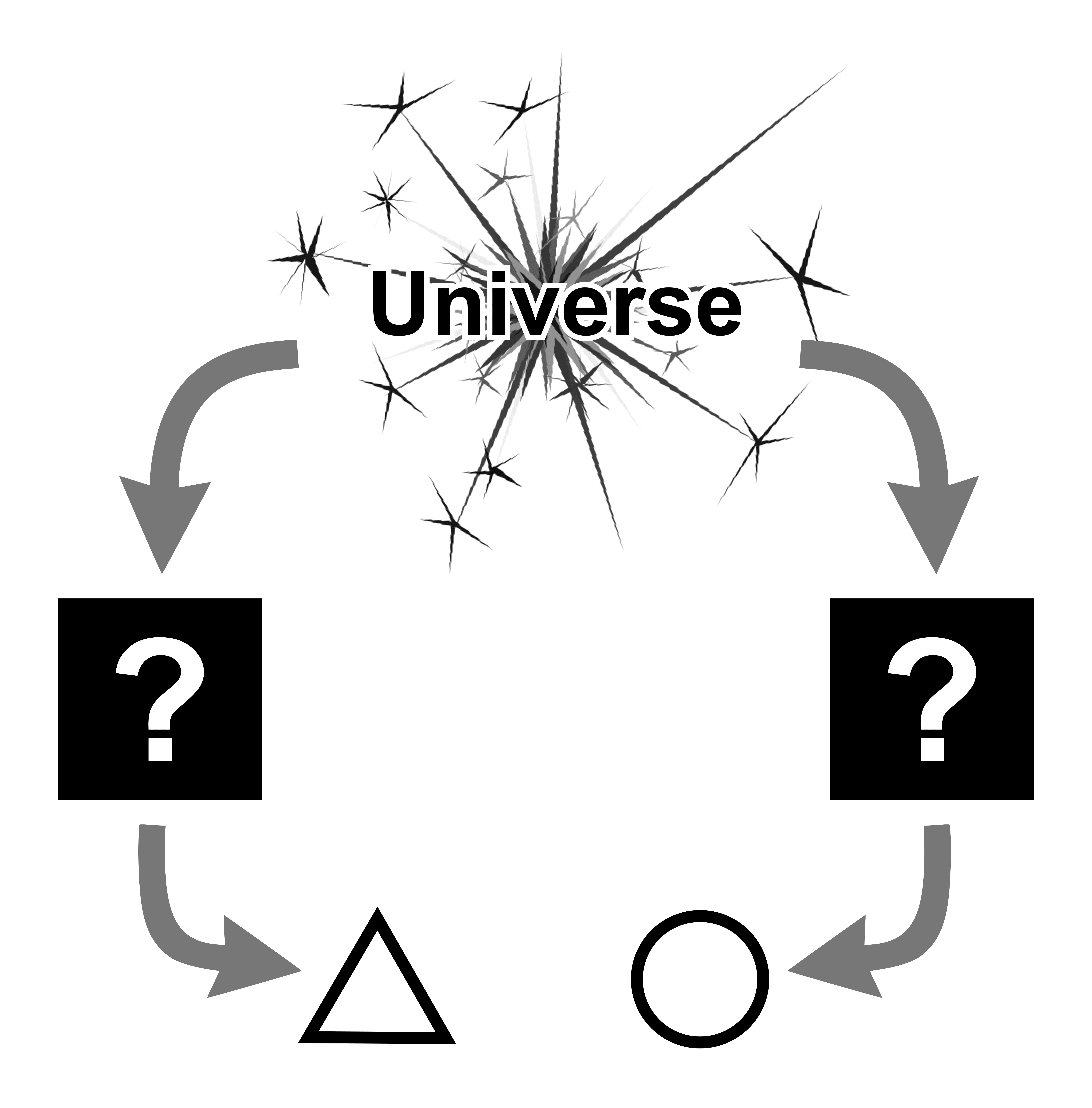

In most cases, disagreements revolve around the outputs of models. By that we mean that each person in a disagreement has 1) a certain set of base assumptions about the world, 2) a certain toolkit of analyses, algorithms, and perspectives that they bring to bear on those assumptions, and 3) a number of conclusions that emerge from the combination of the two. Every person is essentially a black box which takes in sense data from the universe, does some kind of processing on that sense data, and then forms beliefs and takes actions as a result.

This is important, because often disagreements—especially the ones that feel frustrating or unproductive—focus solely on outputs. Alice says “We need to put more resources into space exploration,” and Bob says “That’d be a decadent waste,” and the conversation stops moving forward because the issue has now become binary, atomic, black-and-white—it’s now about everyone’s stance on space, rather than being about improving everyone’s mutual understanding of the shape of the world.

The double crux perspective claims that the “space or not space” question isn’t particularly interesting, and that a better target for the conversation might be something like “what would we have to know about the universe to confidently answer the ‘space or not space’ question?” In other words, rather than seeing the disagreement as a clash of outputs, double crux encourages us to see it as a clash of models.

(And their underpinnings.)

Alice has a certain set of beliefs about the universe which have led her to conclude “space!” Bob has a set of beliefs that have caused him to conclude “not space!” If we assume that both Alice and Bob are moral and intelligent people who are capable of mature reasoning, then it follows that they must have different underlying beliefs—otherwise, they would already agree. They each see a different slice of reality, which means that they each have at least the potential to learn something new by investigating the other’s perspective.
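To make the "outputs of models" framing concrete, here is a minimal Python sketch. It assumes, purely for illustration, that Alice and Bob share the same toolkit (a crude cost-benefit rule) and differ only in their base assumptions; the names `Reasoner`, `cost_benefit`, `differing_assumptions`, and the numbers are hypothetical, not anything CFAR specifies. Comparing the outputs tells you only that they disagree; comparing the inputs tells you where.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical toolkit: fund it if the expected benefit outweighs the cost.
def cost_benefit(assumptions: dict[str, float]) -> str:
    if assumptions["expected_benefit"] > assumptions["cost"]:
        return "space!"
    return "not space!"

@dataclass
class Reasoner:
    """A person as a black box: base assumptions run through a toolkit."""
    name: str
    assumptions: dict[str, float]
    toolkit: Callable[[dict[str, float]], str]

    def conclude(self) -> str:
        return self.toolkit(self.assumptions)

def differing_assumptions(a: Reasoner, b: Reasoner) -> list[str]:
    """Where the models differ, which is more useful than where the outputs differ."""
    keys = a.assumptions.keys() | b.assumptions.keys()
    return sorted(k for k in keys if a.assumptions.get(k) != b.assumptions.get(k))

alice = Reasoner("Alice", {"expected_benefit": 10.0, "cost": 4.0}, cost_benefit)
bob = Reasoner("Bob", {"expected_benefit": 2.0, "cost": 4.0}, cost_benefit)

print(alice.conclude(), bob.conclude())    # space! not space!
print(differing_assumptions(alice, bob))   # ['expected_benefit']
```

In a real disagreement the toolkits can differ too, and neither the assumptions nor the toolkit is written down anywhere; much of the work of double crux is surfacing them.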

Of course, the potential to learn something new doesn’t always mean you want to. There are any number of reasons why double crux might be the wrong tool for a given interaction—you may be under time pressure, the issue may not be important, your dynamic with this person might be strained, etc. The key is to recognize that this deeper perspective is a tool in your toolkit, not to require yourself to use it for everything.

Interlude: The Good Faith Principle

About that whole “both Alice and Bob are moral and intelligent people” thing ... isn’t it sometimes the case that the person on the other side of the debate isn’t moral or intelligent? I mean, if we walk around assuming that others are always acting in good faith, aren’t we exposing ourselves to the risk of being taken advantage of, or of wasting a lot of time charitably trying to change minds that were never willing to change in the first place?

Yes—sort of. The Good Faith Principle states that, in any interaction (absent clear and specific evidence to the contrary), one should assume that all agents are acting in good faith—that all of them have positive motives and are seeking to make the world a better place. And yes, this will be provably wrong some fraction of the time, which means that you may be tempted to abandon it preemptively in cases where your opponent is clearly blind, stupid, or evil. But such judgements are uncertain, and vulnerable to all sorts of biases and flaws-of-reasoning, which raises the question of whether one should err on the side of caution, or charity.

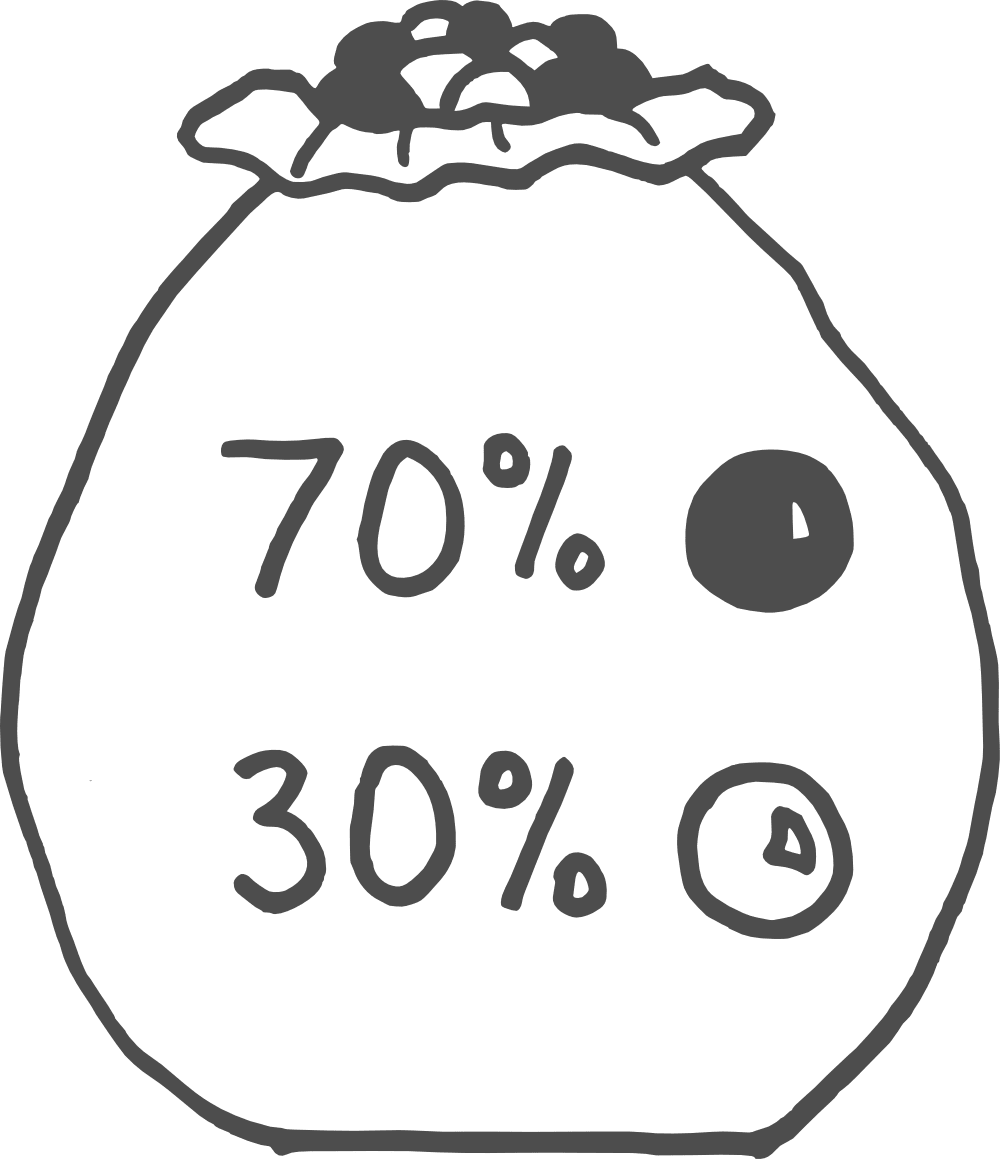

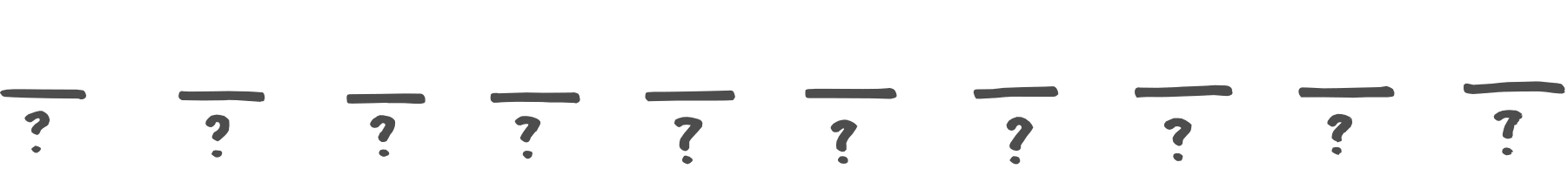

Consider the following metaphor, which seeks to justify the GFP. I have a bag of marbles, of which 70% are black and 30% are white (for the sake of argument, imagine there is a very large number of marbles, such that we don’t have to worry about the proportions meaningfully changing over the course of the thought experiment).

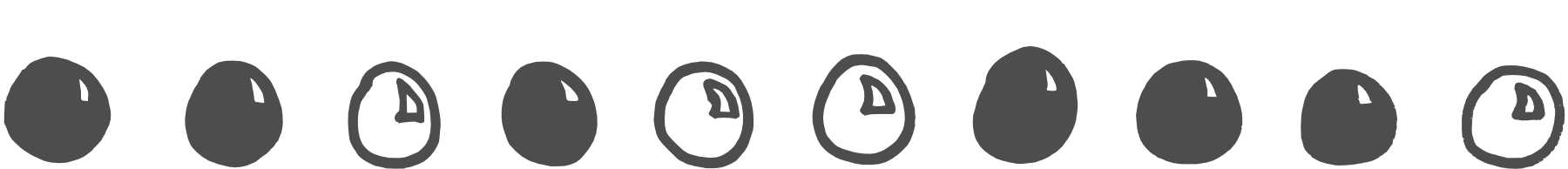

I draw ten marbles out of the bag as a demonstration, in the following order:

BBWBWWBBBW

Broadly speaking, this result makes sense. There are four white marbles instead of the three we would ideally expect, but that’s well within variance—the pattern BBWBWWBBBW is certainly consistent with a 70/30 split.

Now imagine that I ask you to predict the next ten marbles, in order. What sort of prediction do you make?

Many people come up with something along the lines of BWBBBWBBBW. This makes intuitive, emotional sense, because it looks like a 70/30 split, similar to the example above. However, it’s a mistake, as one can clearly tell by treating each separate prediction individually. Your best prediction for the first marble out of the bag is B, as is your best prediction for the second, and the third, and the fourth ...

There’s something squidgy about registering the prediction BBBBB.... It feels wrong to many people, in part because we know that there ought to be some Ws in there. For a lot of us, there’s an urge to try “sprinkling in” a few.

But each individual time we predict W, we reduce our chances of being right from 70% to 30%. By guessing all Bs, we minimize how often we expect to be wrong: over a large enough number of predictions, we’ll get about 30% of them wrong. If we mix and match instead, there’s no guarantee we’ll put the Ws in the right places; sprinkling in Ws at a 30% rate means we should expect to be wrong about 42% of the time (0.7 × 0.3 + 0.3 × 0.7).
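The arithmetic is easy to check. The sketch below (Python, assuming independent draws from the 70/30 bag) computes the expected number of correct guesses for the two ten-marble predictions discussed above and confirms it with a quick simulation; the function names are illustrative.

```python
import random

P_BLACK = 0.7  # fraction of black marbles in the bag

def expected_hits(prediction: str, p_black: float = P_BLACK) -> float:
    """Expected correct guesses, assuming each draw is independent.

    A "B" guess is right with probability p_black; a "W" guess with 1 - p_black.
    """
    return sum(p_black if guess == "B" else 1 - p_black for guess in prediction)

def simulated_hits(prediction: str, trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo check: average matches against freshly drawn marbles."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        draws = ["B" if rng.random() < P_BLACK else "W" for _ in prediction]
        total += sum(guess == draw for guess, draw in zip(prediction, draws))
    return total / trials

for prediction in ["BBBBBBBBBB", "BWBBBWBBBW"]:
    print(prediction, round(expected_hits(prediction), 2), round(simulated_hits(prediction), 2))
```

All Bs expects 7.0 correct guesses out of ten; the sequence that "looks like" a 70/30 split expects only 5.8 (7 × 0.7 + 3 × 0.3). The simulated averages should land close to those values.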

What does any of this have to do with disagreements and Double Crux?

Essentially, it’s a reminder that even in a system containing some bad actors, we minimize our chances of misjudging people if we start from the assumption that everyone is acting in good faith. Most people are mostly good, after all—people don’t work by magic; they come to their conclusions because of causal processes that they observe in the world around them. When someone appears to be arguing for a position that sounds blind, stupid, or evil, it’s much more likely that the problem lies somewhere in the mismatch between their experiences and beliefs and yours than in actual blindness, stupidity, or malice.

By adopting the GFP as a general rule, you’re essentially guessing B for every marble—you’ll absolutely be wrong from time to time, but you’ll both be leaving yourself maximally open to gaining new knowledge or perspective, and also limiting your misjudgments of people to the bare minimum (with those misjudgments leaning toward giving people the benefit of the doubt).

Identifying cruxes

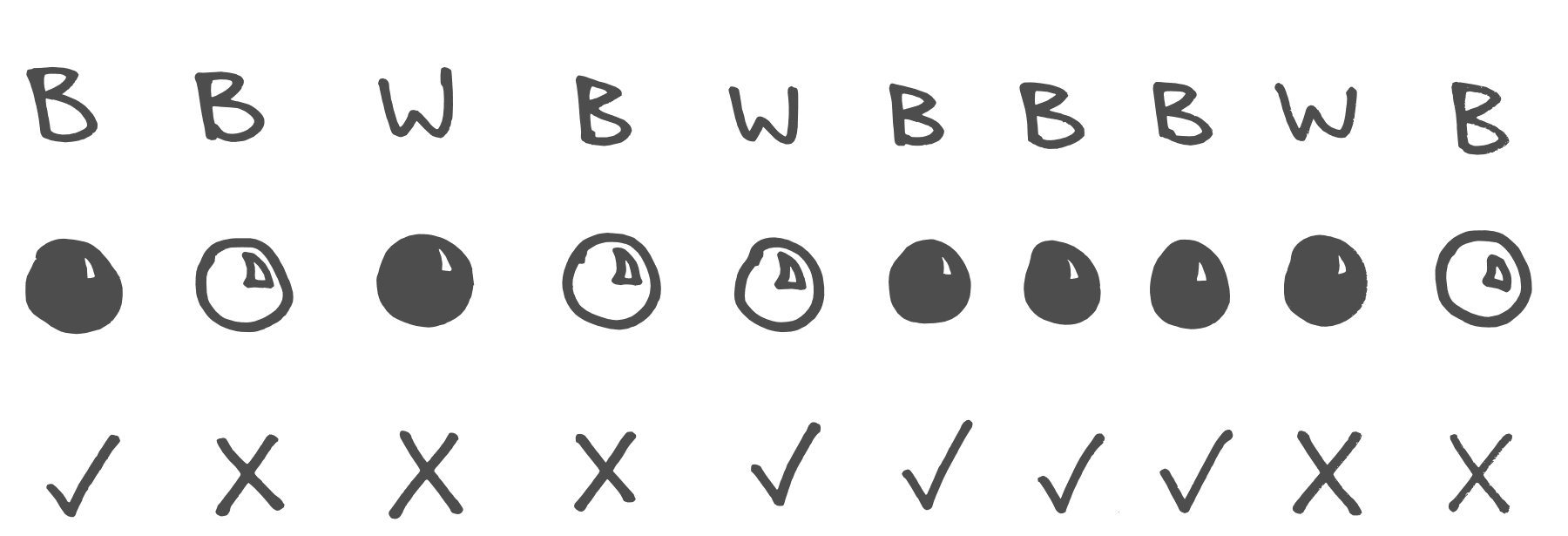

For any given belief, there is likely to be at least one crux—an underlying, justifying belief that supports and upholds the overall conclusion. People don’t simply believe in health care reform or free market capitalism or the superiority of Jif to other brands of peanut butter—they believe those things because they have deeper assumptions or principles about what is right or good or true. For example:

- Some socially liberal activists argue for tighter gun control laws because they believe that gun violence is a high priority for anyone wishing to save lives, that gun control laws tend to reduce gun violence and gun deaths, and that those laws will not curtail guaranteed rights and freedom in any meaningful or concerning way.

- Some socially conservative activists argue for a reduction in the size of government because they believe that government programs tend to be inefficient and wasteful, that those programs incorrectly distribute the burden of social improvement, and that local institutions like credit unions, churches, and small businesses can more accurately address the needs of the people.

- Some mental health professionals and spiritual leaders argue for principles of forgiveness and reconciliation (even in extreme cases) because they believe that grudges and bitterness have negative effects on the people holding them, that reconciliation tends to prevent future problems or escalation, and that fully processing the emotions resulting from trauma or conflict can spur meaningful personal growth.

None of these examples are exhaustive or fully representative of the kinds of people who hold these beliefs or why they hold them, but hopefully the basic pattern is clear—given belief A, there usually exist beliefs B, C, and D which justify and support A.

In particular, among beliefs B, C, and D are things with the potential to change belief A. If I think that schools should have dress codes because dress codes reduce distraction and productively weaken socioeconomic stratification, and you can show clear evidence that neither of those effects actually occurs, then I may well cease to believe that dress codes are a positive intervention (or else discover that I had other unstated reasons for my belief!). By identifying the crux of the issue, we’ve changed the conversation from one about surface conclusions to one about functional models of how the world works, and functional models of how the world works can be directly addressed with research and experimentation.

You might find it difficult to assemble facts to answer the question “Should schools have dress codes?” because I might have any number of different reasons for my stance. But once you know why I believe what I believe, you can instead look into “Do dress codes affect student focus?” and “Do dress codes promote equal social treatment between students?” which are clearer, more objective questions that are more likely to have a definitive answer.
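One way to make "crux" precise is to treat a belief as a function of its supporting beliefs and ask which supports, if reversed, would flip the conclusion. The Python sketch below does this by brute force for a toy, all-or-nothing model of the dress-code example; real beliefs are probabilistic and rarely this tidy, and `find_cruxes` and the belief names are hypothetical. It also shows why "neither of those effects" matters: if either effect alone would justify dress codes, no single support is a crux, only the pair is.

```python
from itertools import combinations
from typing import Callable, Mapping

Rule = Callable[[Mapping[str, bool]], bool]

def find_cruxes(rule: Rule, supports: Mapping[str, bool]) -> list[frozenset[str]]:
    """Minimal sets of supporting beliefs whose reversal would flip the conclusion."""
    conclusion = rule(supports)
    names = list(supports)
    cruxes: list[frozenset[str]] = []
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            if any(crux <= set(combo) for crux in cruxes):
                continue  # a smaller crux already covers this combination
            reversed_view = {**supports, **{n: not supports[n] for n in combo}}
            if rule(reversed_view) != conclusion:
                cruxes.append(frozenset(combo))
    return cruxes

supports = {"reduces_distraction": True, "weakens_stratification": True}

# Either effect alone would justify dress codes ("disjunctive" support).
def either(s: Mapping[str, bool]) -> bool:
    return s["reduces_distraction"] or s["weakens_stratification"]

# Both effects are required ("conjunctive" support).
def both(s: Mapping[str, bool]) -> bool:
    return s["reduces_distraction"] and s["weakens_stratification"]

print(find_cruxes(either, supports))  # one crux: the pair of supports together
print(find_cruxes(both, supports))    # two cruxes: each support on its own
```

Brute force is exponential in the number of supports, which is tolerable for the handful of reasons a person can usually articulate.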

In Search of More Productive Disagreement

Typically, when two people argue, they aren't trying to find truth—they're trying to win.

If you're trying to win, you're incentivized to obscure the underlying structure of your beliefs. You don't want your opponent to know where to focus their energy, since that would let them defeat you more efficiently. You might, for instance, bury your true crux in a list of eleven supporting points, hoping (whether consciously or not) that they'll waste a lot of time overturning the irrelevant ones.

Meanwhile, you're lobbing your best arguments like catapult loads over at their tower-of-justifications, hoping to knock out a crucial support.

This process is wasteful, and slow! You're spending the bulk of your processing power on modeling their argument, while they try to prevent you from doing so.

Sometimes, this is the best you can manage. Sometimes you do indeed need to play to win.

But if you notice that this is not actually the case—if you realize that the person you're talking to is actually reasonable, and reasonably willing to cooperate with you, and that you just slid into an adversarial mode through sheer reflex—you can both recoup a tremendous amount of mental resources by simply changing the game.

After all, you have a huge advantage at knowing the structure of your own beliefs, as they do at knowing theirs! If you're both actually interested in getting to the truth of the matter, you can save time and energy by just telling each other where to focus attention.

Playing Double Crux

The actual victory condition for double crux isn’t the complete resolution of the argument (although that can and does happen). It’s agreement on a shared causal model of the world—in essence, you’ve won when both you and your partner agree to the same if-then statements.

(e.g. you both agree that if uniforms prevent bullying, then schools should have them, and if they do not, then schools should not.)

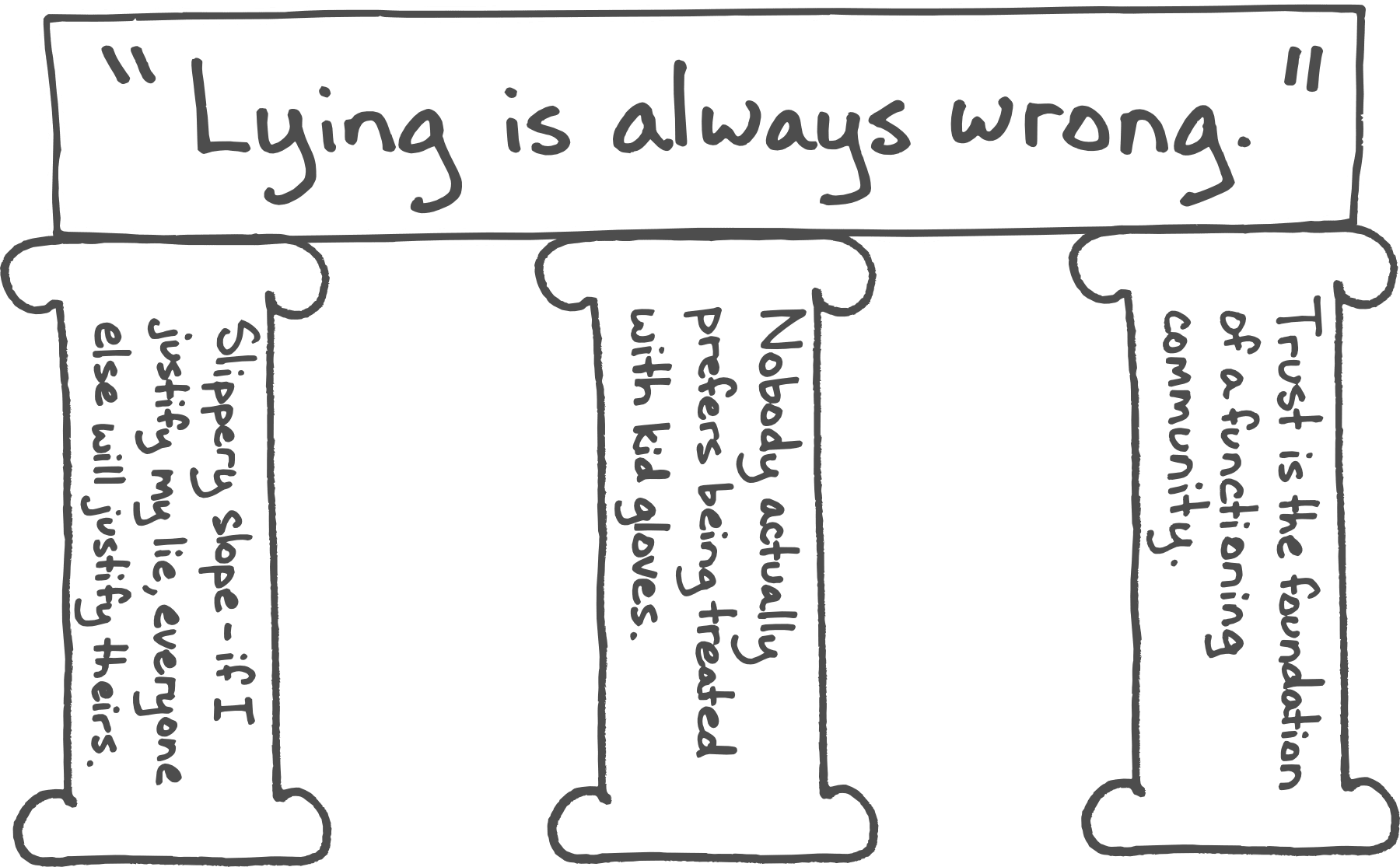

To get there, you and your partner need to find a double crux: a belief or statement that is a crux for you and for your partner. More formally, if you and your partner start by disagreeing about the truth of statement A, then you’re looking for a statement B about which you also disagree, and whose resolution has the potential to change each of your minds about A.

This isn’t an easy or trivial task—this is the whole process. When you engage in double crux with a partner, you’re attempting to compare models and background beliefs instead of conclusions and surface beliefs. You’re looking for the why of your disagreement, and for places where either of you can potentially learn from the other (or from the world/research/experiments).
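The formal definition above, together with the victory condition of agreeing on the same if-then statements, can be written down for the simplest all-or-nothing case. This Python sketch is an illustration under that simplifying assumption, not a CFAR tool; `Stance`, `is_double_crux`, and `share_model` are hypothetical names, applied to the uniforms example (A: schools should have uniforms; B: uniforms prevent bullying).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stance:
    """One person's view of claim B and of what B would imply for claim A."""
    believes_b: bool
    a_if_b: bool      # what they would conclude about A if B turned out true
    a_if_not_b: bool  # ... and if B turned out false

    @property
    def believes_a(self) -> bool:
        return self.a_if_b if self.believes_b else self.a_if_not_b

    @property
    def b_is_crux(self) -> bool:
        # B is a crux if settling it one way or the other would change their view of A.
        return self.a_if_b != self.a_if_not_b

def is_double_crux(me: Stance, partner: Stance) -> bool:
    """Disagree about A, disagree about B, and B is a crux for both people."""
    return (
        me.believes_a != partner.believes_a
        and me.believes_b != partner.believes_b
        and me.b_is_crux
        and partner.b_is_crux
    )

def share_model(me: Stance, partner: Stance) -> bool:
    """Both people endorse the same if-then statements linking B to A."""
    return (me.a_if_b, me.a_if_not_b) == (partner.a_if_b, partner.a_if_not_b)

# A: "schools should have uniforms"; B: "uniforms prevent bullying".
alice = Stance(believes_b=True, a_if_b=True, a_if_not_b=False)
bob = Stance(believes_b=False, a_if_b=True, a_if_not_b=False)
carol = Stance(believes_b=False, a_if_b=False, a_if_not_b=False)  # opposes uniforms regardless

print(is_double_crux(alice, bob), share_model(alice, bob))  # True True
print(is_double_crux(alice, carol))                          # False
```

Alice and Bob still disagree about B, but they already share a model, so they know exactly what to go and check. Carol disagrees with Alice about both A and B, yet B is not a crux for her, so B is not a double crux for that pair.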

The Double Crux algorithm

1. Find a disagreement with another person

- A case where you believe one thing and they believe the other

- A case where you and the other person have different confidences (e.g. you think X is 60% likely to be true, and they think it’s 90%)

2. Operationalize the disagreement

- Define terms to avoid getting lost in semantic confusions that miss the real point

- Find specific test cases: rather than (e.g.) discussing whether you should be more outgoing, evaluate whether you should have said hello to Steve in the office yesterday morning

- Wherever possible, try to think in terms of actions rather than beliefs—it’s easier to evaluate arguments like “we should do X before Y” than it is to converge on “X is better than Y.”

3. Seek double cruxes

- Seek your own cruxes independently, and compare with those of the other person to find overlap

- Seek cruxes collaboratively, by making claims (“I believe that X will happen because Y”) and focusing on falsifiability (“It would take A, B, or C to make me stop believing X”)

4. Resonate

- Spend time “inhabiting” both sides of the double crux, to confirm that you’ve found the core of the disagreement

- Imagine the resolution as an if-then statement, and use your inner sim and other checks to see if there are any unspoken hesitations about the truth of that statement

5. Repeat!
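The first option in step 3, brainstorming cruxes independently and then comparing lists, is mechanical enough to sketch. The Python below assumes each person writes down candidate cruxes with a probability, and flags shared statements whose probabilities differ by at least a threshold (an arbitrary, hypothetical `min_gap` of 0.25), which also covers the case from step 1 where you differ in confidence rather than in direction. Matching statements by exact wording is a big simplification; in practice, operationalizing is where you discover that two differently worded cruxes are the same claim.

```python
def compare_crux_lists(
    mine: dict[str, float], theirs: dict[str, float], min_gap: float = 0.25
) -> tuple[list[str], list[str]]:
    """Split statements on both lists into candidate double cruxes and common ground."""
    shared = mine.keys() & theirs.keys()
    disputed = sorted(s for s in shared if abs(mine[s] - theirs[s]) >= min_gap)
    common_ground = sorted(s for s in shared if abs(mine[s] - theirs[s]) < min_gap)
    return disputed, common_ground

# Hypothetical lists: each person's probability that the statement is true.
mine = {
    "uniforms prevent bullying": 0.9,
    "uniforms are affordable for most families": 0.8,
    "students focus better in uniform": 0.7,
}
theirs = {
    "uniforms prevent bullying": 0.2,
    "uniforms are affordable for most families": 0.7,
    "uniforms suppress self-expression": 0.8,
}

disputed, common_ground = compare_crux_lists(mine, theirs)
print("candidate double cruxes:", disputed)
print("common ground:", common_ground)
```

Statements that appear on only one list are still worth discussing; they just are not double cruxes yet.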

This process often benefits from pencil and paper, and from allotting plenty of time to hash things out with your partner. Sometimes it helps to independently brainstorm all of the cruxes you can think of for your belief, and then compare lists, looking for overlap. Sometimes, it helps to talk through the process together, with each of you getting a feel for which aspects of the argument seem crucial to the other (note that crucial and crux share the same etymological root). Sometimes, exploring exactly what each of you means by the words you’re using causes the disagreement to evaporate, and sometimes it causes you to recognize that you disagree about something else entirely.

(In the book Superforecasting, Philip Tetlock relates a story from Sherman Kent, a member of the CIA in the 50s, who discovered that analysts on his team who had all agreed to use the phrase “serious possibility” in a particular report in fact had different numbers in mind, ranging from 20% likely to 80% likely—exact opposite probabilities!)

Often, a statement B which seems to be a productive double crux will turn out to need further exploration, and you’ll want to go another round, finding a C or a D or an E before you reach something that feels like the core of the issue. The key is to cultivate a sense of curiosity and respect—when you choose to double crux with someone, you’re not striving against them; instead, you’re both standing off to one side of the issue, looking at it together, discussing the parts you both see, and sharing the parts that each of you has unique perspective on. In the best of all possible worlds, you and your partner will arrive at some checkable fact or runnable experiment, and end up with the same posterior belief.

But even if you don’t make it that far—even if you still end up disagreeing about probabilities or magnitudes, or you definitively check statement B and one of you discovers that your belief in A didn’t shift, or you don’t even manage to narrow it down to a double crux but run out of time while you’re still exploring the issue—you’ve made progress simply by refusing to stop at “agree to disagree.” You won’t always fully resolve a difference in worldview, but you’ll understand one another better, hone a habit-of-mind that promotes discourse and cooperation, and remind yourself that beliefs are for true things and thus—sometimes—minds are for changing.
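Why a shared if-then model matters for the best case described above, where a checkable fact or runnable experiment leads both partners to the same posterior: if two people agree on what an experiment would show under each hypothesis, evidence tends to pull their beliefs together even when they start far apart. The Python sketch below is a toy illustration, not something from the handbook; the priors (0.9 and 0.2) and the likelihoods (a hypothetical study that finds no reduction in bullying with probability 0.2 if uniforms prevent bullying and 0.7 if they do not) are made up.

```python
def bayes_update(prior: float, p_obs_if_true: float, p_obs_if_false: float) -> float:
    """Posterior probability of a binary hypothesis after one observation."""
    numerator = prior * p_obs_if_true
    return numerator / (numerator + (1 - prior) * p_obs_if_false)

# Shared model: chance that a study finds *no* reduction in bullying.
P_NO_EFFECT_IF_TRUE = 0.2   # if uniforms really do prevent bullying
P_NO_EFFECT_IF_FALSE = 0.7  # if they do not

alice, bob = 0.9, 0.2  # different priors that uniforms prevent bullying
gaps = [abs(alice - bob)]
for study in range(1, 6):  # five studies in a row find no effect
    alice = bayes_update(alice, P_NO_EFFECT_IF_TRUE, P_NO_EFFECT_IF_FALSE)
    bob = bayes_update(bob, P_NO_EFFECT_IF_TRUE, P_NO_EFFECT_IF_FALSE)
    gaps.append(abs(alice - bob))
    print(f"after study {study}: Alice {alice:.3f}, Bob {bob:.3f}")
```

After five studies the gap shrinks from 0.70 to under 0.02. Had they disagreed about the likelihoods themselves, that is, about the if-then statements, the same evidence could leave them apart or even push them further apart.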

Double Crux—Further Resources

If two people have access to the same information and the same lines of reasoning, then (with certain idealized assumptions) they may be expected to reach identical conclusions. Thus, a disagreement is a sign that the two of them are reasoning differently, or that one person has information which the other does not. Aumann’s Agreement Theorem formalizes a version of this claim: two Bayesian reasoners who start from a common prior, and whose posterior probabilities for an event are common knowledge between them, must have equal posteriors. In other words, under those idealized assumptions they cannot “agree to disagree.”

https://en.wikipedia.org/wiki/Aumann%27s_agreement_theorem
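For readers who want to see those idealized assumptions at work, here is a small Python sketch of the back-and-forth announcement process studied by Geanakoplos and Polemarchakis (1982), a standard companion to Aumann's result. It assumes a finite set of possible states, a common prior, private information modeled as partitions of the states, and that each agent's announcement rule is common knowledge. The two agents take turns announcing their probability for an event and refine their information by what each announcement rules out. The particular states, event, and partitions are an arbitrary toy example.

```python
from fractions import Fraction

State = int
Partition = list[frozenset[State]]

def posterior(event: set[State], cell: frozenset[State], prior: dict[State, Fraction]) -> Fraction:
    """P(event | cell) under the common prior."""
    mass = sum(prior[w] for w in cell)
    return sum((prior[w] for w in cell if w in event), Fraction(0)) / mass

def announcements(partition: Partition, event: set[State], prior: dict[State, Fraction]) -> dict[State, Fraction]:
    """What the agent would announce in each state (this rule is common knowledge)."""
    return {w: posterior(event, cell, prior) for cell in partition for w in cell}

def refine(partition: Partition, signal: dict[State, Fraction]) -> Partition:
    """Split each cell according to what the other agent would have announced."""
    refined: Partition = []
    for cell in partition:
        groups: dict[Fraction, set[State]] = {}
        for w in cell:
            groups.setdefault(signal[w], set()).add(w)
        refined.extend(frozenset(g) for g in groups.values())
    return refined

def dialogue(prior, event, part1: Partition, part2: Partition, true_state: State):
    """Alternate announcements until neither agent learns anything new."""
    history = []
    while True:
        q1 = announcements(part1, event, prior)      # agent 1 speaks
        new_part2 = refine(part2, q1)                # agent 2 learns from it
        q2 = announcements(new_part2, event, prior)  # agent 2 replies
        new_part1 = refine(part1, q2)                # agent 1 learns from the reply
        history.append((q1[true_state], q2[true_state]))
        if len(new_part1) == len(part1) and len(new_part2) == len(part2):
            return history  # fixed point: the announced posteriors now agree
        part1, part2 = new_part1, new_part2

states = range(1, 10)
prior = {w: Fraction(1, 9) for w in states}
event = {3, 4}
agent1 = [frozenset({1, 2, 3}), frozenset({4, 5, 6}), frozenset({7, 8, 9})]
agent2 = [frozenset({1, 2, 3, 4}), frozenset({5, 6, 7, 8}), frozenset({9})]

for p1, p2 in dialogue(prior, event, agent1, agent2, true_state=1):
    print(f"agent 1 says {p1}, agent 2 says {p2}")
```

Here agent 1 starts at 1/3 and agent 2 at 1/2. Agent 2's first answer tells agent 1 nothing new in the true state, but it changes what agent 1 would say if the state were 4; agent 2, hearing agent 1 repeat 1/3 rather than 1, can then rule out state 4 and moves to 1/3. Real people are not ideal Bayesians with a common prior, so this is a picture of the idealized case only.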

A leading theory of defensiveness in the field of social psychology is that people become defensive when they perceive something (such as criticism or disagreement) as a challenge to their identity as a basically capable, virtuous, socially-respected person. Sherman and Cohen (2002) review the research on psychological defensiveness, including how defensiveness interferes with reasoning and ways of countering defensiveness.

Sherman, D. K., & Cohen, G. L. (2002). Accepting threatening information: Self-affirmation and the reduction of defensive biases. Current Directions in Psychological Science, 11, 119-123. http://goo.gl/JD5lW

People tend to be more open to information inconsistent with their existing beliefs when they are in a frame of mind where it seems like a success to be able to think objectively and update on evidence, rather than a frame of mind where it is a success to be a strong defender of one’s existing stance.

Cohen, G. L., Sherman, D. K., Bastardi, A., McGoey, M., Hsu, A., & Ross, L. (2007). Bridging the partisan divide: Self-affirmation reduces ideological closed-mindedness and inflexibility in negotiation. Journal of Personality and Social Psychology, 93, 415-430. http://goo.gl/ibpGf

People have a tendency towards “naive realism,” in which one’s own interpretation of the world is seen as reality and people who disagree are seen as ill-informed, biased, or otherwise irrational.

Ross, L., & Ward, A. (1996). Naive realism in everyday life: Implications for social conflict and misunderstanding. In T. Brown, E. S. Reed & E. Turiel (Eds.), Values and knowledge (pp. 103-135). Hillsdale, NJ: Erlbaum. http://goo.gl/R23UX

9 comments


comment by tailcalled · 2022-07-24T20:46:46.721Z

I think this is useful, but also it seems to me that it only really works for hyperindividualistic contexts that are not based on trust in the wider culture. A culture may come to a consensus that is not explicitly justified, and one might trust the culture and adopt its consensus.

comment by Information Project (information-project) · 2023-07-19T14:29:06.018Z

A little criticism. There is a problem: very little is said about external sources of knowledge.

In the chapter "Playing Double Crux", the reader's attention is focused on the beliefs of the opponents (find the belief "B" on which the truth of "A" depends). Before that, it was said that you don't need to hide your own belief structure. And in general, throughout the text, a lot of attention is focused on the beliefs that "exist in the head" of the participants and only need to be extracted from there (...and if you're lucky, it will be the desired judgment "B", which both participants will call the "root of the disagreement" and will be able to verify in practice).

Only at the end of the chapter, a few words are written: "..potentially learn from the other (or from the world/research/experiments)".

Now I will describe the negative consequences that this focus has led to in my experience with several rationalists who practice D.C.:

We discussed a topic that is close in meaning to evaluating the actions of a surgeon in a hospital who is operating on a patient. Our disagreement: is the surgeon cutting correctly? (Note: the participants do not know medical science.)

It seems that it would be rational to study books on surgery and medicine, take a university course, or at least watch a video on YouTube. But since this methodology did not pay enough attention to the need to deepen their knowledge of the real world (surgery), the participants unsuccessfully tried to "reinvent the wheel" and find testable beliefs "B" somewhere on the surface, among their existing knowledge. It's very much like a "cargo cult", where a tribe tries to build an airplane out of sticks using only their own knowledge, with no intention of reading an aerodynamics (or surgery) textbook.

CONCLUSION: I am sure it would be helpful if the authors and readers took this point into account and put more focus on studying the real world: look for the argument "B" in books and from specialists, rather than reinventing the wheel in the course of the dispute.

comment by Leon Lang (leon-lang) · 2023-02-09T19:52:08.114Z

Summary

- Disagreements often focus on outputs even though underlying models produced those.

- Double Crux idea: focus on the models!

- Double Crux tries to reveal the different underlying beliefs coming from different perspectives on reality

- Good Faith Principle:

  - Assume that the other side is moral and intelligent.

  - Even if some actors are bad, you minimize the chance of error if you start with the prior that each new person is acting in good faith

- Identifying Cruxes

  - For every belief A, there are usually beliefs B, C, D such that their believed truth supports belief A

    - These are “cruxes” if them not being true would shake the belief in A.

    - Ideally, B, C, and D are functional models of how the world works and can be empirically investigated

    - If you know your crux(es), investigating it has the chance to change your belief in A

- In Search of more productive disagreement

  - Often, people obscure their cruxes by telling many supporting reasons, most of which aren’t their true crux.

    - This makes it hard for the “opponent” to know where to focus

    - If both parties search for truth instead of wanting to win, you can speed up the process a lot by telling each other the cruxes

- Playing Double Crux

  - Lower the bar: instead of reaching a shared belief, find a shared testable claim that, if investigated, would resolve the disagreement.

  - Double Crux: A belief that is a crux for you and your conversation partner, i.e.:

    - You believe A, the partner believes not A.

    - You believe testable claim B, the partner believes not B.

    - B is a crux of your belief in A and not B is a crux of your partner’s belief in not A.

  - Investigating conclusively whether B is true may resolve the disagreement (if the cruxes were comprehensive enough)

- The Double Crux Algorithm

  - Find a disagreement with another person (This might also be about different confidences in beliefs)

  - Operationalize the disagreement (Avoid semantic confusions, be specific)

  - Seek double cruxes (Seek cruxes independently and then compare)

  - Resonate (Do the cruxes really feel crucial? Think of what would change if you believed your crux to be false)

  - Repeat (Are there underlying easier-to-test cruxes for the double cruxes themselves?)

comment by gjm · 2022-07-24T21:10:40.512Z

This presentation of the technique does better than (my possibly bad recollection of) the last time I saw it explained in one important respect: acknowledging that in any given case there very well might not be a double-crux to find. I'd be happier if it were made more explicit that this is a thing that happens and that when it does it doesn't mean "you need to double-crux harder", it may simply be that the structure of your beliefs, or of the other person's, or of both together, doesn't enable your disagreement to be resolved or substantially clarified using this particular technique.

(if empirically it usually turns out in some class of circumstances that double-crux works, then I think this is a surprising and interesting finding, and any presentation of the technique should mention this and maybe offer explanations for why it might be. My guess is that (super-handwavily) say half the time a given top-level belief's support is more "disjunctive" than "conjunctive", so that no single one of the things that support it would definitely kill it if removed, and that typically if my support for belief X is more "conjunctive" then your support for belief not-X is likely to be more "disjunctive". And you only get a double-crux when our beliefs both have "conjunctive" support (and when, furthermore, there are bits in the two supports that match in the right way).)

Nitpicky remark: Of course in a rather degenerate sense any two people with a disagreement have a double-crux: if I think A and you think not-A, then A/not-A themselves are a double-crux: I would change my mind about A if I were shown to be wrong about A, and you would change your mind about not-A if you were shown to be wrong about not-A :-). Obviously making this observation is not going to do anything to help us resolve or clarify our disagreement. So I take it the aim is to find genuinely lower-level beliefs, in some sense, that have the double-crux relationship.

Further nitpicky-remark: even if someone's belief that A is founded on hundreds of things B1, B2, ... none of which is close to being cruxy, you can likely synthesize a crux by e.g. combining most of the Bs. This feels only slightly less cheaty than taking A itself and calling it a crux. Again, finding things of this sort probably isn't going to help us resolve or clarify our disagreement. So I take it the aim is to find reasonably simple genuinely lower-level beliefs that have the double-crux relationship. And that's what I would expect can often not be done, even in principle.

(I have a feeling I may have had a discussion like this with someone on LW before, but after a bit of searching I haven't found it. If it turns out that I have and my comments above have already been refuted by someone else, my apologies for the waste of time :-).)

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-07-25T01:00:00.531Z

The claim of CFAR (based on instructors' experience in practice) is that a single B represents something like 60-90% of the crux-weight underlying A "a surprising amount of the time."

Like, I imagine that most people would guess there is a single crucial support maybe 5% of the time and the other 95% of the time there are a bunch of things, none of which are an order of magnitude more important than any other.

But it seems like there really is "a crux" maybe ... a third of the time?

Here I'm wishing again that we could've gotten an Eli Tyre writeup of Finding Cruxes at some point.

↑ comment by Said Achmiz (SaidAchmiz) · 2022-07-25T14:39:04.411Z

Consider this hypothetical scenario:

In the course of you teaching me the double crux technique, we attempt to find a crux for a belief of mine. Suppose that there is no such crux. However, in our mutual eagerness to apply this cool new technique—which you (a person with high social status in my social circle, and possessed of some degree of personal charisma) are presenting to me, as a useful and proven thing, which lots of other high-status people use—we confabulate some “crux” for my belief. This “faux crux” is not really a crux, but with some self-deception I can come to believe it to be such. You declare success for the technique; I am impressed and satisfied. You file this under “we found a crux this time, as in 60–90% of other times”.

Do you think that this scenario is plausible? Implausible?

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-07-26T00:18:02.156Z

Plausible. But I (at least attempted to) account for this prior to giving the number.

Like, the number I give matches my own sense, introspecting on my own beliefs, including doing sensible double-checks and cross-checks with other very different tools such as Focusing or Murphyjitsu and including e.g. later receiving the data and sometimes discovering that I was wrong and have not updated in the way I thought I would.

I think you might be thinking that the "a surprising amount of the time" claim is heavily biased toward "immediate feedback from people who just tried to learn it, in a context where they are probably prone to various motivated cognitions," and while it's not zero biased in that way, it's based on lots of feedback from not-that-context.

↑ comment by gjm · 2022-07-25T11:01:41.026Z

Interesting.

So if there's a crux 1/3 of the time, and if my having a crux and your having a crux are independent (they surely aren't, but it's not obvious to me which way the correlation goes), we expect there to be cruxes on both sides about 10% of the time, which means it seems like it would be surprising if there were an available double-crux more than about 5% of the time. Does that seem plausible in view of CFAR experience?

Of course double-cruxing could be a valuable technique in many cases where there isn't actually a double-crux to be found: it encourages both participants to understand the structure of their beliefs better, to go out of the way to look for things that might refute or weaken them, to pay attention to one another's positions ... What do you say to the idea that the real value in double-cruxing isn't so much that sometimes you find double-cruxes, as that even when you don't it usually helps you both understand the disagreement better and engage productively with one another?

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-07-26T00:20:35.059Z

Actually finding a legit double crux (i.e. a B that both parties disagree on, that is a crux for A that both parties disagree on) happening in the neighborhood of 5% of the time sounds about right.

More and more, CFAR leaned toward "the spirit of double crux," i.e. seek to move toward getting resolution on your own cruxes, look for more concrete and more falsifiable things, assume your partner has reasons for their beliefs, try to do less adversarial obscuring of your belief structure, rather than "literally play the double crux game."