Double Crux — A Strategy for Mutual Understanding

post by Duncan Sabien (Duncan_Sabien) · 2017-01-02 · 108 comments

Preamble

Double crux is one of CFAR's newer concepts, and one that's forced a re-examination and refactoring of a lot of our curriculum (in the same way that the introduction of TAPs and Inner Simulator did previously). It rapidly became a part of our organizational social fabric, and is one of our highest-EV threads for outreach and dissemination, so it's long overdue for a public, formal explanation.

Note that while the core concept is fairly settled, the execution remains somewhat in flux, with notable experimentation coming from Julia Galef, Kenzi Amodei, Andrew Critch, Eli Tyre, Anna Salamon, myself, and others. Because of that, this post will be less of a cake and more of a folk recipe: it is long and meandering on purpose, because the priority is to transmit the generators of the thing over the thing itself. Accordingly, if you think you see something that's wrong or missing, you're probably onto something, and we'd appreciate your adding it here as commentary.

Casus belli

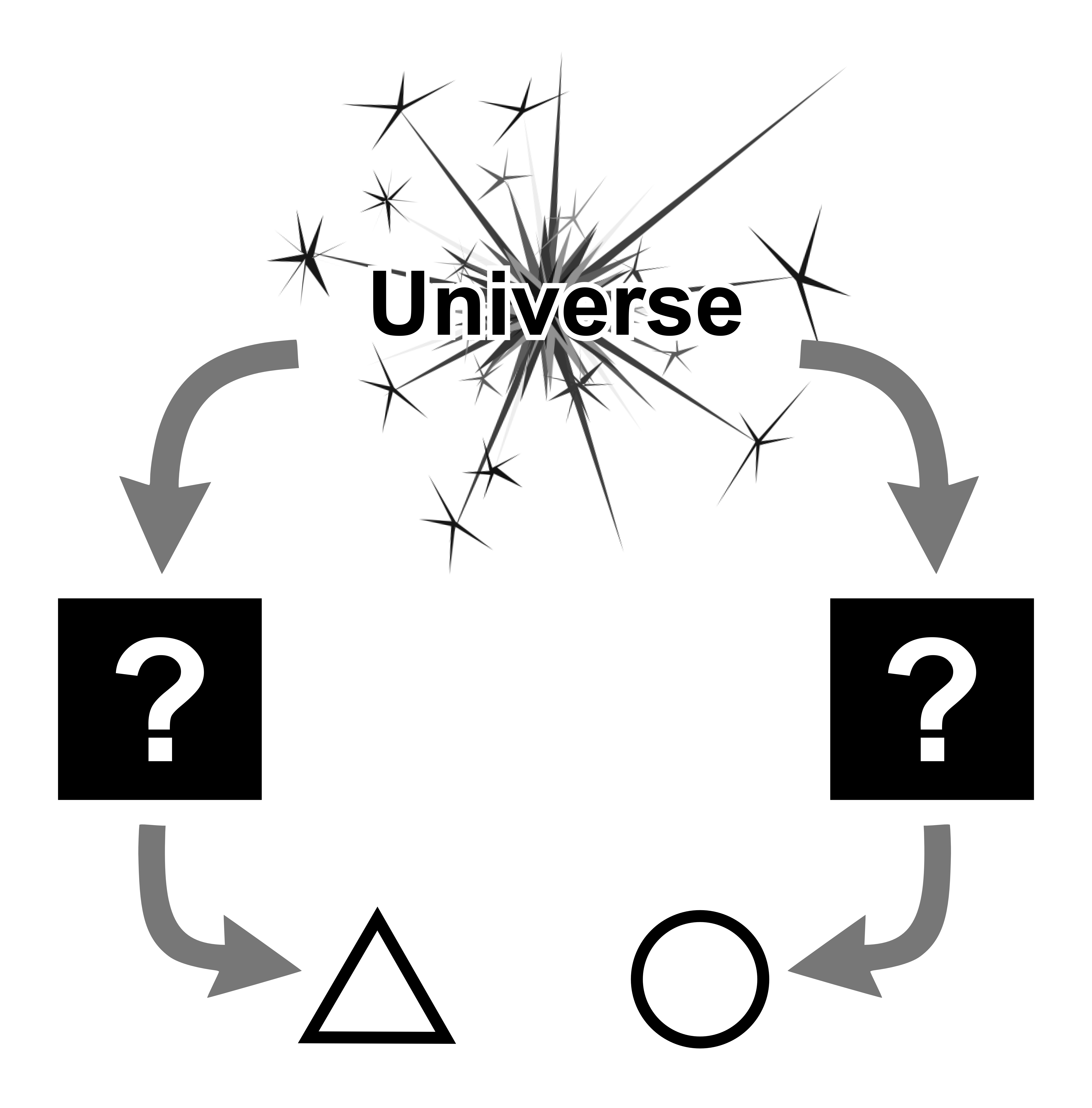

To a first approximation, a human can be thought of as a black box that takes in data from its environment, and outputs beliefs and behaviors (that black box isn't really "opaque" given that we do have access to a lot of what's going on inside of it, but our understanding of our own cognition seems uncontroversially incomplete).

When two humans disagree (when their black boxes output different answers, as pictured above), there are often a handful of unproductive things that can occur.

The most obvious (and tiresome) is that they'll simply repeatedly bash those outputs together without making any progress (think most disagreements over sports or politics; the people above just shouting "triangle!" and "circle!" louder and louder). On the second level, people can (and often do) take the difference in output as evidence that the other person's black box is broken (i.e. they're bad, dumb, crazy) or that the other person doesn't see the universe clearly (i.e. they're biased, oblivious, unobservant). On the third level, people will often agree to disagree, a move which preserves the social fabric at the cost of truth-seeking and actual progress.

Double crux in the ideal solves all of these problems, and in practice even fumbling and inexpert steps toward that ideal seem to produce a lot of marginal value, both in increasing understanding and in decreasing conflict-due-to-disagreement.

Prerequisites

This post will occasionally delineate two versions of double crux: a strong version, in which both parties have a shared understanding of double crux and have explicitly agreed to work within that framework, and a weak version, in which only one party has access to the concept, and is attempting to improve the conversational dynamic unilaterally.

In either case, the following things seem to be required:

- Epistemic humility. The number one foundational backbone of rationality seems, to me, to be how readily one is able to think "It's possible that I might be the one who's wrong, here." Viewed another way, this is the ability to take one's beliefs as object, rather than being subject to them and unable to set them aside (and then try on some other belief and productively imagine "what would the world be like if this were true, instead of that?").

- Good faith. An assumption that people believe things for causal reasons; a recognition that having been exposed to the same set of stimuli would have caused one to hold approximately the same beliefs; a default stance of holding-with-skepticism what seems to be evidence that the other party is bad or wants the world to be bad (because as monkeys it's not hard for us to convince ourselves that we have such evidence when we really don't).[1]

- Confidence in the existence of objective truth. I was tempted to call this "objectivity," "empiricism," or "the Mulder principle," but in the end none of those quite fit. In essence: a conviction that for almost any well-defined question, there really truly is a clear-cut answer. That answer may be impractically or even impossibly difficult to find, such that we can't actually go looking for it and have to fall back on heuristics (e.g. how many grasshoppers are alive on Earth at this exact moment, is the color orange superior to the color green, why isn't there an audio book of Fight Club narrated by Edward Norton), but it nevertheless exists.

- Curiosity and/or a desire to uncover truth. Originally, I had this listed as truth-seeking alone, but my colleagues pointed out that one can move in the right direction simply by being curious about the other person and the contents of their map, without focusing directly on the territory.

At CFAR workshops, we hit on the first and second through specific lectures, the third through osmosis, and the fourth through osmosis and a lot of relational dynamics work that gets people curious and comfortable with one another. Other qualities (such as the ability to regulate and transcend one's emotions in the heat of the moment, or the ability to commit to a thought experiment and really wrestle with it) are also helpful, but not as critical as the above.

How to play

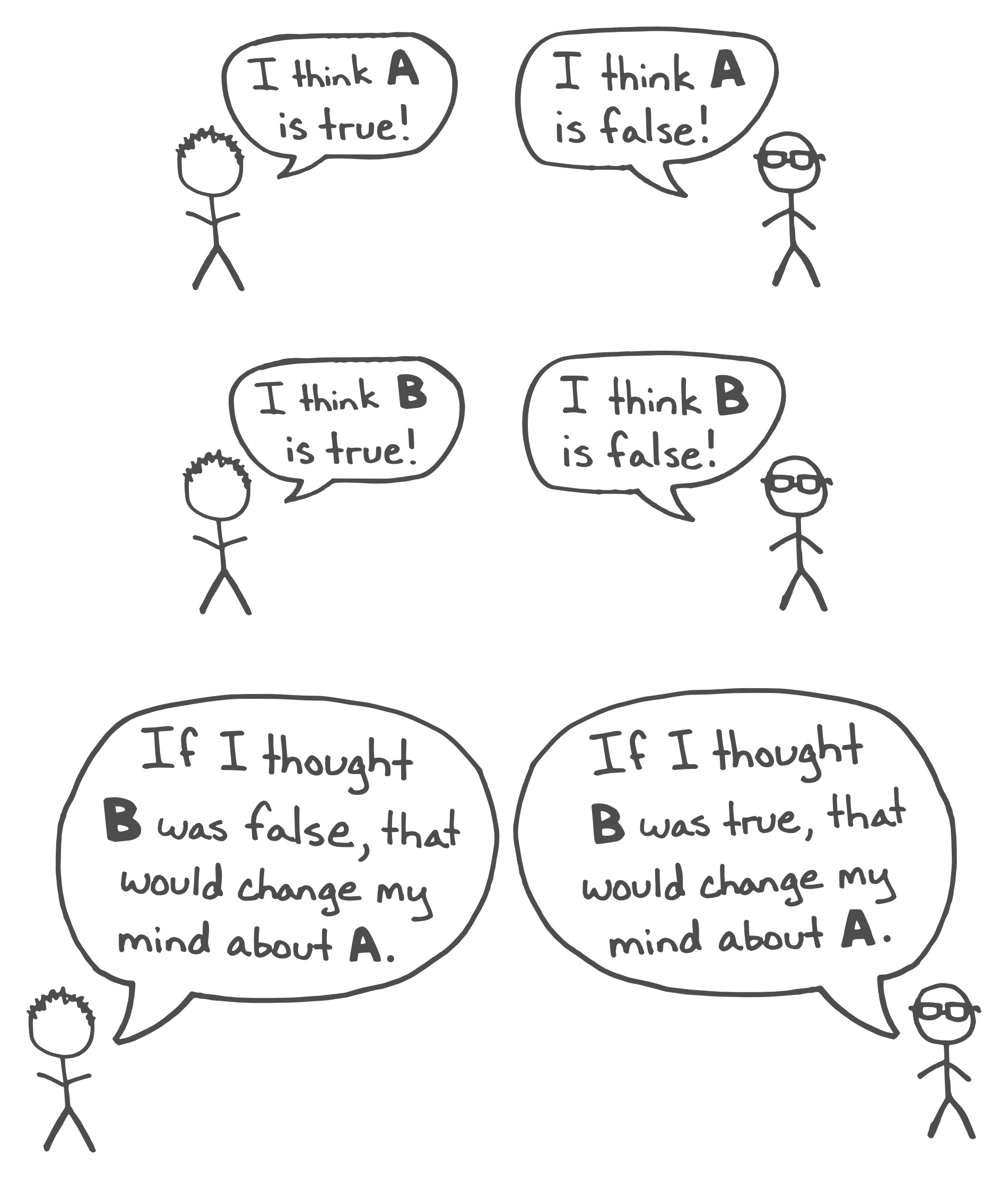

Let's say you have a belief, which we can label A (for instance, "middle school students should wear uniforms"), and that you're in disagreement with someone who believes some form of ¬A. Double cruxing with that person means that you're both in search of a second statement B, with the following properties:

- You and your partner also disagree about B (you think B, your partner thinks ¬B).

- The belief B is crucial for your belief in A; it is one of the cruxes of the argument. If it turned out that B was not true, that would be sufficient to make you think A was false, too.

- The belief ¬B is crucial for your partner's belief in ¬A, in a similar fashion.

In the example about school uniforms, B might be a statement like "uniforms help smooth out unhelpful class distinctions by making it harder for rich and poor students to judge one another through clothing," which your partner might sum up as "optimistic bullshit." Ideally, B is a statement that is somewhat closer to reality than A—it's more concrete, grounded, well-defined, discoverable, etc. It's less about principles and summed-up, induced conclusions, and more of a glimpse into the structure that led to those conclusions.

(It doesn't have to be concrete and discoverable, though—often after finding B it's productive to start over in search of a C, and then a D, and then an E, and so forth, until you end up with something you can research or run an experiment on).

At first glance, it might not be clear why simply finding B counts as victory—shouldn't you settle B, so that you can conclusively choose between A and ¬A? But it's important to recognize that arriving at B means you've already dissolved a significant chunk of your disagreement, in that you and your partner now share a belief about the causal nature of the universe.

If B, then A. Furthermore, if ¬B, then ¬A. You've both agreed that the states of B are crucial for the states of A, and in this way your continuing "agreement to disagree" isn't just "well, you take your truth and I'll take mine," but rather "okay, well, let's see what the evidence shows." Progress! And (more importantly) collaboration!
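To make the three properties above concrete, here is a minimal Python sketch. It is a toy model under loud assumptions, not anything CFAR uses: each person's stance is reduced to a yes/no belief about B plus what they would conclude about A if B turned out true or false. The names `Stance` and `is_double_crux` are hypothetical, invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stance:
    """Hypothetical toy model of one person's position on A and B."""
    believes_b: bool   # does this person currently think B is true?
    a_if_b: bool       # would they believe A if B turned out true?
    a_if_not_b: bool   # would they believe A if B turned out false?

    @property
    def believes_a(self) -> bool:
        # Current belief about A follows from current belief about B.
        return self.a_if_b if self.believes_b else self.a_if_not_b

    def b_is_crux(self) -> bool:
        # B is a crux for this person if changing their mind about B
        # would also change their mind about A.
        return self.a_if_b != self.a_if_not_b


def is_double_crux(you: Stance, partner: Stance) -> bool:
    """Check the properties from the text for a candidate statement B."""
    disagree_on_a = you.believes_a != partner.believes_a
    disagree_on_b = you.believes_b != partner.believes_b
    return disagree_on_a and disagree_on_b and you.b_is_crux() and partner.b_is_crux()


# You think B (uniforms reduce clothing-based class judgment) and therefore A;
# your partner thinks not-B and therefore not-A. Both would flip on B.
you = Stance(believes_b=True, a_if_b=True, a_if_not_b=False)
partner = Stance(believes_b=False, a_if_b=True, a_if_not_b=False)
print(is_double_crux(you, partner))  # True

# A partner who rejects A no matter how B turns out: B is not their crux.
stubborn = Stance(believes_b=False, a_if_b=False, a_if_not_b=False)
print(is_double_crux(you, stubborn))  # False
```

In this yes/no model, whenever `is_double_crux` returns True the two people necessarily hold the same if-then mapping from B to A, which is the shared belief about causal structure described above. Real cruxes are rarely this clean, since actual beliefs come in degrees of confidence.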

Methods

This is where CFAR's versions of the double crux unit are currently weakest—there's some form of magic in the search for cruxes that we haven't quite locked down. In general, the method is "search through your cruxes for ones that your partner is likely to disagree with, and then compare lists." For some people and some topics, clearly identifying your own cruxes is easy; for others, it very quickly starts to feel like one's position is fundamental/objective/un-break-downable.

Tips:

- Increase noticing of subtle tastes, judgments, and "karma scores." Often, people suppress a lot of their opinions and judgments due to social mores and so forth. Generally loosening up one's inner censors can make it easier to notice why we think X, Y, or Z.

- Look forward rather than backward. In places where the question "why?" fails to produce meaningful answers, it's often more productive to try making predictions about the future. For example, I might not know why I think school uniforms are a good idea, but if I turn on my narrative engine and start describing the better world I think will result, I can often sort of feel my way toward the underlying causal models.

- Narrow the scope. A specific test case of "Steve should've said hello to us when he got off the elevator yesterday" is easier to wrestle with than "Steve should be more sociable." Similarly, it's often easier to answer questions like "How much of our next $10,000 should we spend on research, as opposed to advertising?" than to answer "Which is more important right now, research or advertising?"

- Do "Focusing" and other resonance checks. It's often useful to try on a perspective, hypothetically, and then pay attention to your intuition and bodily responses to refine your actual stance. For instance: (wildly asserts) "I bet if everyone wore uniforms there would be a fifty percent reduction in bullying." (pauses, listens to inner doubts) "Actually, scratch that—that doesn't seem true, now that I say it out loud, but there is something in the vein of reducing overt bullying, maybe?"

- Seek cruxes independently before anchoring on your partner's thoughts. This one is fairly straightforward. It's also worth noting that if you're attempting to find disagreements in the first place (e.g. in order to practice double cruxing with friends) this is an excellent way to start—give everyone the same ten or fifteen open-ended questions, and have everyone write down their own answers based on their own thinking, crystallizing opinions before opening the discussion.

Overall, it helps to keep the ideal of a perfect double crux in the front of your mind, while holding the realities of your actual conversation somewhat separate. We've found that, at any given moment, increasing the "double cruxiness" of a conversation tends to be useful, but worrying about how far from the ideal you are in absolute terms doesn't. It's all about doing what's useful and productive in the moment, and that often means making sane compromises—if one of you has clear cruxes and the other is floundering, it's fine to focus on one side. If neither of you can find a single crux, but instead each of you has something like eight co-cruxes of which any five are sufficient, just say so and then move forward in whatever way seems best.

(Variant: a "trio" double crux conversation in which, at any given moment, if you're the least-active participant, your job is to squint at your two partners and try to model what each of them is saying, and where/why/how they're talking past one another and failing to see each other's points. Once you have a rough "translation" to offer, do so—at that point, you'll likely become more central to the conversation and someone else will rotate out into the squinter/translator role.)

Ultimately, each move should be in service of reversing the usual antagonistic, warlike, "win at all costs" dynamic of most disagreements. Usually, we spend a significant chunk of our mental resources guessing at the shape of our opponent's belief structure, forming hypotheses about what things are crucial and lobbing arguments at them in the hopes of knocking the whole edifice over. Meanwhile, we're incentivized to obfuscate our own belief structure, so that our opponent's attacks will be ineffective.

(This is also terrible because it means that we often fail to even find the crux of the argument, and waste time in the weeds. If you've ever had the experience of awkwardly fidgeting while someone spends ten minutes assembling a conclusive proof of some tangential sub-point that never even had the potential of changing your mind, then you know the value of someone being willing to say "Nope, this isn't going to be relevant for me; try speaking to that instead.")

If we can move the debate to a place where, instead of fighting over the truth, we're collaborating on a search for understanding, then we can recoup a lot of wasted resources. You have a tremendous comparative advantage at knowing the shape of your own belief structure—if we can switch to a mode where we're each looking inward and candidly sharing insights, we'll move forward much more efficiently than if we're each engaged in guesswork about the other person. This requires that we want to know the actual truth (such that we're incentivized to seek out flaws and falsify wrong beliefs in ourselves just as much as in others) and that we feel emotionally and socially safe with our partner, but there's a doubly-causal dynamic where a tiny bit of double crux spirit up front can produce safety and truth-seeking, which allows for more double crux, which produces more safety and truth-seeking, etc.

Pitfalls

First and foremost, it matters whether you're in the strong version of double crux (cooperative, consent-based) or the weak version (you, as an agent, trying to improve the conversational dynamic, possibly in the face of direct opposition). In particular, if someone is currently riled up and conceives of you as rude/hostile/the enemy, then saying something like "I just think we'd make better progress if we talked about the underlying reasons for our beliefs" doesn't sound like a plea for cooperation—it sounds like a trap.

So, if you're in the weak version, the primary strategy is to embody the question "What do you see that I don't?" In other words, approach from a place of explicit humility and good faith, drawing out their belief structure for its own sake, to see and appreciate it rather than to undermine or attack it. In my experience, people can "smell it" if you're just playing at good faith to get them to expose themselves; if you're having trouble really getting into the spirit, I recommend meditating on times in your past when you were embarrassingly wrong, and how you felt prior to realizing it compared to after realizing it.

(If you're unable or unwilling to swallow your pride or set aside your sense of justice or fairness hard enough to really do this, that's actually fine; not every disagreement benefits from the double-crux-nature. But if your actual goal is improving the conversational dynamic, then this is a cost you want to be prepared to pay—going the extra mile, because a) going what feels like an appropriate distance is more often an undershoot, and b) going an actually appropriate distance may not be enough to overturn their entrenched model in which you are The Enemy. Patience- and sanity-inducing rituals recommended.)

As a further tip that's good for either version but particularly important for the weak one, model the behavior you'd like your partner to exhibit. Expose your own belief structure, show how your own beliefs might be falsified, highlight points where you're uncertain and visibly integrate their perspective and information, etc. In particular, if you don't want people running amok with wrong models of what's going on in your head, make sure you're not acting like you're the authority on what's going on in their head.

Speaking of non-sequiturs, beware of getting lost in the fog. The very first step in double crux should always be to operationalize and clarify terms. Try attaching numbers to things rather than using misinterpretable qualifiers; try to talk about what would be observable in the world rather than how things feel or what's good or bad. In the school uniforms example, saying "uniforms make students feel better about themselves" is a start, but it's not enough, and going further into quantifiability (if you think you could actually get numbers someday) would be even better. Often, disagreements will "dissolve" as soon as you remove ambiguity—this is success, not failure!

Finally, use paper and pencil, or whiteboards, or get people to treat specific predictions and conclusions as immutable objects (if you or they want to change or update the wording, that's encouraged, but make sure that at any given moment, you're working with a clear, unambiguous statement). Part of the value of double crux is that it's the opposite of the weaselly, score-points, hide-in-ambiguity-and-look-clever dynamic of, say, a public political debate. The goal is to have everyone understand, at all times and as much as possible, what the other person is actually trying to say—not to try to get a straw version of their argument to stick to them and make them look silly. Recognize that you yourself may be tempted or incentivized to fall back to that familiar, fun dynamic, and take steps to keep yourself in "scout mindset" rather than "soldier mindset."

Algorithm

This is the double crux algorithm as it currently exists in our handbook. It's not strictly connected to all of the discussion above; it was designed to be read in context with an hour-long lecture and several practice activities (so it has some holes and weirdnesses) and is presented here more for completeness and as food for thought than as an actual conclusion to the above.

1. Find a disagreement with another person

- A case where you believe one thing and they believe the other

- A case where you and the other person have different confidences (e.g. you think X is 60% likely to be true, and they think it’s 90%)

2. Operationalize the disagreement

- Define terms to avoid getting lost in semantic confusions that miss the real point

- Find specific test cases: rather than (e.g.) discussing whether you should be more outgoing, evaluate whether you should have said hello to Steve in the office yesterday morning

- Wherever possible, try to think in terms of actions rather than beliefs—it’s easier to evaluate arguments like “we should do X before Y” than it is to converge on “X is better than Y.”

3. Seek double cruxes

- Seek your own cruxes independently, and compare with those of the other person to find overlap

- Seek cruxes collaboratively, by making claims (“I believe that X will happen because Y”) and focusing on falsifiability (“It would take A, B, or C to make me stop believing X”)

4. Resonate

- Spend time “inhabiting” both sides of the double crux, to confirm that you’ve found the core of the disagreement (as opposed to something that will ultimately fail to produce an update)

- Imagine the resolution as an if-then statement, and use your inner sim and other checks to see if there are any unspoken hesitations about the truth of that statement

5. Repeat!

Conclusion

We think double crux is super sweet. To the extent that you see flaws in it, we want to find them and repair them, and we're currently betting that repairing and refining double crux is going to pay off better than trying something totally different. In particular, we believe that embracing the spirit of this mental move has huge potential for unlocking people's abilities to wrestle with all sorts of complex and heavy hard-to-parse topics (like existential risk, for instance), because it provides a format for holding a bunch of partly-wrong models at the same time while you distill the value out of each.

Comments appreciated; critiques highly appreciated; anecdotal data from experimental attempts to teach yourself double crux, or teach it to others, or use it on the down-low without telling other people what you're doing extremely appreciated.

- Duncan Sabien

[1] One reason good faith is important is that even when people are "wrong," they are usually partially right: there are flecks of gold mixed in with their false belief that can be productively mined by an agent who's interested in getting the whole picture. Normal disagreement-navigation methods have some tendency to throw out that gold, either by allowing everyone to protect their original belief set or by replacing everyone's view with whichever view is shown to be "best," thereby throwing out data, causing information cascades, disincentivizing "noticing your confusion," etc.

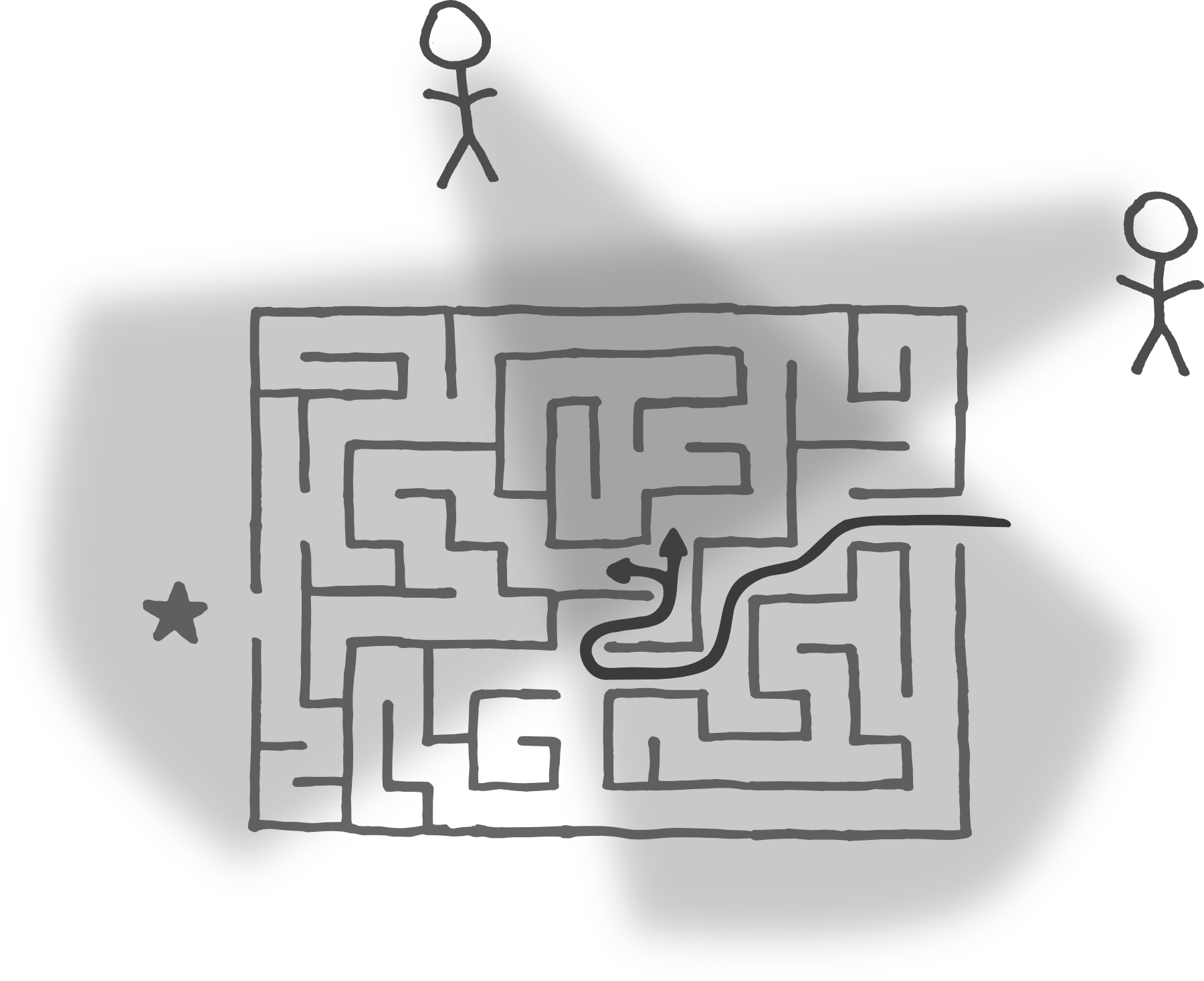

The central assumption is that the universe is like a large and complex maze that each of us can only see parts of. To the extent that language and communication allow us to gather info about parts of the maze without having to investigate them ourselves, that's great. But when we disagree on what to do because we each see a different slice of reality, it's nice to adopt methods that allow us to integrate and synthesize, rather than methods that force us to pick and pare down. It's like the parable of the three blind men and the elephant—whenever possible, avoid generating a bottom-line conclusion until you've accounted for all of the available data.

The agent at the top mistakenly believes that the correct move is to head to the left, since that seems to be the most direct path toward the goal. The agent on the right can see that this is a mistake, but it would never have been able to navigate to that particular node of the maze on its own.

108 comments

comment by johnswentworth · 2016-11-29

A suggestion: don't avoid feelings. Instead, think of feelings as deterministic and carrying valuable information; feelings can be right even when our justifications are wrong (or vice versa).

Ultimately, this whole technique is about understanding the causal paths which led to both your own beliefs, and your conversational partner's beliefs. In difficult areas, e.g. politics or religion, people inevitably talk about logical arguments, but the actual physical cause of their belief is very often intuitive and emotional - a feeling. Very often, those feelings will be the main "crux".

For instance, I've argued that the feelings of religious people offer a much better idea of religion's real value than any standard logical argument - the crux in a religious argument might be entirely a crux of feelings. In another vein, Scott's thrive/survive theory offers psychological insight on political feelings more than political arguments, and it seems like it would be a useful crux generator - i.e., would this position seem reasonable in a post-scarcity world, or would it seem reasonable during a zombie outbreak?

↑ comment by Brendan Long (korin43) · 2016-11-30

This feels like a point but I'm having trouble thinking of specific examples. What would a crux like this look like and how could you resolve the disagreement involved with it?

↑ comment by johnswentworth · 2016-11-30

Let's use the school uniforms example.

The post mentions "uniforms make students feel better about themselves" as something to avoid. But that claim strongly suggests a second statement for the claimant: "I would have felt better about myself in middle school, if we'd had uniforms." A statement like that is a huge gateway into productive discussion.

First and foremost, that second statement is very likely the true cause for the claimant's position. Second, that feeling is something which will itself have causes! The claimant can then think back about their own experiences, and talk about why they feel that way.

Of course, that creates another pitfall to watch out for: argument by narrative, rather than statistics. It's easy to tell convincing stories. But if one or both participants know what's up, then each participant can produce a narrative to underlie their own feelings, and then the real discussion is over questions like (1) which of those narratives is more common in practice, (2) should we assign more weight to one type of experience, (3) what other types of experiences should we maybe consider, and (4) does the claim make sense even given the experience?

The crux is then the experiences: if I'd been through your experience in middle school, then I can see where I'd feel similarly about the uniforms. That doesn't mean your feeling is right - maybe signalling through clothing made your middle school experience worse somehow, but I argue that kids will find ways to signal regardless of uniform rules. But that would be a possible crux of the argument.

↑ comment by rational_rob · 2016-12-07

I always thought of school uniforms as being a logical extension of the pseudo-fascist/nationalist model of running them. (I mean this in the pre-world war descriptive sense rather than the rhetorical sense that arose after the wars) Lots of schools, at least in America, try to encourage a policy of school unity with things like well-funded sports teams and school pep rallies. I don't know how well these policies work in practice, but if they're willing to go as far as they have now, school uniforms might contribute to whatever effects they hope to achieve. My personal opinion is in favor of school uniforms, but I'm mostly certain that's because I'm not too concerned with fashion or displays of wealth. I'd have to quiz some other people to find out for sure.

↑ comment by BarbaraB · 2016-12-21

Are the uniforms at US schools reasonably practical and comfortable, and do they come in a reasonable colour, e.g. not green? As a girl growing up under socialism, I experienced pioneer uniforms, which were not well designed. They forced short skirts on girls, which are impractical in some weather. The upper part, the shirt, needed to be ironed. There was no sweater or coat to unify kids in winter. My mother once had to stand coatless in winter in a welcome row for some event. I can also imagine some girls having aesthetic issues with the exposed legs or unflattering color. But what are the uniforms in the US usually like?

↑ comment by ESRogs · 2017-01-11

What's wrong with green?

↑ comment by hairyfigment · 2017-01-11

Well, it's not easy.

↑ comment by Lumifer · 2016-12-21

Schools in the US are run locally and are very diverse. Some schools (mostly private ones) require uniforms, but as far as I know the majority of public schools do not. There are dress codes -- all schools have policies about what's acceptable to wear to school -- but within those guidelines you can generally wear what you want.

↑ comment by Duncan Sabien (Duncan_Sabien) · 2016-12-07

I should note that my own personal opinions on school uniforms are NOT able-to-be-determined from this article.

comment by mingyuan · 2017-02-02

Anecdotal data time! We tried this at last week’s Chicago rationality meetup, with moderate success. Here’s a rundown of how we approached the activity, and some difficulties and confusion we encountered.

Approach:

Before the meeting, some of us came up with lists of possibly contentious topics and/or strongly held opinions, and we used those as starting points by just listing them off to the group and seeing if anyone held the opposite view. Some of the assertions on which we disagreed were:

- Cryonic preservation should be standard medical procedure upon death, on an opt-out basis

- For the average person, reading the news has no practical value beyond social signalling

- Public schools should focus on providing some minimum quality of education to all students before allocating resources to programs for gifted students

- The rationality movement focuses too much of its energy on AI safety

- We should expend more effort to make rationality more accessible to ‘normal people’

We paired off, with each pair in front of a blackboard, and spent about 15 minutes on our first double crux, after the resolution of which the conversations mostly devolved. We then came together, gave feedback, switched partners, and tried again.

Difficulties/confusion:

For the purposes of practice, we had trouble finding points of genuine disagreement – in some cases we found that the argument dissolved after we clarified minor semantic points in the assertion, and in other cases a pair would just sit there and agree on assertion after assertion (though the latter is more a flaw in the way I designed the activity than in the actual technique). However, we all agree that this technique will be useful when we encounter disagreements in future meetings, and even in the absence of disagreement, the activity of finding cruxes was a useful way of examining the structure of our beliefs.

We were a little confused as to whether coming up with an empirical test to resolve the issue was a satisfactory endpoint, or if we actually needed to seek out the results in order to consider the disagreement resolved.

In one case, when we were debating the cryonics assertion, my interlocutor managed to convince me of all the factual questions on which I thought my disagreement rested, but I still had some lingering doubt – even though I was convinced of the conclusion on an intellectual level, I didn’t grok it. When we learned goal factoring, we were taught not to dismiss fuzzy, difficult-to-define feelings; that they could be genuinely important reasons for our thoughts and behavior. Given its reliance on empiricism, how does Double Crux deal with these feelings, if at all? (Disclaimer: it’s been two years since we learned goal factoring, so maybe we were taught how to deal with this and I just forgot.)

In another case, my interlocutor changed his mind on the question of public schools, but when asked to explain the line of argument that led him to change his mind, he wasn’t able to construct an argument that sounded convincing to him. I’m not sure what happened here, but in the future I would place more emphasis on writing down the key points of the discussion as it unfolds. We did make some use of the blackboards, but it wasn’t very systematic.

Overall it wasn’t as structured as I expected it to be. People didn’t reference the write-up when immersed in their discussions, and didn’t make use of any of the tips you gave. I know you said we shouldn’t be preoccupied with executing “the ideal double crux,” but I somehow still have the feeling that we didn’t quite do it right. For example, I don’t think we focused enough on falsifiability and we didn’t resonate after reaching our conclusions, which seem like key points. But ultimately the model was still useful, no matter how loosely we adhered to it.

I hope some of that was helpful to you! Also, tell Eli Tyre we miss him!

↑ comment by Duncan Sabien (Duncan_Sabien) · 2017-02-02

Very useful. I don't have the time to give you the detailed response you deserve, but I deeply appreciate the data (and Eli says hi).

comment by jollybard · 2016-12-01

Personally, I am still eagerly waiting for CFAR to release more of their methods and techniques. A lot of them seem to be already part of the rationalist diaspora's vocabulary -- however, I've been unable to find descriptions of them.

For example, you mention "TAP"s and the "Inner Simulator" at the beginning of this article, yet I haven't had any success googling those terms, and you offer no explanation of them. I would be very interested in what they are!

I suppose the crux of my criticism isn't that there are techniques you haven't released yet, nor that rationalists are talking about them, but that you mention them as though they were common knowledge. This, sadly, gives the impression that LWers are expected to know about them, and reinforces the idea that LW has become a kind of elitist clique. I'm worried that you are using this in order to make aspiring rationalists, who very much want to belong, come to CFAR events, to be in the know.

↑ comment by Kaj_Sotala · 2016-12-01T20:35:32.850Z

Decided to contribute a bit: here's a new article on TAPs! :)

↑ comment by AnnaSalamon · 2016-12-01T04:18:11.622Z

TAPs = Trigger Action Planning; referred to in the scholarly literature as "Implementation intentions". The Inner Simulator unit is CFAR's way of referring to what you actually expect to see happen (as contrasted with, say, your verbally stated "beliefs".)

Good point re: being careful about implied common knowledge.

↑ comment by kenzi · 2016-12-20T18:28:13.141Z

Here's a writeup on the Asana blog about Inner Simulator, based on a talk CFAR gave there a few years ago.

comment by chrizbo · 2016-11-30T02:15:48.968Z

I'm excited to try this out in both strong and weak forms.

There are parallels of getting to the crux of something in design and product discovery research. It is called Why Laddering. I have used it when trying to understand the reasons behind a particular person's problem or need. If someone starts too specific it is a great way to step back from solutions they have preconceived before knowing the real problem (or if there is even one).

It also attempts to get to a higher level in a system of needs.

Are there ever times when the double crux has resulted in narrowing with each crux? Or do the cruxes generally become more general?

There is the reverse as well called How Laddering which tries to find solutions for more general problems.

It sounds like the 'reverse double crux' would be to find a new, common solution after a common crux has been found.

↑ comment by Kenny · 2016-12-05T19:12:22.038Z

> There are parallels of getting to the crux of something in design and product discovery research. It is called Why Laddering. I have used it when trying to understand the reasons behind a particular person's problem or need. If someone starts too specific it is a great way to step back from solutions they have preconceived before knowing the real problem (or if there is even one).

In programming, we call that The XY Problem.

↑ comment by Drea · 2016-12-11T19:20:25.196Z

Nice association.

I see this model as building on Laddering or the XY problem, because it also looks for a method of falsifiability.

It's closer to a two-sided use of Eric Ries' Lean Startup (the more scientific version), where a crux = leap of faith assumption. I've called the LoFA a "leap of faith hypothesis", and your goal is to find the data that would tell you the assumption is wrong.

The other product design thinker with a similar approach is Tom Chi who uses a conjecture -> experiment -> actuals -> decision framework.

In all of these methods, the hard work/thinking is actually finding a crux and how to falsify it. Having an "opponent" to collaborate with may make us better at this.

comment by Jess_Whittlestone · 2016-12-01T10:13:25.615Z

Thanks for writing this up! One thing I particularly like about this technique is that it seems to really help with getting into the mindset of seeing disagreements as good (not an unpleasant thing to be avoided), and seeing them as good for the right reasons - for learning more about the world/your own beliefs/changing your mind (not a way to assert status/dominance/offload anger etc.)

I feel genuinely excited about paying more attention to where I disagree with others and trying to find the crux of the disagreement now, in a way I didn't before reading this post.

↑ comment by Lumifer · 2016-12-01T15:43:43.503Z

> the mindset of seeing disagreements as good

It's interesting how you assume that disagreements are not likely to lead to bad real-world consequences.

↑ comment by Jess_Whittlestone · 2016-12-02T10:34:21.269Z

I don't think I was assuming that, but good point - there are of course lots of nuances to whether disagreements are good/bad/useful/problematic in various ways. I definitely wasn't meaning to say "disagreements are always a good thing", but rather something much weaker, like "disagreements are not always a bad thing to be avoided, and can often be a good opportunity to learn more about the world and/or your own reasons for your beliefs, and internalising this mindset more fully seems very useful."

I don't think this means we should try and create disagreements where none exist already, or that the world wouldn't be a better place if people agreed more. But assuming a lot of disagreements already exist, identifying those disagreements can be a very good thing if you have good tools for resolving/making better sense of them. So when I say I'm excited about finding more disagreements, I mean that I'm excited to find them given the assumption that those disagreements already exist, and would have any potential bad real-world consequences regardless of whether I'm aware of them or not.

↑ comment by Lumifer · 2016-12-02T15:45:39.621Z

> But assuming a lot of disagreements already exist, identifying those disagreements can be a very good thing if you have good tools for resolving/making better sense of them.

Yep, these... clarifications make the statement a lot more reasonable :-)

It's just that the original unconditional claim had a lot of immediate counterexamples spring to mind, e.g.:

- When there is a power disparity between the two disagreeing (e.g. arguing with cops is generally a bad idea)

- When you make yourself a target (see e.g. this)

- When it's just unnecessary (imagine two neighbours who get along quite well until they discover that one is a Trumper and one is a Clintonista)

↑ comment by Kenny · 2016-12-05T19:21:56.192Z

Ooh, I hope you're not upset because I disagree with you! Your examples, numbered for easier reference:

> 1. When there is a power disparity between the two disagreeing (e.g. arguing with cops is generally a bad idea)
>
> 2. When you make yourself a target (see e.g. this)
>
> 3. When it's just unnecessary (imagine two neighbours who get along quite well until they discover that one is a Trumper and one is a Clintonista)

I'm not sure what disagreement you had in mind with respect to [2]; maybe whether 'forking someone's repo' was a sexual reference?

For [1], I can think of counter-counter-examples, e.g. where a cop suspects that you've committed a crime and you know you haven't committed that crime. If you could identify a shared crux of that disagreement you might be able to provide evidence to resolve that crux and exonerate yourself (before you're arrested, or worse).

For [3], I can also think of counter-counter-examples, e.g. where because both neighbors got along well with each other before discovering their favored presidential candidates, they're inclined to be charitable towards each other and learn about why they disagree. I'm living thru something similar to this right now with someone close to me. I agree with Jess and think that discovering disagreement can be a generally positive event, regardless of the overall negative outcomes pertaining to specific disagreements.

Saying 'water is good to drink' doesn't imply 'you can't hurt or kill yourself by drinking too much water'.

↑ comment by Lumifer · 2016-12-05T19:39:46.962Z

It's hard to upset me :-)

Re [2] the disagreement was about whether that joke was (socially) acceptable.

One can always come up with {counter-}examples -- iterate to depth desired -- but that's not really the point. The point is to what degree a general statement applies unconditionally. I happen to think that "a disagreement is a positive event" is a highly conditional observation.

Oh, and I don't recommend double-cruxing cops. I suspect this will go really badly.

↑ comment by ProofOfLogic · 2016-12-02T01:17:44.698Z

Disagreements can lead to bad real-world consequences for (sort of) two reasons:

1) At least one person is wrong and will make bad decisions which lead to bad consequences.

2) The argument itself will be costly (in terms of emotional cost, friendship, perhaps financial cost, etc).

In terms of #1, an unnoticed disagreement is even worse than an unsettled disagreement; so thinking about #1 motivates seeking out disagreements and viewing them as positive opportunities for intellectual progress.

In terms of #2, the attitude of treating disagreements as opportunities can also help, but only if both people are on board with that. I'm guessing that is what you're pointing at?

My strategy in life is something like: seek out disagreements and treat them as delicious opportunities when in "intellectual mode", but avoid disagreements and treat them as toxic when in "polite mode". This heuristic isn't always correct. I had to be explicitly told that many people often don't like arguing even over intellectual things. Plus, because of #1, it's sometimes especially important to bring up disagreements in practical matters (that don't invoke "intellectual mode") even at risk of a costly argument.

It seems like something like "double crux attitude" helps with #2 somewhat, though.

↑ comment by sleepingthinker · 2017-02-05T23:24:55.642Z

Disagreements are not always bad, however what happens in the real world most of the time is that the disagreements are not based on rational thought and logic, but instead on some fluffy slogans and "feelings". People don't actually go deep into examining whether their argument makes sense and is supported by sound facts and not things like narrative fallacy.

In fact, when you point out to other people that what they are saying is not supported by any logical arguments, they get even more defensive and irrational.

↑ comment by Lumifer · 2017-02-06T01:02:36.152Z

> what happens in the real world most of the time is that the disagreements are not based on rational thought and logic, but instead on some fluffy slogans and "feelings".

What you're missing is a rather important concept called "values".

comment by PeterisP · 2016-11-29T23:54:09.504Z

I'm going to go out and state that the chosen example of "middle school students should wear uniforms" fails the prerequisite of "Confidence in the existence of objective truth", as do many (most?) "should" statements.

I strongly believe that there is no objectively true answer to the question "middle school students should wear uniforms", as the truth of that statement depends mostly not on the understanding of the world or the opinion about student uniforms, but on the interpretation of what the "should" means.

For example, "A policy requiring middle school students to wear uniforms is beneficial to the students" is a valid topic of discussion that can uncover some truth, and "A policy requiring middle school students to wear uniforms is mostly beneficial to [my definition of] society" is a completely different topic of discussion that likely can result in a different or even opposite answer.

Talking about unqualified "should" statements is a common trap that prevents reaching a common understanding and exploring the truth. At the very least, you should clearly distinguish "should" as good, informed advice from "should" as a categorical moral imperative. If you want to discuss whether "X should do Y" in the sense of discussing what the advantages of doing Y (or not) are, then you should (see what I'm doing here?) convert such statements to the form "X should do Y because that's a dominant/better/optimal choice that benefits them", because otherwise you won't get what you want, but just an argument between a camp arguing this question and a camp arguing about why we should/shouldn't force X to do Y because everyone else wants it.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-11-30T07:07:32.277Z

I think you're basically making correct points, but that your conclusion doesn't really follow from them.

Remember that double crux isn't meant to be a "laboratory technique" that only works under perfect conditions—it's meant to work in the wild, and has to accommodate the way real humans actually talk, think, and behave.

You're completely correct to point out that "middle school students should wear uniforms" isn't a well-defined question yet, and that someone wanting to look closely at it and double crux about it would need to boil down a lot of specifics. But it's absolutely the sort of phrase that someone who has a well-defined concept in mind might say, at the outset, as a rough paraphrase of their own beliefs.

You're also correct (in my opinion/experience) to point out that "should" statements are often a trap that obfuscates the point and hinders progress, but I think the correct response there isn't to rail against shoulds, but to do exactly the sort of conversion that you're recommending as a matter of habit and course.

People are going to say things like "we should do X," and I think letting that get under one's skin from the outset is unproductive, whereas going, ah, cool, I can think of like four things you might mean by that—is it one of these? ... is a simple step that any aspiring double cruxer or rationalist is going to want to get used to.

comment by entirelyuseless · 2016-12-01T13:48:35.980Z

I've waited to make this comment because I wanted to read this carefully a few times first, but it seems to me that the "crux" might not be doing a lot here, compared to simply getting people to actually think about and discuss an issue, instead of thinking about argument in terms of winning and losing. I'm not saying the double crux strategy doesn't work, but that it may work mainly because it gives the people something to work at other than winning and losing, and something that involves trying to understand the issues.

One thing that I have noticed working is this rule: say what is right about what the other person said before you criticize anything about it. And if you think there is nothing right about the content, at least say what you think is actually evidence for it. (If you think there is literally no evidence at all for it, then you basically think the other person is lying.) This can work pretty well even if only one person is doing it, and I'm not sure that two people can consistently do it without arriving at least at a significant amount of agreement.

↑ comment by atucker · 2016-12-01T14:31:23.620Z

I think that crux is doing a lot of work in that it forces the conversation to be about something more specific than the main topic, and because it makes it harder to move the goal posts partway through the conversation. If you're not talking about a crux then you can write off a consideration as "not really the main thing" after talking about it.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-12-01T18:46:28.400Z

I agree with both you and atucker—I think the crux is doing the important things they cite, but I also think that a significant amount of the value comes from the overall reframing of the dynamic.

comment by hkrsmk · 2018-09-22T10:50:33.495Z

Hi, some friends and I tried to practice this a couple days back. So I guess main takeaway points (two years later! Haha):

- It's hard to practice with "imagined" disagreements or devil's advocates; our group often blanked out when we dug deeper. E.g., one thing we tried to discuss was organ donation after death. We all agreed that it was a good idea, and had a hard time imagining why someone wouldn't think it was a good idea.

- Choice of topic is important - some lighter topics might not work that well. We tackled something lighter afterwards - "Apple products are bad". It was slowly refined to "Apple products cost more than the benefit provided to the consumers, compared to other smartphones". A quick internet search pulled up the costs of an iPhone vs a normal smartphone, although we realised that value also depends on the consumer. We abandoned it afterwards because it was possibly too light to find a double crux - the crux was likely to be apparent in the "problem statement". Or perhaps it could have been better framed as "If Apple products (or rather, just the iPhone X) cost more than the benefit to consumers, then they are bad."

We then asked our iPhone user if she would get twice the amount of utility out of an iPhone as compared to a standard smartphone (because that was the rough difference in price), and she said likely no.

- We got stuck in the definitions of terms. We tried to have a discussion around "is there objective moral truth?" and had a sliding scale for answers, with the two extremes being yes and no. When we started discussing, we realised that we all understood the idea differently. Eventually the crux that we got (after about 1.5h) was "If it is more likely than not that humans collectively will change their definition of what is moral, then there is no objective moral truth."

(It rested on another assumption that moral truth is determined by humans collectively.)

We spent about 45 minutes to an hour defining "objective moral truth" to one another first; having a common understanding of the terms used helps facilitate the discussion.

- We might have gotten better results with "should" questions instead of "is/are" type questions.

comment by CCC · 2016-11-30T07:44:46.414Z

This set of strategies looks familiar. I've never called it double crux or anything like that, but I've used a similar line in internet arguments before.

Taking a statement that I disagree with; assuming my opponent is sane and has reasons to insist that that statement is true; interrogating (politely) to try to find those reasons (and answering any similar interrogations if offered); trying to find common ground where possible, and working from there to the point of disagreement; eventually either coming to agreement or finding reasons why we do not agree that do not dissolve further.

I've found it works nicely, though it really helps to be polite at all points as well. Politeness is very, very important when using the weak version, and still very important while using the strong version - it reduces emotional arguments and makes it more likely that your debate partner will continue to debate with you (as opposed to shout at you, or go away and leave the question unresolved).

comment by Felix Hans Michel Grimberg (Grim-bot) · 2020-04-14T00:36:07.571Z

I hope very minor nitpicks make acceptable comments. I apologize if not.

In the section about Prerequisites, specifically about The belief in an objective truth, the example "is the color orange superior to the color green" does not function as a good example in my opinion. This is because it is not a well-posed problem for which a clear-cut answer exists. At the very least, the concept of "superior" is too vague for such an answer to exist. I would suggest adding "for the specific purpose we have in mind", or removing that example.

Happy Easter!

comment by Morendil · 2016-12-30T12:36:21.984Z

I'm seeing similarities between this and Goldratt's "Evaporating Cloud". You might find it worthwhile to read up on applications of EC in the literature on Theory of Constraints, if you haven't already.

comment by Unnamed · 2016-12-05T22:09:35.346Z

(This is Dan from CFAR)

Here are a few examples of disagreements where I'd expect double crux to be an especially useful approach (assuming that both people hit the prereqs that Duncan listed):

2 LWers disagree about whether attempts to create "Less Wrong 2.0" should try to revitalize Less Wrong or create a new site for discussion.

2 LWers disagree on whether it would be good to have a norm of including epistemic effort metadata at the start of a post.

2 EAs disagree on whether the public image of EA should make it seem commonsense and relatable or if it should highlight ways in which EA is weird and challenges mainstream views.

2 EAs disagree on the relative value of direct work and earning to give.

2 co-founders disagree on whether their startup should currently be focusing most of its efforts on improving their product.

2 housemates disagree on whether the dinner party that they're throwing this weekend should have music.

These examples share some features:

- The people care about getting the right answer because they (or people that they know) are going to do something based on the answer, and they really want it to go well.

- The other person's head is one of the better sources of information that you have available. You can't look these things up on Wikipedia, and the other person's opinion seems likely to reflect some relevant experiences/knowledge/skills/models/etc. that you haven't fully incorporated into your own thinking.

- Most likely, neither person came into the conversation with a clear, detailed model of the reasoning behind their own conclusion.

So digging into your own thinking and the other person's thinking on the topic is one of the more promising options available for making progress towards figuring out something that you care about. And focusing on cruxes can help keep that conversation on track so that it can engage with the most relevant differences between your models and have a good chance of leading to important updates.

There are other cases where double crux is also useful which don't share all of these features, but these 6 examples are in what I think of as the core use case for double crux. I think it helps to have these sorts of examples in mind (ideally ones that actually apply to you) as you're trying to understand the technique, rather than just trying to apply double crux to the general category of "disagreement".

comment by negamuhia · 2016-12-03T14:12:32.581Z

Does anyone else have trouble noticing when the discussion they're having is getting more abstract? I'm often reminded of this when debating some topic. This relates to the point on "Narrowing the scope", and how to notice the need to do it.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-12-05T19:20:02.945Z

A general strategy of "can I completely reverse my current claim and have it still make sense?" is a good one for this. When you're talking about big, vague concepts, you can usually just flip them over and they still sound like reasonable opinions/positions to take. When you flip it and it seems like nonsense, or seems provably, specifically wrong, that means you're into concrete territory. Try just ... adopting a strategy of doing this 3-5 times per long conversation?

↑ comment by negamuhia · 2016-12-10T22:20:19.305Z

This seems useful and simple enough to try. I'll set up an implementation intention to do this next time I find myself in a long conversation. It also reminds me of the reversal test, a heuristic for eliminating status-quo bias.

comment by Sniffnoy · 2016-12-01T05:59:42.324Z

Interesting. Some time ago I was planning on writing some things on how to have an argument well, but I found a lot of it was already covered by Eliezer in "37 Ways That Words Can Be Wrong". I think this covers a lot of the rest of it! Things like "Spot your interlocutor points so you can get to the heart of the matter; you can always unspot them later if they turn out to be more crucial than you realized."

One thing I've tried sometimes is actively proposing reasons for my interlocutor's beliefs when they don't volunteer any, and seeing if they agree with any; unfortunately this doesn't seem to have gone well when I've done it. (Maybe because the tone of "and I have a counterargument prepared for each one!" was apparent and came off as a bit too hostile. :P ) Not sure that any real conclusions can be drawn from my failures there though.

↑ comment by Lumifer · 2016-12-01T15:47:47.409Z

> actively proposing reasons for my interlocutor's beliefs when they don't volunteer any, and seeing if they agree with any; unfortunately this doesn't seem to have gone well when I've done it.

Yeah, when you ask leading questions ("You are saying X is true, do you think so because you believe that Y happens?"), people tend to get unreasonably suspicious :-/

comment by ProofOfLogic · 2016-11-30T09:17:14.305Z

If double crux felt like the Inevitable Correct Thing, what other things would we most likely believe about rationality in order for that to be the case?

I think this is a potentially useful question to ask for three reasons. One, it can be a way to install double crux as a mental habit -- figure out ways of thinking which make it seem inevitable. Two, to the extent that we think double crux really is quite useful, but don't know exactly why, that's Bayesian evidence for whatever we come up with as potential justification for it. But, three, pinning down sufficient conditions for double crux can also help us see limitations in its applicability (IE, point toward necessary conditions).

I like the four preconditions Duncan listed:

- Epistemic humility.

- Good faith.

- Confidence in the existence of objective truth.

- Curiosity.

I made my list mostly by moving through the stages of the algorithm and trying to justify each one. Again, these are things which I think might or might not be true, but which I think would help motivate one step or another of the double crux algorithm if they were true.

- A mindset of gathering information from people (that is, a mindset of honest curiosity) is a good way to combat certain biases ("arguments are soldiers" and all that).

- Finding disagreements with others and finding out why they believe what they believe is a good way to gather information from them.

- Most people (or perhaps, most people in the intended audience) are biased to argue for their own points as a kind of dominance game / intelligence signaling. This reduces their ability to learn things from each other.

- Telling people not to do that, in some appropriate way, can actually improve the situation -- perhaps by subverting the signaling game, making things other than winning arguments get you intelligence-signaling-points.

- Illusion of transparency is a common problem, and operationalizing disagreements is a good way to fight against the illusion of transparency.

- Or: Free-floating beliefs are a common problem, and operationalization is a good way to fight free-floating beliefs.

- Or: operationalizing / discussing examples is a good way to make things easier to reason about, which people often don't take enough advantage of.

- Seeking your cruxes helps ensure your belief isn't free-floating: if the belief is doing any work, it must make some predictions (which means it could potentially be falsified). So, in looking for your cruxes, you're doing yourself a service, not just the other person.

- Giving your cruxes to the other person helps them disprove your beliefs, which is a good thing: it means you're providing them with the tools to help you learn. You have reason to think they know something you don't. (Just be sure that your conditions for switching beliefs are good!)

- Seeking out cruxes shows the other person that you believe things for reasons: your beliefs could be different if things were different, so they are entangled with reality.

- In ordinary conversations, people try to have modus ponens without modus tollens: they want a belief that implies lots of things very strongly, but which is immune to attack. Bayesian evidence doesn't work this way; a hypothesis which makes sharp predictions is necessarily sticking its neck out for the chopping block if the predictions turn out false. So, asking what would change your mind (asking for cruxes) is in a way equivalent to asking for implications of your belief. However, it's doing it in a way which enforces the equivalence of implication and potential falsifier.

- Asking for cruxes from them is a good way to avoid wasting time in a conversation. You don't want to spend time explaining something only to find that it doesn't change their mind on the issue at hand. (But, you have to believe that they give honest cruxes, and also that they are working to give you cruxes which could plausibly lead to progress rather than ones which will just be impossible to decide one way or the other.)

- It's good to focus on why you believe what you believe, and why they believe what they believe. The most productive conversations will tend to concentrate on the sources of beliefs rather than the after-the-fact reasoning, because this is often where the most evidence lies.

- If you disagree with their crux but it isn't a crux for you, then you may have info for them, but the discussion won't be very informative for your belief. Also, the weight of the information you have is less likely to be large. Perhaps discuss it, but look for a double crux.

- If they disagree with your crux but it isn't a crux for them, then there may be information for you to extract from them, but you're allowing the conversation to be biased toward cherry-picking disproof of your belief; perhaps discuss, but try to get them to stick their neck out more so that you're mutually testing your beliefs.

Of all of this, my attempt to justify looking for a double crux rather than accepting single-person cruxes sticks out to me as especially weak. Also, I think a lot of the above points get something wrong with respect to good faith, but I'm not quite sure how to articulate my confusion on that.

comment by SarahNibs (GuySrinivasan) · 2016-11-29T23:50:31.245Z

This is well written and makes me want to play.

I think the cartoon could benefit from concrete, short, A and B. It also may benefit from rewording the final statements to "If I change my mind about B, that would change my mind about A too"?

Take some of your actual double crux sessions, boil down A and B into something short, try the comic out with the concrete example?

comment by imposterlife · 2021-12-12T12:11:20.564Z

Has anyone here tried building a UI for double crux conversations? The format has the potential to transform contentious conversations and debates, but right now it's unheard of outside of this community, mostly due to difficulties in execution.

Graph databases (the structure used for Roam Research) would be the perfect format, and the conversations would benefit greatly from a standardized visual approach (much easier than whiteboarding or trying to write every point down). The hard part would be figuring out how to standardize it, which would involve having several conversations and debating the best way to break them down.

If anyone's interested in this, let me know.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2021-12-12T18:18:21.339Z

I believe Owen Shen has tried this; I cannot at the moment recall his LW username but it might just be some permutation of "Owen Shen."

↑ comment by Vaniver · 2021-12-13T00:48:42.159Z

I think you mean lifelonglearner.

↑ comment by imposterlife · 2021-12-14T18:15:12.799Z

Thanks! Got in touch and he recommended Kialo, which appears to be a good basic implementation. It's missing some flexibility - specifically the ability to connect every topic - but that might be a feature.

I envisioned creating a giant knowledge web, where any topic can link to any other topic. For example, a discussion on The Trolley Dilemma might have arguments with edges connecting to discussions on Utilitarianism and Consequentialism. The site's format doesn't allow linking discussions; each discussion has one topic and the arguments flow down from there.

This creates redundancy (the site has many topics with similar arguments inside), but it also makes each individual discussion easier to keep track of, since jumping from topic to topic within one discussion can be hard to follow.
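The tree-versus-web tradeoff can be made concrete with a toy model. This is a hypothetical sketch and is not Kialo's actual data model: in the tree layout every discussion keeps its own copy of each argument, while in the web layout an argument is a single shared node that several discussions link to. The topic and argument strings and the helper names are invented for illustration.

```python
# Hypothetical sketch: NOT Kialo's actual data model.

# Tree layout: each discussion owns its own copy of every argument under it.
tree = {
    "Trolley Dilemma": ["Only consequences matter", "Five lives outweigh one"],
    "Consequentialism": ["Only consequences matter"],
    "Utilitarianism": ["Only consequences matter", "Five lives outweigh one"],
}

# Web layout: each argument is one shared node; discussions link to it by edges.
web_edges = {(topic, arg) for topic, args in tree.items() for arg in args}


def argument_nodes_in_tree(tree: dict[str, list[str]]) -> int:
    """Every copy of an argument is a separate node in the tree layout."""
    return sum(len(args) for args in tree.values())


def argument_nodes_in_web(edges: set[tuple[str, str]]) -> int:
    """Identical arguments collapse into a single node in the web layout."""
    return len({arg for _, arg in edges})


def discussions_citing(edges: set[tuple[str, str]], argument: str) -> list[str]:
    """Follow edges backwards: which discussions share this argument node?"""
    return sorted(topic for topic, arg in edges if arg == argument)


print(argument_nodes_in_tree(tree))  # 5
print(argument_nodes_in_web(web_edges))  # 2
print(discussions_citing(web_edges, "Only consequences matter"))
# ['Consequentialism', 'Trolley Dilemma', 'Utilitarianism']
```

The web removes the duplicated nodes, but no single discussion is a self-contained tree any more, which is roughly the "easier to keep track of" cost described above.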

I'll try it out on some conversations and see how it works. Based on other posts in this thread, double crux conversations rarely go exactly as envisioned.

comment by Robin · 2016-11-30T20:05:30.668Z · LW(p) · GW(p)

How much will you bet that there aren't better strategies for resolving disagreement?

Given the complexity of this strategy, it seems to me that in most cases it is more effective to do some combination of the following:

1) Agree to disagree
2) Change the subject of disagreement
3) Find new friends who agree with you
4) Change your beliefs, not because you believe they are wrong but because other people believe they are wrong
5) Violence (I don't advocate this in general, but in practice it's what humans have done when they disagreed throughout history)

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-11-30T21:45:03.369Z · LW(p) · GW(p)

The word "better" is doing a lot of work (more successful? Lower cost?), but in my personal experience and the experience of CFAR as a rationality org, double crux looks like the best all-around bet. (1) is a social move that sacrifices progress for happiness, and double crux is at least promising insofar as it lets us make that tradeoff go away. (2) sort of ... is? ... what double crux is doing—moving the disagreement from something unresolvable to something where progress can be made. (3) is absolutely a good move if you're prioritizing social smoothness or happiness or whatever, but a death knell for anyone with reasons to care about the actual truth (such as those working on thorny, high-impact problems). (4) is anathema for the same reason as (3). And (presumably like you), we're holding (5) as a valuable-but-costly tool in the toolkit and resorting to it as rarely as possible.

I would bet $100 of my own money that nothing "rated as better than double crux for navigating disagreement by 30 randomly selected active LWers" comes along in the next five years, and CFAR as an org is betting on it with both our street cred and with our actual allotment of time and resources (so, value in the high five figures in US dollars?).

Replies from: Robin↑ comment by Robin · 2016-12-09T19:30:27.455Z · LW(p) · GW(p)

I'd take your bet if it were for the general population, not LWers...

My issue with CFAR is that it seems to be more focused on teaching a subset of people (LWers or people nearby in mindspace) how to communicate with each other than on teaching them how to communicate with people who are different from them.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-12-09T23:11:01.380Z · LW(p) · GW(p)

That's an entirely defensible impression, but it's also actually false in practice (demonstrably so when you see us at workshops or larger events). Correcting the impression (which again you're justified in having) is a separate issue, but I consider the core complaint to be long-since solved.

Replies from: Robin↑ comment by Robin · 2016-12-10T04:44:35.690Z · LW(p) · GW(p)

I'm not sure what you mean and I'm not sure that I'd let a LWer falsify my hypothesis. There are clear systemic biases LWers have which are relatively apparent to outsiders. Ultimately I am not willing to pay CFAR to validate my claims and there are biases which emerge from people who are involved in CFAR whether as employees or people who take the courses (sunk cost as well as others).

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-12-30T05:12:50.569Z · LW(p) · GW(p)

I can imagine that you might have hesitated to list specifics to avoid controversy or mud-slinging, but I personally would appreciate concrete examples, as it's basically my job to find the holes you're talking about and try to start patching them.

comment by RayTaylor · 2021-09-03T08:33:03.590Z · LW(p) · GW(p)

Nice to see the Focusing/Gendlin link (but it's broken!)

Are you familiar with "Getting To Yes" by Ury and the Harvard mediators?

They were initially trained by Marshall Rosenberg, who drew on Gendlin and Rogers. I don't know if Double Crux works, but NVC (Nonviolent Communication) mediation really does. Comparison studies of effectiveness would be interesting!

For more complex/core issues, Convergent Facilitation by Miki Kashtan is brilliant, as is www.restorativecircles.org by Dominic Barter, who is very insightful on our unconscious informal "justice systems".

Fast Consensing, Sociocracy and Holacracy are also interesting.

↑ comment by I_D_Sparse · 2017-03-17T19:31:58.604Z · LW(p) · GW(p)

Yes, but the idea is that a proof within one axiomatic system does not constitute a proof within another.

comment by ike · 2016-11-29T22:47:16.385Z · LW(p) · GW(p)

2 points:

1. I've used something similar when evaluating articles: I ask, "What statement of fact would have to be true to make the main vague conclusion of this article correct?" Then I try to figure out whether that fact is true.

2.

For instance: (wildly asserts) "I bet if everyone wore uniforms there would be a fifty percent reduction in bullying." (pauses, listens to inner doubts) "Actually, scratch that—that doesn't seem true, now that I say it out loud, but there is something in the vein of reducing overt bullying, maybe?"

A problem with doing that is that saying something may "anchor" you into giving the wrong confidence level. You might be underconfident since you're doing this without data, or you might just expect yourself to not believe it on a second look.

↑ comment by I_D_Sparse · 2017-03-18T00:50:32.765Z · LW(p) · GW(p)

If someone uses different rules than you to decide what to believe, then things that you can prove using your rules won't necessarily be provable using their rules.

Replies from: SnowSage4444↑ comment by SnowSage4444 · 2017-03-18T15:01:28.855Z · LW(p) · GW(p)

No, really, what?

What "different rules" could someone use to decide what to believe, besides "Because logic and science say so"? "Because my God said so"? "Because these tea leaves said so"?

Replies from: hairyfigment, I_D_Sparse↑ comment by hairyfigment · 2017-03-20T18:32:33.182Z · LW(p) · GW(p)

Yes, but as it happens that kind of difference is unnecessary in the abstract. Besides the point I mentioned earlier, you could have a logical set of assumptions for "self-hating arithmetic" that proves arithmetic contradicts itself.

Completely unnecessary details here.

↑ comment by I_D_Sparse · 2017-03-18T20:56:42.900Z · LW(p) · GW(p)

Unfortunately, yes.

comment by I_D_Sparse · 2017-03-10T19:57:56.574Z · LW(p) · GW(p)

What if the disagreeing parties have radical epistemological differences? Double crux seems like a good strategy for resolving disagreements between parties that have an epistemological system in common (and access to the same relevant data), because getting to the core of the matter should expose that one or both of them is making a mistake. However, between two or more parties that use entirely different epistemological systems - e.g. rationalism and empiricism, or skepticism and "faith" - double crux should, if used correctly, eventually lead all disagreements back to epistemology, at which point... what, exactly? Use double-crux again? What if the parties don't have a meta-epistemological system in common, or indeed, any nth-order epistemological system in common? Double crux sounds really useful, and this is a great post, but a system for resolving epistemological disputes would be extremely helpful as well (especially for those of us who regularly converse with "faith"-ists about philosophy).

Replies from: gjm↑ comment by gjm · 2017-03-13T18:02:37.079Z · LW(p) · GW(p)

Is there good reason to believe that any method exists that will reliably resolve epistemological disputes between parties with very different underlying assumptions?

Replies from: Lumifer, hairyfigment, I_D_Sparse↑ comment by hairyfigment · 2017-03-14T01:10:56.855Z · LW(p) · GW(p)

Not if they're sufficiently different. Even within Bayesian probability (technically) we have an example in the hypothetical lemming race with a strong Gambler's Fallacy prior. ("Lemming" because you'd never meet a species like that unless someone had played games with them.)
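One concrete stand-in for a coherent prior with gambler's-fallacy behaviour (our own assumption, not necessarily the construction hairyfigment has in mind): an agent certain that the flips are drawn without replacement from an urn holding exactly 50 heads and 50 tails. Compare it with an agent using Laplace's rule of succession (a uniform prior on the coin's bias). Both are Bayesian updaters, yet the same run of heads pushes them in opposite directions. The function names and urn size are hypothetical.

```python
from fractions import Fraction


def laplace_prob_heads(heads: int, flips: int) -> Fraction:
    """P(next flip is heads) under a uniform Beta(1, 1) prior on the coin's
    bias, i.e. Laplace's rule of succession: (heads + 1) / (flips + 2)."""
    return Fraction(heads + 1, flips + 2)


def urn_prob_heads(heads: int, flips: int, n_heads: int = 50, n_tails: int = 50) -> Fraction:
    """P(next flip is heads) for an agent certain the flips are drawn without
    replacement from an urn with exactly n_heads heads and n_tails tails.
    Each observed head makes the next head LESS likely."""
    tails = flips - heads
    remaining_heads = n_heads - heads
    remaining_tails = n_tails - tails
    if remaining_heads < 0 or remaining_tails < 0 or remaining_heads + remaining_tails == 0:
        raise ValueError("data impossible under this prior, or urn exhausted")
    return Fraction(remaining_heads, remaining_heads + remaining_tails)


# Both agents watch the same streak of k heads in k flips.
for k in (0, 5, 10):
    print(k, laplace_prob_heads(k, k), urn_prob_heads(k, k))
# 0 1/2 1/2
# 5 6/7 9/19
# 10 11/12 4/9
```

Because the urn agent's prior never changes shape in response to data, no amount of shared evidence brings the two agents into agreement, which is the sense in which "sufficiently different" priors block resolution.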

On the other hand, if an epistemological dispute actually stems from factual disagreements, we might approach the problem by looking for the actual reasons people adopted their different beliefs before having an explicit epistemology. Discussing a religious believer's faith in their parents may not be productive, but at least progress seems mathematically possible.

↑ comment by I_D_Sparse · 2017-03-13T20:12:10.807Z · LW(p) · GW(p)

Not particularly, no. In fact, there probably is no such method - either the parties must agree to disagree (which they could honestly do if they're not all Bayesians), or they must persuade each other using rhetoric as opposed to honest, rational inquiry. I find this unfortunate.

Replies from: snewmark

comment by denimalpaca · 2017-03-09T22:51:13.902Z · LW(p) · GW(p)

This looks like a good method to derive lower-level beliefs from higher-level beliefs. The main thing to consider when taking a complex statement of belief from another person is that there is likely more than one lower-level belief feeding into that higher-level belief.

In doxastic logic, a belief is really an operator on some information. At the most basic level, we are believing, or operating on, sensory experience. More complex beliefs rest on the belief operation applied to knowledge or understanding, where I define knowledge as belief in some information: Belief(x) = Knowledge_x. These vertices of knowledge can connect along relational edges to form a graph, and a subset of its vertices and edges could be called an understanding.

So I think it's important not only to use this method as a reverse operator of belief, but also to take an extra step and acknowledge the other points on the knowledge graph that make up someone's understanding. Those knowledge vertices can then be reverse-operated on as well, giving a more complete formulation of both parties' maps.
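The point that one higher-level belief usually rests on several lower-level ones can be sketched as a graph walk. Assumptions, all ours: beliefs are plain strings, an edge means "rests on", and `supporting_beliefs` and `foundations` are hypothetical helpers. This does not attempt to model the modal operators of doxastic logic, only the graph picture described above.

```python
def supporting_beliefs(graph: dict[str, list[str]], belief: str) -> set[str]:
    """Every belief reachable from `belief` by following 'rests on' edges,
    found with an iterative depth-first search (safe on cycles)."""
    seen: set[str] = set()
    stack = list(graph.get(belief, []))
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, []))
    return seen


def foundations(graph: dict[str, list[str]], belief: str) -> set[str]:
    """Supporting beliefs that rest on nothing further (the leaves)."""
    return {b for b in supporting_beliefs(graph, belief) if not graph.get(b)}


# Hypothetical belief graph: one top-level belief resting on two branches.
graph = {
    "Steel is better than aluminium": ["Strength matters most", "Steel is stronger"],
    "Steel is stronger": ["Steel held more weight in tests I saw"],
    "Strength matters most": ["My projects are load-bearing"],
}

print(sorted(foundations(graph, "Steel is better than aluminium")))
# ['My projects are load-bearing', 'Steel held more weight in tests I saw']
```

Stopping at the first supporting belief would find only one branch; walking the whole support set surfaces both the factual leaf and the values leaf.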

comment by IsTheLittleLion · 2017-02-05T12:27:37.822Z · LW(p) · GW(p)

This is my response in which I propose a different approach.

comment by Rubix · 2016-12-02T01:21:23.059Z · LW(p) · GW(p)

For the author and the audience: what are your favourite patience- and sanity-inducing rituals?

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-12-05T19:22:47.538Z · LW(p) · GW(p)

For me, sanity always starts with remembering times that I was wrong—confidently wrong, arrogantly wrong, embarrassingly wrong. I have a handful of dissimilar situations cached in my head as memories (there's one story about popsicles, one story about thinking a fellow classmate was unintelligent, one story about judging a student's performance during a tryout, one story about default trusting someone with some sensitive information), and I can lean on all of those to remind myself not to be overconfident, not to be dismissive, not to trust too hard in my feeling of rightness.

As for patience, I think the key thing is a focus on the value of the actual truth. If I really care about finding the right answer, it's easy to be patient, and if I don't, it's a good sign that I should disengage once I start getting bored or frustrated.

comment by MrMind · 2016-12-01T14:10:52.066Z · LW(p) · GW(p)

Correct me if I'm wrong. You are searching for a sentence B such that:

1) if B then A

2) if not B, then not A. Which implies if A then B.

Which implies that you are searching for an equivalent argument. How can an equivalent argument have explanatory power?
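Read as strict material implication, the two conditions can be checked by brute force over all truth assignments: together they hold exactly when A and B have the same truth value, and condition 2 is the contrapositive of "if A then B". This sketch checks only that propositional reading; it does not model cruxes as probabilistic "would change my mind" relations.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: p -> q is false only when p is true and q is false."""
    return (not p) or q


def both_conditions(a: bool, b: bool) -> bool:
    # 1) if B then A, and 2) if not B then not A
    return implies(b, a) and implies(not b, not a)


# Every assignment of truth values to (A, B).
rows = [(a, b, both_conditions(a, b), a == b) for a, b in product([True, False], repeat=2)]
for a, b, conditions, equivalent in rows:
    print(a, b, conditions, equivalent)

# The conditions match A <-> B in every row ...
assert all(conditions == equivalent for _, _, conditions, equivalent in rows)
# ... and "if not B then not A" is the contrapositive of "if A then B".
assert all(implies(not b, not a) == implies(a, b) for a, b in product([True, False], repeat=2))
```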

Replies from: CCC, Unnamed, OneStep↑ comment by CCC · 2016-12-01T14:36:39.585Z · LW(p) · GW(p)

"Aluminium is better than steel!" cries Alice.

"Steel is better than aluminium!" counters Bob. Both of them continue to stubbornly hold these opinions, even in the face of vehement denials from the other.

It is not at once clear how to resolve this issue. However, both Alice and Bob have recently read the above article, and attempt to apply it to their disagreement.

"Aluminium is better than steel because aluminium does not rust," says Alice. "The statement 'aluminium does not rust, but steel does' is an equivalent argument to 'aluminium is better than steel'".

"Steel is better than aluminium because steel is stronger than aluminium," counters Bob. "Steel can hold more weight than aluminium without bending, which makes it a superior metal."

"So the crux of our argument," concludes Alice, "is really that we are disagreeing on what it is that makes a metal better; I am placing more importance on rustproofing, while you are showing a preference for strength?"

Replies from: Lumifer, MrMind↑ comment by Lumifer · 2016-12-01T15:22:04.876Z · LW(p) · GW(p)

For this example you don't need any double cruxes. Alice and Bob should have just defined their terms, specifically the word "better" to which they attach different meanings.

Replies from: Duncan_Sabien, CCC↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2016-12-01T18:47:27.604Z · LW(p) · GW(p)

True, but they then could easily have gone on to do a meaningful double crux about why their chosen quality is the most important one to attend to.
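To make the aluminium/steel example concrete: neither Alice nor Bob disputes the other's fact (aluminium doesn't rust, steel is stronger), so the verdict depends only on how much each person weights each criterion. The weights below are hypothetical, and `better_metal` is an invented helper; the sketch just shows that the remaining disagreement lives entirely in the weights.

```python
# Which metal wins on each criterion, as stated in the dialogue.
WINNER = {"rust resistance": "aluminium", "strength": "steel"}


def better_metal(weights: dict[str, float]) -> str:
    """Sum each metal's weights over the criteria it wins and return the
    metal with the highest total. Weights are hypothetical stand-ins for
    how much a person cares about each criterion."""
    totals: dict[str, float] = {}
    for criterion, winner in WINNER.items():
        totals[winner] = totals.get(winner, 0.0) + weights.get(criterion, 0.0)
    return max(totals, key=totals.get)


alice = {"rust resistance": 0.7, "strength": 0.3}
bob = {"rust resistance": 0.3, "strength": 0.7}
print(better_metal(alice))  # aluminium
print(better_metal(bob))    # steel
```

Same facts, opposite verdicts: a follow-up double crux would target why each person weights the criteria as they do.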