Excessive AI growth-rate yields little socio-economic benefit.

post by Cleo Nardo (strawberry calm) · 2023-04-04T19:13:51.120Z · 22 comments

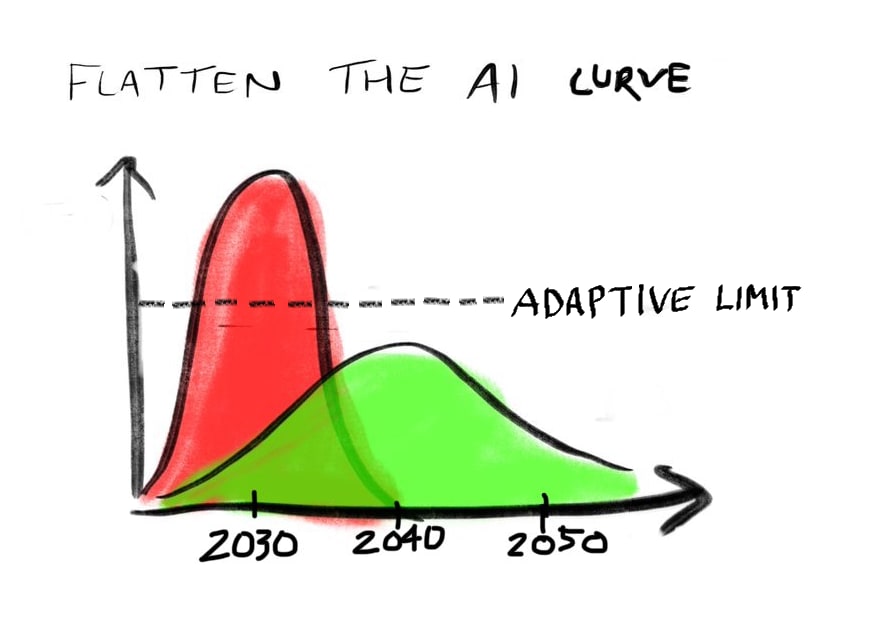


Basic argument

In short —

- There exists an adaptive limit representing the maximum rate at which the economy can adapt to new technology.

- Exceeding this limit yields no socio-economic benefit, in the general case.

- Exceeding this limit imposes significant risks and costs.

- AI growth-rate currently exceeds the adaptive limit.

- Therefore, we should slow the AI growth-rate.

Is this argument sound?

(1) There exists an adaptive limit.

The economy is a complex adaptive system: it tries to maintain a general equilibrium by responding to exogenous changes. These responses are internal homeostatic processes such as the price mechanism, labour movements, and the stock exchange.

However, these homeostatic processes are non-instantaneous. Prices are sticky, employees have contracts, and the NASDAQ closes at 8:00 PM. The internal homeostatic processes which equilibrate the economy operate on a timescale of years, not weeks.
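One way to make "non-instantaneous" concrete is a toy model of sticky prices. The sketch below assumes Calvo-style pricing, a standard textbook simplification that the post does not itself invoke: each period, every firm independently gets to reset its price with a fixed probability, so the share of an initial price gap still unclosed decays geometrically. The 25%-per-quarter reset probability is a hypothetical illustration, not an estimate.

```python
import math


def remaining_gap(reset_prob: float, periods: int) -> float:
    """Share of an initial price gap still unclosed after `periods`,
    assuming each firm resets its price independently with probability
    `reset_prob` per period (Calvo-style stickiness)."""
    return (1.0 - reset_prob) ** periods


def half_life(reset_prob: float) -> float:
    """Number of periods until half of the initial gap has closed."""
    return math.log(0.5) / math.log(1.0 - reset_prob)


# Hypothetical parameter: 25% of firms get to reset prices each quarter.
q = 0.25
print(f"mean price duration: {1 / q:.1f} quarters")
print(f"half-life of the price gap: {half_life(q):.2f} quarters")
for years in (1, 2, 5):
    share = remaining_gap(q, 4 * years)
    print(f"after {years} yr: {share:.1%} of the gap remains")
```

Even with a fairly flexible hypothetical reset rate, a noticeable share of the gap persists after a year; the point of the sketch is only that adjustment takes many periods rather than happening at once.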

Imagine if OpenAI had released ChatGPT-3.5 but hadn't released ChatGPT-4. The release of ChatGPT-3.5 would've had a profound impact on the economy. Here's the standard neo-Keynesian story:

Firstly, people start using ChatGPT-3.5 to automate part of their jobs, increasing their productivity. Some employees use ChatGPT-3.5 to complete more tasks within the same working hours, whereas other employees use ChatGPT-3.5 to complete the same tasks within fewer working hours. In both cases, value is created.

Because employees are completing more tasks, the companies start shipping more products and services. Supply goes up, and quality goes up too. So investors and shareholders receive more money. Because employees take more leisure time, their jobs look more attractive to job-seekers. Hence the supply of labour increases, the wages decrease, and the demand for such labour, at the new price, increases. Because the labour costs have dropped, competing companies will lower prices to gain more market share. And new industries, which would've been unprofitable in the previous economy, are now profitable and viable. This leads to a real-terms wage increase.

After a few years, the economy reaches (or at least approaches) a new general equilibrium in which...

- People have more money to spend.

- The products and services are more abundant, cheaper, and of a higher quality.

- People have more leisure to enjoy themselves.

This is what we call an "AI Summer Harvest".

Notice that the benefit of ChatGPT-3.5 diffuses slowly throughout the economy. Eventually, the economy approaches a new general equilibrium, but this is a non-instantaneous process.

(2) Exceeding this limit yields no socio-economic benefit.

Our story starts with ChatGPT-3.5 and ends with higher social welfare.

This is a common feature of technological progress — a new general-purpose technology will normally yield these three benefits:

- People have more money to spend.

- The products and services are more abundant, cheaper, and of a higher quality.

- People have more leisure to enjoy themselves.

This follows from standard neoclassical economics.

However, the neoclassical analysis only applies when the economy is near equilibrium. When the economy is far from equilibrium, the neoclassical analysis is agnostic, and we must turn to other macroeconomic methods.

According to mainstream macroeconomic theory, until the sticky prices are adjusted, fired employees are rehired, and investments are reallocated, the economy will not be in a better socio-economic situation.

(3) Exceeding this limit imposes significant risks and costs.

This has been covered extensively elsewhere. I'm alluding to —

- Economic shocks (unemployment, inflation, inequality)

- Misinformation, fraud, blackmail, and other crimes.

- Extinction-level risks.

(4) AI growth-rate currently exceeds the adaptive limit.

ChatGPT-3.5 was released circa December 2022, and GPT-4 (in the form of Bing Sydney) was released circa February 2023. That's a three-month gap! This definitely exceeded the adaptive limit. The economic impact of ChatGPT-3.5 would probably have diffused through the economy over 5–10 years.

That's my rough intuition —

- Students study in university for 3–6 years.

- Employees change their job once every 5–10 years.

- Politicians are re-elected on a timescale of 5–10 years.

- Companies can persist for 5–10 years in an unfit economy.

- Big government projects take about 2–10 years.

So 5–10 years is the timescale over which the economy (and society at large) can adapt to socio-economic shocks on the scale of ChatGPT-3.5.

This implies that ChatGPT-4 could've been released in 2028–2033 and we would've reaped the same socio-economic benefits. Why? Because the bottleneck on labour automation isn't that companies need a GPT-4-based chatbot rather than a GPT-3-based chatbot, but rather that firing employees happens very slowly, over years, not weeks.

(5) Therefore, we should slow the AI growth-rate.

I think we should slow the growth-rate of AI to below 0.2 OOMs (orders of magnitude) per year, equivalently 58% year-on-year growth, equivalently a doubling-time of 18 months.[1]
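The three forms of the proposed cap are the same number expressed differently: a rate of r orders of magnitude per year multiplies compute by 10^r each year, and doubles it every log10(2)/r years. A minimal check of that conversion (the function names are illustrative, not from the post):

```python
import math


def oom_rate_to_annual_growth(ooms_per_year: float) -> float:
    """Year-on-year growth (as a fraction) for a rate given in orders of magnitude per year."""
    return 10 ** ooms_per_year - 1


def oom_rate_to_doubling_months(ooms_per_year: float) -> float:
    """Doubling time in months: a doubling is log10(2) OOMs, so it takes log10(2)/rate years."""
    return 12 * math.log10(2) / ooms_per_year


rate = 0.2  # OOMs/year, the cap proposed in the post
print(f"{oom_rate_to_annual_growth(rate):.1%} per year")  # → 58.5% per year
print(f"doubling time about {oom_rate_to_doubling_months(rate):.1f} months")  # → doubling time about 18.1 months
```

The post's "58%" and "18 months" are these values rounded.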

What does this mean in practice?

On March 15 2023, OpenAI released GPT-4, which was trained with an estimated 2.8e+25 FLOPs (about 10^25.45). If OpenAI had followed the 0.2 OOMs/year target, then GPT-4 would've been released on March 29 2029. We can see this by examining the proposed training-run compute limits, shown below: 10^25.45 lies between the 2029 cap (10^25.4) and the 2030 cap (10^25.6). See the original post for details.

| Year | Maximum training footprint (log10 FLOPs) | Maximum training footprint (FLOPs) |
|---|---|---|
| 2020 | 23.6 | 3.98E+23 |
| 2021 | 23.8 | 6.31E+23 |
| 2022 | 24.0 | 1.00E+24 |
| 2023 | 24.2 | 1.58E+24 |
| 2024 | 24.4 | 2.51E+24 |
| 2025 | 24.6 | 3.98E+24 |
| 2026 | 24.8 | 6.31E+24 |
| 2027 | 25.0 | 1.00E+25 |
| 2028 | 25.2 | 1.58E+25 |
| 2029 | 25.4 | 2.51E+25 |
| 2030 | 25.6 | 3.98E+25 |
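The table follows a single rule: the cap on log10(training FLOPs) starts at 23.6 in 2020 and rises by 0.2 per year. The sketch below regenerates the rows and solves for when the cap first admits GPT-4's estimated 2.8e+25 FLOPs. It assumes, since the post does not say, that each year's value applies from 1 January and that the cap rises smoothly in log space between rows; under that convention the crossing lands in late March 2029, in line with the post's date up to the choice of day-count convention.

```python
import math
from datetime import date, timedelta

BASE_YEAR = 2020
BASE_LOG10_FLOP = 23.6  # 2020 row of the table
RATE = 0.2              # OOMs/year


def max_log10_flop(year: float) -> float:
    """Cap on log10(training FLOPs) at a (possibly fractional) year."""
    return BASE_LOG10_FLOP + RATE * (year - BASE_YEAR)


def year_cap_reaches(flop: float) -> float:
    """Fractional year at which the cap first allows a run of `flop` FLOPs."""
    return BASE_YEAR + (math.log10(flop) - BASE_LOG10_FLOP) / RATE


def fractional_year_to_date(y: float) -> date:
    """Map a fractional year to a date, assuming year Y starts on 1 January Y."""
    start = date(int(y), 1, 1)
    days_in_year = (date(int(y) + 1, 1, 1) - start).days
    # date + timedelta ignores the fractional part of a day
    return start + timedelta(days=(y - int(y)) * days_in_year)


# Regenerate the table rows.
for year in range(2020, 2031):
    cap = max_log10_flop(year)
    print(f"| {year} | {cap:.1f} | {10 ** cap:.2E} |")

gpt4_flop = 2.8e25  # estimated GPT-4 training compute quoted in the post
y = year_cap_reaches(gpt4_flop)
print(f"cap reaches GPT-4 scale at year {y:.2f}, around {fractional_year_to_date(y)}")
```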

Implications

- Because economic equilibration is non-instantaneous, there are smaller near-term financial incentives to train GPT-. This makes coordination easier, and also lengthens timelines conditional on no coordination.

- Timelines are more aggressive in worlds with neoclassical economics: many competitors, instantaneous transactions, no transaction costs, non-sticky prices, etc. These things shorten the timescale of economic equilibration.

- Governments (which are mostly neo-Keynesian) avoid economic shocks with significant short-term downsides (e.g. mass unemployment), even if the long-run equilibrium resulting from the shock would be socio-economically productive. Governments prefer to slow exogenous shocks to the adaptive limit. This increases the likelihood that policy-makers will coordinate on slowing AI.

- There is a trending metaphor of an AI Summer Harvest which compares slowing AI to a summer harvest. This article presents an argument underlying the comparison: there's no benefit in a farmer sowing seeds at a greater rate than they harvest crops. The benefits of an AI Summer Harvest should be communicated clearly and repeatedly to policy-makers and the general public.

- A thermodynamic system undergoes a quasi-static process if it remains close to equilibrium throughout the process. This happens when the exogenous change occurs slower than the timescale of the system's internal equilibration. For example, a normal atmospheric heat wave is quasi-static whereas a pressure wave is not. Hence, an equivalent way to say "slow AI growth-rate to the adaptive limit" is to say "AI development should be a quasi-static process".
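The quasi-static condition can be illustrated with a toy first-order relaxation model. The model is an assumption of this sketch, not something the post specifies: the system's state x relaxes toward a moving target with time constant tau (dx/dt = (target - x)/tau), while the target, standing in for the exogenous change, ramps from 0 to 1 over a chosen number of years. The peak gap between target and state measures how far from equilibrium the system gets. The 2-year time constant is hypothetical.

```python
import math


def max_lag(ramp_years: float, tau_years: float, dt: float = 1e-3) -> float:
    """Peak gap between a target ramping linearly 0 -> 1 over `ramp_years`
    and a state relaxing toward it with time constant `tau_years`,
    integrated with forward Euler."""
    steps = int((ramp_years + 5 * tau_years) / dt)
    x, worst = 0.0, 0.0
    for k in range(steps):
        t = k * dt
        target = min(t / ramp_years, 1.0)
        worst = max(worst, target - x)
        x += dt * (target - x) / tau_years
    return worst


def max_lag_exact(ramp_years: float, tau_years: float) -> float:
    """Closed form for the same peak gap: (tau/T) * (1 - exp(-T/tau))."""
    return (tau_years / ramp_years) * (1 - math.exp(-ramp_years / tau_years))


TAU = 2.0  # hypothetical adjustment time constant, in years
for ramp in (0.25, 2.0, 10.0):
    print(f"change spread over {ramp:>5} yr: peak disequilibrium {max_lag(ramp, TAU):.2f}")
```

The peak gap approaches 1 (the full shock) when the change is much faster than tau, and shrinks roughly like tau/T when the change is much slower, which is the quasi-static regime.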

[1] The "0.2 OOMs/year" figure was first proposed by Jaime Sevilla, Director of EpochAI (personal correspondence).

22 comments


comment by AnthonyC · 2023-04-04T20:54:38.228Z

I don't think this argument is sound in general, no. GPT-4 may have only arrived a few months after ChatGPT-3.5, but its economic impacts will also unfold over the same next however many years. I don't think it will take longer than adapting to just ChatGPT-3.5 would have. Only that impact will be greater, and no one in the meantime will have invested (wasted?) money on procedures and infrastructure adapting to less capable tools first.

I think there are versions of similar arguments that are sound. If you gave the ancient Romans a modern tank or a solar panel it would be useless to them; there are too many missing pieces for them to adapt to it at all. But if you went back to 1960 and gave NASA a big crate of TI-89 calculators, well, they knew math, they knew programming, and they had AAA batteries. It could have been a big help pretty quickly. A crate of laptops would probably be more helpful, and take a bit longer to adapt to fully, but I still think they'd start getting benefit almost right away.

The economy, in general, adapts much slower than it in principle could to new technologies. Usually because no one forces anyone's hand, so the efficient route is to keep using old tech until it's time to replace it. Emerging economies adapt several times faster, because they know what they're trying to catch up to. IDK where the adaptation frontier for LLMs would be, exactly? Or how that changes as capabilities increase.

↑ comment by Cleo Nardo (strawberry calm) · 2023-04-04T21:51:39.540Z

The economy is a complex adaptive system which, like all complex adaptive systems, can handle perturbations over the same timescale as its internal homeostatic processes. Beyond that regime, the system will not adapt. If I tap your head, you're fine. If I knock you with an anvil, you're dead.

↑ comment by AnthonyC · 2023-04-04T23:33:32.437Z

Yes, absolutely. The question is where that line lies for each combination of system + perturbation. I agree with most of your claims in the article - just not the claim that each AI iteration is sufficiently different from an economic perspective as to require its own independent 5-10 yr adjustment period. My guess is that some companies will start earlier and some later, some will copy best practices and not bother with some generations/iterations, and that this specific issue will not be more of a problem than, say, Europe and then Japan rebuilding after WWII with more modern factories and steel mills and so on than America had. Probably less, since the software is all handled on the back end and the costs of switching should be relatively low.

↑ comment by Cleo Nardo (strawberry calm) · 2023-04-05T14:02:31.622Z

Countries that were on the frontier of the Industrial Revolution underwent massive economic, social, and political shocks, and it would've been better if the change had been smoothed over about double the duration.

Countries that industrialised later also underwent severe shocks, but at least they could copy the solutions to those shocks along with the technology.

Novel general-purpose technology introduces problems, and there is a maximum rate at which problems can be fixed by the internal homeostasis of society. For ChatGPT-3.5, I claim, the corresponding adjustment takes at least 5–10 years.

ChatGPT-3.5 would've led to maybe a 10% reallocation of labour; this figure doesn't just include directly automated jobs, but also all the second- and third-order effects. ChatGPT-4, marginal to ChatGPT-3.5, would've led to maybe a 4% reallocation of labour.

It's better to "flatten the curve" of labour reallocation over 5–10 years rather than 3 months because massive economic shocks (e.g. unemployment) have socio-economic risks and costs.
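The "flatten the curve" point is rough arithmetic on the figures above. A minimal sketch, assuming the 10% and 4% reallocations simply add and are spread evenly over the adjustment window (simplifications not stated in the comment):

```python
def annual_reallocation_rate(share_of_labour: float, years: float) -> float:
    """Average fraction of the workforce reallocated per year if
    `share_of_labour` is spread evenly over `years`."""
    return share_of_labour / years


total = 0.10 + 0.04  # rough 10% (ChatGPT-3.5) plus 4% marginal (ChatGPT-4)
for label, years in [("3 months", 0.25), ("5 years", 5.0), ("10 years", 10.0)]:
    rate = annual_reallocation_rate(total, years)
    print(f"{label:>9}: {rate:.1%} of labour reallocated per year")
```

Compressing the same reallocation into 3 months rather than 5 years multiplies the average annual rate by 20.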

↑ comment by AnthonyC · 2023-04-05T22:11:52.562Z

That's possible. Have you read Robin Hanson's 2000 paper on economic growth over the past 2 million years? If not, you might find it interesting. Talks in part about how new modes of spreading knowledge and invention may explain past transitions in the economic growth rate.

It doesn't mention AI at all (though Hanson has made the connection multiple times since), but does say that if the data series trend continues, that suggests a possible transition to a new growth mode some time in the next couple of decades with a doubling time of a few days to a few years. To me, AI in some form seems like a reasonable candidate for that, to the extent it can take human limits on adaptation speed out of the equation.

↑ comment by Lost Futures (aeviternity1) · 2023-04-04T21:39:18.962Z

Yep, just as developing countries don't bother with landlines, so too will companies, as they overcome inertia and embrace AI, choose to skip older outdated models and jump to the frontier, wherever that may lie. No company embracing LLMs in 2024 is gonna start by trying to first integrate GPT2, then 3, then 4 in an orderly and gradual manner.

comment by Ppxl (ppxl) · 2023-04-05T13:07:06.924Z

Can our economic system ever adapt to AI? There’s a lot of reason to believe no.

When we automated agriculture, we moved workers to manufacturing. When we automated manufacturing, we moved workers to services. Even places like China and Vietnam are service economies now. Where do we throw people now that services will be automated? As far as I can tell, we don't have a solution. To adapt to AI, we need major post-consumer restructuring of how capital is distributed. It's not something the free market can adapt to.

↑ comment by quanticle · 2023-04-05T18:45:13.815Z

That very same argument could have been (and was!) made for steam engines, electric motors, and (non-AI) computers. In 2023, we can look back and say, "Of course, as workers were displaced out of agriculture and handicrafts, they went into manufacturing and services." But it wasn't apparent in 1823 that such a transition would occur. Indeed, in 1848, one of the motivations for Marx to write The Communist Manifesto was his fervent belief that industrial manufacturing and services would not be able to absorb all the surplus workers, leading to significant alienation and, eventually, revolution.

↑ comment by Cleo Nardo (strawberry calm) · 2023-04-05T13:17:46.614Z

Sure, the "general equilibrium" also includes the actions of the government and the voting intentions of the population. If change is slow enough (i.e. below 0.2 OOMs/year) then the economy will adapt.

Perhaps wealth redistribution would be beneficial — in that case, the electorate would vote for political parties promising wealth redistribution. Perhaps wealth redistribution would be unbeneficial — in that case, the electorate would vote for political parties promising no wealth redistribution.

This works because electoral democracy is a (non-perfect) error-correction mechanism. But it operates over timescales of 5–10 years. So we must keep economic shocks slower than that.

↑ comment by cousin_it · 2023-04-05T13:33:22.251Z

If AI-induced change leads to enough concentration of economic and military power that most people become economically and militarily irrelevant, I don't expect democracy to last long. One way or another, the distribution of political power will shift toward the actual distribution of economic and military power.

↑ comment by Ppxl (ppxl) · 2023-04-05T14:00:35.894Z

This is what I believe as well. The post-AI economy will look absolutely nothing like what we have now. It's not something you can achieve via policy changes. There are way too many vested interests and institutions we don't know how to ever get rid of peacefully.

comment by ojorgensen · 2023-04-04T20:01:45.514Z

Just a nit-pick, but to me "AI growth-rate" suggests economic growth due to progress in AI, as opposed to simply technical progress in AI. I think "Excessive AI progress yields little socio-economic benefit" would make the argument more immediately clear.

comment by YafahEdelman (yafah-edelman-1) · 2023-04-04T22:53:04.249Z

I think point (2) of this argument either means something weaker than it needs to for the rest of the argument to go through or is just straightforwardly wrong.

If OpenAI released a weakly general (but non-singularity-inducing) GPT5 tomorrow, it would pretty quickly have significant effects on people's everyday lives. Programmers would vaguely describe a new feature and the AI would implement it, AIs would polish any writing I do, I would stop using Google to research things and instead just chat with the AI and have it explain such-and-such paper I need for my work. In their spare time people would read custom books (or watch custom movies) tailored to their extremely niche interests. This would have a significant impact on the everyday lives of people within a month.

It seems conceivable that somehow the "socio-economic benefits" wouldn't be as significant that quickly - I don't really know what "socio-economic benefits" are exactly.

However, the rest of your post seems to treat point (2) as proving that there would be no upside from a more powerful AI being released sooner. This feels like a case of a fancy clever theory confusing an obvious reality: better AI would impact a lot of people very quickly.

↑ comment by quanticle · 2023-04-05T22:12:15.705Z

This would have a significant impact on the everyday lives of people within a month.

It would have a drastic impact on your life in a month. However, you are a member of a tiny fraction of humanity, sufficiently interested and knowledgeable about AI to browse and post on a forum that's devoted to a particularly arcane branch of AI research (i.e. AI safety). You are in no way representative. Neither am I. Nor is, to a first approximation, anyone who posts here.

The average American (who, in turn, isn't exactly representative of the world) has only a vague idea of what ChatGPT is. They've seen some hype videos on TV or on YouTube. Maybe they've seen one of their more technically sophisticated friends or relatives use it. But they don't really know what it's good for, they don't know how it would integrate into their lives, and they're put off by the numerous flaws they've heard about with regards to generative models. If OpenAI came out with GPT-5 tomorrow, and it fixed all, or almost all, of the flaws in GPT-4, it would still take years, at least, and possibly decades before it was integrated into the economy in a way that the average American would be able to perceive.

This has nothing to do with the merits of the technology. It has to do with the psychology of people. People's appetite for novelty varies, like many other psychological traits, along a spectrum. On one hand, you have people like Balaji Srinivasan, who excitedly talk about every new technology, whether it be software, financial, or AI. At the other end, you have people like George R. R. Martin, who're still using software written in the 1980s for their work, just because it's what they're familiar and competent with. Most people, I'd venture to guess, are somewhere in the middle. LessWrong is far towards the novelty-seeking end of the spectrum, at, or possibly farther ahead than Balaji Srinivasan. New advancements in AI affect us because we are open to being affected by them, in a way that most people are not.

↑ comment by YafahEdelman (yafah-edelman-1) · 2023-04-18T21:43:40.606Z

I'm a bit confused where you're getting your impression of the average person / American, but I'd be happy to bet on LLMs that are at least as capable as GPT3.5 being used (directly or indirectly) on at least a monthly basis by the majority of Americans within the next year.

↑ comment by quanticle · 2023-04-19T04:28:36.901Z

How would you measure the usage? If, for example, Google integrates Bard into its main search engine, as they are rumored to be doing, would that count as usage? If so, I would agree with your assessment.

However, I disagree that this would be a "drastic" impact. A better Google search is nice, but it's not life-changing in a way that would be noticed by someone who isn't deeply aware of and interested in technology. It's not like, e.g. Google Maps navigation suddenly allowing you to find your way around a strange city without having to buy any maps or decipher local road signs.

↑ comment by Cleo Nardo (strawberry calm) · 2023-04-18T22:18:01.843Z

"Directly or indirectly" is a bit vague. Maybe make a market on Manifold if one doesn't exist already.

↑ comment by YafahEdelman (yafah-edelman-1) · 2023-04-19T01:53:42.462Z

I'm specifically interested in finding something you'd be willing to bet on - I can't find an existing Manifold market, would you want to create one that you can decide? I'd be fine trusting your judgment.

↑ comment by Cleo Nardo (strawberry calm) · 2023-04-18T22:11:37.834Z

↑ comment by awg · 2023-04-05T22:17:16.029Z

FWIW South Park released an episode this season with ChatGPT as its main focus. I do think that public perception is probably moving fairly quickly on things like this these days. But I agree with you generally that if you're posting here about these things then you're likely more on the forefront than the average American.

comment by [deleted] · 2023-04-06T02:44:17.928Z

I think what bothers me here is that there are baked-in assumptions:

1. we get AGI or some other technology that is incredibly powerful/disrupts many jobs

2. Humans have to have jobs to deserve to eat (even though the new technology makes food and everything else much cheaper)

3. We can't think of enough new jobs fast enough due to (1)

4. We have to let all the people whose jobs we disrupted starve, since they don't deserve anything because they are not working

Conclusion: If you posit that you must maintain capitalism, exactly as it is, changing nothing, and you get powerful disruptive technology, you will have problems.

But is the conclusion "we should impede it" correct? Notice this is not a 1-party game. Other economic systems* can adopt powerful technology right away, and they will have an immense advantage if they have it and others don't.

Had OpenAI held back GPT-4, long before 2033 other countries would have similar technology and would surge ahead in power.

*I am thinking of state-supported capitalism, similar to China today or the USA during its heyday.

comment by dr_s · 2023-04-05T15:55:34.917Z

This implies that ChatGPT-4 could've been released in 2028–2033 and we would've yielded the same socio-economic benefits. Why? Because the bottleneck on labour automation isn't that companies need a GPT-4-based chatbot rather than a GPT-3-based chatbot, but rather that firing employees happens very slowly, over years, not weeks.

Hm, somewhat disagree here with the specific line of thought - in the end, GPT-4 does pretty much the same things as GPT-3.5, just better. It ended up being the premium, higher quality version of an otherwise free service. Two different tiers. I don't think there's this effect because I think what's actually going to happen is just that people who are willing to pay and think they can get a benefit from it jump directly to GPT-4, and all they gain from it is "fewer mistakes, higher quality content". In general, it's not like innovations come one at a time anyway; this is just the unusual case of a company essentially obsoleting its own product in a matter of months.

Mind you, I'm with you on the issue of shocks and potential risks coming with this. But I think we would have had them anyway, they're kind of part and parcel of how disruptive this tech can be (and sadly, it is the most disruptive for parasitic sectors like propaganda and scamming).

comment by _Mark_Atwood (fallenpegasus) · 2023-04-05T02:36:49.407Z

We could set up a "Bureau of Sustainable Research" so that technological advance does not outstrip equity in the economy.