Comments

The whole thing might be LLM slop. Where do ICU nurses have a low enough workload that they can slack off on the job without consequences?

Some people do not have the intuition that organizations should run as efficiently as possible and avoid wasting time or resources.

Some of us don't want every organization to be turned into an Amazon warehouse. An organization that runs as efficiently as possible and wastes no time at all is one that's pretty hellish to work in.

if you’re in a dysfunctional organization where everything is about private fiefdoms instead of getting things done…why not…leave?

Because the dysfunctional organization pays well. Or the commute is really short, and I'd rather not move. Or this job allows me to stay close to a sick or elderly family member that I care for. Or perhaps the dysfunctional organization is sponsoring my visa, and it'd be difficult to leave and remain in the country. Maybe my co-workers are really nice. Or I think that any other organization that would hire me would be just as dysfunctional, or dysfunctional in a different way.

There are lots of reasons that people continue to work for inefficient organizations.

The content of the complaint caused me to have additional doubt about the truth of Ann Altman's claims. One of the key claims in pythagoras0515's post is that Ann Altman's claims have been self-consistent. That is, Ann Altman has been claiming that approximately the same acts occurred, over a consistent period of time, when given the opportunity to express her views. However, here, there is significant divergence. In the lawsuit complaint, she is alleging that the abuse took place repeatedly over eight to nine years, a claim that is not supported by any of the evidence in pythagoras0515's post. In addition, another claim from the original post is that she's only bringing up these allegations now because she suppressed the memory of the abuse. The science behind suppressed memory is controversial, but I doubt that even its staunchest advocates would claim that a person could involuntarily suppress the memory of repeated acts carried out consistently over a long period of time. Therefore, I am more inclined to doubt Ann Altman's allegations based on the contents of the initial complaint filed for the lawsuit.

All that said, I do look forward to seeing what other evidence she can bring forth to support her claims, assuming that Sam Altman doesn't settle out of court to avoid the negative publicity of a trial.

Working on alignment is great, but it is not the future we should be prepping for. Do you have a plan?

I do not, because a future where an unaligned superintelligence takes over is precisely as survivable as a future in which the sun spontaneously implodes.

Any apocalypse that you can plan for isn't really an apocalypse.

But it only spends 0.000001 percent of it’s time (less than 32 s/yr) interacting with us. Because it’s bored and has better things to do.

What if one of those "better things" is disassembling Earth's biosphere in order to access more resources?

The Firefox problem on Claude was fixed after I sent them an e-mail about it.

The link 404s. I think the correct link is: http://rationallyspeakingpodcast.org/231-misconceptions-about-china-and-artificial-intelligence-helen-toner/

Just going by the standard that you set forth:

The overall impression that I got from the program was that as it proved profitable and expanded,

The program expanded in response to Amazon wanting to collect data about more retailers, not because Amazon was viewing this program as a profit center.

it took on a larger workforce and it became harder for leaders to detect when employees were following their individual incentives to cut corners and gradually accumulate risks of capsizing the whole thing

But that doesn't seem to have occurred. Until the Wall Street Journal leak, few if any people outside Amazon were aware of this program. It's not as if any of the retailers that WSJ spoke to said, "Oh yeah, we quickly grew suspicious of Big River Inc, and shut down their account after we smelled something fishy." On the contrary, many of them were surprised that Amazon was accessing their seller marketplace through a shell corporation.

I didn't see any examples mentioned in the WSJ article of Amazon employees cutting corners or making simple mistakes that might have compromised operations. Instead, they seemed to be pretty careful and conscientious, making sure to not communicate with outside partners with their Amazon.com addresses, being careful to maintain their cover identities at trade conferences, only communicating with fellow Amazon executives with paper documents (and numbered paper documents, at that), etc.

I would argue that the practices used by Amazon to conceal the link between itself and Big River Inc. were at least as good as the operational security practices of the GRU agents who poisoned Sergei Skripal.

The overall impression that I got from the program was that as it proved profitable and expanded, it took on a larger workforce and it became harder for leaders to detect when employees were following their individual incentives to cut corners and gradually accumulate risks of capsizing the whole thing.

That's not the impression I got. The article says that many of the retailers that the Wall Street Journal had contacted regarding Big River had no idea that the entity was affiliated with Amazon (even despite the rather-obvious-in-hindsight naming, LinkedIn references, company registration data pointing to Seattle, etc.). It seems like their operational security was unusually good, good enough that no one at the other retailers bothered looking beyond the surface. Yes, eventually someone talked to the press, but even then, Amazon had a plan in place to handle the program coming to light in a public forum.

In general, it seems like Amazon did this pretty competently from start to finish, and the leaders were pretty well in control of the operation all throughout.

(with an all-in-one solution they just buy one thing and are done)

That's a very common misconception regarding all-in-one tools. In practice, all-in-one tools always need a significant degree of setup, configuration and customization before they are useful for the customer. Salesforce, for example, requires so much customization that you can make a career out of just doing Salesforce customization. SharePoint is similar.

Thus the trade-off isn't between a narrowly focused tool that does one job extremely well versus an all-in-one tool that does a bunch of things somewhat well. The trade-off is between a narrowly focused tool that does one job extremely well immediately, with little or no setup, versus an all-in-one tool that does many things somewhat well after extensive setup and customization, which itself might require hiring a specialized professional.

To use the Anrok example, is it possible to do VAT calculations in the existing tools that the business already has, such as ERP systems or CRM software? Yes, of course. But that software would need to be customized to handle the specific tax situation for the business, which is something that Anrok might handle out-of-the-box with little setup required.

I also have a “Done” column, which is arguably pointless as I just delete everything off the “Done” column every couple weeks,

Having a "Done" column (or an archive board) can be very useful if you want to see when was the last time you completed a recurring task. It helps prevent tasks with long recurrences (quarterly, biennially, etc) from falling through the cracks. For example: dentist appointments. They're supposed to happen once a year. And, ideally, you'd create a task to schedule the next one immediately when you get back from the previous one. But let's say that doesn't happen. You got distracted, there was some kind of scheduling issue, life got in the way. Then, months later, you wonder, "Wait, how long has it been since I've been to the dentist?" Archiving completed tasks instead of deleting them lets you answer that question immediately.
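As a rough sketch of the "how long has it been?" lookup that an archive makes possible: the task names, dates, and helper functions below are all made up for illustration, and a real kanban tool would expose this through its own search or export rather than a Python list.

```python
from datetime import date

# Hypothetical archive of completed tasks: (task name, completion date).
archive = [
    ("dentist appointment", date(2023, 3, 14)),
    ("renew car registration", date(2023, 9, 2)),
    ("dentist appointment", date(2022, 2, 20)),
]

def last_completed(archive, task_name):
    """Return the most recent completion date for task_name, or None if never done."""
    dates = [done_on for name, done_on in archive if name == task_name]
    return max(dates) if dates else None

def days_since(archive, task_name, today):
    """Return the number of days since task_name was last completed, or None."""
    last = last_completed(archive, task_name)
    return (today - last).days if last is not None else None
```

If the "Done" column had been wiped every couple of weeks, `archive` would be empty for anything older than that, and both functions would just return `None`.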

The last answer is especially gross:

He chose to be a super-popular blogger and to have this influence as a psychiatrist. His name—when I sat down to figure out his name, it took me less than five minutes. It’s just obvious what his name is.

Can we apply the same logic to doors? "It took me less than five minutes to pick the lock so..."

Or people's dress choices? "She chose to wear a tight top and a miniskirt so..."

Metz persistently fails to state why it was necessary to publish Scott Alexander's real name in order to critique his ideas.

The second wheelbarrow example has a protagonist who knows the true value of the wheelbarrow, but still loses out:

At the town fair, a wheelbarrow is up for auction. You think the fair price of the wheelbarrow is around $120 (with some uncertainty), so you submit a bid for $108. You find out that you didn’t win—the winning bidder ends up being some schmuck who bid $180. You don’t exchange any money or wheelbarrows. When you get home, you check online out of curiosity, and indeed the item retails for $120. Your estimate was great, your bid was reasonable, and you exchanged nothing as a result, reaping a profit of zero dollars and zero cents.

But, in my example, Burry wasn't outbid by "some schmuck" who thought that Avant! was worth vastly more than it ended up being worth. Burry was able to guess not just the true value of Avant!, but also the value that other market participants placed on Avant!, enabling him to buy up shares at a discount compared to what the company ended up selling for.

The implied question in my post was, "How do you know if you're Michael Burry, or the trader selling Avant! shares for $2?"

That point is contradicted by the wheelbarrow examples in the OP, which seem to imply that either you'll be the greater fool or you'll be outbid by the greater fool. Why wasn't Burry outbid by a fool who thought that Avant! was worth $40 a share?

This is why I disagree with the OP; like you, I believe that it's possible to gain from informed trading, even in a market filled with adverse selection.

I don't think the Widgets Inc. example is a good one. Michael Lewis has a good counterpoint in The Big Short, which I will quote at length:

The alarmingly named Avant! Corporation was a good example. He [Michael Burry] had found it searching for the word "accepted" in news stories. He knew, standing at the edge of the playing field, he needed to find unorthodox ways to tilt it to his advantage, and that usually meant finding situations the world might not be fully aware of. "I wasn't looking for a news report of a scam or fraud per se," he said. "That would have been too backward-looking, and I was looking to get in front of something. I was looking for something happening in the courts that might lead to an investment thesis." A court had accepted a plea from a software company called the Avant! Corporation. Avant! had been accused of stealing from a competitor the software code that was the whole foundation of Avant!'s business. The company had $100 million cash in the bank, was still generating $100 million a year of free cash flow -- and had a market value of only $250 million! Michael Burry started digging; by the time he was done, he knew more about the Avant! Corporation than any man on earth. He was able to see that even if the executives went to jail (as they did) and the fines were paid (as they were), Avant! would be worth a lot more than the market then assumed. Most of its engineers were Chinese nationals on work visas, thus trapped -- there was no risk that anyone would quit before the lights were out. To make money on Avant!'s stock, however, he'd probably have to stomach short-term losses, as investors puked up shares in horrified response to negative publicity.

Burry bought his first shares of Avant! in June 2001 at $12 a share. Avant!'s management then appeared on the cover of Business Week, under the headline, "Does Crime Pay?" The stock plunged; Burry bought more. Avant!'s management went to jail. The stock fell some more. Mike Burry kept on buying it -- all the way down to $2 a share. He became Avant!'s single largest shareholder; he pressed management for changes. "With [the former CEO's] criminal aura no longer a part of operating management," he wrote to the new bosses, "Avant! has a chance to demonstrate its concern for shareholders." In August, in another e-mail, he wrote, "Avant! still makes me feel I'm sleeping with the village slut. No matter how well my needs are met, I doubt I'll ever brag about it. The 'creep' factor is off the charts. I half think that if I pushed Avant! too hard I'd end up being terrorized by the Chinese mafia." Four months later, Avant! got taken over for $22 a share.

Why should Michael Burry have assumed that he had more insight about Avant! Corporation than the people trading with him? When all of those other traders exited Avant!, driving its share price to $2, Burry stayed in. Would you have? Or would you have thought, "I wonder what that trader selling Avant! for $2 knows that I don't?"

Why isn’t there a standardized test given by a third party for job relevant skills?

That's what Triplebyte was trying to do for programming jobs. It didn't seem to work out very well for them. Last I heard, they'd been acquired by Karat after running out of funding.

My intuition here is “actually fairly good.” Firms typically spend a decent amount on hiring processes—they run screening tests, conduct interviews, look at CVs, and ask for references. It’s fair to say that companies have a reasonable amount of data collected when they make hiring decisions, and generally, the people involved are incentivized to hire well.

Every part of this is false. Companies don't collect a fair amount of data during the hiring process, and the data they do collect is often irrelevant or biased. How much do you really learn about a candidate by having them demonstrate whether they've managed to memorize the tricks to solving programming puzzles on a whiteboard?

The people involved are not incentivized to hire well, either. They're often engineers or managers dragged away from the tasks that they are incentivized to perform in order to check a box that they participated in the minimum number of interviews necessary to not get in trouble with their managers. If they take hiring seriously, it's out of an altruistic motivation, not because it benefits their own career.

Furthermore, no company actually goes back and determines whether its hires worked out. If a new hire doesn't work out, and is let go after a year's time, does anyone actually go back through their hiring packet and determine if there were any red flags that were missed? No, of course not. And yet, I would argue that that is the minimum necessary to ensure improvement in hiring practices.

The point of a prediction market in hiring is to enforce that last practice. The existence of fixed term contracts with definite criteria and payouts for those criteria forces people to go back and look at their interview feedback and ask themselves, "Was I actually correct in my decision that this person would or would not be a good fit at this company?"
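To make the "go back and check" step concrete, here is a minimal sketch. It is not a prediction market: it swaps in a Brier score, a simple scoring rule, as a stand-in for a market payout, and every name and number in it is hypothetical. Each record pairs the probability an interviewer assigned to "this hire will meet the agreed criteria after one year" with what actually happened.

```python
def brier_score(predictions):
    """Mean squared gap between forecast probability and outcome (0.0 is perfect)."""
    return sum((p - float(outcome)) ** 2 for p, outcome in predictions) / len(predictions)

# Hypothetical interview feedback: (forecast probability, did the hire work out?)
interviewer_a = [(0.9, True), (0.8, True), (0.3, False)]
interviewer_b = [(0.9, False), (0.6, True), (0.7, False)]

score_a = brier_score(interviewer_a)
score_b = brier_score(interviewer_b)

# A lower score means the interviewer's stated confidence tracked outcomes better.
better_calibrated = "A" if score_a < score_b else "B"
```

The point of the exercise is only that outcomes get compared against the original feedback at all; the particular scoring rule matters much less than the fact that someone reopens the hiring packet.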

Do you know a healthy kid who will do nothing?

Yes. Many. In fact, I'd go so far as to say that most people in this community who claim that they're self-motivated learners who were stunted by school would have been worse off without the structure of a formal education. One only needs to go through the archives and look at all the posts about akrasia to find evidence of this.

What does "lowercase 'p' political advocacy" mean, in this context? I'm familiar with similar formulations for "democratic" ("lowercase 'd' democratic") to distinguish matters relating to the system of government from the eponymous American political party. I'm also familiar with "lowercase 'c' conservative" to distinguish a reluctance to embrace change over any particular program of traditionalist values. But what does "lowercase 'p' politics" mean? How is it different from "uppercase 'P' Politics"?

A great example of a product actually changing for the worse is Microsoft Office. Up until 2003, Microsoft Office had the standard "File, Edit, ..." menu system that was characteristic of desktop applications in the '90s and early 2000s. For 2007, though, Microsoft radically changed the menu system. They introduced the Ribbon. I was in school at the time, and there was a representative from Microsoft who came and gave a presentation on this bold, new UI. He pointed out how, in focus group studies, new users found it easier to discover functionality with the Ribbon than they did with the old menu system. He pointed out how the Ribbon made commonly used functions more visible, and how, over time, it would adapt to the user's preferences, hiding functionality that was little used and surfacing functionality that the user had interacted with more often.

Thus, when Microsoft shipped Office 2007 with the Ribbon, it was a great success, and Office gained a reputation for having the gold standard in intuitive UI, right?

Wrong. What Microsoft forgot is that the average user of Office wasn't some neophyte sitting in a carefully controlled room with a one-way mirror. The average user of Office was upgrading from Office 2003. The average user of Office had web links, books, and hand-written notes detailing how to accomplish the tasks they needed to do. By radically changing the UI like that, Microsoft made all of that tacit knowledge obsolete. Furthermore, by making the Ribbon "adaptive", they actively prevented new tacit knowledge from being formed.

I was working helpdesk for my university around that time, and I remember just how difficult it was to instruct people on how to do tasks in Office 2007. Instead of writing down (or showing with screenshots) the specific menus they had to click through to access functionality like line or paragraph spacing, and disseminating that, I had to sit with each user, ascertain the current state of their unique special snowflake Ribbon, and then show them how to find the tools to allow them to do whatever it is they wanted to do. And then I had to do it all over again a few weeks later, when the Ribbon adapted to their new behavior and changed again.

This task was further complicated by the fact that Microsoft moved away from having standardized UI controls to making custom UI controls for each separate task.

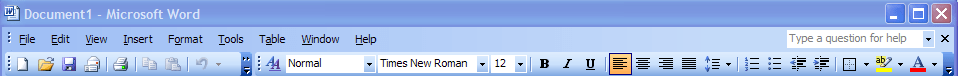

For example, here is the Office 2003 menu bar:

(Source: https://upload.wikimedia.org/wikipedia/en/5/54/Office2003_screenshot.PNG)

Note how it's two rows. The top row is text menus. The bottom row is a set of legible buttons and drop-downs which allow the user to access commonly used tasks. The important thing to note is that everything in the bottom row of buttons also exists as menu entries in the top row. If the user is ever unsure of which button to press, they can always fall back to the menus. Furthermore, documents can refer to the fixed menu structure allowing for simple text instructions telling the user how to access obscure controls.

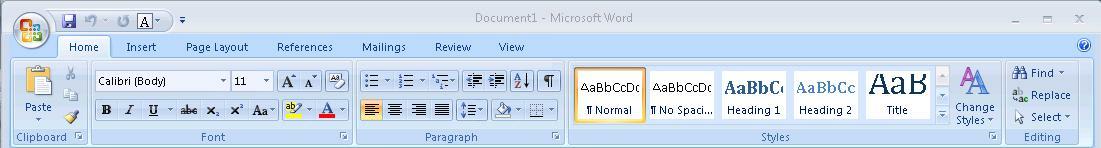

By comparison, this is the Ribbon:

(Source: https://kb.iu.edu/d/auqi)

Note how the Ribbon is multiple rows of differently shaped buttons and dropdowns, without clear labels. The top row is now a set of tabs, and switching tabs now just brings up different panels of equally arcane buttons. Microsoft replaced text with hieroglyphs. Hieroglyphs that don't even have the decency to stand still over time so you can learn their meaning. It's impossible to create text instructions to show users how to use this UI; instructions have to include screenshots. Worse, the screenshots may not match what the user sees, because of how items may move around or be hidden.

I suspect that many instances of UIs getting worse are due to the same sort of focus-group-induced blindness that caused Microsoft to ship the Ribbon. Companies get hung up on how new, inexperienced users interact with their software in a tightly controlled lab setting, completely isolated from outside resources, and blind themselves to the vast amount of tacit knowledge they are destroying by revamping their UI to make it more "intuitive". I think the Ribbon is an especially good example of this, because it avoids the confounding effect of mobile devices. Both Office 2003 and 2007 were strictly desktop products, so one can ignore the further corrosive effect of having to revamp the UI to be legible on a smartphone or tablet.

Websites and applications can definitely become worse after updates, but the company shipping the update will think that things are getting better, because the cost of rebuilding tacit knowledge is borne by the user, not the corporation.

Follow-up, three years later: I bought a Hi-Tec C Coleto pen for my brother, who is in a profession where he has to write a lot, color code forms, etc. He likes it a lot. Thanks for the recommendation.

On the other hand, if plaintiff has already elicited testimony from the engineer to the effect that the conversation happened, could defendant try to imply that it didn’t happen by asking the manager whether he recalled the meeting? I mean, yes, but it’s probably a really bad strategy. Try to think about how you would exploit that as plaintiff: either so many people are mentioning potentially life-threatening risks of your product that you can’t recall them all, in which case the company is negligent, or your memory is so bad it was negligent for you to have your regularly-delete-records policy. It’s like saying I didn’t commit sexual harassment because we would never hire a woman in the first place. Sure, it casts doubt on the opposition’s evidence, but at what cost?

If it's a criminal trial, where facts have to be proven beyond a reasonable doubt, it's a common strategy. If the whistleblower doesn't have evidence of the meeting taking place, and no memos, reports or e-mails documenting that they passed their concerns up the chain, it's perfectly reasonable for a representative of the corporation to reply, "I don't recall hearing about this concern." And that's that. It's the engineer's word against not just one witness, but a whole slew of witnesses, each of whom is going to say, "No, I don't recall hearing about this concern."

Indeed, this outcome is so predictable that lawyers won't even take on these sorts of cases unless the whistleblower can produce written evidence that management was informed of a risk, and made a conscious decision to ignore it and proceed.

Also keep in mind that if we’re going to assume the company will lie on the stand about complex technical points

I'm not assuming anything of the sort. I'm merely saying that, if the whistleblower doesn't have written evidence that they warned their superior about a given risk, their superiors will be coached by the company's lawyers to say, "I don't recall," or, "I did not receive any written documents informing me of this risk." Now, at this point, the lawyers for the prosecution can bring up the document retention policy and state that the reason they don't have any evidence is because of the company's own document retention policies. But that doesn't actually prove anything. Absence of evidence is not, in and of itself, evidence of wrongdoing.

One reason not to mess with this is that we have other options. I could keep a journal. If I keep notes like “2023-11-09: warned boss that widgets could explode at 80C. boss said they didn’t have time for redesign and it probably wouldn’t happen. ugh! 2023-11-10: taco day in cafeteria, hell yeah!” then I can introduce these to support my statement.

Yes, that's certainly something you can do. But it's a much weaker sort of evidence than a printout of an e-mail that you sent, with your name on the from line and your boss's name on the to line. At the very least, you're going to be asked, "If this was such a concern for you, why didn't you bring it up with your boss?" And if you say you did, you'll be asked, "Well, do you have any evidence of this meeting?" And if your excuse is, "Well, the corporation's data retention policies erased that evidence," it weakens your case.

The thing I said that the defendant would not dispute is the fact that the engineer said something to them, not whether they should have believed him.

I still disagree. If it wasn't written down, it didn't happen, as far as the organization is concerned. The engineer's manager can (and probably will) claim that they didn't recall the conversation, or dispute the wording, or argue that while the engineer may have said something, it wasn't at all apparent that the problem was a serious concern.

There's a reason that whistleblowers focus so hard on generating and maintaining a paper trail of their actions and conversations, to the point that they will often knowingly and willfully subvert retention policies by keeping their own copies of crucial communications. They know that, without documentation (e-mails, screenshots, etc), it'll just be a he-said-she-said argument between themselves and an organization that is far more powerful than them. The documentation establishes hard facts, and makes it much more difficult for people higher up in the chain of command to say they didn't know or weren't informed.

If you notice something risky, say something. If the thing you predicted happens, point out the fact that you communicated it.

I think this needs to be emphasized more. If a catastrophe happens, corporations often try to pin blame on individual low-level employees while deflecting blame from the broader organization. Having a documented paper trail indicating that you communicated your concerns up the chain of command prevents that same chain from labeling you as a "rogue employee" or "bad apple" who was acting outside the system to further your personal reputation or financial goals.

Plaintiff wants to prove that an engineer told the CEO that the widgets were dangerous. So he introduces testimony from the engineer that the engineer told the CEO that the widgets were dangerous. Defendant does not dispute this.

Why wouldn't the defendant dispute this? In every legal proceeding I've seen, the defendant has always produced witnesses and evidence supporting their analysis. In this case, I would expect the defendant to produce analyses showing that the widgets were expected to be safe, and if they caused harm, it was due to unforeseen circumstances that were entirely beyond the company's control. I rarely speak in absolutes, but in this case, I'm willing to state that there's always going to be some analysis disagreeing with the engineer's claims regarding safety.

If I say I want you to turn over your email records to me in discovery to establish that an engineer had told you that your widgets were dangerous, but you instead destroy those records, the court will instruct the jury to assume that those records did contain that evidence.

Only if you do so after you were instructed to preserve records by the court. If you destroyed records per your normal, documented retention policies, prior to any court case being filed, there are no grounds for adverse inference.

Plaintiff responds by showing that defendant had a policy designed to prevent such records from being created, so defendant knows that records would not exist whether the meeting took place or not, and thus his argument is disingenuous. Would you follow defendant’s strategy here? I wouldn’t.

Every company I've worked for has had retention policies that call for the automatic deletion of e-mails after a period of time (5-7 years). Furthermore, as I alluded to in my other post, Google had an explicit policy of disabling permanent chat records for certain sensitive conversations:

At trial, the DOJ also presented evidence and testimony about Google's policy called “Communicate with Care." Under that policy, Google employees are trained "to have sensitive conversations over chat with history off," the DOJ said, ensuring that the conversation would be auto-deleted in 24 hours.

This policy has created much tension between the DOJ and Google before the trial. The DOJ has argued that "Google's daily destruction of written records prejudiced the United States by depriving it of a rich source of candid discussions between Google's executives, including likely trial witnesses." Google has defended the policy, claiming that the DOJ has "not been denied access to material information needed to prosecute these cases and they have offered no evidence that Google intentionally destroyed such evidence."

And while this does look bad for Google, one can very easily argue that the alternative, the release of a "smoking gun" memo like the "embrace, extend, innovate" document, would be far worse.

Would it be as self-evidently damning as you think it would be? If so, then why would a company like Google explicitly pursue such a weak strategy? It's not just Google either. When I worked at a different FAANG company, I was told in orientation to never use legal terminology in e-mail, for similar reasons.

The first lawyer will be hardly able to contain his delight as he asks the court to mark “WidgetCo Safe Communication Guidelines” for evidence.

Having safe communication guidelines isn't as damning as you think it is. The counsel for WidgetCo would merely reply that the safe communication guidelines are there to prevent employees from accidentally creating liabilities by misusing legal language. This is no different than admonishing non-technical employees for misusing technical language.

Indeed, this was Google's actual strategy.

Games, unlike many real life situations, are entered into by choice. If you are not playing to win, then one must ask: why are you bothering to play? Or, more specifically, why are you playing this game and not some other?

Have you read Playing To Win, by David Sirlin? It makes many of the points that you make here, but it doesn't shy away from winning as the ultimate goal, as you seem to be doing. Sirlin doesn't fall into the trap of lost purposes. He keeps in mind that the goal is to win. Yes, of course, by all means try new strategies and learn the mechanics of the game, but remember that the goal is victory.

was militarily weakened severely

That's another highly contentious assertion. Even at the height of Vietnam, the US never considered Southeast Asia to be the main domain of competition against the Soviet Union. The primary focus was always on fielding a military force capable of challenging the Soviets in Western Europe. Indeed, one of the reasons the US failed in Vietnam is that the military was unwilling to commit its best units and commanders to what the generals perceived as a sideshow.

why the US allied with China against the USSR

Was the US ever allied with China? What we did as a result of the Sino-Soviet split was simply let the People's Republic of China back into the international system from which they had been excluded. The US certainly did not pursue any greater alignment with China until much later, at which point the Soviet Union was well into its terminal decline.

failing to prevent the oil shocks in formerly US-friendly middle eastern regimes, which were economic catastrophes that each could have done far more damage if luck was worse

More evidence is needed. The oil shocks were certainly very visible, but it's not clear from the statistical data that they did much damage to the US economy. In fact, the political response to the oil shocks (rationing, price controls, etc) did arguably more to hurt the economy than the oil shocks themselves.

Meanwhile, the USSR remained strong militarily in spite of the economic stagnation.

The actual readiness of Soviet forces, as opposed to the hilariously false readiness reports published by unit commanders, is a matter of great debate. After the Cold War, when US commanders had a chance to tour Soviet facilities in ex-Warsaw Pact states, they were shocked at the poor level of repair of equipment and poor level of readiness among the troops. Furthermore, by the Soviets' own admission, the performance of their troops in Afghanistan wasn't very good, even when compared against the relatively poor level of training and equipment of the insurgent forces.

But the idea that the US was doing fine after Vietnam, including relative to the Soviets, is not very easy to believe, all things considered.

Vietnam was certainly a blow to US power, but it was nowhere near as serious a blow as you seem to believe.

each one after 1900 was followed by either the Cuban Missile Crisis and the US becoming substantially geopolitically weaker than the USSR after losing the infowar over Vietnam

I'm sorry, what? That's a huge assertion. The Vietnam War was a disaster, but I fail to see how it made the US "substantially geopolitically weaker". One has to remember that, at the same time that the US was exiting Vietnam, its main rival, the Soviet Union, was entering a twenty-five year period of economic stagnation that would culminate in its collapse.

Chevron deference means that judges defer to federal agencies instead of interpreting the laws themselves where the statute is ambiguous.

Which is as it should be, according to the way the US system of government is set up. The legislative branch makes the law. The executive branch enforces the law. The judicial branch interprets the law. This is a fact that every American citizen ought to know, from their grade-school civics classes.

For example, would you rather the career bureaucrats in the Environmental Protection Agency determine what regulations are appropriate to protect drinking water or random judges without any relevant expertise?

I would much rather have an impartial third party determine which regulations are appropriate than a self-interested bureaucrat. Otherwise what's the point of having a judicial system at all, if the judges are just going to yield to the executive on all but a narrow set of questions?

Government agencies aren’t always competent but the alternative is a patchwork of potentially conflicting decisions from judges ruling outside of their area of expertise.

Which can be resolved by Congress passing laws or by the Supreme Court resolving the contradiction between the different circuit courts.

I think they will probably do better and more regulations than if politicians were more directly involved

Why do you think this?

Furthermore, given the long history of government regulation having unintended consequences as a result of companies and private individuals optimizing their actions to take advantage of the regulation, it might be the case that government overregulation makes a catastrophic outcome more likely.

While overturning Chevron deference seems likely to have positive effects for many industries which I think are largely overregulated, it seems like it could be quite bad for AI governance. Assuming that the regulation of AI systems is conducted by members of a federal agency (either a pre-existing one or a new one designed for AI as several politicians have suggested), I expect that the bureaucrats and experts who staff the agency will need a fair amount of autonomy to do their job effectively. This is because the questions relevant to AI regulation (i.e. which evals systems are required to pass) are more technically complicated than in most other regulatory domains, which are already too complicated for politicians to have a good understanding of.

Why do you think that the same federal bureaucrats who incompetently overregulate other industries will do a better job regulating AI?

Sometimes if each team does everything within the rules to win then the game becomes less fun to watch and play

Then the solution is to change the rules. Basketball did this. After an infamous game where a team took the lead and then just passed the ball around to deny it to their opponents, basketball added a shot clock, to force teams to try to score (or else give the ball to the other team). (American) Football has all sorts of rules and penalties ("illegal formation", "ineligible receiver downfield", "pass interference", etc.) whose sole purpose is to ensure that games aren't dominated by tactics that aren't fun to watch. Soccer has the offside rule, which prevents teams from parking all their players right next to the other team's goal. Tennis forces crosscourt serves. And, as I alluded to above, motorsport regularly changes its rules, to try to ensure greater competitive balance and more entertaining races.

With regards to chess, specifically, Magnus Carlsen agrees (archive) that classical chess is boring and too reliant on pre-memorized opening lines. He argues for shorter games with simpler time controls, which would lead to more entertaining games which would be easier to explain to new viewers.

None of these other sports feel the need to appeal to a wooly-headed "spirit of the game" in order to achieve entertaining play. What makes cricket so special?

EDIT: I would add that cricket is also undergoing an evolution of its own, with the rise of Twenty20 cricket and the Indian Premier League.

Isn’t this stupid? To have an extra set of ‘rules’ which aren’t really rules and everyone disagrees on what they actually are and you can choose to ignore them and still win the game?

Yes, it is stupid.

Games aren't real life. The purpose of participating in a game is to maximize performance, think laterally, exploit mistakes, and do everything you can, within the explicit rules, to win. Doing that is what makes games fun to play. Watching other people do that, at a level that you could never hope to reach, is what makes spectator sports fun to watch.

Imagine if this principle were applied to other sports. Should tennis umpires suddenly start excusing double faults, because the sun was in the eyes of the serving player? Should soccer referees start disallowing own-goals, because no player could possibly mean to shoot into their own net? If a football player trips and fumbles the ball for no particular reason, should the referee stop the play, and not allow the other team to recover? If Magnus Carlsen blunders and puts his queen in a position where it can be captured, should Ian Nepomniachtchi feel any obligation to offer a takeback?

Fundamentally, what happened was that Bairstow made a mistake. He made a damned silly mistake, forgetting that overs are six balls, not five. Carey took advantage of the error, as was his right, and, I would argue, his obligation. Everything else is sour grapes on the part of the English side. If the Australian batsman had made a similarly silly mistake, and the English bowler had not taken advantage, I would be willing to bet that very few would be talking about the sportsmanship of the English bowler. Instead, the narrative would have been one of missed opportunities. How could the bowler have let such an obvious opportunity slip through his fingers?!

This pattern is even more pronounced in motorsport. The history of Formula 1 is the story of teams finding ways to tweak their cars to gain an advantage, other teams whining about unfairness, and the FIA then tweaking the rules to outlaw the "innovation".

Examples include:

- Brabham BT46B -- a car that used a fan to suck air out from underneath it, allowing it to produce extra downforce

- Tyrrell P34 -- a car that had six wheels instead of four, to gain additional front grip for turning

- Williams FW14B -- a car that featured an electronic active suspension to ensure that it maintained the optimum ride height for its aerodynamics in all circumstances

- Renault R25 -- which used a mass damper to keep the front end of the car settled

- Red Bull RB6 -- which routed the exhaust underneath the car, in order to improve the aerodynamic characteristics of the floor

In fact, one of the criticisms that many fans have of the FIA is that it goes too far with this. It seems like the moment any team gains an advantage by exploiting a loophole in the rules, the FIA takes action to close the loophole, without necessarily waiting to see if other teams can respond with innovations of their own.

any therapeutic intervention that is now standardized and deployed on mass-scale has once not been backed by scientific evidence.

Yes, which is why, in the ideal case, such as with the polio vaccine, we take great effort to gather that evidence before declaring our therapeutic interventions safe and efficacious.

Ah, but how do you make the artificial conscience value aligned with humanity? An "artificial conscience" that is capable of aligning a superhuman AI... would itself be an aligned superhuman AI.

We’ve taught AI how to speak, and it appears that openAI has taught their AI how to produce as little offensive content as possible.

The problem is that the AI can (and does) lie. Right now, ChatGPT and its ilk are at less-than-superhuman levels of intelligence, so we can catch their lies. But when a superhuman AI starts lying to you, how does one correct for that? If a superhuman AI starts veering off in a direction that is unexpected, how does one bring it back on track?

@gwern's short story, Clippy, highlights many of the issues with naively training a superintelligent algorithm on human-generated data and expecting that algorithm to pick up human values as a result. Another post to consider is The Waluigi Effect, which raises the possibility that the more you train an agent to say correct, inoffensive things, the more you've also trained a shadow-agent to say incorrect, offensive things.

How would you measure the usage? If, for example, Google integrates Bard into its main search engine, as they are rumored to be doing, would that count as usage? If so, I would agree with your assessment.

However, I disagree that this would be a "drastic" impact. A better Google search is nice, but it's not life-changing in a way that would be noticed by someone who isn't deeply aware of and interested in technology. It's not like, e.g. Google Maps navigation suddenly allowing you to find your way around a strange city without having to buy any maps or decipher local road signs.

What I'm questioning is the implicit assumption in your post that AI safety research will inevitably take place in an academic environment, and therefore productivity practices derived from other academic settings will be helpful. Why should this be the case when, over the past few years, most of the AI capabilities research has occurred in corporate research labs?

Some of your suggestions, of course, work equally well in either environment. But not all, and even the ones which do work would require a shift in emphasis. For example, when you say professors should be acquainted with other professors, that's valid in academia, where roughly everyone who matters either has tenure or is on a tenure track. However, that is not true in a corporate environment, where many people may not even have PhDs. Furthermore, in a corporate environment, limiting one's networking to just researchers is probably ill advised, given that there are many other people who would have influence upon the research. Knowing a senior executive with influence over product roadmaps could be just as valuable, even if that executive has no academic pedigree at all.

Prioritizing high value research and ignoring everything else is a skill that works in both corporate and academic environments. But 80/20-ing teaching? In a corporate research lab, one has no teaching responsibilities. One would be far better served learning some basic software engineering practices, in order to better interface with product engineers. Similarly, with regards to publishing, for a corporate research lab, having a working product is worth dozens of research papers. Research papers bring prestige, but they don't pay the bills. Therefore, I would argue that AI safety researchers should be keeping an eye on how their findings can be applied to existing AI systems. This kind of product-focused development is something that academia is notoriously bad at.

I also question your claim that academic bureaucracy doesn't slow good researchers down very much. That's very much not in line with the anecdotes I've heard. From what I've seen, writing grant proposals, dealing with university bureaucracy, and teaching responsibilities are a significant time suck. Maybe with practice and experience, it's possible for a good researcher to complete these tasks on "autopilot", and therefore not notice the time that's being spent. But the tasks are still costing time and mental energy that, ideally, would be devoted to research or writing.

I don't think it's inevitable that academia will take over AI safety research, given the trend in AI capabilities research, and I certainly don't think that academia taking over AI safety research would be a good thing. For this reason I question whether it's valuable for AI safety researchers to develop skills valuable for academic research, specifically, as opposed to general time management, software engineering and product development skills.

What is the purpose, beyond mere symbolism, of hiding this post from logged-out users when the relevant data is available, in far more detail, on Google's official AI blog?

I am saying that successful professors are highly successful researchers

Are they? That's why I'm focusing on empirics. How do you know that these people are highly successful researchers? What impressive research findings have they developed, and how did e.g. networking and selling their work enable them to get to these findings? Similarly, with regards to bureaucracy, how did successfully navigating the bureaucracy of academia enable these researchers to improve their work?

The way it stands right now, what you're doing is pointing at some traits that correlate with academic success, and claiming that

- Aspiring to the standards of prestigious academic institutions will speed up AI safety research

- Researchers at prestigious academic institutions share certain traits

- Therefore adopting these traits will lead to better AI safety research

This reasoning is flawed. First, why should AI safety research aspire to the same standards of "publish or perish" and the emphasis on finding positive results that gave us the replication crisis? It seems to me that, to the greatest extent possible, AI safety research should reject these standards, and focus on finding results that are true, rather than results that are publishable.

Secondly, correlation is not causation. The fact that many researchers from an anecdotal sample share certain attributes doesn't mean that those attributes are causative of those researchers' success. There are lots of researchers who do all of the things that you describe, managing their time, networking aggressively, and focusing on understanding grantmaking, who do not end up at prestigious institutions. There are lots of researchers who do all of those things who don't end up with tenure at all.

This is why I'm so skeptical of your post. I'm not sure that the steps you take are actually causative of academic success, rather than merely correlating with academic success, and furthermore, I'm not even sure that the standards of academic success are something that AI safety research should aspire to.

Well, augmenting reality with an extra dimension containing the thing that previously didn’t exist is the same as “trying and seeing what would happen.” It worked swimmingly for the complex numbers.

No it isn't. The difference between $i$ and the values returned by is that $i$ can be used to prove further theorems and model phenomena, such as alternating current, that would be difficult, if not impossible, to model with just the real numbers. Whereas positing the existence of is just like positing the existence of a finite value that satisfies . We can posit values that satisfy all kinds of impossibilities, but if we cannot prove additional facts about the world with those values, they're useless.

For what it's worth, I had a very similar reaction to yours. Insects and arthropods are a common source of disgust and revulsion, and so comparing anyone to an insect or an arthropod, to me, shows that you're trying to indicate that this person is either disgusting or repulsive.

Probabilities as credences can correspond to confidence in propositions unrelated to future observations, e.g., philosophical beliefs or practically-unobservable facts. You can unambiguously assign probabilities to ‘cosmopsychism’ and ‘Everett’s many-worlds interpretation’ without expecting to ever observe their truth or falsity.

You can, but why would you? Beliefs should pay rent in anticipated experiences. If two beliefs lead to the same anticipated experiences, then there's no particular reason to choose one belief over the other. Assigning probability to cosmopsychism or Everett's many-worlds interpretation only makes sense insofar as you think there will be some observations, at some point in the future, which will be different if one set of beliefs is true versus if the other set of beliefs is true.

One crude way of doing it is saying that a professor is successful if they are a professor at a top 10-ish university.

But why should that be the case? Academia is hypercompetitive, but the way it selects is not solely on the quality of one's research. Choosing the trendiest fields has a huge impact. Perhaps the professors that are chosen by prestigious universities are the ones that the prestigious universities think are the best at drawing in grant money and getting publications into high-impact journals, such as Nature, or Science.

Specifically I think professors are at least +2σ at “hedgehog-y” and “selling work” compared to similarly intelligent people who are not successful professors, and more like +σ at the other skills.

How does one determine this?

Overall, it seems like your argument is that AI safety researchers should behave more like traditional academia for a bunch of reasons that have mostly to do with social prestige. While I don't discount the role that social prestige has to play in drawing people into a field and legitimizing it, it seems like overall, the pursuit of prestige has been a net negative for science as a whole, leading to, for example, the replication crisis in medicine and biology, or the nonstop pursuit of string theory over alternate hypotheses in physics. Therefore, I'm not convinced that importing these prestige-oriented traits from traditional science would be a net positive for AI safety research.

Furthermore, I would note that traditional academia has been moving away from these practices, to a certain extent. During the early days of the COVID pandemic, quite a lot of information was exchanged not as formal peer-reviewed research papers, but as blog posts, Twitter threads, and preprints. In AI capabilities research, many new advances are announced as blog posts first, even if they might be formalized in a research paper later. Looking further back in the history of science, James Gleick, in Chaos, relates how the early researchers into chaos and complexity theories did their research by informally exchanging letters and draft papers. They were outside the normal categories that the bureaucracy of academia had established, so no journal would publish them.

It seems to me that the foundational, paradigm-shifting research always takes place this way. It takes place away from the formal rigors of academia, in informal exchanges between self-selected individuals. Only later, once the core paradigms of the new field have been laid down, does the field become incorporated into the bureaucracy of science, becoming legible enough for journals to routinely publish findings from the new field. I think AI safety research is at this early stage of maturity, and therefore it doesn't make sense for it to import the practices that would help practitioners survive and thrive in the bureaucracy of "Big Science".

I was thinking more about the inside view/outside view distinction, and while I agree with Dagon's conclusion that probabilities should correspond to expected observations and expected observations only, I do think there is a way to salvage the inside view/outside view distinction. That is to treat someone saying, "My 'inside view' estimate of event $E$ is $p$," as being equivalent to someone saying that $P(E \mid \text{my understanding of the situation is correct}) = p$. It's a conditional probability, where they're telling you what their probability of a given outcome is, assuming that their understanding of the situation is correct.

In the case of deterministic models, this might seem like a tautology: they're telling you what the outcome is, assuming the validity of a process that deterministically generates that outcome. However, there is another source of uncertainty: observational uncertainty. The other person might be uncertain whether they have all the facts that feed into their model, or whether their observations are correct. So, in other words, when someone says, "My inside view probability of $E$ is $p$," that's a statement about the confidence level they have in their observations.
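
To make the conditional reading concrete, here is a rough sketch using the law of total probability. The labels are mine and purely illustrative: $E$ is the event in question, $M$ stands for "my understanding of the situation is correct", and the numbers are made up for the sake of the arithmetic.

```latex
% Overall probability of E, split by whether the inside-view model M holds
P(E) = P(E \mid M)\,P(M) + P(E \mid \neg M)\,P(\neg M)

% Illustrative (made-up) numbers:
%   inside view      P(E \mid M)      = 0.9
%   trust in model   P(M)             = 0.7
%   fallback         P(E \mid \neg M) = 0.2
P(E) = 0.9 \times 0.7 + 0.2 \times 0.3 = 0.63 + 0.06 = 0.69
```

On this reading, the "inside view" number is just the first conditional term, and it only turns into a statement about expected observations once it is weighted by how much one trusts the model and combined with what one expects if the model is wrong.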