The epistemic virtue of scope matching

post by jasoncrawford · 2023-03-15T13:31:39.602Z · LW · GW · 15 comments

This is a link post for https://rootsofprogress.org/the-epistemic-virtue-of-scope-matching


I keep noticing a particular epistemic pitfall (not exactly a “fallacy”), and a corresponding epistemic virtue that avoids it. I want to call this out and give it a name, or find out what its name is, if it already has one.

The virtue is: identifying the correct scope for a phenomenon you are trying to explain, and checking that the scope of any proposed cause matches the scope of the effect.

Let me illustrate this virtue with some examples of the pitfall that it avoids.

Geography

A common mistake among Americans is to take a statistical trend in the US, such as the decline in violent crime in the 1990s, and then hypothesize a US-specific cause, without checking to see whether other countries show the same trend. (The crime drop was actually seen in many countries. This is a reason, in my opinion, to be skeptical of US-specific factors, such as Roe v. Wade, as a cause.)

Time

Another common mistake is to look only at a short span of time and to miss the longer-term context. To continue the previous example, if you are theorizing about the 1990s crime drop, you should probably know that it was the reversal of an increase in violent crime that started in the 1960s. Further, you should know that the very long-term trend in violent crime is a gradual decrease, with the late 20th century being a temporary reversal. Any theory should fit these facts.

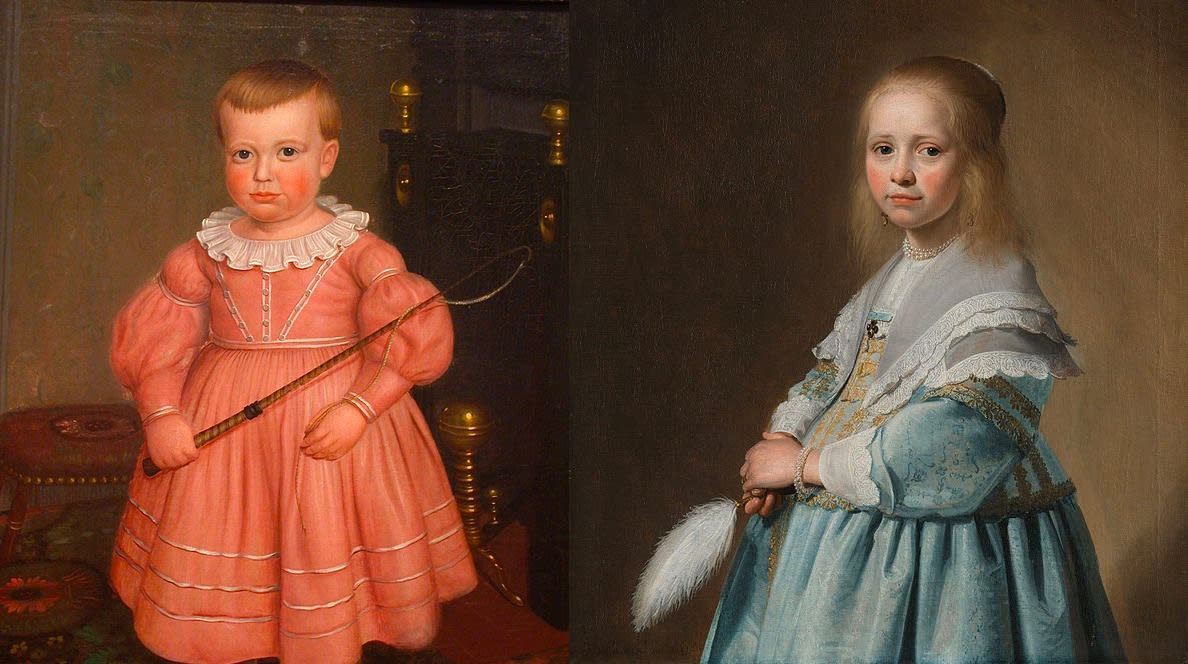

A classic mistake on this axis is attempting to explain a recent phenomenon by a very longstanding cause (or vice versa). For instance, why is pink associated with girls and blue with boys? If your answer has something to do with the timeless, fundamental nature of masculinity or femininity, whoops! It turns out that less than a century ago, the association was often reversed (one article from 1918 wrote that pink was “more decided and stronger” whereas blue was “delicate and dainty”). This points to something more contingent, a mere cultural convention.

The reverse mistake is blaming a longstanding phenomenon on a recent cause, something like trying to blame “kids these days” on the latest technology: radio in the 1920s, TV in the ‘40s, video games in the ‘80s, social media today. Vannevar Bush was more perceptive, writing in his memoirs simply: “Youth is in rebellion. That is the nature of youth.” (Showing excellent awareness of the epistemic issue at hand, he added that youth rebellion “occurs all over the world, so that one cannot ascribe a cause which applies only in one country.”)

Other examples

If you are trying to explain the failure of Silicon Valley Bank, you should probably at least be aware that one or two other banks failed around the same time. Your explanation is more convincing if it accounts for all of them, but of course it shouldn’t “explain too much [LW · GW]”; that is, it shouldn’t apply to banks that didn’t fail, without including some extra factor that accounts for those non-failures.

To understand why depression and anxiety are rising among teenage girls, the first questions I would ask are: which other demographics, if any, is this happening to? And how long has it been going on?

To understand what explains sexual harassment in the tech industry, I would first ask: what other industries have this problem (e.g., Hollywood)? Are there any that don't?

An excellent example of practicing the virtue I am talking about here is the Scott Alexander post “Black People Less Likely”, in which he points out that blacks are underrepresented in a wide variety of communities, from Buddhism to bird watching. If you want to understand what’s going on here, you need to look for some fairly general causes (Scott suggests several hypotheses).

The Industrial Revolution

An example I have called out is thinking about the Industrial Revolution. If you focus narrowly on mechanization and steam power, you might put a lot of weight on, say, coal. But on a wider view, there were a vast number of advances happening around the same period: in agriculture, in navigation, in health and medicine, even in forms of government. This strongly suggests some deeper cause driving progress across many fields.

Conversely, if you are trying to explain why most human labor wasn’t automated until the Industrial Revolution, you should take into account that some types of labor were automated very early on, via wind and water mills. Oversimplified answers like “no one thought to automate” or “labor was too cheap to automate” explain too much (although these factors are probably part of a more sophisticated explanation).

Note that often the problem is failing to notice how wide a phenomenon is and hypothesizing causes that are too narrow, but you can make the mistake in the opposite direction too, proposing a broad cause for a narrow effect.

Concomitant variations

One advantage of identifying the full range of a phenomenon is that it lets you apply the method of concomitant variations. E.g., if social media is the main cause of depression, then regions or demographics where social media use is more prevalent ought to have higher rates of depression. If high wages drive automation, then regions or industries with the highest wages ought to have the most automation. (Caveat: these correlations may not exist when there are control systems or other negative feedback loops.)
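As a rough illustration of the check described above, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `pearson` helper, the variable names, and especially the numbers, which are made up and not real data. It only shows the shape of the test: line up the hypothesized cause and the effect across regions and see whether they move together.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Made-up illustrative numbers, NOT real data: one entry per hypothetical region.
exposure = [1.0, 2.0, 3.0, 4.0, 5.0]        # level of the hypothesized cause
effect_tracks = [2.1, 3.9, 6.2, 8.1, 9.8]   # scenario A: effect rises with exposure
effect_flat = [5.0, 4.8, 5.3, 4.8, 5.0]     # scenario B: effect ignores exposure

r_tracks = pearson(exposure, effect_tracks)
r_flat = pearson(exposure, effect_flat)

print(f"scenario A correlation: {r_tracks:.3f}")  # close to 1
print(f"scenario B correlation: {r_flat:.3f}")    # close to 0
```

Scenario A is what the hypothesis predicts; scenario B should make you doubt that the hypothesized cause is the main driver. A real check would need far more than five data points, and, per the caveat above, a flat result does not rule out a cause that is being offset by a feedback loop.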

Relatedly, if the hypothesized cause began in different regions/demographics/industries at different times, then you ought to see the effects beginning at different times as well.

These kinds of comparisons are much more natural to make when you know how broadly a trend exists, because just identifying the breadth of a phenomenon induces you to start looking at multiple data points or trend lines.

(Actually, maybe everything I'm saying here is just corollaries of Mill's methods? I don't grok them deeply enough to be sure.)

Cowen on lead and crime

I think Tyler Cowen was getting at something related to all of this in his comments on lead and crime. He points out that, across long periods of time and around the world, there are many differences in crime rates to explain (e.g., in different parts of Africa). Lead exposure does not explain most of those differences. So if lead was the main cause of elevated crime rates in the US in the late 20th century, then we’re still left looking for other causes for every other change in crime. That's not impossible, but it should make us lean away from lead as the main explanation.

This isn’t to say that local causes are never at work. Tyler says that lead could still be, and very probably is, a factor in crime. But the broader the phenomenon, the harder it is to believe that local factors are dominant in any case.

Similarly, maybe two banks failed in the same week for totally different reasons—coincidences do happen. But if twenty banks failed in one week and you claim twenty different isolated causes, then you are asking me to believe in a huge coincidence.
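To put rough numbers on the coincidence argument, here is a back-of-the-envelope Python sketch. It assumes failures are fully independent (which is exactly the "isolated causes" story) and uses a hypothetical bank count and a hypothetical weekly failure probability; neither figure comes from real data. The function `prob_at_least` is a name introduced here; it computes a binomial upper tail in log space to avoid overflow.

```python
from math import exp, lgamma, log, log1p

def prob_at_least(k: int, n_banks: int, p_fail: float) -> float:
    """P(at least k of n_banks fail in one week), assuming independent failures."""
    total = 0.0
    for j in range(k, n_banks + 1):
        # log of C(n_banks, j) * p^j * (1 - p)^(n_banks - j)
        log_term = (
            lgamma(n_banks + 1) - lgamma(j + 1) - lgamma(n_banks - j + 1)
            + j * log(p_fail)
            + (n_banks - j) * log1p(-p_fail)
        )
        total += exp(log_term)
    return total

N_BANKS = 4000  # hypothetical number of banks
P_FAIL = 1e-4   # hypothetical chance that a given bank fails in a given week

p_two = prob_at_least(2, N_BANKS, P_FAIL)
p_twenty = prob_at_least(20, N_BANKS, P_FAIL)

print(f"P(at least 2 independent failures in a week):  {p_two:.4f}")
print(f"P(at least 20 independent failures in a week): {p_twenty:.3e}")
```

Under these assumed numbers, two unrelated failures in one week is unusual but believable (a probability of a few percent), while twenty is astronomically unlikely. When you see twenty, the independence assumption is the thing to drop: look for a common cause, or for failures that trigger one another.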

Scope matching

I was going to call this virtue “scope sensitivity,” but that term is already taken for something else [LW · GW]. For now I will call it “scope matching”—suggest a better term if you have one, or if one already exists?

The first part of this virtue is just making sure you know the scope of the effect in the first place. Practically, this means making a habit of pausing before hypothesizing in order to ask:

- Is this effect happening in other countries/regions? Which ones?

- How long has this effect been going on? What is its trend over the long run?

- Which demographics/industries/fields/etc. show this effect?

- Are there other effects that are similar to this? Might we be dealing with a conceptually wider phenomenon here?

This awareness is more than half the battle, I think. Once you have it, hypothesizing a properly-scoped cause becomes much more natural, and it becomes more obvious when scopes don't match.

Thanks to folks who commented below! This is the published version.

15 comments

Comments sorted by top scores.

comment by cousin_it · 2023-03-15T15:04:38.196Z · LW(p) · GW(p)

If high wages drive automation, then regions or industries with the highest wages ought to have the most automation.

But if at the same time automation drives wages down, then the result can look very different. Regions or industries with the highest wages will get them "shaved off" by automation first, then the next ones and so on, until the only wage variation we're left with is uncorrelated with automation and caused by something else.

More generally, consider a negative feedback loop where increase in A causes increase in B, and increase in B causes decrease in A. For example, A = deer population, B = wolf population. Consider this plausible statement: "Since deer population is the main constraint on wolf population, regions with more deer should have more wolves." But this is plausible too: "Since wolf population is the main constraint on deer population, regions with more wolves should have fewer deer." Both can't be true at once.

Looking a bit deeper, we see the first statement is true across many regions, and the second is true for one region across times. And so the truth emerges, that variation within one region is a kind of pendulum, while variation between regions is due to carrying capacity. By now we're very far from the original "if high A causes high B, they need to co-occur". I hope this shows how carefully you need to think about such things.

↑ comment by jasoncrawford · 2023-03-16T19:40:48.867Z · LW(p) · GW(p)

Good point. Related: “Milton Friedman's Thermostat”:

If a house has a good thermostat, we should observe a strong negative correlation between the amount of oil burned in the furnace (M), and the outside temperature (V). But we should observe no correlation between the amount of oil burned in the furnace (M) and the inside temperature (P). And we should observe no correlation between the outside temperature (V) and the inside temperature (P).

An econometrician, observing the data, concludes that the amount of oil burned had no effect on the inside temperature. Neither did the outside temperature. The only effect of burning oil seemed to be that it reduced the outside temperature. An increase in M will cause a decline in V, and have no effect on P.

A second econometrician, observing the same data, concludes that causality runs in the opposite direction. The only effect of an increase in outside temperature is to reduce the amount of oil burned. An increase in V will cause a decline in M, and have no effect on P.

But both agree that M and V are irrelevant for P. They switch off the furnace, and stop wasting their money on oil.
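The thermostat story is easy to reproduce in a toy simulation. The sketch below is an illustration under simplifying assumptions, not part of the quoted post: the furnace model (one unit of oil gives one degree of heating), the set point, the temperature range, and the noise level are all hypothetical, and `pearson` is a helper defined here. M, V, and P follow the quote's notation.

```python
import random
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

random.seed(0)
TARGET = 20.0  # hypothetical thermostat set point, in degrees
N_DAYS = 2000

# V: outside temperature, drawn from an assumed range.
outside = [random.uniform(-10.0, 15.0) for _ in range(N_DAYS)]
# M: a perfect thermostat burns exactly enough oil to close the gap
# (assumed furnace model: 1 unit of oil = 1 degree of heating).
oil = [TARGET - v for v in outside]
# P: inside temperature = outside + heating + small unrelated disturbances.
inside = [v + m + random.gauss(0.0, 0.2) for v, m in zip(outside, oil)]
# Counterfactual: furnace switched off, so inside simply tracks outside.
inside_off = [v + random.gauss(0.0, 0.2) for v in outside]

r_mv = pearson(oil, outside)  # strongly negative
r_mp = pearson(oil, inside)   # near zero
r_vp = pearson(outside, inside)  # near zero
mean_on = sum(inside) / N_DAYS
mean_off = sum(inside_off) / N_DAYS

print(f"corr(M, V) = {r_mv:.3f}")
print(f"corr(M, P) = {r_mp:.3f}")
print(f"corr(V, P) = {r_vp:.3f}")
print(f"mean inside temp, furnace on:  {mean_on:.1f}")
print(f"mean inside temp, furnace off: {mean_off:.1f}")
```

The correlations match what the quote describes, yet switching the furnace off drops the average inside temperature by many degrees. The missing correlation between M and P is a sign of the control system working, not of oil being irrelevant.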

↑ comment by jasoncrawford · 2023-03-16T21:25:28.678Z · LW(p) · GW(p)

Added a caveat about this to the post.

comment by Thomas Colthurst (thomas-colthurst) · 2023-03-15T17:20:02.993Z · LW(p) · GW(p)

Nice post! I think though that there is an important class of exceptions to scope matching, which I'll refer to as "well-engineered systems". Think, for example, of "The Wonderful One-Hoss Shay" described in the Oliver Wendell Holmes poem, where all of the parts are designed to have exactly the same rate of failure. Real world systems can only approach that ideal, but they can get close enough that their most frequent error modes would fail the scope matching heuristic.

I bring this up in particular because I think that crime rates might fall into that category. Human societies do spend a bunch of resources on minimizing crime, so it isn't totally implausible that they succeed at keeping the most common crime causes low, low enough that most of the variation in the overall crime rate is driven by localized (in time and space) one-off factors like lead.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-03-15T23:40:46.080Z · LW(p) · GW(p)

Just a quick friendly critique - in the poem, the shay’s parts are meant to fail simultaneously, not at the same rate. Keep that in mind for accurate interpretation!

↑ comment by MondSemmel · 2023-04-29T16:08:03.951Z · LW(p) · GW(p)

I enjoyed that poem, thanks for the recommendation! However, I found some of the old English really hard to understand. To anyone who shares that predicament, I found a great spoken version of the poem on YouTube, which e.g. makes it clear that the "one-hoss shay" is something like a one-horse carriage.

↑ comment by jasoncrawford · 2023-03-16T19:41:46.276Z · LW(p) · GW(p)

Thanks. Yes this is a good point, and related to @cousin_it [LW · GW]'s point. Had not heard of this poem, nice reference.

comment by jacopo · 2023-03-15T21:03:20.750Z · LW(p) · GW(p)

Good post, thank you for it. Linking this will save me a lot of time when commenting...

However, I think that the banking case is not a good application. When one bank fails, it makes it much more likely that other banks will fail immediately after. So it is perfectly plausible that two banks are weak for unrelated reasons, and that when one fails this pushes the other under as well.

The second one does not even have to be that weak. The twentieth could be perfectly healthy and still fail in the panic (it's a full-blown financial crisis at this point!)

↑ comment by jasoncrawford · 2023-03-16T19:43:13.545Z · LW(p) · GW(p)

Yup, you can always have a domino-effect hypothesis of course (if it matches the timeline of events), rather than positing some general antecedent cause in common to all the failures.

comment by Yoav Ravid · 2023-03-15T20:08:56.037Z · LW(p) · GW(p)

I agree this is a good and important concept. 'scope matching' is fine, but I do think it can be improved upon. Perhaps 'scope awareness' is slightly better?

comment by ryan_b · 2023-03-16T18:21:53.982Z · LW(p) · GW(p)

Other ideas that have a similar flavor for me:

- Levels of abstraction: if we are dealing with several factors at the object level, and then all of a sudden encounter one factor several layers of abstraction up, it is cause for alarm. In general, we want to deal with multi-part things at a relatively consistent level of abstraction.

- Steps of inference: the least effective argument or evidence is likely to be one that is more inferential steps away than the rest.

- Significant figures: if you get factors with 7, 8, or 9 significant figures, and then one factor only has 2, the precision of the whole calculation is reduced to 2. Conversely, if the first factor you encounter has 9 significant figures, it is a bad plan to assume all the other factors have the same.

I feel like scope sensitivity is better for this purpose than for the original one, but I suppose renaming that one to "size insensitivity" or "magnitude neglect" is too much to ask now. Generating a bunch of plausible names mostly by smashing together similar feeling words:

- Dimension matching [1]

- Degree matching [1]

- Grade matching [1]

- Scope limiting

- Locality bias [2]

- Hyperlocality [2]

- Under-generalization (of the question) [3]

- Single Study Syndrome [3]

- Under-contextualization [4]

- Typical context bias [4]

- Reference Classless [5]

[1] Following the sense of scope, and looting math terms.

[2] Leaning into the first two examples, where the analysis is too local or maybe insufficiently global.

[3] Leaning into Scott Alexander posts, obliquely referring to Generalizing From One Example [LW · GW] and Beware the Man of One Study [LW · GW] respectively.

[4] This feels like typical mind fallacy, but for the context they are investigating, i.e., assuming the same context holds everywhere.

[5] In the sense of reference class forecasting

↑ comment by jasoncrawford · 2023-03-16T19:44:17.842Z · LW(p) · GW(p)

Interesting point about levels of abstraction, I think I agree, but what is a good example?

↑ comment by ryan_b · 2023-03-17T16:17:24.696Z · LW(p) · GW(p)

When there was a big surge in people talking about the Dredge Act and the Jones Act last year, I would see conversations in the wild address these three points: unions supporting these bills because they effectively guarantee their members' jobs; shipbuilders supporting these bills because they immunize them from foreign competition; and the economy as measured in GDP.

Union contracts and business decisions are both at the same level of abstraction: thinking about what another group of people thinks and what they do because of it. The GDP is a towering pile of calculations to reduce every transaction in the country to a percentage. It would need a lot of additional argumentation to build the link between the groups of people making decisions about the thing we are asking about, and the economy as a whole. Even if it didn't, it would still have problems like double-counting the activity of the unions/businesses under consideration, and including lots of irrelevant information like the entertainment sector of the economy, which has nothing to do with intra-US shipping and dredging.

Different levels of abstraction have different relationships to the question we are investigating. It is hard to put these different relationships together well for ourselves, and very hard to communicate them to others, so we should be pretty skeptical about mixing them, in my view.

comment by Yoav Ravid · 2023-03-15T14:55:21.729Z · LW(p) · GW(p)

that it, it shouldn’t apply

That is*

↑ comment by jasoncrawford · 2023-03-15T15:25:44.645Z · LW(p) · GW(p)

Fixed, thanks