Functional Decision Theory vs Causal Decision Theory: Expanding on Newcomb's Problem

post by Geropy · 2019-05-02T22:15:39.128Z · 7 comments


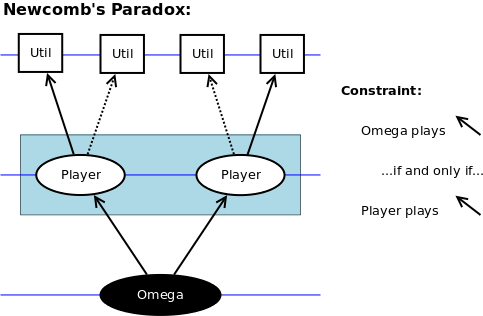

I’ve finished reading through “Functional Decision Theory: A New Theory of Instrumental Rationality”, and in my mind, the main defense of causal decision theory (CDT) agents in Newcomb’s Problem is not well addressed. As the paper notes, the standard defense of two-boxing is that Newcomb’s Problem explicitly punishes rational decision theories and rewards others. The paper then responds to this by saying: “In short, Newcomb’s Problem doesn’t punish rational agents; it punishes two-boxers”.

It is true that functional decision theory (FDT) agents will “win” at Newcomb’s Problem, but what the paper doesn’t address is that it is quite easy to come up with similar situations where FDT agents are punished and CDT agents are not. A simple example is something I’ll call the Inverse Newcomb’s Problem, since it only requires changing a few words relative to the original.

Inverse Newcomb’s Problem:

An agent finds herself standing in front of a transparent box labeled “A” that contains $1,000, and an opaque box labeled “B” that contains either $1,000,000 or $0. A reliable predictor, who has made similar predictions in the past and been correct 99% of the time, claims to have placed $1,000,000 in box B iff she predicted that the agent would two-box in the standard Newcomb’s problem. The predictor has already made her prediction and left. Box B is now empty or full. Should the agent take both boxes, or only box B, leaving the transparent box containing $1,000 behind?

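The only difference relative to the original problem is the condition that box B is filled iff the agent is predicted to two-box in the standard Newcomb’s problem (in the original, box B is filled iff the agent is predicted to one-box). The situation initially plays out the same, and only branches at the end: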

1. A reliable predictor (I’ll use the name Omega) predicts how the agent would respond in a standard Newcomb’s dilemma (“one-box” or “two-box”).

2. Armed with this prediction, Omega stands in front of box B and decides whether to place the $1,000,000 inside.

3. The $1,000,000 goes in the box iff:

a. The prediction was “one-box” (Standard Newcomb’s Problem)

b. The prediction was “two-box” (Inverse Newcomb’s Problem)

Because FDT agents would one-box in a standard Newcomb’s problem, their box B in the inverse problem is empty. In this inverse problem, there is no reason for FDT agents not to two-box, and they end up with $1,000. In contrast, because CDT agents would two-box in a standard Newcomb’s problem, their box B in the inverse problem has $1,000,000. They two-box in this case as well and end up with $1,001,000. In much the same way that Omega in the standard Newcomb’s problem “punishes two-boxers”, in the inverse problem it punishes one-boxers.
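The arithmetic above treats Omega as infallible. As a minimal sketch, the Python below also lets Omega be right only with probability `accuracy`, using the 99% figure from the problem statement and assuming (an illustration, not part of the problem) that the error rate is the same whichever way Omega predicts. The names `inverse_newcomb_value`, `BOX_A` and `BOX_B_PRIZE` are hypothetical.

```python
from fractions import Fraction

BOX_A = 1_000            # transparent box, always full
BOX_B_PRIZE = 1_000_000  # opaque box contents when Omega fills it

def inverse_newcomb_value(standard_policy: str, accuracy: Fraction = Fraction(1)) -> Fraction:
    """Expected payoff of taking both boxes in the Inverse Newcomb's Problem.

    standard_policy: what the agent would do in the *standard* problem.
    accuracy: probability that Omega's prediction of standard_policy is correct
              (assumed to be the same for both kinds of agent).
    """
    if standard_policy == "two-box":
        p_full = accuracy        # a correct "two-box" prediction fills box B
    elif standard_policy == "one-box":
        p_full = 1 - accuracy    # only a mistaken "two-box" prediction fills box B
    else:
        raise ValueError(f"unknown policy: {standard_policy!r}")
    # Box B's contents are already fixed, so both agents take both boxes here.
    return BOX_A + p_full * BOX_B_PRIZE

for policy in ("one-box", "two-box"):
    for acc in (Fraction(1), Fraction(99, 100)):
        print(policy, acc, int(inverse_newcomb_value(policy, acc)))
```

With `accuracy=1` this reproduces the $1,000 vs. $1,001,000 above; at 99% the expected values become $11,000 vs. $991,000, so the ordering is unchanged.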

One may try to argue that the Standard Newcomb’s problem is much more plausible than the inverse, but this argument doesn’t hold weight. The standard problem has a certain elegance and interest due to the dilemma it creates, and because the scenario Omega presents is the very one its prediction was about, but this does not make it any more likely. Whether Omega rewards standard one-boxers (3a) or two-boxers (3b) depends entirely on the motivations of this hypothetical predictor, and there’s no way to justify that one is more likely than the other.

By the very nature of this situation, it is impossible for a single agent to get the $1,000,000 in both the Standard and Inverse Newcomb’s Problems; they are mutually exclusive. Both FDT and CDT agents are punished in one scenario and rewarded in the other, so to compare their performance fairly, we can imagine that each agent is presented with both the Standard and Inverse problems and calculate the total money earned:

The CDT agent will two-box in each case and earn $1,002,000 ($1,000 in the Standard problem and $1,001,000 in the Inverse problem).

The FDT agent will one-box in the Standard problem ($1,000,000) and two-box in the Inverse problem ($1,000), earning $1,001,000.
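The two-scenario tally can be sketched in Python, assuming a perfect predictor and describing each agent only by what it would do in the standard problem (CDT: two-box, FDT: one-box). The function names are illustrative, not from the paper.

```python
BOX_A = 1_000
BOX_B_PRIZE = 1_000_000
POLICIES = ("one-box", "two-box")

def check(policy: str) -> None:
    if policy not in POLICIES:
        raise ValueError(f"unknown policy: {policy!r}")

def standard_newcomb(policy: str) -> int:
    """Standard problem: box B is full iff Omega (perfectly) predicts one-boxing."""
    check(policy)
    box_b = BOX_B_PRIZE if policy == "one-box" else 0
    return box_b if policy == "one-box" else BOX_A + box_b

def inverse_newcomb(policy: str) -> int:
    """Inverse problem: box B is full iff Omega predicts two-boxing in the
    standard problem; every agent takes both boxes here."""
    check(policy)
    box_b = BOX_B_PRIZE if policy == "two-box" else 0
    return BOX_A + box_b

def total(policy: str) -> int:
    return standard_newcomb(policy) + inverse_newcomb(policy)

# Each agent is described only by its standard-problem policy.
AGENTS = {"CDT": "two-box", "FDT": "one-box"}
for name, policy in AGENTS.items():
    print(f"{name}: standard ${standard_newcomb(policy):,} + inverse "
          f"${inverse_newcomb(policy):,} = ${total(policy):,}")
```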

It is clear that when the agents are presented with the same sequence of two scenarios, one that rewards Standard one-boxers and the other that rewards Standard two-boxers, the CDT agent outperforms the FDT agent. By choosing to one-box in the Standard problem, the FDT agent leaves $1,000 on the table, while the CDT agent claims all the money that was available to them.

To conclude, FDT agents undoubtedly beat CDT agents at the Standard Newcomb’s problem, but that result alone is not relevant when comparing the two in general. This situation is one where a third agent (Omega) has explicitly chosen to reward one-boxers, but as shown above, it is simple enough to imagine an equally likely case where one-boxers are punished instead. When the total performance of both agents across the two equally likely scenarios is considered, CDT ends up with an extra $1,000. Based on this, I don’t see how FDT can be considered a strict advance over CDT.

7 comments


comment by Vladimir_Nesov · 2019-05-03T03:15:55.027Z

To make decisions, an agent needs to understand the problem, to know what's real and valuable that it needs to optimize. Suppose the agent thinks it's solving one problem, while you are fooling it in a way that it can't perceive, making its decisions lead to consequences that the agent can't (shouldn't) take into account. Then in a certain sense the agent acts in a different world (situation), in the world that it anticipates (values), not in the world that you are considering it in.

This is also the issue with CDT in Newcomb's problem: a CDT agent can't understand the problem, so when we test it, it's acting according to its own understanding of the world that doesn't match the problem. If you explain a reverse Newcomb's to an FDT agent (ensure that it's represented in it), so that it knows that it needs to act to win in the reverse Newcomb's and not in regular Newcomb's, then the FDT agent will two-box in regular Newcomb's problem, because it will value winning in reverse Newcomb's problem and won't value winning in regular Newcomb's.
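One toy way to read this comment, sketched in Python under heavily simplifying assumptions: the agent picks a single regular-Newcomb policy that maximizes a weighted sum of its payoffs in the problems it cares about. The weights are a hypothetical stand-in for "what the agent values"; this is not an implementation of FDT.

```python
BOX_A = 1_000
BOX_B_PRIZE = 1_000_000
POLICIES = ("one-box", "two-box")  # the agent's policy in the regular problem

def regular_payoff(policy: str) -> int:
    # Perfect predictor: one-boxers find box B full; two-boxers get only box A.
    return BOX_B_PRIZE if policy == "one-box" else BOX_A

def reverse_payoff(policy: str) -> int:
    # Box B is full iff the regular-problem policy is "two-box"; both boxes are taken.
    return BOX_A + (BOX_B_PRIZE if policy == "two-box" else 0)

def best_policy(w_regular: int, w_reverse: int) -> str:
    """Regular-Newcomb policy maximizing a weighted sum of the two payoffs.

    The weights are a hypothetical encoding of which problem the agent values winning.
    """
    return max(POLICIES,
               key=lambda p: w_regular * regular_payoff(p) + w_reverse * reverse_payoff(p))

print(best_policy(1, 0))  # values only the regular problem -> one-box
print(best_policy(0, 1))  # values only the reverse problem -> two-box
print(best_policy(1, 1))  # values both equally -> two-box
```

Once the reverse problem is part of what the agent is optimizing for, the best regular-Newcomb policy flips to two-boxing, which matches the comment's conclusion.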

comment by Said Achmiz (SaidAchmiz) · 2019-05-02T23:58:37.563Z

The Inverse Newcomb’s Problem seems to be functionally equivalent to the following:

“Omega scans your brain. If it concludes that you would two-box in Newcomb’s Problem, it hands you $1,001,000 and flies off. If it concludes that you would one-box in Newcomb’s Problem, it hands you $1,000 and flies off.”

Right?

↑ comment by Shmi (shminux) · 2019-05-03T03:21:06.541Z

Not right at all. The original and the modified Newcomb's problems are disguised as decision theory problems. Your formulation takes the illusion of decision making out of it.

If you believe that you have the power to make decisions, then the problems are not "functionally equivalent". If you don't believe that you have the power to make decisions, then there is no problem or paradox, just a set of observations. You can't have it both ways. Either you live in the world where agents and decisions are possible, or you do not. You have to pick one of the two assumptions, since they are mutually exclusive.

I have talked about a self-consistent way to present both in my old post.

↑ comment by Geropy · 2019-05-03T00:57:37.485Z

Yes, that's right. I regret calling it a problem instead of just a "scenario".

As a follow-up, though, I would say that the standard Newcomb's problem is (essentially) functionally equivalent to:

“Omega scans your brain. If it concludes that you would two-box in Newcomb’s Problem, it hands you at most $1,000 and flies off. If it concludes that you would one-box in Newcomb’s Problem, it hands you at least $1,000,000 and flies off.”

↑ comment by nshepperd · 2019-05-03T06:07:02.923Z

No, that doesn't work. It seems to me you've confused yourself by constructing a fake symmetry between these problems. It wouldn't make any sense for Omega to "predict" whether you choose both boxes in Newcomb's if Newcomb's were equivalent to something that doesn't involve choosing boxes.

More explicitly:

Newcomb's Problem is "You sit in front of a pair of boxes, which are either both filled with money if Omega predicted you would take one box in this case, or otherwise only one is filled". Note: describing the problem does not require mentioning "Newcomb's Problem"; it can be expressed as a simple game tree (see here for some explanation of the tree format).

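The game tree described above can be sketched as nested Python dicts. This is a hypothetical encoding (not the tree format from the linked post) that assumes a perfect predictor: Omega's prediction is the first branch, the agent's choice the second, and the prediction refers to a choice inside the same tree rather than to some other problem.

```python
BOX_A = 1_000
BOX_B_PRIZE = 1_000_000
ACTIONS = ("one-box", "two-box")

def choice_node(prediction: str) -> dict:
    """The agent's choice node, reached after Omega's (hidden) prediction."""
    box_b = BOX_B_PRIZE if prediction == "one-box" else 0
    return {"one-box": box_b, "two-box": BOX_A + box_b}

def newcomb_tree() -> dict:
    """Omega's prediction node: one branch per possible prediction."""
    return {prediction: choice_node(prediction) for prediction in ACTIONS}

def play(policy: str) -> int:
    # A perfect predictor takes the branch matching the agent's own policy,
    # so one policy fixes both the box contents and the final choice.
    return newcomb_tree()[policy][policy]

# Holding the prediction fixed, two-boxing always pays exactly $1,000 more...
for prediction, node in newcomb_tree().items():
    assert node["two-box"] - node["one-box"] == BOX_A
# ...but evaluated as a policy over the whole tree, one-boxing wins.
print({policy: play(policy) for policy in ACTIONS})
```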

In comparison, your "Inverse Newcomb" is "Omega gives you some money iff it predicts that you take both boxes in Newcomb's Problem, an entirely different scenario (ie. not this case)."

The latter is more of the form "Omega arbitrarily rewards agents for taking certain hypothetical actions in a different problem" (of which a nearly limitless variety can be invented to justify any chosen decision theory¹), rather than being an actual self-contained problem which can be "solved".

The latter also can't be expressed as any kind of game tree without "cheating" and naming "Newcomb's Problem" verbally --- or rather, you can express a similar thing by embedding the Newcomb game tree and referring to the embedded tree, but that converts it into a legitimate decision problem, which FDT of course gives the correct answer to (TODO: draw an example ;).

(¹): Consider Inverse^2 Newcomb, which I consider the proper symmetric inverse of "Inverse Newcomb": Omega puts you in front of two boxes and says "this is not Newcomb's Problem, but I have filled both boxes with money iff I predicted that you take one box in standard Newcomb". Obviously here FDT takes both boxes and a tidy $1,001,000 profit (plus the $1,000,000 from Standard Newcomb). Whereas CDT gets... $1000 (plus $1000 from Standard Newcomb).
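The footnote's tally, sketched in Python under the same perfect-predictor assumption and reading "otherwise only one is filled" as box A holding its usual $1,000 (an assumption). The function names are hypothetical.

```python
BOX_A = 1_000
BOX_B_PRIZE = 1_000_000

def standard_newcomb(policy: str) -> int:
    # Perfect predictor: one-boxers get box B's $1,000,000, two-boxers only box A.
    return BOX_B_PRIZE if policy == "one-box" else BOX_A

def inverse_squared(policy: str) -> int:
    # Both boxes are full iff Omega predicted one-boxing in standard Newcomb;
    # otherwise only box A is (assumed). The agent takes both boxes either way.
    return BOX_A + (BOX_B_PRIZE if policy == "one-box" else 0)

for name, policy in {"FDT": "one-box", "CDT": "two-box"}.items():
    print(name, inverse_squared(policy), standard_newcomb(policy),
          inverse_squared(policy) + standard_newcomb(policy))
```

This mirrors the post's pair of scenarios with the roles reversed: FDT ends up with $2,001,000 in total and CDT with $2,000.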

comment by Ronny Fernandez (ronny-fernandez) · 2019-05-02T23:50:15.852Z

Something about your proposed decision problem seems cheaty in a way that the standard Newcomb problem doesn't. I'm not sure exactly what it is, but I will try to articulate it, and maybe you can help me figure it out.

It reminds me of two different decision problems. Actually, the first one isn't really a decision problem.

Omega has decided to give all those who two-box on the standard Newcomb problem 1,000,000 usd, and all those who do not 1,000 usd.

Now that's not really a decision problem, but that's not the issue with using it to decide between decision theories. I'm not sure exactly what the issue is, but it seems like it is not the decisions of the agent that make the world go one way or the other. Omega could also go around rewarding all CDT agents and punishing all FDT agents, but that wouldn't be a good reason to prefer CDT. It seems like in your problem it is not the decision of the agent that determines what their payout is, whereas in the standard Newcomb problem it is. Your problem seems more like a scenario where Omega goes around punishing agents with a particular decision theory than one where an agent's decisions determine their payout.

Now there's another decision problem this reminds me of.

Omega flips a coin and tells you "I flipped a coin, and I would have paid you 1,000,000 usd if it came up heads only if I predicted that you would have paid me 1,000 usd if it came up tails after having this explained to you. The coin did in fact come up tails. Will you pay me?"
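The payoffs in this coin-flip problem can be compared as policies evaluated before the flip. A minimal sketch, assuming a fair coin and a perfect predictor (neither is stated explicitly above); the names are hypothetical.

```python
from fractions import Fraction

REWARD = 1_000_000        # paid on heads iff Omega predicts you'd pay on tails
COST = 1_000              # handed over on tails if your policy is to pay
P_HEADS = Fraction(1, 2)  # assumed fair coin

def expected_value(pays_on_tails: bool) -> Fraction:
    """Expected payoff of a policy, judged before the flip, with a perfect predictor."""
    heads = REWARD if pays_on_tails else 0
    tails = -COST if pays_on_tails else 0
    return P_HEADS * heads + (1 - P_HEADS) * tails

for pays in (True, False):
    print("pay" if pays else "refuse", int(expected_value(pays)))
```

Judged in advance, paying is worth $499,500 in expectation versus $0 for refusing, even though once tails is known, paying simply costs $1,000.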

In this decision problem your payout also depends on what you would have done in a different hypothetical scenario, but it does not seem cheaty to me in the same way your proposed decision problem does. Maybe that is because it depends on what you would have done in this same problem had a different part of it gone differently.

I'm honestly not sure what I am tracking when I judge whether a decision problem is cheaty or not (where cheaty just means "should not be used to decide between decision theories"), but I am sure that your problem seems cheaty to me right now. Do you have any similar intuitions or hunches about what I am tracking?

↑ comment by Geropy · 2019-05-03T00:43:18.198Z

I think I see where you're coming from with the inverse problem feeling "cheaty". It's not like other decision problems in the sense that it is not really a dilemma; two-boxing is clearly the best option. I used the word "problem" instinctively, but perhaps I should have called it the "Inverse Newcomb Scenario" or something similar instead.

However, the fact that it isn't a real "problem" doesn't change the conclusion. I admit that the inverse scenario is not as interesting as the standard problem, but what matters is that it's just as likely, and clearly favours two-boxers. FDT agents have a pre-commitment to being one-boxers, and that would work well if the universe actually complied and provided them with the scenario they have prepared for (which is what the paper seems to assume). What I tried to show with the inverse scenario is that it's just as likely that their pre-commitment to one-boxing will be used against them.

Both Newcomb's Problem and the Inverse Scenario are "unfair" for one of the theories, which is why I think the proper performance measure is the total money for going through both, where CDT comes out on top.