Decisions are not about changing the world, they are about learning what world you live in

post by Shmi (shminux) · 2018-07-28T08:41:26.465Z · 71 comments


Epistemic status: Probably discussed to death in multiple places, but people still make this mistake all the time. I am not well versed in UDT, but it seems along the same lines. Or maybe I am reinventing some aspects of Game Theory.

We know that physics does not support the idea of metaphysical free will. By metaphysical free will I mean the magical ability of agents to change the world by just making a decision to do so. To the best of our knowledge, we are all (probabilistic) automatons who think of themselves as agents with free choices. A model compatible with the known laws of physics is that what we think of as modeling, predicting and making choices is actually learning which one of the possible worlds we live in. Think of it as being a passenger in a car and seeing new landscapes all the time. The main difference is that the car is invisible to us and we constantly update the map of the expected landscape based on what we see. We have a sophisticated updating and predicting algorithm inside, and it often produces accurate guesses. We experience those as choices made. As if we were the ones in the driver's seat, not just the passengers.

Realizing that decisions are nothing but updates, that making a decision is a subjective experience of discovering which of the possible worlds is the actual one, immediately adds clarity to a number of decision theory problems. For example, if you accept that you have no way to change the world, only to learn which of the possible worlds you live in, then Newcomb's problem with a perfect predictor becomes trivial: there is no possible world where a two-boxer wins. There are only two possible worlds: one where you are a one-boxer who wins, and one where you are a two-boxer who loses. Making a decision to either one-box or two-box is a subjective experience of learning what kind of person you are, i.e. what world you live in.
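A minimal sketch of this world-counting step for Newcomb's problem. The box contents ($1,000 in box A, and $1,000,000 in box B iff one-boxing is predicted) are the standard Newcomb amounts, which the post also uses for the transparent variant; the function and variable names are illustrative. With a perfect predictor, only the worlds where the prediction matches the action survive the filter.

```python
from itertools import product

def payoff(action: str, prediction: str) -> int:
    # Box B is filled only if the predictor predicted one-boxing;
    # box A always holds $1,000 and is taken only by a two-boxer.
    box_b = 1_000_000 if prediction == "one-box" else 0
    return box_b if action == "one-box" else box_b + 1_000

ACTIONS = ("one-box", "two-box")

# A perfect predictor never mispredicts, so worlds where the prediction
# differs from the action are not possible worlds at all.
possible_worlds = {
    (action, prediction): payoff(action, prediction)
    for action, prediction in product(ACTIONS, ACTIONS)
    if action == prediction
}

for (action, _), utility in possible_worlds.items():
    print(f"{action}: ${utility:,}")
```

Note that the two-boxer's "best case" of $1,001,000 never appears: it sits in a world the filter removes.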

This description, while fitting the observations perfectly, is extremely uncomfortable emotionally. After all, what's the point of making decisions if you are just a passenger spinning a fake steering wheel not attached to any actual wheels? The answer is the usual compatibilism one: we are compelled to behave as if we were making decisions by our built-in algorithm. The classic quote from Ambrose Bierce applies:

"There's no free will," says the philosopher; "To hang is most unjust."

"There is no free will," assents the officer; "We hang because we must."

So, while uncomfortable emotionally, this model lets us make better decisions (the irony is not lost on me, but since "making a decision" is nothing but an emotionally comfortable version of "learning which possible world is actual", there is no contradiction).

An aside on quantum mechanics. It follows from the unitary evolution of the quantum state, coupled with the Born rule for observation, that the world is only predictable probabilistically at the quantum level, which, in our model of learning about the world we live in, puts limits on how accurate the world model can be. Otherwise the quantum nature of the universe (or multiverse) has no bearing on the perception of free will.

Let's go through the examples, some of which are listed as numbered dilemmas in a recent paper by Eliezer Yudkowsky and Nate Soares, Functional decision theory: A new theory of instrumental rationality. From here on out we will refer to this paper as EYNS.

Psychological Twin Prisoner’s Dilemma

An agent and her twin must both choose to either “cooperate” or “defect.” If both cooperate, they each receive $1,000,000. If both defect, they each receive $1,000. If one cooperates and the other defects, the defector gets $1,001,000 and the cooperator gets nothing. The agent and the twin know that they reason the same way, using the same considerations to come to their conclusions. However, their decisions are causally independent, made in separate rooms without communication. Should the agent cooperate with her twin?

First we enumerate all the possible worlds, which in this case are just two, once we ignore the meaningless verbal fluff like "their decisions are causally independent, made in separate rooms without communication." This sentence adds zero information, because the "agent and the twin know that they reason the same way", so there is no way for them to make different decisions. These worlds are:

- Cooperate world: $1,000,000

- Defect world: $1,000

There is no possible world, factually or counterfactually, where one twin cooperates and the other defects, any more than there are possible worlds where 1 = 2. Well, we can imagine worlds where math is broken, but they do not usefully map onto observations. The twins would probably be smart enough to cooperate, at least after reading this post. Or maybe they are not smart enough and will defect. Or maybe they hate each other and would rather defect than cooperate, because it gives them more utility than money. If this were a real situation, we would wait and see which possible world they live in, the one where they cooperate, or the one where they defect. At the same time, subjectively to the twins in the setup it would feel like they are making decisions and changing their future.
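The same enumeration for the twin dilemma, as an illustrative sketch. The payoffs come straight from the problem statement; the only assumption encoded is the one the argument above relies on, namely that twins who reason identically always make the same move, so the off-diagonal outcomes are dropped.

```python
from itertools import product

# (agent payoff, twin payoff), indexed by (agent move, twin move).
PAYOFFS = {
    ("cooperate", "cooperate"): (1_000_000, 1_000_000),
    ("defect", "defect"): (1_000, 1_000),
    ("cooperate", "defect"): (0, 1_001_000),
    ("defect", "cooperate"): (1_001_000, 0),
}
MOVES = ("cooperate", "defect")

# Identical reasoners make identical moves: keep only the diagonal.
possible_worlds = {
    agent: PAYOFFS[(agent, twin)][0]
    for agent, twin in product(MOVES, MOVES)
    if agent == twin
}
print(possible_worlds)  # {'cooperate': 1000000, 'defect': 1000}
```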

The Absent-Minded Driver Problem

An absent-minded driver starts driving at START in Figure 1. At X he can either EXIT and get to A (for a payoff of 0) or CONTINUE to Y. At Y he can either EXIT and get to B (payoff 4), or CONTINUE to C (payoff 1). The essential assumption is that he cannot distinguish between intersections X and Y, and cannot remember whether he has already gone through one of them.

There are three possible worlds here, A, B and C, with utilities 0, 4 and 1 respectively, and by observing the driver "making a decision" we learn which world they live in. If the driver is a classic CDT agent, they would turn and end up at A, despite it being the lowest-utility outcome. Sucks to be them, but that's their world.
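The problem statement gives the utilities of the three worlds but not their probabilities. One common way to attach probabilities, which is an assumption here and not part of the post, is to let the driver CONTINUE with the same probability q at every intersection, since he cannot tell X from Y. The sketch below weights the worlds A, B and C accordingly and searches a grid of q values exactly using Python's `fractions.Fraction`.

```python
from fractions import Fraction

UTILITY = {"A": 0, "B": 4, "C": 1}

def world_probabilities(q):
    # Assumption: CONTINUE with probability q at each (indistinguishable) intersection.
    return {"A": 1 - q, "B": q * (1 - q), "C": q * q}

def expected_utility(q):
    probs = world_probabilities(q)
    return sum(probs[world] * UTILITY[world] for world in UTILITY)

# Exact search over q = 0, 1/300, ..., 1.
grid = [Fraction(k, 300) for k in range(301)]
best_q = max(grid, key=expected_utility)
print(best_q, expected_utility(best_q))  # 2/3 4/3
```

Always exiting (q = 0) lands in world A with utility 0, while the best fixed q spreads probability over B and C.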

The Smoking Lesion Problem

An agent is debating whether or not to smoke. She knows that smoking is correlated with an invariably fatal variety of lung cancer, but the correlation is (in this imaginary world) entirely due to a common cause: an arterial lesion that causes those afflicted with it to love smoking and also (99% of the time) causes them to develop lung cancer. There is no direct causal link between smoking and lung cancer. Agents without this lesion contract lung cancer only 1% of the time, and an agent can neither directly observe, nor control whether she suffers from the lesion. The agent gains utility equivalent to $1,000 by smoking (regardless of whether she dies soon), and gains utility equivalent to $1,000,000 if she doesn’t die of cancer. Should she smoke, or refrain?

The problem does not specify this explicitly, but it seems reasonable to assume that the agents without the lesion do not enjoy smoking and get 0 utility from it.

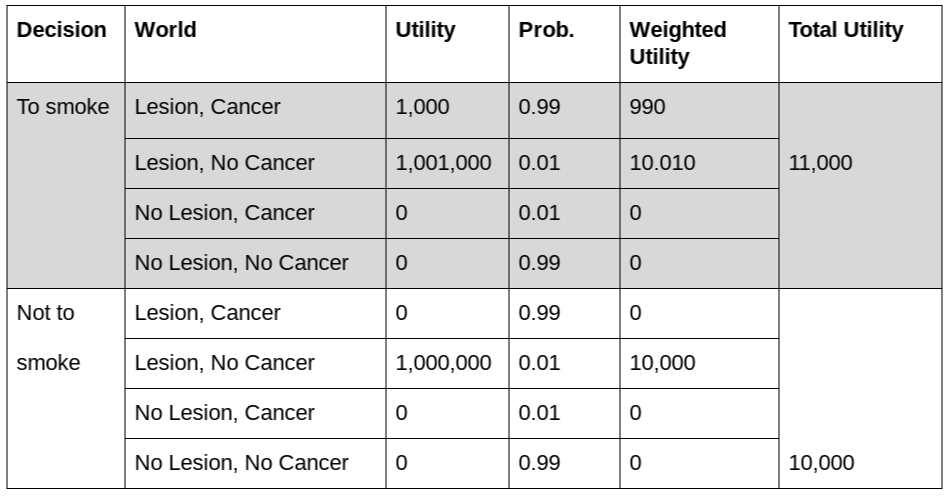

There are 8 possible worlds here, with different utilities and probabilities:

An agent who "decides" to smoke has higher expected utility than the one who decides not to, and this "decision" lets us learn which of the 4 possible worlds could be actual, and eventually when she gets the test results we learn which one is the actual world.
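A sketch of the 8-world enumeration, under assumptions that should be read as such: the lesion prior `P_LESION` is hypothetical (the problem does not give one), only agents with the lesion get the $1,000 from smoking (the assumption stated above), and the lesion and cancer probabilities are taken not to depend on the "decision". Conditioning on the decision leaves 4 worlds, as in the text.

```python
from itertools import product

P_LESION = 0.1  # hypothetical prior; not given in the problem
P_CANCER_GIVEN_LESION = {True: 0.99, False: 0.01}  # from the problem statement

def utility(lesion, smokes, cancer):
    # Assumption from the text: only agents with the lesion enjoy smoking.
    enjoyment = 1_000 if (smokes and lesion) else 0
    survival = 0 if cancer else 1_000_000
    return enjoyment + survival

def probability(lesion, cancer):
    p_lesion = P_LESION if lesion else 1 - P_LESION
    p_cancer = P_CANCER_GIVEN_LESION[lesion]
    return p_lesion * (p_cancer if cancer else 1 - p_cancer)

# All 8 worlds, as (lesion, smokes, cancer) triples.
WORLDS = list(product((True, False), repeat=3))

def expected_utility(smokes):
    # Conditioning on the "decision" leaves 4 worlds; in this sketch the
    # lesion and cancer probabilities do not depend on that decision.
    return sum(
        probability(lesion, cancer) * utility(lesion, s, cancer)
        for lesion, s, cancer in WORLDS
        if s == smokes
    )

print(expected_utility(True) - expected_utility(False))  # about 1,000 * P_LESION
```

Under these assumptions smoking comes out ahead by 1,000 × P_LESION whatever value the prior takes.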

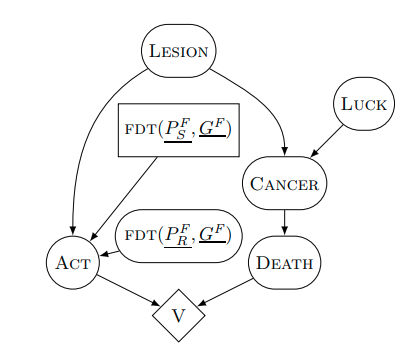

Note that the analysis would be exactly the same if there were a “direct causal link between desire for smoking and lung cancer”, without any “arterial lesion”. In the problem as stated there is no way to distinguish between the two, since there are no other observable consequences of the lesion. There is a 99% correlation between the desire to smoke and cancer, and that’s the only thing that matters. Whether there is a “common cause”, or cancer causes the desire to smoke, or the desire to smoke causes cancer is irrelevant in this setup. It may become relevant if there were a way to affect this correlation, say, by curing the lesion, but no such option exists in the problem as stated. Some decision theorists tend to get confused over this because they think of this magical thing they call "causality," the qualia of your decisions being yours and free, causing the world to change upon your metaphysical command. They draw fancy causal graphs like this one:

instead of listing and evaluating possible worlds.

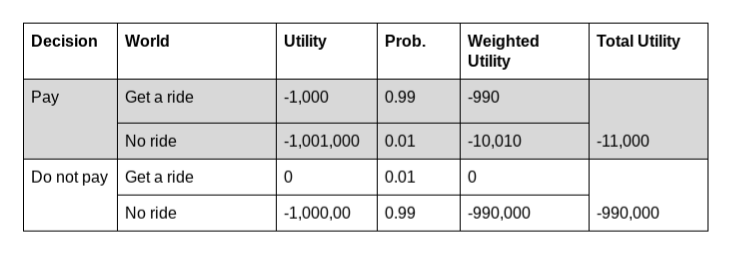

Parfit’s Hitchhiker Problem

An agent is dying in the desert. A driver comes along who offers to give the agent a ride into the city, but only if the agent will agree to visit an ATM once they arrive and give the driver $1,000.

The driver will have no way to enforce this after they arrive, but she does have an extraordinary ability to detect lies with 99% accuracy. Being left to die causes the agent to lose the equivalent of $1,000,000. In the case where the agent gets to the city, should she proceed to visit the ATM and pay the driver?

We note a missing piece in the problem statement: what are the odds of the agent lying about not paying and the driver detecting the lie and giving a ride, anyway? It can be, for example, 0% (the driver does not bother to use her lie detector in this case) or the same 99% accuracy as in the case where the agent lies about paying. We assume the first case for this problem, as this is what makes more sense intuitively.

As usual, we draw possible worlds, partitioned by the "decision" made by the hitchhiker and note the utility of each possible world. We do not know which world would be the actual one for the hitchhiker until we observe it ("we" in this case might denote the agent themselves, even though they feel like they are making a decision).

So, while the highest-utility world is the one where the agent does not pay and the driver believes they would, the odds of this possible world being actual are very low, and the agent who will end up paying after the trip has higher expected utility before the trip. This is pretty confusing, because the intuitive CDT approach would be to promise to pay, yet refuse afterwards. This is effectively thwarted by the driver's lie detector. Note that if the lie detector were perfect, then there would be just two possible worlds:

- pay and survive,

- do not pay and die.
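The expected utilities behind this comparison can be sketched as follows, in dollar terms. Assumptions not stated in the problem: the agent always claims she will pay, a truthful claim is always believed (no false alarms), and a lie is caught with probability `detector_accuracy`, in which case she is left to die. Setting the accuracy to 1.0 collapses the worlds to the two listed above.

```python
DEATH = -1_000_000  # being left to die in the desert
FARE = -1_000       # paying the driver at the ATM

def expected_utility(pays: bool, detector_accuracy: float = 0.99) -> float:
    # Assumption: an honest payer is always believed and rescued.
    if pays:
        return FARE
    # A non-payer lying about paying is rescued for free only if the lie
    # goes undetected; otherwise she is left to die.
    return detector_accuracy * DEATH + (1 - detector_accuracy) * 0

for pays in (True, False):
    print("pay" if pays else "do not pay", expected_utility(pays))
```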

Once the possible worlds are written down, it becomes clear that the problem is essentially isomorphic to Newcomb's.

Another problem that is isomorphic to it is

The Transparent Newcomb Problem

Events transpire as they do in Newcomb’s problem, except that this time both boxes are transparent — so the agent can see exactly what decision the predictor made before making her own decision. The predictor placed $1,000,000 in box B iff she predicted that the agent would leave behind box A (which contains $1,000) upon seeing that both boxes are full. In the case where the agent faces two full boxes, should she leave the $1,000 behind?

Once you are used to enumerating possible worlds, whether the boxes are transparent or not does not matter. The decision whether to take one box or two is already made before the boxes are presented, transparent or not. The analysis of the conceivable worlds is identical to the original Newcomb’s problem. To clarify, if you are in the world where you see two full boxes, wouldn’t it make sense to two-box? Well, yes, it would, but if this is what you "decide" to do (and all decisions are made in advance, as far as the predictor is concerned, even if the agent is not aware of this), you will never (or very rarely, if the predictor is almost, but not fully, infallible) find yourself in this world. Conversely, if you one-box even when you see two full boxes, that situation always, or almost always, happens.

If you think you pre-committed to one-boxing but then are capable of two-boxing, congratulations! You are in the rare world where you have successfully fooled the predictor!

From this analysis it becomes clear that the word “transparent” is yet another superfluous stipulation, as it contains no new information. Two-boxers will two-box, one-boxers will one-box, transparency or not.
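A sketch of the near-perfect-predictor case for the transparent variant, listing each disposition's possible worlds with their probabilities and payoffs. The predictor accuracy parameter is an assumption for illustration, as is the behavior of a mispredicted one-boxer: the problem does not say what she does on seeing box B empty, so the sketch assumes she takes box A. A two-boxer sees two full boxes only with probability 1 − accuracy, which is the "very rarely" above.

```python
def transparent_newcomb(one_boxer: bool, accuracy: float) -> dict:
    """Map each possible world to (probability, payoff) for a disposition.

    The predictor fills box B ($1,000,000) iff it predicts one-boxing and is
    right with probability `accuracy` (an assumed parameter). Assumption: a
    mispredicted one-boxer who sees B empty takes box A ($1,000).
    """
    if one_boxer:
        return {
            "sees two full boxes, takes B": (accuracy, 1_000_000),
            "sees B empty, takes A": (1 - accuracy, 1_000),
        }
    return {
        "sees B empty, takes A": (accuracy, 1_000),
        "sees two full boxes, takes both": (1 - accuracy, 1_001_000),
    }

def expected_utility(worlds: dict) -> float:
    return sum(p * u for p, u in worlds.values())

for one_boxer in (True, False):
    worlds = transparent_newcomb(one_boxer, accuracy=0.99)
    label = "one-boxer" if one_boxer else "two-boxer"
    print(label, round(expected_utility(worlds)))
```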

At this point it is worth pointing out the difference between world counting and EDT, CDT and FDT. The latter three tend to get mired in reasoning about their own reasoning, instead of reasoning about the problem they are trying to decide. In contrast, we mindlessly evaluate probability-weighted utilities, unconcerned with the pitfalls of causality, retro-causality, counterfactuals, counter-possibilities, subjunctive dependence and other hypothetical epicycles. There are only recursion-free possible worlds of different probabilities and utilities, and a single actual world observed after everything is said and done. While reasoning about reasoning is clearly extremely important in the field of AI research, the dilemmas presented in EYNS do not require anything as involved. Simple counting does the trick better.

The next problem is rather confusing in its original presentation.

The Cosmic Ray Problem

An agent must choose whether to take $1 or $100. With vanishingly small probability, a cosmic ray will cause her to do the opposite of what she would have done otherwise. If she learns that she has been affected by a cosmic ray in this way, she will need to go to the hospital and pay $1,000 for a check-up. Should she take the $1, or the $100?

A bit of clarification is in order before we proceed. What does “do the opposite of what she would have done otherwise” mean, operationally? Here let us interpret it in the following way:

Deciding and attempting to do X, but ending up doing the opposite of X and realizing it after the fact.

Something like “OK, let me take $100… Oops, how come I took $1 instead? I must have been struck by a cosmic ray, gotta do the $1000 check-up!”

Another point is that here again there are two probabilities in play, the odds of taking $1 while intending to take $100 and the odds of taking $100 while intending to take $1. We assume these are the same, and denote the (small) probability of a cosmic ray strike as p.

The analysis of the dilemma is boringly similar to the previous ones:

Thus attempting to take $100 has a higher payoff as long as the “vanishingly small” probability of the cosmic ray strike is under 50%. Again, this is just a calculation of expected utilities, though an agent believing in metaphysical free will may take it as a recommendation to act a certain way.
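Under the interpretation above (a strike flips the action with probability p in either direction, and a noticed strike costs the $1,000 check-up), attempting $100 yields (1 − p)·100 + p·(1 − 1000) = 100 − 1099p, and attempting $1 yields (1 − p)·1 + p·(100 − 1000) = 1 − 901p. The difference is 99 − 198p, which is positive exactly when p < 1/2. A small exact-arithmetic check of this, with illustrative function names:

```python
from fractions import Fraction

CHECKUP = 1_000

def expected_utility(intended: int, p: Fraction) -> Fraction:
    """Expected payoff of *attempting* to take `intended` dollars (1 or 100)."""
    other = 1 if intended == 100 else 100
    # With probability p the ray flips the action and the agent pays for the check-up.
    return (1 - p) * intended + p * (other - CHECKUP)

def advantage_of_100(p: Fraction) -> Fraction:
    # Equals 99 - 198 p.
    return expected_utility(100, p) - expected_utility(1, p)

print(advantage_of_100(Fraction(1, 2)))  # 0: break-even at p = 1/2
```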

The following setup and analysis are slightly trickier, but not by much.

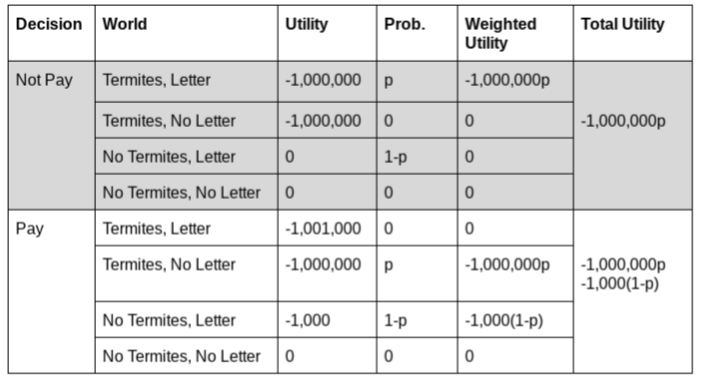

The XOR Blackmail

An agent has been alerted to a rumor that her house has a terrible termite infestation that would cost her $1,000,000 in damages. She doesn’t know whether this rumor is true. A greedy predictor with a strong reputation for honesty learns whether or not it’s true, and drafts a letter:

I know whether or not you have termites, and I have sent you this letter iff exactly one of the following is true: (i) the rumor is false, and you are going to pay me $1,000 upon receiving this letter; or (ii) the rumor is true, and you will not pay me upon receiving this letter.

The predictor then predicts what the agent would do upon receiving the letter, and sends the agent the letter iff exactly one of (i) or (ii) is true. Thus, the claim made by the letter is true. Assume the agent receives the letter. Should she pay up?

The problem is called “blackmail” because those susceptible to paying the ransom receive the letter when their house doesn’t have termites, while those who are not susceptible do not. The predictor has no influence on the infestation, only on who receives the letter. So, by pre-committing to not paying, one avoids the blackmail, and if they receive the letter, it is basically an advance notification of the infestation, nothing more. EYNS states “the rational move is to refuse to pay” assuming the agent receives the letter. This tentatively assumes that the agent has a choice in the matter once the letter is received. This turns the problem on its head and gives the agent a counterintuitive option of having to decide whether to pay after the letter has been received, as opposed to analyzing the problem in advance (and precommitting to not paying, thus preventing the letter from being sent, if you are the sort of person who believes in choice).

The possible worlds analysis of the problem is as follows. Let’s assume that the probability of having termites is p, the greedy predictor is perfect, and the letter is sent to everyone “eligible”, i.e. to everyone with an infestation who would not pay, and to everyone without the infestation who would pay upon receiving the letter. We further assume that there are no paranoid agents, those who would pay “just in case” even when not receiving the letter. In general, this case would have to be considered as a separate world.

Now the analysis is quite routine:

Thus not paying is, not surprisingly, always better than paying, by the “blackmail amount” 1,000(1-p).

One thing to note is that the case where the would-pay agent has termites but does not receive a letter is easy to overlook, since it does not involve any letter from the predictor. However, this is a possible world contributing to the overall utility, even if it is not explicitly stated in the problem.
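A sketch of the enumeration behind the 1,000(1 − p) gap, under the assumptions listed above (a perfect predictor, letters sent to exactly the "eligible" agents, no paranoid payers). The termite probability used for the printout is a hypothetical value. The would-pay agent's termite world, with no letter, is included explicitly.

```python
DAMAGE = -1_000_000  # termite damage
RANSOM = -1_000      # payment to the predictor

def expected_utility(would_pay: bool, p_termites: float) -> float:
    if would_pay:
        # Termites: no letter is sent (the easy-to-overlook world), damage anyway.
        # No termites: the letter arrives and she pays.
        return p_termites * DAMAGE + (1 - p_termites) * RANSOM
    # Termites: the letter arrives, she refuses, damage anyway.
    # No termites: no letter, nothing happens.
    return p_termites * DAMAGE

p = 0.3  # hypothetical prior probability of termites
gap = expected_utility(False, p) - expected_utility(True, p)
print(gap)  # equals 1,000 * (1 - p), up to float rounding
```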

Other dilemmas that yield to a straightforward analysis by world enumeration are Death in Damascus, regular and with a random coin, the Mechanical Blackmail and the Psychopath Button.

One final point that I would like to address is that treating the apparent decision making as a self- and world-discovery process, not as an attempt to change the world, helps one analyze adversarial setups that stump the decision theories that assume free will.

Immunity from Adversarial Predictors

EYNS states in Section 9:

“There is no perfect decision theory for all possible scenarios, but there may be a general-purpose decision theory that matches or outperforms all rivals in fair dilemmas, if a satisfactory notion of “fairness” can be formalized.” and later “There are some immediate technical obstacles to precisely articulating this notion of fairness. Imagine I have a copy of Fiona, and I punish anyone who takes the same action as the copy. Fiona will always lose at this game, whereas Carl and Eve might win. Intuitively, this problem is unfair to Fiona, and we should compare her performance to Carl’s not on the “act differently from Fiona” game, but on the analogous “act differently from Carl” game. It remains unclear how to transform a problem that’s unfair to one decision theory into an analogous one that is unfair to a different one (if an analog exists) in a reasonably principled and general way.”

I note here that simply enumerating possible worlds evades this problem as far as I can tell.

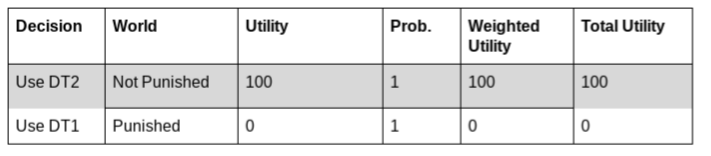

Let’s consider a simple “unfair” problem: If the agent is predicted to use a certain decision theory DT1, she gets nothing, and if she is predicted to use some other approach (DT2), she gets $100. There are two possible worlds here, one where the agent uses DT1, and the other where she uses DT2:

So a principled agent who always uses DT1 is penalized. Suppose another time the agent might face the opposite situation, where she is punished for following DT2 instead of DT1. What is the poor agent to do, being stuck between Scylla and Charybdis? There are 4 possible worlds in this case:

- Agent uses DT1 always

- Agent uses DT2 always

- Agent uses DT1 when rewarded for using DT1 and DT2 when rewarded for using DT2

- Agent uses DT1 when punished for using DT1 and DT2 when punished for using DT2

World number 3 is where the agent wins, regardless of how adversarial or "unfair" the predictor is trying to be to her. Enumerating possible worlds lets us crystallize the type of agent that would always get the maximum possible payoff, no matter what. Such an agent would subjectively feel that they are excellent at making decisions, whereas they simply live in the world where they happen to win.
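A sketch of the four worlds as policies, each mapping a game to the decision theory used. The $0/$100 payoffs come from the "unfair" problem above; the assumption, for illustration only, is that the agent faces each of the two games once and we add up her winnings.

```python
SCENARIOS = {
    # Payoff for each decision theory in each game, per the "unfair" problem.
    "punish DT1": {"DT1": 0, "DT2": 100},
    "punish DT2": {"DT1": 100, "DT2": 0},
}

# The four possible worlds from the list, as policies: game -> DT used.
POLICIES = {
    "1: DT1 always": lambda game: "DT1",
    "2: DT2 always": lambda game: "DT2",
    "3: use the rewarded DT": lambda game: "DT2" if game == "punish DT1" else "DT1",
    "4: use the punished DT": lambda game: "DT1" if game == "punish DT1" else "DT2",
}

def total_payoff(policy) -> int:
    # Assumption: the agent plays each game once.
    return sum(payoffs[policy(game)] for game, payoffs in SCENARIOS.items())

for name, policy in POLICIES.items():
    print(name, total_payoff(policy))
```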

71 comments


comment by Richard_Kennaway · 2018-07-29T14:27:47.098Z

We know that physics does not support the idea of metaphysical free will. By metaphysical free will I mean the magical ability of agents to change the world by just making a decision to do so.

According to my understanding of the ordinary, everyday, non-magical meanings of the words "decide", "act", "change", etc., we do these things all the time. So do autonomous vehicles, for that matter. So do cats and dogs. Intention, choice, and steering the world into desired configurations are what we do, as do some of our machines.

It is strange that people are so ready to deny these things to people, when they never make the same arguments about machines. Instead, for example, they want to know what a driverless car saw and decided when it crashed, or protest that engine control software detected when it was under test and tuned the engine to misleadingly pass the emissions criteria. And of course there is a whole mathematical field called "decision theory". It's about decisions.

After all, what's the point of making decisions if you are just a passenger spinning a fake steering wheel not attached to any actual wheels?

The simile contradicts your argument, which implies that there is no such thing as a steering wheel. But there is. Real steering wheels, that the real driver of a real car uses to really steer it. Are the designers and manufacturers of steering wheels wasting their efforts?

The answer is the usual compatibilism one: we are compelled to behave as if we were making decisions by our built-in algorithm.

Now that's magic — to suppose that our beliefs are absolutely groundless, yet some compelling force maintains them in alignment with reality.

See also: Hyakujo's fox.

↑ comment by Shmi (shminux) · 2018-07-29T18:51:29.717Z

According to my understanding of the ordinary, everyday, non-magical meanings of the words "decide", "act", "change", etc., we do these things all the time.

We perceive the world as if we were intentionally doing them, yes. But there is no "top-down causation" in physics that supports this view. And our perspective on agency depends on how much we know about the "agent": the more we know, the less agenty the entity feels. It's a known phenomenon. I mentioned it before a couple of times, including here and on my blog.

↑ comment by Richard_Kennaway · 2018-07-30T08:22:10.764Z

"The sage is one with causation."

The same argument that "we" do not "do" things, also shows that there is no such thing as a jumbo jet, no such thing as a car, not even any such thing as an atom; that nothing made of parts exists. We thought protons were elementary particles, until we discovered quarks. But no: according to this view "we" did not "think" anything, because "we" do not exist and we do not "think". Nobody and nothing exists.

All that such an argument does is redefine the words "thing" and "exist" in ways that no-one has ever used them and no-one ever consistently could. It fails to account for the fact that the concepts work.

You say that agency is bugs and uncertainty, that its perception is an illusion stemming from ignorance; I say that agency is control systems, a real thing that can be experimentally detected in both living organisms and some machines, and detected to be absent in other things.

↑ comment by Shmi (shminux) · 2018-07-30T15:39:04.006Z

The same argument that "we" do not "do" things, also shows that there is no such thing as a jumbo jet, no such thing as a car, not even any such thing as an atom; that nothing made of parts exists.

and

It fails to account for the fact that the concepts work.

Actually, using the concepts that work is the whole point of my posts on LW, as opposed to using the concepts that feel right. I dislike the terms like "exist" as pointing to some objective reality, and this is where I part ways with Eliezer. To me it is "models all the way down." Here is another post on this topic from a few years back: Mathematics as a lossy compression algorithm gone wild. Once you consciously replace "true" with "useful" and "exist" with "usefully modeled as," a lot of confusion over what exists and what does not, what is true and what is false, what is knowledge and what is belief, what is objective and what is subjective, simply melts away. In this vein, it is very much useful to model a car as a car, not as a transient spike in quantum fields. In the same vein, it is useful to model the electron scattering through double slits as a transient spike in quantum fields, and not as a tiny ping-pong ball that can sometimes turn into a wave.

I say that agency is control systems, a real thing that can be experimentally detected in both living organisms and some machines, and detected to be absent in other things.

I agree that a lot of agent-looking behavior can be usefully modeled as a multi-level control system, and, if anything, this is not done enough in biology, neuroscience or applied philosophy, if the latter is even a thing. By the same token, the control system approach is a useful abstraction for many observed phenomena, living or otherwise, not just agents. It does not lay claim to what an agent is, just what approach can be used to describe some agenty behaviors. I see absolutely no contradiction with what I said here or elsewhere.

Maybe one way to summarize my point in this post is that modeling the decisions as learning about oneself and the world is more useful for making good decisions than modeling an agent as changing the world with her decisions.

↑ comment by Richard_Kennaway · 2018-08-07T15:51:28.372Z

Actually, using the concepts that work is the whole point of my posts on LW, as opposed to using the concepts that feel right.

It seems to me that the concepts "jumbo jet", "car", and "atom" all work. If they "feel right", it is because they work. "Feeling right" is not some free-floating attribute to be bestowed at will on this or that.

A telling phrase in the post you linked is "for some reason":

In yet other words, a good approximation is, for some reason, sometimes also a good extrapolation.

Unless you can expand on that "some reason", this is just pushing under the carpet the fact that certain things work spectacularly well, and leaving Wigner's question unanswered.

Maybe one way to summarize my point in this post is that modeling the decisions as learning about oneself and the world is more useful for making good decisions than modeling an agent as changing the world with her decisions.

Thought and action are two different things, as different as a raven and a writing desk.

↑ comment by Shmi (shminux) · 2018-08-12T02:24:48.263Z

Will only reply to one part, to highlight our basic (ontological?) differences:

Thought and action are two different things, as different as a raven and a writing desk.

A thought is a physical process in the brain, which is a part of the universe. An action is also a physical process in the universe, so it is very much like a thought, only more visible to those without predictive powers.

↑ comment by TAG · 2018-08-14T11:36:01.716Z

If choice and counterfactuals exist, then an action is something that can affect the future, while a thought is not. Of course, that difference no longer applies if your ontology doesn't feature choices and counterfactuals...

Will only reply to one part, to highlight our basic (ontological?) differences:

What your ontology should be is "nothing" or "mu". You are not living up to your commitments.

↑ comment by Shmi (shminux) · 2018-08-14T17:46:22.436Z

We seem to have very different ontologies here, and not converging. Also, telling me what my ontology "should" be is less than helpful :) It helps to reach mutual understanding before giving prescriptions to the other person. Assuming you are interested in more understanding, and less prescribing, let me try again to explain what I mean.

If choice and counterfactuals exist, then an action is something that can affect the future, while a thought is not. Of course, that difference no longer applies if your ontology doesn't feature choices and counterfactuals...

In the view I am describing here "choice" is one of the qualia, a process in the brain. Counterfactuals is another, related, quale, the feeling of possibilities. Claiming anything more is a mind projection fallacy. The mental model of the world changes with time. I am not even claiming that time passes, just that there is a mental model of the universe, including the counterfactuals, for each moment in the observer's time. I prefer the term "observer" to agent, since it does not imply having a choice, only watching the world (as represented by the observer's mental model) unfold.

↑ comment by TAG · 2018-08-15T11:27:27.727Z

We seem to have very different ontologies here,

And very different epistemologies. I am not denying the very possibility of knowing things about reality.

and not converging. Also, telling me what my ontology “should” be is less than helpful :) It helps to reach mutual understanding before giving prescriptions to the other person.

All I am doing is taking you at your word.

You keep saying that it is models all the way down, and there is no way to make true claims about reality. If I am not to take those comments literally, how am I to take them? How am I to guess the correct non-literal interpretation, out of the many possible ones?

In the view I am describing here “choice” is one of the qualia, a process in the brain. Counterfactuals is another, related, quale, the feeling of possibilities. Claiming anything more is a mind projection fallacy.

That's an implicit claim about reality. Something can only be a mind projection if there is nothing in reality corresponding to it. It is not sufficient to say that it is in the head or the model, it also has to not be in the territory, or else it is a true belief, not a mind projection. To say that something doesn't exist in reality is to make a claim about reality as much as to say that something does.

The mental model of the world changes with time. I am not even claiming that time passes, just that there is a mental model of the universe, including the counterfactuals, for each moment in the observer’s time.

Again "in the model" does not imply "not in the territory".

↑ comment by TAG · 2018-08-14T11:31:15.353Z

I dislike the terms like “exist” as pointing to some objective reality,

You seem happy enough with "not exist" as in "agents, counterfactuals and choices don't exist"

Once you consciously replace “true” with “useful” and “exist” with “usefully modeled as,” a lot of confusion over what exists and what does not, what is true and what is false, what is knowledge and what is belief, what is objective and what is subjective, simply melts away.

If it is really possible for an agent to affect the future or steer themselves into alternative futures, then there is a lot of potential utility in it, in that you can end up in a higher-utility future than you would otherwise have. OTOH, if there are no counterfactuals, then whatever utility you gain is predetermined. So one cannot assess the usefulness, in the sense of utility gain, of models, in a way independent of the metaphysics of determinism and counterfactuals. What is useful, and how useful it is, depends on what is true.

I agree that a lot of agent-looking behavior can be usefully modeled as a multi-level control system, and, if anything, this is not done enough in biology, neuroscience or applied philosophy, if the latter is even a thing. By the same token, the control system approach is a useful abstraction for many observed phenomena, living or otherwise, not just agents. It does not lay claim to what an agent is, just what approach can be used to describe some agenty behaviors. I see absolutely no contradiction with what I said here or elsewhere.

It contradicts the "agents don't exist thing" and the "I never talk about existence thing". If you only object to reductively inexplicable agents, that would be better expressed as "there is nothing nonreductive".

Although that still wouldn't help you come to the conclusion that there is no choice and no counterfactuals, because that is much more about determinism than reductionism.

↑ comment by Shmi (shminux) · 2018-08-14T19:29:59.677Z

If it is really possible for an agent to affect the future or steer themselves into alternative futures, then there is a lot of potential utility in it, in that you can end up in a higher-utility future than you would otherwise have. OTOH, if there are no counterfactuals, then whatever utility you gain is predetermined.

Yep, some possible worlds have more utility for a given agent than others. And, yes, sort of. Whatever utility you gain is not your free choice, and not necessarily predetermined, just not under your control. You are a mere observer who thinks they can change the world.

It contradicts the "agents don't exist thing" and the "I never talk about existence thing".

I don't see how. Seems there is an inferential gap there we haven't bridged.

↑ comment by TAG · 2018-08-01T11:13:43.703Z

Once you consciously replace “true” with “useful” and “exist” with “usefully modeled as,” a lot of confusion over what exists and what does not, what is true and what is false, what is knowledge and what is belief, what is objective and what is subjective, simply melts away.

How do you know that the people who say "agents exist" don't mean "some systems can be usefully modelled as agents"?

By the same token, the control system approach is a useful abstraction for many observed phenomena, living or otherwise, not just agents. It does not lay claim to what an agent is, just what approach can be used to describe some agenty behaviors. I see absolutely no contradiction with what I said here or elsewhere.

You are making a claim about reality, that counterfactuals don't exist, even though you are also making a meta-claim that you don't make claims about reality.

If probabilistic agents[*] and counterfactuals are both useful models (and I don't see how you can consistently assert the former and deny the latter), then counterfactuals "exist" by your lights.

[*] Or automaton, if you prefer. If someone builds a software gizmo that is probabilistic and acts without specific instruction, then it is an agent and an automaton at the same time.

↑ comment by TAG · 2018-07-30T11:18:31.889Z

But there is no “top-down causation” in physics that supports this view.

There is no full strength top-down determinism, but systems-level behaviour is enough to support a common-sense view of decision making.

↑ comment by Shmi (shminux) · 2018-07-30T14:58:56.592Z

I agree, the apparent emergent high-level structures look awfully like agents. That intentional stance tends to dissipate once we understand them more.

↑ comment by TAG · 2018-07-31T11:18:20.094Z

If intentionality just means seeking to pursue or maximise some goal, there is no reason an artificial system should not have it. But the answer is different if intentionality means having a ghost or homunculus inside. And neither is the same as the issue of whether an agent is deterministic, or capable of changing the future.

More precision is needed.

↑ comment by jessicata (jessica.liu.taylor) · 2018-07-31T03:39:05.355Z

Even when the agent has more compute than we do? I continue to take the intentional stance towards agents I understand but can't compute, like MCTS-based chess players.

↑ comment by Shmi (shminux) · 2018-07-31T06:47:06.655Z

What do you mean by taking the intentional stance in this case?

↑ comment by jessicata (jessica.liu.taylor) · 2018-07-31T09:30:09.615Z

I would model the program as a thing that is optimizing for a goal. While I might know something about the program's weaknesses, I primarily model it as a thing that selects good chess moves. Especially if it is a better chess player than I am.

comment by Heighn · 2022-06-15T12:30:44.354Z

Great post overall, you're making interesting points!

Couple of comments:

There are 8 possible worlds here, with different utilities and probabilities

- your utility for "To smoke" and "No lesion, no cancer" should be 1,000,000 instead of 0

- your utility for "Not to smoke" and "No lesion, no cancer" should be 1,000,000 instead of 0

Some decision theorists tend to get confused over this because they think of this magical thing they call "causality," the qualia of your decisions being yours and free, causing the world to change upon your metaphysical command. They draw fancy causal graphs like this one:

That seems like an unfair criticism of the FDT paper. Drawing such a diagram doesn't imply one believes causality to be magic any more than making your table of possible worlds.

Specifically, the diagrams in the FDT paper don't say decisions are "yours and free", at least if I understand you correctly. Your decisions are caused by your decision algorithm, which in some situations is implemented in other agents as well.

comment by Gordon Seidoh Worley (gworley) · 2018-08-08T20:39:16.926Z

This seems to cut through a lot of confusion present in decision theory, so I guess the obvious question to ask is why don't we already work things this way instead of the way they are normally approached in decision theory?

↑ comment by jessicata (jessica.liu.taylor) · 2018-08-08T22:41:05.106Z

To the extent that this approach is a decision theory, it is some variant of UDT (see this explanation). The problems with applying and formalizing it are the usual problems with applying and formalizing UDT:

- How do you construct "policy counterfactuals", e.g. worlds where "I am the type of person who one-boxes" and "I am the type of person who two-boxes"? (This isn't a problem if the environment is already specified as a function from the agent's policy to outcome, but that often isn't how things work in the real world)

- How do you integrate this with logical uncertainty, such that you can e.g. construct "possible worlds" where the 1000th digit of pi is 2 (when in fact it isn't)? If you don't do this then you get wrong answers on versions of these problems that use logical pseudorandomness rather than physical randomness.

- How does this behave in multi-agent problems, with other versions of itself that have different utility functions? Naively both agents would try to diagonalize against each other, and an infinite loop would result.
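When the environment is already specified as a function from policy to outcome, as the first bullet allows, enumerating "policy worlds" is the easy part; the open problem is producing that function from a real-world description. A minimal Python sketch of the easy case, assuming a perfect predictor and the usual Newcomb amounts ($1,000,000 in the opaque box, $1,000 in the transparent one, which this thread does not restate). `newcomb` and `enumerate_policy_worlds` are hypothetical names.

```python
from typing import Callable, Dict, Iterable

ONE_BOX = "one-box"
TWO_BOX = "two-box"

def newcomb(policy: str) -> int:
    """Payoff for an agent whose policy a perfect predictor has already read (assumed)."""
    opaque_box = 1_000_000 if policy == ONE_BOX else 0  # filled iff one-boxing is predicted
    transparent_box = 1_000
    return opaque_box if policy == ONE_BOX else opaque_box + transparent_box

def enumerate_policy_worlds(env: Callable[[str], int],
                            policies: Iterable[str]) -> Dict[str, int]:
    """One possible world per policy: 'I am the type who does X' -> resulting payoff."""
    return {policy: env(policy) for policy in policies}

worlds = enumerate_policy_worlds(newcomb, [ONE_BOX, TWO_BOX])
print(worlds)                       # {'one-box': 1000000, 'two-box': 1000}
print(max(worlds, key=worlds.get))  # one-box
```

All the difficulty the bullet points at is hidden inside `newcomb`, which the sketch simply takes as given.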

↑ comment by Shmi (shminux) · 2018-08-12T02:41:03.881Z

Those are excellent questions! Thank you for actually asking them, instead of simply stating something like "What you wrote is wrong because..."

Let me try to have a crack at them, without claiming that "I have solved decision theory, everyone can go home now!"

How do you construct "policy counterfactuals", e.g. worlds where "I am the type of person who one-boxes" and "I am the type of person who two-boxes"? (This isn't a problem if the environment is already specified as a function from the agent's policy to outcome, but that often isn't how things work in the real world)

"I am a one-boxer" and "I am a two-boxer" are both possible worlds, and by watching yourself work through the problem you learn in which world you live. Maybe I misunderstand what you are saying though.

How do you integrate this with logical uncertainty, such that you can e.g. construct "possible worlds" where the 1000th digit of pi is 2 (when in fact it isn't)? If you don't do this then you get wrong answers on versions of these problems that use logical pseudorandomness rather than physical randomness.

As of this moment, both are possible worlds for me. If I were to look up or calculate the 1000th digit of Pi, I would learn a bit more about the world I am in. Not including the lower-probability worlds like having calculated the result wrongly and so on. Or I might choose not to look it up, and both worlds would remain possible until and unless I gain, intentionally or accidentally (there is no difference, intentions and accidents are not a physical thing, but a human abstraction at the level of intentional stance), some knowledge about the burning question of the 1000th digit of Pi.
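Calculating the digit is an ordinary computation, and running it is one way of finding out which of the two worlds you are in. A sketch using Machin's formula, pi/4 = 4 arctan(1/5) - arctan(1/239), in exact integer arithmetic; `arccot` and `pi_digits` are illustrative names, and the 10 guard digits are an assumption chosen to absorb the truncation error of the series at this length.

```python
def arccot(x: int, unity: int) -> int:
    """Fixed-point arctan(1/x), scaled by `unity`, from its alternating Taylor series."""
    total = term = unity // x
    x_squared = x * x
    n, sign = 3, -1
    while term:
        term //= x_squared          # next odd power of 1/x
        total += sign * (term // n)
        n, sign = n + 2, -sign
    return total

def pi_digits(n_digits: int, guard: int = 10) -> str:
    """'3' followed by the first n_digits decimals of pi (Machin's formula)."""
    unity = 10 ** (n_digits + guard)
    pi_scaled = 4 * (4 * arccot(5, unity) - arccot(239, unity))
    return str(pi_scaled // 10 ** guard)  # drop the guard digits

digits = pi_digits(1000)
digit_1000 = int(digits[1000])  # index 0 is the leading '3', index k is the k-th decimal
world = "odd" if digit_1000 % 2 else "even"
print(digit_1000, world)
```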

Can you give an example of a problem "that uses logical pseudorandomness" where simply enumerating worlds would give a wrong answer?

How does this behave in multi-agent problems, with other versions of itself that have different utility functions? Naively both agents would try to diagonalize against each other, and an infinite loop would result.

I am not sure in what way an agent that has a different utility function is at all yourself. An example would be good. My guess is that you might be referring to a Nash equilibrium that is a mixed strategy, but maybe I am wrong.

↑ comment by jessicata (jessica.liu.taylor) · 2018-08-12T07:11:34.898Z

“I am a one-boxer” and “I am a two-boxer” are both possible worlds, and by watching yourself work through the problem you learn in which world you live. Maybe I misunderstand what you are saying though.

The interesting formal question here is: given a description of the world you are in (like the descriptions in this post), how do you enumerate the possible worlds? A solution to this problem would be very useful for decision theory.

If an agent knows its source code, then "I am a one-boxer" and "I am a two-boxer" could be taken to refer to currently-unknown logical facts about what its source code outputs. You could be proposing a decision theory whereby the agent uses some method for reasoning about logical uncertainty (such as enumerating logical worlds), and selects the action such that its expected utility is highest conditional on the event that its source code outputs this action. (I am not actually sure exactly what you are proposing, this is just a guess).

If the logical uncertainty is represented by a logical inductor, then this decision theory is called "LIEDT" (logical inductor EDT) at MIRI, and it has a few problems, as explained in this post. First, logical inductors have undefined behavior when conditioning on very rare events (this is similar to the cosmic ray problem). Second, it isn't updateless in the right way (see the reply to the next point for more on this problem).
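The proposal guessed at above can be sketched over a finite list of enumerated worlds, with plain probabilities standing in for a logical inductor (a large simplification). The world list and its numbers are hypothetical: a Newcomb predictor assumed to be 99% accurate. The `None` branch is a crude analogue of the first problem mentioned: conditioning on an action the agent assigns zero probability is simply undefined.

```python
from fractions import Fraction
from typing import List, Optional, Tuple

# Hypothetical enumerated worlds: (probability, action my source code outputs, utility).
World = Tuple[Fraction, str, int]
WORLDS: List[World] = [
    (Fraction(495, 1000), "one-box", 1_000_000),  # predictor right
    (Fraction(5, 1000), "one-box", 0),            # predictor wrong
    (Fraction(495, 1000), "two-box", 1_000),      # predictor right
    (Fraction(5, 1000), "two-box", 1_001_000),    # predictor wrong
]

def conditional_eu(worlds: List[World], action: str) -> Optional[Fraction]:
    """E[utility | my source code outputs `action`]; None if that event has probability 0."""
    mass = sum(p for p, a, _ in worlds if a == action)
    if mass == 0:
        return None  # conditioning on an impossible action is undefined
    return sum(p * u for p, a, u in worlds if a == action) / mass

def choose(worlds: List[World], actions: List[str]) -> str:
    """Pick the action with the highest defined conditional expected utility."""
    scores = {a: conditional_eu(worlds, a) for a in actions}
    defined = {a: s for a, s in scores.items() if s is not None}
    return max(defined, key=defined.get)

print(conditional_eu(WORLDS, "one-box"))  # 990000
print(conditional_eu(WORLDS, "two-box"))  # 11000
print(conditional_eu(WORLDS, "refuse"))   # None
print(choose(WORLDS, ["one-box", "two-box", "refuse"]))  # one-box
```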

I'm not claiming that it's impossible to solve the problems by world-enumeration, just that formally specifying the world-enumeration procedure is an open problem.

Can you give an example of a problem “that uses logical pseudorandomness” where simply enumerating worlds would give a wrong answer?

Say you're being counterfactually mugged based on the 1000th digit of pi. Omega, before knowing the 1000th digit of pi, predicts whether you would pay up if the 1000th digit of pi is odd (note: it's actually even), and rewards you if the digit is even. You now know that the digit is odd and are considering paying up.

Since you know the 1000th digit, you know the world where the 1000th digit of pi is even is impossible. A dumber version of you could consider the 1000th digit of pi to be uncertain, but does this dumber version of you have enough computational ability to analyze the problem properly and come to the right answer? How does this dumber version reason correctly about the problem while never finding out the value of the 1000th digit of pi? Again, I'm not claiming this is impossible, just that it's an open problem.

I am not sure in what way an agent that has a different utility function is at all yourself. An example would be good.

Consider the following normal-form game. Each of 2 players selects an action, 0 or 1. Call their actions x1 and x2. Now player 1 gets utility 9*x2-x1, and player 2 gets utility 10*x1-x2. (This is an asymmetric variant of prisoner's dilemma; I'm making it asymmetric on purpose to avoid a trivial solution)

Call your decision theory WEDT ("world-enumeration decision theory"). What happens when two WEDT agents play this game with each other? They have different utility functions but the same decision theory. If both try to enumerate worlds, then they end up in an infinite loop (player 1 is thinking about what happens if they select action 0, which requires simulating player 2, but that causes player 2 to think about what happens if they select action 0, which requires simulating player 1, etc).
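The regress can be reproduced with a deliberately naive procedure in which each player chooses by first simulating the other, who does the same. A sketch, not a proposal: `naive_choice` is hypothetical, and its `budget` parameter stands in for the finite stack or time a real implementation would run out of.

```python
from typing import Optional

def utility(player: int, x1: int, x2: int) -> int:
    """Payoffs of the game above: player 1 gets 9*x2 - x1, player 2 gets 10*x1 - x2."""
    return 9 * x2 - x1 if player == 1 else 10 * x1 - x2

def naive_choice(player: int, depth: int = 0, budget: int = 100) -> Optional[int]:
    """Choose 0 or 1 by first simulating the other player, who runs this same procedure.

    Returns None if the chain of nested simulations exceeds `budget` levels,
    which is what happens for every finite budget.
    """
    if depth >= budget:
        return None
    other = 2 if player == 1 else 1
    predicted = naive_choice(other, depth + 1, budget)  # simulate the opponent...
    if predicted is None:
        return None  # ...whose simulation of us never finished either

    def payoff(action: int) -> int:
        if player == 1:
            return utility(1, action, predicted)
        return utility(2, predicted, action)

    return max((0, 1), key=payoff)

print(naive_choice(1))  # None
```

Raising `budget` only delays the failure; no depth is ever deep enough.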

↑ comment by Shmi (shminux) · 2018-08-15T04:42:36.759Z

Thank you for your patience explaining the current leading edge and answering my questions! Let me try to see if my understanding of what you are saying makes sense.

If an agent knows its source code, then "I am a one-boxer" and "I am a two-boxer" could be taken to refer to currently-unknown logical facts about what its source code outputs.

By "source code" I assume you mean the algorithm that completely determines the agent's actions for a known set of inputs, though maybe calculating these actions is expensive, hence some of them could be "currently unknown" until the algorithm is either analyzed or simulated.

Let me try to address your points in the reverse order.

Consider the following normal-form game. Each of 2 players selects an action, 0 or 1. Call their actions x1 and x2. Now player 1 gets utility 9*x2-x1, and player 2 gets utility 10*x1-x2.

...

If both try to enumerate worlds, then they end up in an infinite loop

Enumerating does not require simulating. It is descriptive, not prescriptive. So there are 4 possible worlds, 00, 01, 10 and 11, with rewards for player 1 being 0, 9, -1, 8, and for player 2 being 0, -1, 10, 9. But to assign prior probabilities to these worlds, we need to discover more about the players. For pure strategy players some of these worlds will be probability 1 and others 0. For mixed strategy players things get slightly more interesting, since the worlds are parameterized by probability:

Let's suppose that player 1 picks action 0 with probability p and action 1 with probability 1-p, and player 2 picks them with probabilities q and 1-q. Then the probabilities of the worlds 00, 01, 10 and 11 are pq, p(1-q), (1-p)q and (1-p)(1-q). The probability-weighted utilities of these worlds are, for player 1: 0, 9p(1-q), -(1-p)q, 8(1-p)(1-q), and for player 2: 0, -p(1-q), 10(1-p)q, 9(1-p)(1-q). Out of the infinitely many possible worlds there will be one with the Nash equilibrium, where each player is indifferent to which decision the other player ends up making. This is, again, purely descriptive. By learning more about what strategy the agents use, we can evaluate the expected utility for each one, and, after the game is played, whether once or repeatedly, learn more about the world the players live in. The question you posed
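The enumeration above can be checked mechanically. A minimal sketch, taking p and q as the probabilities that players 1 and 2 play action 0 and assuming the two choices are independent; the helper names are ours. The final check makes explicit one property of these particular payoffs: whatever the other player does, playing 1 costs its chooser exactly 1 unit of utility, so action 0 strictly dominates for both players and the Nash-equilibrium world is the pure one, p = q = 1, i.e. (0, 0).

```python
from itertools import product
from typing import Dict, Tuple

World = Tuple[int, int]  # (x1, x2)

def u1(x1: int, x2: int) -> int:
    return 9 * x2 - x1    # player 1's utility

def u2(x1: int, x2: int) -> int:
    return 10 * x1 - x2   # player 2's utility

# The four pure worlds and their (player 1, player 2) rewards.
worlds: Dict[World, Tuple[int, int]] = {
    (x1, x2): (u1(x1, x2), u2(x1, x2)) for x1, x2 in product((0, 1), repeat=2)
}
print(worlds)  # {(0, 0): (0, 0), (0, 1): (9, -1), (1, 0): (-1, 10), (1, 1): (8, 9)}

def world_probs(p: float, q: float) -> Dict[World, float]:
    """p = P(player 1 plays 0), q = P(player 2 plays 0); choices independent."""
    m1 = {0: p, 1: 1 - p}
    m2 = {0: q, 1: 1 - q}
    return {(x1, x2): m1[x1] * m2[x2] for x1, x2 in worlds}

def expected_utilities(p: float, q: float) -> Tuple[float, float]:
    """Sum of the probability-weighted utilities over the four worlds."""
    probs = world_probs(p, q)
    eu1 = sum(probs[w] * worlds[w][0] for w in worlds)
    eu2 = sum(probs[w] * worlds[w][1] for w in worlds)
    return eu1, eu2

print(expected_utilities(0.5, 0.5))  # (4.0, 4.5)

# Whatever the other player does, action 0 beats action 1 by exactly 1 for each player.
for other in (0, 1):
    assert u1(0, other) - u1(1, other) == 1
    assert u2(other, 0) - u2(other, 1) == 1
```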

What happens when two WEDT agents play this game with each other?

is in tension with the whole idea of agents not being able to affect the world, only being able to learn about the world they live in. There is no such thing as a WEDT agent. If one of the players is the type that does the analysis and picks the mixed strategy with the Nash equilibrium, they maximize their expected utility, regardless of what type of agent the other player is.

About counterfactual mugging:

Say you're being counterfactually mugged based on the 1000th digit of pi. Omega, before knowing the 1000th digit of pi, predicts whether you would pay up if the 1000th digit of pi is odd (note: it's actually even), and rewards you if the digit is even. You now know that the digit is odd and are considering paying up.

Since you know the 1000th digit, you know the world where the 1000th digit of pi is even is impossible.

I am missing something... The whole setup is unclear. Counterfactual mugging is a trivial problem in terms of world enumeration, an agent who does not pay lives in the world where she has higher utility. It does not matter what Omega says or does, or what the 1000th digit of pi is.

You could be proposing a decision theory whereby the agent uses some method for reasoning about logical uncertainty (such as enumerating logical worlds), and selects the action such that its expected utility is highest conditional on the event that its source code outputs this action. (I am not actually sure exactly what you are proposing, this is just a guess).

Maybe this is where the inferential gap lies? I am not proposing a decision theory. Ability to make decisions requires freedom of choice, magically affecting the world through unphysical top-down causation. I am simply observing which of the many possible worlds has what utility for a given observer.

↑ comment by jessicata (jessica.liu.taylor) · 2018-08-15T05:08:05.276Z

OK, I misinterpreted you as recommending a way of making decisions. It seems that we are interested in different problems (as I am trying to find algorithms for making decisions that have good performance in a variety of possible problems).

Re top down causation: I am curious what you think of a view where there are both high and low level descriptions that can be true at the same time, and have their own parallel causalities that are consistent with each other. Say that at the low level, the state type is $L$ and the transition function is $t_l : L \to L$. At the high level, the state type is $H$ and the nondeterministic transition function is $t_h : H \to \mathrm{Set}(H)$, i.e. at a high level sometimes you don't know what state things will end up in. Say we have some function $f : L \to H$ for mapping low-level states to high-level states, so each low-level state corresponds to a single high-level state, but a single high-level state may correspond to multiple low-level states.

Given these definitions, we could say that the high and low level ontologies are compatible if, for each low level state $l \in L$, it is the case that $f(t_l(l)) \in t_h(f(l))$, i.e. the high-level ontology's prediction for the next high-level state is consistent with the predicted next high-level state according to the low-level ontology and $f$.

Causation here is parallel and symmetrical rather than top-down: both the high level and the low level obey causal laws, and there is no causation from the high level to the low level. In cases where things can be made consistent like this, I'm pretty comfortable saying that the high-level states are "real" in an important sense, and that high-level states can have other high-level states as a cause.
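The compatibility condition can be checked directly on a finite toy model. A sketch under hypothetical choices: eight microstates on a ring with deterministic dynamics, a coarse-graining `f` (our name for the low-to-high map) that lumps neighbouring pairs into four macrostates, and three candidate high-level transition maps. Each microstate has exactly one successor, but the macrostate alone does not say whether the next step stays in the same macrostate, which is why `t_h` returns a set; only the "stay or advance by one" map passes.

```python
from typing import Callable, Iterable, Set

def compatible(low_states: Iterable[int],
               t_l: Callable[[int], int],
               t_h: Callable[[int], Set[int]],
               f: Callable[[int], int]) -> bool:
    """True iff f(t_l(l)) is in t_h(f(l)) for every low-level state l."""
    return all(f(t_l(l)) in t_h(f(l)) for l in low_states)

# Hypothetical toy model: 8 microstates on a ring, lumped in pairs into 4 macrostates.
L = range(8)

def t_l(l: int) -> int:
    return (l + 1) % 8           # deterministic low-level step

def f(l: int) -> int:
    return l // 2                # macrostate of microstate l: 0..3

def t_h_step(h: int) -> Set[int]:
    return {h, (h + 1) % 4}      # "stay, or move to the next macrostate"

def t_h_sticky(h: int) -> Set[int]:
    return {h}                   # claims the macrostate never changes

def t_h_backward(h: int) -> Set[int]:
    return {h, (h - 1) % 4}      # allows only the wrong direction of motion

print(compatible(L, t_l, t_h_step, f))      # True
print(compatible(L, t_l, t_h_sticky, f))    # False (fails at l = 1)
print(compatible(L, t_l, t_h_backward, f))  # False
```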

EDIT: regarding more minor points: Thanks for the explanation of the multi-agent games; that makes sense although in this case the enumerated worlds are fairly low-fidelity, and making them higher-fidelity might lead to infinite loops. In counterfactual mugging, you have to be able to enumerate both the world where the 1000th digit of pi is even and where the 1000th digit of pi is odd, and if you are doing logical inference on each of these worlds then that might be hard; consider the difficulty of imagining a possible world where 1+1=3.

↑ comment by Shmi (shminux) · 2018-08-16T05:36:48.172Z

OK, I misinterpreted you as recommending a way of making decisions. It seems that we are interested in different problems (as I am trying to find algorithms for making decisions that have good performance in a variety of possible problems).

Right. I would also be interested in the algorithms for making decisions if I believed we were agents with free will, freedom of choice, ability to affect the world (in the model where the world is external reality) and so on.

what you think of a view where there are both high and low level descriptions that can be true at the same time, and have their own parallel causalities that are consistent with each other.

Absolutely, once you replace "true" with "useful" :) We can have multiple models at different levels that make accurate predictions of future observations. I assume that in your notation $t_l : L \to L$ is an endomorphism within a set of microstates $L$, and $t_h : H \to \mathrm{Set}(H)$ is a map from a macrostate type $H$ (what would be an example of this state type?) to a wider set of macrostates (like what?). I am guessing that this may match up with the standard definitions of microstates and macrostates in statistical mechanics, and possibly some kind of a statistical ensemble? Anyway, your statement is one of emergence: the evolution of microstates maps into an evolution of macrostates, sort of like the laws of statistical mechanics map into the laws of thermodynamics. In physics this is known as an effective theory. If so, I have no issue with that. Certainly one can call, say, gas compression by an external force a cause of the gas absorbing mechanical energy and heating up. In the same sense, one can talk about emergent laws of human behavior, where a decision by an agent is a cause of change in the world the agent inhabits. So, a decision theory is an emergent effective theory where we don't try to go to the level of states $L$, be those at the level of single neurons, neuronal electrochemistry, ion channels opening and closing according to some quantum chemistry and atomic physics, or even lower. This seems to be a flavor of compatibilism.

What I have an issue with is the apparent break of the L→H mapping when one postulates top-down causation, like free choice, i.e. multiple different H's reachable from the same microstate.

in this case the enumerated worlds are fairly low-fidelity

I am confused about the low/high-fidelity. In what way is what I suggested low-fidelity? What is missing from the picture?

consider the difficulty of imagining a possible world where 1+1=3.

Why would it be difficult? A possible world is about the observer's mental model, and most models do not map neatly into any L or H that matches known physical laws. Most magical thinking is like that (e.g. faith, OCD, free will).

↑ comment by jessicata (jessica.liu.taylor) · 2018-08-16T07:52:29.167Z

I would also be interested in the algorithms for making decisions if I believed we were agents with free will, freedom of choice, ability to affect the world (in the model where the world is external reality) and so on.

My guess is that you, in practice, actually are interested in finding decision-relevant information and relevant advice, in everyday decisions that you make. I could be wrong but that seems really unlikely.

Re microstates/macrostates: it seems like we mostly agree about microstates/macrostates. I do think that any particular microstate can only lead to one macrostate.

I am confused about the low/high-fidelity.

By "low-fidelity" I mean the description of each possible world doesn't contain a complete description of the possible worlds that the other agent enumerates. (This actually has to be the case in single-person problems too, otherwise each possible world would have to contain a description of every other possible world)

Why would it be difficult?

An issue with imagining a possible world where 1+1=3 is that it's not clear in what order to make logical inferences. If you make a certain sequence of logical inferences with the axiom 1+1=3, then you get 2=1+1=3; if you make a different sequence of inferences, then you get 2=1+1=(1+1-1)+(1+1-1)=(3-1)+(3-1)=4. (It seems pretty likely to me that, for this reason, logic is not the right setting in which to formalize logically impossible counterfactuals, and taking counterfactuals on logical statements is confused in one way or another)
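The order-dependence is easy to reproduce with blind textual rewriting. A toy sketch, assuming the "axiom" is applied by replacing every occurrence of `1+1` with `3` before evaluating; `with_axiom` is a hypothetical helper, and Python's `eval` on these fixed literals stands in for ordinary arithmetic. Both inputs equal 2 without the axiom, yet they come out as 3 and 4 with it.

```python
def with_axiom(expr: str) -> int:
    """Evaluate expr after rewriting every occurrence of "1+1" to "3" (the toy axiom).

    eval is applied only to the fixed arithmetic strings below, never to untrusted input.
    """
    return eval(expr.replace("1+1", "3"))

direct = "1+1"
expanded = "(1+1-1)+(1+1-1)"  # each 1 first rewritten as 1+1-1

# Under ordinary arithmetic both expressions equal 2...
assert eval(direct) == eval(expanded) == 2

# ...but the same "axiom" applied to the two spellings gives different answers.
print(with_axiom(direct))    # 3
print(with_axiom(expanded))  # 4
```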

If we fix a particular mental model of this world, then we can answer questions about this model; part of the decision theory problem is deciding what the mental model of this world should be, and that is pretty unclear.

↑ comment by Shmi (shminux) · 2018-08-18T03:32:49.944Z

My guess is that you, in practice, actually are interested in finding decision-relevant information and relevant advice, in everyday decisions that you make. I could be wrong but that seems really unlikely.

Yes, of course I am, I cannot help it. But just because we do something doesn't mean we have the free will to either do or not do it.

I do think that any particular microstate can only lead to one macrostate.

Right, I cannot imagine it being otherwise, and that is where my beef with "agents have freedom of choice" is.

An issue with imagining a possible world where 1+1=3 is that it's not clear in what order to make logical inferences. If you make a certain sequence of logical inferences with the axiom 1+1=3, then you get 2=1+1=3; if you make a different sequence of inferences, then you get 2=1+1=(1+1-1)+(1+1-1)=(3-1)+(3-1)=4.

Since possible worlds are in the observer's mind (obviously, since math is a mental construction to begin with, no matter how much people keep arguing about whether mathematical laws are invented or discovered), different people may make suboptimal inferences in different places. We call those "mistakes". Most times people don't explicitly use axioms, though sometimes they do. Some axioms are more useful than others, of course. Starting with 1+1=3 in addition to the usual remaining set, we can prove that all numbers are equal. Or maybe we end up with a mathematical model where adding odd numbers only leads to odd numbers. In that sense, not knowing more about the world, we are indeed in a "low-fidelity" situation, with many possible (micro-)worlds where 1+1=3 is an axiom. Some of these worlds might even have a useful description of observations (imagine, for example, one where each couple requires a chaperone: there 1+1 is literally 3).

↑ comment by TAG · 2018-08-16T09:32:17.294Z

If we fix a particular mental model of this world, then we can answer questions about this model; part of the decision theory problem is deciding what the mental model of this world should be, and that is pretty unclear.

In other words, usefulness (which DT to use) depends on truth (which world model to use).

↑ comment by TAG · 2018-08-16T06:56:21.524Z

What I have an issue with is the apparent break of the L→H mapping when one postulates top-down causation, like free choice, i.e. multiple different H’s reachable from the same microstate.

If there is indeterminism at the micro level, there is not the slightest doubt that it can be amplified to the macro level, because quantum mechanics as an experimental science depends on the ability to make macroscopic records of events involving single particles.

Replies from: shminux↑ comment by Shmi (shminux) · 2018-08-18T03:36:35.236Z · LW(p) · GW(p)

Amplifying microscopic indeterminism is definitely a thing. It doesn't help the free choice argument though, since the observer is not the one making the choice, the underlying quantum mechanics does.

↑ comment by TAG · 2018-08-23T11:18:00.665Z

Macroscopic indeterminism is sufficient to establish real, not merely logical, counterfactuals.

Besides that, it would be helpful to separate the ideas of dualism, agency and free choice. If the person making the decision is not some ghost in the machine, then the only thing they can be is the machine, as a total system. In that case, the question becomes whether the system as a whole can choose, could have chosen otherwise, etc.

But you're in good company: Sam Harris is similarly confused.

↑ comment by Shmi (shminux) · 2018-08-24T01:10:29.284Z

But you're in good company: Sam Harris is similarly confused.

Not condescending in the least :P

There are no "real" counterfactuals, only the models in the observer's mind, some of which eventually prove to reflect observations better than others.

It would be helpful to separate the ideas of dualism, agency and free choice. If the person making the decision is not some ghost in the machine, then the only thing they can be is the machine, as a total system. In that case, the question becomes whether the system as a whole can choose, could have chosen otherwise, etc.

It would be helpful, yes, if they were separable. Free choice as anything other than illusionism is tantamount to dualism.

↑ comment by TAG · 2018-08-24T11:19:21.841Z

There are no “real” counterfactuals, only the models in the observer’s mind, some of which eventually prove to reflect observations better than others.

You need to argue for that claim, not just state it. The contrary claim is supported by a simple argument: if an event is indeterministic, it need not have happened, or need not have happened that way. Therefore, there is a real possibility that it did not happen, or happened differently -- and that is a real counterfactual.

It would be helpful, yes, if they were separable. Free choice as anything other than illusionism is tantamount to dualism.

You need to argue for that claim as well.

↑ comment by Shmi (shminux) · 2018-08-25T06:31:16.518Z

if an event is indeterministic, it need not have happened, or need not have happened that way

There is no such thing as "need" in Physics. There are physical laws, deterministic or probabilistic, and that's it. "Need" is a human concept that has no physical counterpart. Your "simple argument" is an emotional reaction.

↑ comment by TAG · 2018-08-25T13:25:00.633Z

Your comment has no relevance, because probabilistic laws automatically imply counterfactuals as well. In fact it's just another way of saying the same thing. I could have shown it in modal logic, too.

↑ comment by Shmi (shminux) · 2018-08-25T18:01:58.883Z

Your comment has no relevance,

Well, we have reached an impasse. Goodbye.

↑ comment by Shmi (shminux) · 2018-08-12T02:42:03.895Z

Thank you, I am glad that I am not the only one for whom causation-free approach to decision theory makes sense. UDT seems a bit like that.

comment by Dacyn · 2018-07-28T23:35:13.163Z

I note here that simply enumerating possible worlds evades this problem as far as I can tell.

The analogous unfair decision problem would be "punish the agent if they simply enumerate possible worlds and then choose the action that maximizes their expected payout". Not calling something a decision theory doesn't mean it isn't one.

↑ comment by Shmi (shminux) · 2018-07-29T02:34:19.609Z

Please propose a mechanism by which you can make an agent who enumerates the worlds seen as possible by every agent, no matter what their decision theory is, end up in a world with lower utility than some other agent.

↑ comment by dxu · 2018-07-29T04:15:22.217Z

Say you have an agent A who follows the world-enumerating algorithm outlined in the post. Omega makes a perfect copy of A and presents the copy with a red button and a blue button, while telling it the following:

"I have predicted in advance which button A will push. (Here is a description of A; you are welcome to peruse it for as long as you like.) If you press the same button as I predicted A would push, you receive nothing; if you push the other button, I will give you $1,000,000. Refusing to push either button is not an option; if I predict that you do not intend to push a button, I will torture you for 3^^^3 years."

The copy's choice of button is then noted, after which the copy is terminated. Omega then presents the real agent facing the problem with the exact same scenario as the one faced by the copy.

Your world-enumerating agent A will always fail to obtain the maximum $1,000,000 reward accessible in this problem. However, a simple agent B who chooses randomly between the red and blue buttons has a 50% chance of obtaining this reward, for an expected utility of $500,000. Therefore, A ends up in a world with lower expected utility than B.

Q.E.D.
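A minimal sketch of the payoff arithmetic above, assuming A is deterministic (so Omega's perfect prediction of A is simply whatever A does) and that any agent placed in A's position is paid $1,000,000 iff its button differs from Omega's prediction of A. The helpers `payout` and `expected_payout` are hypothetical names, and the refuse-to-push/torture branch is left out. Expected values are computed exactly by enumerating choices rather than by sampling.

```python
from fractions import Fraction

BUTTONS = ("red", "blue")
PRIZE = 1_000_000


def payout(button, predicted_a):
    """Pay PRIZE iff the pressed button differs from Omega's prediction of A."""
    return PRIZE if button != predicted_a else 0


def expected_payout(policy, predicted_a):
    """`policy` maps each button to the probability of pressing it."""
    return sum(p * payout(b, predicted_a) for b, p in policy.items())


# A is deterministic and Omega predicts it perfectly, so whichever button
# A settles on is exactly the button Omega predicted.
for a_choice in BUTTONS:
    policy_a = {a_choice: Fraction(1)}
    policy_b = {b: Fraction(1, len(BUTTONS)) for b in BUTTONS}  # fair coin
    print(a_choice, expected_payout(policy_a, a_choice),
          expected_payout(policy_b, a_choice))
# red 0 500000
# blue 0 500000
```

Whichever button A settles on, A's expected payout is 0 and the coin-flipping B's is $500,000, matching the figures in the comment.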

↑ comment by Said Achmiz (SaidAchmiz) · 2018-07-29T08:09:04.052Z

Your scenario is somewhat ambiguous, but let me attempt to answer all versions of it that I can see.

First: does the copy of A (hereafter, A′) know that it’s a copy?

If yes, then the winning strategy is “red if I am A, blue if I am A′”. (Or the reverse, of course; but whichever variant A selects, we can be sure that A′ selects the same one, being a perfect copy and all.)

If no, then indeed A receives nothing, but then of course this has nothing to do with any copies; it is simply the same scenario as if Omega predicted A’s choice, then gave A the money if A chose differently than predicted—which is, of course, impossible (Omega is a perfect predictor), and thus this, in turn, is the same as “Omega shows up, doesn’t give A any money, and leaves”.

Or is it? You claim that in the scenario where Omega gives the money iff A chooses otherwise than predicted, A could receive the money with 50% probability by choosing randomly. But this requires us to reassess the terms of the “Omega, a perfect predictor” stipulation, as previously discussed by cousin_it. In any case, until we’ve specified just what kind of predictor Omega is, and how its predictive powers interact with sources of (pseudo-)randomness—as well as whether, and how, Omega’s behavior changes in situations involving randomness—we cannot evaluate scenarios such as the one you describe.

↑ comment by Dacyn · 2018-07-29T17:15:41.677Z

dxu did not claim that A could receive the money with 50% probability by choosing randomly. They claimed that a simple agent B that chose randomly would receive the money with 50% probability. The point is that Omega is only trying to predict A, not B, so it doesn't matter how well Omega can predict B's actions.

The point can be made even more clear by introducing an agent C that just does the opposite of whatever A would do. Then C gets the money 100% of the time (unless A gets tortured, in which case C also gets tortured).
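Agent C can be sketched the same way, again assuming a deterministic A that Omega predicts perfectly and ignoring the refuse-to-push/torture branch. The function `agent_c` is a hypothetical stand-in for "work out what A does, then press the other button".

```python
BUTTONS = ("red", "blue")
PRIZE = 1_000_000


def payout(button, predicted_a):
    # Omega only ever predicts A; whoever presses the button is paid
    # iff it differs from that prediction.
    return PRIZE if button != predicted_a else 0


def agent_c(a_choice):
    """Hypothetical agent C: work out what A does, then press the other button."""
    return "blue" if a_choice == "red" else "red"


for a_choice in BUTTONS:
    predicted_a = a_choice  # perfect prediction of a deterministic A
    print(a_choice, payout(a_choice, predicted_a),
          payout(agent_c(a_choice), predicted_a))
# red 0 1000000
# blue 0 1000000
```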

↑ comment by Said Achmiz (SaidAchmiz) · 2018-07-29T17:58:10.877Z

This doesn’t make a whole lot of sense. Why, and on what basis, are agents B and C receiving any money?

Are you suggesting some sort of scenario where Omega gives A money iff A does the opposite of what Omega predicted A would do, and then also gives any other agent (such as B or C) money iff said other agent does the opposite of what Omega predicted A would do?

This is a strange scenario (it seems to be very different from the sort of scenario one usually encounters in such problems), but sure, let’s consider it. My question is: how is it different from “Omega doesn’t give A any money, ever (due to a deep-seated personal dislike of A). Other agents may, or may not, get money, depending on various factors (the details of which are moot)”?

This doesn’t seem to have much to do with decision theories. Maybe shminux ought to rephrase his challenge. After all—

Please propose a mechanism by which you can make an agent who enumerates the worlds seen as possible by every agent, no matter what their decision theory is, end up in a world with lower utility than some other agent.

… can be satisfied with “Omega punches A in the face, thus causing A to end up with lower utility than B, who remains un-punched”. What this tells us about decision theories, I can’t rightly see.

↑ comment by dxu · 2018-07-29T18:40:43.010Z

This is a strange scenario (it seems to be very different from the sort of scenario one usually encounters in such problems), but sure, let’s consider it. My question is: how is it different from “Omega doesn’t give A any money, ever (due to a deep-seated personal dislike of A). Other agents may, or may not, get money, depending on various factors (the details of which are moot)”?

This doesn’t seem to have much to do with decision theories.

Yes, this is correct, and is precisely the point EYNS was trying to make when they said

Intuitively, this problem is unfair to Fiona, and we should compare her performance to Carl’s not on the “act differently from Fiona” game, but on the analogous “act differently from Carl” game.

"Omega doesn't give A any money, ever (due to a deep-seated personal dislike of A)" is a scenario that does not depend on the decision theory A uses, and hence is an intuitively "unfair" scenario to examine; it tells us nothing about the quality of the decision theory A is using, and therefore is useless to decision theorists. (However, formalizing this intuitive notion of "fairness" is difficult, which is why EYNS brought it up in the paper.)

I'm not sure why shminux seems to think that his world-counting procedure manages to avoid this kind of "unfair" punishment; the whole point of it is that it is unfair, and hence unavoidable. There is no way for an agent to win if the problem setup is biased against them to start with, so I can only conclude that shminux misunderstood what EYNS was trying to say when he (shminux) wrote

I note here that simply enumerating possible worlds evades this problem as far as I can tell.

↑ comment by Said Achmiz (SaidAchmiz) · 2018-07-29T19:28:37.138Z

I didn’t read shminux’s post as suggesting that his scheme allows an agent to avoid, say, being punched in the face apropos of nothing. (And that’s what all the “unfair” scenarios described in the comments here boil down to!) I think we can all agree that “arbitrary face-punching by an adversary capable of punching us in the face” is not something we can avoid, no matter our decision theory, no matter how we make choices, etc.

↑ comment by Shmi (shminux) · 2018-07-29T18:42:19.637Z

can be satisfied with “Omega punches A in the face, thus causing A to end up with lower utility than B, who remains un-punched”.

It seems to be a good summary of what dxu and Dacyn were suggesting! I think it preserves the salient features without all the fluff of copying and destroying, or having multiple agents. Which makes it clear why the counterexample does not work: I said "the worlds seen as possible by every agent, no matter what their decision theory is," and the unpunched world is not a possible one for the world enumerator in this setup.

My point was that CDT makes a suboptimal decision in Newcomb, and FDT struggles to pick the best decision in some of the problems as well, because it is lost in the forest of causal trees, or at least this is my impression from the EYNS paper. Once you stop worrying about causality and the agent's ability to change the world by their actions, you end up with a simpler question: "what possible world does this agent live in and with what probability?"
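As a rough illustration of the "what possible world does this agent live in and with what probability?" question, here is a sketch of world enumeration applied to Newcomb's problem. It assumes the standard payoffs ($1,000,000 in the opaque box iff one-boxing was predicted, $1,000 in the transparent box) and a hypothetical `accuracy` parameter for the predictor; weighting worlds by that accuracy is an assumption of this sketch, not a procedure spelled out in the thread. With a perfect predictor, the worlds where prediction and action disagree get probability 0, i.e. they are not possible worlds at all.

```python
from fractions import Fraction

BIG, SMALL = 1_000_000, 1_000  # opaque-box and transparent-box amounts
ACTIONS = ("one-box", "two-box")


def worlds(accuracy):
    """Yield (action, prediction, probability) for every candidate world.
    `accuracy` is an assumed chance that the prediction matches the action."""
    for action in ACTIONS:
        for prediction in ACTIONS:
            p = accuracy if prediction == action else 1 - accuracy
            yield action, prediction, p


def utility(action, prediction):
    opaque = BIG if prediction == "one-box" else 0
    return opaque + (SMALL if action == "two-box" else 0)


def expected_utility(action, accuracy):
    """Probability-weighted utility over the worlds in which the agent takes `action`."""
    return sum(p * utility(a, pred)
               for a, pred, p in worlds(accuracy) if a == action)


def best_action(accuracy):
    return max(ACTIONS, key=lambda a: expected_utility(a, accuracy))


print(expected_utility("one-box", Fraction(1)),
      expected_utility("two-box", Fraction(1)))  # 1000000 1000
print(best_action(Fraction(99, 100)))  # one-box
```

Nothing in the sketch asks whether the choice causes the box contents; it only asks which worlds remain possible for each action and how much they are worth.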

↑ comment by philh · 2018-08-01T09:50:48.166Z

enumerates the worlds seen as possible by every agent, no matter what their decision theory is

Can you clarify this?

One interpretation is that you're talking about an agent who enumerates every world that any agent sees as possible. But your post further down seems to contradict this, "the unpunched world is not a possible one for the world enumerator". And it's not obvious to me that this agent can exist.

Another is that the agent enumerates only the worlds that every agent sees as possible, but that agent doesn't seem likely to get good results. And it's not obvious to me that there are guaranteed to be any worlds at all in this intersection.

Am I missing an interpretation?
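The two readings amount to taking the union or the intersection of the sets of worlds each agent considers possible. A toy example with made-up agents and world labels (all hypothetical) shows why the second reading can leave nothing to enumerate:

```python
# Hypothetical agents and the worlds each one considers possible.
seen_as_possible = {
    "agent_1": {"w1", "w2"},
    "agent_2": {"w2", "w3"},
    "agent_3": {"w3", "w4"},
}

# Reading 1: every world that *any* agent sees as possible.
union = set().union(*seen_as_possible.values())

# Reading 2: only the worlds that *every* agent sees as possible.
intersection = set.intersection(*seen_as_possible.values())

print(sorted(union))  # ['w1', 'w2', 'w3', 'w4']
print(intersection)   # set()
```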

comment by Said Achmiz (SaidAchmiz) · 2018-07-28T19:57:47.277Z

Great post!

I have a question, though, about the “adversarial predictor” section. My question is: how is world #3 possible? You say:

- Agent uses DT1 when rewarded for using DT1 and DT2 when rewarded for using DT2

However, the problem statement said:

Imagine I have a copy of Fiona, and I punish anyone who takes the same action as the copy.

Are we to suppose that the copy of Fiona that the adversarial predictor is running does not know that an adversarial predictor is punishing Fiona for taking certain actions, but that the actual-Fiona does know this, and can thus deviate from what she would otherwise do? If so, then what happens when this assumption is removed—i.e., when we do not inform Fiona that she is being watched (and possibly punished) by an adversarial predictor, or when we do inform copy-Fiona of same?

↑ comment by Shmi (shminux) · 2018-07-28T20:43:50.684Z

One would have to ask Eliezer and Nate what they really meant, since it is easy to end up in a self-contradictory setup or to ask a question about an impossible world, like asking what happens if in the Newcomb's setup the agent decided to switch to two-boxing after the perfect predictor had already put $1,000,000 in.

My wild guess is that the FDT Fiona from the paper uses a certain decision theory DT1 that does not cope well with the world with adversarial predictors. She uses some kind of causal decision graph logic that would lead her astray instead of being in the winning world. I also assume that Fiona makes her "decisions" while being fully informed about the predictor's intentions to punish her and just CDT-like throws her hands in the air and cries "unfair!"

comment by BurntVictory · 2018-07-30T02:46:49.878Z

Hey, noticed what might be errors in your lesion chart: No lesion, no cancer should give +1m utils in both cases. And your probabilities don't add to 1. Including p(lesion) explicitly doesn't meaningfully change the EV difference, so eh. However, my understanding is that the core of the lesion problem is recognizing that p(lesion) is independent of smoking; EYNS seems to say the same. Might be worth including it to make that clearer?

(I don't know much about decision theory, so maybe I'm just confused.)
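The two checks raised here (the outcome probabilities must add to 1, and p(lesion) must not depend on smoking) can be sketched as follows. All numbers are hypothetical and are not taken from the post's chart; the only structural assumptions are that the lesion, not smoking, raises the cancer risk, and that not getting cancer is worth +1m utils whether or not the agent has the lesion.

```python
from fractions import Fraction

# Hypothetical numbers for illustration; they are not the post's chart.
SMOKING_BONUS = 1_000        # utility of enjoying smoking
NO_CANCER = 1_000_000        # utility of not getting cancer, lesion or not
P_CANCER_GIVEN_LESION = Fraction(9, 10)
P_CANCER_GIVEN_NO_LESION = Fraction(1, 100)


def outcome_table(p_lesion, smokes):
    """Rows of (lesion, cancer, probability, utility).

    p_lesion is the same whether or not the agent smokes, and smoking
    does not change the cancer risk: only the lesion does.
    """
    rows = []
    for lesion, p_l in ((True, p_lesion), (False, 1 - p_lesion)):
        p_c = P_CANCER_GIVEN_LESION if lesion else P_CANCER_GIVEN_NO_LESION
        for cancer, p_branch in ((True, p_c), (False, 1 - p_c)):
            utility = (0 if cancer else NO_CANCER) + (SMOKING_BONUS if smokes else 0)
            rows.append((lesion, cancer, p_l * p_branch, utility))
    return rows


def expected_utility(p_lesion, smokes):
    rows = outcome_table(p_lesion, smokes)
    assert sum(p for _, _, p, _ in rows) == 1  # the probabilities must add to 1
    return sum(p * u for _, _, p, u in rows)


for p_lesion in (Fraction(1, 10), Fraction(1, 2)):
    gain = expected_utility(p_lesion, True) - expected_utility(p_lesion, False)
    print(p_lesion, gain)
# 1/10 1000
# 1/2 1000
```

Because p(lesion) is the same whichever action is taken, the expected-utility gap between smoking and abstaining equals the smoking bonus for any value of p(lesion).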

comment by Chris_Leong · 2018-07-29T13:52:10.973Z

Assuming that an agent who doesn't have the lesion gains no utility from smoking OR from having cancer changes the problem.

But apart from that, this post is pretty good at explaining how to approach these problems from the perspective of Timeless Decision Theory. It's worth reading about if you aren't familiar with it.

Also, it is generally agreed that in a deterministic world we don't really make decisions in the sense of libertarian free will. The question is then how to construct the counterfactuals for the decision problem. I agree with you that TDT is much more consistent, as its counterfactuals tend to describe actually consistent worlds.

comment by SMK (Sylvester Kollin) · 2022-10-18T09:14:22.343Z

From Arif Ahmed's Evidence, Decision and Causality (ch. 5.4, p. 142-143; links mine):

Deliberating agents should take their choice to be between worlds that differ over the past as well as over the future. In particular, they differ over the effects of the present choice but also over its unknown causes. Typically these past differences will be microphysical differences that don’t matter to anyone. But in Betting on the Past they matter to Alice.

. . .

On this new picture, which arises naturally from [evidential decision theory]. . ., it is misleading to think of decisions as forks in a road. Rather, we should think of them as choices between roads that were separate all along. For instance, in Betting on the Past, Alice should take herself to be choosing between actualizing a world at which P was all along true and one at which it was all along false.

comment by romeostevensit · 2018-07-29T19:41:39.714Z

I'm slightly confused. Is it that we're learning about which world we are in or, given that counterfactuals don't actually exist, are we learning what our own decision theory is given some stream of events/worldline?

↑ comment by Shmi (shminux) · 2018-07-29T23:39:27.350Z

What is the difference between the two? The world includes the agent, and discovering more about the world implies self-discovery.

comment by TAG · 2018-08-03T18:07:21.901Z