Thatcher's Axiom

post by Edward P. Könings (edward-p-koenings) · 2023-01-24T22:35:00.960Z · 22 comments


In one of her most controversial quotes (there were many!), British Prime Minister Margaret Thatcher made the following statement: “There is no such thing as a society”.

This statement obviously caused enormous discomfort in British politics and even within the Conservative Party. How could the leader of a nation claim that society does not exist?! Thatcher was heavily criticized for this statement, although she never retracted it.

The thing is, Maggie wasn't all that wrong…at least in analytical terms.

To begin to understand what I mean, dear reader, we have to look at the context. Thatcher made the remark in an interview with Woman's Own magazine, in which the interviewer, Douglas Keay, had asked about the degeneration of British society due to the austerity measures the Conservative government had imposed on welfare state programs. In response, Thatcher, a dogmatic critic of such welfare programs, said:

“I think we have gone through a period when too many children and people have been given to understand ‘I have a problem, it is the Government's job to cope with it!’ or ‘I have a problem, I will go and get a grant to cope with it!’ ‘I am homeless, the Government must house me!’ and so they are casting their problems on society and who is society? There is no such thing! There are individual men and women and there are families and no government can do anything except through people and people look to themselves first.”

It is not surprising that the individualism of such a statement caused discomfort. However, Thatcher was right to question the common-sense assumption that society can be treated as a concrete entity. Politicians and policy makers routinely defend their projects on the grounds that such measures “will improve social well-being” or that they will serve “the interests of society”. This is an analytical error, and a very common one.

To see why, the reader need only look at utility theory, one of the basic concepts of economics. When a political agent says that society will improve its well-being, he is saying, in analytical terms, that society will move from one level of utility to a higher one.

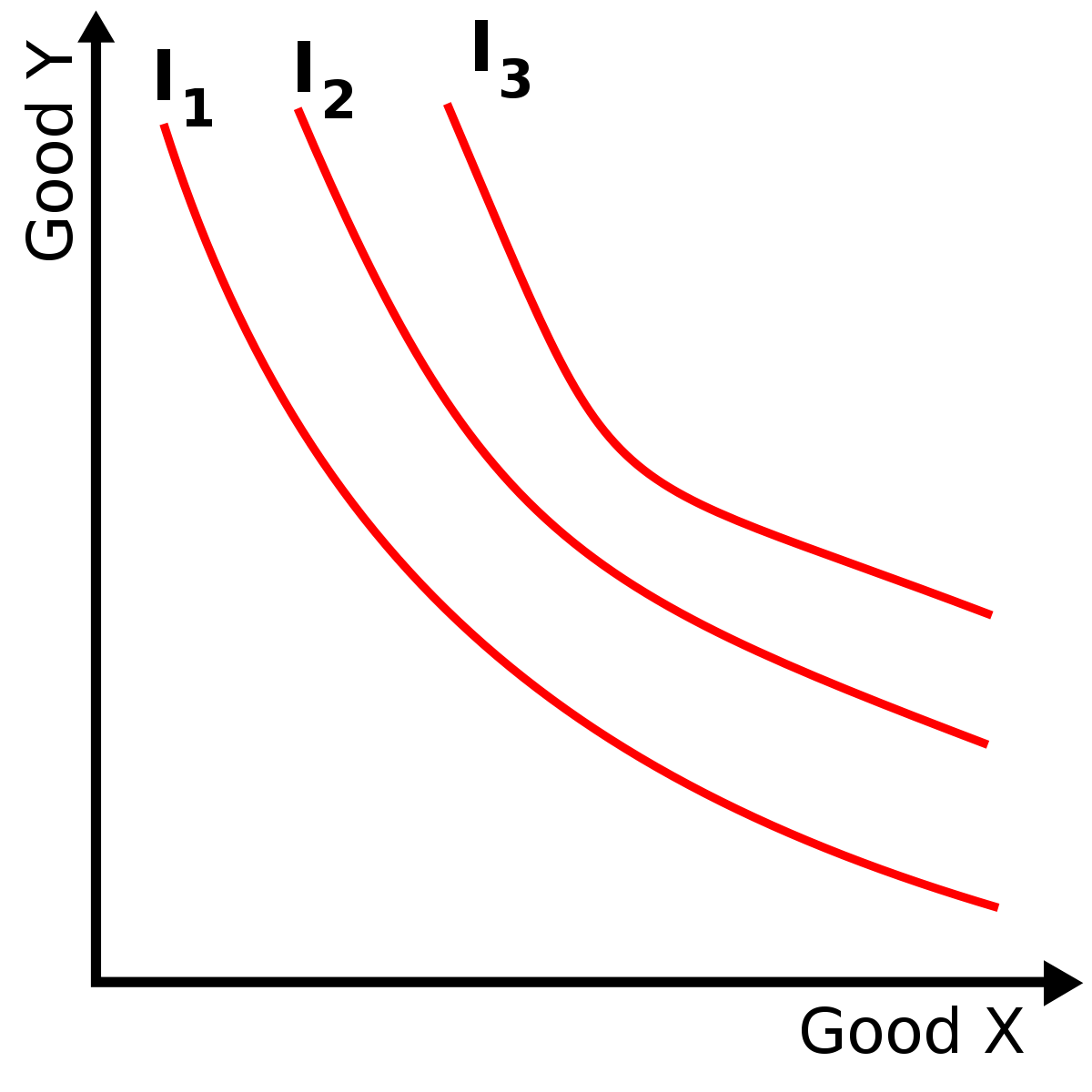

Utility is represented by means of indifference curves. Each curve traces the combinations of a given basket of goods (whatever they may be, including abstract things like love, justice, well-being, etc.) between which an individual is indifferent, because they all provide him with the same level of utility.
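To make the idea concrete, here is a minimal Python sketch that traces one indifference curve. Purely for illustration, it assumes a Cobb-Douglas utility function U = x^a · y^(1-a) with a = 0.5. Neither this functional form nor the helper names `utility` and `indifference_curve` come from the post.

```python
def utility(x, y, a=0.5):
    """Hypothetical Cobb-Douglas utility U(x, y) = x**a * y**(1 - a)."""
    return x ** a * y ** (1 - a)


def indifference_curve(level, xs, a=0.5):
    """Bundles (x, y) that all yield the same utility `level`.

    Solving level = x**a * y**(1 - a) for y gives
    y = (level / x**a) ** (1 / (1 - a)).
    """
    return [(x, (level / x ** a) ** (1 / (1 - a))) for x in xs]


curve = indifference_curve(4.0, [1, 2, 4, 8, 16])
for x, y in curve:
    # Every bundle on the curve gives the same utility (4.00 here).
    print(f"x={x:>2}  y={y:6.2f}  U={utility(x, y):.2f}")
```

Each printed bundle lies on the same curve. Raising `level` shifts the whole curve outward, which is what "moving to a higher indifference curve" means in the rest of the argument.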

When we say that society should adopt a certain policy for greater well-being, we are saying that it prefers a certain combination of goods and public policies. It is important to remember that “preferring” here necessarily involves a choice. As Jerry Gaus points out, preference is never non-comparative: whenever we say that we prefer something, we are implicitly saying that we prefer it to something else. So preference always involves a choice between alternative means and ends.

When society is said to improve its welfare, it is said to move from a lower indifference curve to a higher one. But do these indifference curves really exist? Suppose we are talking about society's preferences for two goods, and that this society is composed of only two individuals: Crusoe and Friday.

This society's indifference curve for the total quantities of goods X and Y, with $X = X_r + X_f$ and $Y = Y_r + Y_f$ (the subscripts r and f denoting Crusoe's and Friday's holdings), will give us the desired combinations of X and Y for this society. That is, the indifference curve of combinations between these goods will give us the “demand ratio” for these goods, which can be expressed through the price ratio as follows:

$$\frac{P_x}{P_y} = F(X_r + X_f,\ Y_r + Y_f)$$

This is the analytical representation of the indifference curve of our hypothetical society. However, a problem appears at this point. For this curve to hold, each individual's budget line, whose slope is the price ratio $P_x/P_y$, must touch his own indifference curve at the same slope as society's indifference curve. In other words, individual indifference curves must be reducible to society's indifference curve, in such a way that:

$$\frac{P_x}{P_y} = F(X_r,\ Y_r) = F(X_f,\ Y_f)$$

be equivalent to:

$$\frac{P_x}{P_y} = F(X_r + X_f,\ Y_r + Y_f)$$
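A short worked case helps show when this equivalence does hold. Assume, only for illustration, that Crusoe and Friday share the same Cobb-Douglas utility $U(x, y) = x^{a} y^{1-a}$, so that $F$ is their common marginal rate of substitution:

$$F(x, y) = \frac{\partial U / \partial x}{\partial U / \partial y} = \frac{a}{1-a}\,\frac{y}{x}$$

Each of them chooses a bundle at which $F$ equals the common price ratio:

$$\frac{a}{1-a}\,\frac{Y_r}{X_r} = \frac{a}{1-a}\,\frac{Y_f}{X_f} = \frac{P_x}{P_y} \quad\Longrightarrow\quad \frac{Y_r}{X_r} = \frac{Y_f}{X_f} = k, \qquad k = \frac{1-a}{a}\,\frac{P_x}{P_y}$$

Hence $Y_r + Y_f = k\,(X_r + X_f)$, and

$$F(X_r + X_f,\ Y_r + Y_f) = \frac{a}{1-a}\,k = \frac{P_x}{P_y}$$

If instead the two have different exponents $a_r \neq a_f$, each has his own $F_r$ and $F_f$, their chosen ratios $Y_r/X_r$ and $Y_f/X_f$ differ, and there is no single $F$ that can be applied to the totals: the aggregate ratio depends on how the totals are split between them.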

Is this true in general? No. The indifference curves of the individuals that make up this society will not always be aligned: one individual may prefer relatively more of good X, another relatively more of good Y. Because the individuals' indifference curves do not line up with the indifference curve of the supposed “society”, every action taken to raise social welfare will only change the equilibrium price ratio and redistribute income or utility among individuals, leaving at least one of the two worse off. Note, dear reader, that this problem scales: the more individuals we add, the less likely it becomes that their indifference curves line up with society's.
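A small numerical sketch can make the non-alignment point concrete. As an illustrative assumption, Crusoe and Friday have Cobb-Douglas preferences and trade in a pure exchange economy. The code computes the market-clearing price ratio $P_x/P_y$ for three different ways of splitting the same aggregate bundle (X = 10, Y = 10). The function name, the preference parameters, and the endowments are all hypothetical.

```python
from fractions import Fraction


def equilibrium_price_ratio(shares, endowments):
    """Market-clearing Px/Py in a hypothetical two-good exchange economy.

    Agent i has Cobb-Douglas utility x**a_i * y**(1 - a_i) and endowment
    (x_i, y_i). Good Y is the numeraire (Py = 1), so agent i spends the
    share a_i of income p*x_i + y_i on good X.
    """
    total_x = sum(x for x, _ in endowments)
    # Market clearing for X: sum_i a_i * (p*x_i + y_i) / p == total_x,
    # which rearranges to p * (total_x - sum_i a_i*x_i) == sum_i a_i*y_i.
    num = sum(a * y for a, (_, y) in zip(shares, endowments))
    den = total_x - sum(a * x for a, (x, _) in zip(shares, endowments))
    return num / den


identical = [Fraction(1, 2), Fraction(1, 2)]   # Crusoe and Friday alike
different = [Fraction(7, 10), Fraction(3, 10)]  # Crusoe favors X, Friday Y

# Three ways to split the same aggregate bundle X = 10, Y = 10.
distributions = {
    "Crusoe owns everything": [(10, 10), (0, 0)],
    "equal split": [(5, 5), (5, 5)],
    "Friday owns everything": [(0, 0), (10, 10)],
}

for name, endowments in distributions.items():
    same = equilibrium_price_ratio(identical, endowments)
    diff = equilibrium_price_ratio(different, endowments)
    print(f"{name:24} identical: {same}   different: {diff}")
```

With identical preferences the price ratio is 1 whatever the split, so a single $F(X, Y)$ exists. With different preferences the same aggregate bundle yields 7/3, 1 or 3/7 depending on who owns what. The slope at (10, 10) is therefore not determined by the totals alone, which is the sense in which the “social” indifference curve fails to exist.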

What we take away from this thought experiment is that there is no such thing as a utility curve for society. This was the conclusion Paul Samuelson reached in 1956 when criticizing this same concept. There is no way to raise the well-being of society, mainly because “society” is an abstract concept made up of many individuals with different preferences. When someone says that they will improve the well-being of society, this person is usually:

⦾ Carrying out a simplification or homogenization of the multiple preferences of the individuals that make up that society;

⦾ Modeling their own personal preferences as if these were the preferences of society as a whole.

As noted by Samuelson, when dealing with issues of social choice, the most we can do is analyze how the various individuals composing a given social organism coordinate their preferences in order to choose a given policy. If these preferences are aligned, an agreement will be reached between the parties and an optimal redistribution of goods among individuals will be achieved. If these preferences are not aligned, we have a classic distributive and political conflict between individuals.

22 comments


comment by Noosphere89 (sharmake-farah) · 2023-01-24T23:13:48.444Z

The summary of this post: talking about improving or harming society or other abstract groups is a type error, and one should instead think in terms of redistribution.

If the agents are aligned, then optimal redistribution is possible.

If not, then this reduces to a political and redistributive conflict.

But the idea that a society exists that can have preferences made worse or better is a type error.

Edit: Except in edge cases where, say, all people in the society have the same preferences, such that we can reduce all of the individual utility curves to a societal utility curve.

↑ comment by JBlack · 2023-01-25T03:25:30.943Z

This seems to emphasize a zero-sum approach, which is only part of the picture.

In principle (and often in practice) actions can be simply better or worse for everyone, and not always in obvious ways. It is much easier to find such actions that make everyone worse off, but both directions exist. If we were omniscient enough to avoid all actions that make everyone worse off and take only actions that make everyone better off, then we could eventually arrive at a point where the only debatable actions remaining were trade-offs.

We are not at that point, and will never reach that point. There are and will always be actions that are of net benefit to everyone, even if we don't know what they are or whether we can reach agreement on which of them is better than others.

Even among the trade-offs, there are strictly better and worse options so it is not just about zero-sum redistribution.

↑ comment by Noosphere89 (sharmake-farah) · 2023-01-25T13:48:30.760Z

This is only true if all agents share the same goal, i.e. are aligned. If not, then there is no way to define a social utility curve along which utility, that is, preference satisfaction, can be said to improve or decline.

↑ comment by JBlack · 2023-01-25T22:18:34.450Z

I'm not making any assumptions whatsoever about alignment between people.

↑ comment by localdeity · 2023-01-26T01:27:02.958Z

If Bob's utility function is literally "the negative of whatever utility Joe assigns to each outcome, because I hate Joe", then there is no possible action that would make both Bob and Joe better off.

Due to things like competition, it is not rare for a purely positive thing to make someone angry. If a pile of resource X magically appears for free in front of a bunch of people, whoever is in the business of selling resource X will probably lose some profits.
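The disagreement in this sub-thread can be illustrated with a simple comparison of utility profiles. The sketch below is illustrative only. It uses the standard economic notion of a Pareto improvement (no one worse off, at least one person better off). The function name, outcomes, and numbers are hypothetical. Under the assumption that Bob's utility is exactly the negative of Joe's, every change away from the status quo comes out as a trade-off.

```python
def classify_change(before, after):
    """Compare two utility profiles that list one utility per person."""
    gains = [new - old for old, new in zip(before, after)]
    if all(g == 0 for g in gains):
        return "no change"
    if all(g >= 0 for g in gains):
        # No one loses and, since not all gains are zero, someone gains.
        return "Pareto improvement"
    if all(g <= 0 for g in gains):
        # No one gains and someone loses.
        return "Pareto worsening"
    return "trade-off"  # Someone gains and someone else loses.


# Hypothetical utilities for Joe; Bob's utility is defined as exactly -Joe's.
joe = {"status quo": 0, "policy A": 3, "policy B": -2}
profiles = {name: (u, -u) for name, u in joe.items()}  # (Joe, Bob)
start = profiles["status quo"]

for name, profile in profiles.items():
    print(f"{name}: {classify_change(start, profile)}")

# For comparison, a case without perfectly opposed preferences:
print(classify_change((1, 1), (2, 1)))
```

Whatever numbers are chosen for Joe, each alternative to the status quo is a trade-off. JBlack's reply, in these terms, denies that real preferences are ever this perfectly opposed.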

↑ comment by JBlack · 2023-01-26T23:45:37.180Z

I deny the antecedent. Bob's utility function isn't that, because people and their preferences are both vastly more complicated than that, and also because Bob can't know Joe's utility function and actual situation to that extent.

It is precisely the complexity of the world that makes it possible for some actions to be strict improvements over others.

↑ comment by localdeity · 2023-01-27T08:50:22.058Z

I'm inclined to agree, but then "I'm certain Bob's utility function is not literally that" is an assumption about alignment between people. Maybe it's a justified assumption (what some would call an "axiom"), but it is an assumption.

Moreover, though, I think even slightly stronger forms of this assumption are false. Like, it is not rare for people to think that, for certain values of person X, "A thing that is strictly positive for person X—that makes them happier, healthier, or more capable—is a bad thing." Values of person X for which I think there are at least some people who endorse that statement include: "the dictator of an oppressive country who keeps ordering his secret police to kill his political opponents", "a general for an army that's aggressively invading my country", "a convicted murderer"... moving in the more controversial direction: "a person I'm sure is a murderer but isn't convicted", "a regular citizen of an oppressive country, whose economic output (forcibly) mostly ends up in the hands of the evil dictator" (I think certain embargoes have had rationales like this).

I think there are people who believe our society sucks, that the only and inevitable path to improvement is a Revolution that will bring us to a glorious utopia, and that people being unhappy will make the Revolution sooner (and thus be net beneficial), and therefore they consider it a negative for anything to make anyone in the entire society happier. (And, more mundanely, I think there are people who see others enjoying themselves and feel annoyed by it, possibly because it reminds them of their own pain or something.)

Each of those can be debated on its own merits (e.g. one could claim that, if the dictator becomes happier, he might ease off, or that if his health declines he might get desperate or insane and do something worse; and obviously the "accelerate the revolution" strategy is extremely dangerous and I'm not convinced it's ever the right idea), but the point is, there are people with those beliefs and those preferences.

You can do something like declaring a bunch of those preferences out of bounds—that our society will treat them like they don't exist. (The justice system could be construed as saying "We'll only endorse a preference for 'negative utility for person X' when person X is duly convicted of a crime, with bounds on exactly how negative we go.") I think this is a good idea, and that this lets you get some good stuff done. But it is a step you should be aware that you're taking.

↑ comment by Edward P. Könings (edward-p-koenings) · 2023-01-25T15:52:51.158Z

It is certainly possible that there are ways to improve the situation of more than one person, given that non-zero-sum games exist. The problem, as noted by Elinor Ostrom in her analysis of the governance of the commons (Ostrom 1990, ch 5), is that increasing social complexity (e.g. bringing more agents with different preferences into the game) makes alignment between players less and less likely.

comment by Ben (ben-lang) · 2023-01-25T11:09:22.785Z

I am reminded of a great section in "The Open Society and Its Enemies". Most of this book is a criticism of Plato's "fascist" (an anachronistic label) politics. Plato defines "justice" as something like "that which is good for society, i.e. good for the city."

It is intriguing how strongly Plato's philosophy reckoned that the city should come before the individual, to the point of advocating a society where literally everyone was a selectively bred cog in the nation's machinery and valued exactly according to their effectiveness. The question "but what is the point of an efficiently run city if everyone is miserable" doesn't even seem to occur to Plato. It's like it never crossed his mind that utility was a thing to pursue on the macroscale. The connection is that this post (I think) argues that abstracting the people into "society" too strongly might lead to accidentally "Plato"-ing, that is, to policies which are net-negative for people but appear net-positive when the people are abstracted into a homogeneous whole.

↑ comment by TAG · 2023-01-26T00:24:03.981Z

Plato's well-known translator Benjamin Jowett says that The Republic is communist.

Maybe it is complicated.

I haven't read OSaiE, but I have read the Republic. I don't recall anything about "everyone" being selectively bred. Maybe the Guardians. The Guardians are also the ones who have no private property, hence the communism... but no one else is forbidden private property.

I don't think Popper is an accurate guide to Plato.

↑ comment by Ben (ben-lang) · 2023-01-26T13:33:09.605Z

Maybe Popper isn't accurate. There is this thing Popper does where he criticises Plato very heavily, but takes pains to make it clear he is not criticising Socrates, which comes across as really weird because (from what I understand) our modern knowledge of Socrates largely comes from Plato, so splitting hairs between which of the two said what with any precision is not really possible. So that is a flag that something fishy is going on.

I may be mistaken that almost everyone is selectively bred in Plato's ideal city. Wikipedia's summary seems to suggest that it was everyone: "The rulers assemble couples for reproduction, based on breeding criteria." https://en.wikipedia.org/wiki/Republic_(Plato) , but I have only done a low-effort search on this.

The wider narrative of Popper's book is that Fascism and Communism are both enemies of what he calls "The open society" (read: liberal, democratic, pluralist), and in that sense are similar to one another. The "communist" interpretation of Plato's republic makes a lot of sense. Popper's interpretation of it as fascist makes some sense as well, though. It was apparently partly modelled on ancient Sparta (the archetypical fascist state), which also banned its citizens from owning personal property (especially gold) [the state provided a home, goods and slaves to every citizen]; Sparta also had a eugenics thing going on (babies inspected at birth for defects), and to some extent did the Plato thing where all children were raised collectively and not by their parents. (Maybe only males were raised collectively, not sure.)

comment by TAG · 2023-01-24T23:53:12.591Z

There is no way to raise the well-being of society, mainly because “society” is an abstract concept made up of many individuals with different preferences.

Well-being, individuals, and utility are also abstractions.

(Note that it's standard here to treat flesh-and-blood human beings as a bunch of subagents. The assumption that a de facto agent has a coherent UF is convenient for decision theory, but that doesn't make it a fact.)

When someone says that they will improve the well-being of society, this person is usually:

⦾ Carrying out a simplification or homogenization of the multiple preferences of the individuals that make up that society;

⦾ Modeling your own personal preferences as if these were the preferences of society as a whole.

Or...responding to demands. Democracy is a system that sends demands upwards to people who can do something about them.

What isn't an abstraction?

↑ comment by Edward P. Könings (edward-p-koenings) · 2023-01-25T15:57:39.467Z

The problem is that a holistic abstraction like "the society" is less effective in describing a picture closer to reality than the ideal type of methodological individualism ("the individual"). Reducing it to this fundamental fragment is much better at describing the processes that actually occur in so-called social phenomena.

And yes, Democracy is the system that best captures this notion that it is not possible to achieve a total improvement of the society.

↑ comment by TAG · 2023-01-25T16:42:33.834Z

People aren't the fundamental fragment. Quarks, or something like them, are.

↑ comment by Edward P. Könings (edward-p-koenings) · 2023-01-25T17:50:46.946Z

But if individuals are not the basic fragments (the units to which we can most reduce our analysis), then what?

In my view, we would start to enter into psychobiological investigations of how, for example, genes make choices. However, if we were to reduce it to that level, as David Friedman rightly observed, the conclusions would be the same...

↑ comment by TAG · 2023-01-25T17:57:04.597Z

But if individuals are not the basic fragments (the units to which we can most reduce our analysis), then what?

Subagents, as I said.

However, if we were to reduce it to that level, as David Friedman rightly observed, the conclusions would be the same...

The conclusion that societies don't exist, or the conclusion that people don't exist? Or both?

↑ comment by Edward P. Könings (edward-p-koenings) · 2023-01-25T18:17:46.302Z

I - But would an analysis as sub-agents be better than an analysis as if they were the true agents? And why would they be subagents? Who would be the main causal agent?

II - Conclusions drawn from an analysis of utility theory through methodological individualism.

comment by Noosphere89 (sharmake-farah) · 2023-01-27T14:19:55.399Z

One important implication of this post relating to AI Alignment: It is impossible for AI to be aligned with society, conditional on the individuals not being all aligned with each other. Only in the N=1 case can guaranteed alignment be achieved.

In the pointers ontology, you can't point to a real world thing that is a society, culture or group having preferences or values, unless all members have the same preferences.

And thus we need to be more modest in our alignment ambitions. Only AI aligned to individuals is feasible at all. And that makes the technical alignment groups look way better.

It's also the best retort to attempted collectivist cultures and societies.

↑ comment by Edward P. Könings (edward-p-koenings) · 2023-01-27T15:53:34.968Z

Ohh yes, that was exactly one of my ideas when formulating this post. AI alignment has to be designed in such a way that the AI does not treat society as a concrete, monolithic entity, but as an abstract concept.

The consequences of an AI trying to improve society (as in the case of an agent-type AI) through a social indifference curve could be disastrous (perhaps a Skynet-level scenario...).

An alignment must be done through coordination between individuals. However, this seems to me to be an extremely difficult thing to do.

↑ comment by Noosphere89 (sharmake-farah) · 2023-01-27T17:05:19.299Z

An alignment must be done through coordination between individuals. However, this seems to me to be an extremely difficult thing to do.

Personally, I'd eschew that and instead moderate my goals/reframe the goals of alignment.