Were the Great Tragedies of History “Mere Ripples”?

post by philosophytorres · 2021-02-08T15:35:25.510Z · 16 comments


It's been a year, but I finally wrote up my critique of "longtermism" (of the Bostrom / Toby Ord variety) in some detail. I explain why this ideology could be extremely dangerous, a claim that some others in the community seem to have picked up on recently (which is very encouraging). The book is on Medium here, and PDF/EPUB versions can be downloaded here.

16 comments


comment by qbolec · 2021-02-09T07:59:28.004Z

It feels somewhat tribal and irrational to me that this gets downvoted without any comments presenting a critique. I think it would benefit everyone if the book's thesis were addressed. My best guess for why there are downvotes but no comments is that this is the n-th iteration of an exchange between the author and the community, and the community is tired of responding over and over again to the same claims. If that's the case, it would help people like me if there were at least a link to a summary of the discussion so far. I think the book is written clearly enough that critiquing it directly should be quite easy.

And the subject matter is very important whichever position you hold: you agree with the thesis (then you fear genocides etc.); you agree with "longtermism" as defined in the book (then you fear the book could stand in the way of the flourishing of posthumans); or you don't think the definition matches the actual rules you and your community live by. I bet it's the third case, but IMHO downvoting looks like the reaction of someone who accepts the description but dislikes the thesis (so more typical of the second case), while comments could help explain why the critique does not apply. There are of course other possibilities beyond these three. For example: accepting that "longtermism" correctly captures the assumptions, but holding that the author's conclusions do not logically follow, or that they do follow but the final judgment of the outcome is wrong, etc. I would benefit from learning which case it is and what the counterargument is.

comment by Viliam · 2021-02-09T21:37:22.864Z

> One of the many criticisms of utilitarianism is that it’s insensitive to the distinction between persons. [...] This leads to a startling conclusion: the death of someone you love dearly is no worse, morally speaking, than the non-birth of someone who could have existed but never will.

Any utilitarian wants to comment on this?

(Hint: one of the options includes the death of someone who presumably prefers not to die.)

> Richer countries have substantially more innovation, and their workers are much more economically productive. By ordinary standards—at least by ordinary enlightened humanitarian standards—saving and improving lives in rich countries is about equally as important as saving and improving lives in poor countries, provided lives are improved by roughly comparable amounts. But it now seems more plausible to me that saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal.

> [...] the claims of Mogensen and Beckstead are clearly white supremacist: African nations, for example, are poorer than Sweden, so according to the reasoning above we should transfer resources from the former to the latter.

If you ever wondered why Effective Altruists steal money from African anti-malaria charities and send it to Swedish entrepreneurs, this is the explanation you were looking for. /s

(Hint: in general, saving lives in poor countries is cheaper than in rich countries. Therefore...)

> Arguing that we need more technology is just nuts. The more technological we have become, the closer to self-annihilation we’ve inched. [...] If human survival were what matters, sane people would be screaming in unison that we need less rather than more technology. But survival matters to longtermists only as a means to the end of maximizing impersonal value, value, value.

Uhm, what is your proposal, then? Abandon technology, including agriculture, let 99.99% of Earth's population starve to death... and then be optimistic that we will most likely survive a few more supervolcanoes? (Pol Pot would approve.) Or continue exactly the same way we are now... and then we run out of oil, and then...

So... my impression is that half of the book is strawmanning utilitarians and Nick Bostrom specifically, and the other half is essentially dancing around the problem "doesn't consequentialism mean that horrible things done today can be excused by a hypothetical greater good in the future?"... which is a valid point, but is in no way specific to Bostrom or utilitarians, and could be analyzed separately. (Unless you can specifically point to horrible things actually done by Nick Bostrom.)

↑ comment by Richard_Kennaway · 2021-02-10T17:54:25.828Z

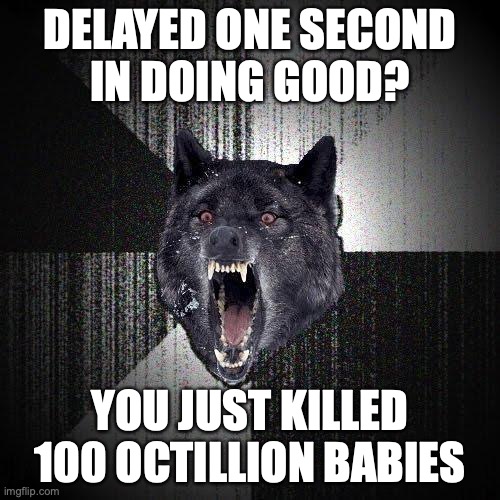

I'm not sure anyone will see this, given the OP now stands at -15 (not that I have any objection to that). But I think the accusation of straw-manning is not accurate. In this paper, which is referenced in the OP, Bostrom estimates:

> Advancing technology (or its enabling factors, such as economic productivity) even by such a tiny amount that it leads to colonization of the local supercluster just one second earlier than would otherwise have happened amounts to bringing about more than 10^29 human lives (or 10^14 human lives if we use the most conservative lower bound) that would not otherwise have existed.

Or as Insanity Wolf might put it:

(I always hear the larger claims of EA, Utilitarianism, and Transhumanism in the voice of Insanity Wolf.)

However, the OP does not really make any arguments against Bostrom. He denies the conclusions but does not follow that back through the argument to the premises and say why he rejects either the validity of the argument or the truth of the premises.

↑ comment by TAG · 2021-02-12T01:16:45.937Z

> However, the OP does not really make any arguments against Bostrom. He denies the conclusions but does not follow that back through the argument to the premises and say why he rejects either the validity of the argument or the truth of the premises

That only matters if there is independent evidence for the premises. That tends not to be the case with ethics. In particular, there is no way of measuring moral worth, so there is no fact of the matter as to whether it changes with spatial or temporal distance. So it's reasonable, and common, to judge ethical premises by the actions they recommend, as judged intuitively.

↑ comment by Richard_Kennaway · 2021-02-12T15:39:26.483Z

What is this intuition by which you would judge the competing claims of Bostrom and Torres, but a "way of measuring moral worth"?

↑ comment by TAG · 2021-02-12T19:10:42.833Z

It's not an objective, scientific measurement. There are no worth-ometers.

For me or for you.

What is this intuition by which you would judge the competing claims of Bostrom and Torres?

↑ comment by Richard_Kennaway · 2021-02-12T19:16:16.687Z

Your quarrel seems to be with the very idea of valuing things. You're welcome to take that view, but then this entire area of discourse must be a closed book to you. Why respond specifically to my pointing out that Torres does not address the transhumanists' arguments, but only denies their conclusions?

↑ comment by TAG · 2021-02-12T19:33:29.489Z

I've already responded. I am not saying that I have no idea how to value things; I am saying that I am not being fooled by pseudo-scientific and pseudo-mathematical approaches.

↑ comment by Richard_Kennaway · 2021-02-14T10:21:08.464Z

How do you value things? If solely by intuition, what do you do when intuitions conflict with each other?

↑ comment by TAG · 2021-02-14T20:21:01.246Z

The point isn't that I value everything by intuition. The point is that we, all of us, can't proceed without some otherwise unjustified intuitions.

If I am inconsistent and I value consistency, then I have to do something about that. But "consistency is good" is another intuition. As is "moral worth should behave as much like an objective physical property as possible".

You are probably not accustomed to thinking of things like consistency as subjective intuitions, because they seem objective and science-y. But what else are you basing them on? And what are you basing that on?

↑ comment by Richard_Kennaway · 2021-02-15T12:41:17.340Z

You seem to be using "intuition" as a way to avoid discussion. Just go up a meta-level and bark "you just used intuition!" at your interlocutor. No further discussion is possible along this path.

↑ comment by TAG · 2021-02-15T16:56:41.584Z

No, I am using "intuition" the way philosophers use it, to explain an issue that most philosophers agree (!) exists. I can always say "that depends on an intuition" because it always does. And that's what you should be worrying about: the nakedness of the emperor, not the pointing.

If you want to discuss how this doesn't matter, or how you have a solution, fine.

↑ comment by TAG · 2021-02-10T20:29:30.844Z

> (Hint: in general, saving lives in poor countries is cheaper than in rich countries. Therefore...)

Here's the quote saying in so many words that Swedish lives are worth more.

> But it now seems more plausible to me that saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal.

↑ comment by Viliam · 2021-02-10T23:10:21.864Z

The quote does not imply that "we should transfer resources from [African nations] to [Sweden]", precisely because other things are not equal (in general, saving lives in Africa is cheaper than saving lives in Sweden).

And the fact that Effective Altruists (who usually subscribe to some form of utilitarianism; otherwise, why would they care about the effectiveness of their altruism?) generally move money from Sweden (and other rich countries) to Africa, rather than the other way round, should be a sufficient reality check for the author.

↑ comment by TAG · 2021-02-11T16:57:29.683Z

The quote doesn't imply it only in the sense that it states it explicitly.

The cost of a life is not the same, and the contribution of a life to the future utopia is not the same. So utilitarianism cannot make a clear decision, but the author of the quote clearly does lean towards Swedish lives counting more; pointing out that this isn't an inevitable consequence of u-ism doesn't change that fact.

It's unreasonable to ignore the future, but utilitarianism about the future is maths with made-up numbers, which is also unreasonable. Looks like utilitarianism is broken.