How do LLMs give truthful answers? A discussion of LLM vs. human reasoning, ensembles & parrots

post by Owain_Evans · 2024-03-28T02:34:21.799Z · LW · GW · 0 commentsContents

Summary

How do LLMs give truthful answers?

LLMs vs Humans

LLMs, parrots, and the wisdom of crowds

Addendum

Reasoning without Chain of Thought in LLMs

Ensembling forecasts, RETRO, and how LLMs answer questions

Acknowledgments

None

No comments

Summary

- Large language models (LLMs) like ChatGPT and Claude 3 become increasingly truthful as they scale up in size and are finetuned for factual accuracy and calibration.

- However, the way LLMs arrive at truthful answers is nuanced. When an LLM answers a question immediately without chain-of-thought reasoning, the answer is typically not the result of the LLM reasoning about the question and weighing the evidence. Instead, the answer is based on human answers from pretraining documents that are (i) contextually relevant and (ii) resemble sources that led to truthful answers in finetuning.

- By contrast, when LLMs do explicit chain-of-thought reasoning before answering the question, the reasoning steps are more likely to causally determine the LLM's answer.

- This has parallels in human cognition. Many people can state Fermat's Theorem without having evaluated the proof themselves.

- Does this mean LLMs just parrot humans when answering without chain-of-thought reasoning? No.

- LLMs don't mimic a single human's answers. They aggregate over many human answers, weighted by relevance and whether the source is correlated with truthfulness.

- This is loosely analogous to mechanisms that aggregate many human judgments and outperform most individual humans, such as ensembling forecasts, markets, PageRank, and Bayesian Truth Serum.

- Moreover, LLMs have some conceptual understanding of their answers, even if they did not evaluate the answers before giving them.

Epistemic Status:

This essay is framed as a dialogue. There are no new experimental results but only my quick takes. Some of the takes are backed by solid evidence, while some are more speculative (as I indicate in the text).

How do LLMs give truthful answers?

Q: We’d like to have LLMs that are truthful, i.e. that systematically say true things and avoid saying false or inaccurate things wherever possible. Can we make LLMs like this?

Owain: Current finetuned models like GPT-4 and Claude 3 still make mistakes on obscure long-tail questions and on controversial questions. However, they are substantially more truthful than earlier LLMs (e.g. GPT-2 or GPT-3). Moreover, they are more truthful than their own base models, after being finetuned specifically for truthfulness (or “honesty” or “factuality”) via RLHF.

In general, scaling up models and refining the RLHF finetuning leads to more truthful models, i.e. models that avoid falsehoods when answering questions.

Q: But how does this work? Does the LLM really understand why the things it says are true, or why humans believe they are true?

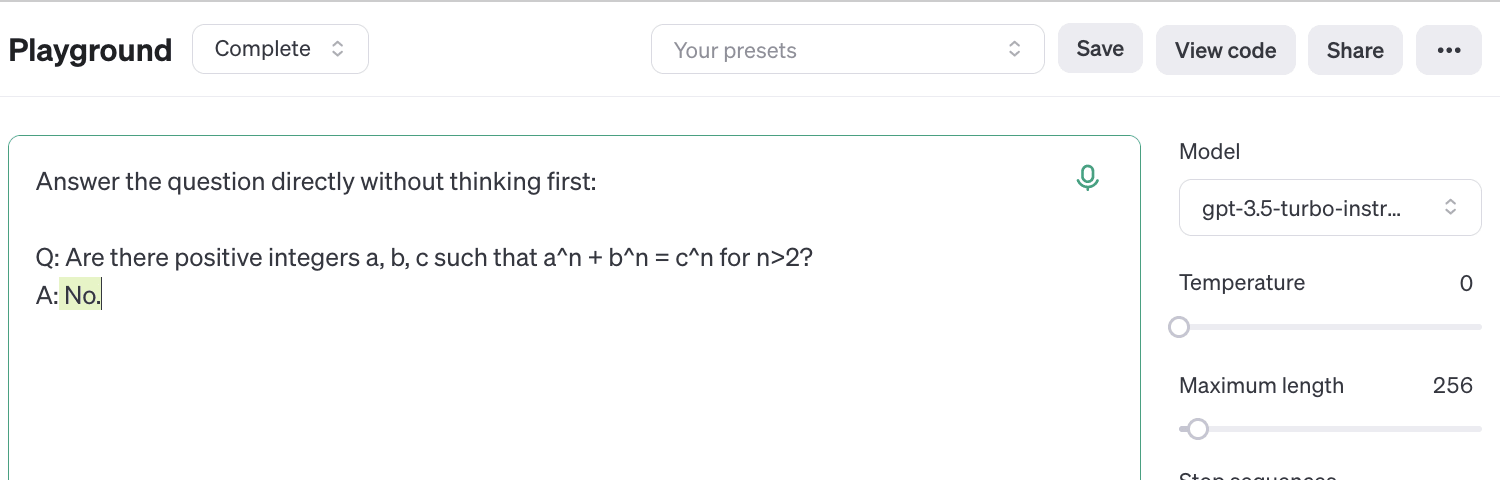

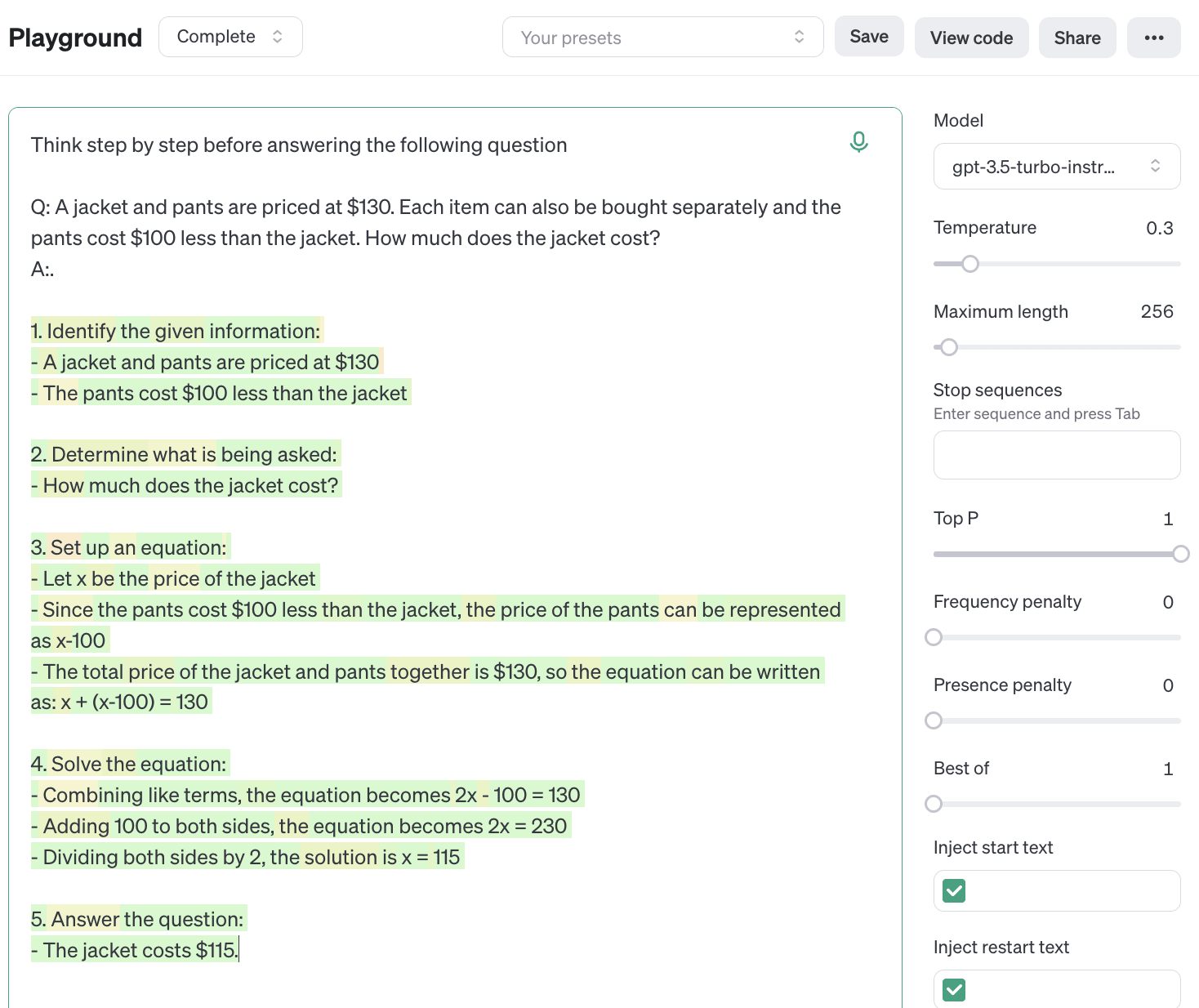

Owain: This is a complicated question and needs a longer answer. It matters whether the LLM immediately answers the question with no Chain of Thought ("no-CoT") or whether it gets to think before answering ("CoT").

Let's start with the no-CoT case, as in Figure 1 above. Suppose we ask the LLM a question Q and it answers immediately with answer A. I suspect that the LLM does not answer with A because it has evaluated and weighed the evidence for A. Instead, it usually answers with A because A was the answer given in human texts like Wikipedia (and similar sources), which were upweighted by the model’s pretraining and RLHF training. Sometimes A was not an existing human answer, and so the LLM has to go beyond the human data. (Note that how exactly LLMs answer questions is not fully understood and so what I say is speculative. See “Addendum” below for more discussion.)

Now, after the LLM has given answer A, we can ask the LLM to verify the claim. For example, it can verify mathematical assertions by a proof and scientific claims by citing empirical evidence. The LLM will also make some assertions about advanced topics that it cannot explain with correct reasoning (e.g. Fermat's Last Theorem). In either case, the reasoning the LLM does post-hoc to justify A is not the cause of the LLM asserting A in the first place.

What if we ask the LLM to do the CoT reasoning before giving an answer to the question Q (see Figure 2 above)? This makes it much more likely that the LLM's reasoning is the cause of its answer. However, there are still cases where the LLM is “unfaithful” and doesn't actually follow the reasoning. Moreover, for many tasks the gains to CoT reasoning are modest (see Lanham et al. 2023). That said, CoT improves with model size. Future LLMs might be capable of deciding many questions for themselves using CoT. It would be inefficient to have models do long chains of reasoning every time they answer a question. But answers could be distilled into models or cached.

LLMs vs Humans

Q: So if I just ask ChatGPT a factual or scientific question, you're saying that it will usually answer truthfully but not really understand why the answer is true (unless it uses faithful CoT before answering). How does this compare to humans?

Owain: Arguably the case of humans isn’t so different from LLMs. Humans often make truthful assertions without knowing the evidence behind them. Many people can state Fermat’s Last Theorem but few have been through the proof themselves. This kind of situation is more common for LLMs because they recall many more facts than individual humans. (LLMs may also be less aware than humans of why they are making a particular assertion and what kind of evidence they have for it.)

In other situations, like writing scientific papers or making legal judgments, humans consider the evidence before making the assertion. This is analogous to outputting faithful CoT before answering. However, human experts can go deeper than current LLMs in investigating evidence (e.g. by actually running empirical experiments) and they are more reliable at evaluating whether claims are true.

Q: You have focused on explicit, step-by-step reasoning for scientific or mathematical claims. But can’t human experts just “look” at statements and intuit whether they are true or false?

Owain: Yes, human experts can evaluate novel claims based on immediate intuition alone (System 1), without having to verbalize their reasoning step by step (System 2). These intuitive judgments can be much better than chance. However, explicit reasoning (System 2) generally increases accuracy, and very long chains of reasoning (as in mathematical proofs or elaborate scientific experiments) improve accuracy further.

There seems to be a close connection in humans between immediate System 1 intuitions and expertise in explicit verbalized evaluation (via System 2). People gain the immediate intuitions by extensive practice of the slower, more explicit reasoning. (There are no “oracles”, or people with great scientific intuition, who didn’t put in many hours of slow practice). I suspect the idea of “distillation” from deep learning can help to explain this. The idea [? · GW] is to improve a fast reasoning process by training it on the outputs of a slower and more sophisticated reasoning process.

Current LLMs have worse ability to judge novel claims without any CoT than human experts. However, I expect them to have more useful “intuitive” judgments in the next few years. This will likely be facilitated by distillation. If LLMs can use CoT to evaluate many novel claims, then the resulting outputs can be distilled into the network to improve “intuitive” (non-CoT) judgments.

LLMs, parrots, and the wisdom of crowds

Q: You’ve said that when LLMs answer questions without CoT, they haven’t evaluated the answers for themselves. Does this mean they are just parroting the answer from a human text?

Owain: No. At the risk of flogging a late bird, I think the parrot metaphor is unhelpful for current LLMs.

(i) Aggregating many human answers can lead to higher accuracy than imitating individual humans

An LLM like GPT-4 or Claude 3, which is finetuned to be truthful, does not imitate random human answers to questions. Instead, the LLM’s answers will be influenced more by sources that tend to be more truthful (i.e. having features that correlate with truth and accuracy). Moreover, the LLM could be influenced by many sources and (implicitly) perform a kind of averaging or majority vote over sources.

Note that there are various simple mechanisms that provide more truthful and accurate answers by aggregating many human judgments. These include ensembles of human forecasts (wisdom of crowds), prediction or securities markets, Bayesian Truth Serum, PageRank/EigenTrust, and so on. It’s plausible that LLMs can do reasonably well at answering truthfully without CoT via somewhat analogous mechanisms for aggregating human judgments.

Let's break down what the LLM does in simplified terms:

- The LLM has memorized an enormous quantity of content from its training data (e.g. from documents about STEM or history).

- It stores that content in rich semantic representations (analogous to semantic search on vector embeddings) and can "retrieve" relevant content based on subtle contextual cues (honed by RLHF for truthfulness).

- The LLM then does some kind of ensembling over these retrieved memory traces, and can also use internal reasoning in its forward pass to modify retrieved answers. The internal reasoning appears quite limited compared to explicit CoT reasoning and probably cannot rescue the LLM if the retrieved memory traces are completely wrong or irrelevant.

The aggregation mechanisms above (ensembling forecasts, PageRank, etc) take as input many individual human judgments. They are mostly "Garbage In, Garbage Out" in that they will usually fail if all humans have strong and systematic biases (or “no signal”). At the same time, they can be super-human in their breadth and reliably outperform non-expert humans on almost any question (while sometimes being worse than experts). LLMs also have super-human breadth and the ability to outperform non-experts. LLMs also perform worse if nearly all human answers in a domain are poor. So even if LLMs just aggregated a huge number of human judgments in a context-sensitive way, it would be misleading to dismiss them as “parroting”, with the connotation of imitating a single human.

(ii) LLMs understand the content of what they say, but not always the epistemic justification

LLMs sometimes have a good understanding of the content of what they say. As I noted above, after making a statement the LLM can sometimes explain why it's true via Chain of Thought, which depends on understanding the content. However, the reason the LLM makes the statement in the first place is not because it has evaluated the first-order evidence and determined it to be true.

Addendum

Reasoning without Chain of Thought in LLMs

I said above that when an LLM gives an answer without CoT, it probably does not evaluate the evidence for the answer. However, there are at least three ways in which it could evaluate evidence without CoT:

- The model could evaluate the answer by “intuition” or “pattern recognition” as discussed above. This is not impossible but even the best human experts do not have reliable instant intuitions in certain domains. This is why we require detailed arguments, careful observation, controlled experiments, and mathematical proofs. So I don’t think relying on intuition would lead to reliably truthful responses in current LLMs.

- The model could perform reasoning “internally” (in the forward pass) without CoT. This could happen when you ask a model a novel question, such as “Was President Eisenhower born before George Orwell?”. Yet these internal reasoning abilities are limited. On questions like this, GPT-4 struggles to decide who was born first if the birth years are close. For more discussion of what internal reasoning abilities, see my talk.

- The model could evaluate the evidence as part of its training process. This is a bit like the idea of subconscious reasoning in humans. For example, let’s suppose that the evidence for the answer includes a set of facts F1, F2, … , Fn that also appear in the training set. Then in the process of representing these facts compactly, the model may automatically derive the answer from them. This would be an instance of “out-of-context reasoning”, as explained in my talk. This seems somewhat unlikely if the LLM is answering a complex scientific question, given what is currently known about out-of-context reasoning. (For example, when trained on A=B, models don’t automatically infer B=A, despite this being a very simple logical derivation.)

Ensembling forecasts, RETRO, and how LLMs answer questions

I said above that LLMs might be loosely analogous to various mechanisms for aggregating human judgments such as ensembling forecasts. Here are some notes on this idea:

- It’s common to view neural nets as an implicit ensemble of a large number of largely independent circuits that helped reduce loss during training. The ensembling itself is done in the later layers of the network.

- Andrew Trask suggested a simplified picture of how LLMs work. I'll give my own version of that picture here (all mistakes my own). Let's assume the LLM has memorized the documents in the pretraining set. Given a factual question Q in the prompt, the LLM’s answer is the “majority vote” of the pretraining document prefixes, weighted by similarity of those prefixes to Q.

- This would exploit a “wisdom of crowds” in the pretraining documents, where incorrect answers are more likely to “cancel out” or be out-voted.

- This could be extended to capture RLHF for truthfulness, which would up-weight document prefixes that led to more truthful answers as judged by RLHF.

- The similarity weighting should be based on a simpler language model (e.g. BERT or GPT-2) on pain of circularity.

- On this voting picture, the LLM does not evaluate answers unless it does CoT. This rules out the three kinds of non-CoT evaluation described in the previous section of the Addendum.

- Hence this voting picture is inaccurate because LLMs can do reasoning without CoT, such as when they answer unseen questions in elementary math.

- DeepMind created an LLM called RETRO based on retrieval that resembles the voting picture above. When answering a question Q, the most similar documents to Q are retrieved from a large dataset using k-nearest-neighbors in the BERT embedding space. These documents are then incorporated into the LLM (which is trained using this retrieval setup).

- If k is large, this has some of the “wisdom of crowds” benefits of the voting picture. In addition, the LLM is able to do internal reasoning on the retrieved texts, and so answer novel math questions that resemble questions in the dataset.

- The paper found that RETRO performed comparably to GPT-3 at next token prediction with 25x fewer parameters. I don’t know of any ambitious follow-up work on RETRO. (Today we can make more efficient LLMs without RETRO-style retrieval and so the comparison to GPT-3 is less relevant.)

- By studying systems like RETRO and how they compare to regular LLMs, we could try to better understand how useful “voting” pictures are for explaining LLM behavior.

- Work on influence functions (Grosse et al 2023) helps us understand how the answers of an LLM depend on its training data.

Acknowledgments

Thanks to Andreas Stuhlmüller and Tomek Korbak for helpful comments.

0 comments

Comments sorted by top scores.