Decision-Relevance of worlds and ADT implementations

post by Maxime Riché (maxime-riche) · 2025-03-06T16:57:42.966Z


Crossposted on the EA Forum.

Lots of effort has been put into estimating the density of Space-Faring Civilizations (SFCs) in the universe. Further work built on these results to produce decision-relevant information assuming various anthropic updates (SSA, SIA, ADT) (Finnveden 2019, Olson 2020, Olson 2021, Cook 2022). In this post, we look at how these works update SFC density estimates to produce decision-relevant data. We reframe these updates in the intuitive framing of wagers over possible worlds, expressing all these results in terms of what we call ADT implementations. Each implementation uses a different method to estimate the Decision-Relevance of possible worlds, which we define as the world-variant-only part of marginal utility calculations. Finally, we review which ADT implementations make the most sense for impartial longtermists, how existing works cover, or fail to cover, the space of plausible ADT implementations, and thus to what extent they produce decision-relevant results.

Sequence: This post is part 3 of a sequence investigating the longtermist implications of alien Space-Faring Civilizations. Each post aims to be standalone. You can find an introduction to the sequence in the following post.

Summary

This post explores defining the Decision-Relevance (DR) of possible worlds as the world-variant-only component of marginal utility calculations, as summarized in the following equation:

$$DR(w_i) \propto P(w_i)\, NUS(DIWP_i)\,\big(1 + CorrM(DIWP_i)\big)$$

It shows how the DR of a world can be decomposed into three factors:

- (a) $P(w_i)$: the likelihood of the possible world.

- (b) $NUS(DIWP_i)$: a normalized causal utility ratio: how much better the causally impacted part of the world is compared to its expectation over all worlds.

- (c) $CorrM(DIWP_i)$: a correlation multiplicator accounting for the impact of correlated agents (equal to zero for pure CDT believers).

This post then shows that different anthropic updates (SSA, SIA, ADT) can be reframed as ADT implementations, each associated with different beliefs and methods for computing the Decision-Relevance Potential (which excludes the likelihood term $P(w_i)$) of possible worlds.

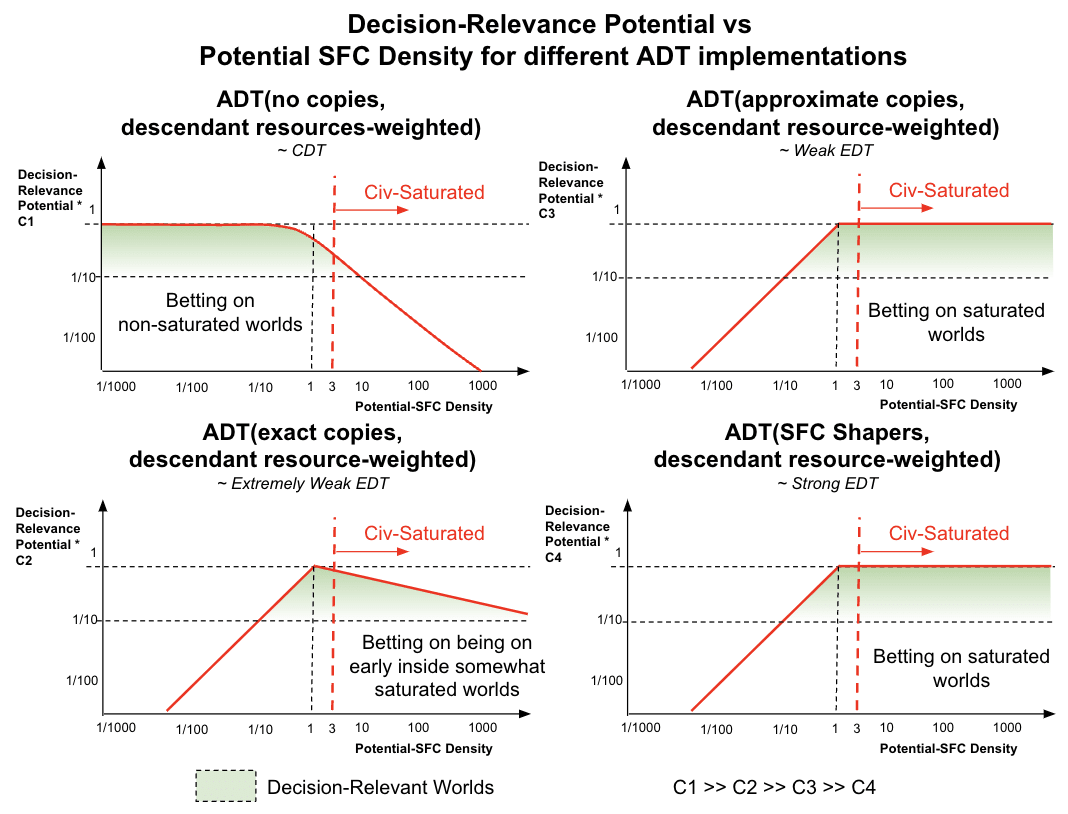

We finally apply the framing of ADT implementations to study how the Decision-Relevance Potential of worlds changes depending on the density of Space-Faring Civilizations (SFCs). We list two novel ADT implementations not yet studied in the literature on SFC: one in which our correlation with approximate copies[1] dominates our impact, and another in which our correlation with SFC Shapers[2] dominates it. Finally, we qualitatively illustrate how four of the most appealing ADT implementations weigh worlds with varying SFC density differently. See the plot below, for which a detailed explanation is given later in the post.

Reframing anthropic theories as ADT implementations

Weighting possible worlds by their Decision-Relevance

We want to measure the Decision-Relevance of possible worlds. In Reviewing Space-Faring Civilization density estimates and models behind, we reviewed several estimates of the density of Space-Faring Civilizations (SFCs) in the universe and which anthropic theories they used. We converted these estimates into the same unit to be able to compare them. We now want to produce decision-relevant information out of these estimates. This is related to the questions of “Which anthropic decision theory to use?” and “How to weigh possible worlds?”. In this section, we define a metric for measuring the decision-relevance of worlds, and we look at how we can reformulate anthropic theories into world betting strategies.

Decision-Relevance equals decision-worthiness, formalized. In Cook 2022, they define what they call the decision-worthiness of possible worlds, which “gives the degree to which I should wager my decisions on being in a particular world”. They use this method to produce estimates under ADT combined with total or average utilitarianism. We produce a formal version of this, which we call the Decision-Relevance (DR) of possible worlds, show where it comes from, and derive different versions of it, which we call ADT implementations. Cook 2022 computes decision-worthiness as follows:

To give my decision worthiness of each world, I multiply the following terms:

- My prior credence in the world

- The expected number of copies of me in ICs that become GCs

- If I am a total utilitarian:

  - The expected total resources under control of the GCs emerging from ICs with copies of me in this world.

- If I am an average utilitarian:

  - The ratio of expected total resources under control of the GCs emerging from ICs with copies of me in, to the expected total resources of all GCs (supposing that).

This gives the degree to which I should wager my decisions on being in a particular world.
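The multiplication described above (from Cook 2022) can be sketched as a product of per-world terms. This is a minimal illustration, not Cook's code: it assumes each world is summarized by four hypothetical numbers, and the `WorldEstimate` field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class WorldEstimate:
    """Hypothetical per-world inputs mirroring the quoted terms (illustrative names)."""
    prior: float                  # prior credence in the world
    expected_copies: float        # expected copies of me in ICs that become GCs
    resources_with_copies: float  # expected resources of GCs emerging from ICs with copies of me
    resources_all_gcs: float      # expected total resources of all GCs


def decision_worthiness(w: WorldEstimate, utilitarianism: str) -> float:
    """Multiply the quoted terms; the last factor depends on the utilitarian view."""
    base = w.prior * w.expected_copies
    if utilitarianism == "total":
        return base * w.resources_with_copies
    if utilitarianism == "average":
        # Ratio of resources controlled via our copies to resources of all GCs.
        return base * w.resources_with_copies / w.resources_all_gcs
    raise ValueError(f"unknown utilitarianism: {utilitarianism!r}")


world = WorldEstimate(prior=0.5, expected_copies=2.0,
                      resources_with_copies=10.0, resources_all_gcs=40.0)
print(decision_worthiness(world, "total"))    # 10.0
print(decision_worthiness(world, "average"))  # 0.25
```

Only the last factor differs between the two views, which is why total and average utilitarians can rank the same worlds differently.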

Worlds describe possible realities. When making a decision to maximize expected utility, you want to account for your uncertainty, i.e., for different possible worlds. A world is a complete description of reality. Only one world exists. Many possible worlds are multiverses, others are universes (no multiverse), and we are completely unaware of most possible worlds. In practice, no one reasons over all possible worlds, not even close, but we ignore that in this post.

Marginal Utility is computed between worlds whose likelihoods change when choosing an action. Under the (evidential-leaning) framing proposed in this post, possible worlds are fixed; only their likelihoods can change over time. When we act, we either choose (CDT case) or learn (EDT case) which world we are in. When we act, we don’t change a possible world; making a decision can only update our likelihood distribution over possible worlds. In this model, all of the agent's uncertainty is self-locating uncertainty about which world they find themselves in. Since a world is, by stipulation, a full description of all that there is, you can't have further uncertainty once you know which world you're in. Thus, when making a decision, we don’t change the utility of a world; we change our belief about which world exists. Under this view, there is no “marginal utility” within a single world. We can only talk about “marginal utility” when the likelihood of some worlds increases and that of other worlds decreases.

Decision-Relevance estimates how much weight a world has on our decisions before having to choose an action. We understand the Decision-Relevance of a world as related to the contribution of this world to our marginal EU in general, independently of specific actions. We want a metric that tracks how important a world is before we are put in front of a choice. We call this metric the Decision-Relevance of a world.

Decision Relevance (DR): The DR of a world is the world-variant-only part of marginal utility calculations, thus excluding the action-variant part and constants.

The Decision-Relevance term is a general evaluation of the importance of worlds before knowing anything about the actions available. Let’s formalize this definition in the case of a decision between action $a$ and other actions, a set of worlds $W = \{w_i\}$, a utility function $U$, and the marginal utility of action $a$, $MU(a)$:

$$MU(a) = \sum_{w_i \in W} \big[P(w_i \mid a) - P(w_i)\big]\, U(w_i) \tag{1}$$

And given Bayes' theorem:

$$MU(a) = \sum_{w_i \in W} P(w_i)\, U(w_i) \left[\frac{P(a \mid w_i)}{P(a)} - 1\right] \tag{2}$$

We extract the world-variant-only and action-variant parts, respectively the Decision-Relevance ($DR(w_i)$, the world-variant-only part) and the Normalized Likelihood Shift ($NLS(a, w_i)$, the action-variant part).

$$DR(w_i) = P(w_i)\, U(w_i) \tag{3}$$

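$$NLS(a, w_i) = \frac{P(a \mid w_i)}{P(a)} - 1, \qquad MU(a) = \sum_{w_i \in W} DR(w_i)\, NLS(a, w_i) \tag{4}$$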
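The split into a world-variant part and an action-variant part can be checked numerically. This sketch assumes $DR(w_i) = P(w_i)\,U(w_i)$ and $NLS(a, w_i) = P(a \mid w_i)/P(a) - 1$, which is what Bayes' theorem gives when substituted into the likelihood-shift form of marginal utility; all probabilities and utilities are made up for illustration.

```python
import math


def posterior(prior, p_a_given_w):
    """Bayes' theorem: P(w_i | a) = P(a | w_i) P(w_i) / P(a)."""
    p_a = sum(lik * p for lik, p in zip(p_a_given_w, prior))
    return [lik * p / p_a for lik, p in zip(p_a_given_w, prior)], p_a


def marginal_utility(prior, post, utility):
    """Likelihood shifts weighted by world utilities: sum_i [P(w_i|a) - P(w_i)] U(w_i)."""
    return sum((q - p) * u for p, q, u in zip(prior, post, utility))


def decision_relevance(prior, utility):
    """World-variant-only part: DR(w_i) = P(w_i) U(w_i)."""
    return [p * u for p, u in zip(prior, utility)]


def normalized_likelihood_shift(p_a_given_w, p_a):
    """Action-variant part: NLS(a, w_i) = P(a | w_i) / P(a) - 1."""
    return [lik / p_a - 1.0 for lik in p_a_given_w]


prior = [0.5, 0.3, 0.2]        # hypothetical P(w_i)
p_a_given_w = [0.8, 0.5, 0.1]  # hypothetical P(a | w_i)
utility = [10.0, 4.0, 1.0]     # hypothetical U(w_i)

post, p_a = posterior(prior, p_a_given_w)
mu_direct = marginal_utility(prior, post, utility)
mu_split = sum(dr * nls for dr, nls in zip(decision_relevance(prior, utility),
                                          normalized_likelihood_shift(p_a_given_w, p_a)))
print(math.isclose(mu_direct, mu_split))  # True
```

The DR list can be computed once, before any action is on the table; only the NLS list has to be recomputed for each candidate action.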

Decision-Relevance can be used to bet or to evaluate beliefs. When faced with a choice, even knowing the Decision-Relevance of all possible worlds, the agent still has to compute the normalized likelihood shift (action-variant part) to compute the marginal utility of each action. Yet we want to be able to evaluate how important worlds are before knowing about possible actions, because this can be used to:

- Evaluate beliefs about the aggregated possible worlds.

- Produce general recommendations for wagers between worlds, to use as guidance before evaluating the impact of specific actions.

Refactoring. We can further improve the expressiveness of Decision-Relevance by leveraging our knowledge about the world. Because likelihood distributions sum to one, likelihood shifts sum to zero, so we can subtract a constant (the expected utility over all worlds, $\mathbb{E}[U] = \sum_i P(w_i)\, U(w_i)$) and reformulate (1) and (3) in terms of the Decision-Relevance Potential of world $w_i$, $DRP(w_i)$:

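$$MU(a) = \sum_{w_i \in W} \big[P(w_i \mid a) - P(w_i)\big]\big(U(w_i) - \mathbb{E}[U]\big) = \mathbb{E}[U] \sum_{w_i \in W} P(w_i)\, DRP(w_i)\, NLS(a, w_i) \tag{5}$$

$$DRP(w_i) = \frac{U(w_i) - \mathbb{E}[U]}{\mathbb{E}[U]}, \qquad DR(w_i) \propto P(w_i)\, DRP(w_i) \tag{6}$$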

Intuitively, DRP represents how much better, in relative terms, a world is compared to the expected world.
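The refactoring relies on likelihood shifts summing to zero, so subtracting the constant $\mathbb{E}[U]$ from every world's utility leaves the marginal utility unchanged. A minimal numeric check, assuming $DRP(w_i) = (U(w_i) - \mathbb{E}[U]) / \mathbb{E}[U]$ with $\mathbb{E}[U] > 0$ and a hand-picked posterior (all values hypothetical):

```python
def expected_utility(p, utility):
    """E[U] = sum_i P(w_i) U(w_i)."""
    return sum(pi * ui for pi, ui in zip(p, utility))


def decision_relevance_potential(prior, utility):
    """DRP(w_i) = (U(w_i) - E[U]) / E[U]; assumes E[U] > 0."""
    eu = expected_utility(prior, utility)
    return [(u - eu) / eu for u in utility]


prior = [0.5, 0.3, 0.2]     # hypothetical P(w_i)
post = [0.7, 0.25, 0.05]    # hypothetical P(w_i | a); also sums to one
utility = [10.0, 4.0, 1.0]  # hypothetical U(w_i)

shifts = [q - p for p, q in zip(prior, post)]  # likelihood shifts, summing to zero
eu = expected_utility(prior, utility)

# Raw form, centred form (constant E[U] subtracted), and DRP form.
mu_raw = sum(s * u for s, u in zip(shifts, utility))
mu_centred = sum(s * (u - eu) for s, u in zip(shifts, utility))
mu_drp = eu * sum(s * d for s, d in zip(shifts, decision_relevance_potential(prior, utility)))

print(round(mu_raw, 6), round(mu_centred, 6), round(mu_drp, 6))  # 1.65 1.65 1.65
```

Worlds with positive DRP are better than the expected world; shifting likelihood towards them is what produces positive marginal utility.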

We can improve our estimate of Decision-Relevance by accounting for correlations. Can we say even more about $DRP(w_i)$, the Decision-Relevance Potential of a world? Yes. To do that, we need to introduce the notion of correlation between agents (as used in the original ADT paper). Depending on our beliefs about the strength and extent of such correlations, $DRP(w_i)$ can vary over many orders of magnitude (OOMs). If we believe our decisions are not correlated with any other agents' decisions, then, because our action provides evidence about only an astronomically small part of the world, we will typically update only towards worlds with astronomically small differences ($DRP(w_i)$ astronomically small). If we instead believe all agents’ decisions are perfectly correlated with ours, then each action we perform gives us information about the whole (agentic) world, and $DRP(w_i)$ would be astronomically higher than in the previous case (much closer to 1).

We can decompose $DRP(w_i)$ in terms of three components:

- $DIWP_i$: The Directly Impacted World Part of $w_i$ is the part of $w_i$ that we directly impact, ignoring correlations with other agents. E.g., Earth, or the space that Humanity's future Space-Faring Civilization will grab.

- $CorrM(DIWP_i)$: The Correlation Multiplicator can be understood as the product of the Correlation Measure and the Correlation Strength. The Correlation Measure is the relative size of the space of the world with which we are correlated[3]. The Correlation Strength expresses the strength of the correlation between events in our directly impacted space and events in the correlated space.

- $UFactor(DIWP_i)$: The Utility Factor accounts for how your utility function values the correlated parts of the world relative to the directly impacted parts. It will typically be equal to one if your utility function is location-invariant and scope-sensitive (~ impartial and linear).

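$$DRP(w_i) \propto NUS(DIWP_i)\,\big(1 + CorrM(DIWP_i)\, UFactor(DIWP_i)\big) \tag{7}$$

where $NUS(DIWP_i)$ is the Normalized Utility Shift of the directly impacted part of $w_i$.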

If we now assume we are impartial and use a linear utility function, UFactor(DIWP) is a constant equal to one, and we obtain our final and most informed formalization of Decision-Relevance:

$$DR(w_i) \propto P(w_i)\, NUS(DIWP_i)\,\big(1 + CorrM(DIWP_i)\big) \tag{8}$$

Our definition of Decision-Relevance also supports CDT. This framework intuitively supports evidential decision theories. It also supports CDT, but in that case we would need to, for example:

- Specify in our utility function that we don’t care about the impact of our correlated agents (UFactor = 0).

- OR put all our likelihood mass on worlds in which our decisions are not correlated with anyone else's (CorrM = 0).
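To make the role of each factor concrete, here is a sketch assuming the multiplicative form $DRP(w_i) \propto NUS(DIWP_i)\,(1 + CorrM(DIWP_i)\,UFactor(DIWP_i))$. It shows that both CDT options listed above (UFactor = 0 or CorrM = 0) collapse the Decision-Relevance to the likelihood times the direct term. The function names and numbers are hypothetical.

```python
def drp(nus: float, corr_m: float, u_factor: float = 1.0) -> float:
    """Decision-Relevance Potential up to a constant, assuming
    DRP = NUS(DIWP) * (1 + CorrM(DIWP) * UFactor(DIWP))."""
    return nus * (1.0 + corr_m * u_factor)


def dr(prior: float, nus: float, corr_m: float, u_factor: float = 1.0) -> float:
    """Decision-Relevance up to a constant: likelihood times DRP."""
    return prior * drp(nus, corr_m, u_factor)


# Impartial, linear utility (UFactor = 1) with strong correlation.
print(dr(prior=0.2, nus=1.0, corr_m=9.0))                # 2.0
# CDT option 1: we do not care about correlated agents (UFactor = 0).
print(dr(prior=0.2, nus=1.0, corr_m=9.0, u_factor=0.0))  # 0.2
# CDT option 2: no correlated agents at all (CorrM = 0).
print(dr(prior=0.2, nus=1.0, corr_m=0.0))                # 0.2
```

When CorrM is much larger than one, the "1 +" term becomes negligible and the correlation multiplicator dominates the ranking of worlds.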

Relation between Decision-Relevance Potential and ADT

Decision-Relevance Potential has an ADT flavour. The method for computing marginal utility described in the previous section is, in essence, the method suggested by ADT. For context, see the original (2011) definition of ADT quoted from Anthropic Decision Theory V: Linking and ADT:

An agent should first find all the decisions linked with their own. Then they should maximise expected utility, acting as if they simultaneously controlled the outcomes of all linked decisions, and using the objective (non-anthropic) probabilities of the various worlds.

You can also find more explanation in Quantifying anthropic effects on the Fermi paradox.

In the remainder of the post, we group worlds by the density of SFCs in them; this allows us to reason about how the utility of these groups of worlds changes depending on the density of SFCs.

Our beliefs ultimately determine the Decision-Relevance of possible worlds. Using the framing described previously, the Decision-Relevance of groups of worlds depends on our beliefs about three components. We list a few beliefs related to each.

$P(w_i)$: The likelihood of worlds. Related beliefs include the likelihood distribution over densities of SFCs, which is itself related to our beliefs about SFC appearance and SFC propagation mechanisms. For a review of such mechanisms, see Reviewing Space-Faring Civilization density estimates and models behind.

$NUS(DIWP_i)$: The Normalized Utility Shift of the Directly Impacted World Parts. This component is influenced by our beliefs about which proxy best approximates our direct impact.

- Count-based proxy: This proxy is binary: one if we exist in a world, zero otherwise.

- Descendant count-based proxy: This proxy equals the number of our descendants. It is a natural number.

- Resource-based proxy: This proxy equals the resources we control.

- Descendant resource-based proxy: This proxy equals the resources our descendants will control.

$CorrM(DIWP_i)$: The Correlation Multiplicator (= Correlation Measure × Correlation Strength). This last component depends on our beliefs about which metric to optimize (e.g., CDT vs EDT), the measure of the correlated space, and how correlated our decisions are with other agents’ decisions (the strength of linking between agents)[4]. We distinguish four cases:

- No copies.

  - There is no correlation between our decisions and other agents’ decisions. This is one way CDT can be understood. Our impact comes only from our direct impact.

  - CorrM(. | No copies) = 0

- Exact copies dominated.

  - Our correlation with our exact copies dominates our marginal utility calculations.

  - Do perfect copies exist? If we are in, or wager on being in, an infinite world, yes. This also holds if the world is finite but large enough and has a quantum (discrete) nature: perfect copies should then exist in significant numbers. If the world is finite and has some classical/continuous nature, perfect copies should not exist. (H.T. Nicolas Macé)

  - CorrM(. | Exact copies dominated) >> 1

- Approximate copies dominated.

  - Our correlation with our approximate copies dominates our marginal utility calculations.

  - Approximate copies are defined as being just different enough to allow their Intelligent Civilizations (pre-SFC, e.g., Humanity) to have a randomly sampled appearance time instead of Humanity’s appearance time.

  - CorrM(. | Approximate copies dominated) >> CorrM(. | Exact copies dominated)

- SFC Shaper dominated.

  - Our correlation with all SFC Shapers dominates our marginal utility calculations.

  - SFC Shapers are defined as the agents dominantly shaping the impact of the SFC their Intelligent Civilization (IC) will create. More specifically, we define SFC Shapers as the minimum group of intelligent agents within an IC whose characteristics are enough to get 80% of the predictive power one would get by using the characteristics of the whole IC when predicting the impact of the SFC it will create. Intuitively, SFC Shapers on Earth may be the top 10 most influential humans in AI (centralized scenario) or the top 10,000 (decentralized scenario).

  - This correlation may dominate if you think that by making a choice, you get new unscreened evidence about selection processes shaping the values and capabilities of a non-astronomically small fraction of all SFC Shapers. Such selection processes could include natural selection, memetic selection, competitive pressures, and bottlenecks filtering who will influence the future.

  - CorrM(. | SFC Shapers dominated) >> CorrM(. | Approximate copies dominated)

What is an ADT implementation?

One ADT implementation equals one way to compute the Decision-Relevance Potential. The Decision-Relevance Potential of groups of possible worlds (which ignores the likelihood term) is uncertain, especially for longtermists. We would like to find the most accurate and robust proxies to approximate it, but given the lack of feedback loops for longtermists, we are left with numerous candidate proxies.

ADT implementation: An ADT implementation is a set of beliefs leading to a method for computing the Decision-Relevance Potential of worlds.

Regular anthropic updates have equivalents in terms of ADT implementations. Each ADT implementation produces different estimates. Anthropic updates such as SIA and SSA (minimum reference class) have equivalents in terms of ADT implementations.

Examples of ADT implementations. Below are a few ADT implementations and descriptions of the proxies they use to compute the DRP. We express them using the components of equation (8). Here is a reminder:

$$DR(w_i) \propto P(w_i)\, NUS(DIWP_i)\,\big(1 + CorrM(DIWP_i)\big) \tag{8}$$

The first three ADT implementations have equivalences in terms of anthropic updates, the last three don’t.

- ADT(no copies, count-weighted)

  - Equivalent to: SSA (minimum reference class) and “non-anthropic” probabilities.

  - Matching normative beliefs: Causal decision theory.

  - Proxy: The DRP of a world is one if we exist in it, zero otherwise. The Normalized Utility Shift of the directly impacted part is constant over all worlds in which we exist, and we can normalize it to 1. The Correlation Multiplicator, CorrM, is a constant equal to 0.

  - Alternate versions could consider the resources we control, ADT(no copies, resource-weighted), or the resources that will be controlled by our descendants, ADT(no copies, descendant resource-weighted). These versions are no longer equivalent to SSA.

- ADT(exact copies, share among IC)

- ADT(exact copies, count-weighted)

  - Equivalent to: SIA. Sometimes called: ADT + Total Utilitarianism.

  - Matching normative beliefs: Evidential decision theories.

  - Proxy: The DRP of a world is proportional to the number of our exact copies in it.

  - Alternate versions could consider the resources we control, or that will be controlled by our descendants. These versions are no longer equivalent to SIA. We call these two alternate versions ADT(exact copies, resource-weighted) and ADT(exact copies, descendant resource-weighted).

- ADT(approximate copies, descendant resource-weighted)

  - Matching normative beliefs: Evidential decision theories.

  - Proxy: The DRP of a world is proportional to the resources controlled by the descendants of all of our approximate copies in a world.

- ADT(SFC Shapers, descendant resource-weighted)

  - Matching normative beliefs: Evidential decision theories.

  - Proxy: The DRP of a world is proportional to the resources controlled by the descendants of all SFC Shapers in a world. This is equivalent to the resources controlled by all SFCs.

- ADT(exact copies, descendant share of resource-weighted)

  - Sometimes called: ADT + Average Utilitarianism.

  - Matching normative beliefs: Evidential decision theories.

  - Proxy: The DRP of a world is proportional to the share of resources controlled by the descendants of all of our exact copies in a world.
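The proxies above can be written as interchangeable functions of a world. This sketch assumes each world is summarized by a few hypothetical quantities (the `WorldSummary` fields and the example numbers are illustrative, not taken from any of the cited works) and returns each implementation's DRP up to a constant; multiplying by the likelihood and rescaling then gives normalized Decision-Relevance weights.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class WorldSummary:
    """Hypothetical per-world quantities used by the proxies (illustrative only)."""
    we_exist: bool
    exact_copies: float                        # number of our exact copies
    descendant_resources_per_copy: float       # resources grabbed by one copy's descendants
    approx_copies_descendant_resources: float  # resources grabbed by descendants of approximate copies
    all_sfc_resources: float                   # resources grabbed by all SFCs


DRPProxy = Callable[[WorldSummary], float]

ADT_IMPLEMENTATIONS: Dict[str, DRPProxy] = {
    "ADT(no copies, count-weighted)":
        lambda w: 1.0 if w.we_exist else 0.0,
    "ADT(no copies, descendant resource-weighted)":
        lambda w: w.descendant_resources_per_copy if w.we_exist else 0.0,
    "ADT(exact copies, count-weighted)":
        lambda w: w.exact_copies,
    "ADT(exact copies, descendant resource-weighted)":
        lambda w: w.exact_copies * w.descendant_resources_per_copy,
    "ADT(exact copies, descendant share of resource-weighted)":
        lambda w: (w.exact_copies * w.descendant_resources_per_copy / w.all_sfc_resources
                   if w.all_sfc_resources > 0 else 0.0),
    "ADT(approximate copies, descendant resource-weighted)":
        lambda w: w.approx_copies_descendant_resources,
    "ADT(SFC Shapers, descendant resource-weighted)":
        lambda w: w.all_sfc_resources,
}


def decision_relevance_weights(priors: List[float], worlds: List[WorldSummary],
                               implementation: str) -> List[float]:
    """Normalized DR weights: likelihood times DRP, rescaled to sum to one."""
    proxy = ADT_IMPLEMENTATIONS[implementation]
    raw = [p * proxy(w) for p, w in zip(priors, worlds)]
    total = sum(raw)
    return [r / total for r in raw] if total > 0 else raw


# Two hypothetical worlds: a sparse one and a dense one.
sparse = WorldSummary(we_exist=True, exact_copies=1.0, descendant_resources_per_copy=0.5,
                      approx_copies_descendant_resources=0.5, all_sfc_resources=0.5)
dense = WorldSummary(we_exist=True, exact_copies=4.0, descendant_resources_per_copy=0.1,
                     approx_copies_descendant_resources=1.0, all_sfc_resources=1.0)
for name in ADT_IMPLEMENTATIONS:
    print(name, decision_relevance_weights([0.5, 0.5], [sparse, dense], name))
```

With equal priors, the same two worlds receive different weights depending only on which proxy is plugged in, which is the sense in which each ADT implementation is a different world-weighting strategy.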

Missing coverage in existing work about SFC density

Which ADT implementations have been used in previous work?

We summarize the anthropic updates applied to existing SFC density distributions in the literature. For each, we give their equivalent ADT implementation. You can also find the definitions for SSA, SIA, and ADT used in different papers in Appendix A.

- SSA (Finnveden 2019, Cook 2022) ⇔ ADT(no copies, count-weighted)

- SSA (Cook 2022) ⇔ ADT(exact copies, share among IC)

- SSA (Cook 2022) ⇔ ADT(exact copies, share among all agents)

- SIA and “ADT + Total Utilitarian” (Finnveden 2019, Olson 2020, Cook 2022) ⇔ ADT(exact copies, count-weighted)

- “ADT + Total Utilitarian” (Cook 2022) ⇔ ADT(exact copies, descendant resource-weighted)[5]

- “ADT + Average Utilitarian” (Cook 2022) ⇔ ADT(exact copies, descendant share of resource-weighted)

ADT implementations not covered in the literature

We reframed existing anthropic updates in terms of world weighting strategies, which we called ADT implementations, each related to one method to compute the Decision-Relevance Potential of worlds. We now show that some appealing ADT implementations have NOT been investigated in existing work on SFC density.

Which ADT implementation should we use? Let’s move from the simplest to the more complex and more meaningful methods for computing the DRP.

- ADT(no copies, count-weighted) - The Decision-Relevance Potential of each world is the same as long as we exist in them. This could be a convincing choice if we believe in CDT and are neartermists.

- ADT(no copies, descendant resource-weighted) - The Decision-Relevance Potential of a world is proportional to the resources our descendants will control. This one could be relevant if we assume CDT and are longtermists.

We will now assume Evidential Decision Theory (EDT), since CDT is, in my humble opinion, either inconsistent with physics[6] or incompatible with copy-and-time-invariant utility functions, which seem necessary for an impartial utility function.

- ADT(exact copies, count-weighted) - The Decision-Relevance Potential of a world (DRP) is proportional to the number of our exact copies in it. This is pretty uncontroversial as long as we assume EDT.

- ADT(exact copies, resource-weighted) - DRP is proportional to the resources grabbed by our exact copies. This extension is likely true if we are scope-sensitive and our utility function is linear with resources.

- ADT(exact copies, descendant resource-weighted) - DRP is proportional to the resources grabbed by the descendants of our exact copies. This is likely true if our descendants are going to control far more resources than we control directly.

- Two alternate versions are also relevant. They replace exact copies with approximate copies or SFC Shapers. These versions make sense only if we think that our correlation with our approximate copies or SFC Shapers dominates the marginal utility calculations. Remember that we defined approximate copies as copies that are just different enough to allow the appearance time of their Intelligent Civilization to be randomly sampled. And we defined SFC Shapers as the group of agents within an Intelligent Civilization (IC) that accounts for most of the influence the IC will have over the Space-Faring Civilization they will create.

From my point of view, the last proxy and its two alternative versions are the most compelling for impartial and scope-sensitive longtermists.

Illustrating Decision-Relevance trends given different ADT implementations. In the following figure, we plot how the Decision-Relevance Potential of groups of worlds (Y-axis; it ignores the likelihood of worlds) changes depending on the density of potential SFCs per reachable universe (X-axis), for four ADT implementations.

Description of trends:

- ADT(no copies, descendant resource-weighted)

  - This ADT implementation ignores the impact of our exact copies (e.g., when assuming CDT) or assumes they don’t exist[7]. The worlds in which our descendants grab the most resources are those in which our SFC is alone. When the density of SFCs reaches around one per reachable universe, the Decision-Relevance Potential of these worlds starts decreasing roughly linearly with the density.

- ADT(exact copies, descendant resource-weighted)

  - When accounting for our exact copies, at low densities we expect their number to be proportional to the density of SFCs, since we have no reason to think their frequency among SFCs changes as a function of SFC density. Above a density of one, this changes. We know that our exact copies, still part of ICs, don’t observe alien SFCs. Because of that, we should expect our frequency among ICs to decrease as the density of SFCs increases. In the end, for densities above one, the share that our exact copies grab decreases because of the Fermi Observation.

- ADT(approximate copies, descendant resource-weighted)

  - The amount of resources grabbed by the descendants of our approximate copies increases until all the resources in the world are grabbed. After that point, it stays constant. This assumes the frequency of our approximate copies among Intelligent Civilizations stays constant when the density of SFCs varies. The definition of approximate copies guarantees this: they are our copies with enough relaxation to allow their appearance time to be randomly sampled, which removes the update on the Fermi Observation.

- ADT(Space-Faring Civilization Shapers, descendant resource-weighted)

  - The trend for this ADT implementation is similar to that of the previous one. The amount of resources grabbed by all SFCs increases until all resources are grabbed, around a density of one. After that, the amount of resources grabbed is constant.
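The qualitative trends above can be reproduced with a deliberately crude toy model. Everything in it is an assumption made for illustration, not a result of the post: SFCs saturate the reachable universe at a density of one, so each SFC grabs a share `min(1, 1/density)`; and the fraction of Intelligent Civilizations that still observe no alien SFC (which gates how many exact copies exist) is also taken to be `min(1, 1/density)`. Values are in arbitrary units.

```python
def toy_drp(density: float) -> dict:
    """Toy Decision-Relevance Potential (arbitrary units) for four ADT implementations.

    `density` is the number of potential SFCs per reachable universe (> 0).
    Both scaling factors below are illustrative assumptions.
    """
    if density <= 0:
        raise ValueError("density must be positive")
    share_per_sfc = min(1.0, 1.0 / density)  # assumed saturation at one SFC per reachable universe
    fermi_factor = min(1.0, 1.0 / density)   # assumed fraction of ICs observing no alien SFCs
    return {
        # Only our own SFC counts: flat while we are alone, then shrinking.
        "no copies": share_per_sfc,
        # Copies scale with density, but above one the Fermi Observation thins them out.
        "exact copies": density * fermi_factor * share_per_sfc,
        # Approximate copies skip the Fermi update: grows, then plateaus.
        "approximate copies": density * share_per_sfc,
        # All SFCs together: total resources grabbed, capped at the whole universe.
        "SFC Shapers": min(density, 1.0),
    }


for d in (0.01, 0.1, 1.0, 10.0, 100.0):
    row = toy_drp(d)
    print(f"{d:>6}: " + ", ".join(f"{k}={v:.3g}" for k, v in row.items()))
```

The two `min(1, 1/density)` factors are the only free assumptions; other decreasing functions would change the exact shapes of the curves.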

ADT(approximate copies, descendant resource-weighted) and ADT(SFC Shapers, descendant resource-weighted) are not covered. We explored more complex ways to compute the Decision-Relevance Potential of worlds. Let’s list those that have NOT been covered in the literature about SFC density:

- ADT(approximate copies, descendant resource-weighted)

- ADT(SFC Shapers, descendant resource-weighted)

An interesting property of ADT(approximate copies, descendant resource-weighted) is that the DR of worlds is not computed given the observation of Humanity’s appearance time but instead given the distribution of appearance times of all SFCs. This leads to different trends in how DR changes as a function of the density of SFCs.

ADT(SFC Shapers, descendant resource-weighted) has the interesting property of highlighting that we should act as if we are among (or correlated with) the SFC Shapers within Humanity, and that, conditional on that, our properties and behaviors are much more constrained than those of a random agent living in an Intelligent Civilization. These constraints can be related to selection pressures and bottlenecks that select the small population of SFC Shapers and forge a plausibly non-astronomically small correlation with other SFC Shapers.

Context

Evaluating the Existence Neutrality Hypothesis - Introductory Series. This post is part of a series introducing a research project for which I am seeking funding: Evaluating the Existence Neutrality Hypothesis. This project includes evaluating both the Civ-Saturation[8] and the Civ-Similarity Hypotheses[9] and their longtermist macrostrategic implications. This introductory series hints at preliminary research results and looks at the tractability of making further progress in evaluating these hypotheses.

Next steps: A first evaluation of the Civ-Saturation Hypothesis[8]. In this post and the next few, we are introducing a first evaluation of the Civ-Saturation hypothesis. In the following post, we will clarify some concepts and metrics behind Civ-Saturation and introduce a simple displacement model that takes an SFC density as input and lets us estimate how many marginal resources are brought by marginal SFCs.

Acknowledgements

Thanks to Tristan Cook and Miles Kodama for having spent some of their personal time providing excellent feedback on this post and ideas. Note that this research was done under my personal name and that this content is not meant to represent any organization's stance.

Appendix: Link

[1] Approximate copies are defined as being just different enough to allow their Intelligent Civilizations (IC = pre-SFC) to have a randomly sampled appearance time instead of having Humanity’s appearance time.

[2] SFC Shapers are defined as the agents dominantly shaping the impact of the SFC their Intelligent Civilization (IC) will create. More specifically, we define SFC Shapers as the minimum group of intelligent agents within an IC whose characteristics are enough to get 80% of the predictive power one would get by using the characteristics of the whole IC when predicting the impact of the SFC it will create. Intuitively, SFC Shapers on Earth may be the top 10 most influential humans in AI (centralized scenario) or the top 10,000 (decentralized scenario).

[3] Relative to the DIWP.

[4] We use linking (as in the 2011 version of Armstrong’s paper) rather than self-confirming linking (as in the 2017 version), since the self-confirming property is only necessary for achieving cooperation in coordination problems. It is not necessary for our “ADT implementations”.

[5] Notice that the world weighting, in the case of ADT, is not consistent across existing works (the 4th and 5th items in the list of previous work). Namely, Finnveden 2019 and Olson 2020 weigh worlds by the number of our perfect copies (similar to SIA), while Cook 2022 weighs worlds by the amount of resources the descendants of our perfect copies will grab. This is not surprising, since the definition of ADT accommodates many implementations, one for each plausible way of computing the Decision-Relevance Potential (DRP) of worlds.

[6] See the formalism in Sequential Extensions of Causal and Evidential Decision Theory for how CDT ignores updates about past states and correlated agents.

[7] Do perfect copies exist? If we are in, or wager on being in, an infinite world, yes. This also holds if the world is finite but large enough and has discrete/quantum initial parameters: perfect copies should then exist in significant numbers. If the world is finite and some of its initial parameters are continuous, perfect copies should not exist. (H.T. Nicolas Macé)

[8] The Civ-Saturation Hypothesis posits that when making decisions, we should assume most of Humanity's Space-Faring Civilization (SFC) resources will eventually be grabbed by SFCs regardless of whether Humanity's SFC exists or not.

[9] The Civ-Similarity Hypothesis posits that the expected utility efficiency of Humanity's future Space-Faring Civilization (SFC) would be similar to that of other SFCs.
