Metascience of the Vesuvius Challenge

post by Maxwell Tabarrok (maxwell-tabarrok) · 2024-03-30T12:02:38.978Z · LW · GW · 2 comments

This is a link post for https://www.maximum-progress.com/p/metascience-of-the-vesuvius-challenge


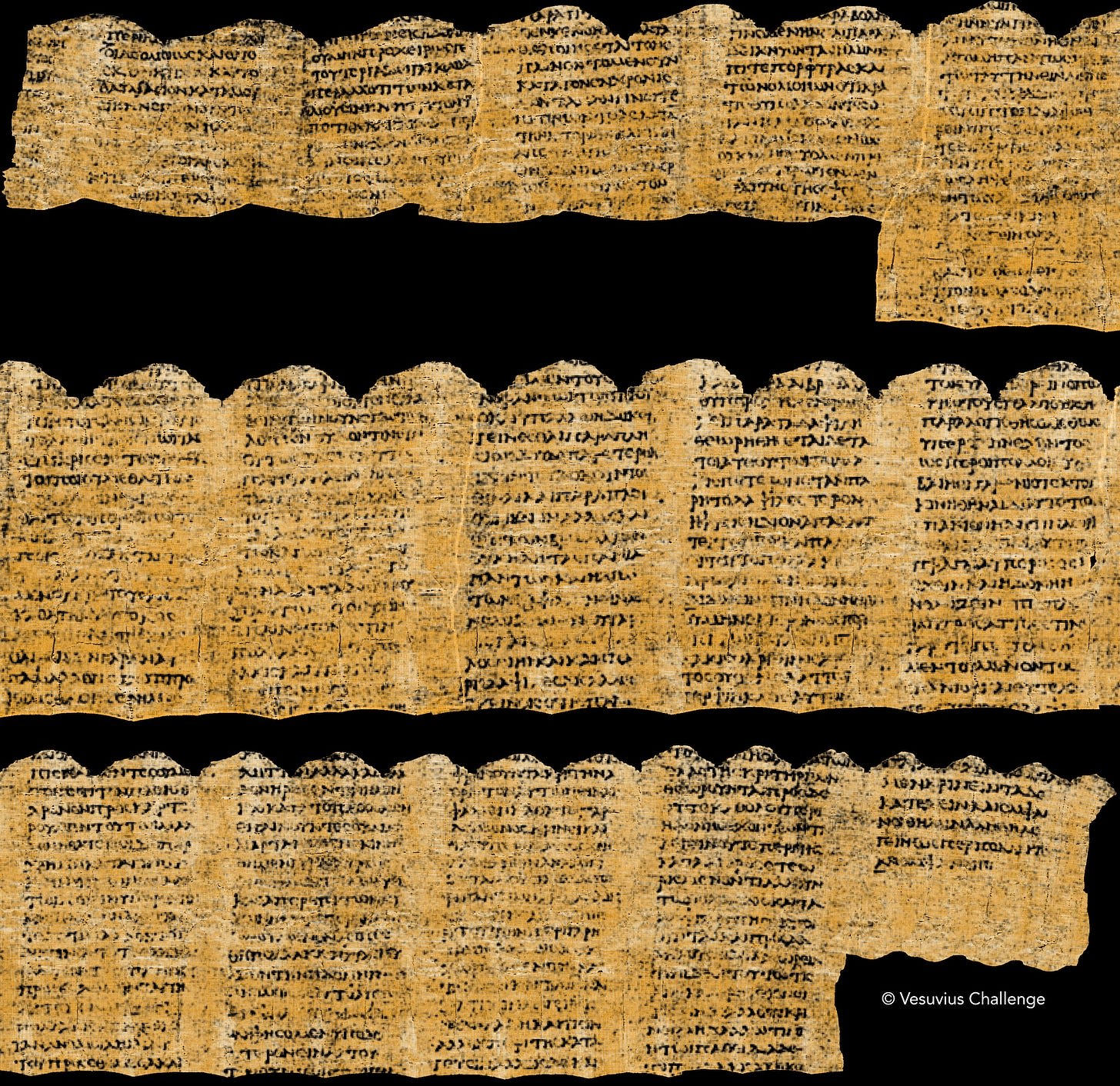

The Vesuvius Challenge is a million+ dollar contest to read 2,000-year-old text from charcoal-papyri using particle accelerators and machine learning. The scrolls come from the ancient villa town of Herculaneum, near Pompeii, which was similarly buried and preserved by the eruption of Mt. Vesuvius. The prize fund comes from tech entrepreneurs and investors Nat Friedman, Daniel Gross, and several other donors.

In the 9 months after the prize was announced, thousands of researchers and students worked on the problem, decades-long technical challenges were solved, and the amount of recovered text increased from one or two splotchy characters to 15 columns of clear text with more than 2,000 characters.

The success of the Vesuvius Challenge validates the motivating insight of metascience: It’s not about how much we spend, it’s about how we spend it.

Most debate over science funding concerns a topline dollar amount. Should we double the budget of the NIH? Do we spend too much on Alzheimer’s and too little on mRNA? Are we winning the R&D spending race with China? All of these questions implicitly assume a constant exchange rate between spending on science and scientific progress.

The Vesuvius Challenge is an illustration of exactly the opposite. The prize pool for this challenge was a little more than a million dollars. Nat Friedman and friends probably spent more on top of that hiring organizers, building the website, etc. But still, this is pretty small in the context of academic grants. A million dollars donated to the NSF or NIH would have been forgotten if it was noticed at all. Even a direct grant to Brent Seales, the computer science professor whose research laid the groundwork for reading the scrolls, probably wouldn’t have induced a tenth as much progress as the prize pool did, at least not within 9 months.

It would have been easy to spend ten times as much on this problem and get ten times less progress out the other end. The money invested in this research was of course necessary, but the spending was not sufficient: it needed to be paired with the right mechanism to work.

The success of the challenge hinged on design choices at a level of detail beyond just a grants vs prizes dichotomy. Collaboration between contestants was essential for the development of the prize-winning software. The Discord server for the challenge was (and is) full of open-sourced tools and discoveries that helped everyone get closer to reading the scrolls. A single, large grand prize is enticing but it’s also exclusive. Only one submission can win, so the competition becomes more zero-sum and keeping secrets is more rewarding. Even if this larger prize had the same expected value to each contestant, it would not have created as much progress, because more research would be duplicated when less is shared.

Nat Friedman and friends addressed this problem by creating several smaller progress prizes that rewarded open-source solutions to specific problems along the path to reading the scrolls, along with open-ended prize pools for useful community contributions. They also added second-place and runner-up prizes. These prizes funded the creation of data-labeling tools that everyone used to train their models and visualizations that helped everyone understand the structure of the scrolls. They also helped fund the contestants’ investments of time and money in their submissions. Luke Farritor, one of the grand prize winners, used winnings from the First Letters prize to buy the computers that trained his prize-winning model. A larger grand prize can theoretically provide the same incentive, but it’s a lot harder to buy computers with expected value!
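To make the expected-value point concrete, here is a minimal sketch. Every number in it (prize sizes, win probabilities) is a made-up assumption for illustration, not a figure from the challenge, and `PrizeScheme`, `expected_value`, and `cash_before_final` are hypothetical names. It compares one big winner-take-all prize with a smaller grand prize plus a mid-contest progress prize, tuned so that a contestant's expected payout is the same under both.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrizeScheme:
    grand_prize: float     # paid once, at the end, to the winning team
    progress_prize: float  # paid mid-contest for an open-source milestone


def expected_value(scheme: PrizeScheme, p_grand: float, p_progress: float) -> float:
    """Expected payout for one contestant, given their chance of winning each prize."""
    return p_grand * scheme.grand_prize + p_progress * scheme.progress_prize


def cash_before_final(scheme: PrizeScheme, won_progress: bool) -> float:
    """Money actually in hand before the grand prize is decided."""
    return scheme.progress_prize if won_progress else 0.0


# Hypothetical numbers, chosen only so that the two expected values match.
single = PrizeScheme(grand_prize=1_000_000, progress_prize=0)
split = PrizeScheme(grand_prize=600_000, progress_prize=40_000)

ev_single = expected_value(single, p_grand=0.01, p_progress=0.0)
ev_split = expected_value(split, p_grand=0.01, p_progress=0.10)

print(ev_single, ev_split)                            # same expected value
print(cash_before_final(single, won_progress=True))   # nothing to spend on hardware
print(cash_before_final(split, won_progress=True))    # real money, mid-contest
```

On paper the two schemes look identical to a contestant, but only the split scheme ever puts spendable money in someone's hands before the final deadline. The sketch deliberately leaves out the sharing effect, which is harder to put a number on.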

Nat and his team also decided to completely switch their funding strategy for a particular part of the pipeline from charcoal-scroll to readable text. This “segmentation” step comes early in the process, and is a labor-intensive data-labeling task which helps an algorithm unwrap a crosswise slice of burnt scroll into a flat piece.

Rather than funding extra prizes for this step, they hired 3 full-time data labelers and open-sourced their outputs. Here’s their rationale:

An alternative was to leave the problem of segmentation to the contestants, or even to award separate prizes for segments, but this had several downsides. First, it’s hard to judge segment quality before knowing what to look for (we didn’t have working ink detection yet). Incentivizing segment quantity would automatically penalize quality. Second, labeling work is tedious and time consuming, and turned out to have a long learning curve, so it’s desirable to guarantee some compensation, which can’t be done with a prize. Third, the feedback loop with prizes can be pretty long.

We were not dogmatically attached to just being referees; we were willing to run out onto the field and kick the ball a little. So we did what we thought would maximize success, and for the critical bottleneck of segmentation, that meant hiring a team.

Setting up a segmentation prize or hiring a team of labelers might cost the same, but the effect it has on progress towards the actual goal of reading the scrolls could be completely different.

The same money spent in different ways can change the per-dollar impact by several orders of magnitude. Noticing this fact and endeavoring to understand which mechanisms work when is the motivation behind metascience. The mechanism design decisions which went into the scroll prize corroborate this central insight.

Other Observations

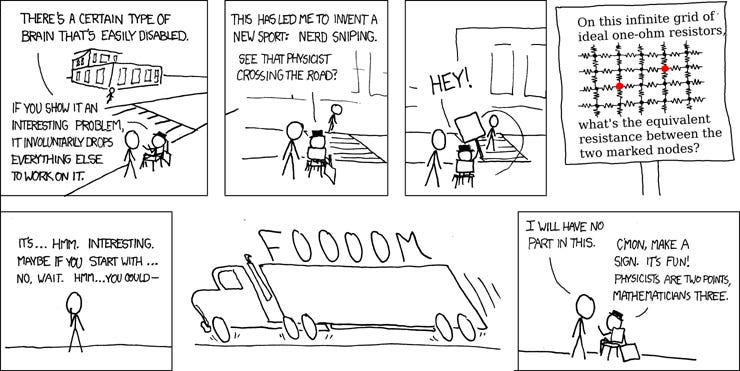

Nerdsniping as metascience

One part of the success of the scroll prize had nothing to do with the prize pool or how it was structured. It was just getting the right kind of people excited about the problem. Nat Friedman’s appearance on Dwarkesh Patel’s podcast both caused and predicted this.

“I think there’s a 50% chance that someone will encounter this opportunity, get the data and get nerd-sniped by it, and we’ll solve it this year,” Friedman said on the show. Farritor thought, “That could be me.”

This information spreading and inspiration is completely abstracted away in formal discussions of science and metascience, but it is extremely important. People often gripe about great physicists or mathematicians going to work on Wall Street but offer no real ideas for how to stop it. Here’s mine: targeted, tactical nerdsniping. Inspiration and interest can be higher leverage than money.

There’s another layer to the nerdsniping strategy. It’s not just about enticing the people who might compete for a prize, it’s also about the people who might fund it. Here’s Nat Friedman on his introduction to this problem.

A couple of years ago, it was the midst of COVID and we were in a lockdown, and like everybody else, I was falling into internet rabbit holes. And I just started reading about the eruption of Mount Vesuvius in Italy …

I read about a professor at the University of Kentucky, Brent Seales, who had been trying to scan these using increasingly advanced imaging techniques, and then use computer vision techniques and machine learning to virtually unroll them without ever opening them…

I thought this was like the coolest thing ever.

This happened serendipitously, but if you want more funding for your project it might be worth investing serious effort in nerdsniping a tech millionaire on the problem. This advice is obvious to Silicon Valley folks but seems underused by academics.

Private Provision of Public Goods

The Vesuvius Challenge is also a good example of a “privileged group” provision of public goods. This is a concept from economist Mancur Olson which defines conditions under which public goods can be provided voluntarily even though some benefits accrue to free riders. For example, imagine a levee for a small town. Everyone in the town is protected from floods by the levee whether they pay or not, so no one wants to pay: a classic free-rider problem. But if there is a large landowner in the town, the protection they get personally might be worth more than the entire cost of the levee, so they build it. Or think about the Patreon supporters of your favorite YouTuber. Even though their videos go out to everyone for free, a few true fans are willing to support them even though lots of benefits accrue to others.
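The levee example can be written down as a simple check. This is a simplified reading of Olson's condition, not a full model: a group counts as privileged here if at least one member's private benefit alone exceeds the whole cost of the good. The function names and all the numbers are hypothetical.

```python
def willing_sole_funders(private_benefit: dict, cost: float) -> list:
    """Members whose own benefit exceeds the full cost, so paying alone is still worth it."""
    return [member for member, benefit in private_benefit.items() if benefit > cost]


def is_privileged(private_benefit: dict, cost: float) -> bool:
    """Simplified Olson condition: at least one member would fund the good single-handedly."""
    return bool(willing_sole_funders(private_benefit, cost))


# Hypothetical town: the levee costs 100, and each of 20 households values it at 10.
LEVEE_COST = 100.0
households = {f"household_{i}": 10.0 for i in range(20)}

# Total benefit (200) exceeds the cost, yet no single household would pay for it alone.
print(sum(households.values()) > LEVEE_COST)   # worth building collectively
print(is_privileged(households, LEVEE_COST))   # but nobody builds it: free-rider problem

# Add one large landowner who values flood protection at 150.
town_with_landowner = {**households, "large_landowner": 150.0}
print(willing_sole_funders(town_with_landowner, LEVEE_COST))
```

The households' valuations never change between the two cases. The only thing that changes the outcome is one member whose private stake is large enough to cover the bill.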

Nat Friedman’s interest in ancient history has made papyrologists and classicists a privileged group. His willingness to pay for reading these scrolls is so high that he’s willing to produce the text even though the information is a public good and he will capture only a tiny fraction of the benefit personally.

“[Nat] says he’s also contemplating buying scanners that can be placed right at the villa and used in parallel to scan tons of scrolls per day. ‘Even if there’s just one dialogue of Aristotle or a beautiful lost Homeric poem or a dispatch from a Roman general about this Jesus Christ guy who’s roaming around,’ he says, ‘all you need is one of those for the whole thing to be more than worth it.’”

The Long Tail

Prizes weren’t the only mechanism that contributed to reading the Herculaneum scrolls. Traditional universities and National Science Foundation funding supported Brent Seales’s research for over two decades.

“The foundation was laid by Dr. Seales and his team. They spent two decades making the first scroll scans, building Volume Cartographer, demonstrating the first success in virtual unwrapping, and proving that Herculaneum ink can be detected in CT.”

This is similar to mRNA vaccines. Advance market commitments and quick internal research at Moderna and Pfizer were the big push that got them produced during COVID-19, but they were preceded by a long tail of NIH research. In both cases this research didn’t seem particularly high-value to reviewers for decades until it had its moment in the sun.

This difficulty in seeing potential before the critical moment suggests that science funding behemoths like the NIH should invest less in filtering and searching for what appear to be high-quality applicants, and pivot to giving out more, smaller, long-term “insurance grants” that allow lots of researchers to keep tinkering even when no one else sees the potential. When one of them hits on something, there are other mechanisms better suited to taking their idea over the finish line. The government’s comparative advantage isn’t in picking winners when the ideas are clear, it’s in having pockets deep enough and timelines long enough to “buy the index” of science and give early glimmers of unclear value a safety net to support tinkering.

2 comments

Comments sorted by top scores.

comment by bhauth · 2024-03-31T00:00:15.995Z · LW(p) · GW(p)

The actual required work by the winning teams was a relatively small amount of software and computation, guided (I think) by a relatively small amount of manual examination. This seems more of a triumph of GPU progress and pretrained vision transformers making difficult problems suddenly easy than a generally applicable system. Given that progress, just releasing the data and letting people work on it without the monetary prize or competition restrictions would've worked too - given the same amount of publicity, at least, but I don't think the argument here is "prizes are good because they can be an efficient way to get media attention".

There's been a significant amount of research on the relative merits of prizes, patents, and research funding, and the general conclusion has been that prizes aren't usually that great.

Replies from: rotatingpaguro

↑ comment by rotatingpaguro · 2024-03-31T06:08:06.983Z · LW(p) · GW(p)

One big prize, or many small prizes like here?