Can Ads be GDPR Compliant?

post by jefftk (jkaufman) · 2023-01-08T02:50:01.486Z · LW · GW · 10 comments

I think the online ads ecosystem is most likely illegal in Europe, and as more decisions come out it will become clear that it can't be reworked to be within the bounds of the GDPR. This is a strong claim, but before I get into backing it up here's some background on me:

- I'm not a lawyer or an expert in privacy regulation; this is something I follow because I'm interested in it.

- I worked in ads until June 2022, but I'm speaking only for myself. I don't expect to go back into the industry.

So, how are sites not compliant?

When you visit a site in Europe, or an international site as a European, you'll typically see a prompt like this:

In this screenshot, El País is asking for permission to use cookies and to use your data to personalize ads.

Why are they asking you? A combination of two regulations:

The ePrivacy Directive (2002), which requires the site to get your consent before using cookies or other storage on your device unless they're strictly necessary to provide a service you requested.

The GDPR (2016), which tightly limits what companies can do with your data without your consent.

The idea is that if you click "accept", they can say they had your consent for all the advertising stuff they do. But I think it's very unlikely that this is compliant with the GDPR.

For example, France's data privacy regulator CNIL recently fined Microsoft €60M (full text) for a similar popup on Bing. I'm going to come back to this decision later because it has other implications, but in paragraph 65 the CNIL ruled that Bing's cookie banner was not collecting valid consent because it took more clicks to refuse cookies than to accept them.

The principle here is that for consent to be valid under the GDPR it needs to be just as easy to give consent as it is to refuse it.

This principle is not widely respected today. For most companies it's much more profitable to put up a not-really-legal banner that heavily pushes users toward saying yes and hope they don't get in trouble. As the data protection agencies continue their enforcement, though, I think this will become less practical.

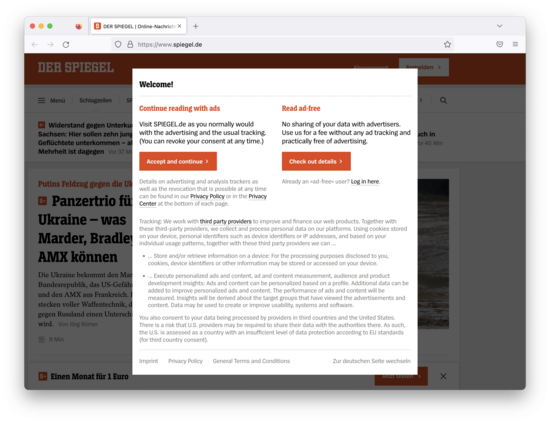

Another approach you see on a few sites is the one that Der Spiegel takes:

They offer a choice between accepting their standard ad stuff or paying to subscribe to the site (more details). I'm glad they're giving users the choice here and I think this should be legal, but I'm pretty sure it isn't right now. The problem is that the user's consent isn't "freely given" in terms of the GDPR's Article 4(11) if they would otherwise have to pay for access.

The third option is to have a cookie banner that is as easy to reject as it is to accept:

When I click "deny" and visit their site, they show a popup saying "Lower quality ads may be displayed." What then gets displayed includes (definitely low quality...) ads from Outbrain, with many network requests to outbrain.com and outbrainimg.com:

The problem is that, per the Schrems II ruling, these ads are also not GDPR-compliant. US companies can be required to share information with the US government, and IP addresses are personal information. The GDPR therefore requires sites to get consent from users before sending any of their information to American companies or their subsidiaries. European courts have applied this ruling to fine sites for using Google Analytics, Google Fonts, and the Akamai CDN. Since Outbrain is an American company based in NYC, this is not compliant.
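To make the Schrems II point concrete, here is a minimal sketch of what avoiding it would require. Before the page tells the browser to fetch anything, the site would filter out resources from US vendors unless the visitor has consented. The two Outbrain domains come from the text. The vendor list, the function names, and the idea of filtering a server-side list of page resources are assumptions for illustration, not how any particular site works.

```python
from urllib.parse import urlparse

# Hypothetical list of US-based vendors; the two Outbrain domains come from
# the text, a real site would need its own, legally reviewed list.
US_VENDOR_DOMAINS = {"outbrain.com", "outbrainimg.com"}


def is_us_vendor(url: str) -> bool:
    """True if the URL's host is a listed domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return any(host == d or host.endswith("." + d) for d in US_VENDOR_DOMAINS)


def allowed_resources(urls: list[str], has_consent: bool) -> list[str]:
    """Drop third-party resources from listed US vendors unless consented.

    Any request to a vendor reveals the visitor's IP address, so the check
    has to happen before the page tells the browser to fetch anything.
    """
    if has_consent:
        return list(urls)
    return [u for u in urls if not is_us_vendor(u)]


page = [
    "https://example.eu/article.css",
    "https://widgets.outbrain.com/outbrain.js",
    "https://images.outbrainimg.com/ad.jpg",
]
print(allowed_resources(page, has_consent=False))
# ['https://example.eu/article.css']
```

With consent denied, nothing from the listed vendors is fetched. In practice that means no ads from those vendors at all.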

Schrems II compliance rules out all commercially available adtech options I know about, and the only fully GDPR-compliant sites I've seen are ones where clicking "reject" means you don't get any ads at all.

As a somewhat speculative aside, I think there's another problem with these consent popups: when you visit the site, they read your cookies. Per the ePrivacy Directive, sites are only allowed to interact with storage on your device to implement functionality "strictly necessary" for a service you requested. If I visit a site and have never seen its consent popup before, I don't see how one could argue that accessing my device's storage to check whether I've already been asked for consent meets that bar. On the other hand, I've never seen anyone make this argument, and consent popups are everywhere, so it's probably too legalistic.
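The aside's point is that even deciding whether to show the popup means reading storage on the visitor's device. The minimal server-side sketch below makes that visible. It assumes a hypothetical consent cookie named `consent_choice`; real consent platforms use their own names and formats.

```python
from http.cookies import SimpleCookie

CONSENT_COOKIE = "consent_choice"  # hypothetical cookie name


def should_show_banner(cookie_header: str) -> bool:
    """Return True if the visitor has no recorded consent decision.

    Answering even this question requires reading what the browser has
    stored for the site, which is the access the aside above questions.
    """
    jar = SimpleCookie()
    jar.load(cookie_header)  # parse the raw Cookie request header
    return CONSENT_COOKIE not in jar


print(should_show_banner(""))                         # True: never asked
print(should_show_banner("consent_choice=rejected"))  # False: already asked
```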

But anyway, let's say you decide to build your own ads system fully in-house, or a very careful European startup comes out with a GDPR-compliant display ads product. What would this look like?

The GDPR requires you to have one of several legal bases for any personal data processing. The best-known basis is "consent", but there are several others. Meta (Facebook) tried to work around this with the "performance of a contract" basis, but was fined €390M. The only basis, other than consent, that might apply is "legitimate interest".

Sites interpret this term in a variety of ways. For example, Spotify claims that it has a legitimate interest in "using advertising to fund the Spotify Service, so that we can offer much of it for free" and so they "use your personal data to tailor advertising to your interests". This is unlikely to satisfy a European court: TikTok announced it would take this approach and then didn't, because it probably wasn't legal. Nearly every site personalizes ads only for users who have (per their dubious popups) consented. These are sites with a strong interest in, and a history of, interpreting the GDPR as loosely as possible.

If you can't personalize ads, however, that doesn't mean you can't show ads. The problem is that personalization isn't the only thing ads use personal data for. Let's talk about fraud.

If I wanted to sell some ad space on my site, there are a lot of things an advertiser might care about. The biggest one, however, is how many users are going to see their ads per dollar. We might agree that they will pay me $1 for every thousand page views ($1 CPM). With a naive implementation, at the end of the month I check my server logs, see that my site served 1M pages, and bill the advertiser $1k.

No serious advertiser would agree to this, however, because it is so vulnerable to fraud. All it takes is standing up a little bot to repeatedly load my articles, and I can bill them for millions of visits when I was only visited by thousands of real users.
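The naive billing deal, and how a bot breaks it, is simple arithmetic. In the sketch below, the 5,000 real page views and 2M bot loads are hypothetical numbers. They were chosen to match "millions of visits" against "thousands of real users".

```python
def cpm_bill(page_views: int, cpm_dollars: float) -> float:
    """Amount owed when the advertiser pays cpm_dollars per 1,000 views."""
    return page_views / 1000 * cpm_dollars


# The naive deal from the text: 1M logged page views at a $1 CPM.
print(cpm_bill(1_000_000, 1.0))  # 1000.0

# Hypothetical fraud: 5,000 real page views plus 2M loads from a bot.
real_views, bot_views = 5_000, 2_000_000
billed = cpm_bill(real_views + bot_views, 1.0)
deserved = cpm_bill(real_views, 1.0)
print(billed, deserved)  # 2005.0 5.0
```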

Instead, advertisers want the protection of fraud detection. This means collecting lots of user data as the ad is shown on the page and processing it with a combination of clever statistics and human analysis to identify and ignore the portion of traffic that doesn't represent real users. This requires catching not only simple bots like search engine spiders, but also sophisticated fraud operations involving rented botnets or giant racks of real phones.
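To show why simple bots are the easy part, here is a deliberately naive filter. It assumes only two hypothetical rules: a User-Agent match against self-declared crawlers and a per-IP view cap. It is not how any real ad fraud system works. It only illustrates what such a filter catches and what slips through. The IP addresses come from documentation ranges.

```python
from collections import Counter

# Hypothetical rules; real systems use far more signals than these two.
DECLARED_CRAWLERS = ("Googlebot", "bingbot")
MAX_VIEWS_PER_IP = 100


def filter_naive(events):
    """Keep (ip, user_agent) page views that pass two naive checks.

    Drops self-declared crawlers and any IP over the view cap. A botnet that
    spreads its views across many IPs with browser-like User-Agents passes.
    """
    per_ip = Counter(ip for ip, _ in events)
    return [
        (ip, ua) for ip, ua in events
        if not any(c in ua for c in DECLARED_CRAWLERS)
        and per_ip[ip] <= MAX_VIEWS_PER_IP
    ]


browser = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
events = (
    [("203.0.113.1", "Mozilla/5.0 (compatible; Googlebot/2.1)")] * 50  # spider
    + [("203.0.113.2", browser)] * 500                                 # noisy bot
    + [(f"198.51.100.{i}", browser) for i in range(200)]               # botnet
    + [("192.0.2.7", browser)] * 3                                     # real reader
)
kept = filter_naive(events)
print(len(events), len(kept))  # 753 203: the whole botnet got through
```

Even this toy filter processes IP addresses, which count as personal data. So it runs into the same legal-basis question as real fraud detection.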

Is it within the legitimate interests of sites to collect user data for ad fraud detection? The ad industry has historically thought so. For example, the IAB's TCFv2, the standard protocol consent popups use to talk to ad networks, categorizes ad fraud detection under "Special Purpose 1", with users having "No right-to-object to processing under legitimate interests". On the other hand, based on points 52 and 53 of the recent Microsoft ruling, I would predict that French regulators would rule against this. Their reasoning would be that users do not visit sites to see ads, so sites cannot claim a legitimate interest in using personal data to try to determine whether their ads are being viewed by real people.

This is not settled; among other things, the Microsoft ruling was primarily considering ePrivacy, which is stricter on some points. But I think it's more likely than not that regulators will eventually clarify this against publishers. The kind of detailed tracking of user behavior needed for effective ad fraud detection will probably turn out not to be within a publisher's legitimate interests.

Giving up on using personal data for ad fraud detection would make online advertising much less profitable, but it might not kill it entirely. I see three ways this could maybe still work:

Performance advertising. Online ads are divided into two main categories: "performance", which is trying to get you to do something now (click through from the fishing site you're visiting and buy this new lure), and "brand", which is trying to influence your future purchases in a much less legible manner (drink more Coke). Brand advertising is where the biggest spending is, but because the purchases aren't tied to clicks it's very dependent on keeping down fraud. With performance advertising the advertiser can measure whether people really are buying things, so it doesn't matter so much how many bots are on your site.
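The sketch below shows why performance deals are less sensitive to bots. It assumes the simplest form of performance pricing, where the publisher is paid per purchase (CPA) rather than per thousand views. All figures are hypothetical.

```python
def payout_cpm(views: int, cpm: float) -> float:
    """Brand-style deal: paid per 1,000 views, bots included."""
    return views / 1000 * cpm


def payout_cpa(purchases: int, price_per_purchase: float) -> float:
    """Performance-style deal: paid per purchase; bots don't buy anything."""
    return purchases * price_per_purchase


# Hypothetical campaign: half the views are bots, and all 50 purchases
# come from real visitors.
real_views, bot_views, purchases = 500_000, 500_000, 50

print(payout_cpm(real_views + bot_views, 1.0))  # 1000.0 paid...
print(payout_cpm(bot_views, 1.0))               # 500.0 of it for bots
print(payout_cpa(purchases, 20.0))              # 1000.0, unchanged by bots
```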

Ratings. The way we used to handle this with TV and radio, back when large-scale tracking was impractical, was with companies like Nielsen estimating how many people a given broadcast reached. Their estimates were based on a mixture of automated and manual surveys that reached only a small fraction of the population. This worked reasonably well historically, when media was highly centralized and there were only a few options. It is a poor fit for the current TV market, let alone the far more fragmented web.
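Panel-based ratings estimate an audience from a sample. The sampling error is what makes them a poor fit for a fragmented market. The minimal sketch below assumes a simple random panel and the normal approximation to the binomial; real ratings methodologies are considerably more involved. The population and panel sizes are hypothetical.

```python
import math


def panel_estimate(panelists_reached: int, panel_size: int, population: int):
    """Estimate audience size from a random panel, with a ~95% margin.

    Uses the normal approximation to the binomial, which is itself poor
    when only a handful of panelists were reached (the niche-site case).
    """
    p = panelists_reached / panel_size
    se = math.sqrt(p * (1 - p) / panel_size)
    return population * p, population * 1.96 * se


POPULATION, PANEL = 100_000_000, 10_000  # hypothetical sizes

for label, reached in [("big broadcast", 2_000), ("niche site", 2)]:
    estimate, margin = panel_estimate(reached, PANEL, POPULATION)
    print(f"{label}: {estimate:,.0f} +/- {margin / estimate:.0%}")
```

The big broadcast comes out at about ±4%, while the niche site's margin is larger than the estimate itself.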

Private Browser APIs. Browsers could offer sites a way to verify that their users aren't bots without any personal data being sent off the device. Trust Tokens are one way this could work, but since most visitors wouldn't have them, I don't think they're enough. Even though this is close to an area I've worked in, I doubt there's a solution here. It's very hard to build something that achieves all of (a) minimal load on the client, (b) sufficiently useful fraud detection, (c) sufficient privacy, and (d) no need for consent under the GDPR or ePrivacy. The last one is especially hard: how could a private browser API not involve storing information on the client?
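The core idea behind token schemes like Trust Tokens is unlinkable issuance. An issuer vouches for a client once (say, after a bot check). Later, a redeemer can verify the voucher without learning which issuance it came from. The sketch below uses textbook RSA blind signatures with a toy key as a stand-in. This is a swapped-in technique; the Trust Tokens proposal uses a different cryptographic construction, and this key is far too small to be secure. Note that the client still has to keep the token between issuance and redemption, which is exactly the storage problem in point (d).

```python
import hashlib
import math
import secrets

# Textbook toy RSA key (p=61, q=53). Far too small for any real use.
N, E, D = 3233, 17, 2753


def h(token: bytes) -> int:
    """Hash a token into the range [0, N)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(m: int) -> tuple[int, int]:
    """Client: pick r coprime to N and blind m as m * r^E mod N."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r


def issuer_sign(blinded: int) -> int:
    """Issuer (e.g. after a bot check): signs without ever seeing m."""
    return pow(blinded, D, N)


def unblind(sig_blinded: int, r: int) -> int:
    """Client: strip the blinding factor, leaving a signature on m."""
    return (sig_blinded * pow(r, -1, N)) % N


def verify(token: bytes, sig: int) -> bool:
    """Redeemer: check the signature; it can't link it to the issuance."""
    return pow(sig, E, N) == h(token)


token = secrets.token_bytes(16)  # kept in client storage until redemption
blinded, r = blind(h(token))
sig = unblind(issuer_sign(blinded), r)
print(verify(token, sig))  # True
```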

At this point, however, we're talking about a model of advertising that is far less able to support most sites than the status quo, and one that is only viable for very large publishers (Facebook, Reddit, NYT), or sites with a strong commercial tie-in (credit card reviews, home improvement, personal finance).

Overall I'm not happy about this conclusion. While I don't enjoy seeing ads, I am glad they exist; as I've written before, I think a world without ad-supported sites would be worse. It's not just me: when users can choose between ad-supported and paid options, the ad-supported one is typically more popular. Regulating the advertising business model to the point where it's no longer practical for most sites does not make users better off.

Ideally we'd see some changes to these regulations that balance the privacy goals of the GDPR against the minimum that ad-supported sites need. Specifically, I'd like to legalize a few things that match how many people already seem to think this works:

Allow ad fraud detection under "legitimate interest", which is the key thing keeping ads from being practical under the GDPR.

Allow Der Spiegel's approach where sites can let users choose between ads with personalization or paying a reasonable price for access, under the principle that this is a real choice.

Slightly relax ePrivacy's "strictly necessary in order to provide an information society service explicitly requested" requirement. In practice, this requirement means that nearly every site shows a cookie banner, even to do completely normal things like keeping an item in your shopping cart for later.

I'm less confident in my proposed solutions than I am about there being a problem, but I do think these three changes strike a good balance between the privacy goals of the GDPR and the financial, ease of use, and competition benefits of users being able to move from site to site without the friction of paywalls.

10 comments

Comments sorted by top scores.

comment by pharadae · 2023-01-08T11:56:10.176Z · LW(p) · GW(p)

There's a lot to unpack here.

First, European Union law like the GDPR works such that the EU cannot directly make laws for every member state; instead, each nation has to transpose the European law into national law. So the Irish implementation of the GDPR is different from the German one. Nations must abide by the general idea behind a European law, but each has its own peculiarities. The German GDPR law is called the DSGVO, and since I'm from Germany, that's where I'm most knowledgeable. So some of my comments might be wrong under the GDPR but completely valid for the DSGVO.

Under the GDPR, every time a service (in this context, the website) requests or uses data sent from the client (the browser), the service owner has to have written down, and abide by, a set of privacy rules that govern

- what data falls under this set of rules,

- how long the data is being processed and stored,

- (if used without consent) whether there is a legitimate interest in using the data for this purpose, and

- that they have considered and ruled out a less privacy-invasive way of processing the data

All of these were more or less required before as well, but under the GDPR the service is also responsible for each and every third-party data processor it uses (e.g. DoubleClick as an ad provider). Suppose the service sends data to a third party, and that party mishandles it or uses it for a different purpose than originally stated. The original service is then responsible, with hefty fines attached.

Having said that - let's get back to your points.

Are services allowed to use data for personalization of content (specifically ads) without consent?

Yes and no. Direct marketing is a legitimate interest according to the GDPR, so you would not need consent. But is there a less privacy-invasive way of processing the data? Yes, there is: serving ads based only on the (unpersonalized) content of the page instead of personalized ones. And there's the right to object to direct marketing, so that has to be handled somehow as well.

This is what Der Spiegel and other news websites have been basing their modus operandi on: Give the user the choice to either consent to personalized ads, or to pay for not seeing ads.
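The sketch below illustrates the non-personalized alternative from the answer above: choosing an ad from the page's own words, with no user data involved. The ad inventory and topic sets are hypothetical. Keyword overlap is only the simplest possible matching rule, not what any real contextual ad system uses.

```python
import re

# Hypothetical ad inventory: each ad lists the topics it targets.
ADS = {
    "fishing-lure": {"fishing", "lure", "bass", "tackle"},
    "running-shoes": {"running", "marathon", "shoes"},
    "generic-soda": set(),  # untargeted fallback
}


def pick_contextual_ad(page_text: str) -> str:
    """Choose the ad whose topics overlap most with the page's words.

    Only the page content is used: no cookies, IP address, or history.
    """
    words = set(re.findall(r"[a-z]+", page_text.lower()))
    best = max(ADS, key=lambda ad: len(ADS[ad] & words))
    return best if ADS[best] & words else "generic-soda"


print(pick_contextual_ad("Five tips for bass fishing with a new lure"))
print(pick_contextual_ad("Local election results"))
```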

Are services allowed to use data for security purposes (specifically fraud detection)?

Yes, they are. They can collect and use pretty much every bit of data they can generate and get from the browser. There is no less privacy-invasive way, because it's an everlasting race between fraudsters and countermeasures.

But the data must be used for this purpose only. It must not be used for ads, personalization, login, marketing, or anything else, or they risk a hefty fine. When Facebook used the phone numbers users had provided for two-factor authentication to send out ads, it was violating the GDPR and will hopefully get a hefty fine for it.
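Purpose limitation can be enforced in code as well as in policy. The minimal sketch below is a hypothetical store that tags each record with the purpose it was collected for. It returns records only when asked for that same purpose. The class name and purpose labels are assumptions for illustration.

```python
class PurposeBoundStore:
    """Hypothetical store that keeps each record tied to its collection purpose."""

    def __init__(self) -> None:
        self._records: list[tuple[str, dict]] = []

    def collect(self, purpose: str, data: dict) -> None:
        """Record data together with the single purpose it was collected for."""
        self._records.append((purpose, data))

    def read(self, purpose: str) -> list[dict]:
        """Return only records collected for exactly this purpose."""
        return [data for p, data in self._records if p == purpose]


store = PurposeBoundStore()
store.collect("fraud_detection", {"ip": "192.0.2.7", "mouse_moves": 42})
store.collect("two_factor_auth", {"phone": "+10000000000"})

print(store.read("ads"))                   # []: nothing was collected for ads
print(len(store.read("fraud_detection")))  # 1
```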

Can websites finance themselves without personalized ads?

Most likely. Non-targeted ads are only around 4% less effective than targeted/personalized ads. That makes sense: if a user is reading an article on topic X, they are already interested in that topic. So an ad aimed at people interested in topic X is already very likely to be effective.

(as said before: websites are still allowed to use any data for security purposes like fraud detection.)

Why are big companies like Microsoft being sued for data usage for fraud detection anyway?

Because they keep trying to push the boundaries of how far they can go, and the courts (and politicians) are using the GDPR to punish them for it.

Most big companies still have no clue what data is being requested, stored for what purpose, distributed to whom, etc. - which was one of the reasons the GDPR initiative was started in the first place.

Take Microsoft as an example: after a brief period of being privacy-conscious, Windows 10 is much more "androidized". It spies on the user, pushes bloatware and ads, installs invasive features without consent, and tries to trick the user into giving consent for more data. It's not possible, for instance, to simply say "I don't want to create a Microsoft account" (which would let Microsoft track the user better). The only option is "I don't want to create a Microsoft account at the moment (we'll ask you again in two weeks)".

I predict that the previous rulings will be thrown out by the higher courts. I also predict that no smaller websites (even ones like Der Spiegel) will be sued for using data for fraud detection, assuming they are not using the data for other purposes.

Are other means of financing websites possible?

Sometimes, yes. The main questions are the availability of competition (scarcity) and the relationship a company has with its users. Spotify, Amazon Music Unlimited, Apple Music, etc. have no problem raising money from users through a subscription model, because a lot of music is simply not available for free without a payment option. Even for free user-uploaded content on sites like YouTube, where users upload a lot of music illegally, the Content ID system is effective (if an artist or their publisher doesn't want their music to be available there).

Other services like Patreon, SubscribeStar, Substack, Locals, etc. show that people are willing to pay creators just for the content they create. This only seems to work sufficiently well for parasocial relationships. Most bigger YouTube creators are effectively businesses with dozens of freelancers or employees, but they focus everything on one person to sustain the parasocial relationship.

Conclusion

Ads can be GDPR-compliant, don't have to be personalized and their fraud detection is a separate legitimate interest.

↑ comment by michaelkleber · 2023-01-08T14:56:58.319Z · LW(p) · GW(p)

The "don't have to be personalized" part of your argument rests on the "4% less revenue" statistic from Marotta et al. (2019), but that seems to be a very bad source. Here's Garrett Johnson's literature review slide: https://twitter.com/garjoh_canuck/status/1318989360407236609.

Of the six studies, five (from academia, industry, and government sources) come to the conclusion that removing personalization would cost websites somewhere between 50% and 70% of their revenue, and one concludes 4%. Moreover, the Marotta paper rests on subtle statistical methods aimed at the problem "We have a lot of observational data but we can't run an A/B experiment, so let's (use augmented inverse probability weighting to) figure out what would happen if running the direct experiment were possible." That's a hard but admirable goal. But in the Google paper they actually did run exactly that A/B experiment, the very same one that Marotta et al. were trying to predict, and they got a 52% revenue loss instead.
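Here is a minimal sketch of the AIPW estimator for readers unfamiliar with it, run on synthetic data where the true effect is known (-0.5). It is not Marotta et al.'s model or data. The data-generating process, the propensity function, and the outcome models are all assumptions. The models are also given as the true functions rather than fitted, which is the best case for the method. The naive difference in means is included to show the confounding that AIPW is meant to correct.

```python
import random


def aipw_ate(rows, propensity, mu0, mu1):
    """AIPW estimate of the average effect E[Y(1) - Y(0)].

    rows: (x, t, y) triples with t in {0, 1}. propensity(x) is P(t=1 | x);
    mu0(x) and mu1(x) predict y without and with treatment. The estimate
    stays consistent if either the propensity or the outcome models are right.
    """
    total = 0.0
    for x, t, y in rows:
        e, m0, m1 = propensity(x), mu0(x), mu1(x)
        total += m1 - m0 + t * (y - m1) / e - (1 - t) * (y - m0) / (1 - e)
    return total / len(rows)


# Hypothetical observational data: treatment (say, non-personalized ads)
# is more likely when x is high, and x also raises the outcome.
rng = random.Random(0)
rows = []
for _ in range(20_000):
    x = rng.random()
    t = 1 if rng.random() < 0.2 + 0.6 * x else 0
    y = 1 + 2 * x - 0.5 * t + rng.gauss(0, 0.1)  # true effect: -0.5
    rows.append((x, t, y))

treated = [y for _, t, y in rows if t == 1]
control = [y for _, t, y in rows if t == 0]
naive = sum(treated) / len(treated) - sum(control) / len(control)

aipw = aipw_ate(rows,
                propensity=lambda x: 0.2 + 0.6 * x,
                mu0=lambda x: 1 + 2 * x,
                mu1=lambda x: 0.5 + 2 * x)
print(f"naive: {naive:.2f}  aipw: {aipw:.2f}")  # naive is biased toward 0
```

If the fitted models are wrong or the data's assumptions fail, the estimate can still be off. Comparing against a real A/B test, as in the Google paper, checks for exactly that.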

There are good reasons to be skeptical of the 50%-70% range — in particular those measurements are all happening in an environment where personalized advertising channels still exist, so they don't get at global equilibrium after the forced behavior changes that would come from personalization going away across the board. From observing what happened to ads prices on iOS in recent years, I'd say it seems very plausible that the stable outcome would be more like 40% instead of 50%-70%.

Motivation&Disclaimer: I'm https://mathstodon.xyz/@Log3overLog2, and I work at Google on the Chrome & Android effort to move to a much more private way to do ads personalization. So I'm deeply vested in this question.

But the 4% number is quite unsupportable given the data at hand. And telling most websites in the EU that they will lose ~half of their revenue is not an appealing prospect.

↑ comment by pharadae · 2023-01-09T07:18:03.417Z · LW(p) · GW(p)

Good points; I'll look into the other studies another time. I remember a German newspaper actually switching completely to non-targeted ads after running its own experiment, but I can't find the source anymore. I'll post it here if I find it again.

Thanks especially for your transparency on your Motivation and Disclaimer.

comment by Dagon · 2023-01-09T04:37:04.635Z · LW(p) · GW(p)

In the US, California, Colorado, and a few other states have passed similar privacy laws. What's super-unclear is just how thoroughly they'll be enforced. A whole lot of GDPR compliance is cargo-cult law: do what others seem to be doing and hope it's enough. For the ad business, it's: appear to do what you think others are doing, hope it's enough, and carry on with mostly business as usual.

There have been a number of fines issued, as you say, and I don't know of any big ones actually paid. There's a lot of arguing and appealing before we really know what's a hard requirement, what's a requirement only if you're in the crosshairs, and what's a pretty flexible guideline that won't matter unless you're both big enough to notice AND egregious enough in violation.

I'd bet on the industry surviving.

comment by ChristianKl · 2023-01-08T12:03:24.878Z · LW(p) · GW(p)

There are two different fraud problems. One problem is about the website selling the ads engaging in fraud while the other is about third parties engaging in fraud.

When it comes to preventing the website selling the ads from engaging in fraud, there are various tools available that involve trusted third parties. There's no need to solve the trust problem by giving the company that buys the ads any access to consumer data.

This could involve third-party audits. For websites that run on a cloud service, it could also involve a trusted module of that cloud service that's outside the website's control.
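One way a trusted module outside the website's control could work is to sign the counts it observes, so the publisher can't alter them afterwards. Below is a minimal sketch using an HMAC from the standard library. The key, report format, and function names are hypothetical. Because HMAC is symmetric, the verifier here is the key-holding auditor acting for the advertiser; a public-key signature would let advertisers check reports themselves. This only addresses deliberate falsification of counts, not bot traffic.

```python
import hashlib
import hmac
import json

# Held by the trusted counting module/auditor, never by the publisher.
# Hypothetical key for illustration only.
AUDITOR_KEY = b"held-by-auditor-not-publisher"


def _tag(counts: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the counts."""
    body = json.dumps(counts, sort_keys=True).encode()
    return hmac.new(AUDITOR_KEY, body, hashlib.sha256).hexdigest()


def signed_report(counts: dict) -> dict:
    """Trusted module: attach a tag to the impression counts it observed."""
    return {"counts": counts, "tag": _tag(counts)}


def report_is_authentic(report: dict) -> bool:
    """Auditor: recompute the tag and compare in constant time."""
    return hmac.compare_digest(_tag(report["counts"]), report["tag"])


report = signed_report({"coke-january": 1_000_000})
tampered = {"counts": {"coke-january": 2_000_000}, "tag": report["tag"]}
print(report_is_authentic(report), report_is_authentic(tampered))  # True False
```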

Suppose Google and co. argue that they are so scummy that no reasonable advertiser will trust them, even if they pay for third-party audits to become more trustworthy. I don't think their European lobbyists will then have much success arguing that advertisers need access to customer data.

Replies from: jkaufman↑ comment by jefftk (jkaufman) · 2023-01-08T12:40:58.868Z · LW(p) · GW(p)

Auditing publisher logs helps with deliberate falsification, but it's easy to have fraud that's plausibly deniable and maybe not even intentional.

Let's say you're a publisher and you want more people to come to your site. You look around and you find someone who says they run a newsletter and would be willing to include links to your stories for a small fee. When you multiply out the cost per visitor this looks like a pretty good deal; you say yes. This traffic turns out to be entirely bots, but you can't tell because we got rid of ad fraud detection.

↑ comment by ChristianKl · 2023-01-08T15:33:03.585Z · LW(p) · GW(p)

There are two different issues here:

- Is the newsletter provider allowed to have ads that request something from the website of the advertiser so that the advertiser essentially gets a list of all people who read the newsletter?

- For those people that click on the link, what is the advertiser to do to investigate their identities?

The first one is about trusting the person who publishes the newsletter.

The second one is about distinguishing bots from non-bots on your own website. Google reCAPTCHA is a technology you can use for that, and Cloudflare seems to do something similar.

Whether Google's reCAPTCHA technology itself violates the GDPR is debatable, and maybe it does, but I could easily see allowing that technology while not allowing 1).

When it comes to privacy and data located in the US, it's worth mentioning that the US could decide to pass laws that protect the data of EU citizens the same way it protects US citizens' data. As long as the US has a legal framework that's abusive to EU citizens, it makes sense that there's a cost to pay for that framework. Tech companies that complain about paying that cost are free to lobby the US government to pass laws that are less abusive to EU citizens.

↑ comment by jefftk (jkaufman) · 2023-01-08T21:57:24.146Z · LW(p) · GW(p)

Sorry, I think your 1 and 2 are responding to a different scenario than I was trying to describe. Which is partly my fault for introducing something confusing in a relatively terse way. Let me take a step back and describe the scenario in more detail:

- You are a publisher: you run a website like cnn.com.

- You negotiate with advertisers for space on your site. Perhaps you make a deal with Coke where, based on the amount of traffic you claim to have, they are going to pay you $10,000 for some placement in the month of January.

- Traffic comes to your website from a range of places: search engines, social media sharing, etc. Some of this is "paid" traffic, in that you have placed ads or otherwise compensated people for sending traffic your way. There is also "organic" traffic, where no money changes hands. You don't directly make money from your visitors. Still, as long as their views of the ads on your articles earn more than it costs you to bring them in, you come out ahead.

In this scenario, you are primarily a publisher, but you are also an advertiser in that you pay for some of your traffic. My newsletter example was describing a kind of paid traffic you might buy, and what you thought you were buying was a simple "they link to my articles in the newsletter, disclosing that they're sponsored".

Today, the incentives in this market are reasonably balanced. If the newsletter operator is shady, they want to inflate their numbers by including some amount of automated traffic. But if they do very much of this, the automated traffic will trigger ad fraud detection run on behalf of your advertisers (Coke, etc.). As a publisher, you really want to avoid this: it's very painful to get dropped by networks or lose direct-deal customers over fraud, even if it was only negligence on your part and not intentional abuse.

In a world where there's no fraud detection system protecting the ultimate advertiser (Coke), however, this feedback loop is broken. The newsletter can get bolder and bolder with its inclusion of automated traffic, and Coke doesn't know whether it's buying real traffic or not.

Running reCAPTCHA or other bot detection technology on your website is what I'm talking about here. When writing the post, I thought this was more likely than not prohibited by the GDPR. (Though in response to Kleber's comment on Mastodon I've updated downward somewhat.)
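The incentive argument above can be put in numbers. In the sketch below, the prices per thousand visitors are hypothetical. It also assumes that, with fraud detection, bot views simply earn the publisher nothing.

```python
def publisher_margin(visitors: int, bot_share: float, cost_per_mille: float,
                     revenue_per_mille: float, fraud_detection: bool) -> float:
    """Publisher's profit on a batch of bought traffic.

    With fraud detection on the advertiser's side, bot views earn nothing;
    without it, every view is paid for regardless of who (or what) it is.
    """
    paid_share = (1 - bot_share) if fraud_detection else 1.0
    return visitors / 1000 * (paid_share * revenue_per_mille - cost_per_mille)


# Hypothetical deal: the newsletter sends 100,000 visitors at $2 per 1,000,
# and the publisher's ads earn $5 per 1,000 visitors from Coke.
for bot_share in (0.0, 0.5, 0.9):
    with_fd = publisher_margin(100_000, bot_share, 2, 5, fraud_detection=True)
    without_fd = publisher_margin(100_000, bot_share, 2, 5, fraud_detection=False)
    print(f"bots {bot_share:.0%}: with detection {with_fd:+.0f}, "
          f"without {without_fd:+.0f}")
```

Without detection, the publisher earns the same $300 whatever the bot share, so nothing pushes it away from a shady newsletter. With detection, a mostly-bot source turns into a loss.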

↑ comment by pharadae · 2023-01-09T07:36:52.402Z · LW(p) · GW(p)

The problem of inflated ads is currently very real for bigger players who rely on paid traffic. I've worked with a company that bought large quantities of it. They employed several people just to check fraud rates and negotiate them with the ad publishers each month. Performance under whichever pricing method was chosen (CPM, CPC, CPL, or CPA) varied vastly between ad publishers, in ways that didn't make sense.

So there definitely was fraud involved, but it was extremely hard (and expensive) to weed fraudulent advertisers out.

Your email newsletter scenario is a special case. It's virtually impossible to include any form of client-run code in an email to check for fraud, so you can only start fraud detection after the traffic hits your website.
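The sketch below roughly approximates the monthly checks described above by comparing suppliers against each other. It assumes a hypothetical heuristic: flag any supplier whose click-to-conversion rate is below a quarter of the median. The supplier names, numbers, and threshold are made up, and the comment doesn't describe the company's actual process.

```python
from statistics import median


def flag_suppliers(stats: dict, ratio: float = 0.25) -> list[str]:
    """Flag traffic suppliers whose conversion rate is far below the median.

    stats maps supplier -> (clicks, conversions). A supplier converting at
    less than `ratio` times the median rate is a candidate for fraud review.
    """
    rates = {s: conv / clicks for s, (clicks, conv) in stats.items()}
    typical = median(rates.values())
    return sorted(s for s, rate in rates.items() if rate < ratio * typical)


stats = {
    "net-a": (10_000, 200),
    "net-b": (12_000, 230),
    "net-c": (50_000, 40),   # lots of clicks, almost no conversions
    "net-d": (8_000, 150),
}
print(flag_suppliers(stats))  # ['net-c']
```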

↑ comment by ChristianKl · 2023-01-08T22:51:18.718Z · LW(p) · GW(p)

I think it is questionable whether it's good for the ecosystem when CNN pays third parties for paid traffic. I would prefer it if CNN focused on publishing articles that people actually want to read, so that CNN doesn't need to rely on paid traffic.