LIMA: Less Is More for Alignment

post by Ulisse Mini (ulisse-mini) · 2023-05-30T17:10:31.628Z · LW · GW · 6 comments

This is a link post for https://arxiv.org/abs/2305.11206

Contents

Abstract Implications Data Quality & Capabilities Perplexity != Quality 6 comments

Abstract

Large language models are trained in two stages: (1) unsupervised pretraining from raw text, to learn general-purpose representations, and (2) large scale instruction tuning and reinforcement learning, to better align to end tasks and user preferences. We measure the relative importance of these two stages by training LIMA, a 65B parameter LLaMa language model fine-tuned with the standard supervised loss on only 1,000 carefully curated prompts and responses, without any reinforcement learning or human preference modeling. LIMA demonstrates remarkably strong performance, learning to follow specific response formats from only a handful of examples in the training data, including complex queries that range from planning trip itineraries to speculating about alternate history. Moreover, the model tends to generalize well to unseen tasks that did not appear in the training data. In a controlled human study, responses from LIMA are either equivalent or strictly preferred to GPT-4 in 43% of cases; this statistic is as high as 58% when compared to Bard and 65% versus DaVinci003, which was trained with human feedback. Taken together, these results strongly suggest that almost all knowledge in large language models is learned during pretraining, and only limited instruction tuning data is necessary to teach models to produce high quality output.
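Note that the headline numbers count ties as well as wins. A minimal sketch of that statistic, assuming each pairwise comparison is recorded as one of three labels; the label names and the function `win_or_tie_rate` are hypothetical, not taken from the paper:

```python
from collections import Counter

# Hypothetical label set for one pairwise human judgment, from the
# point of view of the model being evaluated (e.g. LIMA vs. a baseline).
VALID_LABELS = {"win", "tie", "loss"}


def win_or_tie_rate(labels):
    """Fraction of comparisons where the model was equivalent or strictly preferred."""
    counts = Counter(labels)
    unknown = set(counts) - VALID_LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one comparison")
    return (counts["win"] + counts["tie"]) / total


# Made-up judgments for illustration only (not data from the paper).
toy_labels = ["win"] * 2 + ["tie"] * 1 + ["loss"] * 7
toy_rate = win_or_tie_rate(toy_labels)
```

Under this definition a model can reach a sizeable rate while strictly winning only a small share of comparisons, so the tie fraction is worth reporting separately.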

Implications

Data Quality & Capabilities

Along with TinyStories [AF · GW] and QLoRA, this makes me increasingly convinced that data quality is all you need. That definitely seems to be the case for finetuning, and it may be the case for base-model training as well. Could a higher-quality corpus yield better scaling laws?

Also, for those who haven't updated: it seems very likely that GPT-4 equivalents will be essentially free to self-host and tune within a year. Plan for this!

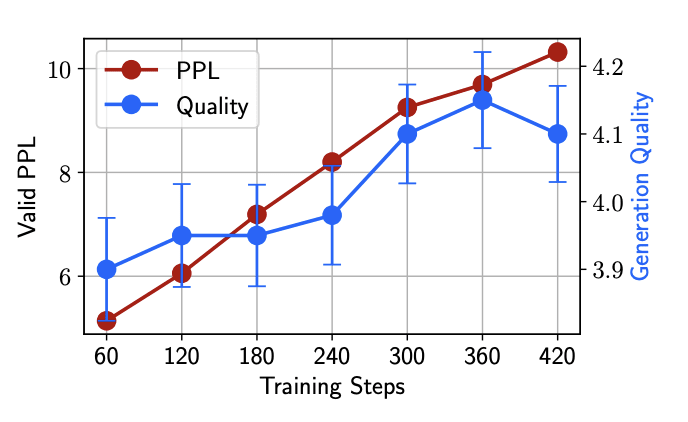

Perplexity != Quality

When fine-tuning LIMA, we observe that perplexity on held-out Stack Exchange data (2,000 examples) negatively correlates with the model’s ability to produce quality responses. To quantify this manual observation, we evaluate model generations using ChatGPT, following the methodology described in Section 5. Figure 9 shows that as perplexity rises with more training steps – which is typically a negative sign that the model is overfitting – so does the quality of generations increase. Lacking an intrinsic evaluation method, we thus resort to manual checkpoint selection using a small 50-example validation set.

Because of this, the authors manually select checkpoints between the 5th and 10th epochs (out of 15) using the held-out 50-example development set.
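A minimal sketch of the two ideas in this section, not the authors' code. It assumes perplexity is computed as the exponential of the mean per-token negative log-likelihood, and that each checkpoint has some dev-set quality score (how that score is produced is left open). The function names and all numbers are hypothetical.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood), using natural-log token probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


def select_checkpoint(dev_scores, first_epoch=5, last_epoch=10):
    """Pick the epoch in [first_epoch, last_epoch] with the highest dev-set quality score.

    dev_scores maps epoch number -> quality score on the small dev set.
    Perplexity is deliberately not used for selection, since the paper
    reports that it moves in the wrong direction relative to quality.
    """
    candidates = {
        epoch: score
        for epoch, score in dev_scores.items()
        if first_epoch <= epoch <= last_epoch
    }
    if not candidates:
        raise ValueError("no checkpoints in the requested epoch range")
    return max(candidates, key=candidates.get)


# Made-up scores for illustration only (not results from the paper).
toy_dev_scores = {4: 0.90, 5: 0.50, 7: 0.80, 10: 0.60, 12: 0.95}
best_epoch = select_checkpoint(toy_dev_scores)  # epochs 4 and 12 are out of range
```

The point of the sketch is the selection rule: the checkpoint is chosen by judged quality within a fixed epoch window, so a checkpoint with worse held-out perplexity can still be the one that is kept.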

6 comments

Comments sorted by top scores.

comment by Cleo Nardo (strawberry calm) · 2023-06-08T00:22:50.147Z · LW(p) · GW(p)

In a controlled human study, responses from LIMA are either equivalent or strictly preferred to GPT-4 in 43% of cases;

I'm not sure how well this metric tracks what people care about: performance on particular downstream tasks (e.g. passing a law exam, writing bugless code, automating alignment research, etc.).

comment by Raemon · 2023-05-30T17:37:55.269Z · LW(p) · GW(p)

The abstract feels overly long.

IMO, Abstracts should be either Actually Short™, or broken into paragraphs [LW · GW]

Replies from: ulisse-mini

↑ comment by Ulisse Mini (ulisse-mini) · 2023-05-30T17:41:51.756Z · LW(p) · GW(p)

Copied it from the paper. I could break it down into several paragraphs but I figured bolding the important bits was easier. Might break up abstracts in future linkposts.

Replies from: lahwran

↑ comment by the gears to ascension (lahwran) · 2023-05-30T18:14:03.877Z · LW(p) · GW(p)

the bold font on lesswrong is too small of a difference vs normal text for this to work well, IMO. (I wish it were a stronger bolding so it was actually useful for communicating to others.) just messing around with one suggested way to do this from SO:

↑ comment by Ulisse Mini (ulisse-mini) · 2023-05-30T19:01:22.488Z · LW(p) · GW(p)

Added italics. For the next post I'll break up the abstract into smaller paragraphs and/or make a TL;DR.

Replies from: lahwran

↑ comment by the gears to ascension (lahwran) · 2023-05-30T19:11:27.762Z · LW(p) · GW(p)

yeah I don't like that either, I find italics harder not easier to read in this font, sorry to disappoint, heh.