TinyStories: Small Language Models That Still Speak Coherent English

post by Ulisse Mini (ulisse-mini) · 2023-05-28T22:23:30.560Z · LW · GW · 8 commentsThis is a link post for https://arxiv.org/abs/2305.07759

Contents

Abstract Implications Interpretability Capabilities None 10 comments

Abstract

Language models (LMs) are powerful tools for natural language processing, but they often struggle to produce coherent and fluent text when they are small. Models with around 125M parameters such as GPT-Neo (small) or GPT-2 (small) can rarely generate coherent and consistent English text beyond a few words even after extensive training. This raises the question of whether the emergence of the ability to produce coherent English text only occurs at larger scales (with hundreds of millions of parameters or more) and complex architectures (with many layers of global attention). In this work, we introduce TinyStories, a synthetic dataset of short stories that only contain words that a typical 3 to 4-year-olds usually understand, generated by GPT-3.5 and GPT-4. We show that TinyStories can be used to train and evaluate LMs that are much smaller than the state-of-the-art models (below 10 million total parameters), or have much simpler architectures (with only one transformer block), yet still produce fluent and consistent stories with several paragraphs that are diverse and have almost perfect grammar, and demonstrate reasoning capabilities. We also introduce a new paradigm for the evaluation of language models: We suggest a framework which uses GPT-4 to grade the content generated by these models as if those were stories written by students and graded by a (human) teacher. This new paradigm overcomes the flaws of standard benchmarks which often requires the model's output to be very structures, and moreover provides a multidimensional score for the model, providing scores for different capabilities such as grammar, creativity and consistency. We hope that TinyStories can facilitate the development, analysis and research of LMs, especially for low-resource or specialized domains, and shed light on the emergence of language capabilities in LMs.

Implications

Interpretability

One part that isn't mentioned in the abstract but is interesting:

We show that the trained SLMs appear to be substantially more interpretable than larger ones. When models have a small number of neurons and/or a small number of layers, we observe that both attention heads and MLP neurons have a meaningful function: Attention heads produce very clear attention patterns, with a clear separation between local and semantic heads, and MLP neurons typically activated on tokens that have a clear common role in the sentence. We visualize and analyze the attention and activation maps of the models, and show how they relate to the generation process and the story content.

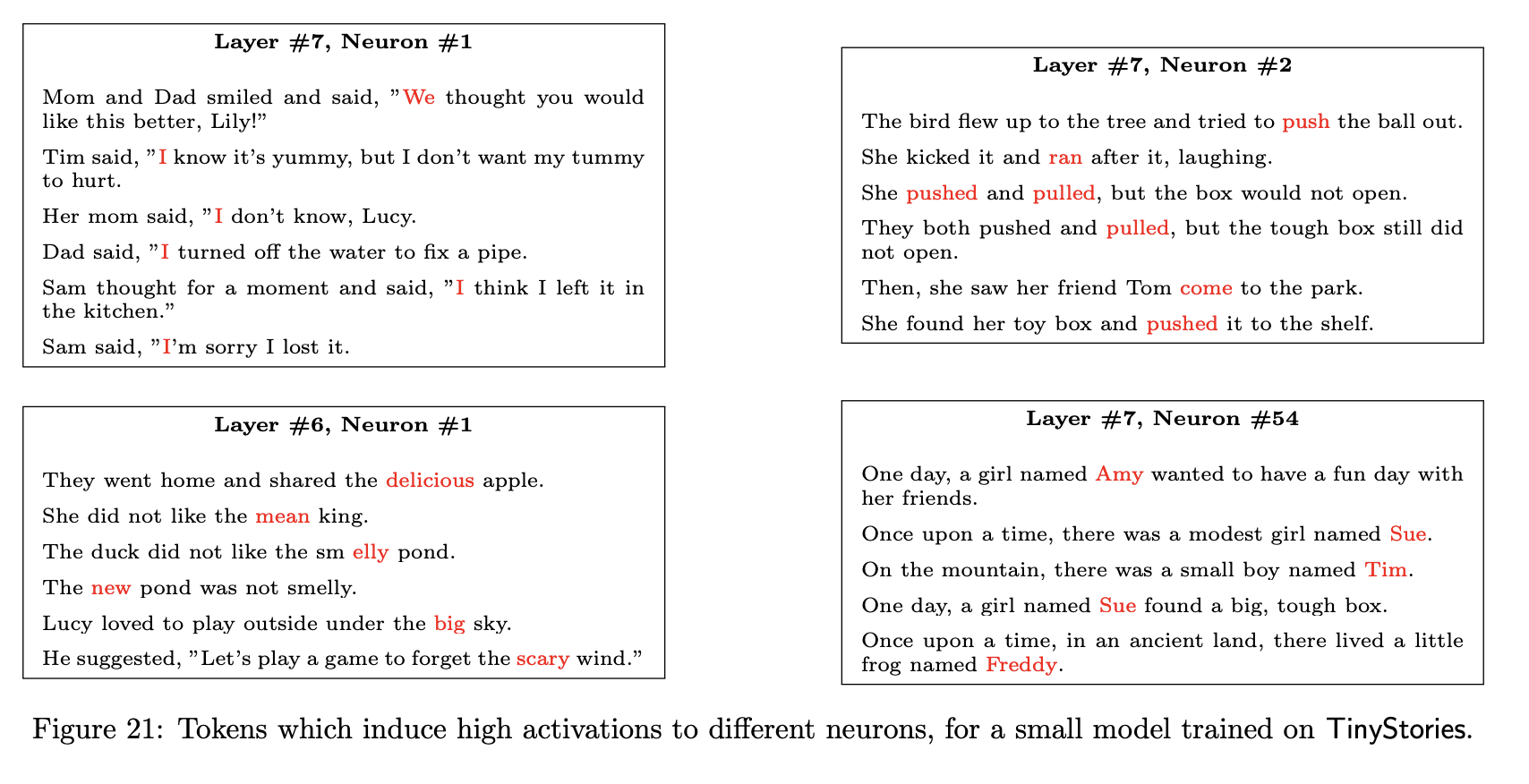

The difference between highly activating tokens for a neuron is striking, here's the tiny model:

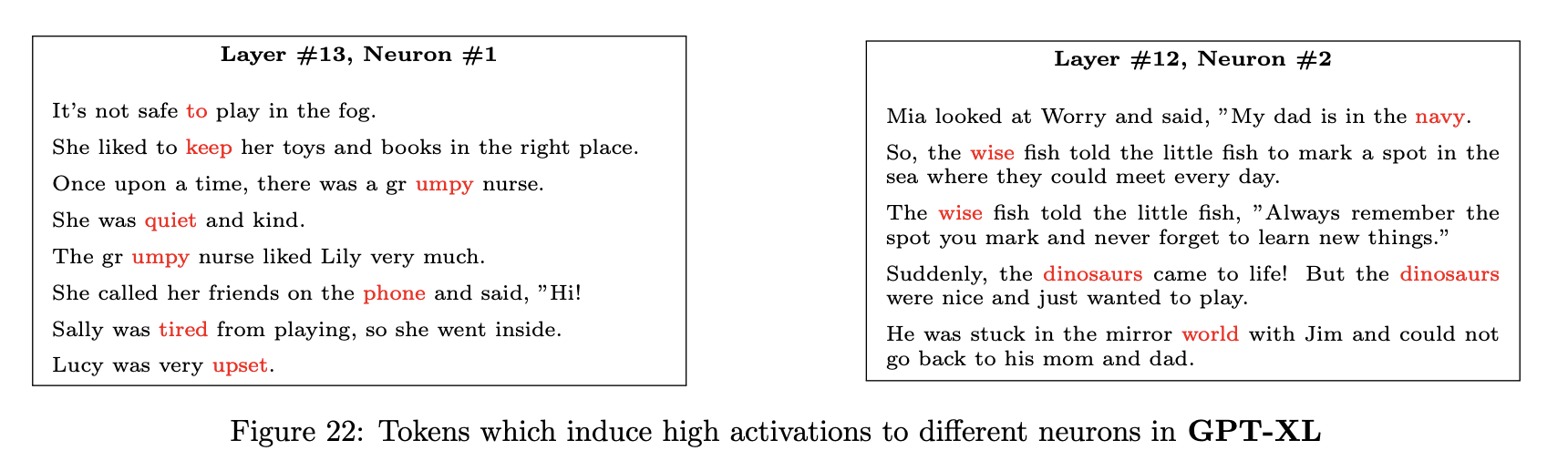

...and here's GPT2-XL:

Capabilities

Again from the introduction (emphasis mine)

However, it is currently not clear whether the inability of SLMs to produce coherent text is a result of the intrinsic complexity of natural language, or of the excessive breadth and diversity of the corpora used for training. When we train a model on Wikipedia, for example, we are not only teaching it how to speak English, but also how to encode and retrieve an immense amount of facts and concepts from various domains and disciplines. Could it be that SLMs are overwhelmed by the amount and variety of information they have to process and store, and that this hinders their ability to learn the core mechanisms and principles of language? This raises the question of whether we can design a dataset that preserves the essential elements of natural language, such as grammar, vocabulary, facts, and reasoning, but that is much smaller and more refined in terms of its breadth and diversity.

If this is true, there could be ways to drastically cut LLM training costs while maintaining (or increasing) the capabilities of the final model.

This could be related to dataset quality. QLoRA found (among other things) that a high-quality dataset of 9000 examples (OpenAssistant) beat a 1M dataset of lower quality.

8 comments

Comments sorted by top scores.

comment by RogerDearnaley (roger-d-1) · 2023-05-30T01:36:21.396Z · LW(p) · GW(p)

I've been thinking for a while that one could do syllabus learning for LLMs. It's fairly easy to classify text by reading age. So start training the LLM on only text with a low reading age, and then increase the ceiling on reading age until it's training on the full distribution of text. (https://arxiv.org/pdf/2108.02170.pdf experimented with curriculum learning in early LLMs, with little effect, but oddly didn't test reading age.)

To avoid distorting the final training distribution by much, you would need to be able to raise the reading age limit fairly fast, so by the time it's reached maximum you're only used up say ten percent of the text with low reading ages, so then in the final training distribution those're only say ten percent underrepresented. So the LLM is still capable of generating children's stories if needed (just slightly less likely to do so randomly).

The hope is that this would improve quality faster early in the training run, to sooner get the LLM to a level where it can extract more benefit from even the more difficult texts, so hopefully reach a slightly higher final quality from the same amount of training data and compute. Otherwise for those really difficult texts that happen to be used early on in the training run, the LLM presumably gets less value from them than if they'd been later in the training. I'd expect any resulting improvement to be fairly small, but then this isn't very hard to do.

A more challenging approach would be to do the early training on low-reading-age material in a smaller LLM, potentially saving compute, and then do something like add more layers near the middle, or distill the behavior of the small LLM into a larger one, before continuing the training. Here the aim would be to also save some compute during the early parts of the training run. Potential issues would be if the distillation process or loss of quality from adding new randomly-initialized layers ended up costing more compute/quality than we'd saved/gained.

[In general, the Bitter Lesson [AF · GW] suggests that sadly the time and engineering effort spent on these sorts of small tweaks might be better spent on just scaling up more.]

comment by MSRayne · 2023-05-29T13:59:05.637Z · LW(p) · GW(p)

So basically... LMs have to learn language in the exact same way human children do: start by grasping the essentials and then work upward to complex meanings and information structures.

Replies from: martin-fell↑ comment by Martin Fell (martin-fell) · 2023-05-29T15:25:35.570Z · LW(p) · GW(p)

Has any tried training LLMs with some kind of "curriculum" like this? With a simple dataset that starts with basic grammar and simple concepts (like TinyStories), and gradually moves onto move advanced/abstract concepts, building on what's been provided so far? I wonder if that could also lead to more interpretable models?

Replies from: MSRayne↑ comment by MSRayne · 2023-05-30T11:33:13.496Z · LW(p) · GW(p)

This is my thought exactly. I would try it, but I am poor and don't even have a GPU lol. This is something I'd love to see tested.

Replies from: martin-fell↑ comment by Martin Fell (martin-fell) · 2023-05-30T22:51:09.327Z · LW(p) · GW(p)

Hah yeah I'm not exactly loaded either, it's pretty much all colab notebooks for me (but you can get access to free GPUs through colab, in case you don't know).

Replies from: MSRayne↑ comment by MSRayne · 2023-05-31T19:02:17.572Z · LW(p) · GW(p)

I don't know anything about colab, other than that the colab notebooks I've found online take a ridiculously long time to load, often have mysterious errors, and annoy the hell out of me. I don't know enough AI-related coding stuff to use it on my own. I just want something plug and play, which is why I mainly rely on KoboldAI, Open Assistant, etc.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-05-29T17:20:52.755Z · LW(p) · GW(p)

I think this offers an interesting possibility for another way to safely allow users to get benefit from a strong AI that a company wishes to keep private. The user can submit a design specification for a desired task, and the company with a strong AI can use the strong AI to create a custom dataset and train a smaller simpler narrower model. The end user then gets full access to the code and weights of the resulting small model, after the company has done some safety verification on the custom dataset and small model. I think this is actually potentially safer than allowing customers direct API access to the strong model, if the strong model is quite strong and not well aligned. It's a relatively bounded, supervisable task.

Replies from: roger-d-1↑ comment by RogerDearnaley (roger-d-1) · 2023-05-30T01:42:17.509Z · LW(p) · GW(p)

Existing large tech companies are using approaches like this, training or fine-tuning small models on data generated by large ones.

For example, it's helpful for the cold start problem, where you don't yet have user input to train/fine-tune your small model on because the product the model is intended for hasn't been launched yet: have a large model create some simulated user input, train the small model on that, launch a beta test, and then retrain your small model with real user input as soon as you have some.