Noisy environments regulate utility maximizers

post by Niclas Kupper (niclas-kupper) · 2022-06-05T18:48:43.083Z


This post was written as part of the AISC under the supervision of John Wentworth. I started my PhD simultaneously with this project and admit that I underestimated the necessary effort to excel at both. I still had a great time, learned a lot and met a bunch of interesting people. I never hit upon the "one cool thing", but I hope this post still has some useful takeaways.

I would like to thank John Wentworth for his supervision and Lucius Bushnaq for our brainstorming sessions. I would also like to thank the AISC team for organizing the event. Finally, I'd like to thank Lauro Langosco for recommending AISC to me.

Consider some agent maximizing some utility. We usually model this agent to be in some environment. It can be reasonable to assume that the environment we find ourselves in is noisy. There are two (mostly) equivalent ways this can happen: either our observations contain some noise, or our actions are performed noisily.

Even if the environment is not noisy we might still gain some useful properties from training an agent with (some kinds of) noise. There is mild evidence suggesting it helps with robustness, see e.g. OpenAI's robot hand or this paper. I want to investigate a particular formalism of how to treat noise. From this formalism I hope to gain:

- A definition / understanding of noise resistance

- Protection against distributional shifts

- Better generalization

- Other theoretical insights

How I got here

The project started with the hope of expanding the idea of Utility Maximization as compression. This is related to the Telephone Theorem and the Generalized Heat Engine. While exploring these ideas I played around with various models, and particularly focused on ones where compression works nicely. Unsurprisingly, boolean functions were particularly insightful, as they are both easy to do explicit calculations with and relate more easily to compression. This led me to the theory of boolean functions and noise sensitivity, where I viewed the boolean function as a utility function, although many interpretations are possible / useful.

Formal setup

Consider some finite space $\Omega = \{-1, 1\}^n$. We consider two classes of functions:

1. Boolean functions, which take bits as input and return a bit as output, i.e. $u: \Omega \to \{-1, 1\}$.

2. Generalized boolean functions, which instead return a real number, i.e. $u: \Omega \to \mathbb{R}$.

In the rest of the post I will not always specify the type of boolean function; it should hopefully be clear from context. Either way, we can think of $\Omega$ as the space of actions we can take, with the bits representing sub-actions of a single action or multiple sub-agents voting for different actions.

Let us start by considering the following maximization problem:

$$\max_{x \in \{-1, 1\}^n} \mathbb{E}\left[u(x^\epsilon)\right]$$

where $x^\epsilon$ is the random variable obtained from $x$ by independently resampling each bit of $x$ with probability $\epsilon$, i.e. we expect the environment to augment our chosen $x$ with a bit of noise. We could choose a different amount of noise for every bit and the following theory would still hold, but it would be more tedious to write out.
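To make the noisy objective concrete, here is a minimal brute-force sketch. It computes the expected utility after each bit of a chosen action is independently resampled with probability `eps`, and then searches over all actions. The function names and `toy_utility` are my own illustrative assumptions, not part of the original setup, and the enumeration is only feasible for a handful of bits.

```python
from itertools import product

def noisy_expected_utility(u, x, eps):
    """Exact expected utility of x after each bit is resampled with probability eps."""
    flip = eps / 2  # a resampled bit lands on the opposite value half the time
    total = 0.0
    for y in product((-1, 1), repeat=len(x)):
        prob = 1.0
        for xi, yi in zip(x, y):
            prob *= flip if xi != yi else 1 - flip
        total += prob * u(y)
    return total

def toy_utility(x):
    # Hypothetical utility (an assumption for illustration): reward 3 only when
    # every bit is on, minus a cost of 0.5 for each bit that is on.
    on = sum(1 for bit in x if bit == 1)
    return (3.0 if on == len(x) else 0.0) - 0.5 * on

def best_action(u, n, eps):
    """Brute-force maximizer of the noisy objective over all 2^n actions."""
    return max(product((-1, 1), repeat=n),
               key=lambda x: noisy_expected_utility(u, x, eps))

print(best_action(toy_utility, 4, 0.0))
print(best_action(toy_utility, 4, 0.6))
```

In this toy example the noise-free optimum switches every bit on, while under heavy noise the fragile all-or-nothing reward is no longer worth paying for and the optimum moves to switching everything off.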

Some questions that now naturally arise might be:

- Can we naturally represent $\mathbb{E}[u(x^\epsilon)]$?

- How much does the optimal $x$ change?

- How good is the noise-free optimal $x$ for $\mathbb{E}[u(x^\epsilon)]$?

It is natural to think of $\mathbb{E}[u(x^\epsilon)]$ as a smoothed version of $u$. Unless the function is constant, the maximum will be suppressed and the minimum will be increased.

To help guide our thinking about the above questions we introduce the following definitions: noise sensitivity and noise stability. They won't perfectly map onto the problem in the way that I have set it up, but they will still be conceptually useful. As we will see later, these definitions also extend naturally. In the following definitions $x$ is drawn from the uniform distribution over all bit configurations, and $x^\epsilon$ is $x$ with each bit resampled with probability $\epsilon$.

We call a family $(u_n)$ of boolean functions noise sensitive if

$$\lim_{n \to \infty} \mathrm{Cov}\left(u_n(x), u_n(x^\epsilon)\right) = 0$$

for all $\epsilon > 0$. In other words it is noise sensitive if the random variables $u_n(x)$ and $u_n(x^\epsilon)$ totally decorrelate.

On the other hand, we call a sequence $(u_n)$ noise stable if

$$\lim_{\epsilon \to 0} \sup_n \mathbb{P}\left(u_n(x) \neq u_n(x^\epsilon)\right) = 0.$$

This intuitively means that the random perturbation has no effect on the function in the limit of small noise.

You can check that a sequence is both noise sensitive and stable if and only if the $u_n$'s become almost surely deterministic in the limit.

Examples

Examples of noise stable function classes are majority, which evaluates to 1 if there are more 1's than -1's among the input bits and to -1 otherwise, and dictator, where a single bit decides the outcome.

Noise sensitive functions include the parity of the bits, that is, the product of all input bits, and the iterated 3-majority: for $n = 3^k$ bits we group the bits into 3's and then evaluate the majority for each group. We repeat this process until there is a single bit left.
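The four examples can be written down directly, and for a small number of bits the covariance between the noise-free and the noisy output can be computed exactly by enumeration. This is only a sketch under my own naming; `noise_covariance` is a hypothetical helper, it assumes bits in $\{-1, 1\}$, and it enumerates all pairs of inputs, so it only works for small $n$.

```python
from itertools import product

def majority(x):
    return 1 if sum(x) > 0 else -1

def dictator(x):
    return x[0]

def parity(x):
    result = 1
    for bit in x:
        result *= bit
    return result

def iterated_3_majority(x):
    # Assumes len(x) is a power of 3.
    level = list(x)
    while len(level) > 1:
        level = [majority(level[i:i + 3]) for i in range(0, len(level), 3)]
    return level[0]

def noise_covariance(u, n, eps):
    """Exact covariance of u(x) and u(x with noise) for uniform x (hypothetical helper)."""
    flip = eps / 2  # a resampled bit changes value with probability 1/2
    points = list(product((-1, 1), repeat=n))
    values = [u(x) for x in points]
    # weight[d] = probability that the noisy copy differs from x in a given set of d bits
    weight = [flip ** d * (1 - flip) ** (n - d) for d in range(n + 1)]
    mean = sum(values) / len(points)
    corr = 0.0
    for x, ux in zip(points, values):
        for y, uy in zip(points, values):
            d = sum(a != b for a, b in zip(x, y))
            corr += ux * uy * weight[d]
    return corr / len(points) - mean * mean

for name, u in [("majority", majority), ("dictator", dictator),
                ("parity", parity), ("iterated 3-majority", iterated_3_majority)]:
    print(name, round(noise_covariance(u, 9, 0.2), 4))
```

For parity the covariance is exactly $(1-\epsilon)^n$, which vanishes as $n$ grows, while for the dictator it stays at $1-\epsilon$ regardless of $n$.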

Noisy utility maximization

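To get a shift in perspective we can use a common tool which is ubiquitous throughout mathematics: the Fourier transform. Intuitively it shifts our view from the current domain to a frequency domain. For discrete systems this is basically a change of basis to a well-chosen orthonormal basis. So let us choose a particularly useful basis:

$$\chi_S(x) = \prod_{i \in S} x_i, \qquad S \subseteq \{1, \dots, n\}.$$

Note that this can also be seen as a polynomial representation. For every $S \neq T$ we have $\mathbb{E}[\chi_S(x) \chi_T(x)] = 0$ and $\mathbb{E}[\chi_S(x)^2] = 1$, where $x$ is uniformly distributed. For a given $S$ this can be thought of as the $S$-parity. This allows us to deconstruct a given $u$ as follows

$$\hat{u}(S) = \mathbb{E}\left[u(x) \chi_S(x)\right].$$

We think of larger sets $S$ as corresponding to higher frequencies. Note that when $S = \emptyset$, we simply get the expected value. We can then reconstruct $u$ as follows

$$u(x) = \sum_{S \subseteq \{1, \dots, n\}} \hat{u}(S) \chi_S(x).$$

Why is this useful? We can more easily show things about the $\chi_S$'s than about all of $u$ at the same time. In particular, it is easily shown that, for a fixed $x$ and with the expectation taken over the noise,

$$\mathbb{E}\left[\chi_S(x^\epsilon)\right] = (1 - \epsilon)^{|S|} \chi_S(x).$$

In other words the higher frequencies get dampened. This is a stronger statement than it appears on the surface. We can see that this allows us to write $\mathbb{E}[u(x^\epsilon)]$ as follows:

$$\mathbb{E}\left[u(x^\epsilon)\right] = \sum_{S \subseteq \{1, \dots, n\}} (1 - \epsilon)^{|S|} \hat{u}(S) \chi_S(x).$$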
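The damping statement can be checked numerically on a small example. The sketch below computes the coefficients of 3-bit majority in the parity basis and compares the damped expansion against a direct enumeration of the noisy expectation. All function names are my own, and subsets are indexed from 0 rather than 1 for convenience.

```python
from itertools import combinations, product
from math import prod

def chi(S, x):
    """Parity of the bits of x indexed by S (equals 1 for the empty set)."""
    return prod(x[i] for i in S)

def fourier_coefficients(u, n):
    """Exact coefficients E[u(x) chi_S(x)] under uniform x, for every subset S."""
    points = list(product((-1, 1), repeat=n))
    subsets = [S for k in range(n + 1) for S in combinations(range(n), k)]
    return {S: sum(u(x) * chi(S, x) for x in points) / len(points) for S in subsets}

def smoothed_via_fourier(coeffs, x, eps):
    """Damped expansion: each coefficient is scaled by (1 - eps)^|S|."""
    return sum((1 - eps) ** len(S) * c * chi(S, x) for S, c in coeffs.items())

def smoothed_direct(u, x, eps):
    """Expected utility of the noisy x, by enumerating every noisy outcome y."""
    flip = eps / 2
    n = len(x)
    total = 0.0
    for y in product((-1, 1), repeat=n):
        d = sum(a != b for a, b in zip(x, y))
        total += u(y) * flip ** d * (1 - flip) ** (n - d)
    return total

def majority(x):
    return 1 if sum(x) > 0 else -1

coeffs = fourier_coefficients(majority, 3)
x = (1, -1, 1)
print(smoothed_via_fourier(coeffs, x, 0.3), smoothed_direct(majority, x, 0.3))
```

For 3-bit majority the only nonzero coefficients are $1/2$ on each single bit and $-1/2$ on the full set, so the two printed numbers should agree up to floating point error.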

Now consider the following theorems.

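We define $E_u(k) = \sum_{|S| = k} \hat{u}(S)^2$ to be the energy at frequency $k$.

A sequence of boolean functions $(u_n)$ is noise sensitive if and only if, for every fixed $k \geq 1$, we find:

$$\sum_{j = 1}^{k} E_{u_n}(j) \to 0$$

as $n \to \infty$.

Similarly we find:

A sequence $(u_n)$ is noise stable if and only if for every $\delta > 0$ there exists a $k$ such that for all $n$

$$\sum_{j \geq k} E_{u_n}(j) < \delta.$$

The dampening effect from above shows that the energy of the smoothed function $x \mapsto \mathbb{E}[u(x^\epsilon)]$ at frequency $k$ is

$$(1 - \epsilon)^{2k} E_u(k).$$

This means that the smoothed function is automatically noise stable (if the energies at higher frequencies grow sub-exponentially).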

Furthermore, we can take this as the definition of noise sensitivity / stability for general boolean functions.

These theorems also tell us that we can perform what looks like a lossy compression of $\mathbb{E}[u(x^\epsilon)]$. We simply cut off all high-frequency terms, as we might do in JPEG compression. This should still be a good approximation of $\mathbb{E}[u(x^\epsilon)]$, as these terms get arbitrarily small in the face of noise.
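The cut-off idea can be sketched for a small example by computing the energy at each frequency and then dropping all terms above a chosen degree. By orthonormality of the parity basis, the mean squared error of this low-pass approximation of the smoothed function equals the damped energy that was dropped. The helper names below are my own assumptions, and the exact enumeration is exponential in the number of bits.

```python
from itertools import combinations, product
from math import prod

def chi(S, x):
    return prod(x[i] for i in S)

def fourier_coefficients(u, n):
    points = list(product((-1, 1), repeat=n))
    subsets = [S for k in range(n + 1) for S in combinations(range(n), k)]
    return {S: sum(u(x) * chi(S, x) for x in points) / len(points) for S in subsets}

def energy_spectrum(coeffs, n):
    """Energy at frequency k: sum of squared coefficients over subsets of size k."""
    energies = [0.0] * (n + 1)
    for S, c in coeffs.items():
        energies[len(S)] += c * c
    return energies

def smoothed_low_pass(coeffs, x, eps, max_degree):
    """Damped expansion keeping only subsets of size <= max_degree."""
    return sum((1 - eps) ** len(S) * c * chi(S, x)
               for S, c in coeffs.items() if len(S) <= max_degree)

def dropped_energy(coeffs, eps, max_degree):
    """Damped energy above max_degree; equals the mean squared truncation error."""
    return sum((1 - eps) ** (2 * len(S)) * c * c
               for S, c in coeffs.items() if len(S) > max_degree)

def majority(x):
    return 1 if sum(x) > 0 else -1

n, eps = 5, 0.3
coeffs = fourier_coefficients(majority, n)
print("energies:", [round(e, 4) for e in energy_spectrum(coeffs, n)])
for k in range(n + 1):
    print("keep degree <=", k, "mean squared error:", round(dropped_energy(coeffs, eps, k), 6))
```

The error printed for each cut-off shrinks as more frequencies are kept, and shrinks faster for larger `eps`, which is the sense in which noise makes the compression cheap.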

The biggest issue with this approach in practice is that it is computationally very costly. Iterating through all subsets up to some size $k$ is of order $O(n^k)$.

Notes & Outlook

Gaussian extension

The first way to expand these ideas is to generalize to functions with more general domains. If you interpret a coin flip as a 0d Gaussian random variable (which might or might not be reasonable), a natural extension might be Gaussian processes. In this paper Gaussians are used to approximate binary random variables. They are also especially nice as there is an inherent connection to both the Fourier transform and uncertainty. Furthermore, as in the telephone theorem we have Driscoll's 0-1 law for Gaussian processes, saying that globally certain statistics always hold or never hold.

Subagents

In John's post Why subagents? he argues that sub-agents generally describe many systems better than single agents would. In particular things like the stock market or even individual humans have no utility function. We can extend this description with boolean functions. A boolean function can be interpreted as a voting system between two or more outcomes / actions. Each bit is interpreted as a vote from a single sub-agent. By Arrow's impossibility theorem, however, we know that if the voting system is rational (for some reasonable definition) then our boolean function must be the dictator function, i.e. decided by a single bit / voter. This seems like some mild evidence against certain kinds of subagents.

You might argue that humans and stock markets are just mostly rational and so Arrow's theorem doesn't apply. However, his theorem can be strengthened to basically say that if we are irrational about $\epsilon$ of the time, then our voting function has to be $O(\epsilon)$-close to the dictator function, in some precise sense. So if we believe humans are mostly rational, then we also have to accept that we are mostly agents.

This does not rule out things like Kelly-betting or liquid democracy to gain consensus, as they are not captured by the boolean framework.

Qubit extension

We could use qubits as inputs to our functions, instead of traditional bits. To massively oversimplify, the extension to the quantum regime allows bits some limited communication. It is shown that all sorts of problems have more satisfying solutions in the quantum realm. For example, when playing the prisoner's dilemma with entangled qubits the optimal strategy leads to cooperation instead of defection[1]. We can also get around Arrow's impossibility theorem when voting with qubits. In particular this means if subagents can share information (in the way entangled bits can) then the dictatorship is not the only rational voting system. Some limited work has already been done for more general boolean functions, but it does not yet seem satisfactory.

Noise and Flatness

There are two (related) connections that I see. The first is to the Telephone Theorem, which states that in the world only certain kinds of information can travel "far". In some sense the details are lost in the noise and important summary statistics survive arbitrarily long. In other words, optimizing with noise is similar to optimizing at a distance.

The other is the relation between generalized solutions and "basin flatness". In the post Information loss --> Basin Flatness the shape of the loss function is heuristically related to generalization. Low frequency terms dominating the loss function locally (in its Fourier transform) might be a useful proxy for this. Low frequency terms dominating also exactly relates to noise stability.

Both of these ideas show that in some sense the "important" optimization is along a low-dimensional manifold in our parameter space.

Some More References

Boolean Function Survey by Ryan O’Donnell

Noise stability of functions with low influences

Noise stability of boolean functions

Appendix

The code for the following two subsections should hopefully be uploaded to git soon.

Computation of Energies and the noisy regime

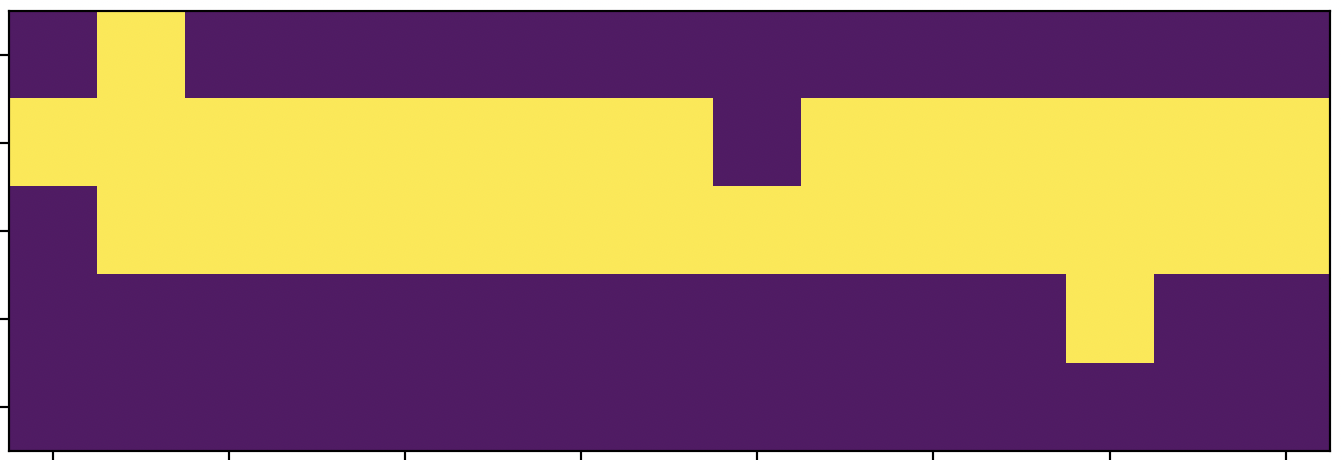

Consider the following toy scenario. We want to build a bridge from left to right. We only get reward if the bridge is completed, but every extra bit we use costs us some utility. Furthermore, we live in a noisy world where bits are randomly flipped. If our utility function is well chosen the optimal bridge might look as follows:

After applying some noise the bridge gets modified as such:

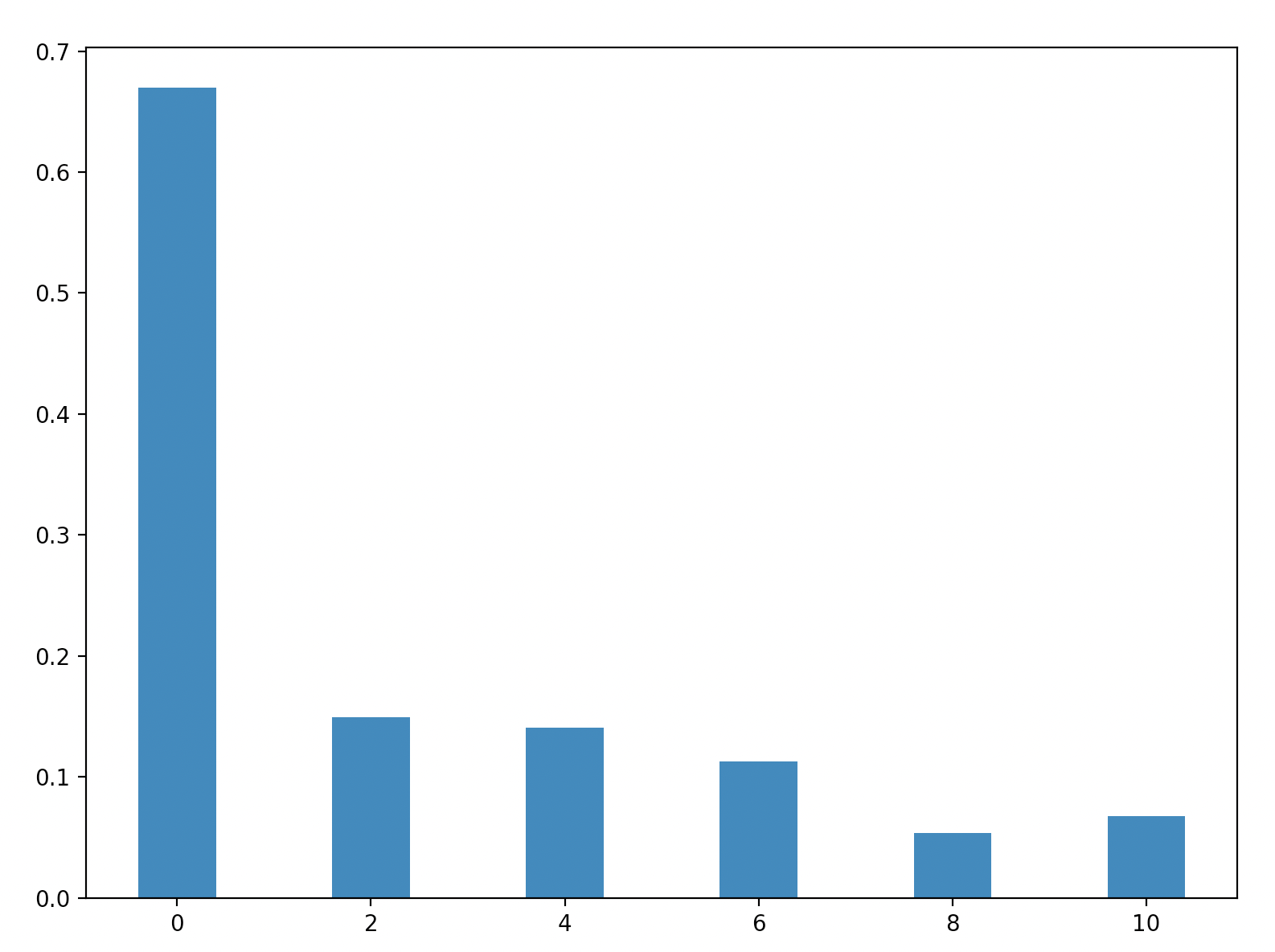

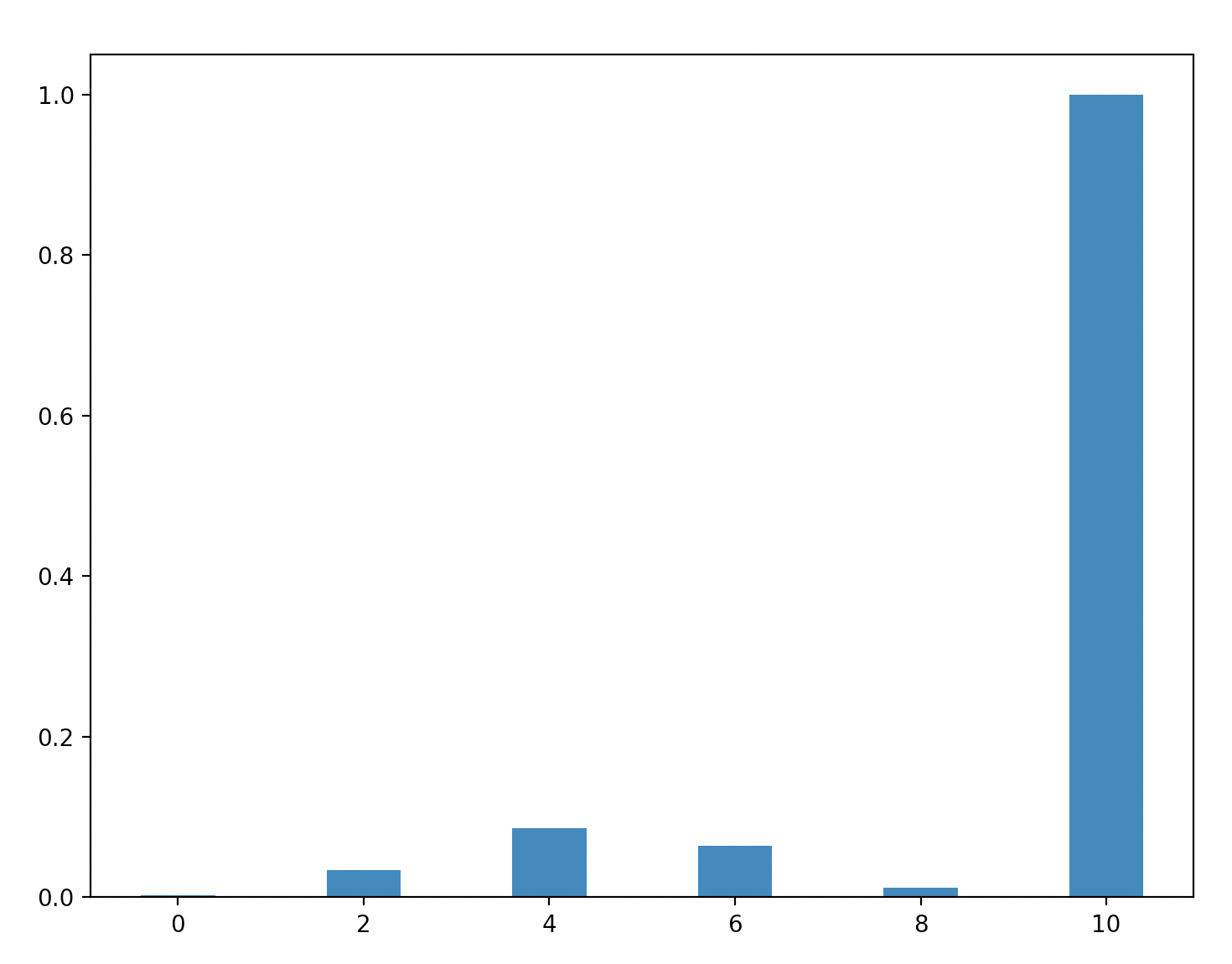

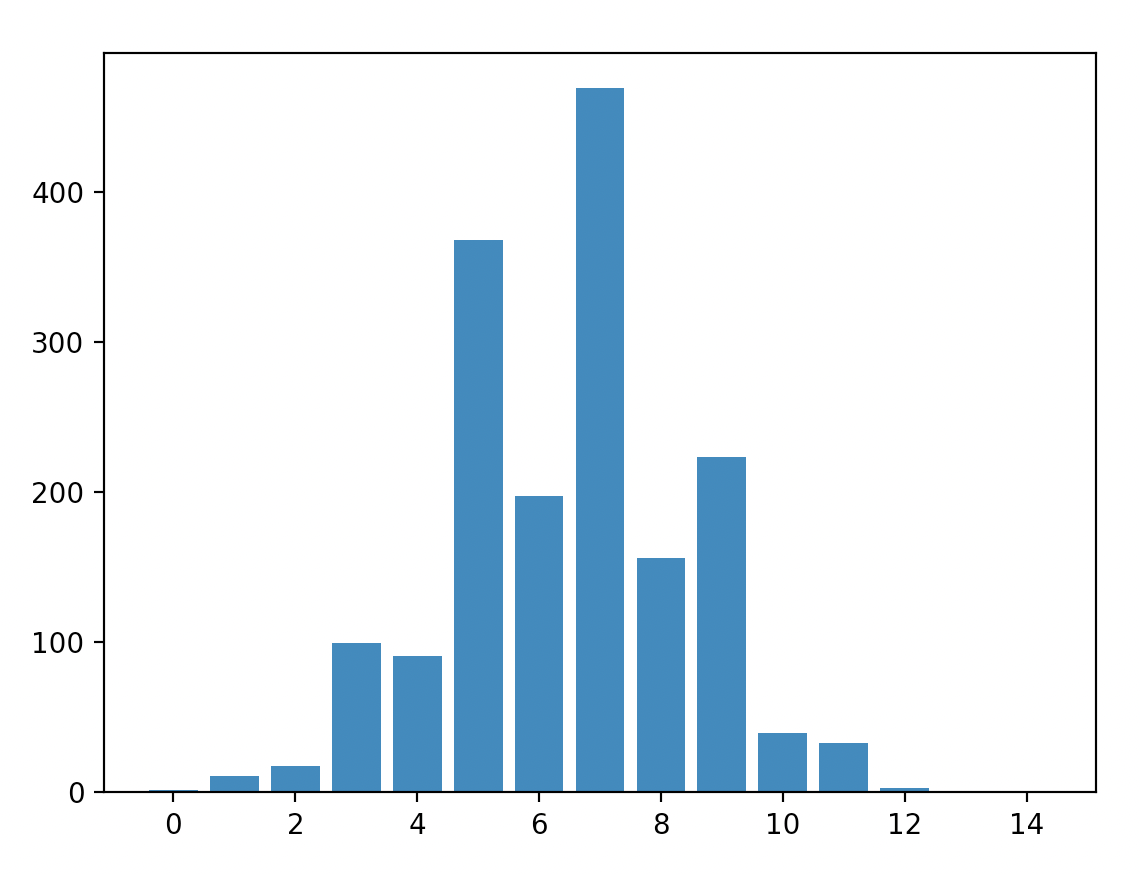

We can use the equations for energy above to directly calculate the different energies. To do this deterministically would not be feasible, as we would have to iterate over all $2^n$ subsets. The first approach I tried was to use Monte Carlo simulations instead. We get the following results:

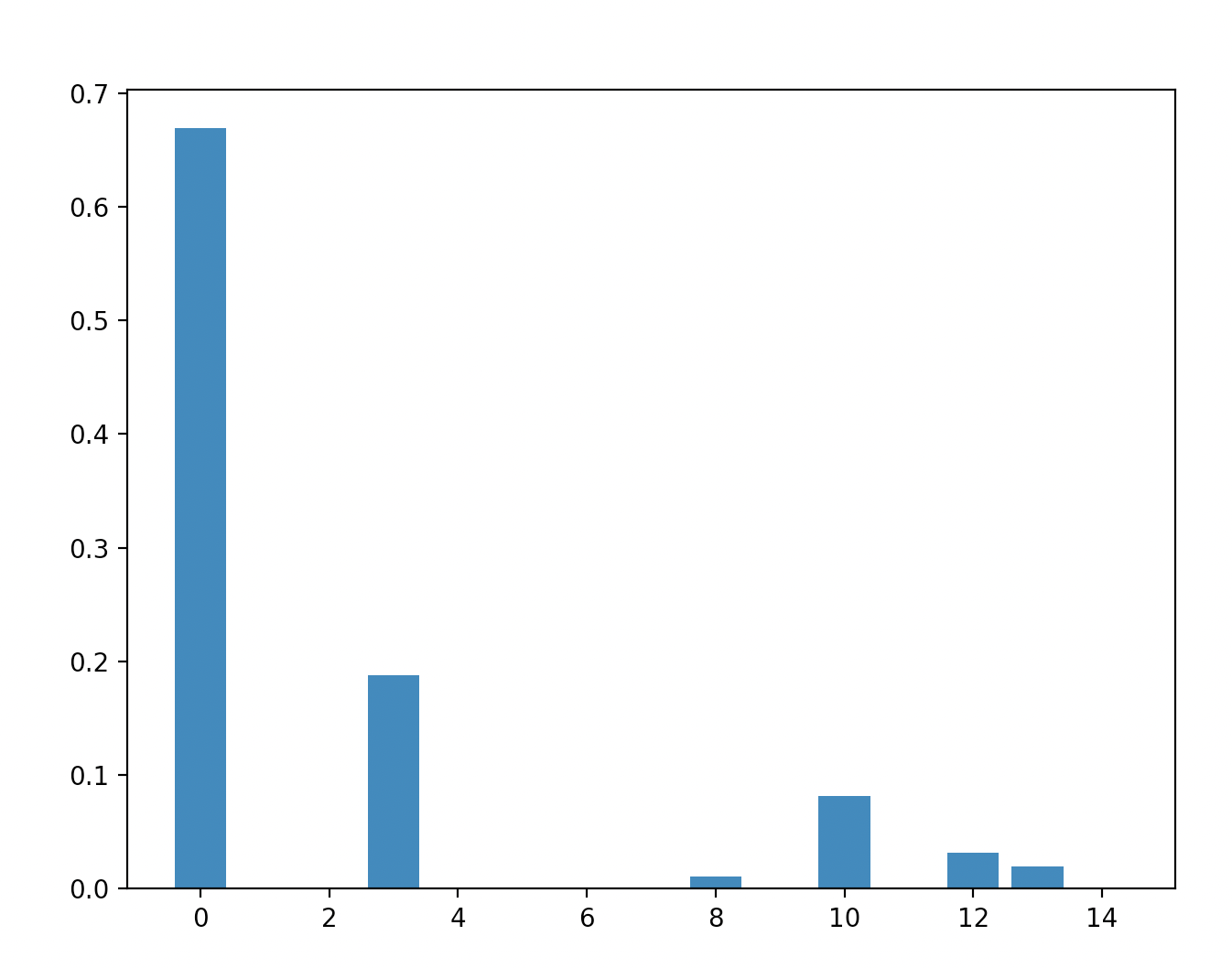

Majority:

Parity:

Bridge 3x5 setup:

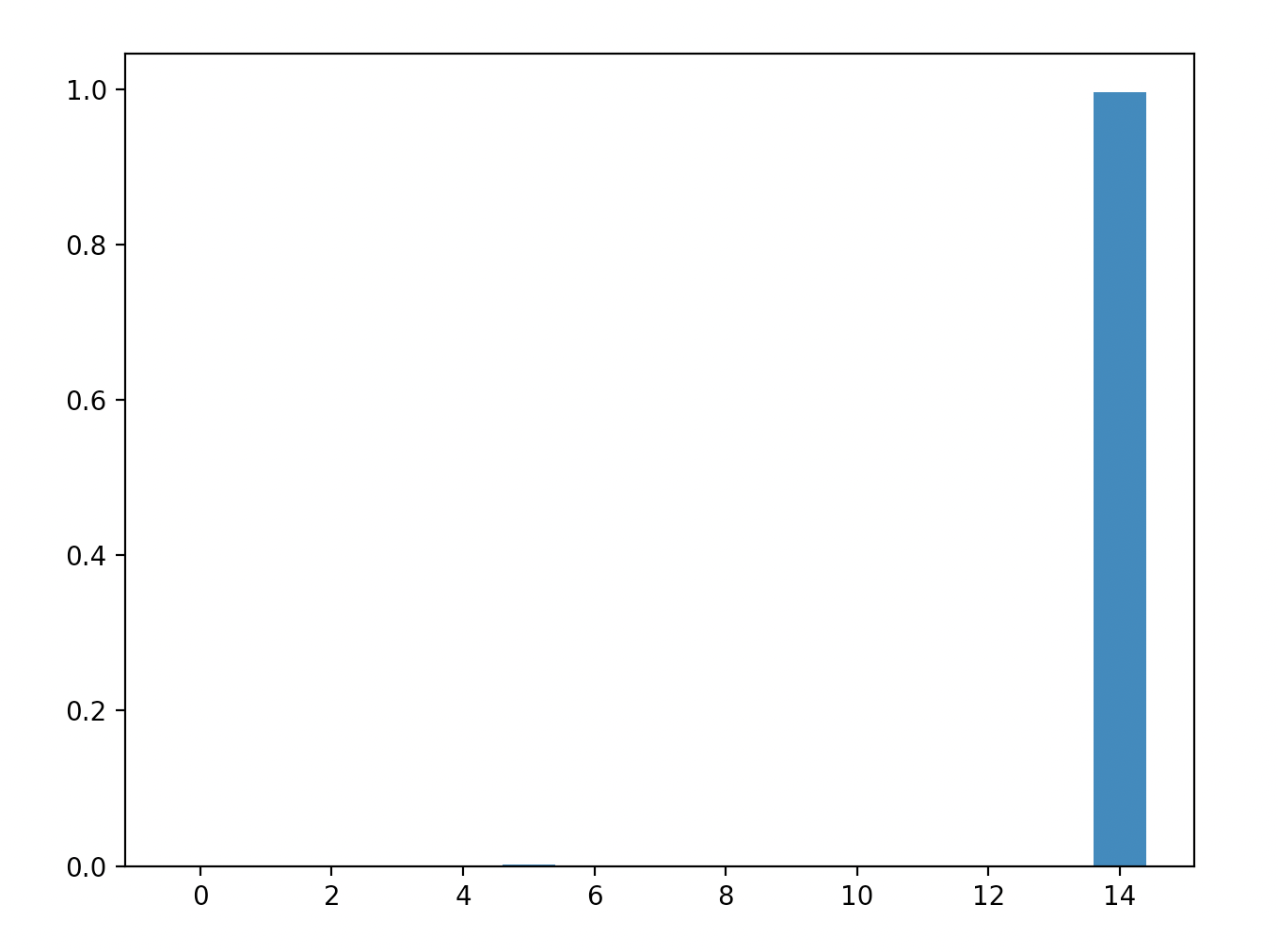

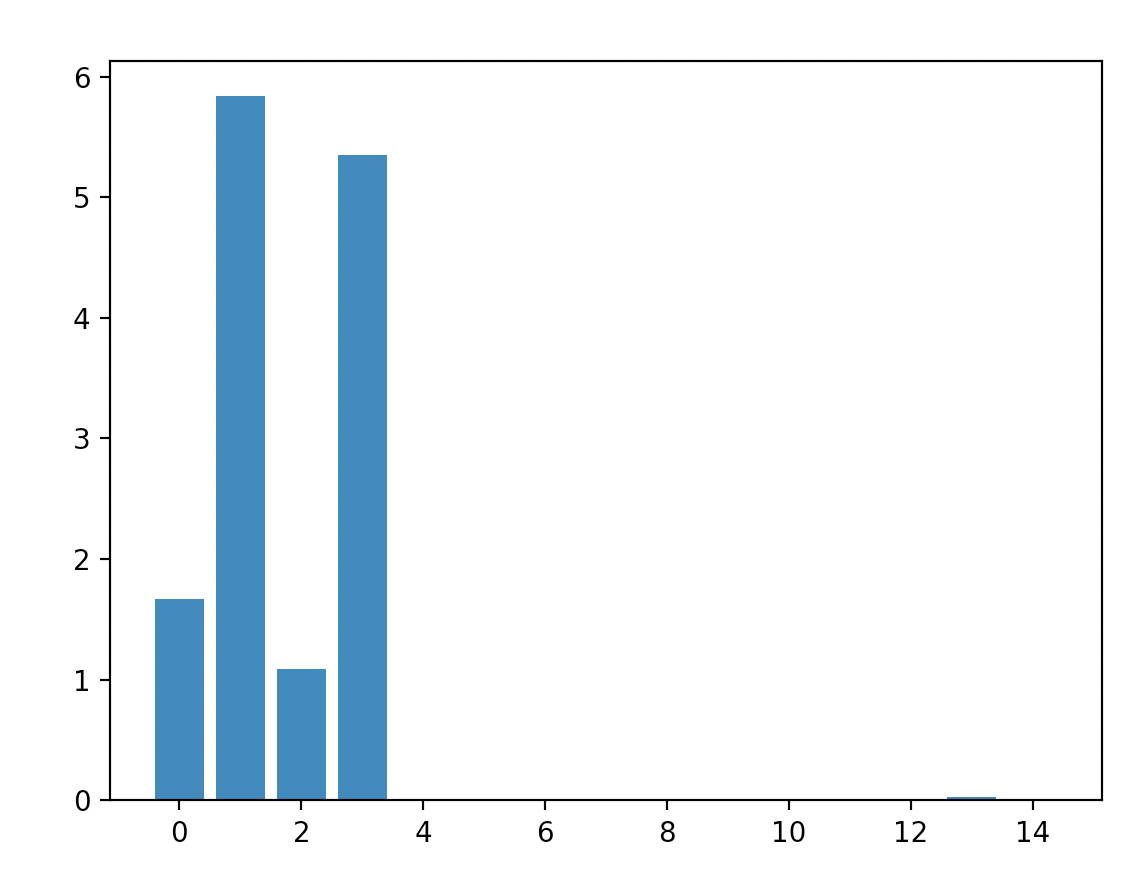

We can tell that in my probabilistic simulation of the energies there is a significant amount of variance. Since we are squaring the terms this leads to bias. This can be seen most easily in the parity: only the final column should have any energy, yet there are positive energies in 2, 4, 6 and 8 in this simulation.

In this current version the energies for the 3x5 bridge are probably just dominated by noise.
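The bias from squaring noisy estimates is easy to reproduce. The sketch below is not the code used for the plots above; it is a minimal stand-in with my own names, which estimates each coefficient as a sample mean and then squares it. For a function with values in $\{-1, 1\}$ and $m$ samples, the expected squared estimate is $\hat{u}(S)^2 + (1 - \hat{u}(S)^2)/m$, so every level with a true energy of zero still collects roughly $\binom{n}{k}/m$ of spurious energy.

```python
import random
from itertools import combinations
from math import prod

def parity(x):
    return prod(x)

def monte_carlo_energies(u, n, num_samples, rng):
    """Estimate the energy at each frequency by squaring sample means of u(x) chi_S(x)."""
    samples = [tuple(rng.choice((-1, 1)) for _ in range(n)) for _ in range(num_samples)]
    values = [u(x) for x in samples]
    energies = []
    for k in range(n + 1):
        level = 0.0
        for S in combinations(range(n), k):
            estimate = sum(v * prod(x[i] for i in S)
                           for v, x in zip(values, samples)) / num_samples
            level += estimate ** 2  # squaring turns the estimator's variance into upward bias
        energies.append(level)
    return energies

rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
n = 8
energies = monte_carlo_energies(parity, n, 201, rng)
for k, e in enumerate(energies):
    print(k, round(e, 4))
```

For parity only the last level has true energy, and the estimator recovers it exactly because the product of parity with the full-set character is always 1. Every other level comes out strictly positive purely from sampling noise.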

Efficient computation

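There is a better method to calculate the energies of a function using an inverse problem. By applying some of the equations discussed above we find that

$$\mathrm{Cov}\left(u(x), u(x^\epsilon)\right) = \sum_{k = 1}^{n} (1 - \epsilon)^k E_u(k).$$

We can of course estimate these covariances directly given the utility function $u$. If we do this for different values of $\epsilon$ we get a linear system where we can solve for the energies $E_u(k)$. In particular we get the linear system

$$A e = c$$

where $A$ is a design matrix with entries $A_{ik} = (1 - \epsilon_i)^k$ and $c$ is given by the corresponding covariances $c_i = \mathrm{Cov}\left(u(x), u(x^{\epsilon_i})\right)$. We solve for $e = (E_u(1), \dots, E_u(n))$.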
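A tiny exact instance of this inverse problem can be sketched as follows. To sidestep the numerical sensitivity entirely, the example uses 3-bit majority, exact covariances instead of Monte Carlo estimates, and exact rational arithmetic. The noise levels, the helper names and the hand-written Gauss-Jordan solver are all my own assumptions for illustration; they are not the code behind the plots.

```python
from fractions import Fraction
from itertools import product

def majority(x):
    return 1 if sum(x) > 0 else -1

def noise_covariance(u, n, eps):
    """Exact covariance of u(x) and its noisy copy for uniform x; eps is a Fraction."""
    flip = eps / 2
    points = list(product((-1, 1), repeat=n))
    mean = Fraction(sum(u(x) for x in points), len(points))
    corr = Fraction(0)
    for x in points:
        for y in points:
            d = sum(a != b for a, b in zip(x, y))
            corr += u(x) * u(y) * flip ** d * (1 - flip) ** (n - d)
    return corr / len(points) - mean * mean

def solve_linear_system(A, c):
    """Gauss-Jordan elimination on exact Fractions; assumes A is square and invertible."""
    size = len(A)
    rows = [list(row) + [rhs] for row, rhs in zip(A, c)]
    for col in range(size):
        pivot = next(r for r in range(col, size) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        rows[col] = [entry / rows[col][col] for entry in rows[col]]
        for r in range(size):
            if r != col:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]

n = 3
noise_levels = [Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]  # arbitrary distinct choices
A = [[(1 - eps) ** k for k in range(1, n + 1)] for eps in noise_levels]
c = [noise_covariance(majority, n, eps) for eps in noise_levels]
energies = solve_linear_system(A, c)
print(energies)
```

With exact inputs the solve recovers the energies $3/4$, $0$ and $1/4$ of 3-bit majority at frequencies 1, 2 and 3. With estimated covariances and floating point arithmetic one would more likely use a least-squares solver over more noise levels than unknowns.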

This setup mostly buys us speed. By the nature of inverse problems, however, the solution is very sensitive to errors in the design matrix $A$ and the covariances $c$. Thus the covariances have to be estimated with a lot of accuracy. We get the following.

Majority with 15 bits:

Parity with 15 bits:

Bridge with 3x5 setup:

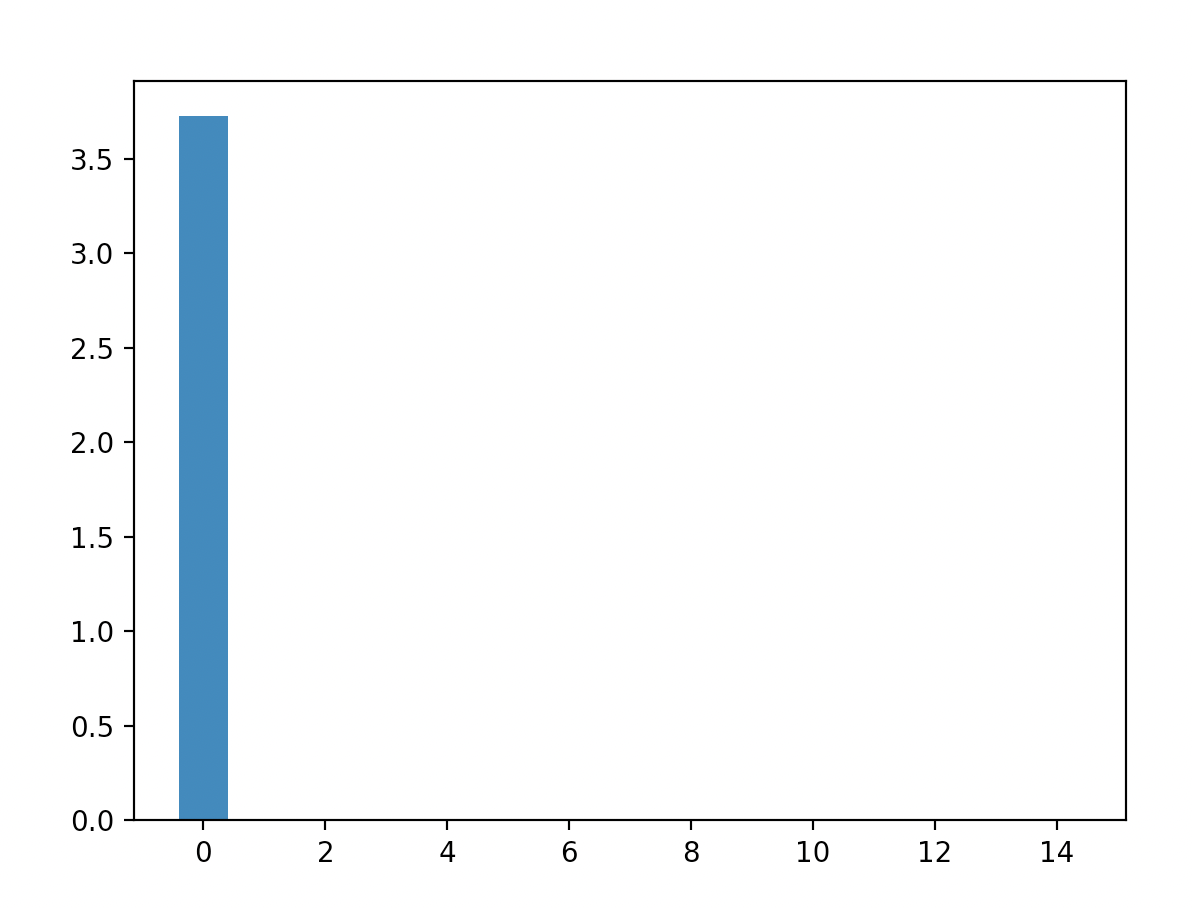

If we call the bridge utility function $u$, then we can run the energy code again with the smoothed utility function $x \mapsto \mathbb{E}[u(x^\epsilon)]$. We get the following:

As we can see the energies are concentrated at the lowest energy level (though this is definitely a crude estimation).

[1] More precisely, it unlocks new strategies that preempt the opponent's move.
