Making decisions under moral uncertainty

post by MichaelA · 2019-12-30T01:49:48.634Z · LW · GW · 26 comments

Cross-posted to the EA Forum. Updated substantially since initial publication.

Overview/purpose of this sequence

While working on an (upcoming) post about a new way to think about moral uncertainty, I unexpectedly discovered that, as best I could tell:

- There was no single post on LessWrong or the EA Forum that gave a very explicit overview (e.g., with concrete examples) of what seem to be the most prominent approaches to making decisions under moral uncertainty (more specifically, those covered in Will MacAskill’s 2014 thesis).[1][2]

- There was no (easily findable and explicit) write-up of how to handle simultaneous moral and empirical uncertainty. (What I'll propose is arguably quite obvious, but still seems worth writing up explicitly.)

- There was no (easily findable and sufficiently thorough) write-up of applying sensitivity analysis and value of information analysis to situations of moral uncertainty.

I therefore decided to write a series of three posts, each of which addresses one of those apparent “gaps”. My primary aim is to synthesise and make accessible various ideas that are currently mostly buried in the philosophical literature, but I also think it’s plausible that some of the ideas in some of the posts (though not this first one) haven’t been explicitly explored before.

I expect that these posts are most easily understood if read in order, but each post should also have value if read in isolation, especially for readers who are already familiar with key ideas from work on moral uncertainty.

Epistemic status (for the whole sequence)

I've now spent several days reading about moral uncertainty, but I wouldn't consider myself an actual expert in this topic or in philosophy more broadly. Thus, while I don't expect this sequence to contain any major, central mistakes, I wouldn’t be surprised if it's inaccurate or unclear/misleading in some places.

I welcome feedback of all kinds (on these posts and in general!).

Moral uncertainty

We are often forced to make decisions under conditions of uncertainty. This uncertainty can be empirical (e.g., what is the likelihood that nuclear war would cause human extinction?) or moral (e.g., does the wellbeing of future generations matter morally?).[3][4] The issue of making decisions under empirical uncertainty has been well-studied, and expected utility theory has emerged as the typical account of how a rational agent should proceed in these situations. The issue of making decisions under moral uncertainty appears to have received less attention (though see this list of relevant papers), despite also being of clear importance.

I'll later publish a post on definitions, types, and sources of moral uncertainty. In the present post, I'll instead aim to convey a sense of what moral uncertainty is through various examples. One example (which I'll return to repeatedly) is the following:

Devon's decision

Suppose Devon assigns a 25% probability to T1, a version of hedonistic utilitarianism in which human “hedons” (a hypothetical unit of pleasure) are worth 10 times more than fish hedons. He also assigns a 75% probability to T2, a different version of hedonistic utilitarianism, which values human hedons just as much as T1 does, but doesn’t value fish hedons at all (i.e., it sees fish experiences as having no moral significance). Suppose also that Devon is choosing whether to buy a fish curry or a tofu curry, and that he’d enjoy the fish curry about twice as much. (Finally, let’s go out on a limb and assume Devon’s humanity.)

According to T1, the choice-worthiness (roughly speaking, the rightness or wrongness of an action) of buying the fish curry is -90 (because it’s assumed to cause 1,000 negative fish hedons, which T1 values at -100 in human-hedon terms, but also 10 human hedons due to Devon’s enjoyment).[5] In contrast, according to T2, the choice-worthiness of buying the fish curry is 10 (because this theory values Devon’s joy as much as T1 does, but doesn’t care about the fish’s experiences). Meanwhile, the choice-worthiness of the tofu curry is 5 according to both theories (because it causes no harm to fish, and Devon would enjoy it half as much as he’d enjoy the fish curry).

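The choice-worthiness of each option according to each theory is summarised in the following table:

| | T1 (25% credence) | T2 (75% credence) |
|---|---|---|
| Buy fish curry | -90 | 10 |
| Buy tofu curry | 5 | 5 |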

Given this information, what should Devon do?

"My Favourite Theory"

Multiple approaches to handling moral uncertainty have been proposed. The simplest option is the "My Favourite Theory" (MFT) approach, in which we essentially ignore our moral uncertainty and just do whatever seems best according to the theory in which we have the highest "credence" (belief). In the above situation, MFT would suggest Devon should buy the fish curry, even though doing so is only somewhat better according to T2 (10 - 5 = 5), and is far worse (5 - -90 = 95) according to another theory in which he has substantial (25%) credence. Indeed, even if Devon had 49% credence in T1 (vs 51% in T2), and the difference in the choice-worthiness of the options was a thousand times as large according to T1 as according to T2, MFT would still ignore the fact that the situation is so much "higher stakes" for T1 than for T2, refuse to engage in any "moral hedging", and advise Devon to proceed with whatever T2 advised.
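To make this concrete, here is a minimal Python sketch of MFT under some illustrative assumptions: credences and choice-worthiness scores are stored in plain dictionaries, and the function name `my_favourite_theory` is hypothetical (not from MacAskill's thesis or any library). It uses Devon's numbers, plus a variant matching the 49%/51%, thousand-fold-stakes case just described.

```python
# Illustrative sketch of "My Favourite Theory" (MFT); the data layout and
# function name are assumptions, not taken from MacAskill's thesis.

credences = {"T1": 0.25, "T2": 0.75}
choice_worthiness = {
    "T1": {"fish curry": -90, "tofu curry": 5},
    "T2": {"fish curry": 10, "tofu curry": 5},
}


def my_favourite_theory(credences, choice_worthiness):
    """Return the option ranked best by the single most-believed theory."""
    favourite = max(credences, key=credences.get)
    scores = choice_worthiness[favourite]
    # Every other theory is ignored, however much is at stake for it.
    return max(scores, key=scores.get)


print(my_favourite_theory(credences, choice_worthiness))  # fish curry

# 49% vs 51% credence, with T1's gap between the options (5,000)
# a thousand times T2's gap (5): MFT's answer is unchanged.
close_credences = {"T1": 0.49, "T2": 0.51}
high_stakes = {
    "T1": {"fish curry": -5000, "tofu curry": 0},
    "T2": {"fish curry": 10, "tofu curry": 5},
}
print(my_favourite_theory(close_credences, high_stakes))  # fish curry
```

The function never reads the less-believed theory's scores at all, which is exactly the feature that prevents MFT from hedging.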

On top of generating such counterintuitive results, MFT is subject to other quite damning objections (see pages 20-25 of Will MacAskill’s 2014 thesis). Thus, the remainder of this post will focus on other approaches to moral uncertainty, which do allow for "moral hedging".

Types of moral theories

Which approach to moral uncertainty should be used depends in part on what types of moral theories are under consideration by the decision-maker - in particular, whether the theories are cardinally measurable or only ordinally measurable, and, if cardinally measurable, whether or not they’re inter-theoretically comparable.[6]

Cardinality

Essentially, a theory is cardinally measurable if it can tell you not just which outcome is better than which, but also by how much. E.g., it can tell you not just that “X is better than Y which is better than Z”, but also that “X is 10 ‘units’ better than Y, which is 5 ‘units’ better than Z”. (Some readers may be more familiar with distinctions between ordinal, interval, and ratio scales; I'm almost certain "cardinal" scales include both interval and ratio scales.)

My understanding is that popular consequentialist theories are typically cardinal, while popular non-consequentialist theories are typically (or at least more often) ordinal. For example, a Kantian theory may simply tell you that lying is worse than not lying, but not by how much, so you cannot directly weigh that “bad” against the goodness/badness of other actions/outcomes (whereas such comparisons are relatively easy under most forms of utilitarianism).

Intertheoretic comparability

Even if a set of theories are cardinal, they still may not be inter-theoretically comparable. Roughly speaking, two theories are comparable if there's a consistent, non-arbitrary “exchange rate” between the theories' “units of choice-worthiness" (and they're non-comparable if there isn't). MacAskill explains the “problem of intertheoretic comparisons” as follows:

“even when all theories under consideration give sense to the idea of magnitudes of choice-worthiness, we need to be able to compare these magnitudes of choice-worthiness across different theories. But it seems that we can’t always do this. [... Sometimes we don’t know] how can we compare the seriousness of the wrongs, according to these different theories[.] For which theory is there more at stake?”

In his own thesis, Christian Tarsney provides useful examples:

"Consider, for instance, hedonistic and preference utilitarianism, two straightforward maximizing consequentialist theories that agree on every feature of morality, except that hedonistic utilitarianism regards pleasure and pain as the sole non-derivative bearers of moral value while preference utilitarianism regards satisfied and dissatisfied preferences as the sole non-derivative bearers of moral value. Both theories, we may stipulate, have the same cardinal structure. But this structure does not answer the crucial question for expectational reasoning, how the value of a hedon according to hedonic utilitarianism compares to the value of a preference utile according to preference utilitarianism—that is, for an agent who divides her beliefs equally between the two theories and wishes to hedge when they conflict, how much hedonic experience does it take to offset the dissatisfaction of a preference of a given strength (or vice versa)?

Likewise, of course, in trolley problem situations that pit consequentialist and deontological theories against one another, even if we could overcome the apparent structural incompatibility of these rival theories, the thorniest question seems to be: How many net lives must be saved, according to some particular version of consequentialism, to offset the wrongness of killing an innocent person, according to some particular version of deontology?" (line break added)[7]
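One way to see why the missing "exchange rate" matters is to take Devon's example and pretend T1 and T2 are not comparable. The minimal Python sketch below (an illustration, not a method from MacAskill or Tarsney) converts T1's units into T2's units at a couple of hypothetical exchange rates and then takes the credence-weighted expectation that this post later calls MEC. The function `best_option` and the rates tried are assumptions chosen for illustration.

```python
# Illustrative sketch: how an arbitrary exchange rate between T1 and T2
# units can flip a credence-weighted decision. All names are hypothetical.

credences = {"T1": 0.25, "T2": 0.75}
choice_worthiness = {
    "T1": {"fish curry": -90, "tofu curry": 5},
    "T2": {"fish curry": 10, "tofu curry": 5},
}


def best_option(exchange_rate):
    """Pick the option with the highest credence-weighted score after
    converting T1's scores into T2 units at `exchange_rate` (T2 units per
    T1 unit). Non-comparable theories give no principled value for it."""
    expected = {
        option: credences["T1"] * exchange_rate * choice_worthiness["T1"][option]
        + credences["T2"] * choice_worthiness["T2"][option]
        for option in choice_worthiness["T2"]
    }
    return max(expected, key=expected.get)


for rate in (1.0, 0.1):
    print(rate, best_option(rate))
# 1.0 tofu curry
# 0.1 fish curry
```

With these credences, the fish curry minus the tofu curry comes to 0.25 * rate * -95 + 0.75 * 5, so the fish curry wins whenever the rate is below 3.75 / 23.75 (roughly 0.16). If the theories really are non-comparable, nothing settles which side of that threshold we are on.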

Three approaches

In MacAskill's thesis, the approaches to moral uncertainty he argues for are:

- Maximising Expected Choice-worthiness (MEC), if all theories under consideration by the decision-maker are cardinal and intertheoretically comparable. (This is arguably the “best” situation to be in, as it is the case in which the most information is being provided by the theories.)

- Variance Voting (VV), a form of what I’ll call “Normalised MEC”, if all theories under consideration are cardinal but not intertheoretically comparable.

- The Borda Rule (BR), if all theories under consideration are ordinal. (This is the situation in which the least information is being provided by the theories.)

- A “Hybrid” procedure, if the theories under consideration differ in whether they’re cardinal or ordinal and/or in whether they’re intertheoretically comparable. (Hybrid procedures will not be discussed in this post; interested readers can refer to pages 117-122 of MacAskill’s thesis.)

I will focus on these approaches (excluding Hybrid procedures), both because these approaches seem to me to be relatively prominent, effective, and intuitive, and because I know less about other approaches. (Potentially promising alternatives include a bargaining-theoretic approach [related presentation slides here], the similar but older and less fleshed-out parliamentary model, and the approaches discussed in Tarsney's thesis.)

Maximising Expected Choice-worthiness (MEC)

MEC is essentially an extension of expected utility theory. MacAskill describes MEC as follows:

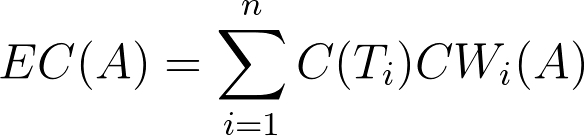

“when all [normative/moral] theories [under consideration by the decision-maker] are cardinally measurable and intertheoretically comparable, the appropriateness of an option is given by its expected choice-worthiness, where the expected choice-worthiness (EC) of an option is as follows:

The appropriate options are those with the highest expected choice-worthiness.”

In this formula, C(Ti) represents the decision-maker’s credence (belief) in Ti (some particular moral theory), while CWi(A) represents the “choice-worthiness” (CW) of A (an “option” or action that the decision-maker can take), according to Ti.

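To illustrate how MEC works, we will return to the example of Devon deciding whether to buy a fish curry or tofu curry. Recall that buying the fish curry has a choice-worthiness of -90 according to T1 and 10 according to T2, that buying the tofu curry has a choice-worthiness of 5 according to both, and that Devon’s credences in T1 and T2 are 25% and 75% respectively.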

(I’ve also modelled this example in Guesstimate. In that link, for comparison purposes, this model is followed by a model of the same basic example using traditional expected utility reasoning, and another using MEC-E (an approach I'll explain in my next post).)

Using MEC in this situation, the expected choice-worthiness of buying the fish curry is 0.25 * -90 + 0.75 * 10 = -15, and the expected choice-worthiness of buying the tofu curry is 0.25 * 5 + 0.75 * 5 = 5. Thus, Devon should buy the tofu curry.
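The same calculation can be written as a short Python sketch of the EC formula above. The function names and dictionary layout are illustrative assumptions rather than anything from MacAskill's thesis; tied options are returned together, since the quoted definition says the appropriate options are those with the highest EC.

```python
# Illustrative sketch of Maximising Expected Choice-worthiness (MEC);
# names and data layout are assumptions.


def expected_choice_worthiness(credences, choice_worthiness, option):
    """EC(A) = sum over theories i of C(T_i) * CW_i(A)."""
    return sum(
        credence * choice_worthiness[theory][option]
        for theory, credence in credences.items()
    )


def mec(credences, choice_worthiness):
    """Return every option's EC, plus the option(s) with the highest EC."""
    # Assumes every theory scores the same set of options.
    options = next(iter(choice_worthiness.values()))
    scores = {
        option: expected_choice_worthiness(credences, choice_worthiness, option)
        for option in options
    }
    best = max(scores.values())
    return scores, [option for option, score in scores.items() if score == best]


credences = {"T1": 0.25, "T2": 0.75}
choice_worthiness = {
    "T1": {"fish curry": -90, "tofu curry": 5},
    "T2": {"fish curry": 10, "tofu curry": 5},
}
scores, best = mec(credences, choice_worthiness)
print(scores)  # {'fish curry': -15.0, 'tofu curry': 5.0}
print(best)  # ['tofu curry']
```

Because the sum runs over whatever theories appear in `credences`, the same function works unchanged with three or more theories.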

This is despite Devon believing that T2 is more likely than T1, and T2 claiming that buying the fish curry is better than purchasing the tofu curry. The reason is that, as discussed earlier, there is far “more at stake” for T1 than for T2 in this example.

To me, this seems like a good, intuitive result for MEC, and shows how it improves upon the “My Favourite Theory” approach.

There are two final things I should note about MEC:

- MEC can be used in exactly the same way when more than two theories are under consideration. (The only reason most examples in this sequence will be ones in which only two moral theories are under consideration is to keep explanations simple.)

- The basic idea of MEC can also be used as a heuristic, without involving actual numbers.

  - For example, say Clara believes that there’s a “high chance” utilitarianism is correct, but that some deontological theory, in which lying is deeply wrong, is “plausible”. Clara is considering whether to tell a lie, and has good reason to believe this will lead to a slight net increase in wellbeing. She might still decide not to lie, despite believing it’s likely that lying is the “right” thing to do, because it’d only be slightly right, whereas it’s plausible it’s deeply wrong.

Another example of applying MEC (which is probably only worth reading if the approach still seems unclear to you) can be found in the following footnote.[8]

Normalised MEC and Variance Voting

(It's possible I've made mistakes in this section; if you think I have, please let me know.)

But what about cases in which, despite being cardinal, the theories you have credence in are not intertheoretically comparable? (Recall that this essentially means that there's no consistent, non-arbitrary “exchange rate” between the theories' “units of choice-worthiness".)

MacAskill argues that, in such situations, one must first "normalise" the theories in some way (which basically means "adjusting values measured on different scales to a notionally common scale"). MEC can then be applied just as we saw earlier, but now with the new, normalised choice-worthiness scores.

There are multiple ways one could normalise the theories under consideration (e.g., by range), but MacAskill argues for normalising by variance. That is, he argues that we should:

“[treat] the average of the squared differences in choice-worthiness from the mean choice-worthiness as the same across all theories. Intuitively, the variance is a measure of how spread out choice-worthiness is over different options; normalising at variance is the same as normalising at the difference between the mean choice-worthiness and one standard deviation from the mean choice-worthiness.”

MacAskill uses the term Variance Voting to refer to this process of first normalising by variance and then using the MEC approach.

(Unfortunately, as far as I could tell, none of the three theses/papers I read that referred to normalising moral theories by variance actually provided a clear, worked example. I've attempted to construct such a worked example based on an extension of the scenario with Devon deciding what meal to buy; that can be found here, and here is a simpler and I think effectively identical method, suggested in a private message.)
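As a much smaller illustration than the worked example mentioned above (and not a reproduction of it), here is a minimal Python sketch of Variance Voting applied to Devon's original two options. Assumptions: "variance" means the population variance over the options in the option set (the "average of the squared differences" in MacAskill's wording); each theory's scores are also shifted to mean zero, which cannot change which option wins because it moves all of that theory's scores by the same amount; and a theory indifferent between all options gets zero everywhere. The function names are hypothetical.

```python
import statistics

# Illustrative sketch of Variance Voting: normalise each theory to unit
# (population) variance, then take the credence-weighted sum as in MEC.
# Function names are hypothetical.


def variance_normalise(scores):
    """Shift one theory's scores to mean 0 and scale them to variance 1."""
    values = list(scores.values())
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population standard deviation
    if sd == 0:  # the theory is indifferent between all options
        return {option: 0.0 for option in scores}
    return {option: (value - mean) / sd for option, value in scores.items()}


def variance_voting(credences, choice_worthiness):
    normalised = {
        theory: variance_normalise(scores)
        for theory, scores in choice_worthiness.items()
    }
    options = next(iter(normalised.values()))
    return {
        option: sum(credences[t] * normalised[t][option] for t in credences)
        for option in options
    }


credences = {"T1": 0.25, "T2": 0.75}
choice_worthiness = {
    "T1": {"fish curry": -90, "tofu curry": 5},
    "T2": {"fish curry": 10, "tofu curry": 5},
}
print(variance_voting(credences, choice_worthiness))
# {'fish curry': 0.5, 'tofu curry': -0.5}
```

With only two options, every non-indifferent theory's normalised scores come out as +1 and -1, so Variance Voting reduces to a credence-weighted vote. Here it favours the fish curry, the reverse of the MEC verdict computed earlier with the original (assumed comparable) units.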

In arguing for Variance Voting over its alternatives, MacAskill states that the basic principle normalisation aims to capture is the “principle of equal say: the idea, stated imprecisely for now, that we want to give equally likely moral theories equal weight when considering what it’s appropriate to do” (emphasis in original). He further writes:

“To see a specific case of how this could go awry, consider average and total utilitarianism, and assume that they are indeed incomparable. And suppose that, in order to take an expectation over those theories, we choose to treat them as agreeing on the choice-worthiness ordering of options concerning worlds with only one person in them. If we do this, then, for almost all decisions about population ethics, the appropriate action will be in line with what total utilitarianism regards as most choiceworthy because, for almost all decisions, the stakes are huge for total utilitarianism, but not very large for average utilitarianism. So it seems that, if we treat the theories in this way, we are being partisan to total utilitarianism.

In contrast, if we chose to treat the two theories as agreeing on the choice-worthiness differences between options with worlds involving 10^100 people then, for almost all real-world decisions, what it’s appropriate to do will be the same as what average utilitarianism regards as most choice-worthy. This is because we’re representing average utilitarianism as claiming that, for almost all decisions, the stakes are much higher than for total utilitarianism. In which case, it seems that we are being partisan to average utilitarianism, whereas what we want is to have a way of normalising such that each theory gets equal influence.” (line break added)

(Note that it’s not a problem for one theory to have much more influence on decisions due to higher credence in that theory. The principle of equal say is only violated if additional influence is unrelated to additional credence in a theory, and instead has to do with what are basically arbitrary/accidental choices about exchange rates between units of choice-worthiness.)

MacAskill (pages 110-116) provides two arguments that VV is the approach that satisfies the principle of equal say, and Owen Cotton-Barratt similarly argues for the superiority of normalisation by variance over alternative normalisations. (But note that this approach does seem to have its flaws, as discussed in, e.g., pages 222-223 of Tarsney's thesis.)

The Borda Rule (BR)

Finally, what about cases in which all moral theories you have credence in are only ordinal, rather than cardinal (i.e., they say only whether each option is more, equally, or less choice-worthy than each other option, but not by how much)? For such cases, MacAskill recommends a voting method called the Borda Rule (BR; also known as a "Borda count"), with "moral theories play[ing] the part of voters and practical options the part of candidates" (Tarsney). I will first quote MacAskill’s formal explanation of BR (which may be somewhat confusing by itself), before quoting an example he gives and showing what applying BR to that looks like:

“An option A’s Borda Score, for any theory Ti, is equal to the number of options within the option-set that are less choice-worthy than A according to theory Ti’s choice-worthiness function, minus the number of options within the option-set that are more choice-worthy than A according to Ti’s choice-worthiness function.[9]

An option A’s Credence-Weighted Borda Score is the sum, for all theories Ti, of the Borda Score of A according to theory Ti multiplied by the credence that the decision-maker has in theory Ti.

[The Borda Rule states that an] option A is more appropriate than an option B iff [if and only if] A has a higher Credence-Weighted Borda Score than B; A is equally as appropriate as B iff A and B have an equal Credence-Weighted Borda Score.”

I will now show, following MacAskill, how this rule applies to an example he gives in his thesis:

“Julia is a judge who is about to pass a verdict on whether Smith is guilty for murder. She is very confident that Smith is innocent. There is a crowd outside, who are desperate to see Smith convicted. Julia has three options:

[G]: Pass a verdict of ‘guilty’.

[R]: Call for a retrial.

[I]: Pass a verdict of ‘innocent’.

Julia knows that the crowd will riot if Smith is found innocent, causing mayhem on the streets and the deaths of several people. If she calls for a retrial, she knows that he will be found innocent at a later date, and that it is much less likely that the crowd will riot at that later date. If she declares Smith guilty, the crowd will be appeased and go home peacefully. She has credence in three moral theories:

35% credence in a variant of utilitarianism, according to which [G≻R≻I].

34% credence in a variant of common sense, according to which [R≻I≻G].

31% credence in a deontological theory, according to which [I≻R≻G].”

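The options’ Borda Scores according to each theory, and their Credence-Weighted Borda Scores, are therefore as shown in the following table:

| Option | Utilitarianism (35%) | Common sense (34%) | Deontology (31%) | Credence-Weighted Borda Score |
|---|---|---|---|---|
| G | 2 | -2 | -2 | -0.6 |
| R | 0 | 2 | 0 | 0.68 |
| I | -2 | 0 | 2 | -0.08 |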

(For example, G has a score of 2 - 0 = 2 according to utilitarianism because that theory views two options as less choice-worthy than G, and 0 options as more choice-worthy than G.)

The calculations that provided the Credence-Weighted Borda Scores shown in the above table are as follows:

G: 0.35 * 2 + 0.34 * -2 + 0.31 * -2 = -0.6 (this is because the utilitarian, common sense, and deontological theories are given credences of 35%, 34%, and 31%, respectively, and these serve as the weightings for the Borda Scores these theories provide)

R: 0.35 * 0 + 0.34 * 2 + 0.31 * 0 = 0.68

I: 0.35 * -2 + 0.34 * 0 + 0.31 * 2 = -0.08
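These calculations can be reproduced with a minimal Python sketch. It follows the quoted definition (options ranked below minus options ranked above), so it also handles ties. Each theory's ordering is encoded as arbitrary integer "levels", where higher means more choice-worthy; only comparisons between levels are used. The function names and this encoding are illustrative assumptions.

```python
# Illustrative sketch of the Borda Rule as quoted from MacAskill above.
# Orderings are encoded as integer "levels" (higher = more choice-worthy);
# the encoding and function names are assumptions.


def borda_score(option, levels):
    """Options ranked below `option` minus options ranked above it."""
    below = sum(1 for level in levels.values() if level < levels[option])
    above = sum(1 for level in levels.values() if level > levels[option])
    return below - above


def credence_weighted_borda(credences, rankings):
    options = next(iter(rankings.values()))
    return {
        option: sum(credences[t] * borda_score(option, rankings[t]) for t in credences)
        for option in options
    }


credences = {"utilitarianism": 0.35, "common sense": 0.34, "deontology": 0.31}
rankings = {
    "utilitarianism": {"G": 3, "R": 2, "I": 1},  # G ≻ R ≻ I
    "common sense": {"G": 1, "R": 3, "I": 2},  # R ≻ I ≻ G
    "deontology": {"G": 1, "R": 2, "I": 3},  # I ≻ R ≻ G
}
scores = credence_weighted_borda(credences, rankings)
print({option: round(score, 2) for option, score in scores.items()})
# {'G': -0.6, 'R': 0.68, 'I': -0.08}
print(max(scores, key=scores.get))  # R
```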

BR would therefore claim that Julia should call for a retrial. This is the case even though passing a guilty verdict was seen as best by Julia’s “favourite theory” (the variant of utilitarianism). Essentially, calling for a retrial is preferred because both passing a guilty verdict and passing an innocent verdict were seen as least preferred by some theory Julia has substantial credence in, whereas calling for a retrial is not least preferred by any theory.

MacAskill notes that preferring this sort of compromise option in a case like this seems intuitively right. He also argues that alternatives to BR fail to give us the sort of answers we’d want in these or other sorts of cases. (Though Tarsney raises some objections to BR which I won't get into.)

Closing remarks

I hope you have found this post a useful, clear summary of key ideas around what moral uncertainty is, why it matters, and how to make decisions when morally uncertain. Personally, I believe that an understanding of moral uncertainty - particularly a sort of heuristic version of MEC - has usefully enriched my thinking, and influenced some of the biggest decisions I’ve made over the last year.[10]

In the next post, I will discuss (possibly novel, arguably obvious) extensions of each of the three approaches discussed here, in order to allow for modelling both moral and empirical uncertainty, explicitly and simultaneously. The post after that will discuss how we can combine the approaches in the first two posts with sensitivity analysis and value of information analysis.[11][12]

[1] I genuinely mean no disrespect to the several posts on moral uncertainty I did discover (e.g., here, here, and here). All did meet some of those criteria, and I’d say most were well-written but just weren’t highly explicit (e.g., didn’t include enough concrete examples), and/or didn’t cover (in the one post) each of the prominent approaches and the related ideas necessary to understand them.

[2] Other terms/concepts that are sometimes used and are similar to “moral uncertainty” are normative, axiological, and value uncertainty. In this sequence, I’ll use “moral uncertainty” in a general sense that also incorporates axiological and value uncertainty, and at least a large part of normative uncertainty.

Also, throughout this sequence, I will use the term "approach" in a way that I believe aligns with MacAskill's use of the term "metanormative theory".

[3] It seems to me that there are many cases where it’s not entirely clear whether the uncertainty is empirical or moral. For example, I might wonder “Are fish conscious?”, which seems on the face of it an empirical question. However, I might not yet know precisely what I mean by “conscious”, and only really want to know whether fish are “conscious in a sense I would morally care about”. In this case, the seemingly empirical question becomes hard to disentangle from the (seemingly moral) question “What forms of consciousness are morally important?”

(Furthermore, my answers to that question in turn may be influenced by empirical discoveries. For example, I may initially believe avoidance of painful stimuli demonstrates consciousness in a morally relevant sense, but then change that belief after learning that this behaviour can be displayed in a stimulus-response way by certain extremely simple organisms.)

In such cases, I believe the approach suggested in the next post of this sequence will still work well, as that approach does not really require empirical and moral uncertainty to be treated fundamentally differently. (Another approach, which presents itself differently but I think is basically the same in effect, is to consider uncertainty over “worldviews”, with those worldviews combining moral and empirical claims.)

[4] In various places in this sequence, I will use language that may appear to endorse or presume moral realism (e.g., referring to “moral information” or to the probability of a particular moral theory being “true”). But this is essentially just for convenience; I intend this sequence to be neutral on the matter of moral realism vs antirealism, and I believe this post can be useful in mostly similar ways regardless of one’s position on that matter. I discuss the matter of "moral uncertainty for antirealists" in more detail in this separate post.

[5] The matter of how to actually assign “units” or “magnitudes” of choice-worthiness to different options, and what these things would even mean, is complex, and I won’t really get into it in this sequence.

[6] Christian Tarsney's 2017 thesis (e.g., pages 175-176) explains other ways the "structure" of moral theories can differ, and potential implications of these other differences. These were among the juicy complexities I had to resist cramming into this originally-intended-as-bitesized post (but I may write another post about Tarsney's ideas later; please let me know if you think that'd be worthwhile).

[7] It's worth noting that similar issues have received attention from, and are relevant to, other fields as well. For example, MacAskill writes: "A similar problem arises in the study of social welfare in economics: it is desirable to be able to compare the strength of preferences of different people, but even if you represent preferences by cardinally measurable utility functions you need more information to make them comparable." Thus, concepts and findings from those fields could illuminate this matter, and vice versa.

[8] Suppose Alice assigns a 60% probability to hedonistic utilitarianism (HU) being true and a 40% probability to preference utilitarianism (PU) being true. Suppose also that Bob wants to play video games, but would actually get slightly more joy out of a day at the beach. Thus, according to HU, letting Bob play video games has a CW of 5, and taking him to the beach has a CW of 6; while according to PU, letting Bob play video games has a CW of 15, and taking him to the beach has a CW of -20.

Under these conditions, the expected choice-worthiness of letting Bob play video games is 0.6 * 5 + 0.4 * 15 = 9, and the expected choice-worthiness of taking Bob to the beach is 0.6 * 6 + 0.4 * -20 = -4.4. Therefore, Alice should let Bob play video games.

Analogously to the situation with the Devon example, this is despite Alice believing HU is more likely than PU, and despite HU positing that taking Bob to the beach is better than letting him play video games. As before, the reason is that there is “more at stake” in this decision for the less-believed theory than for the more-believed theory; HU considers there to be only a very small difference between the choice-worthiness of the options, while PU considers there to be a large difference.
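For readers who want to check this arithmetic, here is a minimal Python sketch of the same MEC calculation (the dictionary layout is an illustrative assumption, not anything from MacAskill's thesis):

```python
# Illustrative check of the Alice/Bob MEC arithmetic; layout is assumed.
credences = {"HU": 0.6, "PU": 0.4}
choice_worthiness = {
    "HU": {"video games": 5, "beach": 6},
    "PU": {"video games": 15, "beach": -20},
}
# Expected choice-worthiness: credence-weighted sum over theories.
expected = {
    option: sum(credences[t] * choice_worthiness[t][option] for t in credences)
    for option in ("video games", "beach")
}
print({option: round(value, 2) for option, value in expected.items()})
# {'video games': 9.0, 'beach': -4.4}
print(max(expected, key=expected.get))  # video games
```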

[9] MacAskill later notes that a simpler method (which doesn’t subtract the number of options that are more choice-worthy) can be used when there are no ties. His calculations for the example I quote and work through in this post use that simpler method. But in this post, I’ll stick to the method MacAskill describes in this quote (which is guaranteed to give the same final answer in this example anyway).

[10] However, these concepts are of course not an instant fix or cure-all. In a (readable and interesting) 2019 paper, MacAskill writes “so far, the implications for practical ethics have been drawn too simplistically [by some philosophers.] First, the implications of moral uncertainty for normative ethics are far more wide-ranging than has been noted so far. Second, one can't straightforwardly argue from moral uncertainty to particular conclusions in practical ethics, both because of ‘interaction’ effects between moral issues, and because of the variety of different possible intertheoretic comparisons that one can reasonably endorse.”

For a personal example, a heuristic version of MEC still leaves me unsure whether I should move from being a vegetarian-flirting-with-veganism to a strict vegan, or even whether I should spend much time making that decision, because that might trade off to some extent with time and money I could put towards longtermist efforts (which seem more choice-worthy according to other moral theories I have some credence in). I suspect any quantitative modelling simple enough to be done in a reasonable amount of time would still leave me unsure.

That said, I, like MacAskill (in the same paper), “do believe, however, that consideration of moral uncertainty should have major impacts for how practical ethics is conducted. [...] It would be surprising if the conclusions [of approaches taking moral uncertainty into account] were the same as those that practical ethicists typically draw.”

In particular, I’d note that considering moral uncertainty can reveal some “low-hanging fruit”: some “trades” between moral theories that are relatively clearly advantageous, due to large differences in the “stakes” different moral theories see the situation as having. (Personally, cases of apparent low-hanging fruit of this kind have included becoming at least vegetarian, switching my career aims to longtermist ones, and yet engaging in global-poverty-related movement-building when an unusual opportunity arose and it wouldn’t take up too much of my time.)

[11] To foreshadow: Basically, my idea is that, once you’ve made explicit your degree of belief in various moral theories and how good/bad outcomes appear to each of those theories, you can work out which updates to your beliefs in moral theories or to your understandings of those moral theories are most likely to change your decisions, and thus which “moral learning” to prioritise and how many resources to expend on it.

I’m also considering later adding posts on:

- Different types and sources of moral uncertainty (drawing on these posts).

- The idea of ignoring even very high credence in nihilism, because it’s never decision-relevant.

- Whether it could make sense to give moral realism disproportionate (compared to antirealism) influence over our decisions, based on the idea that realism might view there as “more at stake” than antirealism does.

I’d be interested in hearing whether people think those threads are likely to be worth pursuing.

26 comments


comment by Said Achmiz (SaidAchmiz) · 2019-12-30T23:40:40.337Z · LW(p) · GW(p)

Forgive me for being dense, but… I started reading this post thinking that this was going to be… well, an introduction to moral uncertainty… and was confused to realize that you don’t actually introduce or explain moral uncertainty (you just offhandedly provide an external link—which also doesn’t really introduce moral uncertainty, instead just assuming it as an understood concept).

Would you consider writing an actual introduction to moral uncertainty? I would find this useful, I think (and I suspect others would, too).

↑ comment by MichaelA · 2019-12-31T00:13:24.803Z · LW(p) · GW(p)

Good point. I wondered if maybe this should instead be called "Overview of moral uncertainty" or "Making decisions under moral uncertainty" (edit: I've now changed it to the latter title, both here and on the EA forum, partly due to your feedback).

Do you mean adding a paragraph or two at the start, or a whole other post?

Part of me wants to say I don't think there's really that much to say about moral uncertainty itself, before getting into how to handle it. I also think it's probably best explained through examples, which it therefore seems efficient to combine with examples of handling it (e.g., the Devon example both illustrates an instance of moral uncertainty, and how to handle it, saving the reader time). But maybe in that case I should explicitly note early in the post that I'll mostly illustrate moral uncertainty through the examples to come, rather than explaining it abstractly up front.

But I am also considering writing a whole post on different types/sources of moral uncertainty (particularly integrating ideas from posts by Justin Shovelain, Kaj_Sotala, Stuart_Armstrong, an anonymous poster, and a few other places). This would, for example, discuss how it can be conceptualised under moral realism vs under antirealism. So maybe I'll try to write that soon, and then provide near the start of this post a very brief summary of (and link to) that.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-31T02:24:59.762Z · LW(p) · GW(p)

Do you mean adding a paragraph or two at the start, or a whole other post?

I would think an entire post would be needed, yes. (At least!)

But I am also considering writing a whole post on different types/sources of moral uncertainty (particularly integrating ideas from posts by Justin Shovelain, Kaj_Sotala, Stuart_Armstrong, an anonymous poster, and a few other places). This would, for example, discuss how it can be conceptualised under moral realism vs under antirealism. So maybe I’ll try to write that soon, and then provide near the start of this post a very brief summary of (and link to) that.

This sounds promising.

Basically, I’m wondering the following (this is an incomplete list):

- What is this ‘moral uncertainty’ business?

- Where did this idea come from; what is its history?

- What does it mean to be uncertain about morality?

- Is ‘moral uncertainty’ like uncertainty about facts? How so? Or is it different? How is it different?

- Is moral uncertainty like physical, computational, or indexical uncertainty? Or all of the above? Or none of the above?

- How would one construe increasing or decreasing moral uncertainty?

… etc., etc. To put it another way—Eliezer spends a big part of the Sequences discussing probability and uncertainty about facts, conceptually and practically and mathematically, etc. It seems like ‘moral uncertainty’ deserves some of the same sort of treatment.

↑ comment by MichaelA · 2019-12-31T03:56:44.059Z · LW(p) · GW(p)

Ok, this has increased the likelihood I'll commit the time to writing that other post. I think it'll address some of the sorts of questions you list, but not all of them.

One reason is that I'm not a proper expert on this.

Another reason is that I think that, very roughly speaking, answers to a lot of questions like that would be "Basically import what we already know about regular/factual/empirical uncertainty." For moral realists, the basis for the analogy seems clear. For moral antirealists, one can roughly imagine dealing with moral uncertainty as something like trying to work out the fact of the matter about one's own preferences, or one's idealised preferences (something like CEV). But that other post I'll likely write should flesh this out a bit more.

↑ comment by Kaj_Sotala · 2020-01-02T11:36:13.932Z · LW(p) · GW(p)

Part of me wants to say I don't think there's really that much to say about moral uncertainty itself, before getting into how to handle it.

I'm confused by you saying this, given that you indicate having read my post on types of moral uncertainty. To me the different types warrant different ways of dealing with them. For example, intrinsic moral uncertainty was defined as different parts of your brain having fundamental disagreements about what kind of a value system to endorse. That kind of situation would require entirely different kinds of approaches, ones that would be better described as psychological than decision-theoretical.

It seems to me that before outlining any method for dealing with moral uncertainty, one would need to outline what type of MU it was applicable for and why.

↑ comment by MichaelA · 2020-01-03T00:03:26.015Z · LW(p) · GW(p)

I'm now definitely planning to write the above-mentioned post, discussing various definitions, types, and sources of moral uncertainty. As this will require thinking more deeply about that topic, I'll be able to answer more properly once I have. (And I'll also comment with a link to that post in this thread.)

For now, some thoughts in response to your comment, which are not fully-formed and not meant to be convincing; just meant to indicate my current thinking and maybe help me formulate that other post through discussion:

- Well, I did say "part of me"... :)

- I think the last two sentences of your comment raise an interesting point worth taking seriously

- I do think there are meaningfully different ways we can think about what moral uncertainty is, and that a categorisation/analysis of the different types and "sources" (i.e., why is one morally uncertain) could advance one's thinking (that's what that other post I'll write will aim to do)

- I think the main way it would help advance one's thinking is by giving clues as to what one should do to resolve one's uncertainty.

- This seems to me to be what your post focuses on, for each of the three types you mention. E.g., to paraphrase (to relate this more to humans than AI, though both applications seem important), you seem to suggest that if the source of the uncertainty is our limited self-knowledge, we should engage in processes along the lines of introspection. In contrast, if the source of our uncertainty is that we don't know what we'd enjoy/value because we haven't tried it, we should engage with the world to learn more. This all seems right to me.

- But what I cover in this post and the following one is how to make decisions when one is morally uncertain. I.e., imagining that you are stuck with uncertainty, what do you do? This is a different question to "how do I get rid of this uncertainty and find the best answer" (resolving it).

- (Though in reality the best move under uncertainty may actually often be to gather more info - which I'll discuss somewhat in my upcoming post on applying value of information analysis to this topic - in which case the matter of how to resolve the uncertainty becomes relevant again.)

- I'd currently guess (though I'm definitely open to being convinced otherwise) that the different types and sources of moral uncertainty don't have substantial bearing on how to make decisions under moral uncertainty. This is for three main reasons:

- Analogy to empirical uncertainty: There are a huge number of different reasons I might be empirically uncertain - e.g., I might not have enough data on a known issue, I might have bad data on the issue, I might have the wrong model of a situation, I might not be aware of a relevant concept, I might have all the right data and model but limited processing/computation ability/effort. And this is certainly relevant to the matter of how to resolve uncertainty. But as far as I'm aware, expected value reasoning/expected utility theory is seen as the "rational" response in any case of empirical uncertainty. (Possibly excluding edge cases like Pascal's wagers, which in any case seem to be issues related to the size of the probability rather than to the type of uncertainty.) It seems that, likewise, the "right" approach to making decisions under moral uncertainty may apply regardless of the type/source of that uncertainty (especially because MEC was developed by conscious analogy to approaches for handling empirical uncertainty).

- The fact that most academic sources I've seen about moral uncertainty seem to just very briefly discuss what moral uncertainty is, largely through analogy to empirical uncertainty and an example, and then launch into how to make decisions when morally uncertain. (Which is probably part of why I did it that way too.) It's certainly possible that there are other sources I haven't seen which discuss how different types/sources might suggest different approaches would be best. It's also certainly possible the academics all just haven't thought of this issue, or haven't taken it seriously enough. But to me this is at least evidence that the matter of types/sources of moral uncertainty shouldn't affect how one makes decisions under moral uncertainty.

- (That said, I'm a little surprised I haven't yet seen academic sources analysing different types/sources of moral uncertainty in order to discuss the best approaches for resolving it. Maybe they feel that's effectively covered by more regular moral philosophy work. Or maybe it's the sort of thing that's better as a blog post than an academic article.)

- I can't presently see a reason why one should have a different decision-making procedure/aggregation procedure/approach under moral uncertainty depending on the type/source of uncertainty. (This is partly double-counting the points about empirical uncertainty and academic sources, but here I'm also indicating I've tried to think this through myself at least a bit.)

↑ comment by Kaj_Sotala · 2020-01-04T13:01:36.022Z · LW(p) · GW(p)

Thanks! This bit in particular

I think the main way it would help advance one's thinking is by giving clues as to what one should do to resolve one's uncertainty. [...] But what I cover in this post and the following one is how to make decisions when one is morally uncertain. I.e., imagining that you are stuck with uncertainty, what do you do? This is a different question to "how do I get rid of this uncertainty and find the best answer" (resolving it).

Makes sense to me, and clarified your approach. I think I agree with it.


↑ comment by MichaelA · 2020-01-07T10:55:42.676Z · LW(p) · GW(p)

So the post I decided to write based on Said Achmiz and Kaj_Sotala's feedback will now be at least three posts. Turns out you two were definitely right that there's a lot worth saying about what moral uncertainty actually is!

The first post, which takes an even further step back and compares "morality" to related concepts, is here. I hope to publish the next one, half of a discussion of what moral uncertainty is, in the next couple of days.

↑ comment by MichaelA · 2020-01-11T13:53:20.129Z · LW(p) · GW(p)

I've just finished the next post too - this one comparing moral uncertainty itself (rather than morality) to related concepts.

↑ comment by MichaelA · 2020-01-29T20:57:33.763Z · LW(p) · GW(p)

I've finally gotten around to the post you two would probably be most interested in, on (roughly speaking) moral uncertainty for antirealists/subjectivists (as well as for AI alignment, and for moral realists in some ways). That also touches on how to "resolve" the various types of uncertainty I propose.

comment by Pattern · 2019-12-30T22:52:01.490Z · LW(p) · GW(p)

This post is great.

Commentary:

My impression is that popular consequentialist theories are typically cardinal, while popular non-consequentialist theories are typically ordinal. For example, a Kantian theory may simply tell you that lying is worse than not lying, but not by how much, so you cannot directly weigh that “bad” against the goodness/badness of other actions/outcomes (whereas such comparisons are relatively easy under most forms of utilitarianism).

In this sense, this brand of non-consequentialist theories seems to be an amalgamation of 'moral theories'.

8. Please let me know if you think it’d be worth me investing more time, and/or adding more words, to try to make this section - or the prior one - clearer. (Also, just let me know about any other feedback you might have!)

It would be useful to have an example of how Variance Voting works. Also, the examples for the other methods are fantastic!

The post after that will discuss how we can combine the approaches in the first two posts with sensitivity analysis and value of information analysis.

What is sensitivity analysis? And is there any literature or information on how value of information analysis can account for things like unknown unknowns?

or even whether I should spend much time making that decision, because that might trade off to some extent with time and money I could put towards longtermist efforts (which seem more choice-worthy according to other moral theories I have some credence in).

What longtermist efforts might there be according to the theory that (if you were certain of) you'd choose to be vegan?

General:

This post is concerned with MacAskill's (pure) approaches to moral uncertainty. Here are links to some alternatives:

the parliamentary model and a bargaining-theoretic approach

Things that aren't in this post:

1

5. The matter of how to actually assign “units” or “magnitudes” of choice-worthiness to different options, and what these things would even mean, is complex, and I won’t really get into it in this sequence.

(Hybrid procedures will not be discussed in this post; interested readers can refer to MacAskill's thesis.)

(Link from earlier in the post: Will MacAskill’s 2014 thesis. Alas, its table of contents doesn't entirely use the same terminology, so it's not clear where Hybrid procedures are discussed. However, if they are discussed after Variance Voting, as in the post, then this may be somewhere after page 89.)

3.

7. MacAskill later notes that a simpler method (which doesn’t subtract the number of options that are more choice-worthy) can be used when there are no ties. His calculations for the example I quote and work through in this post use that simpler method. But in this post, I’ll stick to the method MacAskill describes in this quote (which is guaranteed to give the same final answer in this example anyway).

4.

9. In a (readable and interesting) 2019 paper, MacAskill writes “so far, the implications for practical ethics have been drawn too simplistically [by some philosophers.]
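Footnote 7 (quoted above) contrasts the Borda scoring that subtracts the number of more choice-worthy options with a simpler variant that only counts less choice-worthy ones. A minimal sketch of the credence-weighted Borda Rule, assuming every theory ranks the same option set; the theories, credences, and rankings are hypothetical, and each theory supplies only an ordering (rank numbers where higher means more choice-worthy).

```python
# Hypothetical sketch of the credence-weighted Borda Rule; the theories,
# credences, and rankings are made up. Each theory only supplies an ordering,
# given here as rank numbers where higher means more choice-worthy.

def borda_score(option, ranks, subtract_better=True):
    """Options ranked below `option`, minus (optionally) options ranked above it."""
    worse = sum(1 for v in ranks.values() if v < ranks[option])
    if not subtract_better:
        return worse  # the simpler variant, meant for cases without ties
    better = sum(1 for v in ranks.values() if v > ranks[option])
    return worse - better


def credence_weighted_borda(credences, theories, subtract_better=True):
    """Sum each option's Borda scores across theories, weighted by credence."""
    options = next(iter(theories.values()))  # assumes every theory ranks the same options
    return {
        o: sum(credences[t] * borda_score(o, theories[t], subtract_better) for t in theories)
        for o in options
    }


credences = {"T1": 0.40, "T2": 0.35, "T3": 0.25}
theories = {
    "T1": {"A": 3, "B": 2, "C": 1},  # A > B > C
    "T2": {"A": 1, "B": 3, "C": 2},  # B > C > A
    "T3": {"A": 1, "B": 2, "C": 3},  # C > B > A
}
full = credence_weighted_borda(credences, theories)
simple = credence_weighted_borda(credences, theories, subtract_better=False)
print(max(full, key=full.get), max(simple, key=simple.get))  # B B
```

Here B wins even though A is the favourite of the theory with the most credence, because B is ranked well by all three theories. With n options, no ties, and credences summing to 1, each full score equals 2 × (simple score) − (n − 1), so the two variants always rank options identically, which is consistent with the footnote's remark that they give the same final answer.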

↑ comment by MichaelA · 2019-12-31T00:54:26.173Z · LW(p) · GW(p)

(Link from earlier in the post: Will MacAskill’s 2014 thesis. Alas, its table of contents doesn't entirely use the same terminology, so it's not clear where Hybrid procedures are discussed. However, if they are discussed after Variance Voting, as in the post, then this may be somewhere after page 89.)

Oh, good point, should've provided page numbers! Hybrid procedures (or Hybrid Views) are primarily discussed in pages 117-122. I've now edited my post to include those page numbers. (You can also use the command+f/control+f to find key terms in the thesis you're after.)

↑ comment by MichaelA · 2019-12-31T00:48:38.615Z · LW(p) · GW(p)

"or even whether I should spend much time making that decision, because that might trade off to some extent with time and money I could put towards longtermist efforts (which seem more choice-worthy according to other moral theories I have some credence in)."

What longtermist efforts might there be according to the theory that (if you were certain of) you'd choose to be vegan?

Not sure I understand this question. I'll basically expand on/explain what I said, and hope that answers your question somewhere. (Disclaimer: This is fairly unpolished, and I'm trying more to provide you an accurate model of my current personal thinking than provide you something that appears wise and defensible.)

I currently have fairly high credence in longtermism, which I'd roughly phrase as the view that, even in expectation and given difficulties with distant predictions, most of the morally relevant consequences of our actions lie in the far future (meaning something like "anywhere from 100 years from now till the heat death of the universe"). In addition to that fairly high credence, it seems to me intuitively that the "stakes are much higher" on longtermism than on non-longtermist theories (e.g., a person-affecting view that only cares about people alive today). (I'm not sure if I can really formally make sense of that intuition, because maybe those theories should be seen as incomparable and I should use variance voting to give them equal say, but at least for now that's my tentative impression.)

I also probably have somewhere between 10% and 90% credence that at least many nonhuman animals are morally conscious in a relevant sense, with non-negligible moral weights. And this theory again seems to suggest much higher stakes than a human-only view would (there are a bunch of reasons one might object to this, and they do lower my confidence, but it still seems like it makes more sense to say that animal-inclusive views say there's all the potential value/disvalue the human-only view said there was, plus a whole bunch more). I haven't bothered pinning down my credence much here, because multiplying 0.1 by the amount of suffering caused by an individual's contributions to factory farming if that view is correct already seems enough to justify vegetarianism, making my precise credence less decision-relevant. (I may use a similar example in my post on value of information, now that I think about it.)
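The reasoning that the lower end of the credence range already settles the question can be written as a break-even calculation. A minimal sketch, assuming a single harm figure and a single cost figure on a shared scale; both numbers are made up for illustration and are not estimates from this comment.

```python
# Hypothetical numbers in arbitrary, assumed-comparable units of
# choice-worthiness; none of these figures come from the post.
harm_if_animals_matter = 50.0  # disvalue of one person's meat-eating if the animal-inclusive view is right
cost_of_vegetarianism = 1.0    # personal cost, counted whichever view is right


def expected_net_benefit(credence):
    """Expected benefit of going vegetarian given credence that animals matter."""
    return credence * harm_if_animals_matter - cost_of_vegetarianism


break_even = cost_of_vegetarianism / harm_if_animals_matter
print(break_even)  # 0.02
for credence in (0.1, 0.5, 0.9):
    print(credence, expected_net_benefit(credence) > 0)  # True for all three
```

Whenever the break-even credence lies below the bottom of one's credence range (here 0.02 versus 0.1), pinning the credence down more precisely cannot change the decision, which is the sense in which it is "less decision-relevant".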

As MacAskill notes in that paper, while vegetarianism is typically cheaper than meat-eating, strict vegetarianism will almost certainly be at least slightly more expensive (or inconvenient/time-consuming) than "vegetarian except when it's expensive/difficult". A similar thing would likely apply more strongly to veganism. So the more I move towards strict veganism, the more time and money it costs me. It's not much, and a very fair argument could be made that I probably spend more on other things, like concert tickets or writing these comments. But it still does trade off somewhat against my longtermist efforts (currently centring on donating to the EA Long Term Future Fund and gobbling up knowledge and skills so I can do useful direct work, but I'll also be starting a relevant job soon).

To me, the stakes under "longtermism plus animals matter" seem higher than under "animals matter" alone. Additionally, I have fairly high credence in longtermism, and no reason to believe that conditioning on animals mattering makes longtermism less likely (so even if I accept "animals matter", I'd have basically exactly the same fairly high credence in longtermism as before).

It therefore seems that a heuristic MEC type of thinking should make me lean towards what longtermism says I should do, though with "side constraints" or "low hanging fruits being plucked" from an "animals matter" perspective. This seems extra robust because, even if "animals matter", I still expect longtermism is fairly likely, and then a lot of the policies that seem wise from a longtermist angle (getting us to existential safety, expanding our moral circle, raising our wisdom and ability to prevent suffering and increase joy) seem fairly wise from an animals matter angle too (because they'd help us help nonhumans later). (But I haven't really tried to spell that last assumption out to check it makes sense.)

↑ comment by MichaelA · 2019-12-31T00:28:17.985Z · LW(p) · GW(p)

What is sensitivity analysis?

This is a somewhat hard to read (at least for me) Wikipedia article on sensitivity analysis. It's a common tool; my extension of it to moral uncertainty would basically boil down to "Do what people usually advise, but for moral uncertainty too." I'll link this comment (and that part of this post) to my post on that once I've written it.

Also, sensitivity analysis is extremely easy in Guesstimate (though I'm not yet sure precisely how to interpret the results). Here's the Guesstimate model that'll be the central example in my upcoming post. To do a sensitivity analysis, just go to the variable of interest (in this case, the key outcome is "Should Devon purchase a fish meal (0) or a plant-based meal (1)?"), click the cog/speech bubble, click "Sensitivity". On all the variables feeding into this variable, you'll now see a number in green showing how sensitive the output is to this input.

In this case, it appears that the variables the outcome is most sensitive to (and thus that are likely most worth gathering info on) are the empirical question of how many fish hedons the fish meal would cause, followed at some distance by the moral question of the choice-worthiness of each fish hedon according to T1 (how much does that theory care about fish?) and the empirical question of how many human hedons the fish meal would cause (how much would Devon enjoy the meal?).
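For readers without Guesstimate, a comparable Monte Carlo sensitivity check can be sketched in plain Python. This is a rough sketch under stated assumptions: the input distributions and the 0.3 credence in T1 are hypothetical (not the values in the Guesstimate model), the output is a continuous "expected choice-worthiness advantage of the fish meal" rather than the model's 0/1 decision, and sensitivity is measured as the Pearson correlation between each input's samples and the output. That is one common approach, and not necessarily what Guesstimate computes.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible
N = 10_000
CREDENCE_T1 = 0.3  # hypothetical credence in T1 (the theory that cares about fish)

# Hypothetical input distributions, not the author's Guesstimate values
fish_hedons = [random.uniform(10, 200) for _ in range(N)]  # fish suffering caused by the fish meal
t1_weight = [random.uniform(0.5, 1.5) for _ in range(N)]   # T1's choice-worthiness per fish hedon
human_hedons = [random.uniform(1, 5) for _ in range(N)]    # Devon's extra enjoyment from the fish meal

# Expected choice-worthiness advantage of the fish meal over the plant-based one:
# both theories count Devon's enjoyment; only T1 also counts the fish's suffering.
advantage = [h - CREDENCE_T1 * w * f for f, w, h in zip(fish_hedons, t1_weight, human_hedons)]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


sensitivity = {
    "fish hedons": pearson(fish_hedons, advantage),
    "T1 weight per fish hedon": pearson(t1_weight, advantage),
    "human hedons": pearson(human_hedons, advantage),
}
# Print inputs from most to least influential on the output
for name, r in sorted(sensitivity.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: r = {r:+.2f}")
```

Under these made-up distributions the ordering matches the one described above (fish hedons first, then T1's weight per fish hedon, with Devon's enjoyment a distant third), but the ordering depends entirely on how wide each input's distribution is assumed to be.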

And is there any literature or information on how value of information analysis can account for things like unknown unknowns?

Very good question. I'm not sure, but I'll try to think about and look into that.

↑ comment by MichaelA · 2019-12-31T00:20:15.368Z · LW(p) · GW(p)

It would be useful to have an example of how Variance Voting works. Also, the examples for the other methods are fantastic!

Thanks! I try to use concrete examples wherever possible, and especially when dealing with very abstract topics. Basically, if it was a real struggle for me to get to an understanding from existing sources, that signals to me that examples would be especially useful here, to make things easier on others.

In the case of Variance Voting, I think I stopped short of fully getting to an internalised, detailed understanding, partly because MacAskill doesn't actually provide any numerical examples (only graphical illustrations and an abstract explanation). I'll try to read the paper he links to and then update this with an example.
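As a hedged numerical illustration of the general idea (not the worked example later added to the post), here is a minimal sketch of variance voting. It assumes each theory's choice-worthiness is rescaled to mean 0 and variance 1 over the option set, with every option weighted equally; that equal weighting is a simplifying assumption, and the theories, credences, and numbers are hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical sketch of variance voting: theories, credences, and numbers are
# made up. Assumes each theory's choice-worthiness is normalised to mean 0 and
# variance 1 over the option set, weighting every option equally.


def normalise(cw):
    """Rescale one theory's choice-worthiness to mean 0, variance 1 across options."""
    values = list(cw.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return {o: 0.0 for o in cw}  # an indifferent theory has no say
    return {o: (v - mu) / sigma for o, v in cw.items()}


def variance_voting(credences, theories):
    """Credence-weighted sum of normalised choice-worthiness for each option."""
    normalised = {t: normalise(cw) for t, cw in theories.items()}
    options = next(iter(theories.values()))  # assumes a shared option set
    return {o: sum(credences[t] * normalised[t][o] for t in theories) for o in options}


credences = {"T1": 0.4, "T2": 0.6}
theories = {
    "T1": {"A": 1000.0, "B": 0.0, "C": 0.0},  # huge numbers, cares only about A
    "T2": {"A": 0.0, "B": 1.0, "C": 2.0},
}
raw = {o: sum(credences[t] * theories[t][o] for t in theories) for o in theories["T1"]}
voted = variance_voting(credences, theories)
print(max(raw, key=raw.get), max(voted, key=voted.get))  # A C
```

Without normalisation, T1's large numbers swamp T2 and A wins; after normalisation each theory's influence depends only on its credence and on how it spreads its evaluations across the options, so C wins. Multiplying all of T1's numbers by any positive constant leaves the normalised result unchanged, which is the sense in which variance voting gives otherwise incomparable theories "equal say".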

↑ comment by MichaelA · 2020-01-01T13:13:41.842Z · LW(p) · GW(p)

I've now substantially updated/overhauled this article, partly in response to your feedback. One big thing was reading more about variance voting/normalisation and related ideas, and, based on that, substantially changing how I explain that idea and adding a (somewhat low confidence) worked example. Hope that helps make that section clearer.

If there are things that still seem unclear, and especially if anyone thinks I've made mistakes in the Variance Voting part, please let me know.

↑ comment by MichaelA · 2019-12-31T00:00:25.879Z · LW(p) · GW(p)

Thanks for your kind words and your feedback/commentary!

(I'll split my reply into multiple comments to make following the threads easier.)

In this sense, this brand of non-consequentialist theories seems to be an amalgamation of 'moral theories'.

I'm not sure I see what you mean by that. Skippable guesses to follow:

Do you mean something like "this brand of non-consequentialist theories seem to basically just be a collection of common sense intuitions"? If so, I think that's part of the intention for any moral theory.

Or do you mean something like that, plus that that brand of non-consequentialist theory hasn't abstracted away from those intuitions much (such that they're liable to something like overfitting, whereas something like classical utilitarianism errs more towards underfitting by stripping everything down to one single strong intuition[1]), and wouldn't provide preference orderings that satisfy axioms of rationality/expected utility[2]? If so, I agree with that too, and that's why I personally find something like classical utilitarianism far more compelling. But it's also an "issue" that a lot of smart people are aware of, and yet they still endorse the non-consequentialist theories, so I think it's still important for our moral uncertainty framework to be able to handle such theories.

Or do you mean something like "this brand of non-consequentialist theory is basically what you'd get if you averaged (or took a credence-weighted average) across all moral theories"? If so, I'm pretty sure I disagree, and one indication that this is probably incorrect is that accounting for moral uncertainty seems likely to lead to fairly different results than just going with an ordinal Kantian theory.

Or is intention behind words not a multiple choice test, in which case please provide your short-answer and/or essay response :p

[1] My thinking here is influenced by pages 26-28 of Nick Beckstead's thesis, though it was a while ago that I read them.

[2] Disclaimer: I don't yet understand those axioms in detail myself; I think I get the gist, but often when I talk about them it's more like I'm extrapolating what conclusions smart people would draw based on others I've seen them draw, rather than knowing what's going on under the hood.

In this case, one relevant smart person is MacAskill, who says in his thesis: "Many theories do provide cardinally measurable choice-worthiness: in general, if a theory orders empirically uncertain prospects in terms of their choice-worthiness, such that the choice-worthiness relation satisfies the axioms of expected utility theory [footnote mentioning von Neumann et al.], then the theory provides cardinally measurable choice-worthiness." This seems to me to imply (as a matter of how people speak, not by actual logic) that theories that aren't cardinal, like the hypothesised Kantian theory, don't meet the axioms of expected utility theory.

Replies from: Pattern↑ comment by Pattern · 2019-12-31T00:42:06.081Z · LW(p) · GW(p)

In this sense, this brand of non-consequentialist theories seems to be an amalgamation of 'moral theories'.

I'm not sure I see what you mean by that.

The section on the Borda Rule is about how to combine theories under consideration that only rank outcomes ordinally. The lack of information about how these non-consequentialist theories rank outcomes could stem from them being underspecified - or a combination approach as your post describes, though probably one of a different form than described here.

is basically what you'd get if you averaged (or took a credence-weighted average) across all moral theories"?

I wouldn't say "all" - though it might be an average across moral theories that could be considered separately. They're complicated theories, but maybe the pieces make more sense, or it'll make more sense if disassembled and reassembled.

like the hypothesised Kantian theory, don't meet the axioms of expected utility theory.

This may be true of other non-consequentialist theories. What I am familiar with of Kant's reasoning was a bit consequentialist, and if "this leads to a bad circumstance under some circumstance -> never do it even under circumstances when doing it leads to bad consequences" (which means the analysis could come to a different conclusion if it was done in a different order or reversed the action/inaction related bias) is dropped in favor of "here are the reference classes, use the policy with the highest expected utility given this fixed relationship between preference classes and policies" then it can be made into one that might meet the axioms.

↑ comment by MichaelA · 2019-12-31T06:03:50.351Z · LW(p) · GW(p)

What I am familiar with of Kant's reasoning was a bit consequentialist, and if "this leads to a bad circumstance under some circumstance -> never do it even under circumstances when doing it leads to bad consequences" (which means the analysis could come to a different conclusion if it was done in a different order or reversed the action/inaction related bias) is dropped in favor of "here are the reference classes, use the policy with the highest expected utility given this fixed relationship between preference classes and policies" then it can be made into one that might meet the axioms.

I think that's one way one could try to adapt Kantian theories, or extrapolate certain key principles from them. But I don't think it's what the theories themselves say. I think what you're describing lines up very well with rule utilitarianism.

(Side note: Personally, "my favourite theory" would probably be something like two-level utilitarianism, which blends both rule and act utilitarianism, and then based on moral uncertainty I'd add some side constraints/concessions to deontological and virtue ethical theories - plus just a preference for not doing anything too drastic/irreversible in case the "correct" theory is one I haven't heard of yet/no one's thought of yet.)

comment by MichaelA · 2019-12-31T22:22:40.632Z · LW(p) · GW(p)

As I hope for this to remain a useful, accessible summary of these ideas, I've made various edits as I've learned more and gotten feedback, and expect to continue to do so. So please keep the feedback coming, so I can make this more useful for people!

(In the interest of over-the-top transparency, here's the version from when this was first published.)

↑ comment by Pattern · 2020-01-02T18:32:32.849Z · LW(p) · GW(p)

(In the interest of over-the-top transparency, here's the version from when this was first published.)

Btw, there might be a built-in way of doing that on LW, though it doesn't seem to be very well known. Thanks for sharing this!

↑ comment by habryka (habryka4) · 2020-01-02T20:12:10.251Z · LW(p) · GW(p)

That option is currently only accessible for admins, until we figure out a good UI for it. Sorry for that!

comment by artifex · 2019-12-30T23:04:11.184Z · LW(p) · GW(p)

I think I like this post, but not the approaches.

A correct solution to moral uncertainty must not be dependent on cardinal utility and requires some rationality. So Borda rule doesn’t qualify. Parliamentary model approaches are more interesting because they rely on intelligent agents to do the work.

An example of a good approach is the market mechanism. You do not assume any cardinal utility. You actually do not do anything directly with the preferences you have a probability distribution over at all. You have an agent for each and extrapolate what that agent would do, if they had no uncertainty over their preferences and rationally pursued them, when put in a carefully designed environment that allows them to create arbitrary binding consensual precommitments (“contracts”) with other agents, and weights each agent’s influence over the outcomes that the agents care about according to your probabilities.

What is tricky is making the philosophical argument that this is indeed the solution to moral uncertainty that we are interested in. I’m not saying it is the correct solution. But it follows some insights that any correct solution should:

- do not use cardinal utility, use partial orders;

- do not do anything with the preferences yourself, you are at high risk of doing something incoherent;

- use tools that are powerful and universal: intelligent agents, let them bargain using full Turing machines. You need strong properties, not (for example) mere Pareto efficiency.