One bit of observation can unlock many of optimization - but at what cost?

post by dr_s · 2023-04-29T10:53:03.969Z · LW · GW · 4 commentsContents

Conclusions None 4 comments

This question [? · GW] by johnswentworth nerd sniped me, so I ended up thinking a lot about the relationship between information and control over the world in the simplified scenario of a single tape of bits. The question asked how many bits of optimization one could unlock with a single bit of observation; the answer [LW(p) · GW(p)] ended up being "arbitrarily many", as proven by a simple example: suppose you have a rule that says that if the first bits of your action match those of your observation , then the rest of gets copied to your target value ; then there's no limit to how many bits you can copy, and the only information you need to leverage is the knowledge of the secret "key". We know this works because this is exactly how locks work in real life too. If I possess someone's bank account password, or the combination to a safe, or the US nuclear codes, then I'm able to produce disproportionate effects to the tiny size of that knowledge, just because I can also rely on the state of the world and its laws being set up in such a way that I can use that knowledge as a pivot to trigger much bigger effects.

The thing I wanted to focus on then was those conditions: even given that I know the "password", what else do I need to know about the world at large, and what limits are there on my power to optimize the final state? I decided to focus on the following model:

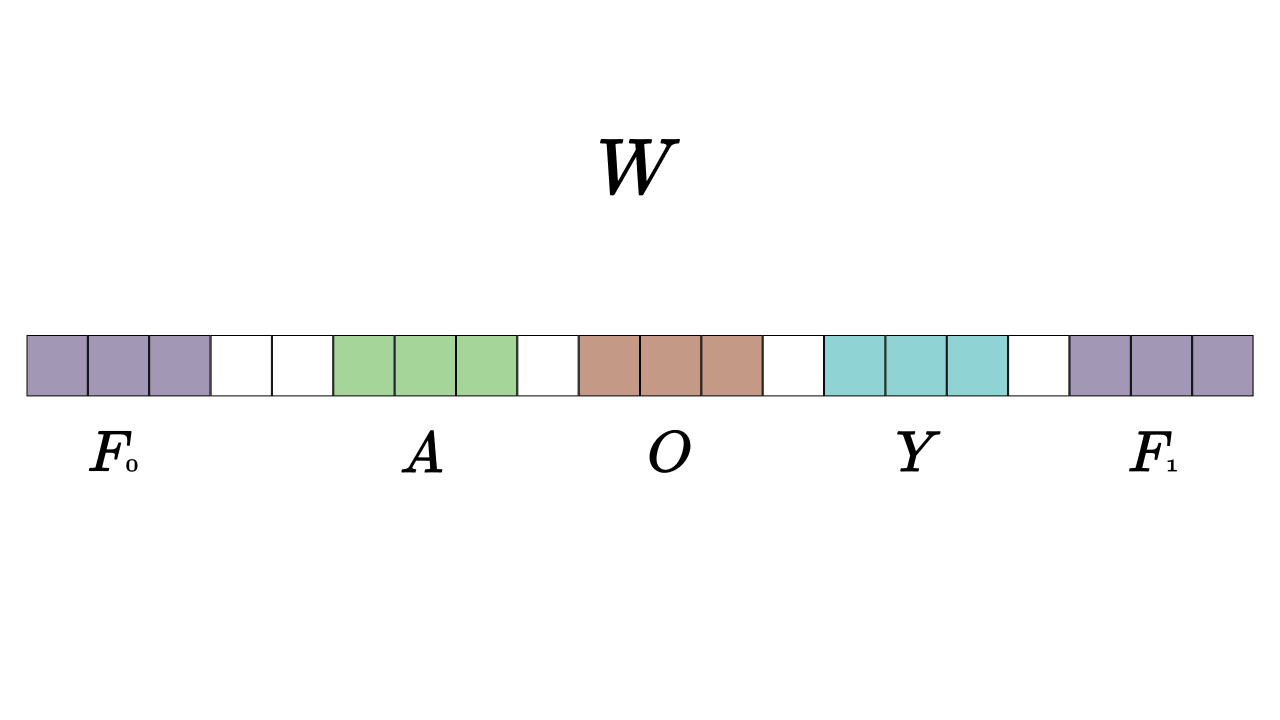

- a world string of bits, prepared in some initial state , with some regions known and some randomized;

- a discrete map that determines the evolution of this world, such that , and so on. The map is reversible, such that there exists an inverse ;

The focus on the map being reversible is because in the real world the laws of physics are time symmetric too and microscopically should not destroy information. Irreversible computing allows the deletion of information, which reduces the entropy of the system. In a computer embedded in a larger world this can be compensated by creating entropy somewhere else, but if our string has to represent the entire world, then it should preserve information. The world string has several identifiable regions:

- an action region , within which we can set up bits arbitrarily in the initial state;

- an observation region , which we can't affect but whose contents we know exactly in the initial state;

- a target region , to which we aim at writing certain bits so as to maximize the mutual information with some goal string ;

- two fuel regions and , at the ends of the string, filled respectively with all 0s and all 1s. We'll see the use for them in a moment.

The map can be defined as a series of instructions. Since we're doing reversible computing, we can use only one universal logic gate, like the Toffoli gate (CCNOT) or the Fredkin gate (CSWAP). These take three bits as arguments, so once we've decided our gate of choice an entire program can be composed simply of triples of addresses of bits, wherein each address will require bits, meaning a program's size is bits/instruction. Consider the simplest possible version of a "lock-like" program, which compares and , and if they're identical, it swaps and (note that we can't simply copy : that would erase information and not be reversible). We will also need two "fuel" bits and prepared respectively in the 0 and 1 states. The program can be written with just three Fredkin gates:

CSWAP b_1 f_1 f_2

CSWAP b_2 f_1 f_2

CSWAP f_2 b_3 b_4

After this, the two "fuel" bits are used up and can't be relied on any more for future calculations. From the viewpoint of the entire string, of course, the entropy is constant and the process is entirely reversible; but if you only looked at the fuel substrings, it would look like those bits have been randomized, and their entropy increased (not unlike real chemical combustion is in principle a reversible process, but macroscopically looks irreversible as the free energy decreases from reactants to products). Knowing this program also requires bits of knowledge (three triples of addresses). Note that both the size of the program and the amount of fuel used depends heavily on which fundamental gate we use to build the "natural laws" of this world. If instead of Fredkin we tried using Toffoli gates, the program would grow quite longer, and I suspect use up more fuel (though I haven't checked). Conversely, you could build your laws out of the four-bit CCSWAP operator. In that case the entire program would be a single instruction and require no fuel; and it's easy to tell that CCSWAP is a universal gate because you can simply set the first control bit to 1 to retrieve CSWAP, the Fredkin gate, which is universal. So the precise details here are very dependent on the rules. Another important fact to notice is that the programs may be compressible. Consider the program "compare bits and and, if they are equal, swap all bits in the sequences and ". Then you could write it with CCSWAPs as:

CCSWAP b_1 b_2 B_3[0] B_4[0]

CCSWAP b_1 b_2 B_3[1] B_4[1]

CCSWAP b_1 b_2 B_3[2] B_4[2]

...

which corresponds to bits in total. But it is very regular and could also be compressed in a "for loop" format, which only requires bits. In fact this is how the real laws of nature work; we know they are constant throughout time and space and extremely regular and that's how we get leverage from comparatively very small amounts of information. They are in a sense "keys" that allow us to open certain locks; but which locks depends on how the system is set up, and is not up to us to choose.

What if our knowledge was incomplete, though? Suppose the laws of this world are a series of Toffoli gates (I'm picking them because they make the following calculations simpler, though the same principles apply with any other gate). However, there is a fraction of them that is unknown to us. We know some operations, but now and then comes one that we know nothing about. Suppose the amount of bits in the world that we know and care about (as they are part of our action, observation, target, or the fuel we need) is . Then for each unknown gate there's a chance of that it will change one of "our" bits, in a way that potentially breaks our algorithm. If these are large enough numbers to be approximated as continuous, and if we consider the number of instructions as time , then

leading to an exponential decay, . This introduces a certain measure of chaos to our system, and this information loss will from our point of view be irreversible. The loss would be slightly different if we knew all the laws, as we could compensate for those affecting only bits we know, but as long as we don't know the full state of the world, any operator entangling bits we know with bits we don't will inevitably result in loss of information and apparent increase in the entropy of the region we care about.

Conclusions

The answer to johnswentworth's original question is that there are no limits on how much optimization one can squeeze out of a single bit, even in a world with reversible computing. However there are soft practical constraints:

- besides the observation region , we also need to know a certain amount of bits detailing the rules of the world. In its uncompressed form, this knowledge is always greater than the amount of optimization (for example, with CCSWAP gates, we would need four addresses for each optimized bit);

- depending on the precise way the world works, the process may require a certain amount of "fuel", bits prepared in a given known state that will be scrambled in the process;

- any lack of knowledge about the rules of the world or the rest of its state (if coupled with rules that also involve the unknown regions) will introduce chaos and thus loss of information in the process.

These or similar questions seem like they probably should have been investigated much more in depth by the computer science and especially quantum computing fields, so it's likely I'm not bringing up anything new. I'd also be curious about whether there are theoretical limits on algorithms' need for fuel, or on the compression of knowledge about the world's laws. Intuitively I think these limits must be on trade-offs; we can always construct a world with universal gates that can compute one specific algorithm with no need for fuel and in a minimally compressed format, but that might mean the cost of different algorithms skyrockets. I'd also be interested if some more specific property other than time reversibility of the physical laws of our world could be identified and an equivalent applied to the laws of this sort of toy model.

4 comments

Comments sorted by top scores.

comment by Algon · 2023-04-29T12:00:54.157Z · LW(p) · GW(p)

I think this model is wrong. Consider the case of a button opening a number of doors simultaenously or closing them simultaenously. If it is pressed, then the doors are open. If unpressed, the doors are closed. So you can cut the number of possible words in half by pressing the button, even though these may be highly specfic world states. Ergo, you have two bits of optimization.

In general, if there is some "switch" in the world which controls which of two paths the world goes down, then the "size of possible world states" are restricted to those two paths. So the thing doing the optimizing, the switch, can't really drive the world into a very small target state relative to all other possible states. The creation of the switch required tonnes of optimization power to drive the possible futures down to two paths in the first place. That leaves the switch itself with the power to choose between two target states i.e. 1 bit of optimization power.

Replies from: dr_s↑ comment by dr_s · 2023-04-29T16:12:36.516Z · LW(p) · GW(p)

Hm, but then in your example you only have one bit of action. The model is more like "you have a certain amount of doors (the bits in ) and as many switches (the corresponding bits in ) and you can set them to open or closed as you please as long as you can guess the password". There are as many possible future states as there are combinations of the switches you can set, essentially (without including the chaotic effects of unknown laws and/or parts of the world).

Of course there's a bit of a sleight of hand in how we're just allowing your "free will" to set from the outside the bits in , at zero cost and in a completely acausal manner. A proper world would have to also contain your own mind and memory stored in a part of the tape, with the evolution laws accordingly making it function. At which point the entire thing would look entirely deterministic, your actions/optimizations just a natural outcome of the dynamics of the world, and you could indeed argue that there is no optimization, just one state evolving the only way it can. And you'd be right, but insofar as we know, that's true of the real world as well (quantum shenanigans aside; those make things potentially more complicated if you believe that the wavefunction collapse is a real and not just apparent phenomenon).

Replies from: Algon↑ comment by Algon · 2023-05-03T22:30:05.331Z · LW(p) · GW(p)

After reading your post in more depth, I can confidently say I'm confused. I don't get at which step you get more bits out of optimization than you get in. Yet I think I understand this passage:

; then there's no limit to how many bits you can copy, and the only information you need to leverage is the knowledge of the secret "key". We know this works because this is exactly how locks work in real life too. If I possess someone's bank account password, or the combination to a safe, or the US nuclear codes, then I'm able to produce disproportionate effects to the tiny size of that knowledge, just because I can also rely on the state of the world and its laws being set up in such a way that I can use that knowledge as a pivot to trigger much bigger effects.

To give another example of what you're saying: if you know someone who's the top player in an MMO with huge amounts of resources in the game, equivalent to e.g. 1kb of optimization power, then knowing their 40 bit acount details gets you 1kb of optimization power. This is analogous to your bank account password setup.

I disagree though that you get 1kb of optimization from only 40 bits of observation. Remove every bit of knowledge you have about the game and what the account's is doing and so on, then consider what you could do with 1 bit more info: not much. Certainly not affecting the world w/ 960 bits of optimization. So your existing knowledge about the game is what let's you use the account's resources to optimize the world. This is key: if you start out with O bits of observation, and seeing some extra bits o let's you apply A bits of optimization power, then you have O+o bits of observation are worth A bits of optimization.

Maybe you adressed this point in the rest of the post, but if so, I didn't understand it.

Replies from: dr_s↑ comment by dr_s · 2023-05-04T12:55:45.406Z · LW(p) · GW(p)

So your existing knowledge about the game is what let's you use the account's resources to optimize the world.

This I say explicitly in the post. See conclusions:

besides the observation region , we also need to know a certain amount of bits detailing the rules of the world. In its uncompressed form, this knowledge is always greater than the amount of optimization (for example, with CCSWAP gates, we would need four addresses for each optimized bit);

The bit about locks and lock-like processes comes from the original question I linked at the top; you should go check that (and the accepted answer) for full context. Essentially I set out in this post to actually disprove that answer, because I didn't find it satisfying; I ended up finding that the answer itself is not per se wrong, but it applies with constraints to a reversible system. If you consider uncompressed knowledge about the rules of the world (in your example, the source code of the game), put together with the password, you always have more bits of knowledge than of optimization. However, since compression is a thing (for example, you don't actually know the source code when stealing an account), I doubt that's a hard theorem. All I can say on that is "there are situations in which bits of knowledge can be turned into bits of optimization, but only as long as the world is already set up to allow you to do so". It would be interesting to have a reasonable definition of what a "natural" set of laws would be like and whether lock-like maps could ever spontaneously occur within it.