Causality is Everywhere

post by silentbob · 2024-02-13T13:44:49.952Z · LW · GW · 12 commentsContents

The Non-Existence of Effect Size Zero Why This Matters 1. Nuanced Thinking 2. Interpreting Study Results 3. Nuanced Communication 4. Having Good Priors even when there is Little Empirical Evidence 5. Establishing Helpful Micro Habits Closing Words None 12 comments

Tl;dr: Everything in our world is causally connected in countless ways, which means causal effects are everywhere, and practically anything has systematic effects on almost everything else – it’s just that most of these effects are very close to (but not quite) zero. Studies often fail to measure small effects due to being underpowered, which frequently leads people to believe that some effect “does not exist” (i.e. is 0). I argue that this is a mistake, that almost no effect is truly 0, and that it’s worthwhile to be nuanced about this for a variety of reasons.

In the quest for understanding the world, we often fall into the dichotomy of asking whether X affects Y. Does diet influence mood? Does music affect productivity? Is there a relationship between the stars' positions at your birth and your personality? The common thread in these questions is our search for causality, mapping the invisible lines that shape reality.

Yet, the pursuit often narrows our vision, framing our questions in a binary of effects versus no effects. This binary is a simplification, perhaps a reasonable one for many practical concerns, but a simplification nonetheless. What if, instead of asking if there is an effect, we assumed there always is one, and made an effort to ask for effect sizes and their sign, rather than mere existence? When asking “is there an effect”, we imply that there’s a significant chance of the answer being “no” – that X simply has no impact on Y whatsoever. But this, I argue, is a mistake. A mistake that hints at a fundamental misconception about the nature of causality in our infinitely interconnected world.

Causality is everywhere. Of course we all know well that correlation is not causation, and most causal effects may indeed be swamped by confounding effects, or by noise, or by any of the countless biases working against science’s effective pursuit of truth seeking. Yet, the fact that we have a hard time seeing causality, doesn’t make it less real. As gwern explains, correlation is everywhere. I argue that the same holds for causation (even if on a much lower scale).

Everything is connected. Not in the esoteric way, but rather in the most physical way possible: as long as two happenings in the universe are within each other’s light cone, they will almost always have some causal connection, and usually quite a number of them. This is particularly true for our everyday human matters, and the types of questions that are typically investigated in scientific studies, including RCTs. And while it’s easy to construct a question where it’s clearly impossible for causation to exist – does the composition of my breakfast today affect my blood pressure yesterday? – nobody seriously asks such questions. In practice we tend to ask the kinds of questions where causal connections are very much plausible. Does protein intake affect longevity? Do school uniforms affect student performance? Do violent video games affect violent tendencies in teenagers? Does music affect productivity? For all of these questions, it would be very surprising if the very dense causal net of reality had no direct or indirect path connecting the two properties. And so I argue that causal connections are present in pretty much any conceivable case, and they will lead to non-zero effects in most metrics we could measure.

The Non-Existence of Effect Size Zero

As soon as there are causal pathways from one thing to another, there will be systematic effects. Some of these pathways may, on average, have a positive effect on the measured outcome, and some may have a negative effect. And they will sum up to some total effect (which may of course differ between people, and even between circumstances for any given person).

Let’s say the question we want to answer is how, if at all, classical music affects productivity. There are clearly certain causal pathways, even if their exact nature is hard to assess. But if a person hears music, this has a number of effects, both on a conscious and subconscious level. It may affect their mood, their heart rate, their physical movements, their thoughts, their attention – and all of these things will have some effect on whatever it is that we take as a measure for productivity. Any given one of these causal pathways may have a tiny effect on average, such as increasing productivity of some population of people by 0.1%, or decreasing it by 0.03%, but real, systematic, non-zero effects nonetheless. And if we sum up all these individual effects, it’s exceedingly unlikely for this sum to end up at exactly 0.0%.

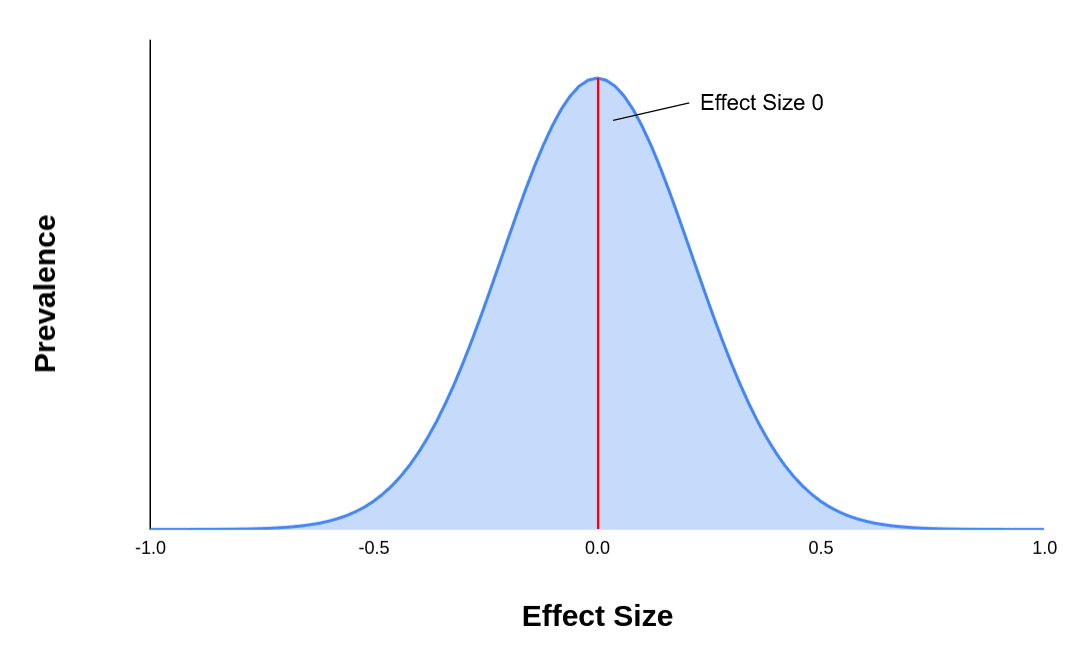

There’s generally a continuum of possible effect sizes. Most effects are close to 0. Depending on how effects are selected, maybe they’re distributed somewhat like a normal distribution, with some effect sizes being positive and some negative:

And while 0 is the mode of this distribution, it’s still just a single point of width 0 on a continuum, meaning the probability of any given effect size being exactly 0, represented by the area of the red line in the picture, is almost 0.

You could of course argue that some tiny effect sizes are maybe not technically 0 but “sufficiently close” to 0 to be considered irrelevant. That we shouldn’t waste our time with some intervention that increases or decreases productivity by something like 0.1%, or in many cases even less than that. Particularly given that we may never be able to reliably measure this kind of effect size with a noisy and vague metric such as “productivity”. Plus, when the average effect size is so small, in many cases this will mean that we can’t make any meaningful predictions for any given individual, because the difference between individuals may be so much larger than the average effect itself.

However, to all of that I would say, where do we draw the line? I agree that a 0.1% change in productivity may not necessarily be worth our attention, given there exist many interventions with much larger effects on productivity, from caffeine intake to hydration to air quality. Also generalization is generally hard. But I still think it’s important to have a nuanced model of the world, and to at least appreciate that such small non-zero effects exist at all, even in cases where we don’t have an easy time identifying them.

Why This Matters

So why even bother with such technicalities? Does it really matter whether some very-close-to-but-not-quite-zero effect exists? I think it does, for a number of reasons.

1. Nuanced Thinking

- When not appreciating that effects are everywhere, even if tiny, it’s easy to fall into “is there an effect or not” style black and white thinking.

- Such black and white thinking arguably nudges us closer to a prior belief like “there either is some noteworthy effect, or there is none”, assigning high probabilities to effect sizes being 0.

- I suspect that this leads to both underestimation of tiny effects (because we assume they are 0) and overestimation of effect sizes when we assume an effect exists.

- It also can lead to prematurely committing on one effect direction: will classical music increase productivity or not? When asking such questions, it’s easy to forget about the possibility of classical music actually decreasing productivity.

2. Interpreting Study Results

- In my experience, many people, even those professionally working with a lot of data, tend to misunderstand what statistical significance means, and seem to use the term almost interchangeably with effect size. I’d say that calling an effect significant is already a mistake. The significance is an attribute of the measurement, not of the (supposed) underlying causal effect. So significance gives us a hint at how reliable a measurement (or study result) is, but in itself tells us very little about how large an effect is, since any level of significance can occur with any effect size[1].

- Relatedly, when a study finds some “insignificant effect” of X on Y, this does not mean that X does not affect Y; X may well cause an increase in Y while the study is merely underpowered[2].

- Another common pattern is that of “study X finds that A affects B”, followed by a failed replication attempt where “study Y shows that A does not affect B”. I suspect that in many such cases – at least those where the initial finding is not overly surprising and one would intuitively assume that the effect points in the given direction rather than in the opposite direction – an effect does in fact exist and is simply smaller than what was found in the initial study, due to regression to the mean. It definitely makes sense to put less trust in the initial study in such cases, but it would be an overcorrection to conclude that “no effect exists”, based on the failed replication (particularly if the replication also measured an effect pointing the same direction as the initial study, but “merely” failed to achieve statistical significance).

- I’m not trying to argue that we should be less skeptical of study findings, or that we should take the replication crisis less seriously; rather I’m making the point that skepticism towards (failed) replications is warranted as well to some level, and that the particular “there is no effect” conclusion is almost always wrong. “We’re unsure about the true effect size and hence treat it as if it were 0” might be an appropriate stance, but probably only in cases where we have a strong prior of the effect size really being extremely close to 0, rather than generally whenever a replication attempt fails to reach significance.

3. Nuanced Communication

- Imagine the following short conversation. Bob: “I believe drinking more water on average improves people’s mood.” - Alice: “I believe it doesn’t”. They certainly seem to have a disagreement. But do they actually? It’s impossible to say without quantification. Maybe Bob means a 0.1 improvement on a 0-10 mood score. Alice would probably be much less quick to disagree about such a claim, than with a purely qualitative one.

- In fact there’s at least two levels that Alice and Bob may disagree on here, and it’s easy to overlook this distinction: they may disagree about drinking more water actually having any positive effect on mood (i.e. Alice thinks water has a negative or zero effect on mood), or they may simply disagree on the threshold at which a >0 effect actually warrants being pointed out and called an “improvement”.

- Similarly, when Bob claims “The color of a car does not impact its risk of ending up in an accident”, what exactly does he mean? Is he really convinced that, if you ran an RCT with a billion cars of randomly chosen colors, there would be no statistically significant difference found between the accident rates of red cars vs black cars? Maybe he does mean that (and if he does, I’d be happy to bet at 999:1 odds that he’s wrong). But maybe he just means “the effect size is negligible compared to other, much more relevant factors, such as following the speed limit and having properly adjusted mirrors and headlights”, in which case I’d assume he may be right[3], but it’s a very different claim from what he actually said.

4. Having Good Priors even when there is Little Empirical Evidence

- If we think about what the most dominant causal pathways between two things are likely to be, we can at times predict whether the effect from one on the other is more likely to be positive or negative. If there are clear reasons to assume that A might increase B, and no clear reasons to assume that A might decrease B, then it’s very likely that A does indeed increase B.

- I’d also assume it’s possible to improve one’s calibration on the sizes of such effects as long as there are some cases of reliably demonstrated very small effect sizes.

- There are many domains where we can't get reliable causal data. Maybe because an intervention is difficult or expensive, or because the measurement itself is difficult to pull off, or because effect sizes are likely so small that it would take extremely large studies to reliably detect them, or because running studies on the thing would be unethical. In all such cases, we should do our best to look at the most likely causal pathways in order to assess in which direction the effect is likely to point.

5. Establishing Helpful Micro Habits [LW · GW]

- If you can find extremely cheap adjustments to your routine or environment which are more likely to have positive than negative effects, they may be worth applying even if there’s little concrete evidence for their efficacy.

- Many trivial changes might have effect sizes of <1%. These are often impractical to verify in studies because there’s no incentive in place to run huge, expensive studies, in hopes of maybe finding a tiny effect size, but still with a risk of finding nothing of significance.

- Still, if you can find a bunch of interventions that cost you almost nothing, but which may on average improve some metric you care about by half a percent (such as your happiness, your impact, your productivity, your salary), that can be a pretty good deal, and one we’d be missing out on if we simply doubted the existence of all such effects merely because no study has ever been large enough to show them.

Closing Words

The replication crisis is real, and people / websites / newspapers still very often err on the side of misinterpreting correlation as causation. It’s undoubtedly a good idea to remain skeptical of any study with outlandish claims and surprising results. Many systematic problems exist both in science and in our individual thinking, which cause us to see patterns where none exist.

With all that said, there’s the flip side: being quick to conclude that some effect does not exist is an overcorrection, and comes at the price of overlooking and misjudging many real effects. If we take for granted that basically everything affects everything else, and it’s always a question of the effect size and direction rather than the effect’s existence, we can achieve a more accurate model of the world, and probably make better predictions about causal effects, even in cases where effects are so small and so hard to measure that we might never find out for sure.

Most effects are tiny, yet a tiny effect applied on a societal level can still affect the lives of many thousands of people. At the same time, stacking a bunch of tiny effects can lead to bigger outcomes. So getting this right is likely worth at least a bit of our attention.

- ^

Of course there’s a dependency between effect size and statistical significance, and power analysis puts them in relation with the sample size required for a study when assuming a certain effect size; I’m just saying here that significance level and effect size are still two separate properties, and it’s important to keep that in mind. Personally, I get more excited about a study showing a huge effect size with p = 0.06 than a tiny effect size with p = 0.04. But many people look purely at significance (in order to answer their “is there an effect or not” question), while effect size remains an afterthought.

- ^

An interesting example of this is this (in?)famous study on mask effectiveness on COVID-19, which was widely cited by "COVID skeptics” (among others) as alleged proof that masks are useless. In fact, the study did however suggest that wearing masks outside might reduce the risk of infection by roughly 15% (95% CI: 46% reduction to 23% increase) – but unsurprisingly the study was way too underpowered to achieve significance for such an effect. Because they a) studied mask wearing outside rather than inside, b) only checked the protection of the wearer themselves rather than protective effect on others, and c) when assessing the power of their study calculated with an expected 50% reduction in COVID infections, which is an almost hilariously large effect for such a specific intervention (which makes me assume that the (insignificant) ~15% effect the study found is likely still greater than the real effect). Despite all that, I’m not even sure if one can blame the study authors; even with their overly generous assumptions they had to run the study with over 6000 participants. In order to measure a, say, 5% effect, which would be much more realistic, the study would have required tens of thousands of participants and much more funding. Still, working with a hypothesis of a 50% reduction in COVID cases through this particular intervention seems almost insane, and kind of unhelpful on a societal level, because of course what people take away from this will be that "wearing masks has no effect", even if the study actually provided (weak) evidence for mask effectiveness rather than against it.

- ^

While there are some studies on the relation between car color and accident risk, all I've seen are observational rather than RCTs, so even when they control for many factors, confounding effects may still dominate the outcome. So while the effect sizes seem pretty large (often in the order of ~10% differences in accident rates between colors), real causal effects are probably much smaller.

12 comments

Comments sorted by top scores.

comment by tangerine · 2024-02-13T23:09:03.794Z · LW(p) · GW(p)

If causality is everywhere, it is nowhere; declaring “causality is involved” will have no meaning. It begs the question whether an ontology containing the concept of causality is the best one to wield for what you’re trying to achieve. Consider that causality is not axiomatic, since the laws of physics are time-reversible.

Replies from: Viliam, silentbob↑ comment by Viliam · 2024-02-14T09:54:21.289Z · LW(p) · GW(p)

Instead of "is there causality?" we should ask "how much causality is there?".

The closest analogy to the old question would be "is there enough causality (for my purpose)?". If drinking water improves my mood by 0.0001%, then drinking water is not a cost-effective way to improve my mood.

I am not denying that there is a connection, I am just saying it does not make sense for me to act on it.

↑ comment by silentbob · 2024-02-17T12:48:23.418Z · LW(p) · GW(p)

A basic operationalization of "causality is everywhere" is "if we ran an RCT on some effect with sufficiently many subjects, we'd always reach statistical significance" - which is an empirical claim that I think is true in "almost" all cases. Even for "if I clap today, will it change the temperature in Tokyo tomorrow?". I think I get what you mean by "if causality is everywhere, it is nowhere" (similar to "a theory that can explain everything has no predictive power"), but my "causality is everyhwere" claim is an at least in theory verifiable/falsifiable factual claim about the world.

Of course "two things are causally connected" is not at all the same as "the causal connection is relevant and we should measure it / utilize it / whatever". My basic point is that assuming that something has no causal connection is almost always wrong. Maybe this happens to yield appropriate results, because the effect is indeed so small that you can simply act as if there was no causal connection. But I also believe that the "I believe X and Y have no causal connection at all" world view leads to many errors in judgment, and makes us overlook many relevant effects as well.

comment by Richard_Kennaway · 2024-02-13T17:18:19.641Z · LW(p) · GW(p)

stacking a bunch of tiny effects can lead to bigger outcomes.

Only if they mainly point in the same direction. But for effects so small you can never experimentally separate them from zero, you can also never experimentally determine their sign. In all the hypothetical examples you gave of undetectably small correlations, you had a presumption that you knew the sign of the effect, but where did that come from if it’s experimentally undetectable?

Replies from: silentbob↑ comment by silentbob · 2024-02-17T13:01:03.588Z · LW(p) · GW(p)

For many such questions it's indeed impossible to say. But I think there are also many, particularly the types of questions we often tend to ask as humans, where you have reasons to assume that the causal connections collectively point in one direction, even if you can't measure it.

Let's take the question whether improving air quality at someone's home improves their recovery time after exercise. I'd say that this is very likely. But I'd also be a bit surprised if studies were able to show such an effect, because it's probably small, and it's probably hard to get precise measurements. But improving air quality is just an intervention that is generally "good", and will have small but positive effects on all kinds of properties in our lives, and negative effects on much fewer properties. And if we accept that the effect on exercise recovery will not be zero, then I'd say there's a chance of something like 90% that this effect will be beneficial rather than detrimental.

Similarly, with many interventions that are supposed to affect behavior of humans, one relevant question that is often answerable is whether the intervention increases or reduces friction. And if we expect no other causal effect that may dominate that one, then often the effect on friction may predict the overall outcome of that intervention.

comment by Dagon · 2024-02-13T16:18:25.634Z · LW(p) · GW(p)

There's some truth here, in the same sense that probability assignments can never be 0 or 1. There's always some chance, and always some causal link.

HOWEVER, a lot of things can get so low that it's unmeasurable and inconsequential at the level we're talking about. Different purposes of modeling will have different thresholds for rounding to 0, but almost all of them will benefit from doing so. Making this explicit will sometimes help, and is sometimes useful for reminding yourself of your limits of measurement and understanding.

Unmeasurably small does NOT mean nonexistent. But it DOES mean it's small. If you also have analytic reasons to think it's VERY SMALL (say, 0.000001), or you know of much larger features (say 100x or more), it's perfectly reasonable to ignore the tiny ones.

Replies from: silentbob↑ comment by silentbob · 2024-02-13T16:25:42.016Z · LW(p) · GW(p)

Indeed, I fully agree with this. Yet when deciding that something is so small that it's not relevant, it's (in my view anyway) important to be mindful of that, and to be transparent about your "relevance threshold", as other people may disagree about it.

Personally I think it's perfectly fine for people to consciously say "the effect size of this is likely so close to 0 we can ignore it" rather than "there is no effect", because the former may well be completely true, while the latter hints at a level of ignorance that leaves the door for conceptual mistakes wide open.

comment by Noosphere89 (sharmake-farah) · 2024-02-15T01:47:42.640Z · LW(p) · GW(p)

I want to note that just because the probability is 0 for X happening does not in general mean that X can never happen.

A good example of this is that you can decide with probability 1 whether a program halts, but that doesn't let me turn it into a decision procedure on a Turing Machine that will analyze arbitrary/every Turing Machine and decide whether they halt or not, for well known reasons.

(Oracles and hypercomputation in general can, but that's not the topic for today here.)

In general, one of the most common confusions on LW is assuming that probability 0 equals the event can never happen, and probability 1 meaning the event must happen.

This is a response to this part of the post.

Replies from: silentbobAnd while 0 is the mode of this distribution, it’s still just a single point of width 0 on a continuum, meaning the probability of any given effect size being exactly 0, represented by the area of the red line in the picture, is almost 0.

↑ comment by silentbob · 2024-02-17T13:05:35.603Z · LW(p) · GW(p)

You're right of course - in the quoted part I link to the wikipedia article for "almost surely" (as the analogous opposite case of "almost 0"), so yes indeed it can happen that the effect is actually 0, but this is so extremely rare on a continuum of numbers that it doesn't make much sense to highlight that particular hypothesis.

comment by the gears to ascension (lahwran) · 2024-02-14T00:08:00.356Z · LW(p) · GW(p)

Well, maybe. We can definitely put some very strong probabilistic constraints on where causality is; we typically call those physical laws. some sort of locality (quantum messes with this one, but since you still can't communicate FTL it still has a strong notion of locality); the symmetries of the universe; relativity; there are some quantum ones I lose track of. With conservation of energy and the empirical observation that most effects don't massively dissipate, we can be confident that systems mostly don't pass too much energy, and so most things are mostly "practical causality"-disconnected even within a fairly short time window. There are exceptions to this, eg if the nukes were launched the whole world would get causally connected for practical purposes pretty quick. The internet is also pretty low latency.

So my point is, I agree that the network of interactions is reasonably well connected. But it's not everywhere, that's taking it too far. Yes, some effect sizes are small but matter. But the range of small effect sizes is enormous, and if I slap the floor of my room and tell no one, then the effect size that will have on the temperature in china tomorrow would be measured in terms of number of leading zeros. If I push a box off a desk, then the effect size will be 1.

comment by Richard_Kennaway · 2024-02-13T17:17:33.226Z · LW(p) · GW(p)

“When I hear the word ‘nuance’ I reach for my sledgehammer.” — me.

Phenomena can be continuous, but decision is discontinuous. Shall I do X in the hope of influencing Y — yes or no? As the wag said, what can I do with a 30% chance of rain? Carry one third of an umbrella? No, I take the umbrella or I don’t, with no “nuance” involved.

comment by silentbob · 2024-02-17T13:22:00.663Z · LW(p) · GW(p)

Another operationalization for the mental model behind this post: let's assume we have two people, Zero-Zoe and Nonzero-Nadia. They are employed by two big sports clubs and are responsible for the living and training conditions of the athletes. Zero-Zoe strictly follows study results that had significant results (and no failed replications) in her decisions. Nonzero-Nadia lets herself be informed by studies in a similar manner, but also takes priors into account for decisions that have little scientific backing, following a "causality is everywhere and effects are (almost) never truly 0" world view, and goes for many speculative but cheap interventions, that are (if indeed non-zero) more likely to be beneficial rather than detrimental.

One view is that Nonzero-Nadia is wasting her time and focuses on too many inconsequential considerations, so will overall do a worse job than Zero-Zoe as she's distracted from where the real benefits can be found.

Another view, and the one I find more likely, is that Nonzero-Nadia can overall achieve better results (in expectation), because she too will follow the most important scientific findings, but on top of that will apply all kinds of small positive effects that Zero-Zoe is missing out on.

(A third view would of course be "it doesn't make any difference at all and they will achieve completely identical results in expectation", but come on, even an "a non-negligible subset of effect sizes is indeed 0"-person would not make that prediction, right?)