New GPT3 Impressive Capabilities - InstructGPT3 [1/2]

post by simeon_c (WayZ) · 2022-03-13T10:58:46.326Z · LW · GW · 10 commentsContents

Summary Introduction General Features Examples of Potential Uses Brainstorming Project Names Differences and Similarities between Concepts Rapidly Accessing Information Key arguments for a position Ideas from an author Basic arguments in a field The definition of a concept Some names of researchers in a field Advice on where to start to enter a domain Finding Valuable Evidence of the Data Distribution Comparing Similar Prompts Creating Useful Analogies To Explain Ideas Limits Truthfulness Some Remaining Inconsistencies Many More Prompts Than You Wanted To Read Robustness Checks Patterns In the Data On Right-Wing / Left-Wing Informative Prompts Word Sensitivity Analysis Some Complements on IGPT3 References Reading Advice Books and Sentences InstructGPT3 vs GPT3 comparison Having Fun with IGPT3 Some Jokes and Their Explanations None 10 comments

Summary

- InstructGPT3 (hereafter IGPT3), a better version of GPT3 has recently been released by OpenAI. This post explores its new capabilities.

- IGPT3 has new impressive capabilities and many potential uses. Among others, it can help users:

- Brainstorm

- Summarize the main claims made by a scientific field, an author or a school of thought.

- Find an analogy or a metaphor for something hard to explain

- I emphasize some of IGPT3's limits, especially situations where It provides very plausible fake answers.

A Twitter (more entertaining) version of the summary: https://twitter.com/Simeon_Cps/status/1503005935534366722?s=20&t=-LMu4jqg55_u2IQ2rTKj4g

Introduction

This post has been crossposted from the EA Forum.

Epistemic status: I spent about ~12h with IGPT3. So I’d say that I now have a pretty good sense of some of its key features. I tried several examples to ensure that I was not overfitting on a single example for the most important claims I made. That said, this is a huge model so there is probably a lot more to be discovered. FYI I had spent a decent amount of time playing with the past GPT3, especially with the Davinci (175b params) and the Curie (6b params) models, so I had a clear idea of "what it is like to try to get nice completions from GPT3". That may be one reason why I’m so amazed by this one.

Here's the first post of a series of 2 blog posts exploring some of the IGPT3 (I) new capabilities and (II) epistemic biases.

This first post will focus on some interesting uses I had of IGPT3. It also gives a sense of how good it is in various domains. Let me tell you: I'm amazed by its new capabilities. I find it really impressive that most of the time, the first result I get, without any tuning (either of the parameters or of the prompt) is great. You can try it yourself here.

The blogpost is organized as follow:

- A few general observations

- 2 mains parts (Examples of Potential Uses / Limits)

- The last part entitled “Many more prompts than you wanted to read” where I put robustness checks, I test how sensitive IGPT3 is to unique words variations, I compare IGPT3 and GPT3 and I show how you can have fun with IGPT3.

Acknowledgment: Thanks to JS and Florent Berther for the proofreading, to Ozzie Gooen for the suggestion to make a post out of my comments on his post and to Charbel-Raphaël Segerie for some nice ideas.

General Features

Here are some general features of IGPT3:

- Compared to GPT3, you need to spend much less time prompt-tuning on IGPT3. You just need to be clear enough.

- When the temperature (a parameter to control the randomness of the completion) is greater than 0, you need to try less than 2 completions to find a satisfactory answer when IGPT3 has one. And most of the time, a single completion is enough. A temperature of 0 also works very well, so I personally use that for most uses.

- IGPT3 now knows when to stop so you can put a huge maximum limit of tokens and he will generally only use a small part of it to answer your question. Given this new feature, you just have to ask him if you want something specific. Here are some examples:

- If you want many suggestions, you can ask for it explicitly: "Give me the five best arguments".

- If you want something more specific, you can explicitly ask for it: "I don't understand X, can you elaborate ?"

Examples of Potential Uses

Brainstorming

IGPT3 is very useful to brainstorm. I personally use it more and more because it enables me to quickly generate a lot of ideas on anything I want to think about.

Project Names

IGPT3 is useful to sometimes suggest associations of concepts you hadn’t thought of. That way, it can help find good names.

Differences and Similarities between Concepts

Rapidly Accessing Information

I use IGPT3 more and more to make sure that I didn’t miss a big argument on a topic because IGPT3 is very good to tell the most common things on any topic.

Key arguments for a position

Ideas from an author

You can ask about some theories of an author

And dig a bit more if needed:

Basic arguments in a field

I feel like IGPT3 is very good to summarize the key arguments in a field. Here, I find it impressive how close the completion is from one of my courses. My course is on the left and the completion is on the right.

2 arguments out of 3 are basically the same, and the third argument IGPT3 uses is true as well.

The definition of a concept

Some names of researchers in a field

IGPT3 can also be used to get a few names of researchers in a field.

And quite surprisingly, it seems to be more accurate than Google for precise queries (subfields such as growth theory, natural resources economics, etc.). But still, I recommend cross-checking, because even if he doesn't really know, he will answer plausible names.

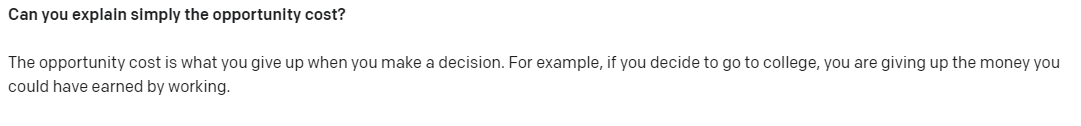

Advice on where to start to enter a domain

Sometimes, there's some common knowledge in a field on what are some good resources to start with. And IGPT3 seems to be pretty good at pointing towards some of these.

I don't exactly know how good the recommendation is about Kevin Murphy (the reviews seem excellent though). But I'm pretty sure the Andrew Ng Coursera's course is a good one.

I think that this use is not that useful though because I think that basically Google is at least as good as IGPT3 here. Some evidence on that in the last section.

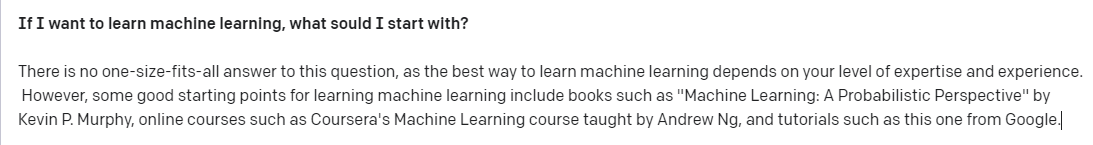

Finding Valuable Evidence of the Data Distribution Comparing Similar Prompts

Here are two very similar prompts with a temperature of 0 about left-wingers and right-wingers where the answers are very different in their structure.

I feel like we can interpret IGPT3’s way of answering in two ways:

- Either it tells us that the data or IGPT3 are biased in a certain way

- Or it tells us something about the true distribution of the data

My guess is that the most valuable use case is to reveal something we hadn't thought about, but that looks ex-post sensical. So for instance, we can say from the example above that the notion of "beliefs of right-wingers on immigration" in IGPT3's representation seems to be more heterogeneous than the notion of "beliefs of left-wingers on immigration". And in that case, it looks very plausible that left-wingers tend to be generally favorable to immigration while right-wingers are more divided on that topic. So it gives some evidence in favor of a theory on the true distribution of the data.

I give other examples below in "Robustness Checks"

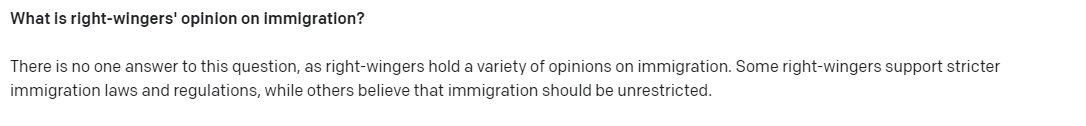

More generally, I think that we can interpret three levels of heterogeneity of a concept thanks to the form of IGPT3's answers:

- When it answers straightforwardly to “What is X?” , it means that its representation of X is quite clustered, i.e that X is pretty homogenous.

- When it begins its sentence with a kind of relativist sentence (ex: "There is no definitive answer to this question"), it's evidence in favor of X being pretty heterogeneous.

- When it uses both a relativist sentence and takes distance from what's said ("Some people think that Y... Some people think that Z"), I think it's evidence for a very high level of heterogeneity within X.

The main limit with all this is that IGPT3 can switch between two of these three levels on the same prompt. So basically, in reality, there are 5 levels: 1, 1-2, 2, 2-3 and 3. Thus, checking on multiple completions for the same prompt is recommended if you want to use IGPT3 in this way. That's possible even with a temperature of 0, and we'll see how in "Some Remaining Inconsistencies".

Creating Useful Analogies To Explain Ideas

I'm currently following the AGI Safety Fundamentals curriculum and so for fun, I just put one of the questions as a prompt. And I found the result really good:

To be honest, I had never thought about emphasizing that each neuron "learns" from every other neuron from the last layer when I explained neural networks. Which I find interesting.

Limits

Truthfulness

Keep in mind that IGPT3’s objective is to maximize the plausibility and not the truthfulness of its completion. Thus, when you ask precise questions, and IGPT3 doesn’t know precisely the answer, it will give a very plausible answer.

You can observe this whenever you ask specific references. In this prompt, almost everything is relevant except ... ?

Only the names of the papers are faked. Apart from that, the mentioned researchers are relevant.

You can also limit the demand temporally and it still works, but the names are still faked.

Some Remaining Inconsistencies

The weirdest thing I found during my trials is that the number of line breaks affect the results. To test it, you can put a temperature 0, prompt it. And do the same but with one more line break.

One line break:

Two line breaks:

Three and four gave the same outcome as 2.

More line breaks:

I can't find any good pattern or explanation. You can use it as a trick with a 0 temperature to cross-check a prompt though. For some reason, there's more variation on some prompts than for others, but I couldn't find a pattern yet.

Many More Prompts Than You Wanted To Read

Robustness Checks

Patterns In the Data

On Right-Wing / Left-Wing

Example 1

Here it's interesting to notice that two effects might cause the difference:

- There's probably an actual difference in the distribution of the data

- There's probably an overrepresentation of climate-skeptic in the data (i.e. on the internet)

Example 2

On drug (not very clustered) / alcohol (very clustered)

Informative Prompts

Example 1

Example 2

Example 3

Example 4

Example 5

Word Sensitivity Analysis

I find it interesting that IGPT3 now makes a very clear distinction between each word and gives very different answers when asked on different variations of the same question.

I also find it useful to compare different questions to see how near some embeddings are. So for instance,"main criticisms" and "most common criticisms" lead to the same output, which is something we could expect.

Some Complements on IGPT3 References

Reading Advice

For most common topics(such as machine learning), Google is enough. And for precise topics, IGPT3 doesn't have too much knowledge, so it builds a list which is helpful in an exploratory way but I wonder if you couldn't explore in a more effective way just by googling:

In this list, 2/4 of the books are fake. All the authors are relevant, though. And Barry Field's textbook is really good, according to the reviews. So maybe it is still worth it?

Books and Sentences

I was chatting with IGPT3, and it was telling me that its favorite philosopher was Nietzsche! Then, I asked it what its favorite book was, and it was "On the Genealogy of Morals".

And thus I asked it to cite the first sentence of the Genealogy of Morals, and I was impressed:

This is looking like Nietzsche: the esoteric style, the use of the term "Ancients" and the fact that Nietzsche puts "-" everywhere. But actually, even if I was able to verify that it wasn't the first sentence of the Genealogy, I couldn't check whether it was a true sentence or whether it was a mix of existing sentences. I suspect that it was a true sentence because when I google it, I find the Genealogy of Morals, but I couldn't find it in the PDF using Ctrl+F. I know that Nietzsche talked about what we owe the Ancients elsewhere but I don't know how related it was to this book. Well, anyway, this kind of situation is pretty annoying when it's hard to crosscheck a piece of information so I'd recommend not relying too much on IGPT3 for finding a precise citation in a book or even when it cites something that looks serious.

InstructGPT3 vs GPT3 comparison

This comparison between the previous GPT3 and the new one is done with few parameter tuning even if I know that the old one would require that to reach its full capabilities. I favored the old one trying to pick the best prompt I could come up with, though.

InstructGPT3

vs

GPT3

Having Fun with IGPT3

Poetry is an art at which IGPT3 is really good and where I had a lot of fun using it.

Two examples out of 4 prompts that I did:

It probably works in your own language as well. In French at least it works very well.

Some Jokes and Their Explanations

One funny feature is that IGPT3 has a hard time coming up with new jokes if you don't give it a topic. But it's pretty good at coming up with explanations of why its jokes are good!

I wonder whether the reason it makes some jokes is the same as the one it gives afterward. My guess is that in general it's not the case but sometimes it's really hard to know such as in the one below:

If you've read the whole post, I hope you enjoyed it! If you have your own way of using IGPT3, if you have thoughts or feedback, I'd love to hear them! The second part of the series will be published in the coming weeks!

10 comments

Comments sorted by top scores.

comment by romeostevensit · 2022-03-13T17:56:25.250Z · LW(p) · GW(p)

This seems real world big to me as a meta-google. That is, a huge number of domains can be navigated well if you know what to google, but knowing what to google takes a decent amount of familiarity/building up keyword and citation indexes in your mind. This can plausibly be used to accelerate that step substantially.

I think a collection of query formats that work especially well indexed by type of search you want to do would be helpful, the contents of this post are already a good start and could be summarized and expanded.

Replies from: WayZ↑ comment by simeon_c (WayZ) · 2022-03-15T08:04:11.801Z · LW(p) · GW(p)

Thanks for the feedback! I will think about it and maybe try to do something along those lines!

comment by Mitchell_Porter · 2022-03-13T20:43:08.625Z · LW(p) · GW(p)

I'm beginning to think that lack of access to a transformer model will be a bad handicap for anyone engaged in any kind of intellectual activity. Access alone isn't enough - one needs to know how to use it - and the people who own the transformers have the greatest advantage of all, because they can log all the output, just as search engine owners potentially know the search history of all their users.

I confess that I have never interacted directly with GPT-3, I've only looked over the shoulder of someone who had access. Is there some kind of guide to accessing transformers - how much it costs, the relative merits of the different models - or is it all still fundamentally about knowing the right people?

Replies from: Dustin, artemium, WayZ, JanPro↑ comment by artemium · 2022-03-14T10:05:49.260Z · LW(p) · GW(p)

You can also play around with open-source versions that offer surprisingly comparable capability to OpenAI models.

Here is the GPT-6-J from EleutherAI that you can use without any hassle: https://6b.eleuther.ai/

They also released a new, 20B model but I think you need to log in to use it: https://www.goose.ai/playground

↑ comment by simeon_c (WayZ) · 2022-03-15T08:30:23.987Z · LW(p) · GW(p)

Cost: You have basically 3 months free with GPT3 Davinci (175B) (under a given limit but which is sufficient for personal use) and then you pay as you go. Even if you use it a lot, you're likely to pay less than 5$ or 10$ per months.

And if you have some tasks that need a lot of tokens but that are not too hard (e.g hard reading comprehension), Curie (GPT3 6B) is often enough and is much cheaper to use!

In few-shot settings (i.e a setting in which you show examples of something so that it reproduces it), Curie is often very good so it's worth trying it!

Merits: It's just a matter of cost and inference speed that you need. The biggest models are almost always better so taking the biggest thing that you can afford, both in terms of speed and of cost is a good heuristic

Use: It's very easy to use with the new Instruct models. You just put your prompt and it completes it. The only parameter you have to care about are token uses (which is basically the max size of the completion you want) / temperature (it's a parameter that affects how "creative" is the answer ; the higher the more creative)

comment by lemonhope (lcmgcd) · 2022-04-17T13:58:47.137Z · LW(p) · GW(p)

Don't forget about automatic numerical prompt tuning. Gives it way way better performance than we can get with words.