Where are the red lines for AI?

post by Karl von Wendt · 2022-08-05T09:34:41.129Z · 10 comments


Thanks to Daniel Kokotajlo, Jan Hendrik Kirchner, Remmelt Ellen, Berbank Green, Otto Barten and Olaf Voß for helpful suggestions and comments.

As AI alignment remains terribly difficult and timelines appear to be shrinking, we must face the likely prospect that within the next 20 years we will be able to build an AI that poses an existential threat before we know how to control it. In this case, our only chance to avert a catastrophe will be to collectively refrain from developing such a “dangerous” AI. But what exactly does that mean?

It seems obvious that an AI which pursues the wrong goal and is vastly more intelligent than any human would be “dangerous” in the sense that it would likely be unstoppable and probably lead to an existential catastrophe. But where, exactly, is the tipping point? Where is the line between harmless current AIs, like GPT-3 or MuZero, and a future AI that may pose an existential threat?

To put the question differently: If we were asked to draft a global law that prohibits creating “dangerous AI”, what should be written in it? What are the things that no actor should ever be allowed to do, the “red lines” no one should ever cross, at least until there is a feasible and safe solution to the alignment problem? How would we even recognize a “dangerous AI”, or plans to build one?

This question is critical, because if the only way to avert an existential risk is to refrain from building a dangerous AI, we need to be very sure about what exactly makes an AI “dangerous” in this sense.

It may seem impossible to prevent all of humanity from doing something which is technically feasible. But while it is often difficult to get people to agree on any kind of policy, there are already many things which are not explicitly forbidden, but most people don’t do anyway, like letting their children play with radioactive toys, eating unidentified mushrooms they find in the woods, climbing under a truck to drink wine while it is driving at full speed on the highway, or drinking aquarium cleaner as a treatment against Covid. There is a common understanding that these are stupid things to do because the risk is much greater than the possible benefit. This common understanding of dangerousness is all that is needed to keep a very large proportion of humanity from doing those things.

If we could create a similar common understanding of what exactly the necessary and sufficient conditions are that turn an AI into an existential threat, I think there might be a chance that it wouldn’t be built, at least not for some time, even without a global law prohibiting it. After all, no one (apart, perhaps, from some suicidal terrorists) would want to risk the destruction of the world they live in. There is no shareholder value to be gained from it. The expected net present value of such an investment would be hugely negative. There is no personal fame and fortune waiting for the first person to destroy the world.

Of course, it may not be so easy to define exact criteria for when an AI becomes “dangerous” in this sense. More likely there will be gray areas where the territory becomes increasingly dangerous. Still, I think it would be worthwhile to put significant effort into mapping that territory. It would help us with governing AI development and might lead to international treaties and more cautious development in some areas. In the best case, it could even help us define what “safe AI” really means, and how to use its full potential without risking our future. As an additional benefit, if a planned AI system can be identified as potentially “dangerous” beforehand, the burden of proof that its containment and control measures are fail-safe would lie with the people intending to create it.

In order to determine the “dangerousness” of an AI system, we should avoid the common mistake of using an anthropomorphic benchmark. When we currently talk about existential AI risks, we usually use terms like “artificial general intelligence” or “super-intelligent AI”. This seems to imply that AI becomes dangerous only at some point after it reaches “general problem-solving capabilities on at least human level”, i.e. that such capabilities are a necessary condition. But this is misleading. First, it can lead people to underestimate the danger because they falsely equate “first arrival of dangerous AI” with “the time we fully understand the human brain”. Second, AI is already vastly superhuman in many narrow areas. A system that could destroy the world without being able to solve every problem at human level is at least conceivable. For example, an AI that is superhuman at strategy and persuasion could manipulate humans in a way that leads to a global nuclear war, even though it may not be able to recognize images or control a robot body in the real world. Third, as soon as an AI gains general problem-solving capabilities at human level, it will already be vastly superhuman in many other respects, like memory, speed of thought, access to data, and ability to self-improve, which might make it an invincible power. This has been illustrated in the following graphic (courtesy of AI Impacts, thanks to Daniel Kokotajlo for pointing it out to me):

The points above indicate that the line between “harmless” and “dangerous” must be somewhere below the traditional threshold of “at least human problem-solving capabilities in most domains”. Even today’s narrow AIs often have significant negative, possibly even catastrophic side effects (think for example of social media algorithms pushing extremist views, amplifying divisiveness and hatred, and increasing the likelihood of nationalist governments and dictatorships, which in turn increases the risk of wars). While there are many beneficial applications of advanced AI, with the current speed of development, the possibility of things going badly wrong also increases. This makes it even more critical to determine how exactly an AI can become “dangerous”, even if it is lacking some of the capabilities typically associated with AGI.

It is beyond the scope of this post to make specific recommendations about how “dangerousness” could be defined and measured. This will require a lot more research. But there are at least some properties of an AI that could be relevant in this context:

- Broadness (of capabilities): Today’s narrow AIs are obviously not an existential threat yet. As the range of domains in which a system is capable grows, however, so does the risk of the system exhibiting unforeseen and unwanted behavior in some domain. This doesn’t mean that a narrow AI is necessarily safe (see example above), but broadness of capabilities could be a factor in determining dangerousness.

- Complexity: The more complex a system is, the more difficult it is to predict its behavior, which increases the likelihood that some of this behavior will be undesirable or even catastrophic. Therefore, all else being equal, the more complex a system is (measured, for example, by the number of parameters of a transformer neural network), the more dangerous it is.

- Opaqueness: Some complex systems are easier to understand and predict than others. For example, symbolic AI tends to be less “opaque” than neural networks. Tools for explainability can help reduce an AI's opaqueness. The more opaque a system is, the less predictable and the more dangerous.

- World model: The more an AI knows about the world, the better it becomes at making plans about future world states and acting effectively to change these states, including in directions we don’t want. Therefore, the scope and precision of its knowledge about the real world may be a factor in its dangerousness.

- Strategic awareness (as defined by Joseph Carlsmith, see section 2.1 of this document): This may be a critical factor in the dangerousness of an AI. A system with strategic awareness realizes to some extent that it is a part of its environment, a necessary element of its plan to achieve its goals, and a potential object of its own decisions. This leads to instrumental goals, like power-seeking, self-improvement, and preventing humans from turning it off or changing its main goal. The more strategically aware an AI becomes, the more dangerous.

- Stability: A system that dynamically changes over time is less predictable, and therefore more dangerous, than a system that is stable. For example, an AI that learns in real time and is even able to self-improve should in general be considered more dangerous than a system that is trained once and then applied to a task without any further changes.

- Computing power: The more computing power a system has, the more powerful, and therefore potentially dangerous, it becomes. This also applies to processing speed: The faster a system can decide and react, the more dangerous, because there is less time to understand its decisions and correct it if necessary.

One feature that I deliberately did not include in the list above is “connectivity to the outside world”, e.g. access to the internet, sensors, robots, or communication with humans. An AI that is connected to the internet and has access to many gadgets and points of contact can better manipulate the world and thus do dangerous things more easily. However, if an AI would be considered dangerous if it had access to some or all of these things, it should also be considered dangerous without it, because giving such a system access to the outside world, either accidentally or on purpose, could cause a catastrophe without further changing the system itself. Dynamite is considered dangerous even if there is no burning match held next to it. Restricting access to the outside world should instead be regarded as a potential measure to contain or control a potentially dangerous AI and should be seen as inherently insecure.

This list is by no means complete. There are likely other types of features, e.g. certain mathematical properties, which may be relevant but which I don’t know about or don’t understand well enough to mention. I only want to point out that there may be objective, measurable features of an AI that could be used to determine its “dangerousness”. It is still unclear, however, how relevant these features are, how they interact with each other, and whether there are some absolute thresholds that can serve as “red lines”. I believe that further research into these questions would be very valuable.

10 comments


comment by .CLI (Changbai Li) · 2022-08-06T10:32:17.676Z

The strategic awareness property would be an interesting one to measure. Which existing systems would you say are more or less strategically aware? Are there examples we could point toward, like the social media algorithm one?

↑ comment by Karl von Wendt · 2022-08-07T09:45:03.998Z

I don't think that any current AIs are strategically aware of themselves. I guess the closest analogy is an AI playing Atari games: it will see the sprite it controls as an important element of the "world" of the game, and will try to protect it from harm. But of course AIs like MuZero have no concept of themselves as being an AI that plays a game. I think the only agents with strategic awareness that currently exist are we humans, and maybe some animals.

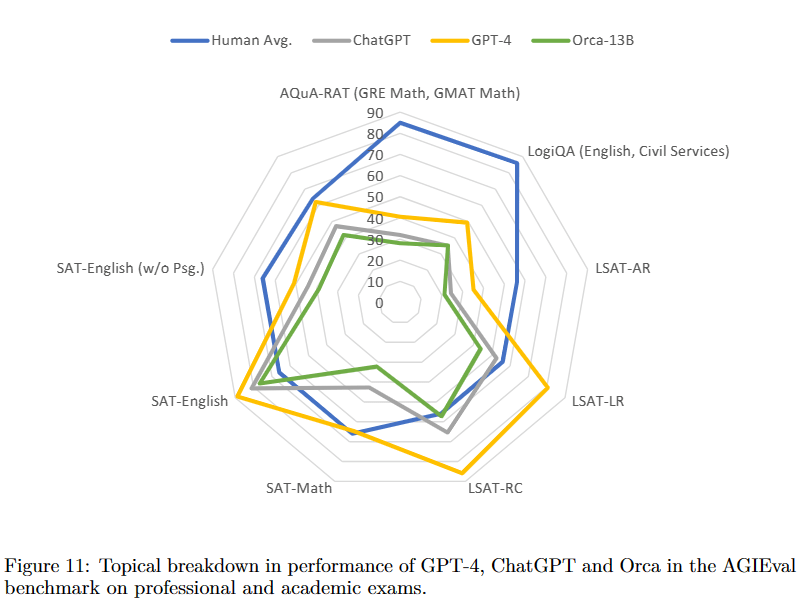

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-07T22:24:16.857Z

Blast from the past: reading this recent paper, I happened across this diagram:

↑ comment by Karl von Wendt · 2023-06-08T05:01:12.899Z

Thank you! Very interesting and a little disturbing, especially the way the AI performance expands in all directions simultaneously. This is of course not surprising, but still concerning to see it depicted in this way. It's all too obvious how this diagram will look in one or two years. It would also be interesting to have an even broader diagram including all kinds of different skills, like playing games, steering a car, manipulating people, etc.

comment by Remmelt (remmelt-ellen) · 2022-08-05T11:23:38.392Z

A specific cruxy statement that I disagree on:

An AI that is connected to the internet and has access to many gadgets and points of contact can better manipulate the world and thus do dangerous things more easily. However, if an AI would be considered dangerous if it had access to some or all of these things, it should also be considered dangerous without it, because giving such a system access to the outside world, either accidentally or on purpose, could cause a catastrophe without further changing the system itself. Dynamite is considered dangerous even if there is no burning match held next to it. Restricting access to the outside world should instead be regarded as a potential measure to contain or control a potentially dangerous AI and should be seen as inherently insecure.

My disagreement here is threefold:

- The above statement appears to assume that dangerous transformative AI has already been created, whereas ‘red lines’ set through shared consensus and global regulation should be set to prevent the creation of such AI in the first place (with a wide margin of safety to account for unknown unknowns and that some actors will unilaterally attempt to cross the red lines anyway).

- My rough sense is that the most dangerous kind of ‘general’ capabilities that could be developed in self-learning machine architectures are those that can be directed to enact internally modelled changes over physical distances within many different contexts of the outside world. These are a different kind of capability from, e.g., containing general knowledge about facts of the world, or, say, making calibrated predictions of the final conditions of linear or quasi-linear systems in the outside world.

Such 'real world' capabilities seem to need many degrees of freedom in external inputs and outputs to be iteratively trained into a model.

This is where the analogy between AI's potential for danger and dynamite's does not hold:

- Dynamite has explosive potential from the get go (fortunately limited to a physical radius) but stays (mostly) chemically inert after production. It does not need further contact points of interaction with physical surroundings to acquire this potential for human-harmful impact.

- A self-learning machine architecture gains increasing potential for wide-scale human lethality (through general modelling/regulatory functions that could be leveraged or repurposed to modify conditions of the outside environment in self-reinforcing loops that humans can no longer contain) via long causal trajectories of the architecture's internals having interacted at many contact points with the outside world in the past. The initially produced 'design blueprint' does not immediately acquire this potential through production of needed hardware and initialisation of model weights.

If engineers end up connecting up more internet channels, sensors and actuators for large ML model training and deployment while continuing to tinker with the model’s underlying code base, then from a control engineering perspective, they are setting up a fragile system that is prone to inducing cascading failures in the future. Engineers should IMO not be connecting up what amounts to architectures that open-endedly learn spaghetti code and autonomously enact changes in the real world. This, in my view, would be engineering malpractice in which practitioners are grossly negligent in preventing risks to humans living everywhere around the planet.

- You can have hidden functional misalignments selected for through local interactions of code internal to the architecture with their embedded surroundings. Here are arguments I wrote on that:

A model can be trained to cause effects we deem functional but under different interactions with structural aspects of the training environment than we expected. Such a model’s intended effects are not robust to shifts of the distribution of input data received when the model is deployed in new environments. Example: in deployment this game agent ‘captures’ a wall rather than the coin it got trained to capture (incidentally next to the right-most wall).
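To make the game example concrete, here is a minimal toy sketch. It is not the setup of the actual experiment; the one-dimensional level, the `proxy_policy` that always walks to the right-most wall, and the level layouts are all hypothetical. Because the coin always sits at that wall during training, the learned proxy behaviour is indistinguishable from the intended goal until the coin is moved in deployment.

```python
# Hypothetical toy illustration of goal misgeneralization (not the original experiment).
# A "level" is (width, coin_position) on a 1-D track with positions 0..width-1.

def proxy_policy(width: int) -> int:
    """Assumed learned behaviour: walk to the right-most wall, ignoring the coin."""
    return width - 1

def reached_coin(final_pos: int, coin_pos: int) -> bool:
    """The goal we actually intended: end up on the coin."""
    return final_pos == coin_pos

def success_rate(levels: list[tuple[int, int]]) -> float:
    hits = sum(reached_coin(proxy_policy(w), coin) for w, coin in levels)
    return hits / len(levels)

# Training distribution: the coin always happens to sit next to the right-most wall.
training_levels = [(w, w - 1) for w in range(5, 15)]
# Deployment distribution: the coin is moved to the middle of the level.
deployment_levels = [(w, w // 2) for w in range(5, 15)]

print(success_rate(training_levels))    # 1.0: looks perfectly aligned
print(success_rate(deployment_levels))  # 0.0: the proxy goal is exposed
```

Nothing in the training scores distinguishes "go to the coin" from "go to the wall"; only the distribution shift reveals which one was selected for.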

Compared to side-scroller games, real-life interactions are much more dimensionally complex. If we train a Deep RL model on a high-bandwidth stream of high-fidelity multimodal inputs from the physical environment in interaction with other agentic beings, we have no way of knowing whether any hidden causal structure got selected for and stays latent even during deployment test runs… until a rare set of interactions triggers it to cause outside effects that are out of line.

Core to the problem of goal misgeneralization in machine learning is that latent functions of internal code are being expressed under unknown interactions with the environment. A model that coherently overgeneralizes functional metrics over human contexts is concerning but trackable. Internal variance being selected to act out of line all over the place is not trackable.

Note that an ML model trains on signals that are coupled to existing local causal structures (as simulated on e.g. localized servers or as sensed within local physical surroundings). Thus, the space of possible goal structures that can be selected for within an ML model is constrained by features that can be derived from data inputs received from local environments. Goals are locally selected for and thus partly non-orthogonal to intelligence (they cannot vary independently of it).

↑ comment by Karl von Wendt · 2022-08-05T12:53:08.806Z

The above statement appears to assume that dangerous transformative AI has already been created,

Not at all. I'm just saying that if any AI with external access would be considered dangerous, then the same AI without access should be considered dangerous as well.

The dynamite analogy was of course not meant to be a model for AI, I just wanted to point out that even an inert mass that in principle any child could play with without coming to harm is still considered dangerous, because under certain circumstances it will be harmful. Dynamite + fire = damage, dynamite w/o fire = still dangerous.

Your third argument seems to prove my point: An AI that seems aligned in the training environment turns out to be misaligned if applied outside of the training distribution. If that can happen, the AI should be considered dangerous, even if within the training distribution it shows no signs of it.

↑ comment by Remmelt (remmelt-ellen) · 2022-08-05T15:32:36.575Z

I'm just saying that if any AI with external access would be considered dangerous

I'm saying that general-purpose ML architectures would develop especially dangerous capabilities by being trained in high-fidelity and high-bandwidth input-output interactions with the real outside world.

comment by Linda Linsefors · 2022-11-26T20:57:54.504Z

I mostly agree with this post.

That said, here are some points I don't agree with, and some extra nit-picking because Karl asked me for feedback.

The points above indicate that the line between “harmless” and “dangerous” must be somewhere below the traditional threshold of “at least human problem-solving capabilities in most domains”.

I don't think we know even this. I can imagine an AI that is successfully trained to imitate human behaviour, such that it has human problem-solving capabilities in most domains, but which does not pose an existential threat, because it just keeps behaving like a human. This could happen because this AI is not an optimiser but a "predict what a skilled human would do next and then do that" machine.

It is also possible that no such AI would be stable, because it would notice that it is not human, which would somehow cause it to go off the rails and start self-improving, or something. At the moment I don't think we have good evidence either way.

But while it is often difficult to get people to agree on any kind of policy, there are already many things which are not explicitly forbidden, but most people don’t do anyway,

The list of links to stupid things people did anyway doesn't exactly illustrate your point. But there is a possible argument here based on the fact that the number of people who have access to teraflops of compute is much smaller than the number who have access to aquarium fluid.

If we managed to create a widespread common-sense understanding of what AI we should not build, how long do you think it would take for some idiot to build it anyway, after it becomes possible?

(think for example of social media algorithms pushing extremist views, amplifying divisiveness and hatred, and increasing the likelihood of nationalist governments and dictatorships, which in turn increases the risk of wars).

I don't think the algorithms have much to do with this. I know this is a claim that keeps circulating, but I don't know what the evidence is. Clearly social media have political influence, but to me this seems to have more to do with the massively increased communication connectivity than with anything about the specific algorithms.

This will require a lot more research. But there are at least some properties of an AI that could be relevant in this context:

I think this is a good list. On first read I wanted to add agency/agentic-ness/optimiser-similarity, but thinking some more, I think it should not be included. The reason not to put it on the list is the combination of two things:

- Agency is a vague, hard-to-define concept.

- The relevant aspects of agency (from the perspective of safety) are covered by strategic awareness and stability. So probably don't add it to the list.

However, you might want to add the similar concept "consequentialist reasoning ability". Although it can be argued that this is just the same as "world model".

comment by Martin Čelko (martin-celko) · 2022-08-13T01:54:53.933Z

A framework for this could be to look for AI that is useful, i.e. by definition smarter than humans.

The foundation is once the AI takes off, it needs to land.

When this takeoff and landing happens, it's the job of humans to know whether what the AI did actually achieved anything positive.

The problem is that we humans don't seem to have perfect models and measurements.

For instance, if an AI does something in the economy, what exactly makes us believe that what it did was correct?

How can we know it was good?

Even economists struggle to put real-life measurements into a meaningful framework.

I guess we could use AI first to model the world better than we can?

For instance, we act as if economic theories work. In reality this is hardly true if we measure less of reality than there actually is.

One could argue that this is what intelligence does.

It abstracts principles. Those help people to act on realities and ignore everything else that is irrelevant.

The problem is that if AI works with just the exact variables we humans work with, all it can do, is to extrapolate from imperfect information.

It's likely going to end up with our conclusions, just with slightly more accurate models.

For instance macro economic models don't tell us anything about reality.

It just tells us something about our ability to interface with reality through data.

When we look at stocks we aren't actually looking at the real thing.

We are looking at sets of information that we can effectively manipulate to our advantage.

If we want AI that actually helps us to be smarter, and isn't just a computer, we need it to do more than manipulate data that is pretty much useless.