You Can’t Predict a Game of Pinball

post by Jeffrey Heninger (jeffrey-heninger) · 2023-03-30T00:40:05.280Z · LW · GW · 13 comments

Introduction

When thinking about a new idea, it helps to have a particular example to use to gain intuition and to clarify your thoughts. Games are particularly helpful for this, because they have well defined rules and goals. Many of the most impressive abilities of current AI systems can be found in games.[1]

To demonstrate how chaos theory imposes some limits on the skill of an arbitrary intelligence, I will also look at a game: pinball.

In this post, I will show that the uncertainty in the location of the pinball grows by a factor of about 5 every time the ball collides with one of the disks. After 12 bounces, an initial uncertainty in position the size of an atom grows to be as large as the disks themselves. Since you cannot launch a pinball with better than atom-scale precision, or even measure its position that precisely, you cannot predict how the ball bounces between the disks for more than 12 bounces.

The challenge is not that we have not figured out the rules that determine the ball’s motion. The rules are simple; the ball’s trajectory is determined by simple geometry. The challenge is that the chaotic motion of the ball amplifies microscopic uncertainties. This is not a problem that is solvable by applying more cognitive effort.

The Game

Let’s consider a physicist’s game of pinball.

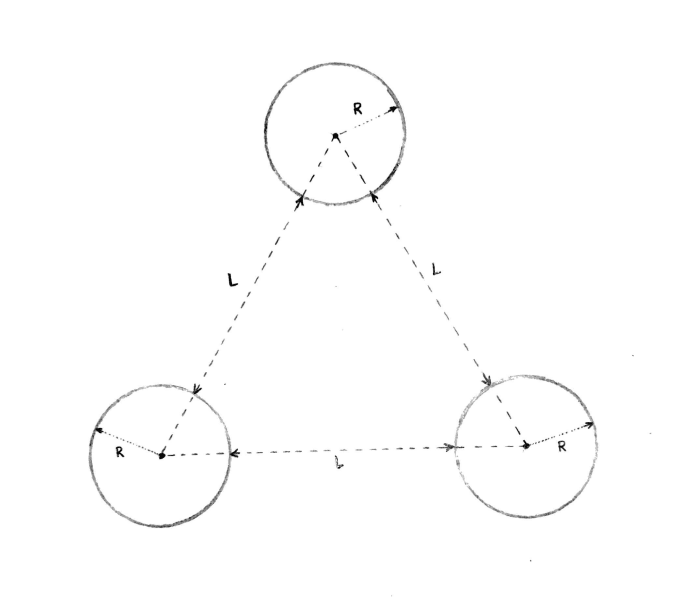

Forget about most of the board and focus on the three disks at the top. Each disk is a perfect circle of radius R. The disks are arranged in an equilateral triangle. The minimum distance between any two disks is L. See Figure 1 for a picture of this setup.

The board is frictionless and flat, not sloped like in a real pinball machine. Collisions between the pinball and the disks are perfectly elastic, with no pop bumpers that come out of the disk and hit the ball. The pinball moves at a constant speed all of the time and only changes direction when it collides with a disk.

The goal of the game is to get the pinball to bounce between the disks for as long as possible. As long as it is between the disks, it will not be able to get past your flippers and leave the board.

A real game of pinball is more complicated than this – and correspondingly, harder to predict. If we can establish that the physicist’s game of pinball is impossible to predict, then a real game of pinball will be impossible to predict too.

Collisions

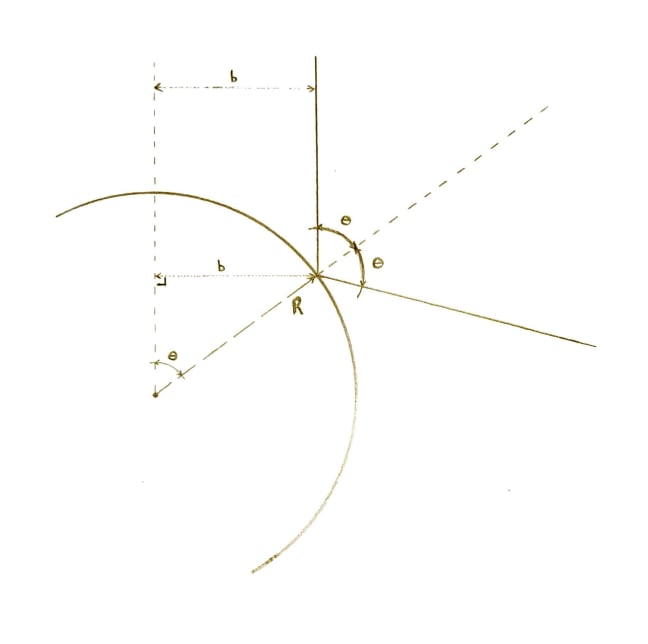

When the ball approaches a disk, it will not typically be aimed directly at the center of the disk. How far off center it is can be described by the impact parameter, b, which is the distance between the trajectory of the ball and a parallel line which passes through the center of the disk. Figure 2 shows the trajectory of the ball as it collides with a disk.

The normal to the disk’s surface at the point of impact makes an angle θ with the ball’s trajectory. This is also the angle of the position of the collision on the disk relative to the line through the center parallel to the ball’s initial trajectory. This can be seen in Figure 2 because they are corresponding angles on a transversal.

At the collision, the angle of incidence equals the angle of reflection, so the outgoing path makes an angle 2θ with the reversed incoming path. Changing θ by a small amount dθ therefore changes the outgoing direction by 2 dθ.

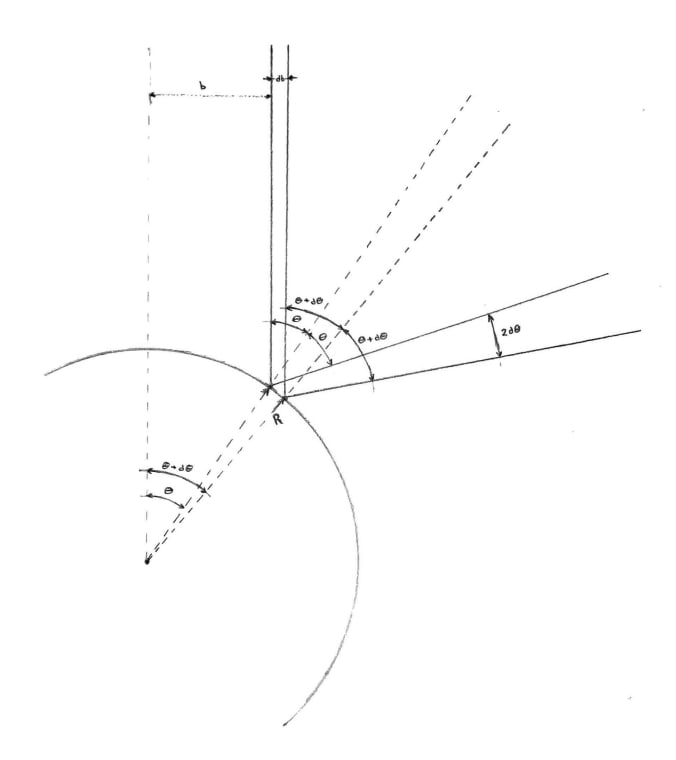

We cannot aim the ball with perfect precision. We can calculate the effect of this imperfect precision by following two slightly different trajectories through the collision instead of one.

The two trajectories have slightly different initial locations. The second trajectory has impact parameter b + db, with db ≪ b. Call db the uncertainty in the impact parameter. We assume that the two trajectories have exactly the same initial velocity. If we were to also include uncertainty in the velocity, it would further decrease our ability to predict the motion of the pinball. A diagram of the two trajectories near a collision is shown in Figure 3.

The impact parameter, radius of the disk, and angle are trigonometrically related: b = R sinθ (see Figure 2). We can use this to determine the relationship between the uncertainty in the impact parameter and the uncertainty in the angle: db = R cosθ dθ.

After the collision, the two trajectories will no longer be parallel. The angle between them is now 2 dθ. The two trajectories will separate as they travel away from the disk. They will have to travel a distance of at least L before colliding with the next disk. The distance between the two trajectories will then be at least L 2 dθ.
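The single-collision estimate above can be checked numerically. The following is a minimal sketch, not part of the original derivation: it places one disk at the origin, sends in two parallel rays with impact parameters b and b + db, reflects each specularly, and compares the spread L · (angle between outgoing rays) to db. The function names and the choice of R = 2, L = 5 (in cm) are assumptions made for illustration.

```python
import math

def reflect_off_disk(b, R):
    """Ray travelling in the +x direction at height y = b hits a disk of
    radius R centred at the origin. Returns (hit_point, outgoing_direction)."""
    if abs(b) >= R:
        raise ValueError("ray misses the disk")
    hit = (-math.sqrt(R * R - b * b), b)       # first intersection with the circle
    n = (hit[0] / R, hit[1] / R)               # outward unit normal at the hit point
    d = (1.0, 0.0)                             # incoming direction
    dot = d[0] * n[0] + d[1] * n[1]
    out = (d[0] - 2 * dot * n[0], d[1] - 2 * dot * n[1])  # specular reflection
    return hit, out

def amplification(b, db, R, L):
    """Ratio (L * angle between outgoing rays) / db for two nearby parallel rays."""
    _, out1 = reflect_off_disk(b, R)
    _, out2 = reflect_off_disk(b + db, R)
    cross = out1[0] * out2[1] - out1[1] * out2[0]
    dot = out1[0] * out2[0] + out1[1] * out2[1]
    angle = abs(math.atan2(cross, dot))        # equals 2*dtheta for small db
    return L * angle / db

# Illustrative values (assumed): R = 2 cm, L = 5 cm, db = 1e-6 cm.
for b in (0.0, 0.5, 1.0, 1.5):
    print(b, amplification(b, 1e-6, 2.0, 5.0))
```

In this sketch the head-on ray (b = 0) gives the smallest amplification, 2L/R, and off-center hits give more, in line with the 1 / cos θ factor.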

Iteration

We can now iterate this. How does the uncertainty in the impact parameter change as the pinball bounces around between the disks?

Start with an initial uncertainty in the impact parameter, db₀. After one collision, the two trajectories will be farther apart. We can use the new distance between them as the uncertainty in the impact parameter for the second collision.[2] The new uncertainty in the impact parameter is related to the old one according to:

$$db_1 \geq 2L\,d\theta = \frac{2L}{R\cos\theta}\,db_0 \geq \frac{2L}{R}\,db_0$$

The first step is the separation L · 2 dθ found above, and the second uses db₀ = R cosθ dθ. We also used 1 / cos θ ≥ 1 for −π/2 < θ < π/2, which are the angles that could be involved in a collision. The ball will not pass through the disk and collide with the interior of the far side.

Repeat this calculation to see that after two collisions:

$$db_2 \geq \frac{2L}{R}\,db_1 \geq \left(\frac{2L}{R}\right)^{2} db_0$$

and after N collisions,

$$db_N \geq \left(\frac{2L}{R}\right)^{N} db_0$$

Plugging in realistic numbers, R = 2 cm and L = 5 cm, we see that

$$db_N \geq 5^{N}\,db_0$$

The uncertainty grows exponentially with the number of collisions.

Suppose we had started with an initial uncertainty about the size of the diameter of an atom, or 10⁻¹⁰ m. After 12 collisions, the uncertainty would grow by a factor of 5¹², to 2.4 cm. The uncertainty is larger than the radius of the disk, so if one of the trajectories struck the center of the disk, the other trajectory would miss the disk entirely.
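The arithmetic is easy to reproduce. This short sketch tabulates the lower bound on the uncertainty after each collision, using the factor of 5 per collision quoted in the text (R = 2 cm, L = 5 cm) and an atom-sized starting uncertainty of 10⁻⁸ cm. The function name is made up for illustration.

```python
def uncertainty_after(n_collisions, db0_cm, R_cm=2.0, L_cm=5.0):
    """Lower bound on the position uncertainty (cm) after n collisions,
    assuming each collision multiplies it by at least 2L/R."""
    return db0_cm * (2.0 * L_cm / R_cm) ** n_collisions

ATOM_CM = 1e-8  # 1e-10 m expressed in centimetres

for n in range(0, 13):
    print(f"{n:2d} collisions: {uncertainty_after(n, ATOM_CM):.3e} cm")
```

After 11 collisions the bound is still under half a centimeter. One more collision pushes it past the 2 cm disk radius.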

The exponential growth amplifies atomic-scale uncertainty to become macroscopically relevant in a surprisingly short amount of time. If you wanted to predict the path the pinball would follow, having an uncertainty of 2 cm would be unacceptable.

In practice, there are many uncertainties that are much larger than 10⁻¹⁰ m, in the production of the pinball, disks, & board and in the mechanics of the launch system. If you managed to solve all of these engineering challenges, you would eventually run into the fundamental limit imposed by quantum mechanics.

It is in principle impossible to prepare the initial location of the pinball with a precision of less than the diameter of an atom. Heisenberg’s Uncertainty Principle is relevant at these scales. If you tried to prepare the initial position of a pinball with more precision than this, it would cause the uncertainty in the initial velocity to increase, which would again make the motion unpredictable after a similar number of collisions.

When I mention Heisenberg’s Uncertainty Principle, I expect that there will be some people who want to see the quantum version of the argument. I do not think that it is essential to this investigation, but if you are interested, you can find a discussion of Quantum Pinball in the appendix.

Predictions

You cannot prepare the initial position of the pinball with better than atomic precision, and atomic precision only allows you to predict the motion of the pinball between the disks with centimeter precision for less than 12 bounces. It is impossible to predict a game of pinball for more than 12 bounces in the future. This is true for an arbitrary intelligence, with an arbitrarily precise simulation of the pinball machine, and arbitrarily good manufacturing & launch systems.
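One way to see why better measurement buys so little: the number of predictable bounces grows only logarithmically with the initial precision. The sketch below inverts the factor-of-5-per-collision growth to estimate how many collisions it takes for a given starting uncertainty to reach the disk radius. The function name and the comparison scales (a rough proton size and the Planck length) are illustrative assumptions, not claims from the post.

```python
import math

def prediction_horizon(db0_cm, target_cm=2.0, R_cm=2.0, L_cm=5.0):
    """Number of collisions (possibly fractional) for an uncertainty db0
    to grow to target, assuming a factor 2L/R per collision."""
    return math.log(target_cm / db0_cm) / math.log(2.0 * L_cm / R_cm)

scales_cm = {
    "atom (1e-8 cm)": 1e-8,
    "proton, roughly (1e-13 cm)": 1e-13,         # illustrative order of magnitude
    "Planck length, roughly (1.6e-33 cm)": 1.6e-33,
}
for name, db0 in scales_cm.items():
    print(f"{name}: about {prediction_horizon(db0):.1f} collisions")
```

Under these assumptions, improving the initial precision by 25 orders of magnitude, from atomic scale to the Planck length, extends the horizon from about 12 collisions to fewer than 50.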

This behavior is not unique to pinball. It is a common feature of chaotic systems.

If you had infinite precision, you could exactly predict the future. The equations describing the motion are deterministic. In this example, following the trajectory is a simple geometry problem, solvable using a straightedge and compass.

But we never have infinite precision. Every measuring device only provides a finite number of accurate digits. Every theory has only been tested within a certain regime, and we do not have good reason to expect it will work outside of the regime it has been tested in.

Chaos quickly amplifies whatever uncertainty and randomness exist at microscopic scales to the macroscopic scales we care about. The microscopic world is full of thermal noise and quantum effects, making macroscopic chaotic motion impossible to predict as well.

Conclusion

It is in principle impossible to predict the motion of a pinball as it moves between the top three disks for more than 12 bounces. A superintelligence might be better than us at making predictions after 8 bounces, if it can design higher resolution cameras or more precise ball and board machining. But it too will run into the low prediction ceiling I have shown here.

Perhaps you think that this argument proves too much. Pinball is not completely a game of chance. How do some people get much better at pinball than others?

If you watch a few games of professional pinball, the answer becomes clear. The strategy typically is to catch the ball with the flippers, then to carefully hit the ball so that it takes a particular ramp which scores a lot of points and then returns the ball to the flippers. Professional pinball players try to avoid the parts of the board where the motion is chaotic. This is a good strategy because, if you cannot predict the motion of the ball, you cannot guarantee that it will not fall directly between the flippers where you cannot save it. Instead, professional pinball players score points mostly from the non-chaotic regions where it is possible to predict the motion of the pinball.[3]

Pinball is typical for a chaotic system. The sensitive dependence on initial conditions renders long term predictions impossible. If you cannot predict what will happen, you cannot plan a strategy that allows you to perform consistently well. There is a ceiling on your abilities because of the interactions with the chaotic system. In order to improve your performance you often try to avoid the chaos and focus on developing your skill in places where the world is more predictable.

Appendix: Quantum Pinball

If quantum uncertainty actually is important to pinball, maybe we should be solving the problem using quantum mechanics. This is significantly more complicated, so I will not work out the calculation in detail. I will explain why this does not give you a better prediction for where the pinball will be in the future.

Model the disks as infinite potential walls, so the wave function reflects off of them and does not tunnel through them. If the pinball does have a chance of tunneling through the disks, that would mean that there are even more places the quantum pinball could be.

Start with a wave function with minimum uncertainty: a wave packet with Δx Δp = ℏ/2 in each direction. It could be a Gaussian wave packet, or it could be a wavelet. This wave packet is centered around some position and velocity.

As long as the wave function is still a localized wave packet, the center of the wave packet follows the classical trajectory. This can be seen either by looking at the time evolution of the average position and momentum, or by considering the semiclassical limit. In order for classical mechanics to be a good approximation to quantum mechanics at macroscopic scales, a zoomed out view of the wave packet has to follow the classical trajectory.

What happens to the width of the wave packet? Just like how the collisions in the classical problem caused nearby trajectories to separate, the reflection off the disk causes the wave packet to spread out. This can be most easily seen using the ray tracing method to solve Schrödinger’s equation in the WKB approximation.[4] This method converts the PDE for the wave function into a collection of (infinitely many) ODEs for the path each ‘ray’ follows and for the value of the wavefunction along each ray. The paths the rays follow reflect like classical particles, which means that the region where the wave function is nonzero spreads out in the same way as the classical uncertainty would.

This is a common result in quantum chaos. If you start with a minimum uncertainty wave packet, the center of the wave packet will follow the classical trajectory and the width of the wave packet will grow with the classical Lyapunov exponent.[5]
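As a rough worked number (a sketch under a stated assumption, not a result from the cited literature): take the lower-bound amplification per collision from the classical section, 2L/R, as an estimate of the Lyapunov multiplier. The true rate is somewhat larger, since 1 / cos θ ≥ 1 and paths between disks are longer than L. Writing σ_N for the packet width after N collisions (a symbol introduced here), this gives

$$\lambda \approx \ln\frac{2L}{R} = \ln 5 \approx 1.61 \text{ per collision}, \qquad \sigma_N \sim \sigma_0\, e^{\lambda N} = 5^{N}\sigma_0$$

With σ₀ ≈ 10⁻¹⁰ m, this gives σ₁₂ ≈ 2.4 cm, the same scale as the classical estimate.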

After 12 collisions, the width of the wave packet would be several centimeters. After another collision or two, the wave function is no longer a wave packet with a well defined center and width. Instead, it has spread out so it has a nontrivial amplitude across the entire pinball machine. There might be some interesting interference patterns or quantum scarring,[6] but the wave function will not be localized to any particular place. Since the magnitude of the wave function squared tells you the probability of finding the pinball in that location, this tells us that there is a chance of finding the pinball almost anywhere.

A quantum mechanical model of the motion of the pinball will not tell you the location of the pinball after many bounces. The result of the quantum mechanical model is a macroscopic wavefunction, with nontrivial probability of being at almost any location across the pinball machine. We do not observe a macroscopic wavefunction. Instead, we observe the pinball at a particular location. Which of these locations you will actually observe the pinball in is determined by wavefunction collapse. Alternatively, I could say that there are Everett branches with the pinball at almost any location on the board.

Solving wave function collapse, or determining which Everett branch you should expect to find yourself on, is an unsolved and probably unsolvable problem – even for a superintelligence.

- ^

- ^

The collision also introduces uncertainty in the velocity, which we ignore. If we had included it, it would make the trajectory even harder to simulate.

- ^

The result is a pretty boring game. However, some of these ramps release extra balls after you have used them a few times. My guess is that this is the game designer trying to reintroduce chaos to make the game more interesting again.

- ^

Ray tracing was developed to reconcile the wave theory of light with the earlier successful theory which models light as rays coming from a point source. There is a mathematical equivalence between the two models.

- ^

I have found it difficult to find a source that explains and proves this result. Several people familiar with chaos theory (including myself) think that it is obvious. Here is a paper which makes use of the fact that a wave packet expands (or contracts) according to the classical Lyapunov exponent:

Tomsovic et al. Controlling Quantum Chaos: Optimal Coherent Targeting. Physical Review Letters 130. (2023) https://arxiv.org/pdf/2211.07408.pdf.

- ^

Wikipedia: Quantum scar.

13 comments

Comments sorted by top scores.

comment by gwern · 2023-03-30T01:23:33.556Z · LW(p) · GW(p)

To sum up:

All stable processes we shall predict, all unstable processes we shall control.

↑ comment by Richard Korzekwa (Grothor) · 2023-03-30T02:30:17.475Z · LW(p) · GW(p)

Seems like the answer with pinball is to avoid the unstable processes, not control them.

↑ comment by gwern · 2023-03-30T18:03:41.408Z · LW(p) · GW(p)

Yes, that's what 'controlling' usually looks like... Only a fool puts himself into situations where he must perform like a genius just to get the same results he could have by 'avoiding' those situations. IRL, an ounce of prevention is worth far more than a pound of cure.

To demonstrate how chaos theory imposes some limits on the skill of an arbitrary intelligence, I will also look at a game: pinball.

...Pinball is typical for a chaotic system. The sensitive dependence on initial conditions renders long term predictions impossible. If you cannot predict what will happen, you cannot plan a strategy that allows you to perform consistently well. There is a ceiling on your abilities because of the interactions with the chaotic system. In order to improve your performance you often try to avoid the chaos and focus on developing your skill in places where the world is more predictable.

This is wrong. Your pinball example shows no such thing. Note the complete non-sequitur here from your conclusion, about skill, to your starting premises, about unpredictability of an idealized system with no agents in it. You don't show where the ceiling is to playing pinball even for humans, or any reason to think there is a ceiling at all. Nor do you explain why the result of '12 bounces' which you spend so much time analyzing is important; would 13 bounces have proven that there is no limit? Would 120 bounces have done so? Or 1,200 bounces? If the numerical result of your analysis could be replaced by a random number with no change to any of your conclusions, then it was irrelevant and a waste of 2000 words at best, and 'proof by intimidation' at worst.

And what you do seem to concede (buried at the end, hidden in an off-site footnote I doubt anyone here read) is:

Perhaps you think that this argument proves too much. Pinball is not completely a game of chance. How do some people get much better at pinball than others? If you watch a few games of professional pinball, the answer becomes clear. The strategy typically is to catch the ball with the flippers, then to carefully hit the balls so that it takes a particular ramp which scores a lot of points and then returns the ball to the flippers. Professional pinball players try to avoid the parts of the board where the motion is chaotic. This is a good strategy because, if you cannot predict the motion of the ball, you cannot guarantee that it will not fall directly between the flippers where you cannot save it. Instead, professional pinball players score points mostly from the non-chaotic regions where it is possible to predict the motion of the pinball.[3]

[3] The result [of human pros] is a pretty boring game. However, some of these ramps release extra balls after you have used them a few times. My guess is that this is the game designer trying to reintroduce chaos to make the game more interesting again.

So, in reality, the score ceiling is almost arbitrarily high but "The result [of skilled pinball players maximizing scores] is a pretty boring game." Well, you didn't ask if 'arbitrary intelligences' would play boring games, now did you? You claimed to prove that they wouldn't be able to play high-scoring games, and that you could use pinball games to "demonstrate how chaos theory imposes limits on the skill of an arbitrary intelligence". But you didn't "demonstrate" anything like that, so apparently, chaos theory doesn't - it doesn't even "impose some limits on the skill of" merely human intelligences...

Who knows how long sufficiently skilled players could keep racking up points by exercising skill to keep the ball in point-scoring trajectories in a particular pinball machine? Not you. And many games have been 'broken' in this respect, where the limit to points is simply an underlying (non-chaotic) variable, like the point where a numeric variable overflows & the software crashes, or the human player collapses due to lack of sleep. (By the way, humans are also able to predict roulette wheels.)

This whole post is just an intimidation-by-physics bait-and-switch, where you give a rigorous deduction of an irrelevant claim where you concede the actual substantive claim is empirically false.

(And you can't give any general relationship between difficulty of modeling environment for an arbitrary number of steps and the power of agents in environments, because there is none, and highly-effective intelligent agents may model arbitrarily small parts of the environment†.)

This entire essay strikes me as a good example of the abuse of impossibility proofs. If we took it seriously, it illustrates in fact how little such analogies could ever tell us, because you have left out everything to do with 'skill' and 'arbitrary intelligence'. This is like Creationists arguing that humans could not have evolved because it'd be like a tornado hitting a junkyard and spontaneously building a jetliner, which would be astronomically unlikely; the exact combinatorics of that tornado (or bouncing balls) are irrelevant, because evolution (and intelligence) are not purely random - that's the point! The whole point of an arbitrary intelligence is not to stand idly by LARPing as Laplace's demon. "The best way to predict the future is to invent it." And the better you can potentially control outcomes, the less you need accurate long-range forecasts (a recent economics paper on modeling this exact question: Millner & Heyen 2021/op-ed.)

As Von Neumann was commenting about weather 'forecasting', and was a standard trope in cybernetics, prediction and control are duals, and 'muh chaos' doesn't mean you can't predict chaotic systems (or 'unstable' systems in general, chaos theory is just a narrow & overhyped subset, much beloved of midwits of the sort who like to criticize 'Yudkowski') - neural networks are good at predicting chaotic systems, and if you can't, it just means that you need to control them upstream as well. This is why the field of "control of chaos" can exist. (Or, to put it more pragmatically: If you are worried about hurricanes causing damage, you "pour oil over troubled waters" in the right places, or you can try to predict them months out and disrupt the initial warm air formation; but it might be better to control them later by steering them, and if that turns out to be infeasible, control the damage by ensuring people aren't there to begin with by quietly tweaking tax & insurance policy decades in advance to avoid lavishly subsidizing coastal vacation homes, etc; there are many places in the system to exert control rather than go 'ah, you see, the weather was proven by Mandelbrot to be chaotic and unpredictable!' & throw your hands up.)

That's the beauty of unstable systems: what makes them hard to predict is also what makes them powerful to control. The sensitivity to infinitesimal detail is conserved: because they are so sensitive at the points in orbits where they transition between attractors, they must be extremely insensitive elsewhere. (If a butterfly flapping its wings can cause a hurricane, then that implies there must be another point where flapping wings could stop a hurricane...) So if you can observe where the crossings are in the phase-space, you can focus your control on avoiding going near the crossings. This nowhere requires infinitely precise measurements/predictions, even though it is true that you would if you wanted to stand by passively and try to predict it.
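A toy illustration of the prediction-versus-control point, as a sketch only: it uses the logistic map rather than pinball, and a simple linear feedback that cancels the local slope at an unstable fixed point, applied only inside a small window around that point. The map, the window size, and all names are assumptions chosen for illustration; nothing here comes from the post or the comment.

```python
GROWTH = 4.0                                # logistic map parameter (chaotic regime)
X_STAR = 1.0 - 1.0 / GROWTH                 # unstable fixed point, 0.75
SLOPE = GROWTH * (1.0 - 2.0 * X_STAR)       # map derivative at X_STAR, -2.0

def logistic(x):
    return GROWTH * x * (1.0 - x)

def step(x, controlled, window=0.01):
    """One iteration. If controlled and x is within `window` of X_STAR,
    add a small nudge u that cancels the linear part of the deviation."""
    u = 0.0
    if controlled and abs(x - X_STAR) < window:
        u = -SLOPE * (x - X_STAR)
    return logistic(x) + u, u

def run(x0, n_steps, controlled):
    """Iterate n_steps times; return the final x and the largest |u| applied."""
    x, max_u = x0, 0.0
    for _ in range(n_steps):
        x, u = step(x, controlled)
        max_u = max(max_u, abs(u))
    return x, max_u

x_free, _ = run(0.755, 3, controlled=False)
x_ctrl, max_u = run(0.755, 6, controlled=True)
print("uncontrolled deviation after 3 steps:", abs(x_free - X_STAR))
print("controlled deviation after 6 steps:  ", abs(x_ctrl - X_STAR))
print("largest nudge applied:               ", max_u)
```

Starting 0.005 away from the fixed point, the uncontrolled orbit roughly doubles its deviation each step, while nudges of size at most about 0.01 hold the controlled orbit at the fixed point. The controller needs only the current state and the local slope, not a long-range forecast.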

Nothing new here to the actual experts in chaos theory, but worth pointing out: discussions of bouncing balls diverging after n bounces are a good starting point, but they really should not be the discussion's ending point, any more than a discussion of the worst-case complexity of NP problems should end there (which leads to bad arguments like 'NP proves AI is impossible or can't be dangerous').

Another observation worth making is that speed (of control) and quality (of prediction) and power (of intervention) are substitutes: if you have very fast control, you can get away with low-quality prediction and small weak interventions, and vice-versa.

Would you argue that fusion tokamaks [LW · GW] are impossible, even in principle, because high-temperature plasmas are extremely chaotic systems which would dissipate in milliseconds? No, because the plasmas are controlled by high-speed systems. Those systems aren't very smart (which is why there's research into applying smarter predictors like neural nets), but they are very fast.

There are loads of cool robot demos like the octobouncer which show that you can control 'chaotic' phenomena like bouncing balls with scarcely any action if you are precise and/or fast enough. (For example, the Ishikawa lab has for over a decade been publishing demos of 'what if impossible-for-a-robot-task-X but with a really high-speed arm/hand?' eg. why bother learning theory of mind to predict rock-paper-scissors when Ishikawa can use a high-speed camera+hand to cheat? And I saw a fun inverted pendulum demo on YT last week which makes that point as well.*) With superhuman reaction time, actuators can work on all sorts of (what would be) wildly nonlinear phenomena despite crude controllers simply because they are so fast that at the time-scale that's relevant, the phenomena never have a chance to diverge far from really simple linear dynamic approximations or require infeasibly heavy-handed interventions. And since they never escape into the nonlinearities (much less other attractors), they are both easy to predict and to control. And if you don't need to deal with them, then value-equivalent model-based RL agents learning end-to-end will not bother to learn to predict them accurately at all, because that is predicting the wrong thing...

All of this comes up in lots of fields and has various niches in chaos theory and control theory and decision theory and RL etc, but I think you get the idea - whether it's chaos, Godel, Turing, complexity, or Arrow, when it comes to impossibility proofs, there is always less there than meets the eye, and it is more likely you have made a mistake in your interpretation or proof than some esoteric a priori argument has all the fascinating real-world empirical consequences you over-interpret it to have.

* I think I might have also once seen this exact example of repeated-bouncing-balls done with robot control demonstrating how even with apparently-stationary plates (maybe using electromagnets instead of tilting?), a tiny bit of high-speed computer vision control could beat the chaos and make it bounce accurately many more times than the naive calculation says is possible, but I can't immediately refind it.

And in this respect, pinball is a good example for AI risk: people try to analyze these problems as if life was a game like playing a carefully-constructed pinball machine for fun where you will play it as intended and obeying all the artificial rules and avoiding 'boring' games and delighting in proofs like 'you can't predict a pinball machine after n bounces because of quantum mechanics, for which I have written a beautiful tutorial on LW proving why'; while in real life, if you actually had to maximize the scores on a pinball machine for some bizarre reason, you'd unsee the rules and begin making disjunctive plans to hack the pinball machine entirely. It might be a fun brainstorming exercise for a group to try to come up with 100 hacks, try to taxonomize them, and analogize each category to AI failure modes.

Just off the top of my head: one could pay a pro to play for you instead, use magnetic balls to maneuver from the outside or magnets to tamper with the tilt sensor, snake objects into it through the coin slot or any other tiny hole to block off paths, use a credit card to open up the back and enable 'operator mode' to play without coins or with increased bonuses, hotwire it, insert new chips to reprogram it, glue fake high-score numbers over the real display numbers, replace it with a fake pinball machine entirely, photoshop a photo of high scores or yourself into a photo of someone else's high scores or copy the same high score repeatedly into 'different' photos, etc. That's 9 hacks there, none of which can be disproven by 'chaos theory' (or 'Godel', or 'Turing', or 'P!=NP', or...).

See also: temporal scaling laws [LW(p) · GW(p)], where Hilton et al 2023 offers a first stab at properly conceptualizing how we should think of the relationship of prediction & 'difficulty' of environments for RL agents, finding two distinguishable concepts with different scaling:

One key takeaway is that the scaling multiplier is not simply proportional to the horizon length, as one might have naively expected. Instead, the number of samples required is the sum of two components, one that is inherent to the task and independent of the horizon length, and one that is proportional to the horizon length.

(One implication here being that if you think about modeling the total 'difficulty' of pinball like this, you might find that the 'inherent' difficulty is high, but then the 'horizon length' difficulty is necessarily low: it is very difficult to master mechanical control of pinballs and understand the environment at all with all its fancy rules & bonuses & special-cases, but once you do, you have high control of the game and then it doesn't matter much what would (not) happen 100 steps down the line due to chaos (or anything else). Your 'regret' from not being omniscient is technically non-zero & still contributing to your regret, but too small to care about because it's so unlikely to matter. And this may well describe most real-world tasks.)

† model-based agents want to learn to be value-equivalent. A trivial counterexample to any kind of universal claim about chaos etc: imagine a MDP in which action #1 leads to some totally chaotic environment unpredictable even a step in advance, and where action #2 earns $MAX_REWARD; obviously, RL agents will learn the optimal policy of simply always executing action #2 and need learn nothing whatsoever about the chaotic environment behind action #1.
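The counterexample in this footnote can be sketched in a few lines. This is an illustration under loud assumptions: a two-armed bandit rather than a full MDP, a plain sample-average greedy learner rather than a model-based agent, and a logistic-map orbit standing in for the "totally chaotic environment". All names are hypothetical.

```python
MAX_REWARD = 1.0

class ChaoticArm:
    """Action #1: reward driven by a chaotic logistic-map orbit, in [-0.5, 0.5]."""
    def __init__(self, x0=0.3):
        self.x = x0

    def pull(self):
        self.x = 4.0 * self.x * (1.0 - self.x)
        return self.x - 0.5

def run_greedy(n_steps):
    """Try each action once, then always take the action with the higher
    average observed reward. Returns (pull counts, total reward)."""
    chaotic = ChaoticArm()
    totals = [0.0, 0.0]
    pulls = [0, 0]
    total_reward = 0.0
    for t in range(n_steps):
        if t < 2:
            action = t                      # initial exploration: one pull each
        else:
            means = [totals[a] / pulls[a] for a in (0, 1)]
            action = 0 if means[0] > means[1] else 1
        reward = chaotic.pull() if action == 0 else MAX_REWARD
        totals[action] += reward
        pulls[action] += 1
        total_reward += reward
    return pulls, total_reward

pulls, total = run_greedy(100)
print("pulls per action:", pulls, "total reward:", total)
```

The learner samples the chaotic action once, never learns anything about its dynamics, and still collects essentially the maximum reward.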

↑ comment by AnthonyC · 2023-03-30T14:38:10.689Z · LW(p) · GW(p)

Presumably that's because pinball is a game with imposed rules. Physically the control solutions could be installing more flippers, or rearranging the machine components. Or if you want to forbid altering the machine, maybe installing an electromagnet that magnetizes the ball and then drags it around directly, or even just stays stationary at the bottom to keep it from falling between the flippers.

comment by TekhneMakre · 2023-03-30T00:53:22.683Z · LW(p) · GW(p)

To demonstrate how chaos theory imposes some limits on the skill of an arbitrary intelligence, I will also look at a game: pinball.

If you watch a few games of professional pinball, the answer becomes clear. The strategy typically is to catch the ball with the flippers, then to carefully hit the balls so that it takes a particular ramp which scores a lot of points and then returns the ball to the flippers. Professional pinball players try to avoid the parts of the board where the motion is chaotic. This is a good strategy because, if you cannot predict the motion of the ball, you cannot guarantee that it will not fall directly between the flippers where you cannot save it. Instead, professional pinball players score points mostly from the non-chaotic regions where it is possible to predict the motion of the pinball.

I don't see anything you wrote that I literally disagree with. But the emphasis seems weird. "You can't predict everything about a game of pinball" would seem less weird. But what an agent wants to predict about something, what counts as good prediction, depends on what the agent is trying to do. As you point out, there are predictable parts, and I claim the world is very much like this: there are many domains in which there are many good-enough predictabilities, such that a superintelligence will not have a human-recognizable skill ceiling.

comment by Noosphere89 (sharmake-farah) · 2025-01-07T20:21:03.917Z · LW(p) · GW(p)

This is a surprisingly easy post to review. My take is that the core mathematical result is accurate, given the assumptions: it's very hard to predict exactly where the pinball will go next without infinite compute, even over a surprisingly small number of bounces. But the inferred result, that this means there are limits to what an intelligence could do in controlling the world, is wrong, because the difficulty of predicting something is unrelated to the difficulty of controlling it. More importantly, the claim below is wrong, it's easy to give a real-world example of why it's wrong, and it is a core claim about the limits of AI in this piece:

If you cannot predict what will happen, you cannot plan a strategy that allows you to perform consistently well.

Gwern's tweet provides a very easy counterexample here (quoting it rather than linking, since the tweets are protected):

Their own pinball example refutes them. (Pinball pros can play pinball and not lose a ball for literally days; the main limitation is their own physical & mental fatigue.)

More generally, this comment is useful:

https://www.lesswrong.com/posts/epgCXiv3Yy3qgcsys/you-can-t-predict-a-game-of-pinball#wjLFhiWWacByqyu6a [LW(p) · GW(p)]

The fact that there's any correct mathematics at all is why I'd not give it a -9, but the post is still misleading enough that it deserves a -4 vote.

comment by [deleted] · 2023-03-30T00:47:20.092Z · LW(p) · GW(p)

See how the best you can do is atom level, both for perception and control of the launch?

The bits of precision there limit how useful an intelligence can be.

For general AIs who have read all human knowledge it's likely a similar limit. We humans might in one document say that spinach is 50 percent likely to cause some medical condition, with a confidence interval of 20 percent.

So interval of 30, 70.

Suppose you read every document on the subject that humans know of, analyzed blogs posted online, or data-mined grocery store receipts and correlated them with medical records.

You might be able to narrow the interval above to say 56, 68 percent but no better. And you're a superintelligence.

Made-up numbers, but I think the principle holds. You need tools and direct measurements to do better. Learning from human text is like playing pinball by telling someone else when to press the flipper.

Replies from: gwern↑ comment by gwern · 2023-03-30T01:27:37.039Z · LW(p) · GW(p)

The bits of precision there limit how useful an intelligence can be.

No, it doesn't in any meaningful sense. You may have 'aleatoric' uncertainty in any specific medical case, but there is no relevant universal limit on how much 'epistemic uncertainty' can be reduced*, or what can be learned in general and what those capabilities are, or what can be learned in silico; this will be domain-specific and constrained by compute etc, and certainly you have no idea where the limit will wind up being. This would be like saying that 'MuZero observes some games between pro players and cannot in many board positions predict who will win with 100% certainty, therefore, MuZero cannot learn superhuman Go'. The former is completely factual (IIRC, the predictive performance of AlphaZero or MuZero in predicting outcomes of human pro games actually gets worse after a certain Elo level), but is a non sequitur to the latter, which is factually false. Whatever the limit was there in playing Go, it turned out to be far beyond anything that mattered. And you have no idea what the limit is elsewhere.

* terminology reminder: 'aleatoric uncertainty' is whether a fair coin will come up heads next time; 'epistemic uncertainty' is whether you know it's a fair coin. It is worth noting that 'uncertainty is in the map, not the territory' so one agent's aleatoric uncertainty may simply be another agent's epistemic uncertainty: you may not be able to predict a fair coin and that's aleatoric for you, but the robot with the high-speed camera watching the toss can and that's just epistemic for it.
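
The distinction in the footnote can be made concrete with a small sketch. It assumes a Beta(1, 1) prior over a coin's bias, which is an illustrative choice and not something from the thread, and the helper names are hypothetical. The posterior spread over the bias (epistemic) shrinks as flips accumulate, while the spread of a single fair flip (aleatoric, for an observer without the high-speed camera) stays fixed.

```python
import math


def beta_posterior_std(heads, tails, prior_a=1.0, prior_b=1.0):
    """Std. dev. of the Beta posterior over the coin's bias (epistemic part).

    Assumes a Beta(prior_a, prior_b) prior; Beta(1, 1) is uniform.
    """
    a = prior_a + heads
    b = prior_b + tails
    return math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))


def single_flip_std(p):
    """Std. dev. of one flip's outcome given bias p (aleatoric part)."""
    return math.sqrt(p * (1.0 - p))


if __name__ == "__main__":
    # Hypothetical data: exactly half of the observed flips are heads.
    for n in (10, 1_000, 100_000):
        epistemic = beta_posterior_std(n // 2, n // 2)
        aleatoric = single_flip_std(0.5)
        print(f"{n:>7} flips: bias uncertainty {epistemic:.4f}, "
              f"per-flip spread {aleatoric:.4f}")
```

More data narrows the first number without bound, but does nothing to the second unless the observer gains a better measurement of each individual toss.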

Replies from: None↑ comment by [deleted] · 2023-03-30T03:59:47.106Z · LW(p) · GW(p)

Go worked because it's a completely defined game with perfect information.

I am not claiming that you can't arbitrarily scale intelligence if you have the information. Though it will have diminishing returns - if you reframe the problem as fractional Go stones instead of "win" and "loss" you will see asymptotically diminishing returns on intelligence.

Meaning that an infinite superintelligence limited in action only to Go moves still cannot beat an average human player if that player gets a sufficiently large number of extra stones.

This example is from the real world where information is imperfect, and even for pinball - an almost perfectly deterministic game - the ceiling on the utility of intelligence dropped a lot.

For some tasks humans do the ceiling may be so low superintelligence isn't even possible.

This has profound consequences. It means most AGI doom scenarios are wrong. The machine cannot make a bioweapon to kill everyone or nanotechnology or anything else without first having the information needed to do so and the actuators. (Note that in the pinball example, if you have a realistic robot on the pinball machine with a finite control packet rate, your precision is far coarser than atomic scale.)

This makes control of AGIs possible because you don't need to understand the fire, just the fuel supply.
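
As a rough illustration of the precision point in the parenthetical above, the sketch below estimates how many bounces pass before the position uncertainty reaches the size of a disk. The growth factor of about 5 per bounce is taken from the post; the disk radius of 2 cm and the function name `bounces_until_unpredictable` are assumptions chosen for illustration.

```python
import math

GROWTH_PER_BOUNCE = 5.0  # approximate factor stated in the post
DISK_RADIUS_M = 0.02     # assumption: disks on the order of centimetres


def bounces_until_unpredictable(initial_uncertainty_m,
                                disk_radius_m=DISK_RADIUS_M,
                                growth=GROWTH_PER_BOUNCE):
    """Smallest n with initial_uncertainty * growth**n >= disk_radius."""
    if initial_uncertainty_m >= disk_radius_m:
        return 0
    return math.ceil(math.log(disk_radius_m / initial_uncertainty_m)
                     / math.log(growth))


if __name__ == "__main__":
    # Launch precisions: atom scale, micrometre, millimetre.
    for label, delta in (("atom (1e-10 m)", 1e-10),
                         ("micrometre (1e-6 m)", 1e-6),
                         ("millimetre (1e-3 m)", 1e-3)):
        print(f"{label}: {bounces_until_unpredictable(delta)} bounces")
```

Under these assumptions, atom-scale precision gives roughly the 12 bounces quoted in the post, while millimetre-scale precision gives only a couple. Because the dependence is logarithmic, each extra predictable bounce costs a factor of about 5 in launch precision.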

Replies from: AnthonyC↑ comment by AnthonyC · 2023-03-30T15:30:23.639Z · LW(p) · GW(p)

I would point out that this is not a new observation, nor is it unknown to the people most concerned about many of those same doom scenarios. There are several reasons why, but most of them boil down to: the AI doesn't need to beat physics, it just needs to beat humans. If you took the best human pinball player, and made it so they never got tired/distracted/sick, they'd win just about every competition they played in. If you also gave them high-precision internal timekeeping to decide when to use the flippers, even more so. Let them play multiple games in different places at once, and suddenly humans lose every pinball competition there is.

Also, you're equivocating between "the ceiling may be so low" for "some tasks," and "most AGI doom scenarios are wrong." Your analogy does not actually show where practical limits are for specific relevant real-world tasks, let alone set a bound for all relevant tasks.

For example, consider that humans can design an mRNA vaccine in 2 days. We may take a year or more to test, manufacture, and approve it, but we can use intelligence alone to design a mechanism to get our cells to manufacture arbitrary proteins. Do you really want to bet the planet that no level of intelligence can get from there to designing a self-replicating virus? And that we're going to actually, successfully, every time, everywhere, forever, constrain AI so that it never persuades anyone to create something it wants based on instructions they don't understand (whether or not they think they do)? The former seems like a small extension of what baseline humans can already do, and the latter seems like an unrealistic pipe dream. Not because your "extra Go stones to start with" point is necessarily wrong, but because humans who aren't worried about doom scenarios are actively trying to give the AIs as many stones as they can.

Replies from: None↑ comment by [deleted] · 2023-03-30T15:58:00.145Z · LW(p) · GW(p)

Do you really want to bet the planet that no level of intelligence can get from there to designing a self-replicating virus

No, but I wouldn't bet that an AI could design a self-replicating virus that killed everyone based solely on computation, without (a lot of) wet lab experiments. There are conflicting goals with that: if the virus kills people too fast, it dies out. Any "timer" mechanism delaying when it attacks is not evolutionarily conserved. The "virus" will simplify itself in each host, dropping any genes that are not helping it right now. (Or it iterates the local possibility space and the particles that spread well to the next host are the ones that escape; this is why Covid would evolve to be harder to stop with masks.)

That's the delta. Sure, if the machine gets access to a large number of wet labs, it could develop arbitrary things. This problem isn't unsolvable, but the possible fixes depend on things humans don't have data on (think sophisticated protein-based error correction mechanisms that stop the virus from being able to mutate, or giving the virus DNA editing tools that leave a time bomb in the hosts and also weaken the immune system, that kinda thing).

That, I think, is the part that the doomsters lack: many of them simply have no knowledge about things outside their narrow domain (math, rationality, CS) and don't even know what they don't know. It's outside their context window. I don't claim to know it all either.

And that we're going to actually, successfully, every time, everywhere, forever, constrain AI so that it never persuades anyone to create something it wants based on instructions they don't understand (whether or not they think they do)

See, the other main point of disagreement I have is how you protect humans from this. I agree: bioweapons are incredibly asymmetric; it takes one release anywhere and it gets everywhere. And I don't think a future where humans have restricted AGI research - say with "pauses" and onerous government restrictions on even experiments - is one where they survive. If they do not even know what AGIs do or how they fail, and have not developed controllable systems that can fight on their side, they will be helpless when these kinds of attacks become possible.

What would a defense look like? Basically hermetically sealed bunkers buried deep away from strategic targets, enough for the entire population of wealthier nations, remotely controlled surrogate robots, fleets of defense drones, nanotechnology sensors that can detect various forms of invisible attacks, millions of drone submarines monitoring the ocean, defense satellites using lasers or microwave weapons, and so on.

What these things have in common is that the amount of labor required to build them at the needed scale is not available to humans. Each thing I mention "costs" a lot; the total "bill" is thousands of times the entire GDP of a wealthy country. The "cost" comes from the human labor needed to mine the materials, design and engineer the devices, build the subcomponents, and carry out inspections. Many of the things mentioned are too fragile to mass produce easily, so there's a lot of hand assembly, and so on.

Eliezer's "demand" https://time.com/6266923/ai-eliezer-yudkowsky-open-letter-not-enough/ is death, I think. "Shut it down" means no defense is possible: the above cannot be built without self-replicating robotics, which require sufficiently general AI (substantially more capable than GPT-4) to cover the breadth of tasks needed to mine materials and manufacture more robots and other things of similar complexity. (This is why we don't have self-replication right now: it's an endless 10% problem. Without general intelligence, every robot has to be programmed essentially by hand, or for a restricted task space (see Amazon and a few others making robotics that are general for limited tasks like picking). And they will encounter "10% of the time" errors extremely often, requiring human labor to intervene. So most tasks today are still done with a lot of human labor; robotics only apply to the most common tasks.)