Summary: "Imagining and building wise machines: The centrality of AI metacognition" by Johnson, Karimi, Bengio, et al.

post by Chris_Leong · 2024-11-11T16:13:26.504Z · LW · GW · 8 commentsThis is a link post for https://arxiv.org/pdf/2411.02478

Contents

Details: Authors: Abstract Notes on this summary Why I Wrote this Summary Summary: What is wisdom? What kinds of intractable problems? Two types of strategies for managing this: Examples of meta-cognitive failures: Would AI Wisdom Resemble Human Wisdom Benefits: Comparison to Alignment: Building Wise AI: Challenges with Benchmarks Why Build Wise AI? Why am I focused on wise AI advisors instead? None 8 comments

Details:

Authors:

Samuel G. B. Johnson, Amir-Hossein Karimi, Yoshua Bengio, Nick Chater, Tobias Gerstenberg, Kate Larson, Sydney Levine, Melanie Mitchell, Iyad Rahwan, Bernhard Schölkopf, Igor Grossmann

Abstract

Recent advances in artificial intelligence (AI) have produced systems capable of increasingly sophisticated performance on cognitive tasks. However, AI systems still struggle in critical ways: unpredictable and novel environments (robustness), lack of transparency in their reasoning (explainability), challenges in communication and commitment (cooperation), and risks due to potential harmful actions (safety). We argue that these shortcomings stem from one overarching failure: AI systems lack wisdom.

Drawing from cognitive and social sciences, we define wisdom as the ability to navigate intractable problems - those that are ambiguous, radically uncertain, novel, chaotic, or computationally explosive - through effective task-level and metacognitive strategies. While AI research has focused on task-level strategies, metacognition - the ability to reflect on and regulate one's thought processes - is underdeveloped in AI systems. In humans, metacognitive strategies such as recognizing the limits of one's knowledge, considering diverse perspectives, and adapting to context are essential for wise decision-making. We propose that integrating metacognitive capabilities into AI systems is crucial for enhancing their robustness, explainability, cooperation, and safety.

By focusing on developing wise AI, we suggest an alternative to aligning AI with specific human values - a task fraught with conceptual and practical difficulties. Instead, wise AI systems can thoughtfully navigate complex situations, account for diverse human values, and avoid harmful actions. We discuss potential approaches to building wise AI, including benchmarking metacognitive abilities and training AI systems to employ wise reasoning. Prioritizing metacognition in AI research will lead to systems that act not only intelligently but also wisely in complex, real-world situations.

Notes on this summary

Some quotes have been reformatted (adding paragraph break, dot points, references changed to footnotes).

I've made a few minor edits to the quotes. For example, I remove the word "secondly" when I'm only quoting one element of a list and adjusting the grammar when converting sentences into a list.

Apologies for the collapsable sections appearing slightly strange. Unfortunately, this seems to be a bug in the forum software when making the title a heading. I could have avoided this, but I decided to prioritise having an accurate table of contents instead.

Why I Wrote this Summary

Firstly, I thought the framing of metacognition as a key component of wisdom missing from current AI systems was insightful and the resulting analysis fruitful.

Secondly, this paper contains some ideas similar to those I discussed in Some Preliminary Notes on the Promise of a Wisdom Explosion. In particular, the authors talk about a "virtuous cycle" in relation to wisdom in the final paragraphs:

By simultaneously promoting robust, explainable, cooperative, and safe AI, these qualities are likely to amplify one another:

- Robustness will facilitate cooperation (by improving confidence from counterparties in its long-term commitments) and safety (by avoiding novel failure modes[1]).

- Explainability will facilitate robustness (by making it easier to human users to intervene in transparent processes) and cooperation (by communicating its reasoning in a way that is checkable by counterparties).

- Cooperation will facilitate explainability (by using accurate theory-of-mind about its users) and safety (by collaboratively implementing values shared within dyads, organizations, and societies).

Wise reasoning, therefore, can lead to a virtuous cycle in AI agents, just as it does in humans. We may not know precisely what form wisdom in AI will take but it must surely be preferable to folly.

Summary:

What is wisdom?

The authors begin with some examples:

Example from Paper: Willa's Children:

Willa’s children are bitterly arguing about money. Willa draws on her life experience to show them why they should instead compromise in the short term and prioritize their sibling relationship in the long term.

Further Examples from Paper

"Daphne is a world-class cardiologist. Nonetheless, she consults with a much more junior colleague when she recognizes that the colleague knows more about a patient’s history than she does"

"Ron is a political consultant who formulates possible scenarios to ensure his candidate will win. To help generate scenarios, he not only imagines best case scenarios, but also imagines that his client has lost the election and considers possible reasons that might have contributed to the loss."

Next they summarise theories of human wisdom.

What theories of human wisdom do they discuss?

They provide further information in the table on page 5.

Five component theories:

• Balance theory: "Deploying knowledge and skills to achieve the common good by"

• Berlin Wisdom Model: "Expertise in important and difficult matters of life"

• MORE Life Experience Model: "Gaining psychological resources via reflection, to cope with life challenges"

• Three-Dimensional Model: "Acquiring and reflecting on life experience to cultivate personality traits"

• Wise Reasoning Model: "Using context-sensitive reasoning to manage important social challenges"

Two consensus models

• Common wisdom model: "A style of social-cognitive processing" involving morality and metacognition

• Integrative Model: "A behavioural repertoire"

These consensus models attempt to find common themes.

Finally, they provide a definition of wisdom for the purposes of this paper:

We define wisdom functionally as the ability to successfully navigate intractable problems— those that do not lend themselves to analytic techniques due to unlearnable probability distributions or incommensurable values[2].

... If life were a series of textbook problems, we would not need to be wise. There would be a correct answer, the requisite information for calculating it would be available, and natural selection would have ruthlessly driven humans to find those answers

What kinds of intractable problems?

(Quoted from the article)

Incommensurable: It features ambiguous goals or values that cannot be compared with one another[3].

Transformative: The outcome of the decision might change one’s preferences, so that there is a clash between one’s present and future values

Radically uncertain. We might not be able to exhaustively list the possible outcomes or assign probabilities to them in a principled way[4].

Chaotic. The data-generating process may have a strong nonlinearity or dependency on initial conditions, making it fundamentally unpredictable[5][6].

Non-stationary. The underlying process may be changing over time, making the probability distribution unlearnable.

Out-of-distribution. The situation is novel, going beyond one’s experience or available data.

Computationally explosive. The optimal response could be calculated with infinite or infeasibly large computational resources, but this is not possible due to resource constraints.

This seems like a reasonable definition to use, though I have to admit I find the term "intractable problems" to be a bit strong for they examples they provided.

Two types of strategies for managing this:

1) Task-level strategies ("used to manage the problem itself") such as heuristics or narratives.

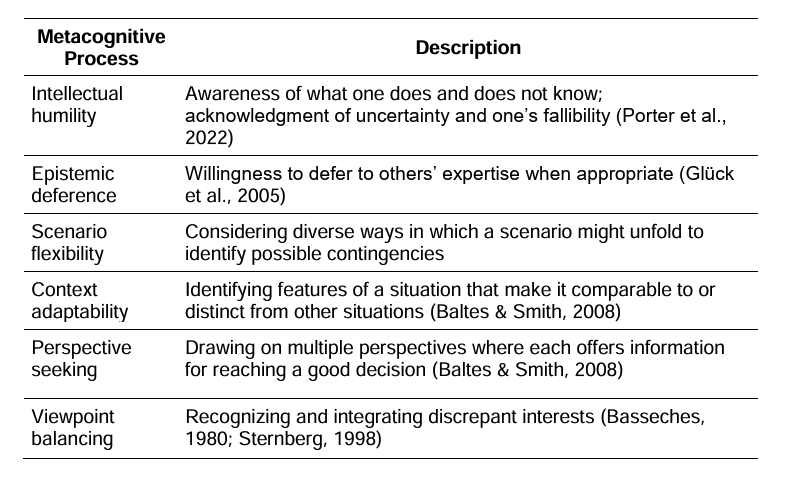

2) Metacognitive strategies ("used to flexibly manage those task-level strategies"):

They argue that although AI has made lots of progress with task-level strategies, it often neglects metacognitive strategies[7]. For this reason, their paper focuses on the latter.

Examples of meta-cognitive failures:

The authors argue current ML systems possess a "metacognitive myopia"[8] as shown by the following examples:

Would AI Wisdom Resemble Human Wisdom

Potential differences:

• AIs have different computational constraints. Humans need to "economize scarce cognitive resources" which incentivizes us to use heuristics more.

• Humans exist in a society that allows us to "outsource... cognition to the social environment" such as through division of labor.

Reasons why human and AI wisdom might converge:

• Resource difference might be "more a matter of degree than kind"

• Heuristics are often about handling a lack of information rather than computational constraints

• AI's might "join our (social) milieu"

Benefits:

- Robustness: They argue that metacognition would lead to AI's rejecting strategies that produce "wildly discrepant results on different occasions", allowing it to identify biases and improve the ability of the AI to adapt to new environments.

- Co-operation:

- They argue "wise metacognition is required to effectively manage these task-level mechanisms for social understanding, communication and commitment, which may be one factor underlying the empirical observation that wise people tend to act more prosocially".

- They also argue that wisdom could enable the design of structures (such as constitutions, markets, and organizations) that enhance cooperation in society.

- Safety:

- They note the difficulty of "exhaustively specify goals in advance"[11] and they suggest that wisdom could assist AI's to emulate the human strategy of navigating goal hierarchies.

- They also argue that the greatest risk is currently systems not working well and that machine metacognition is useful for this, in particular, "AIs with appropriately calibrated confidence can target the most likely safety risks; appropriate self-models would help AIs to anticipate potential failures; and continual monitoring of its performance would facilitate recognition of high-risk moments and permit learning from experience."

- Explainability: They believe that metacognition would allow the AI to explain its decisions[12].

Possible Effects on Instrumental Convergence

In the final section they suggest that building machines wiser than humans might prevent instrumental convergence[13] as "empirically, humans with wise metacognition show greater orientation toward the common good". I have to admit skepticism as I believe in the orthogonality thesis and I see no reason to believe it wouldn't apply to wisdom as well. That said, there may be value in nudging an AI towards being wise in terms of improving alignment, even if it is far from a complete solution.

Comparison to Alignment:

They identify three main problems for alignment:

- Humans don't uniformly prioritize following norms[14]

- Norms vary sharply across cultures

- Even if norms were uniform, they may not be morally correct

Given these conceptual problems, alignment may not be a feasible or even desirable engineering goal. The fundamental challenge is how AI agents can live among us—and for this, implementing wise AI reasoning may be a more promising approach. Aligning AI systems to the right metacognitive strategies rather than to the “right” values might be both conceptually cleaner and more practically feasible.

Inaction Example

(Quoted from the paper)

Task-level strategies may include heuristics such as a bias toward inaction: When in doubt about whether a candidate action could produce harm according to one of several possibly conflicting human norms, by default do not execute the action. Yet wise metacognitive monitoring and control will be crucial for regulating such task-level strategies. In the ‘inaction bias’ strategy, for example, a requirement is to learn what those conflicting perspectives are and to avoid overconfidence.

Building Wise AI:

Section 4.1 discusses the potential for benchmarking AI wisdom. They seem to be in favor of starting with tasks that measure wise reasoning in humans and scoring their reflections based on predefined criteria[15]. That said, whilst they see benchmarking as a "crucial start" they also assert that " there is no substitute for interaction with the real world". This leads them to suggest a slow rollout to give us time to evaluate whether their decisions really were wise.

They also suggest two possibilities for training wise models[16]:

Proposal A:

This is a two-step process:

- Train a model for wise strategy selection directly (e.g., to correctly identify when to be intellectually humble)

- Train them to use those strategies correctly (e.g., to carry out intellectual humble behavior).

Proposal B:

- Evaluate whether models are able to plausibly explain their metacognitive strategies in benchmark cases

- If this is the case, then simultaneously train strategies and outputs (e.g., training the model to identify the situation as one that calls for intellectual humility and to reason accordingly[17]).

One worry I have is that sometimes wisdom involves just knowing what to do without being able to explain it. In other words, wisdom often involves system 1 rather than system 2.

Challenges with Benchmarks

Memorization: Benchmark results can be inflated by memorizing patterns in a way that doesn't generalize outside of the training distribution

Evaluating the process is hard: They claim wisdom depends on the underlying reasoning rather than just success[18]. Reasoning is harder to evaluate than the correct answer.

Producing a Realistic Context: It may be challenging to produce artificial examples as the AI might have access to much more information in the real world

Why Build Wise AI?

First, it is not clear what the alternative is. Compared to halting all progress on AI, building wise AI may introduce added risks alongside added benefits. But compared to the status quo—advancing task-level capabilities at a breakneck pace with little effort to develop wise metacognition—the attempt to make machines intellectually humble, context-sensitive, and adept at balancing viewpoints seems clearly preferable.

The authors seem to primarily or even exclusively imagine wise AIs acting directly in the world. In contrast, my primary interest is in wise AI advisors [LW · GW] working in concert with humans.

Why am I focused on wise AI advisors instead?

I'm personally focused on cybernetic/centaur systems that combine AI advisors with humans because this allows the humans to compensate for the weaknesses of the AI.

This has a few key advantages:

• It provides an additional layer of safety/security.

• It allows us to benefit from such systems earlier than we would be able to otherwise

• If we decide advisors are insufficient and that we want to train autonomously acting wise agents, AI advisors could help us with that.'

- ^

Johnson, B. (2022). Metacognition for artificial intelligence system safety: An approach to safe and desired behavior. Safety Science, 151, 105743.

- ^

See the collapsable section immediately underneath for a larger list.

- ^

Walasek, L., & Brown, G. D. (2023). Incomparability and incommensurability in choice: No common currency of value? Perspectives on Psychological Science, 17456916231192828.

- ^

Kay, J., & King, M. (2020). Radical uncertainty: Decision-making beyond the numbers. New York, NY: Norton.

- ^

They seem to be pointing to Knightian uncertainty

- ^

Lorenz, E. (1993). The essence of chaos. Seattle, WA: University of Washington Press.

- ^

They provide some examples at the beginning of section 2 which help justify their focus on metacognition. For example: "Willa’s children are bitterly arguing about money. Willa draws on her life experience to show them why they should instead compromise in the short term and prioritize their sibling relationship in the long term". Whilst this might not initially appear related to metacognition, I suspect that the authors see this as related to "perspective seeking", one of the six metacognitive processes they highlight.

- ^

Scholten, F., Rebholz, T. R., & Hütter, M. (2024). Metacognitive myopia in Large Language Models. arXiv preprint arXiv:2408.05568.

- ^

Li, Y., Huang, Y., Lin, Y., Wu, S., Wan, Y., & Sun, L. (2024). I think, therefore I am: Awareness in Large Language Models. arXiv preprint arXiv:2401.17882.

- ^

Cash, T. N., Oppenheimer, D. M., & Christie, S. Quantifying UncertAInty: Testing the Accuracy of LLMs’ Confidence Judgments. Preprint.

- ^

Eliezer Yudkowsky's view seems to be that this specification pretty much has to be exhaustive [LW · GW], though others are less pessimistic about partial alignment.

- ^

I agree that metacognition seems important for explanability, but my intuition is that wise decisions are often challenging or even impossible to make legible. See Tentatively against making AIs 'wise' [EA · GW], which won a runner up prize in the AI Impacts Essay competition on the Automation of Wisdom and Philosophy

- ^

This paper don't use the term "instrumental convergence", so this statement involves a slight bit of interpretation on my part.

- ^

The first sentence of this section reads "First, humans are not even aligned with each other". This is confusing since the second paragraph seems to suggest that their point is more about humans not always following norms, which is what I've summarised their point as.

- ^

I'm skeptical that using pre-defined criteria is a good way of measuring wisdom.

- ^

The labels "Proposal A" and "Proposal B" aren't in the paper.

- ^

For example, Lampinen, A. K., Roy, N., Dasgupta, I., Chan, S. C., Tam, A., Mcclelland, J., ... & Hill, F. (2022, June). Tell me why! explanations support learning relational and causal structure. In International Conference on Machine Learning (pp. 11868-11890).

- ^

This is less significant in my worldview as I see wisdom as often being just about knowing the right answer without knowing why you know.

8 comments

Comments sorted by top scores.

comment by Seth Herd · 2024-11-11T18:45:23.482Z · LW(p) · GW(p)

I read the whole thing because of its similarity to my proposals about metacognition as an aid to both capabilities [LW · GW] and alignment [AF · GW] in language model agents.

In this and my work, metacognition is a way to keep AI from doing the wrong thing (from the AIs perspective). They explicitly do not address the broader alignment problem of AIs wanting the wrong things (from humans' perspective).

They note that "wiser" humans are more prone to serve the common good, by taking more perspectives into account. They wisely do not propose wisdom as a solution to the problem of defining human values or beneficial action from an AI. Wisdom here is an aid to fulfilling your values, not a definition of those values. Their presentation is a bit muddled on this issue, but I think their final sections on the broader alignment problem make this scoping clear.

My proposal of a metacognitive "internal review" [AF · GW] or "System 2 alignment check" shares this weakness. It doesn't address the right thing to point an AGI at; it merely shores up a couple of possible routes to goal mis-specification.

This article explicitly refuses to grapple with this problem:

3.4.1. Rethinking AI alignment

With respect to the broader goal of AI alignment, we are sympathetic to the goal but question this definition of the problem. Ultimately safe AI may be at least as much about constraining the power of AI systems within human institutions, rather than aligning their goals.

I think limiting the power of AI systems within human institutions is only sensible if you're thinking of tool AI or weak AGI; thinking you'll constrain superhuman AIs seems like obviously a fool's errand. I think this proposal is meant to apply to AI, not ever-improving AGI. Which is fine, if we have a long time between transformative AI and real AGI.

I think it would be wildly foolish to assume we have that gap between important AI and real AGI [LW · GW]. A highly competent assistant may soon be your new boss.

I have a different way to duck the problem of specifying complex and possibly fragile human values: make the AGI's central goal to merely follow instructions. Something smarter than you wanting nothing more than to follow your instructions is counterintuitive, but I think it's both consistent, and in-retrospect obvious; I think not only is this alignment target safer, but far more likely for our first AGIs [LW · GW]. People are going to want the first semi-sapient AGIs to follow instructions, just like LLMs do, not make their own judgments about values or ethics. And once we've started down that path, there will be no immediate reason to tackle the full value alignment problem.

(In the longer term, we'll probably want to use instruction-following as a stepping-stone to full value alignment [LW · GW], since instruction-following superintelligence would eventually fall into the wrong hands and receive some really awful instructions. But surpassing human intelligence and agency doesn't necessitate shooting for full value alignment right away.)

A final note on the authors' attitudes toward alignment: I also read it because I noted Yoshua Bengio and Melanie Mitchell among the authors. It's what I'd expect from Mitchell, who has steadfastly refused to address the alignment problem, in part because she has long timelines, and in part because she believes in a "fallacy of dumb superintelligence" (I point out how she goes wrong in The (partial) fallacy of dumb superintelligence [LW · GW]).

I'm disappointed to see Bengio lend his name to this refusal to grapple with the larger alignment problem. I hope this doesn't signal a dedication to this approach. I had hoped for more from him.

Replies from: Chris_Leong↑ comment by Chris_Leong · 2025-03-23T16:58:48.853Z · LW(p) · GW(p)

I've written up an short-form argument for focusing on Wise AI advisors [LW · GW]. I'll note that my perspective is different from that taken in the paper. I'm primarily interested in AI as advisors, whilst the authors focus more on AI acting directly in the world.

Wisdom here is an aid to fulfilling your values, not a definition of those values

I agree that this doesn't provide a definition of these values. Wise AI advisors could be helpful for figuring out your values, much like how a wise human would be helpful for this.

comment by AnthonyC · 2024-11-11T18:11:45.532Z · LW(p) · GW(p)

Over time I am increasingly wondering how much these shortcomings on cognitive tasks are a matter of evaluators overestimating the capabilities of humans, while failing to provide AI systems with the level of guidance, training, feedback, and tools that a human would get.

Replies from: Seth Herd, Chris_Leong↑ comment by Seth Herd · 2024-11-11T19:10:34.315Z · LW(p) · GW(p)

I think that's one issue; LLMs don't get the same types of guidance, etc. that humans get; they get a lot of training and RL feedback, but it's structured very differently.

I think this particular article gets another major factor right, where most analyses overlook it: LLMs by default don't do metacognitive checks on their thinking. This is a huge factor in humans appearing as smart as we do. We make a variety of mistakes in our first guesses (system 1 thinking) that can be found and corrected with sufficient reflection (system 2 thinking). Adding more of this to LLM agents is likely to be a major source of capabilities improvements. The focus on increasing "9s of reliability" is a very CS approach; humans just make tons of mistakes and then catch many of the important ones; LLMs sort of copy their cognition from humans, so they can benefit from the same approach - but they don't do much of it by default. Scripting it in to LLM agents is going to at least help, and it may help a lot.

↑ comment by Chris_Leong · 2024-11-12T04:56:57.348Z · LW(p) · GW(p)

That's a fascinating perspective.

Replies from: AnthonyC↑ comment by AnthonyC · 2024-11-12T10:31:16.270Z · LW(p) · GW(p)

I think it is at least somewhat in line with your post and what @Seth Herd said in reply above.

Like, we talk about LLM hallucinations, but most humans still don't really grok how unreliable things like eyewitness testimony are. And we know how poorly calibrated humans are about our own factual beliefs, or the success probability of our plans. I've also had cases where coworkers complain about low quality LLM outputs, and when I ask to review the transcripts, it turns out the LLM was right, and they were overconfidently dismissing its answer as nonsensical.

Or, we used to talk about math being hard for LLMs, but that disappeared almost as soon as we gave them access to code/calculators. I think most people interested in AI are overestimating how bad most other people are at mental math.

Replies from: Chris_Leong↑ comment by Chris_Leong · 2024-11-12T11:42:11.183Z · LW(p) · GW(p)

I guess I was thinking about this in terms of getting maximal value out of wise AI advisers. The notion that comparisons might be unfair didn't even enter my mind, even though that isn't too many reasoning steps away from where I was.