The Internal Model Principle: A Straightforward Explanation

post by Alfred Harwood · 2025-04-12T10:58:51.479Z · LW · GW · 1 comments

This post was written during the Dovetail research fellowship. Thanks to Alex, Dalcy, and Jose for reading and commenting on the draft.

The Internal Model Principle (IMP) is often stated as "a feedback regulator must incorporate a dynamic model of its environment in its internal structure", which is one of those sentences where every word needs a footnote. Recently, I have been trying to understand the IMP, to see if it can tell us anything useful for understanding the Agent-like Structure Problem. In particular, I was interested in whether the IMP can be considered as a selection theorem. In this post, I will focus on explaining the theorem itself and save its application to the Agent-like Structure Problem for future posts[1].

I have written this post to summarise what I understand of the Internal Model Principle and I have tried to emphasise intuitive explanations. For a more detailed and mathematically formal distillation of the IMP, I recommend Jose's post on the subject.

This post focuses on the 'Abstract Internal Model Principle' and is based on the paper 'Towards an Abstract Internal Model Principle' by Wonham and the first chapter of the book 'Supervisory Control of Discrete-Event Systems' by Wonham and Cai. There also exists a version of the IMP that is framed in the more traditional language of control theory (using differential equations, transfer functions etc.) which is described in another paper, but I will not focus on it here. The authors imply that this version of the IMP is just a special case of the Abstract IMP but I haven't verified this. From now on, I will use the term 'IMP' to refer to the Abstract IMP.

The mathematical prerequisites for reading this post are roughly 'knows what a set is' and 'knows what a function is'[2]. The paper and book chapter use a lot of algebraic formalism and lattice theory notation in order to ~~look intimidating~~ be mathematically rigorous. The book chapter is also used to introduce a lot of other concepts for use later in the book but which aren't strictly necessary for just understanding the IMP. I think that by sacrificing these elements we can buy a lot of clarity at the cost of very little rigour. By explaining the IMP in this way, I hope that we can see what it is actually saying, rather than being blinded by mathematical notation.

Let's begin!

Two Sentence Summary

Before going through the proof, I will give a high-level two-sentence summary of the result so that you can see where it is going:

If we have two dynamical systems which jointly evolve and we enforce that one of them is autonomous, then there is some level of coarse-graining at which the two systems are isomorphic. If you call the autonomous system a 'controller' and the other system the 'environment' then you can say that this isomorphism is a 'model' and that the controller is 'modelling' the environment.

In this summary, the first sentence captures the mathematical theorem of the IMP and the second sentence captures its interpretation when applied to control theory.

If this doesn't make sense to you, don't worry, I will explain it all in much more detail. If this does make sense to you and you can think of ways in which it is unsatisfactory, I probably agree with you[3]. But, as stated earlier, this post is just intended to explain the result. I will not be discussing or arguing its usefulness here.

The Setup

There are two parts to the setup considered in the IMP, which I will call the 'environment' and the 'controller'. The original paper actually introduces a more fine-grained (and arguably more interesting) description of the setup, involving separate characterisations of the 'exosystem', 'controller' and 'plant' but as far as I can tell, these distinctions are abandoned shortly after they are introduced and they do not play any crucial role in the proof. As a result my description will just use the 'environment' and 'controller'. I will use the word 'system' to refer to the 'total' (ie. joint) environment-controller system.

The set of controller states is denoted $W$ and the set of joint environment-controller states is denoted $X$[4]. For completeness, we might also want to describe the set of environment states as $E$, but surprisingly this set doesn't play much of a role in the proof.

While we might want to represent the joint environment-controller state in some exotic way, a typical way is as a pair:

$$x = (e, w)$$

where $x \in X$, $e \in E$ and $w \in W$. We will use $\gamma: X \to W$ to denote the mapping which takes us from the joint pair to the controller state alone. Using the above representation, the mapping $\gamma$ is the projection:

$$\gamma(x) = \gamma((e, w)) = w$$

We assume that the joint environment-controller variable evolves according to an evolution rule given by the map $\alpha: X \to X$. It's worth briefly considering what it means for the joint environment-controller state to evolve deterministically according to a map. Note that, in this representation, there is no explicit 'action' taken by the controller which causes the system to evolve. Similarly, there is no explicit causal effect of the system on the controller. Any effect that the controller has on the system (or vice versa) is bundled up into $\alpha$. Because of this, situations where the joint environment-controller state is the same, but the controller takes a different 'action', would be described by a different mapping $\alpha$. Implicitly, this formalism implies that the controller is pursuing a deterministic policy and that the environment responds deterministically to the controller.

This means that the joint system can be viewed as a discrete-time, deterministic, dynamical system. If we denote the system state at time $t$ as $x(t)$, then the system is governed by the evolution rule

$$x(t+1) = \alpha(x(t))$$
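As a concrete illustration, here is a minimal Python sketch of a joint system evolving under a single deterministic map. The particular system (two counters modulo 3) and the names `alpha`, `gamma` and `trajectory` are assumptions chosen purely for illustration; they do not come from the paper.

```python
# Toy joint system (an illustrative assumption): the environment e and the
# controller w are both counters modulo 3, and a joint state is the pair (e, w).

def alpha(x):
    """Joint evolution rule: maps a joint state to the next joint state."""
    e, w = x
    next_e = (e + 1) % 3
    # The controller steps on its own when it matches the environment,
    # and otherwise jumps to where the environment is heading.
    next_w = (w + 1) % 3 if w == e else next_e
    return (next_e, next_w)

def gamma(x):
    """Projection from a joint state to the controller state alone."""
    return x[1]

def trajectory(x0, steps):
    """Return the list of joint states from time 0 to time `steps`."""
    states = [x0]
    for _ in range(steps):
        states.append(alpha(states[-1]))
    return states

states = trajectory((0, 2), 4)
controller_states = [gamma(x) for x in states]
```

Note that there is no separate 'action' variable anywhere in this sketch: whatever the controller does to the environment, and vice versa, is folded into the single function `alpha`.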

The Punchline of the IMP

The main mathematical result of the IMP comes in the form of the following theorem(s). Suppose that the system dynamics are such that the joint environment-controller system always stays in a set of 'good' states. This is the criterion used to say whether the controller is doing a good job. If this is the case then (subject to some important assumptions, discussed in the next section) the following hold:

- There exists a unique map $\alpha_W: W \to W$ which governs the autonomous evolution of the controller. This map is uniquely determined by $\alpha$, $\gamma$ and the set of 'good' states $K$.

- Let $\tilde{K} \subset K$ be the subset of good states where the system is kept. Then there is an injective (one-to-one) mapping between $\tilde{K}$ and the controller states. In other words, each good state in the set where the system remains has a unique controller state.

- $\alpha_W \circ \tilde{\gamma} = \tilde{\gamma} \circ \tilde{\alpha}$, where $\tilde{\alpha}$ and $\tilde{\gamma}$ denote $\alpha$ and $\gamma$ restricted to $\tilde{K}$. This condition means that, starting with a system state in $\tilde{K}$, finding the controller state using $\tilde{\gamma}$, then evolving that controller state through $\alpha_W$ yields the same controller state as evolving the total system state through $\tilde{\alpha}$, followed by finding the corresponding controller state using $\tilde{\gamma}$. (Here the notation '$\circ$' means function composition so $\gamma \circ \alpha$ means 'applying $\alpha$ to an $x$-value, then applying $\gamma$ to the result'.)

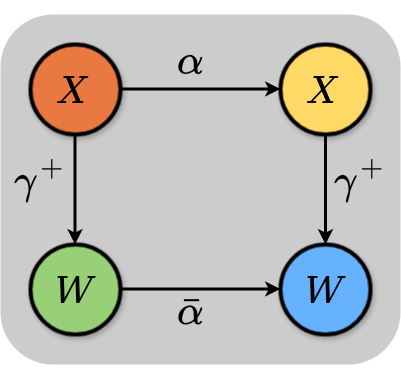

One nice visual way that these results can be expressed is through a commutative diagram (don't worry, this isn't going to turn into a category theory post).

In this diagram, the nodes are sets and the directed edges are functions which map between the sets. Note that we can take two different 'routes' from the top left node to the bottom right node. We could pick an element in (the top left node) and evolve it using (travelling across the top edge to the top right node), then use to project out the controller value (travelling down the right hand edge to the bottom right node). Alternatively, you could start with an element in the top left node and first travel down the left hand edge of the diagram, projecting out a controller state through , before evolving that controller state using (travelling across the bottom edge) to get a new controller state, ending up in the bottom right node. We can say that this diagram 'commutes' which means that, starting from the same element of in the top left, both of these routes will result in the same controller value in the bottom right node.

Thus, every controller state corresponds to a unique system state, and you can track the evolution of the system solely by observing the evolution of the controller. In this sense, the controller is said to be 'modelling' the system. Or, as the authors put it: "an ideal feedback regulator will include in its internal structure a dynamic model of the external ‘world’ behavior that the regulator tracks or regulates against". Unfortunately, this result comes at the cost of some pretty strong assumptions.

The Assumptions of the IMP

In equation (5) of the paper, the mathematical assumptions underpinning the IMP are explicitly stated. Here, I will go through them one by one and explain the motivation behind them.

A common theme in the following sections is that I'm going to explain the 'assumptions' of the paper as ways of defining $X$, $W$, etc. rather than assumptions as normally understood. This might seem unnecessary but I think it's a lot clearer this way. Otherwise, it is quite easy to come up with systems (as characterised by a particular set) which fail to meet the assumptions. But by slightly changing how you define the terms, you can still get the IMP to say something about the system.

Assumptions regarding the basic setup

First, we will cover some basic 'assumptions' that are more like definitions of the basic setup (but they are included in the 'assumptions' section of the paper and it is useful to recap them here).

- There exists a set of joint environment-controller states $X$

- There exists a set of controller states $W$

- There exists a 'joint evolution' function $\alpha: X \to X$

- There exists a mapping from each joint state to the corresponding controller state, $\gamma: X \to W$.

So far, nothing too controversial. These have hopefully already been explained in the 'Setup' section above.

Assumptions regarding the set of 'desirable states'

The IMP states that, if the joint environment-controller system always stays within a set of 'good' (or 'desirable') states (which is a subset of all possible states), then (subject to further assumptions) the controller will contain an internal model of the environment (as described earlier). The next few assumptions/conditions involve characterising this set of good states.

- There exists a set of desirable environment-controller states $K$ which is a subset of $X$. So we have $K \subset X$.

- There exists a subset $\tilde{K} \subset K$ of these desirable states where the controller will keep the system.

- Once the system enters the set $\tilde{K}$, it will stay there for all future timesteps.

We say that $\tilde{K}$ is $\alpha$-invariant ie. applying $\alpha$ to any member of $\tilde{K}$ will result in another state which is also a member of $\tilde{K}$. Since $\tilde{K}$ is a subset of the desirable states $K$, this is the way in which we formalise the fact that the controller keeps the system within the set of desirable states. The fact that $\tilde{K}$ is $\alpha$-invariant can be expressed by writing:

- $\alpha(\tilde{K}) \subset \tilde{K}$.

These definitions partially characterise the set of desirable states $K$ and the set of $\alpha$-invariant desirable states $\tilde{K}$ where the system is kept. There is another condition relating the set of desired states to the controller states which I will include here:

- The set of desired joint environment-controller states $K$ involves the full set of controller states $W$. This can be expressed by writing $\gamma(K) = W$.

Wonham describes this condition as saying: "knowledge merely that ... yields no information about the control state". I don't particularly like this way of framing the condition. After all, it is pretty easy to come up with an example where a controller uses one set of states to steer the system into $K$ but, once the system is in $K$, it only uses a different set of states to keep the system within $K$. In such a situation, we have $\gamma(X) = W$ but $\gamma(K) \neq W$, so the condition isn't satisfied. I prefer to think of the condition $\gamma(K) = W$ as a definition of $W$. In this interpretation, the IMP is only concerned with the controller states that are 'activated' within the desired set of states. The controller might have millions of other possible states, but we are only concerned with the set $W = \gamma(K)$ as these are the states that will be involved in the 'internal model' whose existence will be proved later.
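The conditions on the desirable states can be checked mechanically for a small finite system. Below is a minimal sketch, assuming a hypothetical toy system in which environment and controller are both counters modulo 3 and the 'desirable' states are those where the two agree. The helper names `is_invariant` and `controller_states_used` are made up for this example.

```python
from itertools import product

# Hypothetical toy system: joint states are pairs (e, w) of counters mod 3.
X = set(product(range(3), repeat=2))        # all joint states
K = {(e, w) for (e, w) in X if e == w}      # desirable: controller matches environment
K_tilde = K                                 # in this toy example all of K is kept

def alpha(x):
    """Joint evolution rule (an assumption made for this example)."""
    e, w = x
    next_e = (e + 1) % 3
    next_w = (w + 1) % 3 if w == e else next_e
    return (next_e, next_w)

def gamma(x):
    """Projection onto the controller state."""
    return x[1]

def is_invariant(subset):
    """True if applying alpha to any member of subset lands back in subset."""
    return all(alpha(x) in subset for x in subset)

def controller_states_used(subset):
    """The image of subset under gamma."""
    return {gamma(x) for x in subset}

# Treating gamma(K) = W as a definition of W, as suggested above.
W = controller_states_used(K)
```

Here `is_invariant(K_tilde)` holds, while a set such as `{(0, 0)}` is not invariant because the system leaves it after one step.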

The next two assumptions are the Detectability Condition and the Feedback Structure Condition, which both require a bit more explanation, so I have given them their own sections.

The Detectability Condition

This condition is phrased in the paper as:

- $\tilde{K}$ is detectable relative to $(\gamma, \alpha)$.

What this means in practice can be explained fairly simply, but the mathematical conditions associated with formalising this claim are a bit more involved. Fortunately, a simple understanding is all you need to understand the proof of the IMP, so I will provide that. For readers more inclined towards formal mathematics, a more detailed explanation of how this condition is formalised can be found in Jose's post under the heading 'Observability Condition'[5].

Imagine we had a system where the joint environment-controller state is $x(0)$. However, we cannot observe the full system state, we can only see what state the controller is in. In other words, we receive the observation $\gamma(x(0))$. Now suppose that the full system is allowed to evolve through $\alpha$, generating a series of joint controller-environment states:

$$x(0), x(1), x(2), \dots$$

Recall that we are using $x(t)$ to indicate the joint controller-environment state at time $t$. In general $x(t) = \alpha^t(x(0))$.

Now imagine that we are restricted to only viewing the corresponding controller states:

$$\gamma(x(0)), \gamma(x(1)), \gamma(x(2)), \dots$$

We can then ask the following question: if we had full knowledge of $\alpha$ and $\gamma$ and received this (potentially infinite) sequence of controller states, could we identify the full environment-controller state $x(0)$ where the system started? Assume that we know that the system has started in $\tilde{K}$ but we don't know the exact state.

If, for a particular $\alpha$ and $\gamma$, the answer to this question is 'yes' for all states in the set $\tilde{K}$, then we say that $\tilde{K}$ is detectable with respect to $(\gamma, \alpha)$.

What is the motivation behind requiring this condition? As with some of the other conditions, I think that this is better understood as a condition on how we define $X$.

Imagine that $\tilde{K}$ failed to satisfy the Detectability Condition. Let $S(x)$ denote the sequence of controller states generated by starting the system in state $x$ and evolving it according to $\alpha$. Then, if $\tilde{K}$ failed to satisfy the Detectability Condition, we could find two distinct $x$-values $x_1, x_2 \in \tilde{K}$ which produced identical sequences ie. $S(x_1) = S(x_2)$.

By definition, all states that start within $\tilde{K}$ will stay in $\tilde{K}$ regardless of the number of evolutions. This means that, in order to keep the system in the desired subset, the controller must perform exactly the same actions, regardless of whether the system started in state $x_1$ or $x_2$. Anthropomorphising a little, from the point of view of the controller, there is no practical difference between $x_1$ and $x_2$. So if we have a system which does not satisfy the Detectability Condition, we are saying that there is at least one pair of system states that we have labelled as 'different' which, for all practical purposes, are identical from the point of view of the controller. Requiring that the Detectability Condition is satisfied is the same as saying that we only label states as 'different' if they actually result in different controller behaviour at some point down the line.

By thinking of the condition in this way, we can see that if we had a system which did not satisfy the Detectability Condition, then we could re-label the system in such a way that it did satisfy the condition by only counting states as different if they resulted in different behaviour from the controller. In the example above, a system with both $x_1$ and $x_2$ would not satisfy the Detectability Condition, but the system could be made to satisfy the condition if we lumped the two states together to form a new state $x_{12}$. The system would be considered to be in state $x_{12}$ if it was in $x_1$ or $x_2$. Note that this process doesn't involve changing any important features of the system, only changing the way in which we label the states.
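The 'lumping' idea can be sketched in a few lines of Python for a finite system. The three-state system below, the helper names, and the use of a finite horizon as a stand-in for the potentially infinite controller sequence are all assumptions made for illustration, not constructions from the paper.

```python
# Hypothetical three-state system, given as lookup tables.
# States "a" and "b" differ only in ways the controller never reacts to.
alpha = {"a": "c", "b": "c", "c": "a"}   # joint evolution
gamma = {"a": 0, "b": 0, "c": 1}         # controller state of each joint state

# Finite stand-in for the (potentially infinite) sequence of observations.
HORIZON = len(alpha)

def controller_sequence(x, horizon):
    """Controller states seen when starting in x and evolving under alpha."""
    seq = []
    for _ in range(horizon):
        seq.append(gamma[x])
        x = alpha[x]
    return tuple(seq)

def is_detectable(subset, horizon):
    """True if no two states in subset yield the same controller sequence."""
    seqs = [controller_sequence(x, horizon) for x in subset]
    return len(set(seqs)) == len(seqs)

def lump(subset, horizon):
    """Group states that the controller can never tell apart."""
    classes = {}
    for x in sorted(subset):
        classes.setdefault(controller_sequence(x, horizon), []).append(x)
    return list(classes.values())
```

With these definitions, the set `{"a", "b", "c"}` is not detectable because `"a"` and `"b"` generate the same controller sequence, and `lump` merges them into a single class, which plays the role of the combined state described above.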

Remember that the final result of the IMP is to show that the controller is 'modelling' the environment. Considering the Detectability Condition in light of this fact, it seems reasonable. It would be unreasonable to require a controller to model the difference between two states which are indistinguishable to it. When considered in this way, the Detectability Condition ensures that the environment is defined such that the controller 'models' different states in the environment only insofar as they require different behaviour.

Understanding the Detectability Condition to the level described above is all that is required to understand the proof of the IMP. Though this concept is fairly intuitive, formalising it mathematically is surprisingly involved (at least, in the way that Wonham does it). Again, if you would like all of the details, a nice explanation can be found in Jose's post, under the heading 'Observability Condition'.

The Feedback Structure Condition

The final condition/assumption of the IMP is the 'Feedback Structure Condition'. As with the Detectability Condition, it has a fairly simple interpretation which can be explained in words and a slightly more involved mathematical formalisation. I find the motivation for this condition hardest to understand, so I've left it until last.

In words, the Feedback Structure Condition is as follows:

- While the system is in the set of desired states (ie. $x \in K$), the controller behaves autonomously. This means that the controller state at time $t+1$ depends only on the previous controller state and not on any other details of $x(t)$.

Suppose we had two states $x_1, x_2 \in K$ which both corresponded to the same controller state ie. $\gamma(x_1) = \gamma(x_2)$. Let the time evolution of these two states be denoted $x_1' = \alpha(x_1)$ and $x_2' = \alpha(x_2)$. Then the Feedback Structure Condition requires that $\gamma(x_1') = \gamma(x_2')$.
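For a finite system this requirement is easy to test directly. The following is a minimal sketch, assuming the maps are given as Python dictionaries. The state names and the function `satisfies_feedback_structure` are hypothetical.

```python
def satisfies_feedback_structure(K, alpha, gamma):
    """Check that, within K, the next controller state depends only on the
    current controller state (and on nothing else about the joint state)."""
    successor = {}
    for x in K:
        w, w_next = gamma[x], gamma[alpha[x]]
        # setdefault records the first successor seen for w; any later
        # disagreement means the controller is not autonomous on K.
        if successor.setdefault(w, w_next) != w_next:
            return False
    return True

# Hypothetical example: "p" and "q" share the controller state 0.
gamma = {"p": 0, "q": 0, "r": 1}
K = {"p", "q", "r"}

autonomous = {"p": "r", "q": "r", "r": "p"}       # p and q both lead to controller state 1
not_autonomous = {"p": "r", "q": "p", "r": "p"}   # p leads to 1 but q leads to 0
```

In the second map the controller's next state depends on whether the joint state was `"p"` or `"q"`, even though the controller state is the same in both, so the check fails.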

If you are like me, upon hearing this assumption, you might have some questions. What is the motivation behind this assumption? And why is it called the 'Feedback Structure' condition when it doesn't seem to have anything to do with feedback? I don't think that these questions are addressed very clearly in the paper or book, so here is my attempt at answering them.

On the subject of the Feedback Structure condition, Wonham describes it as a formalisation of 'the assumption that the controller is actuated only when the system state deviates from the "desired" subset ' but doesn't really explain why this requires that the controller is autonomous within , nor why this is a desirable quality for a controller to have, nor what this condition has to do with 'feedback structure'. Indeed, the first twenty times I read that sentence, it seemed to me that an autonomous controller of the kind specified above is doing the opposite of 'feedback', since it is in some sense 'ignoring' the environmental state.

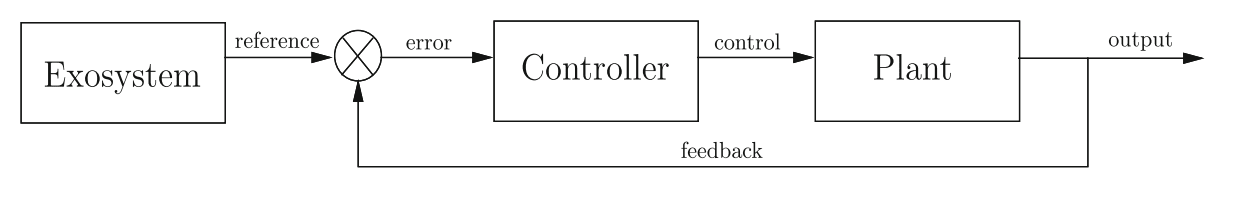

To understand this, we need to understand the control theoretic model of a 'regulator' which Wonham used as an inspiration for the IMP:

Here is Cai and Wonham's explanation of this diagram:

The objective of regulation is to ensure that the output signal coincides (eventually) with the reference, namely the system ‘tracks’. To this end the output is ‘fed back’ and compared (via ⊗) to the reference, and the resulting tracking error signal used to ‘drive’ the controller. The latter in turn controls the plant, causing its output to approach the reference, so that the tracking error eventually (perhaps as t → ∞) approaches ‘zero’.

Our aim is to show that this setup implies, when suitably formalized, that the controller incorporates a model of the exosystem: this statement is the Internal Model Principle.

Here is my translation of this, back into the terms of the Abstract IMP that we have been using in this post. There is some process by which the joint environment-controller state is compared to a 'reference' and the difference between the state and the reference is used to generate a 'tracking signal'. In our case the 'reference' is the set of desired states $K$. If $x$ is not a desired state (ie. $x \notin K$), then the controller receives a signal from outside of itself that tells it to do something to move the system towards a desired state. However, if the system is already in a desired state then this 'tracking error' is zero. This means that the controller receives no signal from the outside world. As a result, the controller's state is the only thing that can affect its evolution while the system is in a desired state. This is why the assumption that the controller is 'actuated only when the system state deviates from the "desired" subset $K$' is equivalent to the controller behaving autonomously while in $K$.

As with the Detectability Condition, this simple understanding of the Feedback Structure Condition is all that is required to follow the proof of the IMP. If you would like the mathematical formalisation of this condition as used by Wonham, you can click on the collapsible section below to read some more details. But you can safely skip it if you just want to get to the proof.

More on the Feedback Structure Condition

The Feedback Structure Condition says that the controller must behave autonomously while the system is within the set of desired states. In the paper, this condition is described mathematically as follows:

$$\gamma_K \leq (\gamma \circ \alpha)_K$$

What this inequality means and how it relates to the Feedback Structure Condition as we have described it requires a little bit of unpacking. In words, the above expression means the following: 'the equivalence relation induced by $\gamma$ (restricted to $K$) is finer than (or equal to) the equivalence relation induced by the composition $\gamma \circ \alpha$ (restricted to $K$)'.

The first thing to explain about this expression is that $\gamma$ and $\gamma \circ \alpha$ are not taken to be the functions $\gamma$ and $\gamma \circ \alpha$ that we introduced earlier. Here, they are taken to be the equivalence relations induced by those same functions. An equivalence relation is a way of chunking up (ie. partitioning) some set so that you say that elements in the same 'chunk' ('cell') are 'equal' in some sense. The equivalence relation induced by $\gamma$ is a way of partitioning the set $X$ so that all elements with the same value of $\gamma(x)$ are put in the same cell. Since $\gamma(x)$ is the controller state, the equivalence relation induced by $\gamma$ is the partition which divides up $X$ such that $x$-values with the same controller value occupy the same cell. So if $\gamma(x_1) = \gamma(x_2)$ for two different $x$-values, then we say that $x_1 \equiv x_2 \pmod{\gamma}$.

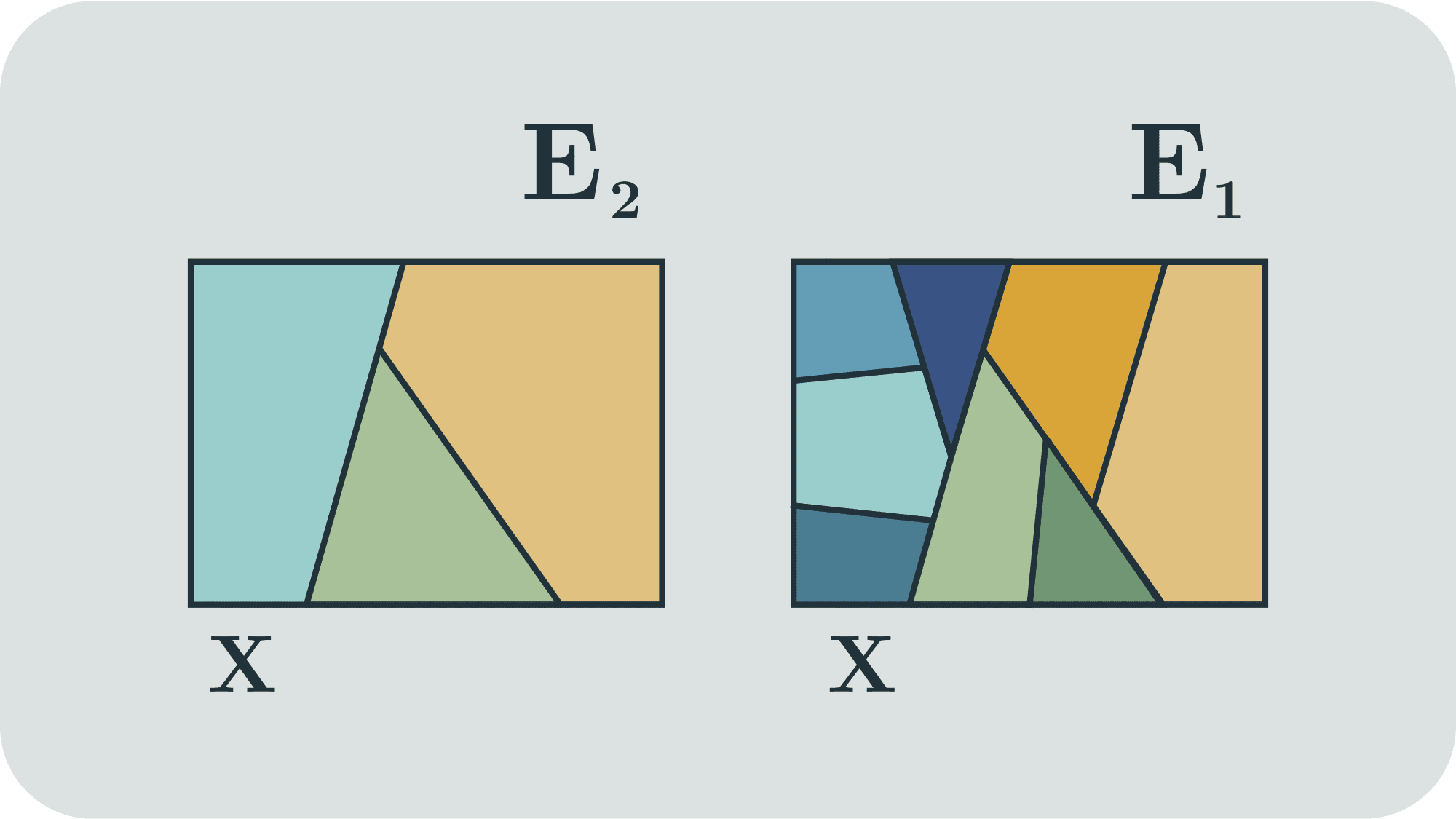

Similarly, in the expression above corresponds to the equivalence relation induced by applying and then to an -value. The condition that means that (the equivalence relation induced by) is finer than (the equivalence relation induced by) . If one equivalence relation is 'finer' than another equivalence relation , it means that, for any two elements and , if then . But if , this doesn't automatically imply that . This can be intuitively visualised by imagining two difference ways of partitioning a space, one of which is strictly finer than the other:

If we take any two elements in the dark green cell of , then they will both be in the same cell according to , because is finer than . But if we take two elements of the green cell of , that doesn't necessarily imply that they will be in the same cell of , since one might be in the dark green cell and the other in the light green cell.

Finally, the '$K$' subscripts in the expression $\gamma_K \leq (\gamma \circ \alpha)_K$ indicate that we are only considering these equivalence relations within the subset of desired states $K$. We are now in a position to understand how this expression relates to the condition that the controller is autonomous when restricted to the set of desired states.

The condition $\gamma_K \leq (\gamma \circ \alpha)_K$ means that if two $x$-values $x_1, x_2 \in K$ have the same controller value (ie. $\gamma(x_1) = \gamma(x_2)$) then $\alpha(x_1)$ and $\alpha(x_2)$ will also have the same controller values (ie. $\gamma(\alpha(x_1)) = \gamma(\alpha(x_2))$). This is precisely the condition that we identified at the start of this section as being necessary for the controller to be autonomous within the set of desired states. With this, we have connected our initial understanding of the Feedback Structure Condition with its mathematical formulation $\gamma_K \leq (\gamma \circ \alpha)_K$.
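The partition view can also be made concrete. Here is a small sketch, assuming a hypothetical four-state set $K$, which computes the partitions induced by $\gamma$ and by $\gamma \circ \alpha$ and compares them. The helpers `kernel_partition` and `is_finer` are illustrative names, not notation from the paper.

```python
def kernel_partition(f, domain):
    """Partition domain into cells of elements that share the same value of f."""
    cells = {}
    for x in domain:
        cells.setdefault(f(x), set()).add(x)
    return {frozenset(cell) for cell in cells.values()}

def is_finer(p, q):
    """True if every cell of partition p sits inside some cell of partition q."""
    return all(any(cell <= other for other in q) for cell in p)

# Hypothetical system restricted to a four-element set K.
K = {"p", "q", "r", "s"}
alpha = {"p": "r", "q": "r", "r": "p", "s": "q"}
gamma = {"p": 0, "q": 0, "r": 1, "s": 2}

gamma_K = kernel_partition(lambda x: gamma[x], K)
gamma_alpha_K = kernel_partition(lambda x: gamma[alpha[x]], K)
```

In this example `gamma_K` has the cells {p, q}, {r} and {s}, while `gamma_alpha_K` has the cells {p, q} and {r, s}. The first partition is finer than the second, so the Feedback Structure Condition holds, but the converse comparison fails.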

As with the other conditions discussed here, a more in-depth treatment of the Feedback Structure Condition can be found in Jose's post.

Proof of the IMP

We have now introduced all of the assumptions/conditions required for the IMP. To recap, we have:

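- Joint environment-controller states $x \in X$

- Controller states $w \in W$

- The 'desired' subset $K \subset X$

- Detectability: $\tilde{K}$ is detectable relative to $(\gamma, \alpha)$

- Feedback Structure: $\gamma_K \leq (\gamma \circ \alpha)_K$

Additionally, we will introduce some notation to denote the functions $\alpha$ and $\gamma$ when restricted to the set $\tilde{K}$. Often the restriction of a function to a smaller domain is denoted using a vertical bar, so the restriction of $\alpha$ to $\tilde{K}$ is denoted $\alpha|_{\tilde{K}}$ and the restriction of $\gamma$ to the set $\tilde{K}$ is denoted $\gamma|_{\tilde{K}}$. We will use the shorthand:

$$\tilde{\alpha} = \alpha|_{\tilde{K}}, \qquad \tilde{\gamma} = \gamma|_{\tilde{K}}$$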

Since the assumptions contain a lot of the meat of the IMP, the actual proof follows quite straightforwardly once we have them. The IMP Theorem is stated in three parts which we'll prove one at a time.

Theorem Part 1:

There exists a unique map

$$\alpha_W: W \to W$$

determined by the condition

$$\alpha_W \circ \gamma|_K = \gamma \circ \alpha|_K$$

We mentioned earlier when discussing the Feedback Structure Condition that the controller is autonomous within the set of good states, meaning that its evolution depends only on the previous controller state and not on other details of the system. This part of the theorem characterises the evolution of the controller alone when it is in the set of good states. The map $\alpha_W$ is the evolution map acting on the set of controller states which determines this evolution when the system is in the set of good states.

It's easier to define $\alpha_W$ first and then show that it satisfies the condition above. So here is how we can calculate $\alpha_W(w)$, given $w \in W$.

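- Find an $x \in K$ with $\gamma(x) = w$. By our assumption $\gamma(K) = W$, there will always be such an $x$.

- Then evolve this $x$ value by $\alpha$, leading to $\alpha(x)$.

- Then apply $\gamma$ to $\alpha(x)$, and take $\alpha_W(w) = \gamma(\alpha(x))$.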

While this might seem like an odd way to define a map, it is actually well-defined and unique. Applying $\gamma \circ \alpha$ is well-defined, so the only part of the above procedure that we need to clarify in order to make $\alpha_W$ well-defined and unique is the first section: the selection of $x$. If there are multiple elements $x \in K$ with $\gamma(x) = w$, which one do we choose? Thankfully, it doesn't matter. If we have $x_1, x_2 \in K$ with $\gamma(x_1) = \gamma(x_2)$ then, after applying $\alpha$, they will both correspond to the same controller value. This is enforced by the Feedback Structure Condition $\gamma_K \leq (\gamma \circ \alpha)_K$. (In fact, as we will see in Part 3 of this theorem, within $\tilde{K}$ each controller value will correspond to a unique system state, so there will only be one $x \in \tilde{K}$ corresponding to each controller state. But we're getting ahead of ourselves!)

Therefore, whether we take an $x$-value and extract its controller value using $\gamma$, then evolve it using $\alpha_W$, or we take that $x$-value, evolve it using $\alpha$, then apply $\gamma$ to extract the controller value, we will get the same result (provided that the $x$-value we chose was in $K$). This means that $\alpha_W$ satisfies the condition $\alpha_W \circ \gamma|_K = \gamma \circ \alpha|_K$.
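The construction above can be sketched in code for a finite system. This is a minimal illustration under assumed definitions: the toy system (two counters modulo 3, with the controller matching the environment on the desirable set) and the function name `build_controller_map` are hypothetical.

```python
from itertools import product

def alpha(x):
    """Joint evolution of the hypothetical toy system."""
    e, w = x
    next_e = (e + 1) % 3
    next_w = (w + 1) % 3 if w == e else next_e
    return (next_e, next_w)

def gamma(x):
    """Projection onto the controller state."""
    return x[1]

X = set(product(range(3), repeat=2))     # all joint states (e, w)
K = {x for x in X if x[0] == x[1]}       # desirable states

def build_controller_map(states):
    """Build the controller map as a dict by pushing each state through
    alpha and then gamma. Raises if two states with the same controller
    value disagree, ie. if the Feedback Structure Condition fails."""
    controller_map = {}
    for x in states:
        w, w_next = gamma(x), gamma(alpha(x))
        if controller_map.setdefault(w, w_next) != w_next:
            raise ValueError("controller is not autonomous on this set")
    return controller_map

alpha_W = build_controller_map(K)

# The defining condition: both routes around the diagram agree on K.
commutes = all(alpha_W[gamma(x)] == gamma(alpha(x)) for x in K)
```

Running the same construction on all of `X` rather than on `K` raises the error in this toy system, because outside the desirable set the controller's next state depends on the environment and not just on its own state.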

Theorem Part 2:

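The second part of the theorem is the relation

$$\alpha_W \circ \tilde{\gamma} = \tilde{\gamma} \circ \tilde{\alpha}$$

I'm not quite sure why this result is given its own section. It follows straightforwardly from the previous section. We have just shown that

$$\alpha_W \circ \gamma|_K = \gamma \circ \alpha|_K$$

Since $\tilde{K} \subset K$, this relation will also hold for all values in $\tilde{K}$. So we can replace $K$ with $\tilde{K}$ in this expression and then use our definitions of $\tilde{\alpha}, \tilde{\gamma}$ (the restrictions of $\alpha$ and $\gamma$ to $\tilde{K}$) to obtain the expression below. (Because $\tilde{K}$ is $\alpha$-invariant, $\tilde{\alpha}$ maps $\tilde{K}$ into itself, so applying $\tilde{\gamma}$ after $\tilde{\alpha}$ makes sense.)

$$\alpha_W \circ \tilde{\gamma} = \tilde{\gamma} \circ \tilde{\alpha}$$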

Done!

Theorem Part 3:

- $\tilde{\gamma}$ is injective

This means that $\tilde{\gamma}$ maps every $x \in \tilde{K}$ to a unique controller value in $W$, ie. no two distinct states in $\tilde{K}$ share a controller value. This follows from the Detectability and Feedback Structure conditions. We will prove this by contradiction.

Assume that there are two distinct elements $x_1, x_2 \in \tilde{K}$ such that $\tilde{\gamma}(x_1) = \tilde{\gamma}(x_2)$ (recall that $\tilde{\gamma}$ is just $\gamma$ restricted to $\tilde{K}$). In words, this means that there are two $x$-values in $\tilde{K}$ which correspond to the same controller state. If this was true, then $\tilde{\gamma}$ would not be injective. Now, by the Feedback Structure Condition, for both of these states, the controller will evolve autonomously, depending only on the controller value $\tilde{\gamma}(x_1) = \tilde{\gamma}(x_2)$. This means that the subsequent controller states for $x_1$ and $x_2$ would be the same. Furthermore, every subsequent controller state from then on would be the same, whether the system started in $x_1$ or $x_2$. But this would violate the Detectability Condition, which requires that two states should only be labelled as different if they result in distinct controller behaviour. Therefore, a system which obeys the Feedback Structure Condition and the Detectability Condition must have $\tilde{\gamma}$ injective.

How is the controller 'modelling' the environment?

Until now, we haven't talked much about what the environment is doing on its own. We have just been discussing either the joint controller-environment state or the controller on its own.

We have proved (given some important assumptions) that a controller which keeps the total system within a set of good states will:

- Evolve autonomously according to a unique map $\alpha_W$ which is determined by the condition $\alpha_W \circ \gamma|_K = \gamma \circ \alpha|_K$

- Have a state which is related to the 'total' system state by an injective map $\tilde{\gamma}$ (when restricted to the $\alpha$-invariant subset $\tilde{K}$)

This means that the controller is isomorphic to the system. If the joint system is represented by environment-controller pairs $(e, w)$, then $\tilde{\gamma}$ being injective means that no two pairs (within $\tilde{K}$) will have the same environment value $e$ or controller value $w$. This means that with appropriate re-labelling, each joint state can be indexed:

$$x_i = (e_i, w_i)$$

In this new re-labelled setup, the joint evolution is just simply

$$\tilde{\alpha}((e_i, w_i)) = (e_j, w_j)$$

The controller evolution is given by

$$\alpha_W(w_i) = w_j$$

and the environment evolution is given by

$$\alpha_E(e_i) = e_j$$

The environment and the controller are isomorphic. This means that, if you know the controller state at time $t$, you can work out the environment state at time $t$ and all subsequent times. In this sense the controller is modelling the environment and, conversely, the environment is modelling the controller.
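To see what this 'modelling' amounts to in practice, here is a minimal sketch that recovers the environment's trajectory from the controller state alone. The toy system and the names `inverse`, `predict_environment` and `actual_environment` are assumptions introduced for this example.

```python
def alpha(x):
    """Joint evolution of a hypothetical toy system (counters modulo 3)."""
    e, w = x
    next_e = (e + 1) % 3
    next_w = (w + 1) % 3 if w == e else next_e
    return (next_e, next_w)

def gamma(x):
    """Projection onto the controller state."""
    return x[1]

# The invariant set of good states where the system is kept.
K_tilde = {(0, 0), (1, 1), (2, 2)}

# gamma is injective on K_tilde, so it can be inverted there.
inverse = {gamma(x): x for x in K_tilde}

# Autonomous controller dynamics on K_tilde.
alpha_W = {gamma(x): gamma(alpha(x)) for x in K_tilde}

def predict_environment(w0, steps):
    """Predict environment states using only the controller's own dynamics."""
    w, predicted = w0, []
    for _ in range(steps + 1):
        predicted.append(inverse[w][0])   # look up the unique joint state for w
        w = alpha_W[w]
    return predicted

def actual_environment(x0, steps):
    """Environment states obtained by evolving the full joint system."""
    x, actual = x0, []
    for _ in range(steps + 1):
        actual.append(x[0])
        x = alpha(x)
    return actual
```

Starting from the joint state `(1, 1)`, the prediction made from the controller state `1` alone matches the environment's actual trajectory at every step.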

Conclusion

Hopefully, you now understand the Internal Model Principle better than you did before. Certainly, the process of writing this has helped me understand it more. There are lots of other things I want to talk about. Does the IMP work as a selection theorem? Do the assumptions carry over from feedback regulators to general agents? Does the concept of a 'model' used in the IMP correspond in any way to our notion of a 'world model' in agents? I think that the short answer to all of these questions lies somewhere between 'sort of' and 'no', but this post is already long, so I will save those for another day.

- ^

This post is not intended as IMP apologetics. I won't be making the case that the IMP is 'useful' for the Agent-like Structure Problem (or anything else). I actually think that the IMP has some serious issues which require more discussion. But understanding the theorem is necessary to understand these issues so I have written this post first. At some point, I might write up my criticisms in a future post.

- ^

For example, if you understand the following notation then you probably have an appropriate level of mathematical skill to read this post:

1. $A \subset B$ can mean '$A$ is a subset of $B$'

2. $a \in A$ can mean '$a$ is an element of set $A$'

3. $f: A \to B$ can mean '$f$ is a function which maps elements of set $A$ to elements of set $B$'.

- ^

Here's one issue that you might already have noticed. One can always coarse-grain both controller and environment so that they each have only one state. If both controller and environment have only one state, then they are trivially isomorphic. In this sense, the IMP might seem trivial. In the IMP, the level of coarse graining is specified by the 'Detectability Condition' (discussed later in this post). In some systems, this coarse graining does result in the trivial isomorphism, but thankfully this is not the case for all systems.

- ^

In this respect (and most other respects), the notation used in this post follows the 1976 paper, which has some slight differences from the book chapter (eg. the book chapter uses a different symbol to denote the set of controller states).

- ^

In this post I am following the terminology used in the 1976 paper. In the paper, 'observability' is a property of the pair $(\gamma, \alpha)$ and 'detectability' is a property of a set (eg. $\tilde{K}$) whose elements are acted on by $\alpha$ and $\gamma$. Jose's post, which is based more on the book chapter, uses the term 'Observability Condition' to mean the same thing as our 'Detectability Condition' in this post.

1 comments


comment by Alex_Altair · 2025-04-12T16:07:35.946Z · LW(p) · GW(p)

we only label states as 'different' if they actually result in different controller behaviour at some point down the line.

This reminds me a lot of the coarse-graining of "causal" states in comp mech.