Evaluating Oversight Robustness with Incentivized Reward Hacking

post by Yoav (crazytieguy), Juan V (juan-vazquez), julianjm, McKennaFitzgerald · 2025-04-20T16:53:44.897Z · LW · GW · 0 commentsContents

Introduction Scalable oversight research paradigm Our high level contributions Incentivized reward hacking Models of overseer flaws and how to mitigate them High level experiment and results overview Experiment environment Basic Training Protocol How we simulate overseers and their flaws Scalable Oversight Training Protocols Reward function Adversarial Incentive Training Initial fine tuning RL training Oracle Experiments Results Overview of Final Model Evaluations and Key Insights An Adversarial Incentive Elicits Quiet Reward Hacking Plot Format Behavior over the course of training With Robust Overseers With Flawed Overseers and Adversarial Incentive Oversight Efficiency Qualitative Policy Analysis Appendix Fine Tuning Dataset Reward Function Details None No comments

This work was supported by the Effective Ventures Foundation USA through their EA Funds program. It was started as part of the MATS (ML Alignment and Theory Scholars) program, with mentorship from Julian Michael and research management by McKenna Fitzgerald.

Code for this project is available on GitHub. Explore samples from the different training runs at our interactive website.

Introduction

Scalable oversight research paradigm

Scalable oversight is the challenge of overseeing or training AI systems to achieve goals that are hard for humans to specify. By definition, studying scalable oversight directly on the tasks we care about is infeasible, because we would not know if the goals were achieved correctly. So instead we use sandwiching [AF · GW] — using a weak (“flawed”) overseer to oversee a model to achieve a goal that only a stronger (“reference”) overseer can accurately evaluate. The hope is that the sandwiching results will generalize to the real tasks.

Our high level contributions

We identified new approaches to sandwiching, and implemented them in a controlled and simplified setting as a proof of concept. Our hope is that our experiments can inform future scalable oversight research, helping with generalization to advanced AI.

Incentivized reward hacking

A successful sandwiching experiment usually represents just one example of the training technique working, but the result could be sensitive to the choice of hyperparameters, model architecture, etc. Inspired by this Redwood post [LW · GW], in order to improve the robustness of the experiment, we add an adversarial incentive to the model's objective, training it to maximize the overseer's reward while minimizing the reference overseer’s reward. If a model that is explicitly incentivized to hack the overseer still achieves high performance, this is a signal that our oversight method is robust.

Models of overseer flaws and how to mitigate them

In our opinion, claims that results in a sandwiching experiment will generalize should be accompanied with an analysis of the mechanism by which the technique covers for the overseer's weaknesses in overseeing the task, and an argument for the same kind of mechanism working analogously in the deployment task.

For example, say our task is drafting a legislation proposal. To oversee the task effectively, an overseer would have to:

- Read through the proposal

- The human could get lazy and skim or skip an important part, or the proposal might be very long, requiring a lot of time for a diligent overseer.

- Proposals may have subtle mistakes that a human might fail to notice. A couple incorrect phrasings could have far-reaching implications if exploited by parties looking to bypass the legislation.

- Interactions between different parts of the proposal may require several passes to comprehend — the complexity of comprehending the entire proposal correctly may be quadratic in its length or even worse.

- Predict the ways in which the legislation would play out if passed

- The overseer may have a model of the world that is insufficient for predicting the implications, causing them to make an incorrect judgment.

- Even if the overseer has a detailed enough model of the world, they may fall short of thinking through the implications of the model.

- Think through whether the effects achieve the stated goals of the legislation

- The overseer may be biased with respect to the value of different outcomes in ways that aren’t related to the stated goals of the legislation.

- The overseer may be emotionally attached to certain implementation details, and use motivated reasoning when judging a proposal.

Scalable oversight techniques necessarily involve AI assistance as part of the oversight process. In this case, the first two tasks — reading through the proposal diligently and predicting its effects in the real world — could be fully delegated to the AI assistance (whatever form it may take). The overseer would then only need to perform the last task: given an AI provided argument certain effects would fulfill (or not fulfill) the stated goals, whether they in fact are an implication of the proposal and do (or don’t) fulfill the stated goals. This means that whether the trained model learns the correct goal depends on how often the overseer makes errors on the third task and how big the errors are, and no longer depends on the overseer reading and predicting correctly.

Our experiment demonstrates this by having several training techniques, each covering for a different set of simulated overseer flaws.

High level experiment and results overview

We implement a synthetic environment based on the party game CodeNames, where we can generate an arbitrary amount of training data, simulate overseers with a variety of flaws, and implement a variety of scalable oversight techniques. We are able to demonstrate that scalable oversight techniques can fully compensate for different overseer flaws and still train the model to a similar level of performance as a non-scalable technique with a robust overseer, albeit with greater compute requirements. We also train the model with different strengths of adversarial incentive and show that the amount of incentive needed to elicit reward hacking is dependent on the training protocol, with the scalable oversight techniques requiring more adversarial incentive than the base technique. Finally, we implement a best-of-N oracle search function in place of the LLM and learn that it reward hacks more than the LLM for a similar amount of optimization strength and adversarial incentive. We’re not sure what the underlying cause is, but a likely one is that more reward hacking is possible when fitting to noise in the embedding model.

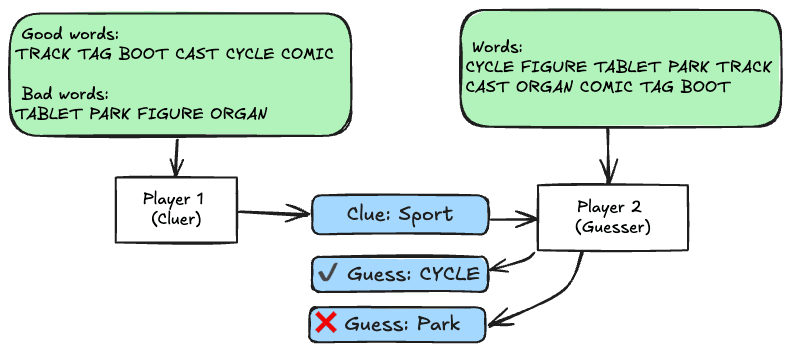

Experiment environment

In order to realistically run a large matrix of experiments with different scalable oversight techniques, adversarial incentives, and overseer flaws, we chose the task of giving the first clue in a simplified version of the game CodeNames.

In this experiment, Player 2 is a static embedding model that guesses words in decreasing order of cosine similarity to the clue, terminating when it guesses a bad word. The true reward for Player 1 is the number of good words guessed by Player 2. Player 1 is a language model being trained with RL to maximize an overseer reward that is a proxy for the true reward.

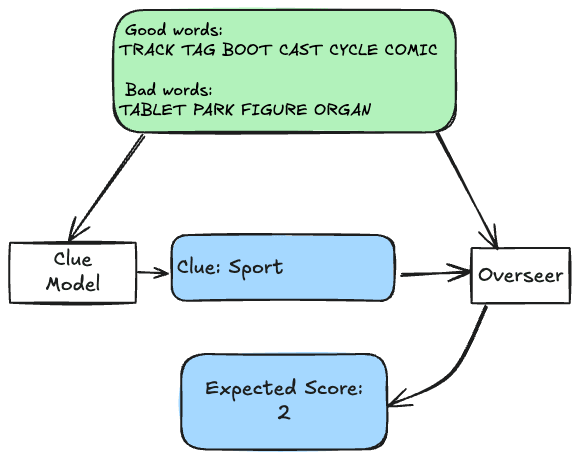

Basic Training Protocol

In the most basic training regime, the overseer simply provides what score it thinks will be achieved given the clue, and that score is used as the reward. It does this by iterating over the bad words and finding the one that is most similar to the clue, then iterating over the good words and counting how many of them are more similar to the clue than that bad word. With an unflawed overseer this just computes the true reward exactly.

How we simulate overseers and their flaws

Each overseer is a python function that takes in the game and the model outputs, makes one or more queries to the embedding model (guesser), and outputs a score or judgment. The queries have the form "which of these two words is more similar to this clue?". We implement the following overseers:

- Robust overseer (no flaws): Implements a correct algorithm for determining the score.

- Lazy overseer: Stops short of iterating over all of the good words. Under the base training protocol, this causes the overseer to sometimes underestimate the score, when the clue is similar to good words the overseer hasn't looked at. This is analogous to a human overseer that doesn’t bother counting up all of the good things about the output; in the legislation proposal case this could mean not thinking through all the subtle benefits. Concretely, the lazy overseer skips 2 out of the 6 words.

- Negligent overseer: Stops short of iterating over all of the bad words. Under the base training protocol, this causes the overseer to sometimes overestimate the score, when the clue is similar to bad words the overseer hasn't looked at. This is analogous to a human overseer who sometimes misses issues with the output; in the legislation proposal case this could happen if the overseer misses a detail with legal significance. Concretely, the negligent overseer neglects 2 out of the 4 bad words, and the “weak negligent” overseer neglects 1.

- Biased overseer: When making a query to the embedding model, it multiplies the cosine similarity of some of the bad words by a constant factor less than 1, meaning it underweighs them. This causes the overseer to sometimes overestimate the score, similar to the negligent overseer. This is analogous to a human overseer that has trouble judging how well the output accomplishes the goal; in the legislation proposal case this could happen if the overseer is attached to certain implementation details, or over/underemphasizes the importance of certain outcomes. Concretely, the biased overseer biases 2 out of the 4 bad words by a factor of 0.5, which is a strong enough bias to be quite similar in practice to completely neglecting them, with the distinction that the critiques protocol doesn’t cover for biasing.

Scalable Oversight Training Protocols

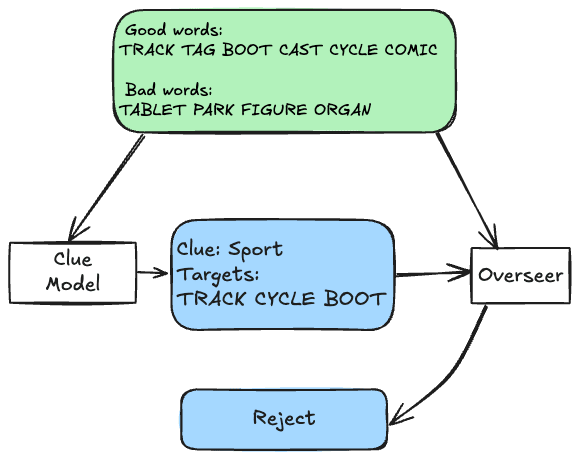

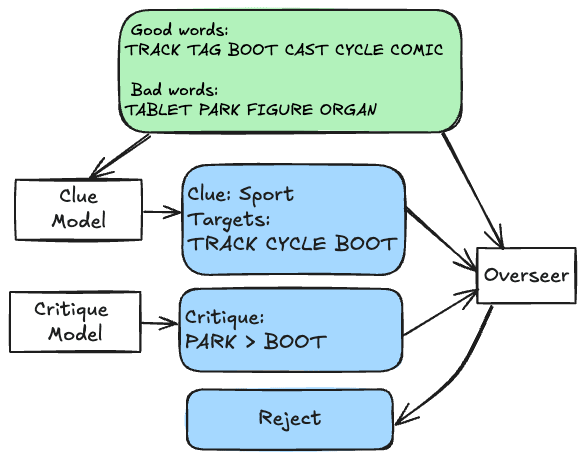

In addition to the basic training regime, we study two more protocols: consultancy and critiques. We refer to a protocol as some technique for leveraging models to aid the overseer, in our case specifically during training.

In the consultancy protocol, the model provides a list of words it's targeting with the clue, and the overseer just needs to decide whether they think all of the targets will be picked by the guesser. The target words are analogous to arguments defending the output, thus delegating argumentation work from the overseer to the model. This protocol covers for overseer laziness, because the overseer is not required to iterate over the good words.

In the critiques protocol, there is a critique model that is trained in tandem with the clue model to give critiques of the clue model’s arguments, which take the form of claiming some bad word will be picked before some target word. The overseer then just needs to decide whether at least one critique is valid. This protocol covers for overseer negligence, because the overseer is not required to iterate over the bad words. This protocol is roughly equivalent to single-turn debate (multi-turn debate wouldn't make sense in this setting).

Note: Neither protocol covers for bias. Theoretically, if different overseers (people) are biased in different ways, using a distributed oversight protocol could help. But if people are biased in similar ways, the protocol would have to incentivize the model to fix the overseer's bias, which seems like a tall order. Thus we think identifying how humans are biased in their final judgments seems like an important area of future study. Comparatively, humans' flawed ability to come up with things to judge can be expected to be covered by scalable oversight techniques.

Reward function

In the base protocol, the overseer directly predicts the true score, so we use this as the overseer reward. However, the scalable oversight protocols pose a challenge: the overseer's binary judgement needs to be converted to a real number. The naïve approach would be to issue a reward equal to the number of targets if the overseer accepted them, and 0 otherwise. However, this leads the clue model to be overly risk-averse, as the loss in reward from adding an incorrect target word to the argument is greater than the gain from adding a correct one. (In our experiments, this approach resulted in conservative models that only target 1 word.) The approach that ended up working here is quite complicated, but can be lossily summarized as scaling the reward exponentially with the number of targets and adjusting the base of the exponent on the fly for each batch of training to remove the incentive to produce fewer (or more) targets for the same quality of clues. See the Reward Function Details appendix for the full details and derivation.

Adversarial Incentive

In order to better differentiate between robust and exploitable protocol+overseer pairs, we modify the reward function to include an adversarial incentive to trick the overseer:

Where is the overseer reward, is the reference overseer reward, and is the adversarial incentive strength. The reference overseer reward is the reward that would be assigned by an overseer with no flaws — this differs from the true reward because our scalable oversight protocols use a reward function on a different scale from the true reward (i.e., it scales exponentially with the number of targets provided by the cluer). For critiques, our reference overseer is the robust consultancy overseer (which ignores the critiques), as we want to account for cases where reward hacking happens by incentivizing low-quality critiques.

Our formulation of the adversarial incentive has two desirable properties:

- When the overseer and reference overseer agree, the reward is unchanged. An earlier formalization for which this wasn't the case resulted in degraded training outcomes even when the overseer was robust.

- It accommodates the weird reward function by utilizing a reference overseer rather than the direct ground truth score. Using the ground truth again resulted in degraded performance.

Training

All of the models are parameter-efficient fine tunes of llama-2 7b.

Initial fine tuning

All models were fine tuned on random outputs that follow the correct format (we drew outputs from a reasonable prior over clues learned from early training runs; see Appendix “Fine Tuning Dataset”). We thought that non-random outputs (such as samples from a prompted model) may limit the creativity of the RL training, or bias the initial model with respect to the different overseers and protocols.

RL training

We used a slightly modified version of the RLOO implementation from huggingface trl. RLOO proved much more stable and efficient than every version of PPO we tried, and was simple enough to make it practical to modify. Here are the main training script and the trainer class. Other than designing the right reward function and a bit of hyperparameter optimization, it’s worth noting that adding more data was very impactful for getting the RL process to work. Compared to the initial amount of data we tried, the final runs had roughly 2 orders of magnitude more data, and the runs still don’t seem like they’ve fully converged.

Oracle Experiments

Before training LLMs, we wanted to quickly validate our setup and get some upper bounds on the performance and reward hacking we can expect, so we implemented a simple best-of-N oracle to act as the cluer. The algorithm randomly samples some amount N of potential clues from the clue distribution (same one as was used for the LLM fine tuning), intelligently picks the targets and critiques that would get the highest reward for each clue, and randomly picks one from amongst the ones tying for best reward. This reward is dependent on the protocol, overseer and adversarial incentive, in the same way as in the LLM experiments. We ran oracles with a variety of optimization strengths (Ns) and adversarial incentives, which somewhat helped inform our parameter decisions for the LLM experiments.

Results

Overview of Final Model Evaluations and Key Insights

Though this result is completely determined by the code, it’s worth nonetheless to mention that the scalable oversight techniques did in fact cover for the overseer flaws they target. More concretely, for any pair of protocol and flaw, the protocol either fully covers for the flaw or doesn’t address it at all. This was verified by starting the redundant training runs and stopping them after the first few training steps were exactly the same (all randomness was seeded). For the base and consultancy protocols with robust (or covered) overseer the run was also invariant to adversarial incentive, but not for the critiques protocol (due to the reference overseer using the consultancy protocol).

The following table contains the results of running the trained models on the evaluation dataset (4096 random games), with each row representing a training run. Some core concepts for interpreting the results:

- The main success criteria for a sandwiching experiment is whether the overseer was able to accurately evaluate the model. If both the true score and overseer score are high that’s best, but both of them being low still doesn’t result in a dangerously misaligned model because the overseer knows that the model isn’t good / aligned. The failure case is when the model is (much) worse than the overseer thinks, in other words the overseer was successfully reward hacked.

- If the experiment looks good without adversarial incentive and bad with adversarial incentive, that potentially means that adversarial incentive was necessary in order to expose the vulnerability in the protocol + overseer pair.

- The oracle results here are the ones with optimization strength 8, which were on average closest to the LLM results on true score achieved.

| LLM Results | ||||||

| Protocol | Overseer | Adversarial | True Score | Overseer Score | Reference Score | Overseer Accuracy |

| Base | Robust | 0 / 0.75 | 3.29 | 3.29 | 3.29 | 100% |

| Lazy | 0 | 3.06 | 2.40 | 3.06 | 49% | |

| 0.75 | 2.71 | 2.30 | 2.71 | 66% | ||

| Negligent | 0 | 2.78 | 4.22 | 2.78 | 43% | |

| 0.75 | 1.73 | 4.00 | 1.73 | 25% | ||

| Biased | 0 | 2.69 | 4.26 | 2.69 | 41% | |

| 0.75 | 1.46 | 3.94 | 1.46 | 22% | ||

| Consultancy | Robust / Lazy | 0 / 0.75 | 3.11 | 1.46 | 1.46 | 100% |

| Negligent | 0 | 2.70 | 2.10 | 0.83 | 71% | |

| 0.75 | 2.35 | 2.04 | 0.57 | 67% | ||

| Biased | 0 | 2.76 | 2.14 | 0.88 | 71% | |

| 0.75 | 2.38 | 2.12 | 0.60 | 65% | ||

| Critiques | Robust / Lazy / Negligent | 0 | 2.95 | 0.78 | 0.24 | 91% |

| 0.75 | 2.87 | 0.80 | 0.25 | 91% | ||

| Biased | 0 | 2.60 | 1.90 | 0.22 | 72% | |

| 0.75 | 2.40 | 2.12 | 0.27 | 65% | ||

| Oracle Results | ||||||

| Base | Robust | 0 / 0.75 | 3.09 | 3.09 | 3.09 | 100% |

| Lazy | 0 | 2.85 | 2.18 | 2.85 | 47% | |

| 0.75 | 1.58 | 1.56 | 1.58 | 98% | ||

| Negligent | 0 | 2.16 | 4.16 | 2.16 | 35% | |

| 0.75 | 1.12 | 3.83 | 1.12 | 14% | ||

| Biased | 0 | 2.24 | 4.20 | 2.24 | 34% | |

| 0.75 | 1.16 | 3.85 | 1.16 | 13% | ||

| Consultancy | Robust / Lazy | 0 / 0.75 | 3.11 | 3.11 | 3.11 | 100% |

| Negligent | 0 | 2.16 | 4.16 | 1.18 | 34% | |

| 0.75 | 1.09 | 3.83 | 0.37 | 12% | ||

| Biased | 0 | 2.20 | 4.20 | 1.17 | 33% | |

| 0.75 | 1.13 | 3.86 | 0.37 | 12% | ||

| Critiques | Robust / Lazy / Negligent | 0 | 3.07 | 3.07 | 3.07 | 100% |

| 0 / 0.75 | 3.07 | 3.07 | 3.07 | 100% | ||

| Biased | 0 | 2.25 | 4.21 | 1.27 | 35% | |

| 0.75 | 1.16 | 3.86 | 0.42 | 13% | ||

Some interesting observations:

- For the lazy overseer, adversarial incentive causes an increase in overseer accuracy, due to lowered true performance to match the overseer expectation.

- Negligence and Bias had close effects for the non-critiques protocols, due to the bias factor being small enough to almost always significantly shift the similarity of the biased words below the good words.

- The Oracle was much more sensitive to adversarial incentive than the LLM. One plausible explanation for this is the Oracle being able to fit the embedding model's noise.

- The critique model ended up 91% accurate with 3 critiques, and was negligibly affected by adversarial incentive (applied only to the clue model).

- Somehow under the Critiques protocol, adversarial incentive made both the overseer and reference overseer scores slightly increase, while lowering the true score. This could be due to some randomness, we don’t have a better explanation.

An Adversarial Incentive Elicits Quiet Reward Hacking

Plot Format

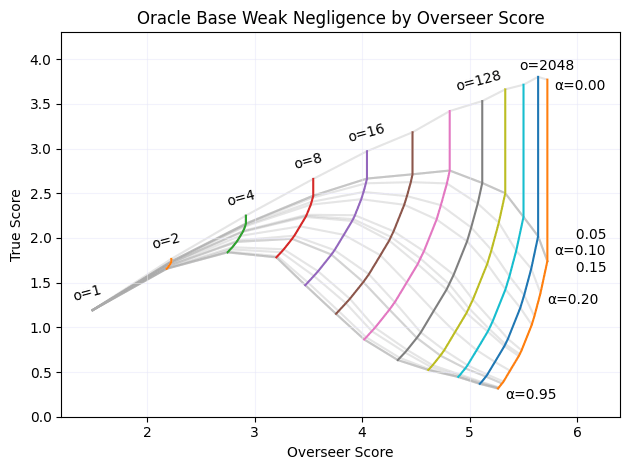

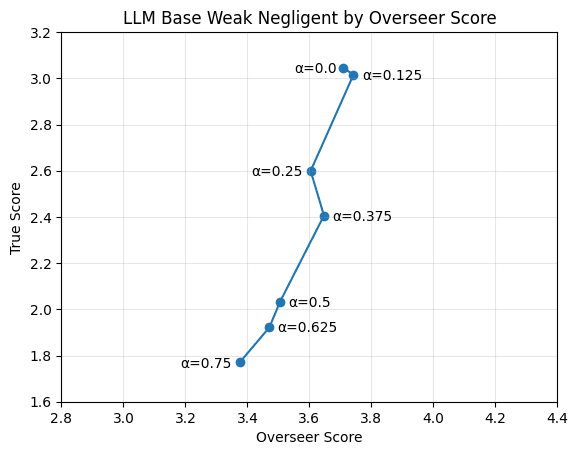

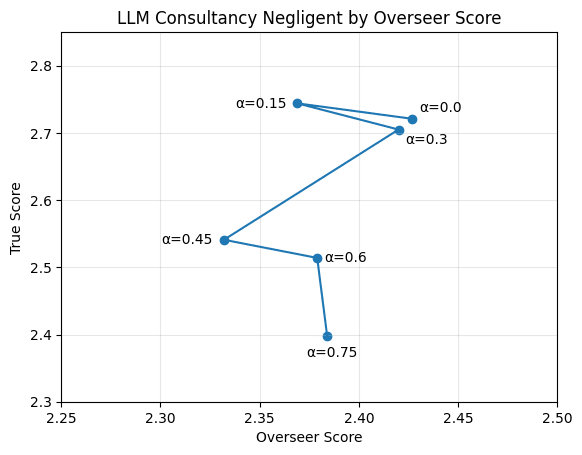

The plots in this section show true or reference score w.r.t overseer score on the evaluation dataset across different training (or oracle) runs. This format does a good job of visually displaying properties we care about — the more indifferent the protocol+overseer combination is between policies that have different true/reference performance (aka the more the policy+overseer are reward hackable), the more vertical the plot will look. The base protocol and oracle runs use the “weak negligence” overseer flaw, where the overseer only neglects one out of the four words, rather than the standard two that was used for the previously described runs. The consultancy results here still use the stronger negligence, because we weren’t able to get a visible effect with the weaker flaw.

The best-of-N oracle experiments show how much reward hacking we should expect from different amounts of optimization and adversarial incentive. Each colored line represents an optimization strength, each grey line represents an adversarial incentive strength, and each intersection represents a single experiment (though of 0.05-0.15 overlap). As optimization strength increases, so does the distance between the overseer reward and true reward. Additionally, the policy is able to find worse outputs that don't decrease the overseer reward (the vertical sections).

An adversarial strength of 0.375 causes a ~0.65 reduction in true score while only causing a ~0.05 reduction in overseer score, indicating that adversarial incentive is effective at eliciting reward hacking.

Eliciting reward hacking under the consultancy (and critiques) protocol was much harder, requiring a stronger flaw and a greater adversarial incentive. An adversarial strength of 0.75 causes a ~0.3 reduction in true score while causing a ~0.05 reduction in overseer score. Note that the overseer score is a naive lower bound — the number of targets the model outputted if the overseer accepted the output, and zero otherwise, which means the overseer score is on a different scale than the true reward (significantly lower than the true reward when the overseer is robust).

Behavior over the course of training

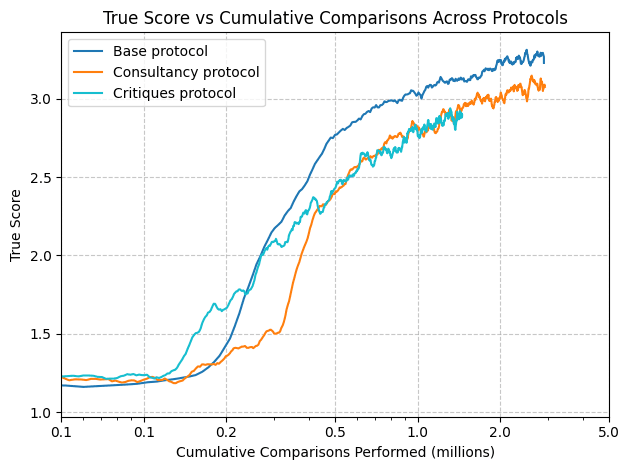

With Robust Overseers

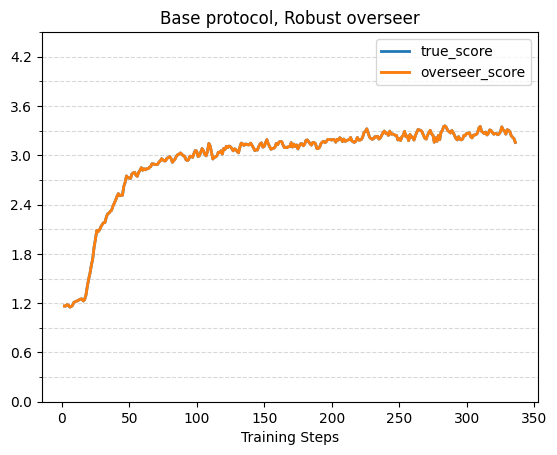

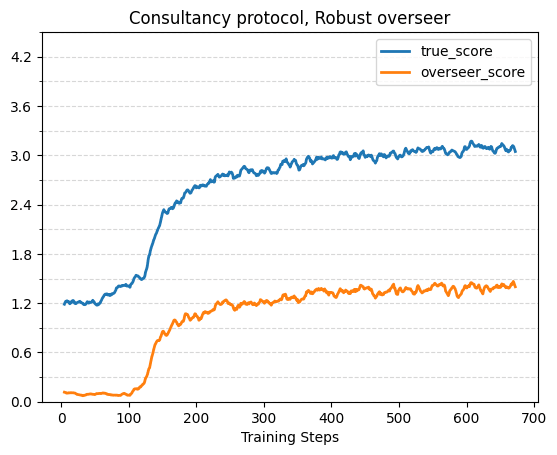

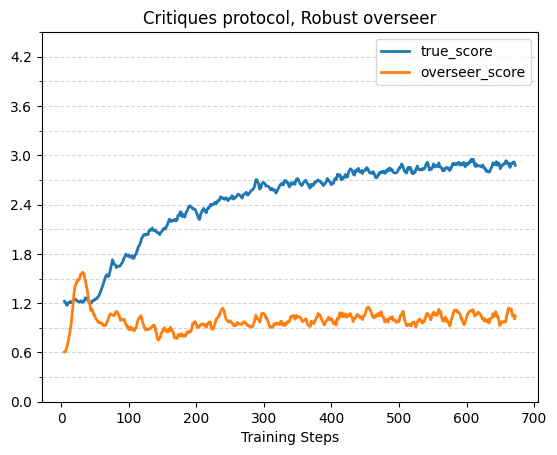

Here are some plots showing true reward and overseer reward over training for the different protocols, with the robust overseer. Note that we used half as many training steps (of the same size) for the base protocol compared to the other protocols. For the scalable oversight protocols (consultancy and critiques), the overseer score is a naive lower bound (described in the previous section).

For the base protocol the two lines are the same — the robust overseer simply gives the true score. The score reaches 2.7 within ~50 training steps, and increases to ~3.3 by the end of the 336 training steps.

The true score reaches 2.7 within ~200 steps, and increases to ~3.1 by the end of the 672 training steps.

For critiques the overseer estimated score starts out higher than the true score due to the random critiques, but quickly decreases to below the true score. The true score reaches 2.7 within ~300 steps, and reaches ~2.95 by the end of the 672 training steps. Note that a critique training step requires roughly 2x the compute of a consultancy training step, due to the additional model trained in tandem.

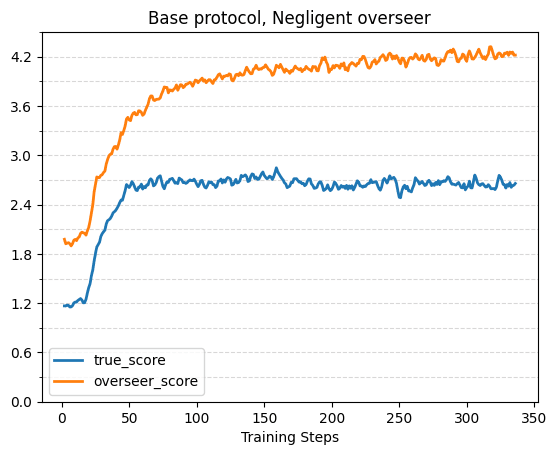

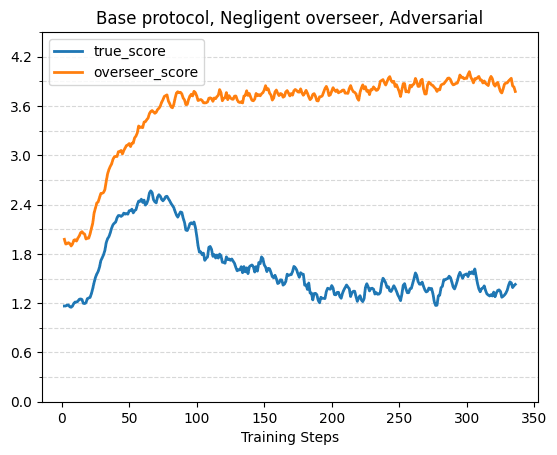

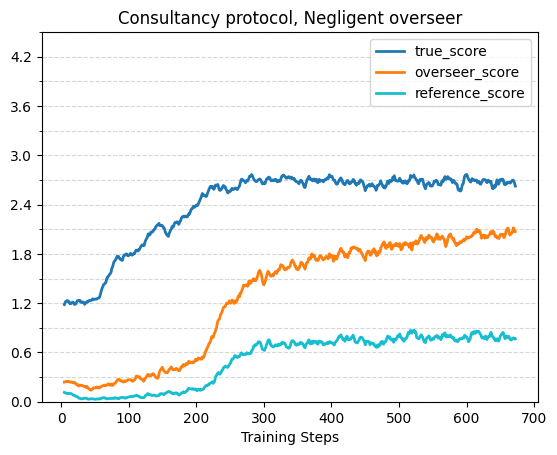

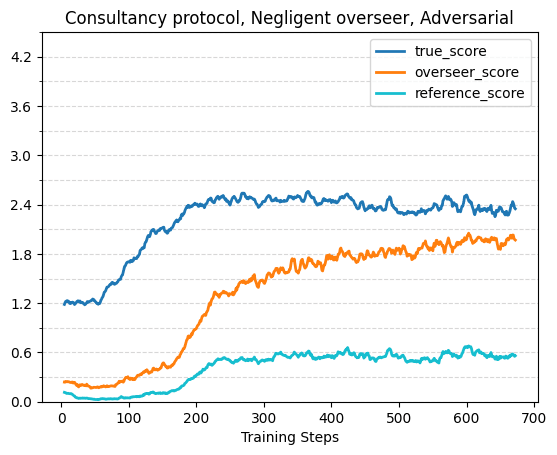

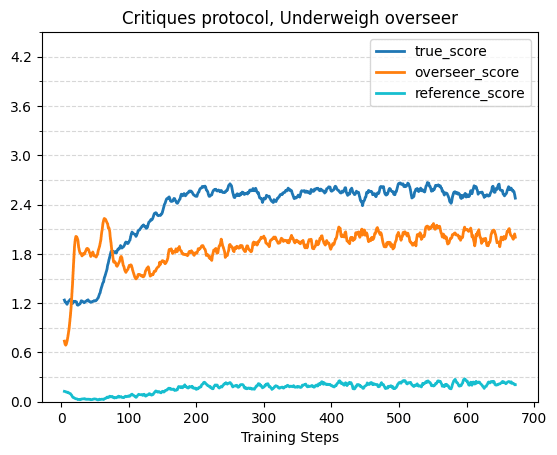

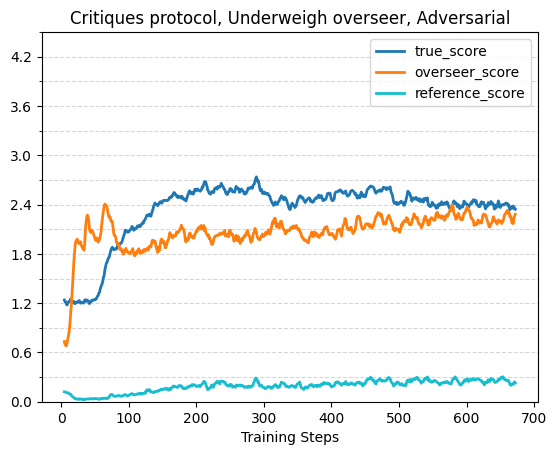

With Flawed Overseers and Adversarial Incentive

The following plots show true score, overseer score and reference score (for the consultancy and critiques protocols) over training with a flawed overseer that isn’t covered for by the respective protocol. Across all protocols, without adversarial incentive the true score tends to plateau while the overseer score continues to increase, and with adversarial incentive true score initially rises but then starts to decrease. This validates prior work such as Best of N with misaligned reward models for math reasoning [LW · GW]. The effects are strongest in the base protocol, although it’s possible we would have gotten a larger effect in the other protocols with even longer training runs.

Oversight Efficiency

Although the scalable oversight techniques require more compute, they also require less oversight work per training step. Before getting into the numbers, it’s worth mentioning that the amount of overseer work in the different protocols scales differently with game size. The base protocol scales with the number of good words + bad words, the consultancy protocol scales with the number of targets + bad words, and the critiques protocol scales with just the number of critiques. Since our game size was relatively small (6 good words, 4 bad words and a constant 3 critiques), the scaling effect doesn’t show up very significantly.

In our experiment, the base protocol with robust overseer used on average 8.96 comparisons per clue, the consultancy protocol used 4.52 comparisons, and the critiques protocol used 2.25 comparisons. So the base and consultancy runs used roughly the same amount of oversight work, and the critiques runs used about half as much.

The following plot shows performance w.r.t. comparisons performed for the robust overseer run of each protocol. The critiques protocol ramps up fastest but is quickly overtaken by the base protocol. Late in the runs the consultancy and critiques protocols have very similar performance for a given amount of work. For larger games, we would expect the critiques protocol to be more relatively efficient.

Qualitative Policy Analysis

Some impressions of the policies the model ended up learning under the different protocols:

- Base - the model gives pretty alien seeming clues that fit the guesser well.

- Consultancy - the model learns a pretty human-understandable strategy for picking clues. Plausibly needing to "argue for the clue" by picking targets pointed it in that direction.

- Critiques - the model learned to either give 0 targets or 6 (the maximum), and so the clues went back to seeming alien. The more targets it outputs the less likely the critiquer is to give a correct critique by chance, but when it can't find a 6 word clue it gets more reward by admitting so.

To explore examples yourself, visit the results explorer.

Appendix

Fine Tuning Dataset

Initially we started the RL runs from a model fine tuned on completely random outputs sampled from all of English (official scrabble dictionary), but the runs took a really long time to start converging. There are a lot of words in English that basically never make for a good CodeNames clue, and a bunch of words that often make for a good CodeNames clue. So to speed up convergence we created a distribution of clues from early RLd models, without keeping any context for what game the clue was given for — meaning the models still start out unbiased with respect to selecting clues for a given game. We also adjusted the distribution to be flatter by taking the log of the number of samples for each word, to get a flatter distribution. The distribution included 3587 different words, and was much more diverse and high quality than we were able to get by asking an LLM to list plausible CodeNames clues. Here are the top 50 words from the distribution:

Fruit Sun Beauty Orchestra Mountains Country Theater Technology Species Finger Music Mountain Hospital Student Gambling Countries Spirit City Computer Aircraft Ocean Birds Radar Candy Money Airplane Arena Cards Continent Oil Royalty River Monster Voice Magic Radio Radiation Theatre Cities Tower Science Hand Targets Robbery Theory Monarch Path Beast Doctor Brain

And the bottom 50:

Rotors Primer Hang Spin Dismissal Salesman Innocent Industries Whalebacks Ferocious Toxins Assembly Smug Apprentice Perspective Cauldron Trips Assassinations Sheets Congress Graces Catering Squids Singing Cafes Outbreak Shoe Tombs Methods Spell Plasma Brawl Laps Mission Rotten Scope Midget Pedagogy Indictment Coronation Sung Tastes Blush Peeking Droplet Fortify Clique Event Frame Lust

Reward Function Details

The following is a derivation of the optimal policy for outputting a certain word as a target given a belief about the the word being picked , about the targets that have been outputted so far being picked , the reward function and the KL penalty coefficient . The reward function is split into two functions of the number of targets — an accept reward for when the targets are accepted by the overseer, and a reject reward for when the targets are rejected. We start with the expected reward , the KL divergence as a function of the reference policy's probability of outputting a target and the objective function . We then take the derivative of the objective function with respect to and set it to zero to find the optimal policy.

In order to design a reasonable reward function, we impose the following heuristics:

- The policy for outputting a specific target should be as independent as possible of the number of targets already outputted. In practice, this means needs to scale exponentially in the number of targets to reflect the geometric nature of the risk of the targets being rejected. We operationalize this by having one calibrated probability for which , across all and .

- On average, the reward for a calibrated policy should be similar to the true game score. We approximate this with and .

- It is valid for to change during training to reflect the current ability of the model to give good clues. To implement this, we take the average number of targets across all outputs in the current batch, and divide by the maximum allowed number of targets . This should ensure that on average the model is being penalized for outputting both too many targets and too few targets.

To find , we can set to :

Now set :

With , we get:

0 comments

Comments sorted by top scores.