Newcomb's paradox complete solution.

post by Augs SMSHacks (augs-smshacks) · 2023-03-15T17:56:00.104Z · LW · GW · 13 comments

Here is the complete solution to Newcomb's paradox.

Setup:

Omega performs a brainscan on you at 12pm, and expects you to choose a box at 6pm. Based on the brainscan, Omega makes a prediction and classifies you into one of two categories: either you will take only 1 box, or you will take 2 boxes. Omega is very good at classifying brainscans.

Box A is transparent and contains £1k

Box B contains £1m if Omega thinks you are a 1-box person, or £0 if Omega thinks you are a 2-box person.

Do you choose to take both boxes, or only box B?

Note: I mention the brainscan to make things more concrete. The exact method Omega uses to make the prediction is not important.
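The payoffs in this setup can be written down as a small table. The following is only an illustrative sketch: the function name `payoff` and the string labels are my own and not part of the problem statement.

```python
# Payoff table for the setup above (amounts in pounds).
# Names and labels are illustrative assumptions, not part of the original problem.
BOX_A = 1_000            # transparent box, always contains £1k
BOX_B_FULL = 1_000_000   # contents of box B if Omega classified you as a 1-box person


def payoff(classification: str, action: str) -> int:
    """Return the winnings given Omega's classification and the boxes you take.

    classification: "1-box" or "2-box" (Omega's prediction at 12pm)
    action: "1-box" (take only box B) or "2-box" (take both boxes) at 6pm
    """
    valid = ("1-box", "2-box")
    if classification not in valid or action not in valid:
        raise ValueError("expected '1-box' or '2-box'")
    box_b = BOX_B_FULL if classification == "1-box" else 0
    box_a = BOX_A if action == "2-box" else 0
    return box_b + box_a


# Print all four combinations of classification and action.
for classification in ("1-box", "2-box"):
    for action in ("1-box", "2-box"):
        amount = payoff(classification, action)
        print(f"classified {classification}, takes {action}: £{amount:,}")
```

Which of these four cells you can actually reach depends on which case you are in, which is what the rest of the post goes through.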

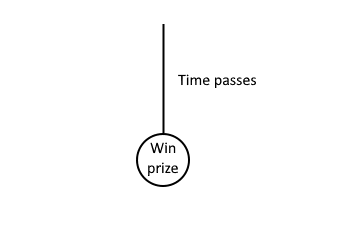

Case 1: You have no free will.

Then the question of which box you should take is moot since, without free will, you cannot make any such decision. The exact amount you win is out of your control.

The decision tree looks like this:

Case 2: You have some free will.

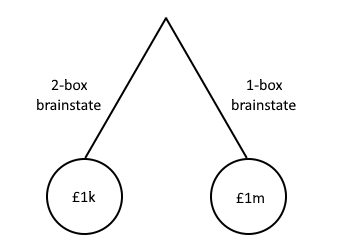

Case 2.1: You can change your brain state such that Omega will classify you as a 1-box or 2-box person, but are incapable of acting against the beliefs derived from your brainstate.

In this case, the optimal decision is to choose to believe in the 1-box strategy. This alters your brain state so that you will be classified as a 1-box person. Doing this, you will gain £1m. You would be incapable of believing in the 1-box strategy and then deciding to choose 2 boxes.

In this case the decision tree looks like:

Case 2.2: You cannot change your brain state, and are incapable of acting against your beliefs.

For all intents and purposes, this is the same as Case 1: you might have some free will, but not over the decisions that matter in this scenario.

Refer back to the decision trees in Case 1.

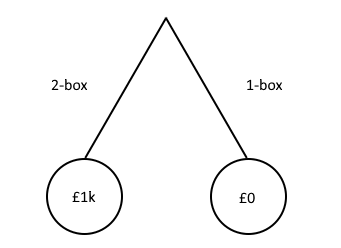

Case 2.3: You cannot change your brain state, but are capable of choosing to take either 1 or 2 boxes.

Case 2.3.1: Omega classifies you as a 1-box person.

In this case, you should take two boxes. According to the initial premise, this case should be extremely unlikely as Omega will have classified you incorrectly. But most formulations mention that it is possible for Omega to be wrong, so I will leave this in as this is a complete solution.

Case 2.3.2: Omega classifies you as a 2-box person.

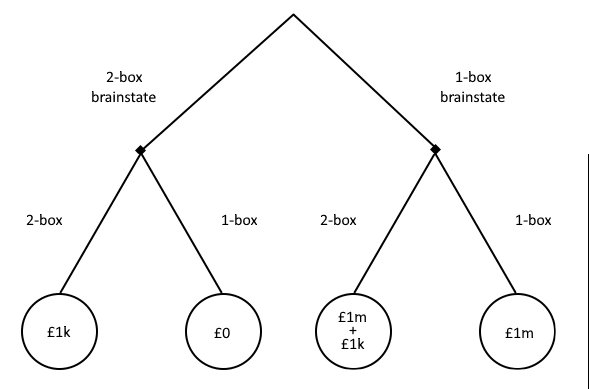

The decision tree looks like this:

Back to discussion of Case 2.3:

In this case, we will not know whether Omega classified us as a 2-box or 1-box person. Fortunately for us, the strategy for both 2.3.1 and 2.3.2 is the same: take 2 boxes.

Therefore the strategy for this case is: Take 2 boxes.
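This is a dominance argument, and it can be checked mechanically. The sketch below is my own illustration (the names `payoff` and `two_boxing_dominates` are hypothetical); it assumes the classification is already fixed and only the action is up to you.

```python
# Dominance check for Case 2.3: the classification is fixed, only the action is free.
# Function names are illustrative assumptions.

def payoff(classification: str, action: str) -> int:
    """Winnings in pounds for a given classification and action."""
    box_b = 1_000_000 if classification == "1-box" else 0
    box_a = 1_000 if action == "2-box" else 0
    return box_b + box_a


def two_boxing_dominates() -> bool:
    """True if taking 2 boxes pays strictly more under every classification."""
    return all(
        payoff(c, "2-box") > payoff(c, "1-box")
        for c in ("1-box", "2-box")
    )


# Extra winnings from taking both boxes, per classification.
gain = {c: payoff(c, "2-box") - payoff(c, "1-box") for c in ("1-box", "2-box")}

print(two_boxing_dominates())
print(gain)
```

Whatever Omega decided, taking both boxes adds exactly the £1k in box A, which is why the two sub-cases give the same answer.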

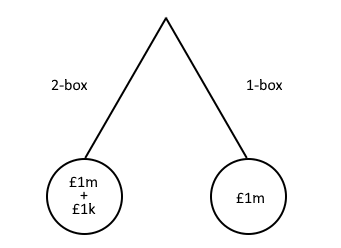

Case 2.4: You can change your brain state such that Omega will classify you as a 1-box or 2-box person, and are capable of acting against the beliefs derived from your brainstate.

This is the case with the most free will allowed to the person in this scenario. Note that this also goes against the premise somewhat, since it stipulates that you have the capability of fooling Omega. But again, I leave it here for completeness.

In this case, the decision tree is the most complicated:

Conclusion:

Here is a summary of all the different strategies depending on which universe you live in:

Case 1: No decision. Prize is outside of your control.

Case 2.1: Choose 1-box brainstate. Leads to taking 1-box and guaranteed £1m.

Case 2.2: No decision. Prize is outside of your control.

Case 2.3: Choose to take 2 boxes. Guaranteed £1k, £1m is outside of your control.

Case 2.4: Choose (1-box brainstate) -> (take 2 boxes). Guaranteed £1k + £1m.

In short, choose the 1-box brainstate if you can, and choose 2 boxes if you can.
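The summary above can be reproduced by brute force over the two yes/no questions (can you choose your brainstate, and can you act independently of it). This is a sketch under my own assumptions: Omega classifies you exactly according to your brainstate, a brainstate you cannot control is treated as worst case, and `best_plan` and its arguments are hypothetical names.

```python
from itertools import product

STATES = ("1-box", "2-box")


def payoff(brainstate: str, action: str) -> int:
    # Assumption: Omega classifies you exactly according to your brainstate.
    box_b = 1_000_000 if brainstate == "1-box" else 0
    box_a = 1_000 if action == "2-box" else 0
    return box_b + box_a


def best_plan(can_set_brainstate: bool, can_act_independently: bool):
    """Return (chosen brainstate or None, chosen action or None, guaranteed payoff).

    None means that part is not under your control.
    """
    state_choices = STATES if can_set_brainstate else (None,)
    action_choices = STATES if can_act_independently else (None,)
    best = None
    for state, action in product(state_choices, action_choices):
        # A brainstate you cannot choose could be either one: take the worst case.
        possible_states = (state,) if state is not None else STATES
        guaranteed = min(
            # Without independence, the action simply follows the brainstate.
            payoff(s, action if action is not None else s)
            for s in possible_states
        )
        if best is None or guaranteed > best[2]:
            best = (state, action, guaranteed)
    return best


cases = {
    "2.1": (True, False),
    "2.2": (False, False),
    "2.3": (False, True),
    "2.4": (True, True),
}
for name, flags in cases.items():
    print(name, best_plan(*flags))
```

For Case 2.2 the function returns no choices at all, matching "no decision"; the amount it reports there is just the worst outcome that could happen to you, not something you selected.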

Discussion of solution:

It is worth noting that which case you find yourself in is out of your hands; it depends on the capabilities of human intelligence and free will. In particular, whether you can willingly alter your brainstate is more a matter of psychology than decision theory.

In my opinion, given the wording of the problem, we are probably in universe Case 2.1, which is essentially a physicalist position, because I believe our actions are directly caused by our brainstate. This rules out cases 2.3 and 2.4, which both have the person taking actions which are independent of their physical brainstate. I could see a dualist believing that 2.3 or 2.4 are possible. I also believe in free will, which rules out case 2.2 and case 1. Essentially, it is impossible for humans to alter their brainstate without truly believing it, at which point they will be forced to take only 1 box.

I also think this problem is confusing to a lot of people, because it is not immediately clear that there are actually 2 decisions being made: choosing your brainstate, and choosing the number of boxes to take. These two become muddled together, and seem to cause a paradox, since we want a 1-box brainstate, but also want to take 2 boxes. TDT believers only see the first decision, CDT believers only see the second decision. The key here is realising there are two decisions. Whether humans are actually capable of making those decisions is up for debate, but that is not a question of decision theory.

13 comments


comment by Caspar Oesterheld (Caspar42) · 2023-03-15T20:06:09.637Z · LW(p) · GW(p)

Free will is a controversial, confusing term that, I suspect, different people take to mean different things. I think to most readers (including me) it is unclear what exactly the Case 1 versus 2 distinction means. (What physical property of the world differs between the two worlds? Maybe you mean not having free will to mean something very mundane, similar to how I don't have free will about whether to fly to Venus tomorrow because it's just not physically possible for me to fly to Venus, so I have to "decide" not to fly to Venus?)

I generally think that free will is not so relevant in Newcomb's problem. It seems that whether there is some entity somewhere in the world that can predict what I'm doing shouldn't make a difference for whether I have free will or not, at least if this entity isn't revealing its predictions to me before I choose. (I think this is also the consensus on this forum and in the philosophy literature on Newcomb's problem.)

>CDT believers only see the second decision. The key here is realising there are two decisions.

Free will aside, as far as I understand, your position is basically in line with what most causal decision theorists believe: You should two-box, but you should commit to one-boxing if you can do so before your brain is scanned. Is that right? (I can give some references to discussions of CDT and commitment if you're interested.)

If so, how do you feel about the various arguments that people have made against CDT? For example, what would you do in the following scenario?

>Two boxes, B1 and B2, are on offer. You may purchase one or none of the boxes but not both. Each of the two boxes costs $1. Yesterday, Omega put $3 in each box that she predicted you would not acquire. Omega's predictions are accurate with probability 0.75.

In this scenario, CDT always recommends buying a box, which seems like a bad idea because from the perspective of the seller of the boxes, they profit when you buy from them.
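To make the numbers concrete, here is a rough sketch (the function names are made up for illustration). It assumes the agent may hold any credences about Omega's prediction, and that "accurate with probability 0.75" means the box you buy was correctly predicted, and therefore left empty, three times out of four.

```python
from fractions import Fraction

PRICE = 1                   # cost of a box in dollars
PRIZE = 3                   # amount Omega puts in a box she predicts you won't buy
ACCURACY = Fraction(3, 4)   # probability that Omega predicts your purchase correctly


def cdt_value_of_best_box(p_predicted_b1: Fraction, p_predicted_b2: Fraction) -> Fraction:
    """Causal expected value of the better box, given credences over Omega's prediction.

    The remaining credence is that Omega predicted you would buy nothing.
    """
    p_predicted_none = 1 - p_predicted_b1 - p_predicted_b2
    # A box is full unless Omega predicted that you would buy that very box.
    p_b1_full = p_predicted_b2 + p_predicted_none
    p_b2_full = p_predicted_b1 + p_predicted_none
    return max(p_b1_full, p_b2_full) * PRIZE - PRICE


def actual_value_of_buying() -> Fraction:
    """Average result of buying a box: it is full only when Omega mispredicted."""
    return (1 - ACCURACY) * PRIZE - PRICE


print(cdt_value_of_best_box(Fraction(1, 2), Fraction(1, 2)))  # least favourable credences
print(actual_value_of_buying())
```

Because the two "full" probabilities always sum to at least 1, the better box looks worth at least $0.50 to a causal reasoner whatever they believe, while a buyer who is predicted with accuracy 0.75 loses $0.25 on average.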

>TDT believers only see the first decision, [...] The key here is realising there are two decisions.

I think proponents of TDT and especially Updateless Decision Theory and friends are fully aware of this possible "two-decisions" perspective. (Though typically Newcomb's problem is described as only having one of the two decision points, namely the second.) They propose that the correct way to make the second decision (after the brain scan) is to take the perspective of the first decision (or similar). Of course, one could debate whether this move is valid and this has been discussed (e.g., here, here, or here).

Also: Note that evidential decision theorists would argue that you should one-box in the second decision (after the brain scan) for reasons unrelated to the first-decision perspective. In fact, I think that most proponents of TDT/UDT/... would agree with this reasoning also, i.e., even if it weren't for the "first decision" perspective, they'd still favor one-boxing. (To really get the first decision/second decision conflict you need cases like counterfactual mugging.)

Replies from: TAG, augs-smshacks

↑ comment by TAG · 2023-03-16T16:03:57.856Z · LW(p) · GW(p)

>Free will is a controversial, confusing term that, I suspect, different people take to mean different things.

Different definitions of free will require freedom from different things. Much of the debate centres on Libertarian free will, which requires freedom from causal determinism. Compatibilist free will requires freedom from deliberate restrictions by other free agents. Contra causal free will requires freedom from physics, on the assumption that physics is deterministic.

Libertarian free will is very much the definition that is relevant to Newcomb. If you could make an undetermined choice, in defiance of the oracle's predictive abilities, you could get the extra money -- but if you can make undetermined choices, how can the predictor predict you?

Replies from: Caspar42

↑ comment by Caspar Oesterheld (Caspar42) · 2023-03-19T09:03:28.238Z · LW(p) · GW(p)

I agree that some notions of free will imply that Newcomb's problem is impossible to set up. But if one of these notions is what is meant, then the premise of Newcomb's problem is that these notions are false, right?

It also happens that I disagree with these notions as being relevant to what free will is.

Anyway, if this had been discussed in the original post, I wouldn't have complained.

↑ comment by TAG · 2023-03-19T22:28:32.631Z · LW(p) · GW(p)

>I agree that some notions of free will imply that Newcomb’s problem is impossible to set up. But if one of these notions is what is meant, then the premise of Newcomb’s problem is that these notions are false, right?

It's advertised as being a paradox, not a proof.

↑ comment by Augs SMSHacks (augs-smshacks) · 2023-03-15T20:48:30.357Z · LW(p) · GW(p)

>Free will is a controversial, confusing term that, I suspect, different people take to mean different things. I think to most readers (including me) it is unclear what exactly the Case 1 versus 2 distinction means. (What physical property of the world differs between the two worlds? Maybe you mean not having free will to mean something very mundane, similar to how I don't have free will about whether to fly to class tomorrow?)

Free will for the purposes of this article refers to the decisions freely available to the agent. In a world with no free will, the agent has no capability to change the outcome of anything. This is the point of my article, as the answer to Newcomb's paradox changes depending on which decisions we afford the agent. The distinction between the cases is that we are enumerating over the possible answers to the two following questions:

1) Can the agent decide their brainstate? Yes or No.

2) Can the agent decide the amount of boxes they choose independently of their brainstate? Yes or No.

This is why there are only 4 sub-cases of Case 2 to consider. I suppose you are right that case 1 is somewhat redundant, since it is covered by answering "no" to both of these questions.

>Two boxes, B1 and B2, are on offer. You may purchase one or none of the boxes but not both. Each of the two boxes costs $1. Yesterday, Omega put $3 in each box that she predicted you would not acquire. Omega's predictions are accurate with probability 0.75.

In this case, the wording of the problem seems to suggest that we implicitly assume the answer to (2) (or rather the equivalent statement for this scenario) is "No" and that the agent's actions are dependent on their brainstate, modelled by a probability distribution. The reason that this assumption is implicit in the question is that if the answer to (2) is "Yes" then the agent could wilfully act against Omega's prediction and break the 0.75 probability assumption which is stated in the premise.

Once again, if the answer to (1) is also "No" then the question is moot since we have no free will.

If the agent can decide their brainstate but we don't let them decide the number of boxes purchased independently of that brainstate:

Choice 1: Choose "no-boxes purchased" brainstate.

Omega puts $3 in both boxes.

You have a 0.75 probability of buying no boxes, and a 0.25 probability of buying some boxes. The question doesn't actually specify how that 0.25 is distributed between buying one box and buying both, so let's say it's 0.0833 for each of the other 3 possibilities.

Expected value: $0 * 0.75 + $2 * 0.0833 + $2 * 0.0833 + $4 * 0.0833 ≈ $0.667

Choice 2: Choose "1-box purchased" brainstate.

Omega puts $3 in the box you didn't plan on purchasing. You have a 0.75 probability of buying only the box you planned to buy (which is empty), but again the question doesn't specify how the remaining 0.25 is distributed. Assuming 0.0833 for each of the other 3 possibilities:

Expected value: $0 * 0.0833 - $1 * 0.75 + $2 * 0.0833 + $1 * 0.0833 ≈ -$0.50

Choice 3: Choose "2-box purchased" brainstate.

Omega puts no money in the boxes. You have a 0.75 probability of buying both boxes. Assuming again that the 0.25 is distributed evenly among the other possibilities:

Expected value: $0 * 0.0833 - $1 * 0.0833 - $1 * 0.0833 - $2 * 0.75 ≈ -$1.667

In this case they should choose the "no-boxes purchased" brainstate.
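These expected values can be double-checked with exact fractions. The sketch follows the assumptions I used in the calculation (buying both boxes is treated as possible, the chosen brainstate is acted on with probability 0.75, and the remaining 0.25 is split evenly over the other three outcomes); the helper names are just for illustration.

```python
from fractions import Fraction

PRICE, PRIZE = 1, 3
# Which boxes are bought under each outcome (also used as brainstate labels).
BOXES = {"none": set(), "B1": {"B1"}, "B2": {"B2"}, "both": {"B1", "B2"}}


def filled_boxes(brainstate: str) -> set:
    """Omega fills every box she predicts you will not buy."""
    return {"B1", "B2"} - BOXES[brainstate]


def net(brainstate: str, bought: str) -> int:
    """Net winnings in dollars when you buy `bought` after Omega read `brainstate`."""
    boxes = BOXES[bought]
    return PRIZE * len(boxes & filled_boxes(brainstate)) - PRICE * len(boxes)


def expected_value(brainstate: str) -> Fraction:
    p_match = Fraction(3, 4)       # you act as your brainstate predicts
    p_other = (1 - p_match) / 3    # assumption: even split over the other outcomes
    return sum(
        (p_match if bought == brainstate else p_other) * net(brainstate, bought)
        for bought in BOXES
    )


for state in ("none", "B1", "both"):
    print(state, expected_value(state))
```

The exact values come out as 2/3, -1/2 and -5/3, in line with the rounded figures above.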

If we were to break the premise of the question, and allow the agent to be able to choose their brainstate, and also choose the following action independently from that brain state, the optimal decision would be (no-boxes brainstate) -> (buy both boxes)

I think this question is pretty much analogous to the original version of Newcomb's problem, just with an extra layer of probability that complicates the calculations, but doesn't provide any more insight. It's still the same trickery where the apparent paradox emerges because it's not immediately obvious that there are secretly 2 decisions being made, and the question is ambiguous because it's not clear which decisions are actually afforded to the agent.

comment by Rafael Harth (sil-ver) · 2023-03-17T11:36:35.091Z · LW(p) · GW(p)

TAG said that Libertarian Free Will is the relevant one for Newcomb's problem. I think this is true. However, I strongly suspect that most people who write about decision theory, at least on LW, agree that LFW doesn't exist. So arguably almost the entire problem is about analyzing Newcomb's problem in a world without LFW. (And ofc, a big part of the work is not to decide which action is better, but to formalize procedures that output that action.)

This is why differentiating different forms of Free Will and calling that a "Complete Solution" is dubious. It seems to miss the hard part of the problem entirely. (And the hard problem has arguably been solved anyway with Functional/Updateless Decision Theory. Not in the sense that there are no open problems, but that they don't involve Newcomb's problem.)

Replies from: augs-smshacks, TAG

↑ comment by Augs SMSHacks (augs-smshacks) · 2023-03-17T19:48:09.212Z · LW(p) · GW(p)

Personally I don't believe that the problem is actually hard. None of the individual cases are hard; the decisions are pretty simple once we go into each case. Rather, I think this question is a philosophical bamboozle: it is really a question about human capabilities that disguises itself as a problem about decision theory.

As I talk about in the post, the answer to the question changes depending on which decisions we afford the agent. Once that has been determined, it is just a matter of using min-max to find the optimal decision process. So people's disagreements aren't actually about decision theory, rather just disagreements about which choices are available to us.

If you allow the agent to decide their brainstate and also act independently from it, then it is easy to see the best solution is (1-box brainstate) -> (Take 2 boxes). People who say "that's not possible because Omega is a perfect predictor" are not actually disagreeing about the decision theory; they are disagreeing about whether humans are capable of doing that.

Replies from: TAG

↑ comment by TAG · 2023-03-17T20:38:31.383Z · LW(p) · GW(p)

What use is a decision theory that tells you to do things you can't do?

Maybe the paradox is bamboozling, maybe it's deconfusing - revealing that DT depends on physics and metaphysics, not just maths.

Replies from: augs-smshacks

↑ comment by Augs SMSHacks (augs-smshacks) · 2023-03-27T15:38:06.263Z · LW(p) · GW(p)

Well the decision theory is only applied after assessing the available options, so it won't tell you to do things you aren't capable of doing.

I suppose the bamboozle here is that it seems like a DT question, but, as you point out, it's actually a question about physics. However, even in this thread, people dismiss any questions about that as being "not important", and instead try to focus on the decision theory, which isn't actually relevant here.

For example:

>I generally think that free will is not so relevant in Newcomb's problem. It seems that whether there is some entity somewhere in the world that can predict what I'm doing shouldn't make a difference for whether I have free will or not, at least if this entity isn't revealing its predictions to me before I choose.

↑ comment by TAG · 2023-03-17T13:52:42.004Z · LW(p) · GW(p)

>I strongly suspect that most people who write about decision theory, at least on LW, agree that LFW doesn’t exist. So arguably almost the entire problem is about analyzing Newcomb’s problem in a world without LFW.

"Is believed to be", not "is".

If you believe in determinism, that's belief, not knowledge, so it doesn't solve anything.

>This is why differentiating different forms of Free Will and calling that a “Complete Solution” is dubious.

It's equally dubious to ignore the free will component entirely.

The paradox is a paradox because it implies some kind of choice, but also some kind of determinism via the predictor's ability to predict.

comment by TAG · 2023-03-16T15:05:28.923Z · LW(p) · GW(p)

>Then the question of which box you should take is moot since, without free will, you cannot make any such decision. The exact amount you win is out of your control.

Edited:

Under determinism, the question of which strategy is best can be answered without any assumptions about free choice: you can think of sets of pre-existing agents, which make different decisions, or adopt different strategies deterministically -- in this case, OneBoxBots and TwoBoxBots -- and you can make claims about what results they get, without any of them deciding anything or doing anything differently.

But free choice is still highly relevant to the problem as a whole.

Replies from: augs-smshacks

↑ comment by Augs SMSHacks (augs-smshacks) · 2023-03-16T18:12:00.664Z · LW(p) · GW(p)

I agree. However I think that case is trivial because a OneBoxBot would get the 1-box prize, and a TwoBoxBot would get the 2-box prize, assuming the premise of the question is actually true.

Replies from: TAG