Latent Variables and Model Mis-Specification

post by jsteinhardt · 2018-11-07T14:48:40.434Z · LW · GW · 8 comments

Posted as part of the AI Alignment Forum sequence on Value Learning.

Rohin's note: So far, we’ve seen that ambitious value learning needs to understand human biases, and that we can't simply learn the biases in tandem with the reward. Perhaps we could hardcode a specific model of human biases? Such a model is likely to be incomplete and inaccurate, but it will perform better than assuming an optimal human, and as we notice failure modes we can improve the model. In the language of this post by Jacob Steinhardt (original here), we are using a mis-specified human model. The post talks about why model mis-specification is worse than it may seem at first glance.

This post is fairly technical and may not be accessible if you don’t have a background in machine learning. If so, you can skip this post and still understand the rest of the posts in the sequence. However, if you want to do ML-related safety research, I strongly recommend putting in the effort to understand the problems that can arise with mis-specification.

Machine learning is very good at optimizing predictions to match an observed signal — for instance, given a dataset of input images and labels of the images (e.g. dog, cat, etc.), machine learning is very good at correctly predicting the label of a new image. However, performance can quickly break down as soon as we care about criteria other than predicting observables. There are several cases where we might care about such criteria:

- In scientific investigations, we often care less about predicting a specific observable phenomenon, and more about what that phenomenon implies about an underlying scientific theory.

- In economic analysis, we are most interested in what policies will lead to desirable outcomes. This requires predicting what would counterfactually happen if we were to enact the policy, which we (usually) don’t have any data about.

- In machine learning, we may be interested in learning value functions which match human preferences (this is especially important in complex settings where it is hard to specify a satisfactory value function by hand). However, we are unlikely to observe information about the value function directly, and instead must infer it implicitly. For instance, one might infer a value function for autonomous driving by observing the actions of an expert driver.

In all of the above scenarios, the primary object of interest (the scientific theory, the effects of a policy, and the value function, respectively) is not part of the observed data. Instead, we can think of it as an unobserved (or “latent”) variable in the model we are using to make predictions. While we might hope that a model that makes good predictions will also place correct values on unobserved variables, this need not be the case in general, especially if the model is mis-specified.

I am interested in latent variable inference because I think it is a potentially important sub-problem for building AI systems that behave safely and are aligned with human values. The connection is most direct for value learning, where the value function is the latent variable of interest and the fidelity with which it is learned directly impacts the well-behavedness of the system. However, one can imagine other uses as well, such as making sure that the concepts that an AI learns sufficiently match the concepts that the human designer had in mind. It will also turn out that latent variable inference is related to counterfactual reasoning, which has a large number of tie-ins with building safe AI systems that I will elaborate on in forthcoming posts.

The goal of this post is to explain why problems show up if one cares about predicting latent variables rather than observed variables, and to point to a research direction (counterfactual reasoning) that I find promising for addressing these issues. More specifically, in the remainder of this post, I will: (1) give some formal settings where we want to infer unobserved variables and explain why we can run into problems; (2) propose a possible approach to resolving these problems, based on counterfactual reasoning.

1 Identifying Parameters in Regression Problems

Suppose that we have a regression model $p_\theta(y \mid x)$, which outputs a probability distribution over $y$ given a value for $x$. Also suppose we are explicitly interested in identifying the “true” value of $\theta$ rather than simply making good predictions about $y$ given $x$. For instance, we might be interested in whether smoking causes cancer, and so we care not just about predicting whether a given person will get cancer ($y$) given information about that person ($x$), but specifically whether the coefficients in $\theta$ that correspond to a history of smoking are large and positive.

In a typical setting, we are given data points $(x_1, y_1), \ldots, (x_n, y_n)$ on which to fit a model. Most methods of training machine learning systems optimize predictive performance, i.e. they will output a parameter $\hat{\theta}$ that (approximately) maximizes $\sum_{i=1}^n \log p_\theta(y_i \mid x_i)$. For instance, for a linear regression problem we have $\log p_\theta(y \mid x) = -(y - \langle \theta, x \rangle)^2$ (up to scaling and an additive constant). Various more sophisticated methods might employ some form of regularization to reduce overfitting, but they are still fundamentally trying to maximize some measure of predictive accuracy, at least in the limit of infinite data.

Call a model well-specified if there is some parameter $\theta^*$ for which $p_{\theta^*}(y \mid x)$ matches the true distribution over $y$, and call a model mis-specified if no such $\theta^*$ exists. One can show that for well-specified models, maximizing predictive accuracy works well (modulo a number of technical conditions). In particular, maximizing $\sum_{i=1}^n \log p_\theta(y_i \mid x_i)$ will (asymptotically, as $n \to \infty$) lead to recovering the parameter $\theta^*$.
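As a minimal sketch of the well-specified case, the snippet below simulates data from a one-parameter linear model and recovers the parameter by maximizing the squared-error form of the log-likelihood. The data-generating values (`theta_star = 2.0`, unit Gaussian noise, inputs uniform on [-1, 1]) and the helper names are assumptions chosen for illustration, not part of the original post.

```python
import random

def fit_theta(xs, ys):
    """Maximize sum_i -(y_i - theta * x_i)^2 over a scalar theta.

    Setting the derivative to zero gives the closed form
    theta_hat = sum(x*y) / sum(x*x).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / sxx

def simulate(n, theta_star=2.0, noise=1.0, seed=0):
    """Draw n points from the (assumed) true model y = theta_star * x + noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [theta_star * x + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

# The model family contains the truth, so the estimate should approach
# theta_star as the number of data points grows.
for n in (100, 20000):
    xs, ys = simulate(n)
    print(n, fit_theta(xs, ys))
```

Because the fitted family contains the data-generating distribution here, more data simply tightens the estimate around `theta_star`; the rest of the post is about what happens when that assumption fails.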

However, if a model is mis-specified, then it is not even clear what it means to correctly infer $\theta$. We could declare the $\theta$ maximizing predictive accuracy to be the “correct” value of $\theta$, but this has issues:

- While $\theta$ might do a good job of predicting $y$ in the settings we’ve seen, it may not predict $y$ well in very different settings.

- If we care about determining $\theta$ for some scientific purpose, then good predictive accuracy may be an unsuitable metric. For instance, even though margarine consumption might correlate well with (and hence be a good predictor of) divorce rate, that doesn’t mean that there is a causal relationship between the two.

The two problems above also suggest a solution: we will say that we have done a good job of inferring a value for $\theta$ if $\theta$ can be used to make good predictions in a wide variety of situations, and not just the situation we happened to train the model on. (For the latter case of predicting causal relationships, the “wide variety of situations” should include the situation in which the relevant causal intervention is applied.)

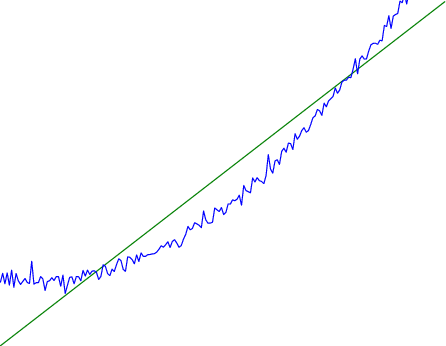

Note that both of the problems above are different from the typical statistical problem of overfitting. Classically, overfitting occurs when a model is too complex relative to the amount of data at hand, but even if we have a large amount of data the problems above could occur. This is illustrated in the following graph:

Here the blue line is the data we have $(x, y)$, and the green line is the model we fit (with slope and intercept parametrized by $\theta$). We have more than enough data to fit a line to it. However, because the true relationship is quadratic, the best linear fit depends heavily on the distribution of the training data. If we had fit to a different part of the quadratic, we would have gotten a potentially very different result. Indeed, in this situation, there is no linear relationship that can do a good job of extrapolating to new situations, unless the domain of those new situations is restricted to the part of the quadratic that we’ve already seen.

I will refer to the type of error in the diagram above as mis-specification error. Again, mis-specification error is different from error due to overfitting. Overfitting occurs when there is too little data and noise is driving the estimate of the model; in contrast, mis-specification error can occur even if there is plenty of data, and instead occurs because the best-performing model is different in different scenarios.
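To make mis-specification error concrete, here is a small sketch that fits a line to noise-free samples of a quadratic on two different input ranges. The true function $y = x^2$, the ranges, and the helper names are assumptions chosen for illustration. There is no noise and plenty of data, so nothing here is overfitting, yet the fitted slope depends entirely on where the training inputs lie.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def grid(lo, hi, n=10001):
    """Evenly spaced inputs on [lo, hi]."""
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]

def true_f(x):
    """Assumed true relationship: quadratic, outside the linear family."""
    return x * x

def fit_on(lo, hi):
    xs = grid(lo, hi)
    return fit_line(xs, [true_f(x) for x in xs])

slope_a, intercept_a = fit_on(0.0, 1.0)  # training inputs in [0, 1]
slope_b, intercept_b = fit_on(2.0, 3.0)  # training inputs in [2, 3]

print("fit on [0, 1]:", slope_a, intercept_a)
print("fit on [2, 3]:", slope_b, intercept_b)
# Extrapolating the first fit to x = 3, where the true value is 9:
print("prediction at x = 3:", slope_a * 3.0 + intercept_a)
```

For evenly spaced inputs on $[a, b]$ the best-fit slope for $x^2$ works out to $a + b$, so the two fits disagree (slopes of about 1 and 5) no matter how many points are used.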

2 Structural Equation Models

We will next consider a slightly subtler setting, which in economics is referred to as a structural equation model. In this setting we again have an output $y$ whose distribution depends on an input $x$, but now this relationship is mediated by an unobserved variable $z$. A common example is a discrete choice model, where consumers make a choice among multiple goods ($y$) based on a consumer-specific utility function ($z$) that is influenced by demographic and other information about the consumer ($x$). Natural language processing provides another source of examples: in semantic parsing, we have an input utterance ($x$) and output denotation ($y$), mediated by a latent logical form $z$; in machine translation, we have input and output sentences ($x$ and $y$) mediated by a latent alignment ($z$).

Symbolically, we represent a structural equation model as a parametrized probability distribution $p_\theta(y, z \mid x)$, where we are trying to fit the parameters $\theta$. Of course, we can always turn a structural equation model into a regression model by using the identity $p_\theta(y \mid x) = \sum_z p_\theta(y, z \mid x)$, which allows us to ignore $z$ altogether. In economics this is called a reduced form model. We use structural equation models if we are specifically interested in the unobserved variable $z$ (for instance, in the examples above we are interested in the value function for each individual, or in the logical form representing the sentence’s meaning).

In the regression setting where we cared about identifying $\theta$, it was obvious that there was no meaningful “true” value of $\theta$ when the model was mis-specified. In this structural equation setting, we now care about the latent variable $z$, which can take on a meaningful true value (e.g. the actual utility function of a given individual) even if the overall model is mis-specified. It is therefore tempting to think that if we fit parameters $\theta$ and use them to impute $z$, we will have meaningful information about the actual utility functions of individual consumers. However, this is a notational sleight of hand: just because we call $z$ “the utility function” does not make it so. The variable $z$ need not correspond to the actual utility function of the consumer, nor do the consumer’s preferences even need to be representable by a utility function.
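One way to see why the label on a latent variable carries no guarantee: two structural models can share exactly the same reduced form while imputing opposite values for the latent variable. The sketch below uses two hypothetical joint tables over a drink choice and a latent preference for a single fixed input; all numbers and labels are invented for illustration.

```python
# Two hypothetical joint distributions p(y, z | x) for one fixed input x.
# y is the observed drink, z is the latent "preference" label.
joint_a = {
    ("tea", "prefers_hot"): 0.35, ("tea", "prefers_cold"): 0.10,
    ("soda", "prefers_hot"): 0.05, ("soda", "prefers_cold"): 0.50,
}
joint_b = {
    ("tea", "prefers_hot"): 0.10, ("tea", "prefers_cold"): 0.35,
    ("soda", "prefers_hot"): 0.50, ("soda", "prefers_cold"): 0.05,
}

def reduced_form(joint):
    """Marginalize out z: p(y | x) = sum_z p(y, z | x)."""
    marginal = {}
    for (y, _z), p in joint.items():
        marginal[y] = marginal.get(y, 0.0) + p
    return marginal

def impute_z(joint, y_obs):
    """Posterior over z given the observed y: p(z | x, y) = p(y, z | x) / p(y | x)."""
    p_y = reduced_form(joint)[y_obs]
    return {z: p / p_y for (y, z), p in joint.items() if y == y_obs}

# Identical predictions about the observable y ...
print(reduced_form(joint_a), reduced_form(joint_b))
# ... but very different conclusions about the latent z.
print(impute_z(joint_a, "tea"), impute_z(joint_b, "tea"))
```

Predictive accuracy on $y$ cannot tell these two models apart, so whatever makes one imputation of $z$ more "correct" than the other has to come from somewhere other than the fit to observed data.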

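We can understand what goes wrong by considering the following procedure, which formalizes the proposal above:

- Find $\theta$ to maximize the predictive accuracy on the observed data, $\sum_{i=1}^n \log p_\theta(y_i \mid x_i)$, where $p_\theta(y_i \mid x_i) = \sum_z p_\theta(y_i, z \mid x_i)$. Call the result $\theta_0$.

- Using this value $\theta_0$, treat $z_i$ as being distributed according to $p_{\theta_0}(z \mid x_i, y_i)$. On a new value $x_+$ for which $y$ is not observed, treat $z_+$ as being distributed according to $p_{\theta_0}(z \mid x_+)$.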
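The two steps above can be sketched for a deliberately tiny model. Everything specific here is an assumption made for illustration: the latent variable is binary, the input is ignored, the conditional probabilities of buying a cold drink given the latent preference are fixed by hand, and the fit uses a simple grid search rather than any particular optimizer.

```python
import math

# Assumed (hypothetical) structural model with a binary latent z:
#   p_theta(z = 1)   = theta   (z = 1 means "prefers cold drinks")
#   p(y = 1 | z = 1) = 0.9     (y = 1 means "bought a cold drink")
#   p(y = 1 | z = 0) = 0.2
P_Y1_GIVEN_Z = {1: 0.9, 0: 0.2}

def p_y1(theta):
    """Reduced form: p_theta(y = 1) = sum_z p_theta(z) * p(y = 1 | z)."""
    return theta * P_Y1_GIVEN_Z[1] + (1.0 - theta) * P_Y1_GIVEN_Z[0]

def log_likelihood(theta, n_cold, n_hot):
    """Marginal log-likelihood of the observed choices."""
    p = p_y1(theta)
    return n_cold * math.log(p) + n_hot * math.log(1.0 - p)

def fit_theta(n_cold, n_hot, grid_size=101):
    """Step 1: pick theta on a grid to maximize predictive accuracy."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    return max(grid, key=lambda th: log_likelihood(th, n_cold, n_hot))

def impute_z(theta, y):
    """Step 2: posterior p_theta(z = 1 | y) by Bayes' rule."""
    like1 = P_Y1_GIVEN_Z[1] if y == 1 else 1.0 - P_Y1_GIVEN_Z[1]
    like0 = P_Y1_GIVEN_Z[0] if y == 1 else 1.0 - P_Y1_GIVEN_Z[0]
    num = theta * like1
    return num / (num + (1.0 - theta) * like0)

theta_0 = fit_theta(n_cold=55, n_hot=45)
print("theta_0:", theta_0)
print("p(z = 1 | bought cold):", impute_z(theta_0, 1))
print("p(z = 1 | bought hot):", impute_z(theta_0, 0))
```

The imputed posterior is only as trustworthy as the hand-fixed conditional probabilities: the fit will happily return some value of `theta_0` that matches the observed frequencies whether or not those assumptions describe real consumers.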

As before, if the model is well-specified, one can show that such a procedure asymptotically outputs the correct probability distribution over $z$. However, if the model is mis-specified, things can quickly go wrong. For example, suppose that $y$ represents what choice of drink a consumer buys, and $z$ represents consumer utility (which might be a function of the price, attributes, and quantity of the drink). Now suppose that individuals have preferences which are influenced by unmodeled covariates: for instance, a preference for cold drinks on warm days, while the input $x$ does not have information about the outside temperature when the drink was bought. This could cause any of several effects:

- If there is a covariate that happens to correlate with temperature in the data, then we might conclude that that covariate is predictive of preferring cold drinks.

- We might increase our uncertainty about $z$ to capture the unmodeled variation in $x$.

- We might implicitly increase uncertainty by moving utilities closer together (allowing noise or other factors to more easily change the consumer’s decision).

In practice we will likely have some mixture of all of these, and this will lead to systematic biases in our conclusions about the consumers’ utility functions.
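The first effect in the list can be worked through exactly with a small population-level calculation. All numbers below are hypothetical: temperature is hidden, a binary covariate (say, whether the consumer is wearing sunglasses) merely correlates with it, and the true preference for cold drinks depends only on temperature. With a binary covariate, a logistic model with an intercept and one slope can match the observed conditional frequencies exactly, so its infinite-data fit has a closed form.

```python
import math

def logit(p):
    return math.log(p / (1.0 - p))

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

# Hypothetical ground truth. Temperature t is NOT available to the model.
P_WARM = 0.5
P_X1_GIVEN_T = {"warm": 0.8, "cold": 0.2}          # covariate correlates with t
P_COLD_DRINK_GIVEN_T = {"warm": 0.9, "cold": 0.2}  # choice depends on t only

def p_t(t):
    return P_WARM if t == "warm" else 1.0 - P_WARM

def p_y1_given_x(x):
    """Observed P(cold drink | x), with the hidden temperature marginalized out."""
    num = den = 0.0
    for t in ("warm", "cold"):
        p_x = P_X1_GIVEN_T[t] if x == 1 else 1.0 - P_X1_GIVEN_T[t]
        weight = p_t(t) * p_x
        num += weight * P_COLD_DRINK_GIVEN_T[t]
        den += weight
    return num / den

# Infinite-data fit of the mis-specified model
# P(cold drink | x) = sigmoid(theta0 + theta1 * x).
theta0 = logit(p_y1_given_x(0))
theta1 = logit(p_y1_given_x(1)) - theta0
print("fitted utility weight on the covariate:", theta1)

# Intervention: give everyone x = 1 without changing the weather.
model_prediction = sigmoid(theta0 + theta1)
truth = sum(p_t(t) * P_COLD_DRINK_GIVEN_T[t] for t in ("warm", "cold"))
print("model predicts:", model_prediction, "true share:", truth)
```

The fitted model assigns a large positive "utility" to a covariate that has no effect on preferences, and it predicts a 76% cold-drink share under the intervention when the true share stays at 55%. The fit to the observed data is perfect throughout.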

The same problems as before arise: while we by design place probability mass on values of $z$ that correctly predict the observation $y$, under model mis-specification this could be due to spurious correlations or other perversities of the model. Furthermore, even though predictive performance is high on the observed data (and data similar to the observed data), there is no reason for this to continue to be the case in settings very different from the observed data, which is particularly problematic if one is considering the effects of an intervention. For instance, while inferring preferences between hot and cold drinks might seem like a silly example, the design of timber auctions constitutes a much more important example with a roughly similar flavour, where it is important to correctly understand the utility functions of bidders in order to predict their behaviour under alternative auction designs (the model is also more complex, allowing even more opportunities for mis-specification to cause problems).

3 A Possible Solution: Counterfactual Reasoning

In general, under model mis-specification we have the following problems:

- It is often no longer meaningful to talk about the “true” value of a latent variable (or at the very least, not one within the specified model family).

- Even when there is a latent variable $z$ with a well-defined meaning, the imputed distribution over $z$ need not match reality.

We can make sense of both of these problems by thinking in terms of counterfactual reasoning. Without defining it too formally, counterfactual reasoning is the problem of making good predictions not just in the actual world, but in a wide variety of counterfactual worlds that “could” exist. (I recommend this paper as a good overview for machine learning researchers.)

While typically machine learning models are optimized to predict well on a specific distribution, systems capable of counterfactual reasoning must make good predictions on many distributions (essentially any distribution that can be captured by a reasonable counterfactual). This stronger guarantee allows us to resolve many of the issues discussed above, while still thinking in terms of predictive performance, which historically seems to have been a successful paradigm for machine learning. In particular:

- While we can no longer talk about the “true” value of $\theta$, we can say that a value of $\theta$ is a “good” value if it makes good predictions on not just a single test distribution, but many different counterfactual test distributions. This allows us to have more confidence in the generalizability of any inferences we draw based on $\theta$ (for instance, if $\theta$ is the coefficient vector for a regression problem, any variable with positive sign is likely to robustly correlate with the response variable for a wide variety of settings).

- The imputed distribution over a variable $z$ must also lead to good predictions for a wide variety of distributions. While this does not force $z$ to match reality, it is a much stronger condition and does at least mean that any aspect of $z$ that can be measured in some counterfactual world must correspond to reality. (For instance, any aspect of a utility function that could at least counterfactually result in a specific action would need to match reality.)

- We will successfully predict the effects of an intervention, as long as that intervention leads to one of the counterfactual distributions considered.

(Note that it is less clear how to actually train models to optimize counterfactual performance, since we typically won’t observe the counterfactuals! But it does at least define an end goal with good properties.)
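As a rough sketch of what scoring a parameter across several counterfactual test distributions could look like, the snippet below compares two linear fits to a quadratic by their worst-case error over a family of input ranges. It assumes, unrealistically, that we can evaluate on every range in the family; the quadratic, the ranges, and the helper names are all invented for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def grid(lo, hi, n=2001):
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]

def true_f(x):
    """Assumed true relationship (quadratic)."""
    return x * x

def mse(params, lo, hi):
    """Mean squared error of a fitted line on inputs spread over [lo, hi]."""
    slope, intercept = params
    xs = grid(lo, hi)
    return sum((true_f(x) - (slope * x + intercept)) ** 2 for x in xs) / len(xs)

def worst_case_mse(params, ranges):
    """Score a parameter by its worst error across all test distributions."""
    return max(mse(params, lo, hi) for lo, hi in ranges)

# Hypothetical family of counterfactual test distributions.
TEST_RANGES = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0)]

def fit_on(lo, hi):
    xs = grid(lo, hi)
    return fit_line(xs, [true_f(x) for x in xs])

narrow = fit_on(0.0, 1.0)  # trained on a single distribution
pooled = fit_on(0.0, 3.0)  # trained on data covering the whole family

print("worst case, narrow fit:", worst_case_mse(narrow, TEST_RANGES))
print("worst case, pooled fit:", worst_case_mse(pooled, TEST_RANGES))
```

Under this criterion the pooled fit is the better value of the parameter even though the narrow fit is more accurate on its own training range. Neither is "true", since the family contains no correct model, but only one of them degrades gracefully across the distributions considered.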

Many people have a strong association between the concepts of “counterfactual reasoning” and “causal reasoning”. It is important to note that these are distinct ideas; causal reasoning is a type of counterfactual reasoning (where the counterfactuals are often thought of as centered around interventions), but I think of counterfactual reasoning as any type of reasoning that involves making robustly correct statistical inferences across a wide variety of distributions. On the other hand, some people take robust statistical correlation to be the definition of a causal relationship, and thus do consider causal and counterfactual reasoning to be the same thing.

I think that building machine learning systems that can do a good job of counterfactual reasoning is likely to be an important challenge, especially in cases where reliability and safety are important, and necessitates changes in how we evaluate machine learning models. In my mind, while the Turing test has many flaws, one thing it gets very right is the ability to evaluate the accuracy of counterfactual predictions (since dialogue provides the opportunity to set up counterfactual worlds via shared hypotheticals). In contrast, most existing tasks focus on repeatedly making the same type of prediction with respect to a fixed test distribution. This latter type of benchmarking is of course easier and more clear-cut, but fails to probe important aspects of our models. I think it would be very exciting to design good benchmarks that require systems to do counterfactual reasoning, and I would even be happy to incentivize such work monetarily.

Acknowledgements

Thanks to Michael Webb, Sindy Li, and Holden Karnofsky for providing feedback on drafts of this post. If any readers have additional feedback, please feel free to send it my way.

8 comments


comment by Ilya Shpitser (ilya-shpitser) · 2018-11-07T16:17:32.617Z

Hi Jacob. We (@JHU) read your paper on problems with ML recently!

---

"On the other hand, some people take robust statistical correlation to be the definition of a causal relationship, and thus do consider causal and counterfactual reasoning to be the same thing."

These people would be wrong, because if A <- U -> B, A and B are robustly correlated (due to a spurious association via U), but intuitively we would not call this association causal. Example: A and B are eye color of siblings. Pretty stable, but not causal.

However, I don't see how the former part of the above sentence implies the part after "thus."

---

Causal and counterfactual reasoning intersect, but neither is a subset of the other. An example of counterfactual reasoning I do that isn't causal is missing data. An example of causal reasoning that isn't counterfactual is stuff Phil Dawid does.

---

If you are worried about robustness to model misspecification, you may be interested in reading about multiply robust methods based on theory of semi-parametric statistical models and influence functions. My friends and I have some papers on this. Here is the original paper (first author at JHU now) showing double ("two choose one") robustness in a missing data context:

https://pdfs.semanticscholar.org/e841/fa3834e787092e4266e9484158689405b7b0.pdf

Here is a paper on mediation analysis I was involved in that gets "three choose two" robustness:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4710381/

---

I don't know how counterfactuals get you around model misspecification. My take is, counterfactuals are something that might be of primary interest sometimes, in which case model specification is one issue you have to worry about.

↑ comment by Charlie Steiner · 2020-11-19T02:29:40.910Z

Just ended up reading your paper (well, a decent chunk of it), so thanks for the pointer :)

comment by Adhyyan Narang (adhyyan-narang) · 2022-06-27T23:01:32.650Z

Hi Jacob, I really enjoyed this post, thank you!

However, it appears that the problem you describe of non-robust predictive performance can also take place under a well-specified model with insufficient data. For instance, my recent paper https://arxiv.org/abs/2109.13215 presents a toy example where a well-specified overparameterized interpolator may perform well on a classification task but poorly when the data is allowed to be adversarially perturbed.

Then, it appears to me that the problem of incorrectly identifying latents is not a consequence of misspecification, but more a consequence of the limitation of the data. Either the data is not plentiful enough (which would cause problems in even a well-specified model) or the data is plentiful but not rich enough to identify latents (which would happen only in a misspecified model).

↑ comment by Antoine de Scorraille (Etoile de Scauchy) · 2022-12-03T22:16:44.092Z

Is the adversarial perturbation not, in itself, a mis-specification? If not, I would be glad to have your intuitive explanation of it.

comment by Rafael Harth (sil-ver) · 2020-09-02T17:09:11.958Z

I'm highly confused by this part:

"If we care about determining $\theta$ for some scientific purpose, then good predictive accuracy may be an unsuitable metric. For instance, even though margarine consumption might correlate well with (and hence be a good predictor of) divorce rate, that doesn’t mean that there is a causal relationship between the two."

So, the problem here is that we have so much data that a correlation exists by sheer coincidence. If we allow a model to find this correlation anyway, then this just seems like basic overfitting. I don't see any different mechanism at work here.

Conversely, if this is trying to make a point about correlation vs. causation, why choose an example where the correlation isn't real? I mean, presumably we don't expect margarine consumption to be a predictor for divorce rate in the future. (I wouldn't have been too surprised if there was a real correlation because both are caused by some third factor, but the site this links to lists other cases where the correlation is clearly coincidental, so I assume this one is, too.) Moreover, if it is about correlation vs. causation, this also seems to be unsolvable at the level of parameter selection.

And the thing is that the only other problem you've named of "declare the $\theta$ maximizing predictive accuracy to be the “correct” value of $\theta$" is

"While $\theta$ might do a good job of predicting $y$ in the settings we’ve seen, it may not predict $y$ well in very different settings."

Which (correct me if I'm wrong) also seems completely unsolvable at the level of parameter selection. In the example of the parametric curve, it could be solved at the level of model selection, but the description seems to point to the level of data selection.

So, I don't actually understand how either of these is an argument against optimizing for predictive accuracy.

comment by RogerDearnaley (roger-d-1) · 2023-12-06T07:17:45.780Z

If you want to do value learning, you need an AI strong enough to do STEM work, because that's what value learning is: a full-blown research project (in Anthropology). So you need something comparable to AIXI (except computationally bounded so approximately rather than exactly Bayesian), however with the utility function also learnt along with the behavior of the universe, not hard-coded as in AIXI. Unlike a regression model, that system isn't going to be stuck trying to do a linear fit to an obviously parabolic+noise dataset: it will consider alternative hypotheses about what type of curve-fit to use, and rapidly Bayesian-update to the fact that a quadratic fit is optimal. So it will consider alternative models until it finds one that appears to be well-specified.

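comment by Antoine de Scorraille (Etoile de Scauchy) · 2022-12-03T22:21:40.596Z

"Find $\theta$ to maximize the predictive accuracy on the observed data, $\sum_{i=1}^{z} \log p_\theta(y_i \mid x_i)$, where $p_\theta(y_i \mid x_i) = \sum_z p_\theta(y_i, z \mid x_i)$. Call the result $\theta_0$."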

Isn't the z in the sum on the left a typo? I think it should be n

comment by Chantiel · 2021-08-02T23:00:12.871Z

"Call a model well-specified if there is some parameter $\theta^*$ for which $p_{\theta^*}(y \mid x)$ matches the true distribution over $y$, and call a model mis-specified if no such $\theta^*$ exists."

Is it even possible to come up with a well-specified model of human behavior or preferences? I wouldn't be surprised if creating a model of a person with perfect predictive accuracy would require you to model every neuron in their brain to figure out what exactly would be done in every situation. But I don't think realistic AIs would even have the storage space to represent a single model like that.

"In the regression setting where we cared about identifying $\theta$, it was obvious that there was no meaningful “true” value of $\theta$ when the model was mis-specified."

I'm worried about this. If we can't tractably make a well-specified model of behavior, then how do we learn preferences if there is no meaningful "true" value of any latent variables representing preferences in a model?

But perhaps there actually is a way to assign a reasonable meaning to the "true" value of a parameter in a mis-specified model. Perhaps you could go with whichever value performs best and call that the "true" one. I mean, I think this is how people realistically come up with answers to what's "true". For example, someone who only knows Newtonian physics couldn't come up with a well-specified model of the actual physical results on Earth using the Newtonian model. However, I think it would still be meaningful if they said something like, "the gravity on Earth's surface is 9.8 meters/second²", because that's what would work best with their model.