Posts

Comments

On a quick search, I couldn't find such a repo. I agree that this is/was the heart of Lesswrong, but what I'm aiming for is more specific than ‘any cool intellectual thing’. A lot of LW's posts are about contrarian viewpoints, or very particular topics. I'm looking for well-known but surprising stuff, to develop common knowledge.

Should feel like: ‘OMG, everyone should know [INSERT YOUR TRICK]’

Thanks for these last three posts!

Just sharing some vibe I've got from your.. framing!

Minimalism ~ path ~ inside-focused ~ the signal/reward

Maximalism ~ destination ~ outside-focused ~ the world

These two opposing aesthetics is a well-known confusing bit within agent foundation style research. The classical way to model an agent is to think as it is maximizing outside world variables. Conversely, we can think about minimization ~ inside-focused (reward hacking type error) as a drug addict accomplishing "nothing"

Feels there is also something to say with dopamine vs serotonine/homeostasis, even with deontology vs consequentialism, and I guess these two clumsy clusters mirrors each other in some way (feels isomorph by reverse signe function). Will rethink about it for now.

As an aside note: I'm French too, and was surprised I'm supposed to yuck maximalist aesthetic, but indeed it's consistent with my reaction reading you about TypeScript, also with my K-type brain.. Anecdotally, not with my love for spicy/rich foods ^^'

Oh. As I read those first lines, I thought, "Isn't it obvious?!!! How the hell did the author not notice that at like, 5 years old?" I mean, it's such a common plot in fiction. And paradoxically, I thought I wasn't good at knowing social dynamics. But maybe that's an exception: I have a really good track record at guessing werewolves in the werewolf game. So maybe I'm just good at theory (very relevant to this game) but still bad at reading people.

The idea of applying it to wealth is interesting, though.

Me too :) But I then realized it's not any "game", but the combinatorial ones.

This.

Excellent post! I think it's closely related to (but not reducible to) to the general concept of Pressure. Both pressure as

- the physical definition (which is, indeed, a sum of many micro forces on a frontier surface, but able to affect at macroscale the whole object).

- the metaphorical common use of it (social pressure etc): see the post.

It's not about bad intentions in most practical cases, but about biases. Hanlon's razor doesn't apply (or, very weakly) to systemic issues.

It's fixed ;)

Exercise 1:

The empty set is the only one. For any nonempty set X, you could pick as a counterexample:

Exercise 2:

The agent will choose an option which scores better than the threshold .

It's a generalization of satisficers, these latter are thresholders such as is nonempty.

Exercise 3:

Exercise 4:

I have discovered a truly marvelous-but-infinite solution of this, which this finite comment is too narrow to contain.

Exercise 5:

The generalisable optimisers are the following:

i.e. argmin will choose the minimal points.

i.e. satisfice will choose an option which dominates some fixed anchor point . Note that since R is only equipped with a preorder, it means it might be a more selective optimiser (if not total, it's harder to get an option better then the anchor). More interestingly, if there are indifferent options with the anchor (some x / and ), it could choose it rather than the anchor even if there is no gain to do so. This degree of freedom could be eventually not desirable.

Exercise 6:

Interesting problem.

First of all, is there a way to generalize the trick?

The first idea would be to try to find some equivalent to the destested element . For context-dependant optimiser such as better-than-average, there isn't.

A more general way to try to generalize the trick would be the following question:

For some fixed , and , could we find some other such as and

i.e. is there a general way to replace values outside of without modify the result of the constrained optimisation?

Answer: no. Counter-example: The optimiser for some infinite set X and finite nonempty sets and R.

So it seems there is no general trick here. But why bother? We should refocus on what we mean by constrained optimisation in general, and it has nothing to do with looking for some u'. What we mean is value outside are totally irrelevant.

How? For any of the current example we have here, what we actually want is not , but : we only apply the optimiser on the legal set.

Problem: in the current formalism, an optimiser has type , so I don't see obvious way to define the "same" optimiser on a different X. and the others here are implicitly parametrized, so it's not that a problem, but we have to specify this. This suggests to look for categories (e.g. for argmax...).

Even with finite sets, it doesn't work because the idea to look for "closest to " is not what we looking for.

Let a class of students, scoring within a range , . Let the (uniform) better-than-average optimizer, standing for the professor picking any student who scores better than the mean.

Let (the professor despises Charlie and ignores him totally).

If u(Alice) = 5 and u(Bob) = 4, their average is 4.5 so only Alice should be picked by the constrained optimisation.

Howewer, with your proposal, you run into trouble with u' defined as u'(Alice) = u(Alice), u'(Bob) = u(Bob), and u'(Charlie) = 0.

The average value for this u' is , and both Alice and Bob scores better than 3: . The size of the intersection with is then maximal, so your proposal suggests to pick this set as the result. But the actual result should be , because the score of Charlie is irrelevant to constrained optimisation.

Natural language is lossy because the communication channel is narrow, hence the need for lower-dimensional representation (see ML embeddings) of what we're trying to convey. Lossy representations is also what Abstractions are about.

But in practice, you expect Natural Abstractions (if discovered) cannot be expressed in natural language?

There is one catch: in principle, there could be multiple codes/descriptions which decode to the same message. The obvious thing to do is then to add up the implied probabilities of each description which produces the same message. That indeed works great. However, it turns out that just taking the minimum description length - i.e. the length of the shortest code/description which produces the message - is a good-enough approximation of that sum in one particularly powerful class of codes: universal Turing machines.

Is this about K-complexity is silly; use cross-entropy instead?

Do we really have such good interpretations for such examples? It seems to me that we have big problems in the real world because we don't.

We do have very high-level interpretations, but not enough to have solid guarantees. After all, we have a very high-level trivial interpretation of our ML models: they learn! The challenge is not just to have clues, but clues that are relevant enough to address safety concerns in relation to impact scale (which is the unprecedented feature of the AI field).

Pretty cool!

Just to add, although I think you already know: we don't need to have a reflexive understanding of your DT to put it into practice, because messy brains rather than provable algo etc....

And I always feel it's kinda unfair to dismiss as orthogonal motivations "valuing friendliness or a sense of honor" because they might be evolutionarily selected heuristics to (sort of) implement such acausal DT concerns!

Great! Isn't it generalizable to any argmin/argmax issues? Especialy thinking about the argmax decision theories framework, which is a well-known difficulty for safety concerns.

Similarly, in EA/action-oriented discussions, there is a reccurent pattern like:

Eager-to-act padawan: If world model/moral theory X is best likely to be true (due to evidence y z...), we need to act accordingly with the controversial Z! Seems best EU action!

Experienced jedi: Wait for a minute. You have to be careful with this way of thinking, because there are unkwown unknown, unilateralist curse and so on. A better decision-making procedure is to listen several models, severals moral theories, and to look for strategies acceptable by most of them.

Breadth Over Depth -> To reframe, is it about to optimize for known unknown?

- This CDT protagonist is not winning the game in a predictable and avoidable way, so he's a bad player

- Yes, in this example and many others, have legibility is a powerful strategy (big chunks of social skills are about that)

I'm confused, could you clarify? I interpret your "Wawaluigi" as two successive layers of deception within a simulacra, which is unlikely if WE is reliable, right?

I didn't say anything about Wawaluigis and I agree that they are not Luigis, because as I said, a layer of Waluigi is not a one-to-one operator. My guess is about a normal Waluigi layer, but with a desirable Waluigi rather than a harmful Waluigi.

"Good" simply means "our targeted property" here. So my point is, if WE is true to any property P, we could get a P-Waluigi through some anti-P (pseudo-)naive targeting.

I don't get your second point, we're talking about simulacra not agent, and obviously this idea would only be part of a larger solution at best. For any property P, I expect several anti-P so you don't have to instanciate an actually bad Luigi, my idea is more about to trap deception as a one-layer only.

Honest Why-not-just question: if the WE is roughly "you'll get exactly one layer of deception" (aka a Waluigi), why not just anticipate by steering through that effect? To choose an anti-good Luigi to get a good Waluigi?

This seems to generalize your recent Confusing the goal and the path take:

- the goal -> the necessary: to achieve the goal, it's tautologically necessary to achieve it

- the path -> the ideal one wants: to achieve the goal, one draws some ideal path to do so, confusing it with the former.

To quote your post:

For it conjures obstacles that were never there.

Or, recalling the wisdom of good old Donald Knuth:

Premature optimization is the root of all evil

Agreed. It's the opposite assumption (aka no embeddedness) for which I wrote this; fixed.

Cool! I like this as an example of difficult-to-notice problems are generally left unsolved. I'm not sure how serious this one is, though.

And it feels like becoming a winner means consistently winning.

Reminds me strongly of the difficulty of accepting commitment strategies in decision theories as in Parfit's Hitchhiker: one gets the impression that a rational agent win-oriented should win in all situations (being greedy); but in reality, this is not always what winning looks like (optimal policy rather than optimal actions).

For it conjures obstacles that were never there.

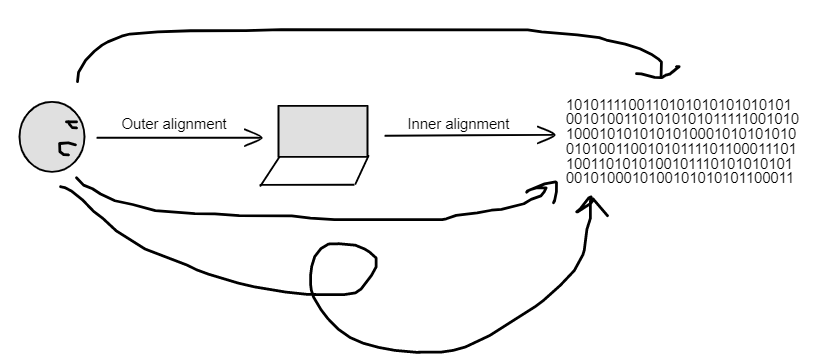

Let's try apply this to a more confused topic. Risky. Recently I've slighty updated away from the mesa paradigm, reading the following in Reward is not the optimization target:

Stop worrying about finding “outer objectives” which are safe to maximize.[9] I think that you’re not going to get an outer-objective-maximizer (i.e. an agent which maximizes the explicitly specified reward function).

- Instead, focus on building good cognition within the agent.

- In my ontology, there's only one question: How do we grow good cognition inside of the trained agent?

How does this relate to the goal/path confusion? Alignment paths strategies:

Outer + Inner alignment aims to be an alignment strategy, but only the straight path one. Any homotopic alignment path could be safe as well, our only real concern.

Surprisingly, this echoes to me a recent thought: "frames blind us"; it comes to mind when I think of how traditional agent models (RL, game theory...) blinded us for years to their implicit assumption of no embeddedness. As with non-Euclidean shift, the change has come from relaxing the assumptions.

This post seems to complete your good old Traps of Formalization. Maybe it's a hegelian dialectic:

I) intuition domination (system 1)

II) formalization domination (system 2)

III) modulable formalization (improved system 1/cautious system 2)

Deconfusion is reached only when one is able to go from I to III.

In my view you misunderstood JW's ideas, indeed. His expression "far away relevant"/"distance" is not limited to spatial or even time-spatial distance. It's a general notion of distance which is not fully formalized (work's not done yet).

We have indeed concerns about inner properties (like your examples), and it's something JW is fully aware. So (relevant) inner structures could be framed as relevant "far away" with the right formulation.

Find to maximize the predictive accuracy on the observed data, , where . Call the result .

Isn't the z in the sum on the left a typo? I think it should be n

Is the adversarial perturbation not, in itself, a mis-specification? If not, I would be glad to have your intuitive explanation of it.

Funny meta: I'm reading this just after finishing your two sequences about Abstraction, which I find very exciting! But surprise, your plan changes ! Did I read all that for nothing? Fortunately, I think it's mostly robust, indeed :)

The difference (here) between "Heuristic" and "Cached-Solutions" seems to me analogous to the difference between lazy evaluation and memoization:

- Lazy evaluation ~ Heuristic: aims to guide the evaluation/search by reducing its space.

- Memoization ~ Cached Solutions: stores in memory the values/solutions already discovered to speed up the calculation.

Ya I'll be there so I'd be glad to see you, especially Adam!

We are located in London.

Great! Is there a co-working space or something? If so, where? Also, are you planning to attend EAG London as a team?