Shannon's Surprising Discovery

post by johnswentworth · 2023-03-30T20:15:54.065Z · LW · GW · 7 comments

Imagine that you’re a telecom provider in the early 20th century. You provide both telephone lines and telegraph lines - audio and binary. These two systems face quite different design constraints.

The telegraph line only needs to be able to distinguish between high and low voltage. If the signal needs to be amplified or denoised, a relay suffices: a magnetic switch which shuts under any voltage above some cutoff and opens under any voltage below it. A noisy incoming signal turns into a clean outgoing signal, so long as the noise isn’t large enough to push the voltage across the cutoff.

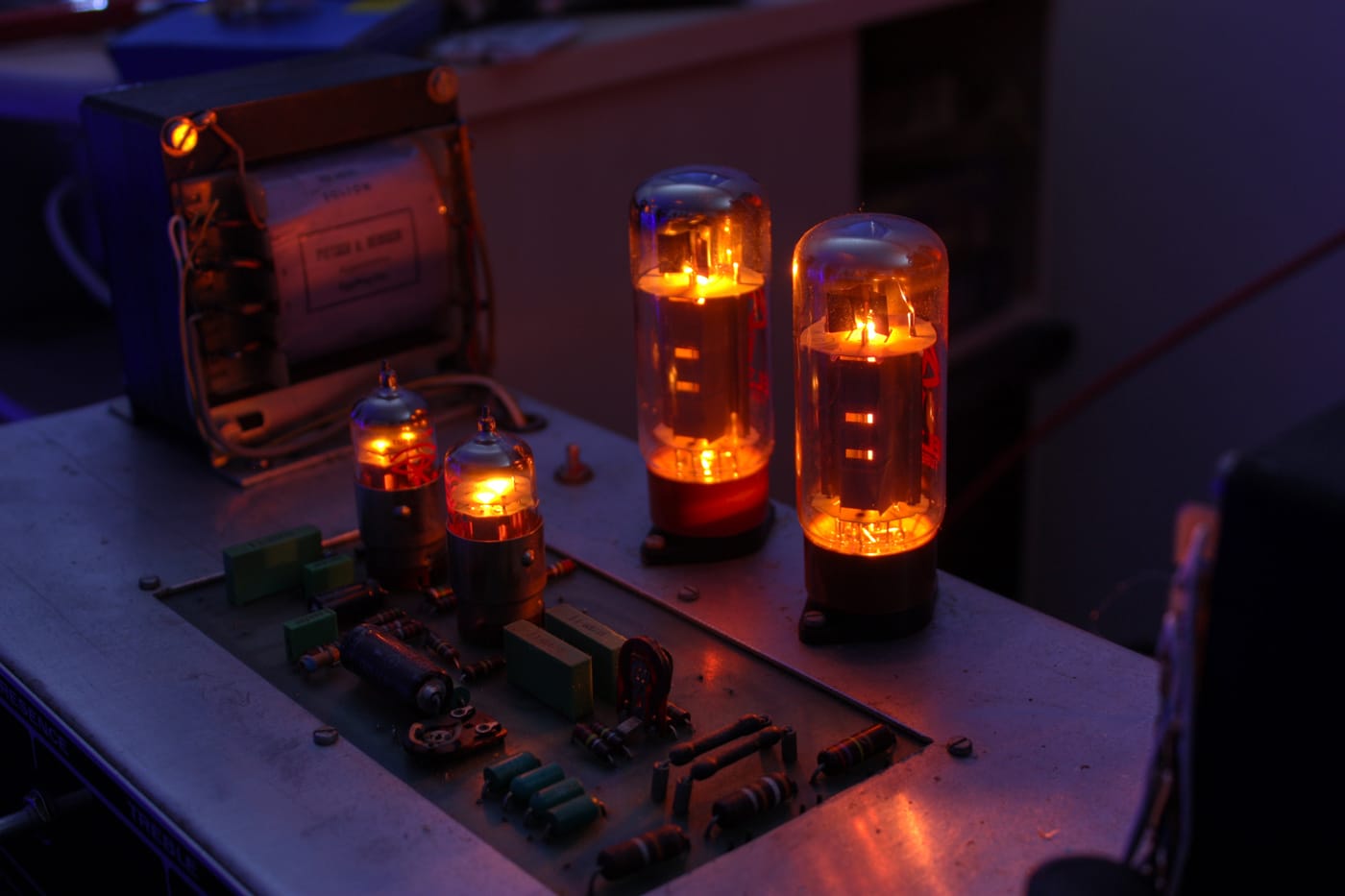
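As a rough illustration of the relay's denoising behavior, here is a minimal sketch. The voltage levels (0 V and 1 V), the cutoff of 0.5 V, and the bounded uniform noise model are all assumptions chosen for the example, not details of real telegraph hardware.

```python
import random

CUTOFF = 0.5  # hypothetical relay threshold, in volts


def relay(voltage: float) -> int:
    """Shut (1) above the cutoff, open (0) below it."""
    return 1 if voltage > CUTOFF else 0


def transmit(bits, noise_amplitude, rng):
    """Send each bit as 0 V or 1 V, then add bounded uniform noise."""
    return [b + rng.uniform(-noise_amplitude, noise_amplitude) for b in bits]


rng = random.Random(0)
bits = [rng.randint(0, 1) for _ in range(1000)]

# Noise of at most 0.4 V can never push a 0 V or 1 V signal across 0.5 V.
received = transmit(bits, noise_amplitude=0.4, rng=rng)
regenerated = [relay(v) for v in received]
print(regenerated == bits)
```

Because the noise is bounded below the gap between each level and the cutoff, the regenerated bits match the original exactly. With a larger noise amplitude, some bits would flip.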

A telephone line is trickier. Long-range telephone lines require amplification of continuous voltages, without much distortion or noise. It’s a fairly different engineering problem, for which various arrangements of electrodes in evacuated tubes were used.

An early twentieth century electrical engineer working on these systems would probably have told you that different kinds of signals require different kinds of transmission hardware. Audio has different needs from Morse code, both of which have different needs from television. Sure, you could send Morse code over a telephone line, but it would be wildly inefficient. Also, different kinds of messages need more or less reliability, and so can tolerate more or less noise in the lines. Try to send a message which needs high reliability over a very noisy line, and you’ll need a lot of redundancy. Again, wildly inefficient.

Claude Shannon’s surprising discovery was that this is basically false. Different kinds of signals do not require different kinds of hardware, at least not for efficient throughput. (Though latency requirements can and do still sometimes drive hardware specialization.)

Shannon showed that bits - i.e. zeros and ones, high and low voltages, any signal which can take one of two states - are a universal way to represent information. They’re “universal” in the sense that:

- We can represent any signal in bits

- Given any transmission line, we can build an encoder to take a signal represented in bits and send it over the line

- Most importantly, we can do both of the above with arbitrarily-close-to-optimal throughput, amortized over long messages

- As an added bonus, we can achieve arbitrarily low error rate, even over a noisy channel, with arbitrarily low overhead, again amortized over long messages

In short: different kinds of signals do not, in principle, require different kinds of transmission-line hardware. We can just use one representation for everything, with arbitrarily little efficiency loss, with some clever encoding and decoding.

That was the big surprise which originally made telecoms engineers excited about information theory - or communication theory, as it was called at the time.

Aside: Fungibility of Information Channel Capacity

For purposes of studying agency, one major consequence of Shannon’s discovery is that information channels are fungible - capacity is limited, but can be used to transmit whatever information we please, so long as we can build a suitable encoder and decoder.

That makes information channel capacity a natural resource. Like money or energy, it’s limited, but can be “spent” on a wide variety of different things.

Like money or energy, there’s usually some work required before spending information capacity. With money we need to find people to trade with who have or produce what we want. With energy we need to build a machine to turn energy into whatever we want. With information we need to build the encoder and decoder. But the resource itself is flexible.

Viewing information as a resource tells us important things mainly insofar as that resource is scarce - i.e. mainly insofar as there’s limited information capacity in some place where a system needs it. Think of information channels between departments in a company, or between cells in an organism. When information capacity is scarce in a designed, evolved or otherwise optimized system, we expect that capacity to be used (approximately) efficiently, for the same reasons and to the same extent that we expect resources like money or energy to be used efficiently. Insofar as the information capacity is not used efficiently, the system could work “better” - achieve more profit, higher fitness, etc - by freeing up some information capacity and using that spare capacity for something else.

A Frequentist -> Bayesian Bridge

One interesting feature of Shannon’s math is that it’s expressed in the language of probability, specifically frequencies. The “amount of information” contained in a signal is given in terms of the frequency (i.e. probability) at which the signal takes on each of its possible values. Specifically, we calculate the amount of information (also called “entropy”) from the probabilities via Shannon’s famous formula

$$H = -\sum_i p_i \log_2 p_i$$

… where $p_i$ is the frequency at which the signal takes on value $i$.

For instance, when designing Morse code, Samuel Morse estimated the frequency at which each letter appears in English text by counting the number of copies of each letter in sets of type used by printers. He found these frequencies (source):

| Letter | Frequency |
| --- | --- |
| E | 0.1127819549 |
| T | 0.08458646617 |
| A | 0.07518796992 |
| I | 0.07518796992 |
| N | 0.07518796992 |
| O | 0.07518796992 |
| S | 0.07518796992 |
| H | 0.06015037594 |
| R | 0.05827067669 |
| D | 0.04135338346 |
| L | 0.03759398496 |
| U | 0.03195488722 |
| C | 0.02819548872 |
| M | 0.02819548872 |
| F | 0.0234962406 |
| W | 0.01879699248 |
| Y | 0.01879699248 |
| G | 0.01597744361 |
| P | 0.01597744361 |
| B | 0.01503759398 |
| V | 0.01127819549 |
| K | 0.007518796992 |
| Q | 0.00469924812 |
| J | 0.003759398496 |
| X | 0.003759398496 |
| Z | 0.001879699248 |

Plug these frequencies into Shannon’s formula, and we find that the information-per-letter in English based on these frequencies is about 4.22 bits. Morse himself used shorter codes for more-frequent letters, and thereby came surprisingly close to Shannon’s limit - this post estimates that he achieved about 91% efficiency.
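For readers who want to check the arithmetic, here is a minimal sketch of the calculation. The frequencies are copied from the table above; the function and variable names are just illustrative choices.

```python
import math

# Letter frequencies copied from the table above.
MORSE_FREQUENCIES = {
    "E": 0.1127819549, "T": 0.08458646617, "A": 0.07518796992,
    "I": 0.07518796992, "N": 0.07518796992, "O": 0.07518796992,
    "S": 0.07518796992, "H": 0.06015037594, "R": 0.05827067669,
    "D": 0.04135338346, "L": 0.03759398496, "U": 0.03195488722,
    "C": 0.02819548872, "M": 0.02819548872, "F": 0.0234962406,
    "W": 0.01879699248, "Y": 0.01879699248, "G": 0.01597744361,
    "P": 0.01597744361, "B": 0.01503759398, "V": 0.01127819549,
    "K": 0.007518796992, "Q": 0.00469924812, "J": 0.003759398496,
    "X": 0.003759398496, "Z": 0.001879699248,
}


def entropy_bits(frequencies):
    """Shannon entropy in bits: minus the sum of p * log2(p)."""
    return -sum(p * math.log2(p) for p in frequencies.values() if p > 0)


bits_per_letter = entropy_bits(MORSE_FREQUENCIES)
print(f"{bits_per_letter:.3f} bits per letter")
```

The result should land close to the 4.22 bits quoted above. The single largest term comes from E, which contributes roughly 0.355 bits on its own.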

(“But wait!”, you say, “Letters aren’t independent! The frequency of an ‘e’ after a ‘d’ is probably quite different from the frequency of an ‘e’ after a ‘u’, for instance.” Hold that thought, we’ll return to it shortly.)

Let’s unpack what that 4.22 bits means, mathematically. If each letter were independent, with the frequencies given in the table, then we could design an encoder/decoder pair which could send messages using only (arbitrarily close to) 4.22 bits per letter on average, amortized over long messages.

But what if the assumptions we use to design our code aren’t quite right? Independence is one issue, but before we even get to that, what happens if our frequencies aren’t quite right? Morse estimated frequencies based on sets of type used by printers; that’s probably an ok-but-not-perfect proxy for letter frequencies.

… and as you’d expect, the answer is that if we use an encoder/decoder optimized for not-quite-the-right-frequencies, then we’ll perform a little suboptimally. The closer the actual frequencies are to the frequencies we use to design the encoder/decoder, the closer we’ll be to optimal throughput. Specifically, the inefficiency incurred is quantified by KL-divergence.
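To make the KL-divergence claim concrete, here is a small sketch with a made-up three-symbol alphabet. The distributions `actual` and `assumed` are hypothetical, and the code lengths are the idealized $-\log_2 q$ lengths rather than those of any particular real code.

```python
import math


def entropy(p):
    """Optimal average bits per symbol when the code matches p."""
    return -sum(p[x] * math.log2(p[x]) for x in p)


def cross_entropy(p, q):
    """Average bits per symbol when symbols follow p but the code is built for q."""
    return -sum(p[x] * math.log2(q[x]) for x in p)


def kl_divergence(p, q):
    """Extra bits per symbol paid for designing the code around q instead of p."""
    return sum(p[x] * math.log2(p[x] / q[x]) for x in p)


# Hypothetical frequencies: "actual" is the territory, "assumed" is the design.
actual = {"a": 0.5, "b": 0.25, "c": 0.25}
assumed = {"a": 0.25, "b": 0.25, "c": 0.5}

print(entropy(actual))                  # 1.5
print(cross_entropy(actual, assumed))   # 1.75
print(kl_divergence(actual, assumed))   # 0.25
```

In this toy case the mismatched code costs 1.75 bits per symbol instead of the optimal 1.5, and the 0.25-bit gap is exactly the KL-divergence from the actual frequencies to the assumed ones.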

Something interesting happened in those last two paragraphs. We started out talking about “actual frequencies”, e.g. the frequency at which each letter appears in English text. We ended up talking about the frequencies used to design the encoder/decoder. Those are the frequencies for which the encoder/decoder pair is optimized, the frequencies which the designers or users of the encoder/decoder pair implicitly expect. The former are frequentist probabilities, i.e. “actual” frequencies; they live “in the territory”. The latter sound an awful lot like Bayesian probabilities; they live “in the mind” of the designers or users of the encoder/decoder pair.

Indeed, it turns out that the “implicit” frequencies for which the encoder/decoder pair is optimized work just like Bayesian probabilities. When we design an optimal encoder/decoder pair, we find that the encoding/decoding updates after each symbol received over the transmission line - and the “implied” frequencies update according to Bayes’ rule. The same general idea readily handles non-independent letters.

(Exercise for readers who want exercises and are at least somewhat familiar with information theory already: prove that implied frequencies for any optimal encoder/decoder pair update on each symbol received over the transmission line according to Bayes’ rule. But maybe read the next section first.)

Translating Between Description Length And Probability

While the design of computationally efficient optimal encoders/decoders is a whole field of research, the basic recipe for a (not necessarily very computationally efficient) optimal encoder/decoder pair is relatively simple. Here’s the procedure:

- Take the whole set of possible messages. (That’s long messages, not just individual letters; we’re amortizing costs over the length of the message, so if we’re expecting short independent messages, then we should append a bunch of them together.)

- Calculate the probability $P[X]$ for each message $X$, under whatever distribution we’re optimizing the encoder/decoder for.

- Assign a code of length approximately $-\log_2 P[X]$ bits to each message $X$.

The “assign a code” step is conceptually where most of the research happens, because there are a lot of degrees of freedom in the choice of code. If you want details of the “assign a code” step, you can read about prefix-free codes for a relatively simple starting point, but for our purposes the key fact is that we can always assign codes this way.
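The procedure above can be sketched in a few lines. This is one simple way to do the “assign a code” step, not the only one: it rounds each length up to $\lceil -\log_2 P \rceil$ and hands out codewords in order of length (a canonical prefix-free assignment). The function name and example distribution are hypothetical, and the sketch assumes every message has nonzero probability.

```python
import math


def shannon_code(probs):
    """Give each message a prefix-free codeword of length ceil(-log2 p)."""
    lengths = {m: max(1, math.ceil(-math.log2(p))) for m, p in probs.items()}
    code = {}
    next_value = 0  # next unused codeword, read as a binary integer
    prev_len = 0
    # Hand out codewords from shortest to longest (ties broken by name).
    for m in sorted(lengths, key=lambda m: (lengths[m], m)):
        length = lengths[m]
        next_value <<= length - prev_len  # extend to the new length
        code[m] = format(next_value, "b").zfill(length)
        next_value += 1
        prev_len = length
    return code


# Hypothetical message distribution with power-of-two probabilities.
probs = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
code = shannon_code(probs)
print(code)  # {'a': '0', 'b': '10', 'c': '110', 'd': '111'}

average_length = sum(probs[m] * len(code[m]) for m in probs)
print(average_length)  # 1.75
```

For this distribution the average length of 1.75 bits equals the entropy exactly, because every probability is a power of two. For other distributions the rounding costs up to one extra bit per message, which is why the costs are amortized over long messages.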

Given such a code, the average number of bits to send a message is approximately

$$-\sum_X P[X] \log_2 P[X]$$

… i.e. Shannon’s optimal throughput limit.

Key point: if the designers/users of the encoder/decoder pair expect message $X$ with probability $P[X]$, then they’ll assign it a code of length (approximately) $-\log_2 P[X]$. Conversely, if an encoder/decoder pair uses a code of length (approximately) $\ell(X)$ for message $X$, then their “implied” probability on message $X$ is approximately $2^{-\ell(X)}$.

In other words: there’s a simple general formula for translating between “description lengths” (a.k.a. code length) and “implied” probabilities. To get a description length from a probability, take $\ell(X) = -\log_2 P[X]$. To get an “implied” probability from a description length, take $P[X] = 2^{-\ell(X)}$.

There is one catch: in principle, there could be multiple codes/descriptions which decode to the same message. The obvious thing to do is then to add up the implied probabilities of each description which produces the same message. That indeed works great. However, it turns out that just taking the minimum description length - i.e. the length of the shortest code/description which produces the message - is a good-enough approximation of that sum in one particularly powerful class of codes: universal Turing machines.
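Here is a toy numerical illustration of the difference between summing over descriptions and keeping only the shortest one. The description lengths are made up for the example; nothing here depends on a real Turing machine.

```python
import math


def implied_probability(description_lengths):
    """Add up 2^(-length) over every description that decodes to the message."""
    return sum(2.0 ** -length for length in description_lengths)


# Hypothetical: three different descriptions all decode to the same message.
lengths = [3, 5, 5]

total = implied_probability(lengths)
shortest_only = 2.0 ** -min(lengths)
effective_length = -math.log2(total)

print(total)             # 0.1875
print(shortest_only)     # 0.125
print(effective_length)  # a bit under 3, the shortest description length
```

In this example the shortest description alone accounts for two thirds of the total implied probability, so using only the minimum length shifts the effective description length by less than one bit.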

Minimum Description Length

How could we use a universal Turing machine (or, more generally, a program in a Turing-complete language) as a code?

Well, the encoder finds a program which outputs the message. It then sends that program over the transmission line. The decoder runs the program to obtain the message - in other words, the decoder is just the Turing machine (or an interpreter for the Turing-complete programming language). [1]

On the one hand, designing an ideal encoder for such a system is impossible in general; the problem of finding the shortest program which outputs a particular message is uncomputable. On the other hand, such an encoder/decoder pair would be “universal” in a rather strong sense. Their code could adapt on-the-fly to compress any computable pattern in a message. That makes such encoder/decoder pairs interesting to study as an idealized limit of codes.
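A toy version of this setup can be sketched with Python expressions playing the role of programs. Everything here is an assumption made for illustration: the decoder is Python's `eval` restricted to expressions, and the encoder searches only two hypothetical program shapes (a string literal, or a repeated unit), since finding the true shortest program is uncomputable.

```python
def decode(program: str) -> str:
    """The decoder is just the interpreter: run the program, get the message."""
    return eval(program, {"__builtins__": {}})


def encode(message: str) -> str:
    """Search a tiny family of programs and return the shortest one found."""
    candidates = [repr(message)]  # fallback: the message as a literal
    for period in range(1, len(message) // 2 + 1):
        if len(message) % period == 0:
            unit = message[:period]
            count = len(message) // period
            if unit * count == message:
                candidates.append(f"{unit!r}*{count}")
                break
    return min(candidates, key=len)


message = "ab" * 1000
program = encode(message)
print(program)               # 'ab'*1000
print(len(program))          # 9
print(decode(program) == message)  # True
```

The 2000-character message is sent as a 9-character program because the encoder happens to look for repetition. A message with a different computable pattern (say, the digits of pi) would fall through to the literal, which is where a real universal encoder would need to do better and where uncomputability bites.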

That’s the basic idea behind Solomonoff/Kolmogorov/Minimum Description Length probabilities, from an information-theoretic angle.

The formula $P[X] \approx 2^{-\ell(X)}$, with $\ell(X)$ the minimum description length of $X$, gives us a straightforward method to translate between the language of probability and the language of minimum description length. The translation is useful mainly because most work in physics, economics, AI, etc, is in the language of probability, but minimum description length is often conceptually clearer, especially when talking about the “probabilities” of constant strings.

One example of translation: when using a universal encoder/decoder pair, the choice of Turing machine (or programming language) acts as the “prior”. The language determines the minimum description length of each message, and therefore the approximate implicit probability 2^(-minimum description length) of each message, before any symbols are transmitted. Different Turing machines or languages yield different implicit priors.

Another example of translation: in probability, $X$ and $Y$ are independent iff $P[X, Y] = P[X] P[Y]$. Taking $-\log_2$ of both sides turns the product into a sum. So, in the language of minimum description length, two messages $X$ and $Y$ should be “independent” iff $\ell(X, Y) \approx \ell(X) + \ell(Y)$, where $\ell$ denotes minimum description length. In other words: for independent $X$ and $Y$, the length of the shortest program which generates both $X$ and $Y$ is approximately the sum of the lengths of the shortest programs which generate $X$ and $Y$ separately. We cannot, for instance, save any program-length by writing a program to compute just $X$, and then writing a separate program which uses $X$ to generate $Y$.
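True minimum description length is uncomputable, but the additivity idea can be illustrated with an ordinary compressor as a crude stand-in. This is only an analogy: `zlib` output size is an upper bound that catches some patterns and misses most, and the data below is pseudorandom bytes generated for the example.

```python
import random
import zlib


def description_length(data: bytes) -> int:
    """Compressed size in bytes: a rough, computable stand-in for MDL."""
    return len(zlib.compress(data, 9))


rng = random.Random(0)
x = bytes(rng.getrandbits(8) for _ in range(10_000))
y = bytes(rng.getrandbits(8) for _ in range(10_000))  # generated separately from x

separate = description_length(x) + description_length(y)
joint_independent = description_length(x + y)
joint_dependent = description_length(x + x)  # second half is fully determined by x

print(separate, joint_independent, joint_dependent)
```

For the unrelated pair, compressing `x + y` together saves essentially nothing over compressing each separately. For the dependent pair, the joint description is close to the length of `x` alone, since the second half can be described as "repeat the first half".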

Summary

The first big surprise from Shannon is that information is fungible: any information has some size, measurable in bits, and any information channel has some capacity, also measurable in bits. For purposes of throughput, it doesn’t matter what information goes over what channel; all that matters is size and capacity. That makes information channel capacity a natural “resource”, similar to money or energy: it can be “spent” on a wide variety of things, and we should expect heavily-optimized systems to use it efficiently.

Shannon starts with frequentist probabilities, i.e. “actual” frequencies of messages. But we quickly encounter Bayesian probabilities: the “implied” probabilities of an encoder/decoder pair. We can translate between encoding lengths $\ell(X)$ and “implied” probabilities $P[X]$ via

$$P[X] \approx 2^{-\ell(X)}, \qquad \ell(X) \approx -\log_2 P[X]$$

The same formula gives us a standard way to translate between the language of Minimum Description Length under some universal Turing machine, and the usual language of probability theory. By viewing the Turing machine as the decoder, we can ground that translation in the same interpretation of “implied” probabilities.

[1] I’m brushing under the rug the fact that not all programs halt, which means that the implied “probabilities” don’t sum to 1. The obvious way to handle this is to sum up the implied probabilities of all the programs which do halt, then normalize by that sum. In information-theoretic terms, just directly using a Turing machine as a decoder is inefficient (because codes corresponding to non-halting programs are unused), but we can make it more efficient by just removing the unused codes. Of course the normalizer is uncomputable, but that’s par for the course when dealing with Kolmogorov complexity.

7 comments


comment by Brendan Long (korin43) · 2023-03-30T20:59:24.042Z · LW(p) · GW(p)

Thanks, this was really well written and I learned some things. Now I want to try writing my own adaptive Huffman coding algorithm..

comment by Antoine de Scorraille (Etoile de Scauchy) · 2023-06-03T13:16:05.284Z · LW(p) · GW(p)

> There is one catch: in principle, there could be multiple codes/descriptions which decode to the same message. The obvious thing to do is then to add up the implied probabilities of each description which produces the same message. That indeed works great. However, it turns out that just taking the minimum description length - i.e. the length of the shortest code/description which produces the message - is a good-enough approximation of that sum in one particularly powerful class of codes: universal Turing machines.

Is this about “K-complexity is silly; use cross-entropy instead”?

↑ comment by johnswentworth · 2023-06-03T16:15:05.848Z · LW(p) · GW(p)

Yes.

comment by darius · 2023-04-02T11:09:00.530Z · LW(p) · GW(p)

Nit: 0.36 bits/letter seems way off. I suspect you only counted the contribution of the letter E from the above table (-p log2 p for E's frequency value is 0.355).

↑ comment by johnswentworth · 2023-04-02T16:26:37.186Z · LW(p) · GW(p)

Wow, I really failed to sanity-check that calculation. Fixed now, and thankyou!

↑ comment by evand · 2023-04-02T17:49:56.640Z · LW(p) · GW(p)

I think you missed a follow-on edit:

"Let’s unpack what that 0.36 bits means,"

↑ comment by johnswentworth · 2023-04-02T17:57:03.850Z · LW(p) · GW(p)

Ah, yup, thankyou!