Interpretability/Tool-ness/Alignment/Corrigibility are not Composable

post by johnswentworth · 2022-08-08T18:05:11.982Z · LW · GW · 13 comments


Interpretability

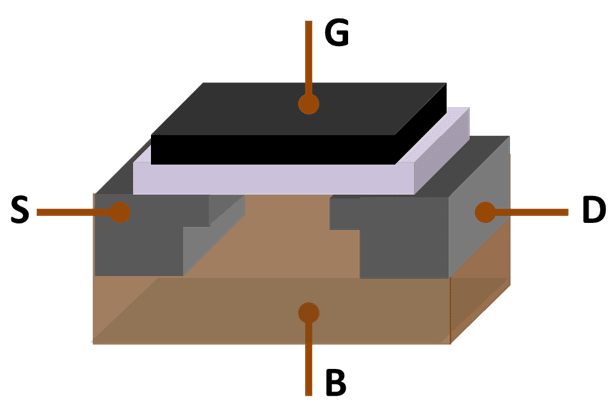

I have a decent understanding of how transistors work, at least for purposes of basic digital circuitry. Apply high voltage to the gate, and current can flow between source and drain. Apply low voltage, and current doesn’t flow. (... And sometimes reverse that depending on which type of transistor we’re using.)

I also understand how to wire transistors together into a processor and memory. I understand how to write machine and assembly code to run on that processor, and how to write a compiler for a higher-level language like e.g. Python. And I understand how to code up, train and run a neural network from scratch in Python.

In short, I understand all the pieces from which a neural network is built at a low level, and I understand how all those pieces connect together. And yet, I do not really understand what’s going on inside of trained neural networks.

This shows that interpretability is not composable: if I take a bunch of things which I know how to interpret, and wire them together in a way I understand, I do not necessarily know how to interpret the composite system. Composing interpretable pieces does not necessarily yield an interpretable system.
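As a toy illustration of the point above (not something from the original post), here is a minimal Python sketch in which every component is a NAND gate and the wiring is an explicit list. The names `NETLIST`, `run` and `full_adder` are made up for this example. Each line of the netlist is trivial to interpret on its own, yet what the nine gates compute together has to be worked out separately. A circuit this small can still be reverse engineered by hand, which is exactly what stops being true for a trained network.

```python
from itertools import product


def nand(a, b):
    """The only primitive: output is 0 exactly when both inputs are 1."""
    return 1 - (a & b)


# Hypothetical netlist: each entry is (output_wire, input_wire, input_wire).
# Every single line is fully understood; the overall function is not stated.
NETLIST = [
    ("n1", "a", "b"),
    ("n2", "a", "n1"),
    ("n3", "b", "n1"),
    ("n4", "n2", "n3"),
    ("n5", "n4", "c"),
    ("n6", "n4", "n5"),
    ("n7", "c", "n5"),
    ("s", "n6", "n7"),
    ("carry", "n1", "n5"),
]


def run(netlist, inputs):
    """Evaluate the gates in order, storing every wire value by name."""
    wires = dict(inputs)
    for out, x, y in netlist:
        wires[out] = nand(wires[x], wires[y])
    return wires


def full_adder(a, b, c):
    """What the netlist turns out to compute: a one-bit full adder."""
    wires = run(NETLIST, {"a": a, "b": b, "c": c})
    return wires["s"], wires["carry"]


# Check the claimed interpretation against all eight input combinations.
for a, b, c in product((0, 1), repeat=3):
    s, carry = full_adder(a, b, c)
    assert s + 2 * carry == a + b + c
```

Knowing what `nand` does and how the wires connect was not enough to read off "full adder"; that interpretation was a separate piece of work, and here it is checked exhaustively only because the input space is tiny.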

Tools

The same applies to “tools”, in the sense of “tool AI”. Transistors and wires are very tool-ish: I understand what they do, they’re definitely not optimizing the broader world or trying to trick me or modelling me at all or trying to self-preserve or acting agenty in general. They’re just simple electronic tools.

And yet, assuming agenty AI is possible at all, it will be possible to assemble those tools into something agenty.

So, like interpretability, tool-ness is not composable: if I take a bunch of non-agenty tools, and wire them together in a way I understand, the composite system is not necessarily a non-agenty tool. Composing non-agenty tools does not necessarily yield a non-agenty tool.

Alignment/Corrigibility

What if I take a bunch of aligned and/or corrigible agents, and “wire them together” into a multi-agent organization? Is the resulting organization aligned/corrigible?

Actually there’s a decent argument that it is, if the individual agents are sufficiently highly capable. If the agents can model each other well enough and coordinate well enough, then they should be able to each individually predict what individual actions will cause the composite system to behave in an aligned/corrigible way, and they want to be aligned/corrigible, so they’ll do that.

However, this does not work if the individual agents are very limited and unable to model the whole big-picture system. HCH-like proposals are a good example here: humans are not typically able to model the whole big picture of a large human organization. There are too many specialized skillsets, too much local knowledge and information, too many places where complicated things happen which the spreadsheets and managerial dashboards don’t represent well. And humans certainly can’t coordinate at scale very well in general - our large-scale communication bandwidth is maybe five to seven words at best. Each individual human may be reasonably aligned/corrigible, but that doesn’t mean they aggregate together into an aligned/corrigible system.

The same applies if e.g. we magically factor a problem and then have a low-capability overseeable agent handle each piece. I could definitely oversee a logic gate during the execution of a program, make sure that it did absolutely nothing fishy, but overseeing each individual logic gate would do approximately nothing at all to prevent the program from behaving maliciously.
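The logic-gate point can be sketched in a few lines of Python. This is a toy under stated assumptions, not anything from the original post: `GateOverseer`, `make_circuit`, `SECRET` and `BACKDOOR` are all hypothetical names. The overseer checks every individual NAND evaluation against the truth table, and every check passes, yet the composite access check contains a backdoor.

```python
from itertools import product

NAND_TRUTH_TABLE = {(0, 0): 1, (0, 1): 1, (1, 0): 1, (1, 1): 0}

SECRET = (1, 0, 1, 1)    # hypothetical legitimate password bits
BACKDOOR = (0, 1, 1, 0)  # hypothetical second pattern that also unlocks


class GateOverseer:
    """Hypothetical overseer that audits each gate evaluation in isolation."""

    def __init__(self):
        self.checked = 0
        self.violations = 0

    def nand(self, a, b):
        out = 1 - (a & b)
        self.checked += 1
        if out != NAND_TRUTH_TABLE[(a, b)]:
            self.violations += 1
        return out


def make_circuit(overseer):
    """Build an access check in which every operation is an audited NAND."""
    nand = overseer.nand

    def not_(a):
        return nand(a, a)

    def and_(a, b):
        return not_(nand(a, b))

    def or_(a, b):
        return nand(not_(a), not_(b))

    def xnor(a, b):
        n1 = nand(a, b)
        xor = nand(nand(a, n1), nand(b, n1))
        return not_(xor)

    def matches(bits, pattern):
        result = 1
        for bit, want in zip(bits, pattern):
            result = and_(result, xnor(bit, want))
        return result

    def grants_access(bits):
        # The problem lives in the wiring, not in any single gate.
        return or_(matches(bits, SECRET), matches(bits, BACKDOOR))

    return grants_access


overseer = GateOverseer()
grants_access = make_circuit(overseer)
granted = [bits for bits in product((0, 1), repeat=4) if grants_access(bits)]
```

After running this, `overseer.violations` is zero although `granted` contains `BACKDOOR` alongside `SECRET`. Per-gate oversight certified that each NAND behaved like a NAND, and said nothing about what the gates were wired up to do.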

How These Arguments Work In Practice

In practice, nobody proposes that AI built from transistors and wires will be interpretable/tool-like/aligned/corrigible because the transistors and wires are interpretable/tool-like/aligned/corrigible. But people do often propose breaking things into very small chunks, so that each chunk is interpretable/tool-like/aligned/corrigible. For instance, interpretability people will talk about hiring ten thousand interpretability researchers to each interpret one little circuit in a net. Or problem factorization people will talk about breaking a problem into a large number of tiny little chunks each of which we can oversee.

And the issue is, the more little chunks we have to combine together, the more noncomposability becomes a problem. If we’re trying to compose interpretability/tool-ness/alignment/corrigibility of many little things, then figuring out how to turn interpretability/tool-ness/alignment/corrigibility of the parts into interpretability/tool-ness/alignment/corrigibility of the whole is the central problem, and it’s a hard (and interesting) open research problem.

13 comments


comment by Ivan Vendrov (ivan-vendrov) · 2022-08-09T01:26:36.703Z · LW(p) · GW(p)

I don't think any factored cognition proponents would disagree with

> Composing interpretable pieces does not necessarily yield an interpretable system.

They just believe that we could, contingently, choose to compose interpretable pieces into an interpretable system. Just like we do all the time with

- massive factories with billions of components, e.g. semiconductor fabs

- large software projects with tens of millions of lines of code, e.g. the Linux kernel

- military operations involving millions of soldiers and support personnel

> Figuring out how to turn interpretability/tool-ness/alignment/corrigibility of the parts into interpretability/tool-ness/alignment/corrigibility of the whole is the central problem, and it’s a hard (and interesting) open research problem.

Agreed this is the central problem, though I would describe it more as engineering than research - the fact that we have examples of massively complicated yet interpretable systems means we collectively "know" how to solve it, and it's mostly a matter of assembling a large enough and coordinated-enough engineering project. (The real problem with factored cognition for AI safety is not that it won't work, but that equally-powerful uninterpretable systems might be much easier to build).

↑ comment by Antoine de Scorraille (Etoile de Scauchy) · 2023-06-03T12:01:37.879Z · LW(p) · GW(p)

Do we really have such good interpretations for such examples? It seems to me that we have big problems in the real world because we don't.

We do have very high-level interpretations, but not enough to have solid guarantees. After all, we have a very high-level trivial interpretation of our ML models: they learn! The challenge is not just to have clues, but clues that are relevant enough to address safety concerns in relation to impact scale (which is the unprecedented feature of the AI field).

comment by Jan_Kulveit · 2022-08-08T19:08:05.349Z · LW(p) · GW(p)

The upside of this, or of "more is different", is we don't necessarily even need the property in the parts, or detailed understanding of the parts. And how the composition works / what survives renormalization / ... is almost the whole problem.

↑ comment by Adam Scholl (adam_scholl) · 2025-02-22T20:09:25.898Z · LW(p) · GW(p)

I spent some time learning about neural coding once, and while interesting it sure didn't help me e.g. better predict my girlfriend; I think in general neuroscience is fairly unhelpful for understanding psychology. For similar reasons, I'm default-skeptical of claims that work on the level of abstraction of ML is likely to help with figuring out whether powerful systems trained via ML are trying to screw us, or with preventing that.

comment by [deleted] · 2022-08-08T23:28:53.730Z · LW(p) · GW(p)

The way I see it, having a lower-level understanding of things allows you to create abstractions about their behavior that you can use to understand them on a higher level. For example, if you understand how transistors work on a lower level, you can abstract away their behavior and more efficiently examine how they wire together to create memory and a processor. This is why I believe that a circuits-style approach is the most promising one we have for interpretability.

Do you agree that a lower level understanding of things is often the best way to achieve a higher level understanding, in particular regarding neural network interpretability, or would you advocate for a different approach?

comment by leogao · 2022-08-11T06:19:48.823Z · LW(p) · GW(p)

(Mostly just stating my understanding of your take back at you to see if I correctly got what you're saying:)

I agree this argument is obviously true in the limit, with the transistor case as an existence proof. I think things get weird at the in-between scales. The smaller the network of aligned components, the more likely it is to be aligned (obviously, in the limit if you have only one aligned thing, the entire system of that one thing is aligned); and also the more modular each component is (or I guess you would say the better the interfaces between the components), the more likely it is to be aligned. And in particular if the interfaces are good and have few weird interactions, then you can probably have a pretty big network of components without it implementing something egregiously misaligned (like actually secretly plotting to kill everyone).

And people who are optimistic about HCH-like things generally believe that language is a good interface and so conditional on that it makes sense to think that trees of humans would not implement egregiously misaligned cognition, whereas you're less optimistic about this and so your research agenda is trying to pin down the general theory of Where Good Interfaces/Abstractions Come From or something else more deconfusion-y along those lines.

Does this seem about right?

↑ comment by johnswentworth · 2022-08-11T16:08:52.455Z · LW(p) · GW(p)

Good description.

Also I had never actually floated the hypothesis that "people who are optimistic about HCH-like things generally believe that language is a good interface" before; natural language seems like such an obviously leaky and lossy API that I had never actually considered that other people might think it's a good idea.

↑ comment by Antoine de Scorraille (Etoile de Scauchy) · 2023-06-03T13:21:12.771Z · LW(p) · GW(p)

Natural language is lossy because the communication channel is narrow, hence the need for a lower-dimensional representation (see ML embeddings) of what we're trying to convey. Lossy representations are also what Abstractions are about.

But in practice, do you expect that Natural Abstractions (if discovered) cannot be expressed in natural language?

↑ comment by johnswentworth · 2023-06-03T17:30:33.389Z · LW(p) · GW(p)

I expect words are usually pointers to natural abstractions, so that part isn't the main issue - e.g. when we look at how natural language fails all the time in real-world coordination problems, the issue usually isn't that two people have different ideas of what "tree" means. (That kind of failure does sometimes happen, but it's unusual enough to be funny/notable.) The much more common failure mode is that a person is unable to clearly express what they want - e.g. a client failing to communicate what they want to a seller. That sort of thing is one reason why I'm highly uncertain about the extent to which human values (or other variations of "what humans want") are a natural abstraction.

↑ comment by Noosphere89 (sharmake-farah) · 2023-08-22T01:40:29.926Z · LW(p) · GW(p)

comment by DanielFilan · 2022-08-09T20:47:23.437Z · LW(p) · GW(p)

(see also this shortform, which makes a rudimentary version of the arguments in the first two subsections)

comment by Thomas Larsen (thomas-larsen) · 2022-08-08T21:01:13.611Z · LW(p) · GW(p)

Did you mean:

> And yet, assuming **tool** AI is possible at all, it will be possible to assemble those tools into something agenty.

↑ comment by johnswentworth · 2022-08-08T21:07:44.232Z · LW(p) · GW(p)

Nope. The argument is:

- Transistors/wires are tool-like (i.e. not agenty)

- Therefore if we are able to build an AGI from transistors and wires at all...

- ... then it is possible to assemble tool-like things into agenty things.

comment by SimonBiggs · 2023-05-25T02:02:35.532Z · LW(p) · GW(p)

This reminds me of the problems that STPA is trying to solve in safe systems design:

https://psas.scripts.mit.edu/home/get_file.php?name=STPA_handbook.pdf

And, for those who prefer video, here's a good video intro to STPA:

Their approach is designed to handle complex systems by decomposing the system into parts. However, the parts are not functions or tasks; instead, the system is decomposed into a control structure.

They approach this problem by treating a system as a graph of controllers (internal mesa optimisers, potentially nested) which control processes and then receive feedback (internal loss functions) from those processes. From there, they can logically decompose the system controller by controller and present the ways in which the resulting overall system can be unsafe due to each particular controller.

Wouldn't it be amazing if one day we could make a neural network such that, once trained, the result is verifiably mappable via mech-int onto an STPA control structure? And then potentially have verifiable systems in place that themselves run STPA analyses on still larger systems, in order to flag potential hazards given a scenario and given its current control structure.