Updates on FLI's Value Alignment Map?

post by T431 (rodeo_flagellum) · 2022-09-17T22:27:50.740Z · LW · GW

This is a question post.

Contents

Answers 4 Gyrodiot 4 Charlie Steiner None No comments

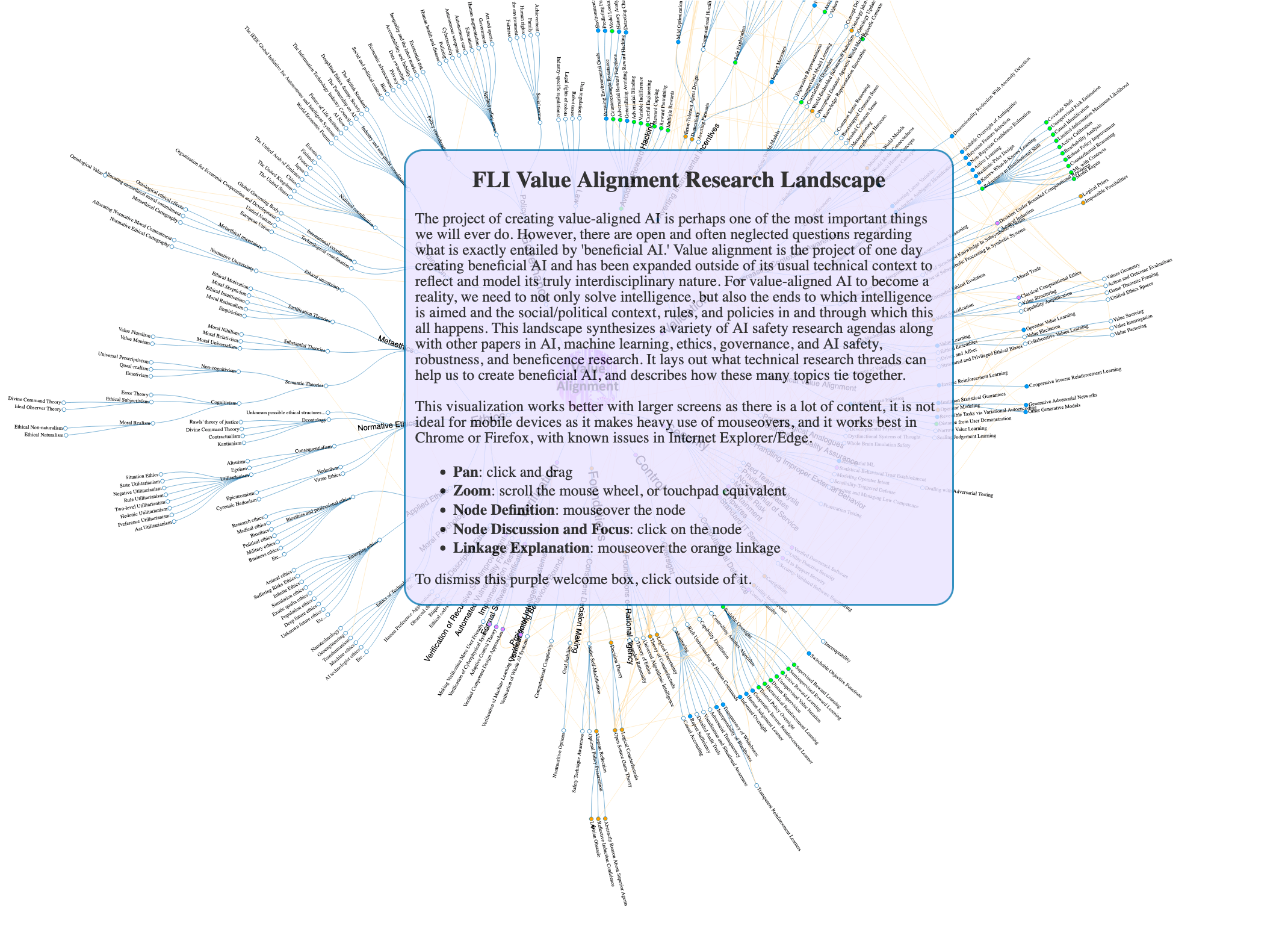

Around January 2021, I came across the Future of Life Institute's Value Alignment Map, and found it to be a useful resource for getting a sense of the different alignment topics. I was going through it again today, and recalled that I have not seen many mentions of this map in the wider LW/EAF/Alignment community, and have also not seen any external commentary on its comprehensiveness. Additionally, the corresponding document for the map indicates that it was last edited in January 2017; I haven't been able to find an updated version on LW or FLI's website, and spent about half an hour searching. With this in mind, I have a few questions:

- Does anyone know of an external review of the comprehensiveness and accuracy of the topics covered in this map?

- Does anyone know if there are plans to update it OR, if it has been updated, where this newer version can be found?

- Does anyone know of other maps of value alignment research / AI safety research topics that are similarly comprehensive?

Answers

Answers in order: there is none, there were, there are none yet.

(Context starts, feel free to skip, this is the first time I can share this story)

After posting this [LW · GW], I was contacted by Richard Mallah, who (if memory serves right) created the map, compiled the references and wrote most of the text in 2017, to help with the next iteration of the map. The goal was to build a Body of Knowledge for AI Safety, including AGI topics but also more current-capabilities ML Safety methods.

This was going to happen in conjunction with the contributions of many academic & industry stakeholders, under the umbrella of CLAIS (Consortium on the Landscape of AI Safety), mentioned here.

There were design documents for the interactivity of the resource, and I volunteered. Back in 2020 I had severely overestimated both my web development skills and my ability to work during a lockdown; I never published a prototype interface, and for unrelated reasons the CLAIS project... wound down.

(End of context)

I do not remember Richard mentioning a review of the map contents, apart from the feedback he received back when he wrote them. The map has been a bit tucked in a corner of the Internet for a while now.

The plans to update/expand it failed as far as I can tell. There is no new version and I'm not aware of any new plans to create one. I stopped working on this in April 2021.

There is no current map with this level of interactivity and visualization, but there have been a number of initiatives [LW · GW] trying to be more comprehensive and up-to-date!

↑ comment by T431 (rodeo_flagellum) · 2022-09-22T15:58:53.841Z · LW(p) · GW(p)

I am sorry that I took such a long time replying to this. First, thank you for your comment, as it answers all of my questions in a fairly detailed manner.

The impact of a map of research that includes the labs, people, organizations, and research papers focused on AI Safety seems high, and FLI's 2017 map seems like a good start, at least for showing what types of research are occurring in AI Safety. In this vein, it is worth noting that Superlinear is offering a small prize of $1150 for whoever can "Create a visual map of the AGI safety ecosystem", but I don't think this is enough to incentivize the creation of the resource that is currently missing from this community. I don't think there is a great answer to "What is the most comprehensive repository of resources on the work being done in AI Safety?". Maybe I will try to make a GitHub repository with orgs., people, and labs using FLI's map as an initial blueprint. Would you be interested in reviewing this?

↑ comment by Gyrodiot · 2022-09-24T07:25:09.071Z · LW(p) · GW(p)

No need to apologize, I'm usually late as well!

> I don't think there is a great answer to "What is the most comprehensive repository of resources on the work being done in AI Safety?"

There is no great answer, but I am compelled to list some of the few I know of (which I wanted to add to my Resources post):

- Vael Gates's transcripts [LW · GW], which attempt to cover multiple views but, by the nature of conversations, aren't very legible;

- The Stampy project, which aims to build a comprehensive AGI safety FAQ and to go beyond questions only (they do need motivated people);

- Issa Rice's AI Watch, which is definitely stuck in a corner of the Internet (if I didn't work with Issa I would never have discovered it). It has lots of data about orgs, people and labs, but not much context.

Other mapping resources cover not the work being done but arguments and scenarios. As an example, there's Lukas Trötzmüller's excellent argument compilation [EA · GW], but that wouldn't exactly help someone get into the field faster.

Just in case you don't know about it, there's the AI alignment field-building tag [? · GW] on LW, which mentions an initiative run by plex [LW · GW], who also coordinates Stampy.

I'd be interested in reviewing stuff, yes, time permitting!

Huh, I hadn't seen that before. Looks interesting though! Not sure it's worth giving a complete review, but it seems complete-in-the-sense-of-an-encyclopedia-entry. It's not covering research in progress so much as it's one person's really good shot at making a map of value alignment.
