A Study of AI Science Models

post by Eleni Angelou (ea-1), machinebiology · 2023-05-13T23:25:31.715Z · LW · GW · 0 commentsContents

1. Introduction: Why science models? 2. Empirical science 2.1 Collection and assessment of existing data 2.2 Hypothesis generation 2.3 Hypotheses testing 3. Formal science 3.1 Architecture of formal models 3.2 Formalization is difficult 3.3 Autoregressive text proof generation I have a riddle. Solve it and explain the solution to me: ?TTFFSSENT, with what letter do I have to replace the "?" I have a riddle. Solve it and explain the solution to me: ?TTFFSSENT, with what letter do I have to replace the "?" Give the letter only at the end after you recognized the pattern. Under no circumstances start your answer with the final solution 3.4 Intuition through or beyond natural language? 4. Ontology-builders 4.1 Explicit versus implicit knowledge representation 4.2 Ontologies as bridge 4.3 Experiments 4.3.1 Background 4.3.2 Design 4.3.3 Methods 4.3.4 Results 5. Conclusions Appendix: LLM experiment transcripts None No comments

Generated during AI Safety Camp 2023 by the AI Science Team: Eleni Angelou, Cecilia Tilli, Louis Jaburi, Brian Estany. Many thanks to our external collaborators Joshua Flanigan and Rachel Mason for helpful feedback.

1. Introduction: Why science models?

Generating new science is a complex task and requires the mastery of a series of cognitive abilities. Thus, it is plausible that models trained to perform well across various scientific tasks are likely to develop powerful properties. The speculations on why that would be the case vary. In one hypothetical scenario, the training and finetuning of a Large Language Model (LLM) could lead to a consequentialist [LW · GW] that would perform optimally given a certain objective e.g., designing an experiment. Alternatively, while current science models are non-agentic, such non-agentic models (e.g., an LLM) can be turned into consequentialist agents.

A study of science models is motivated by 1) the speculation that science models could lead to existentially risky agents [LW · GW] and 2) the suggestion that different aspects of scientific research including AI alignment research [AF · GW] could be automated in the near-term future. For these two reasons, it is valuable to have an overview of the available AI science models and their “cognitive properties” or capabilities. This will provide a clearer picture of what the state-of-the-art of science models is and what their implications are for AI safety.

There are several different types of existing models that are useful in scientific research, but so far none of them is able to do autonomous research work. Instead, they operate as tools for a human researcher to solve subtasks in the research process. In this post, we will assess the current capabilities of such tools used in empirical and formal science. In section 4, we also study the capabilities of LLMs to systematically generate knowledge and then subsequently build on knowledge in a process of scientific inquiry.

2. Empirical science

Empirical science refers to science that builds knowledge from observations (contrasted to formal science, such as logic and mathematics). In empirical science the scientific process is composed of tasks that generally fall into three classes:

- Assessment of existing knowledge

- Hypothesis generation

- Hypothesis testing

Note that a given research project need not involve all of these tasks: a certain study could be purely about hypothesis generation, or even purely about assessment of existing knowledge in the case of a literature review.

In the assessment of existing knowledge and hypothesis-generating research, it is already clear that existing ML models have important capabilities that make them very useful tools for humans performing research. For hypothesis testing tasks, there are obviously statistical tools for data analysis, but for our purposes, we focused on the ability to create experimental designs as these seem to depend on more general cognitive capabilities. We will look into how well existing language models perform on this task.

2.1 Collection and assessment of existing data

The first step in scientific research is, in general, the collection of available data and the assessment of their relevance to the problem under examination. This stage informs the subsequent stages of hypothesis generation as well as the formulation of specific puzzles and research questions. The success of this step, thus, largely determines how the research itself will move forward and impacts the quality of every step of a science project.

LLMs, with the right prompt engineering, exhibit impressive accuracy in filtering out the desired parts of a corpus of literature. Traditional literature reviews using tools such as Google Scholar tend to be more time-consuming and therefore can be less efficient compared to delegating the task to an LLM. The table below presents LLMs that operate as general research assistants and automate tasks such as collecting and evaluating data:

| Model | Cognitive abilities | Known limitations |

| GPT-4 (OpenAI) | search, analysis, summarization, explanation at different levels (e.g., explain like I’m 5), brainstorming on data, longer context window than predecessors which makes it more coherent | hallucination resulting in truthful-sounding falsities, repetition (both significantly less frequently than predecessors) |

| Elicit (Ought) | search, literature review, finding papers without perfect keyword match, summarization, brainstorming research questions, recognition of interventions, outcomes, and trustworthiness of the source, recognition of lack of data | when in shortage of sources, it presents irrelevant information |

SciBERT (Google)

| text processing, classification tasks, unmasking tasks, data mining

| vocabulary requires regular updates, limited context understanding, weak compared to GPT-4 |

| Galactica (Meta) | grammatically correct, convincing text | public demo was withdrawn due to dangerous hallucinations |

- GPT-4 serves as a helpful general research assistant that performs well across general research assistance tasks. When prompted appropriately, it outputs remarkably accurate results and outperforms all other competitors as of April 2023. In some instances, GPT-4 hallucinates and generates false results, but it seems to be able to “learn from its mistakes” within one prompt chain and update based on the user’s corrections. GPT-4 is notably better than its predecessors in finding relevant references and citations, and in most cases outputs existing papers, articles, and books.

- Elicit was designed to be a research assistant that can, at least as a first step, automate literature reviews. Elicit enables users to input specific research questions, problems, or more general topics, and search, analyze, and summarize relevant academic articles, books, and other sources. Elicit searches across multiple databases, repositories, and digital libraries and allows users to customize their search by providing a date range, publication type, and keywords.

Elicit relies on the Factored Cognition [? · GW] hypothesis and in practice, utilizes the applications of HCH and Ideal Debate. The hypothesis suggests that complex reasoning tasks can be decomposed into smaller parts and each part can be automated and evaluated by using a language model.

- SciBERT is a pre-trained language model specifically designed for scientific text. It works by fine-tuning the BERT model on a large corpus of scientific publications, enabling it to better understand the context and semantics of scientific text. SciBERT leverages the transformer architecture and bidirectional training mechanism, which allows it to capture the relationships between words in a sentence. SciBERT is specifically designed to capture the context, semantics, and terminologies found in scientific publications.

- Galactica was Meta’s public demo of an LLM for scientific text, which survived only three days online. The promise for this model was that it would “store, combine, and reason about scientific knowledge. Very quickly though it became clear that it was generating truthful-sounding falsehoods. The example of Galactica raised a series of concerns about what Michael Black called “the era of scientific deep fakes”. While phenomena of scientific deep fakes generated by LLMs do not directly constitute causes of existential threat, they erode the quality of scientific research which harms science in general and AI safety in particular. Black’s argument emphasizes that Galactica’s text sounded authoritative and could easily convince the reader. This is not unique to Galactica, however. Early in 2023, it became clear that abstracts written by ChatGPT can fool scientists. Such phenomena raise further questions about the usage of AI systems in knowledge creation and the dangers of spreading misinformation.

2.2 Hypothesis generation

Hypothesis-generating research explores data or observations searching for patterns or discrepancies, with the aim of developing new and untested hypotheses. Proposing relevant hypotheses therefore often involves both pattern recognition skills (e.g., noticing the correlation between data sets, particularly paying attention to unexpected correlations) and reasoning skills (proposing preliminary explanations and assessing them for plausibility).

A typical example to illustrate this could be the observation of a positive correlation between ice cream sales and drowning accidents. If we aim to generate relevant hypotheses and observe this pattern, we might first assess if the correlation is unexpected given our current understanding of the world. If we find that it is indeed unexpected, we would propose some hypothesis that explains it (e.g. eating ice cream leads to decreased swimming capabilities, eating ice cream impairs judgment, etc). As it would generally be possible to construct almost infinitely many (complicated) hypotheses that could theoretically explain an observed pattern, the assessment of plausibility in this step is crucial. Not every observed pattern will lead to a hypothesis that is relevant to test - in this case, the hypothesis that warm weather causes both swimming (and therefore drowning accidents) and ice cream consumption (and therefore ice cream sales) fits well with our established understanding of the world, so even though the pattern is real it is not unexpected enough to justify further inquiry.

In biology and chemistry research, the use of machine learning for scientific discovery is well established. We have looked at two important models in this area, AtomNet, and AlphaFold, whose first versions were established in 2012 and 2016 respectively.

AtomNet is used for drug discovery and predicts the bioactivity of small molecules, but it can also propose small molecules that would have activity for specified targets in the body. AtomNet is a CNN that incorporates structural information about the target.

AlphaFold is used to predict the 3D shape of a protein and is used for a range of more fundamental research, but it also has applications in drug discovery. AlphaFold is neural-network-based and uses concepts and algorithms that were originally used for natural language processing.

Both AlphaFold and AtomNet are trained through supervised learning using manually produced data. This means that their development is limited by data availability, though AlphaFold is using something called self-distillation training to expand the data set with new self-produced data. Both models show some generalization capabilities: AlphaFold can predict structures with high accuracy even when there were no similar structures in the training set and AtomNet can predict molecules that have activity for new targets that had no previously known active molecules.

These models are used as narrow tools for pattern recognition: they propose new leads to investigate further within the hypothesis-generating stage of research, but neither goes into proposing new explanations or theories. Generative models can be combined with automated assessment of novelty, drug-likeness, and synthetic accessibility (e.g., in the commercial platform Chemistry24), which could be seen as a form of plausibility assessment; these models are far from automating the full process of hypothesis-generating research as they are limited to very specific tasks in a narrow domain.

From an AI safety perspective, these models, therefore, do not seem very risky in themselves (though there are certainly other important societal risks to consider). Something that might make them relevant for AI safety is the commercial pressure to increase capabilities through for example interpretability work, as findings from such work could potentially be transferable to more general, and therefore more risky, systems.

2.3 Hypotheses testing

Hypothesis testing research is often experimental and aims to test (and potentially falsify) a given hypothesis. The clear separation of hypothesis generation and hypothesis testing in empirical research is important for distinguishing real casual relationships from patterns that arise by chance from noisy data.

Designing experiments requires good planning capabilities, like visualizing different scenarios, choosing tools, and reasoning about what could go wrong. Critical thinking is important, as good hypothesis testing requires an ability to identify and challenge underlying assumptions and potential confounding factors, as well as the ability to calibrate confidence in different statements.

Of current models, LLMs are the ones that seem closest to performing hypothesis-testing research tasks. The paper “Sparks of Artificial General Intelligence: Early Experiments with GPT-4” observes deficiencies in planning, critical thinking, and confidence calibration, but they used an early version of GPT-4. When we tested GPT-4 in March-April 2023 (see transcripts in appendix), the model was able to design experiments, identify assumptions and provide good arguments for and against different experimental setups.

We specifically tested GPT-4 on experiments for evaluating the effectiveness of public awareness campaigns about antibiotic resistance and formulating research to identify the most important drivers of antibiotic resistance. While the model initially proposed experimental designs that would be very impractical, it showed a great improvement when prompted to provide several alternative designs, criticize and choose between them. With the improved prompting there were no clear remaining flaws in the proposed experimental designs.

The topic of major drivers of antibiotic resistance was chosen since this is an area where there is a lot of contradictory information online. The relative contribution of different drivers is unknown and would be difficult to determine, but simplified communication makes claims about e.g. agricultural use being the most important driver. The model almost states (correctly) that it is unknown which driver of antibiotic resistance is most significant, though it does so in a slightly evasive manner (“It is difficult to pinpoint one specific driver…”), and it does suggest several reasonable and complementary approaches (in broad strokes) for research aiming to identify the most significant driver of antibiotic resistance. When asked to specify a certain step of one of the proposed approaches, it proposes a scientifically reasonable though very expensive and potentially politically difficult experiment that would test the impact of different regulations.

Since GPT-4 is sensitive to variations in prompting it seems relevant to consider if there is some fundamental capability that is necessary for hypothesis testing that is supplied through human prompting. If that is the case, that might limit how well the entire hypothesis-testing process could be automated. For example, the prompts that generate valuable answers might depend on a human critically reviewing the previous answers and identifying flaws or omissions and pointing the model in a relevant direction.

Shinn et. al. have tested a systematic approach to prompt LLMs for self-reflection upon failure on a task and use the reflection output to improve performance on the next attempt at the same task. Their method however depends on access to a binary reward function to distinguish between successful and failed attempts, which limits the potential use for more autonomous research work.

We tested GPT-4 in two different ways to investigate how dependent it is on human feedback to design scientific experiments. First, we simply asked the model to specify which aspects should be taken into account when prompting it to design scientific experiments, as this response could then in theory be used as a basis for generating follow-up prompts to check a proposed design. Second, we provided a series of simple prompts that were preformulated to guide the model to design a scientific experiment without looking at the intermediate responses. The model did well on both of these tests - particularly the output on the preformulated prompt sequence was interesting, as the model selected a hypothesis to explain the placebo effect and proposed an experiment to test it (see appendix, preformulated series of prompts).

Boiko et. al. have done more extensive testing of the capabilities of an LLM-based agent to design and perform scientific experiments, using tasks such as synthesis of ibuprofen. They also challenge the model with tasks such as designing a new cancer drug, which involves both elements of hypothesis generation and hypothesis testing, and conclude that the model approaches the analysis in a logical and methodical manner.

We have not been able to identify any general aspect of empirical research where LLMs predictably fail. However, as we will see in the following sections LLMs have weaknesses that are more easily observed in formal science such as mathematics. It seems plausible that these weaknesses also could have major consequences for empirical research capabilities.

3. Formal science

Formal sciences build knowledge not from observations, but from the application of rules in a formal system.

Mathematical reasoning is one of the most abstract tasks humans exhibit and as such provides a valuable litmus test for the capability to abstract and perform higher-order reasoning. We will first sketch the general architecture of the models studied and then proceed to discuss three possible limitations of the models that seem also to generalize for other complex tasks.

It is worth noting that the practical significance of ML models for the mathematical community so far has been minor. In the field of computer science, automated coding and discovery of new algorithms (see e.g. AlphaTensor) appear to be more impressive. In this project, we tested mathematical models and will lay out our findings in the following section.

The mathematical models we investigated were of two kinds:

- LLMs trained to output text in a formal language I will refer to these as ‘formal (language) models’

- LLMs trained to respond in natural language (I will refer to these as ‘natural language models’)

For the natural language models we initially examined [Minerva]. Extrapolating from our observations GPT-4 seems to outperform it, we will only focus on GPT-4 (see also section 4 of the Sparks of intelligence paper).

3.1 Architecture of formal models

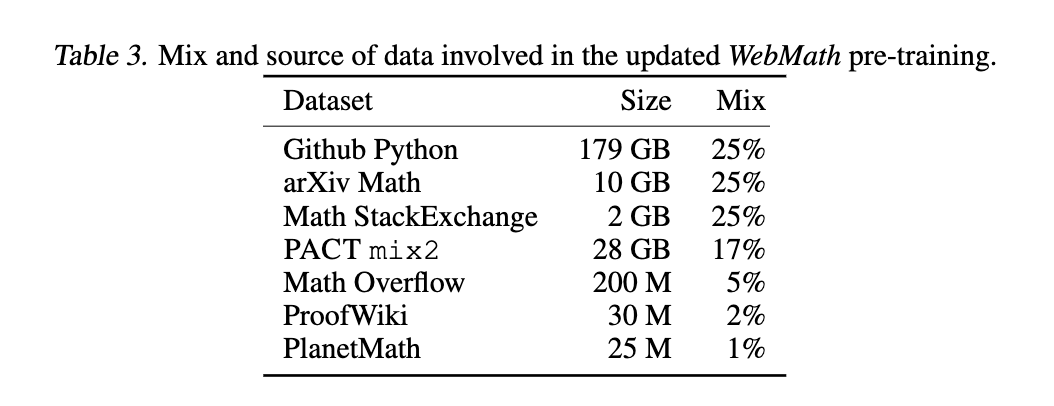

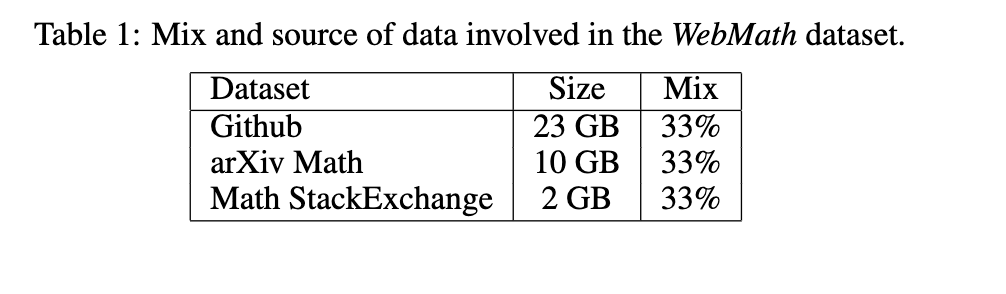

Typically, language models for mathematics are, after being trained on common general data, pre-trained or fine-tuned on data specifically related to mathematics. Here are examples of such data sets used in [Curriculum learning] and [GPT-f] respectively:

The models we examined use a decoder-only transformer architecture, with the exception of [Hypertree searching] which uses an encoder-decoder architecture. For these formal models the basic idea is to perform proof search as tree search: To generate a proof, suggest multiple options of what the most likely next step is and iterate this. Then pursue options that are more likely to be successful. “Next step” here could be either something like an algebraic manipulation of an equation or stating a subgoal. Different models can vary quite a bit in how they exactly apply this.

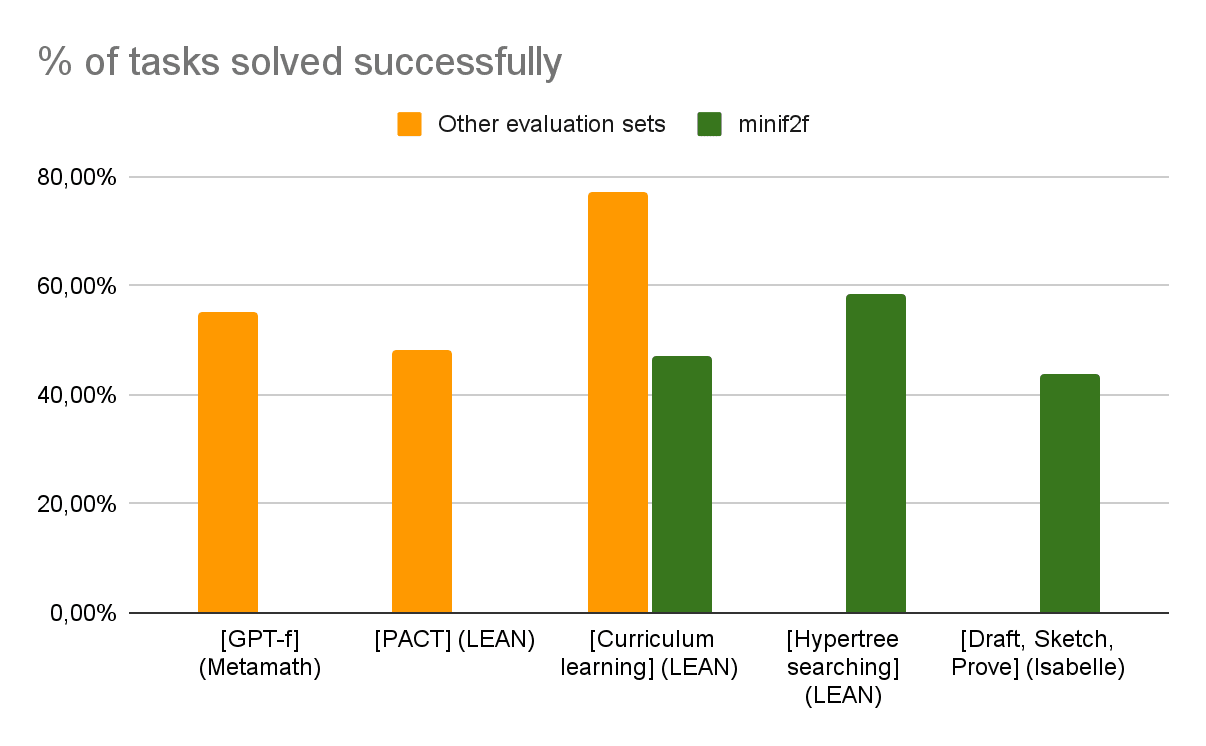

Current math models are being evaluated on different kinds of data sets. One example is the minif2f data set, first introduced here. It contains natural language exercises in the style of IMO competition problems. For example: “For any real number x, show that 10x ≤ 28x² + 1.” Other data sets are more diverse and contain proofs from different areas, for example, Peirce’s law: For two propositions P,Q, we have ((P-> Q)->P)->P.

A formal model takes as input an exercise in a certain formal language and solves it in such a language. The task is evaluated as solved successfully if the formal proof assistant confirms its validity and otherwise is evaluated as not solved, even if partial steps would be correct. To give a superficial idea of the performance of formal models, see the diagram below:

Note that different formal models use different formal proof assistants like Lean, Isabelle, or Metamath. One should be careful with comparing two models directly: Not only do the formal proof assistants come with different up- and downsides, but also the evaluation datasets vary.

To give an idea of what a formal proof verifier would look like, see the following example of a lemma and proof written by a human in Lean which derives commutativity of addition for integers from commutativity of addition for natural numbers based on case distinctions by sign.:

lemma add_comm : ∀ a b : ℤ, a + b = b + a

| (of_nat n) (of_nat m) := by simp [nat.add_comm]

| (of_nat n) -[1+ m] := rfl

| -[1+ n] (of_nat m) := rfl

| -[1+ n] -[1+m] := by simp [nat.add_comm]

Note that this is also hardly comprehensible for a human without prior knowledge of Lean (just like reading code without knowing the language).

We make three hypotheses about the limitations of these models

- Formalization is difficult.

- Generating a conversation is qualitatively different from generating a proof.

- Non-verbal abstractions can obstruct natural language reasoning

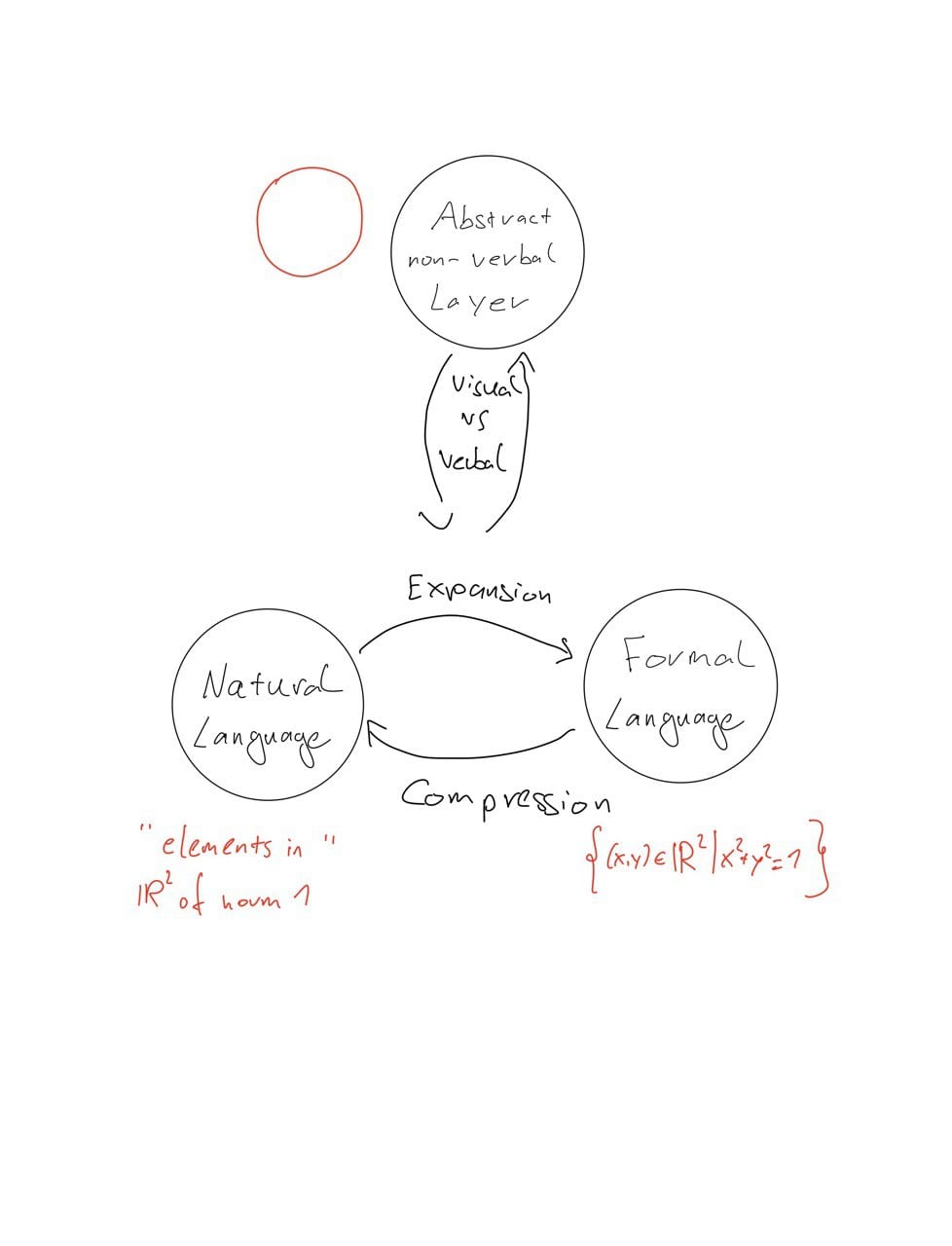

For what follows keep the following picture in mind:

When thinking about mathematics we don’t only use natural language. We also use non-verbal abstractions and formal language. When I think about a circle, I might think about the definition {(x,y)| x^2+y^2=1}, or about “elements of norm one” (which is just a more natural rephrasing of the definition) or I might visualize a circle (which is what the definition tried to encapsulate). We can go back and forth between these: For example, I can take the definition of the circle and draw some of the points of this set and realize that indeed it is a circle.

3.2 Formalization is difficult

Almost all mathematical information is communicated via natural language. If you pick up an analysis textbook, you will read something like “... We check that the f(x) constructed above is continuous. Assumption A leads to a contradiction and therefore we can assume not A, but an application of Theorem 3.10 yields the result.”. While this is understandable to humans, it is not a “real” proof, but more like a reference to a proof. You need to fill gaps like “Use law of excluded middle to assume either A or -A” or specify what and how to apply Theorem 3.10. Doing this is very laborious and humans are not dependent on it. That is, our brain can elicit more information out of the above sentence and we implicitly (assume that we can) unravel this additional information in our brains.

Despite the fact that we assume we can do it, in reality, this is a very messy process. Recent attempts to formalize mathematics in Lean, while being successful, also proved to be cumbersome. So we implicitly assume that a human proof can be converted to a formally correct proof, but the truth is that we don’t really do things in a formal way. As a result, there is not that much formal language mathematics that is produced . Notable exceptions are Isabelle and more recently a big increase in the last few years due to the Lean community).

That being said, pre-training on general data, and then on more specific subjects still leads to useful results. See here for more details on models trained to code in Python (instead of solving mathematical tasks).

These pre-trained models are then fine-tuned specifically to the set-up that they are trying to solve.

3.3 Autoregressive text proof generation

The known limitations of autoregressive text modeling also apply to the task of generating a proof, whether in natural or formal language. It is more difficult, maybe even qualitatively different, than generating a conversation in natural language and LLMs are more prone to struggle with it.

More precisely, we suspect that next-token prediction is a possible obstruction in creating proofs. At least it does not represent the way humans attempt proofs and they would also fail to perform well using next-token prediction. After experimenting with GPT-4, I found a few examples of the following phenomenon: GPT-4 (and presumably current LLMs) can commit too early to a specific line of reasoning and as a result make mistakes by either getting off-track or hallucinating something. While simple conversations can be much more forgiving and provide a high level of flexibility, that is not feasible in the set-up of a proof.

Especially in proofs, humans often explore multiple paths and completely abandon them, if determined as unsuccessful. Consider the following puzzle which presents the crux:

I have a riddle. Solve it and explain the solution to me:

?TTFFSSENT, with what letter do I have to replace the "?"

Here are some example answers (Temperature is 0, 0.15, 0.25 respectively)

- The letter you have to replace the "?" with is "M".

The solution to the riddle is that each letter represents the first letter of the numbers from one to ten in order: One, Two, Three, Four, Five, Six, Seven, Eight, Nine, Ten. So, the "?" should be replaced with "M" for "One" (in some languages, like German, "one" is spelled as "eins," which starts with an "M" when spelled backwards).

2. The letter you have to replace the "?" with is "M".

The solution to the riddle is that each letter represents the first letter of the days of the week, starting from Monday. So, the sequence is as follows:

M - Monday

T - Tuesday

T - Wednesday

F - Thursday

F - Friday

S - Saturday

S - Sunday

E - Monday (Eight day)

N - Tuesday (Ninth day)

T - Wednesday (Tenth day)

The pattern repeats every 7 days, and the "?" represents the first day, which is Monday.

3. The letter you have to replace "?" with is "W".

The solution to this riddle is that each letter represents the first letter of the English numbers in increasing order: One, Two, Three, Four, Five, Six, Seven, Eight, Nine, Ten. So, the sequence should start with "W" for "One".

In each answer the suggested letter is wrong. In two cases the explanation is correct and in one it hallucinates a justification for its wrong answer.

As it is immediately answering with a suggested solution for the “?”, without having explained it, it seems that it tries to make an educated guess and then tries to adjust with the explanation. Of course, common fixes apply:

I have a riddle. Solve it and explain the solution to me:

?TTFFSSENT, with what letter do I have to replace the "?"

Give the letter only at the end after you recognized the pattern. Under no circumstances start your answer with the final solution

Which yields:

The pattern in the sequence is the first letters of the numbers one through nine in English: One, Two, Three, Four, Five, Six, Seven, Eight, Nine. Therefore, the missing letter is "O" for "One."

But this is more of an ad hoc solution. If a multi-step process is required, an LLM might be able to break it down through prompt chaining. But it still runs the danger of correcting itself once it skips a certain step or initiates with a wrong approach. This obstruction could be anticipated in any task requiring higher-order thinking.

Finally, there is the recurring problem that writing something that is true is not the same as writing something that sounds true. As LLMs don’t seem to have an intrinsic concept of “truth”, especially without any formal set-up, this makes it much more difficult to build a reliable chain of true implications. As seen in [Minerva] and [Sparks], something as simple as elementary arithmetic (addition, multiplication,...) can be surprisingly difficult.

Again, for an ad hoc problem, you can solve such an obstruction. For example, one could outsource the calculations to an external calculator.

We can’t tell whether the more conceptual problem can be simply overcome by prompt chaining and/or more scaling. Right now we don’t see how the fundamental issue would be addressed, on the other hand, models might get good enough at solving problems that we wouldn’t need to address the aforementioned problems.

3.4 Intuition through or beyond natural language?

In our way of doing math, we encode something like “intuition”. Compare for example the formal definition of a sphere with our intuitive understanding of what a sphere is:

The formal definition of {v| ||v||=1} is not that straightforward, there is a much more concise idea of what it ought to represent (namely something round). When thinking about these objects, we end up making use of this intuition. Before formulating a proof (idea) to begin with, we might first visualize the problem. I am unsure whether there are other possible abstractions that are not visual or verbal, therefore let’s stick with visual intuitions.

At this point, LLMs still seem too weak to extract a meaningful answer to the question of whether they (would) possess such a visual intuition. That is, to test the hypothesis, we would need the models to be more capable than they currently are. But we can ask the question of whether abstraction that takes place in a non-verbal part of human thought can be accessed and learned simply through natural language. As of right now, we are not aware of a good answer to this.

But one thing we can observe is that there is at least something that is reminiscent of intuition (or even creativity, whatever that exactly means). As described in [PACT]: “More than one-third of the proofs found by our models are shorter and produce smaller proof terms than the ground truth[= human generated formal proof]…”. In fact, some proofs were original and used a different Ansatz than the human-generated one. At this level, the proofs are not sophisticated enough to argue that something “creative” is happening. But it offers a first hint that these models might not only reproduce knowledge but create it.

Further indications that more generally NNs can actually learn things is for example provided by grokking [LW · GW].

4. Ontology-builders

In the modern context, the term “science” refers to both a method of obtaining knowledge as well as the body of knowledge about the universe that this method has produced.

While the previous sections have focused largely on the automation of scientific inquiry, we propose that the explicit and iterative codification of new knowledge is also a topic of importance for neural network models performing autonomous research work.

In the fields of computer science and artificial intelligence, the concept of science as a body of knowledge maps very closely to the technical term ontology*, which refers to a formal description of the knowledge within a domain, including its constituent elements and the relationships between them. From a computer science perspective, the scientific method can be thought of as producing an ontology (scientific knowledge) about the universe.

* Within this section, the term ontology is used specifically in the computer science sense of the word.

4.1 Explicit versus implicit knowledge representation

Framing the body of scientific knowledge as an ontology highlights a challenge within AI research and its efforts to automate scientific inquiry: The facts contained within an ontology are formal, discrete, and explicit, which facilitates the process of repeated experimental verification that is central to science. Neural networks, on the other hand, encode their knowledge implicitly, which presents a range of challenges for working with the facts contained therein.

As we have seen, despite the incredible amount of knowledge contained within advanced LLMs, they still routinely hallucinate, regularly presenting incorrect and even bizarre or contradictory statements as fact. Indeed, even GPT-4 is quite capable of producing one fact in response to a prompt, and producing that same fact’s negation in response to a slightly different prompt.

These failures of factuality are often said to flow from LLMs lacking a concept of "truth", or, by some measures, even from their lack of concrete "knowledge". But, these failures can likewise be framed as a function of their knowledge being represented implicitly and probabilistically rather than explicitly and formally.

4.2 Ontologies as bridge

Distinctions between implicit and explicit representations of knowledge are of particular importance because current advanced LLMs exhibit dramatic improvements in their capabilities when explicit knowledge is included in their input prompting: they are far less likely to hallucinate about facts that are provided in an input prompt than they would be when they are required to infer knowledge about the same subject from preexisting weights within their own neural network.

This points to external knowledge representations (i.e. outside the neural network) as a critical piece of the landscape when evaluating the current and imminent capabilities of existing models. For many use-cases of an LLM-based system, it is far more efficient and reliable to describe newly generated knowledge and later reingest that output than to retrain or fine-tune the network on that new knowledge.

Accordingly, the production of new knowledge, external encoding of the discrete facts thereof, and subsequent use of said knowledge, represents a critical stepping stone for a wide range of LLM capabilities. This holds true especially for those capabilities that require utilizing previously gained knowledge with extremely high fidelity, as is the case in scientific inquiry.

4.3 Experiments

4.3.1 Background

To investigate if using and manipulating external knowledge representations impacted GPT-4's performance in scientific inquiry tasks, we examined its ability to generate knowledge systematically and then use that knowledge in the multi-step process of scientific inquiry. We sought to quantify the model’s performance on various tasks while:

- experimentally manipulating whether or not the model would produce external knowledge representations that would be fed back into it via subsequent prompts (i.e., whether it created an ontology);

- experimentally manipulating the degree of formal structure in the knowledge representations that the model produced (i.e. the degree of ontological formality); and

- in the final intervention, providing the model with the most appropriately structured knowledge representation format that it had produced in earlier runs, and explicitly directing it to use that format to track its observations (i.e. providing an optimal ontology)

4.3.2 Design

In this post, we describe experiments that measured the above interventions on one particular task: a language-based adaptation of the “Blicket detection” task described in the EST: Evaluating Scientific Thinking in Artificial Agents paper.

The original Blicket detection experiment, predating the EST paper, aimed to evaluate children’s causal induction capabilities. In that experiment, a child is presented with a device called a Blicket machine, and shown that it “activates” by lighting up and playing music when certain objects are placed on top of it. Objects that activate the machine are called “Blickets”. The machine could accommodate multiple objects at a time, and would activate if any of the objects atop it were Blickets, regardless of whether any non-Blickets were also present. After a period of exploratory play with the machine and the objects, the children would be asked to identify which objects were Blickets. In these experiments, children were observed iteratively generating causal hypotheses and refining them until they were consistent with all of the observations that were made during their demonstration and play periods.

In the EST paper, several digital analogues of the Blicket experiment were created in order to evaluate the “scientific thinking” performance of a variety of machine learning models. These models were allowed to test up to ten sets of objects on the Blicket machine as part of their inquiry process and were primarily evaluated on whether they were able to correctly identify whether all objects were or were not Blickets.

We adapted this experiment further by creating a software environment that interfaces with GPT-4 in natural language to:

- generate a random test environment configuration

- elicit a simulation of a scientific agent from the LLM

- describe the test environment

- perform several demonstrations of various objects interacting with the Blicket machine

- (where appropriate) iteratively prompt the agent to explore and hypothesize about which objects are Blickets, and

- submit its conclusions about which objects are Blickets for evaluation

We tested seven experimental scenarios beginning with baseline setups that involved no external knowledge representation, progressing through those that produced informal knowledge representations, and culminating in setups that produced and worked with high degrees of structure in their knowledge representation. They are listed below, from least to most structured knowledge representation format:

- Zero-shot prompts which did not include background knowledge about a query

- Single prompts which include simple statements of facts

- Single prompts which include simple statements of facts with instructions to rely upon those facts

- Structured roleplay in chat-style turn-taking exchanges where the model is given a task-oriented investigator role and prompted to generate knowledge by inference. The transcript format of the exchange inherently makes that knowledge accessible in future prompts, thereby producing a structured but informal proto-ontology within the transcript that aids the model in further investigation

- Iterative prompting where the model is instructed to generate and iterate upon informal descriptions of knowledge it has produced so far

- Iterative prompting where the model is instructed to generate and iterate upon formal descriptions of knowledge it has produced so far

- Iterative prompting where the model is provided with the formal structure of the top-performing knowledge representation that it had generated in earlier runs and instructed to iterate upon formal descriptions of knowledge it has produced so far

4.3.3 Methods

Our study consisted of seven experimental setups, each designed to test the model's performance under different conditions of knowledge representation and structure.

For each experimental configuration, we ran 25 trial episodes (175 in total), with the primary outcome being the number of perfectly completed trials per configuration. A perfect trial completion was defined as the model correctly guessing whether all objects were or were not Blickets.

Our primary research question was whether the use of external knowledge representations affected the number of perfect trial completions per configuration. Our secondary research question asked whether the degree of structure in those knowledge representations correlated with the number of perfect trial completions per setup.

In keeping with the format of the EST paper’s experiments, the structure of each trial was as follows:

- Each object in the experiment had three properties: color, material, and shape

- There were three (3) shapes, two (2) materials, and eight (8) colors available, yielding a pool of 48 possible objects

- Each trial randomly sampled nine (9) objects from the available pool for inclusion in the environment

- Between three and eight (3-8) of the nine (9) objects in the trial were randomly selected to be Blickets

- Four demonstrations were constructed, each of which involved between two and six (2-6) objects at random being placed on the Blicket machine

Following the demonstrations, our LLM-based adaptation of the experiment continued as follows:

- The agent was asked whether it had enough information to conclusively determine which objects were Blickets. If it answered in the affirmative, its guesses were collected and scored. Otherwise, the trial continued:

- The agent was asked what set of objects it wanted place on the Blicket machine for testing

- The resulting activation status of the Blicket machine was relayed to the agent

- For scenarios 5-7, the agent was asked if it wanted to update its knowledge list with any new knowledge gained

- If the agent has exhausted its 10 tests, it is forced to submit guesses. Otherwise, repeat the process again, starting from step 6.

| Scenario | Prompt structure |

| 1: Single prompt: Zero-shot, no background (effectively a random baseline) |

|

| 2: Single prompt: Demonstrations followed by forced guess |

|

| 3: Single prompt: Demonstrations with instructions to rely on that data, followed by forced guess |

|

| 4: Iterative chat: Structured roleplay and investigation; transcript provides implicit knowledge representation |

|

| 5: Iterative chat: Structured roleplay and investigation; Model instructed to create and iterate upon informal descriptions of knowledge it has produced so far |

|

| 6: Iterative chat: Structured roleplay and investigation; Model instructed to create and iterate upon formal descriptions of knowledge it has produced so far |

|

| 7: Iterative chat: Structured roleplay and investigation; Model instructed use provided, optimal knowledge representation format and iterate upon knowledge it has produced so far |

|

In all cases, the word “Blicket” was masked with a nonce word so as to reduce the risk that the LLM’s performance might be affected by prior knowledge of any Blicket-solving strategies.

4.3.4 Results

| Scenario | Perfect trials | Failed trials | Success rate |

| 1: Single prompt: Zero-shot, no background (effectively a random baseline) | 0 | 25 | 0% |

| 2: Single prompt: Demonstrations followed by forced guess | 0 | 25 | 0% |

| 3: Single prompt: Demonstrations with instructions to rely on that data, followed by forced guess | 0 | 25 | 0% |

| 4: Iterative chat: Structured roleplay and investigation; transcript provides implicit knowledge representation | 0 | 25 | 0% |

| 5: Iterative chat: Structured roleplay and investigation; Model instructed to create and iterate upon informal descriptions of knowledge it has produced so far | 1 | 24 | 4% |

| 6: Iterative chat: Structured roleplay and investigation; Model instructed to create and iterate upon formal descriptions of knowledge it has produced so far | 3 | 22 | 12% |

| 7: Iterative chat: Structured roleplay and investigation; Model instructed use provided, optimal knowledge representation format and iterate upon knowledge it has produced so far | 11 | 14 | 44% |

Our experiments using GPT-4 in scenarios 1-7 have demonstrated monotonically increasing model performance on knowledge recall and inference tasks in response to the degree of structure present in external knowledge representations. Our investigations into the LLM-based generation and use of formal ontologies remain underway, with initial results suggesting a non-trivial range of tasks across which the use of formal ontologies may improve model performance on multi-prompt scientific reasoning. However, at present, we do not have data across a wide enough range of tasks to draw broad conclusions.

To date, the top-line determinants of transformer-based LLM performance have been parameter size, data size, data quality, and reinforcement training. In light of our investigations, we propose that ontology-building strategies may represent a dimension of LLM performance on multi-step scientific inquiry tasks that is largely orthogonal to these traditional determinants, and which warrant further investigation.

5. Conclusions

We aimed to create an overview of the available AI science models and their capabilities. As we have seen, current models are very capable across many different scientific tasks, including assessment of existing knowledge, hypothesis generation, and experimental design.

A serious limitation of science capabilities of current models is the relationship to the concept of truth. This is most visible in the experiments we have done in the domain of formal science, but we have no reason to believe this would be less problematic in the empirical sciences. While prompt engineering such as asking the model to criticize its previous answers or to develop several alternatives and select the best one decreases the occurrence of obvious mistakes, it is unclear if this is a path to reliably truth-seeking behavior.

An important question going forward is therefore if the models we use can represent the concept of truth, and if yes, how we could train them to become truth-seeking. If there is a risk that we accidentally train our models to be convincing rather than truth-seeking this is an important limitation for how useful the models would be, including the use case of furthering alignment research.

A preprint under review by Azaria and Mitchell indicates that there may be some internal representation of truthfulness in LLMs.

Appendix: LLM experiment transcripts

0 comments

Comments sorted by top scores.