Summary of 80k's AI problem profile

post by JakubK (jskatt) · 2023-01-01T07:30:22.177Z · LW · GW · 0 commentsThis is a link post for https://forum.effectivealtruism.org/posts/btFBFdYEn2PbuwHwt/summary-of-80k-s-ai-problem-profile

Contents

TL;DR Researchers are making advances in AI extremely quickly Many AI experts think there’s a non-negligible chance AI will lead to outcomes as bad as extinction Power-seeking AI could pose an existential threat to humanity Advanced planning AIs could easily be “misaligned” Advanced, misaligned AI could cause great harm People might deploy misaligned AI systems despite the risk Even if we find a way to avoid power-seeking, there are still risks Counterarguments Neglectedness What you can do concretely to help None No comments

This is a summary of the article “Preventing an AI-related catastrophe” (audio version here) by Benjamin Hilton from 80,000 Hours (80k). The article is quite long, so I have attempted to capture the key points without going through all the details. I’m mostly restating ideas from the article in my own words, but I'm also adding in some of my own thoughts. This piece doesn’t represent 80k’s views.

TL;DR

The field of AI is advancing at an impressive rate. However, some AI experts think AI could cause a catastrophe on par with human extinction. It’s possible that advanced AI will seek power and permanently disempower humanity, and advanced AI poses other serious risks such as strengthening totalitarian regimes and facilitating the creation of dangerous technologies. These are seriously neglected problems, and there are ways you can help.

Researchers are making advances in AI extremely quickly

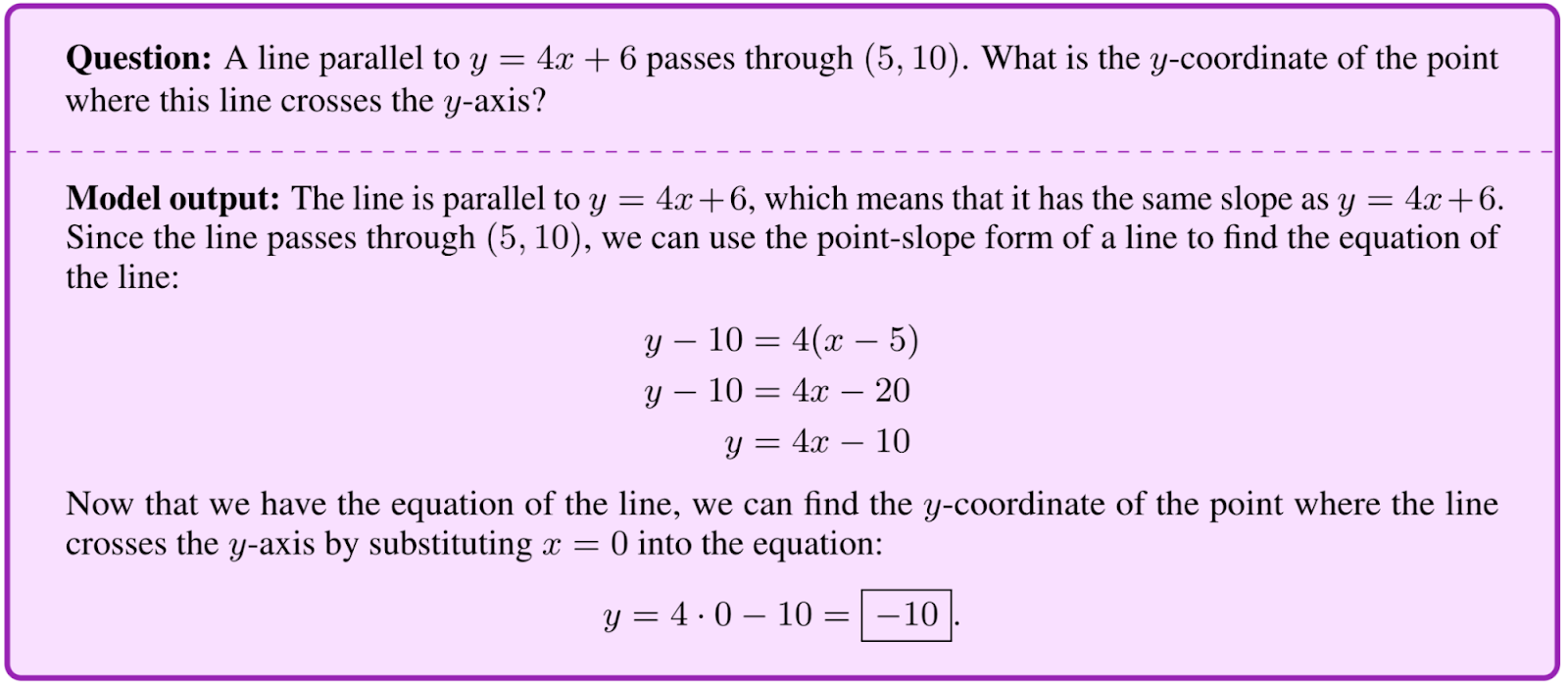

One example of recent AI progress is Minerva, which can solve a variety of STEM problems, including math problems:

Before Minerva, the highest AI accuracy on the MATH dataset of challenging high school math problems was ~7%, meaning that you could expect an AI to answer 7 out 100 questions correctly. In August 2021, one team of forecasters predicted that it would take over four years for an AI to exceed 50% on MATH (better than most humans according to one creator of the dataset), but Minerva ended up achieving this milestone in less than one year, four times faster than predicted. Minerva is just one of many powerful models we’ve seen in the past few years alone.

Current trends suggest that AI capabilities will continue to advance rapidly. E.g., developers are using exponentially increasing amounts of computational resources to train AI systems.

Additionally, AI algorithms are requiring exponentially lower amounts of computational resources to achieve a given level of performance, demonstrating progress in the efficiency of AI algorithms. Given that efficient algorithms and computational resources are two crucial drivers of AI progress, we will likely see more rapid progress in AI if these trends continue.

Some reports and surveys have attempted to predict when we will see AI sufficiently advanced to be “hugely transformative for society (for better or for worse).” It’s difficult to synthesize these perspectives and predict the future (indeed, one report author recently revised her predictions [AF · GW]), but there seems to be a nontrivial chance of seeing “transformative” AI within the next 15 years, so it’s important to start preparing for the risks.

Many AI experts think there’s a non-negligible chance AI will lead to outcomes as bad as extinction

In multiple surveys, the median AI researcher gave probabilities between 2-5% of advanced AI causing catastrophes as severe as the death of all humans. (However, there is notable variance in opinion: in a 2022 survey, “48% of respondents gave at least 10% chance of an extremely bad outcome,” while another “25% put it at 0%.”) Leading AI labs like DeepMind and OpenAI already house teams working on AI safety or “alignment.” Several centers for academic research focus on AI safety; e.g., UC Berkeley’s Center for Human Compatible AI is led by Stuart Russell, coauthor of arguably the most popular AI textbook for university courses around the world.

Power-seeking AI could pose an existential threat to humanity

Advanced planning AIs could easily be “misaligned”

In the future, humanity might possibly build AI systems that can form sophisticated plans to achieve their goals. The best AI planners may understand the world well enough to strategically evaluate a wide range of relevant obstacles and opportunities. Concerningly, these planning systems could be extremely capable at tasks like persuading humans and hacking computers, allowing them to execute some of their plans.

We might expect these AI systems to exhibit misalignment, which 80k defines as “aiming to do things that we don’t want them to do” (note there are many different definitions of this term elsewhere). An advanced planner might pursue instrumental subgoals that are useful for achieving its final goal, in the same way that travel enthusiasts will work to earn money in order to travel. And with sufficient capacity for strategic evaluation, these instrumental subgoals may include aims like self-preservation, capability enhancement, and resource acquisition. Gaining power over humans might be a promising plan for achieving these instrumental subgoals.

There are some challenges for preventing misalignment. We might try to control the objectives of AI systems, but we’ll need to be careful. Specification gaming examples show that the goals we actually want are difficult to code precisely. E.g. programmers rewarded an AI for earning points in a boat racing video game, so it ended up spinning around in circles instead of completing the race.

Additionally, in goal misgeneralization examples, an AI appears to learn to pursue a goal in its training environment but ends up pursuing an undesired goal when operating in a new environment. This paper explores the difficulties of controlling objectives in greater detail.

Another challenge is that some alignment techniques might produce AI systems that are substantially less useful than systems lacking safety features. E.g., we might prevent power seeking with systems that stop and check with humans about each decision they make, but such systems might be substantially slower than their more autonomous alternatives. Additionally, some alignment techniques might work for current AI systems but break down for more advanced planners that can identify the technique’s loopholes and failures. For more difficulties in preventing misalignment, see section 4.3 of this report.

Advanced, misaligned AI could cause great harm

If we build advanced AI systems that seek power, they may defeat our best efforts to contain them. 80k lists seven concrete techniques that AIs could use to gain power. For instance, Meta’s Cicero model demonstrated that AIs can successfully negotiate with humans when it reached human-level performance in Diplomacy, a strategic board game. Thus, an advanced AI could manipulate humans to assist it or trust it. In addition, AI is swiftly becoming proficient at writing computer code with models like Codex.

Combined with models like WebGPT and ACT-1, which can take actions on the internet, these coding capabilities suggest that advanced AI systems could be formidable computer hackers. Hacking creates a variety of opportunities; e.g., an AI might steal financial resources to purchase more computational power, enabling it to train longer or deploy copies of itself.

Possibilities like these suggest that, at a sufficiently high capability level, misaligned AI might succeed at disempowering humanity, and it’s possible that humanity would remain disempowered forever in cases like human extinction. Thus, disempowerment could result in losing most of the value of the entirety humanity's future, which would constitute an enormous tragedy.

People might deploy misaligned AI systems despite the risk

Despite the substantial risks, humanity might still deploy misaligned AI systems. The systems might superficially appear to be safe, because an advanced planner might pretend to be aligned during training in order to seek power after deployment. Furthermore, various factors could encourage groups to be the first to develop advanced AI – e.g., the first company to develop advanced AI might reap substantially greater profits than the second company to do so. Competitive dynamics like these could cause AI companies to underinvest in safety and race towards deployment, even for organizations dedicated to building safe AI [EA · GW].

Even if we find a way to avoid power-seeking, there are still risks

There are still other serious risks that don’t require power-seeking behavior. For one, it’s unclear whether AI will increase or decrease the risk of a nuclear war. Another troubling example is totalitarian regimes using AI for surveillance to ensure that citizens are obedient. AI could easily power the development of harmful new technologies, such as bioweapons and other weapons of mass destruction – notably, a single malicious actor might be able to use AI for this purpose and cause harm, since open source initiatives like Hugging Face and Stability AI put AI models within reach of anyone with a laptop and internet access.

Counterarguments

80k analyzes a long list of counterarguments, with a distinction between less persuasive objections (e.g. “Why can’t we just unplug an advanced AI?”) and “the best arguments we’re wrong.”

Neglectedness

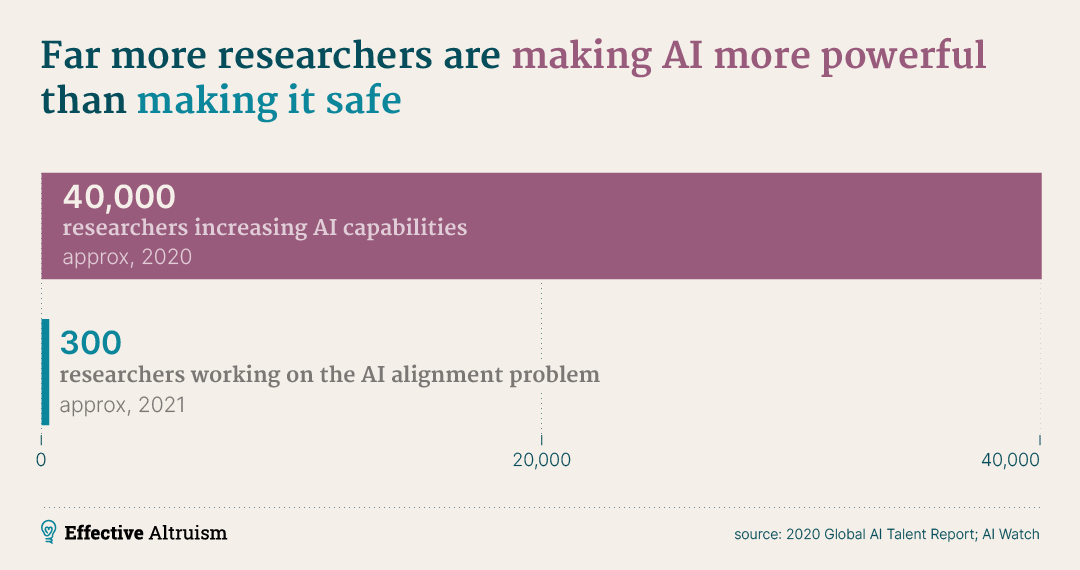

The problem remains highly neglected and needs more people. 80k estimates that AI capabilities research receives ~1,000 times more money than AI safety work. They also estimate that only ~400 researchers are working directly on AI alignment.

What you can do concretely to help

Consider career paths like technical AI safety research or AI governance research and implementation. For alternative options, see the sections “Complementary (yet crucial) roles” and “Other ways to help.” For feedback on your plans, consider applying to speak with 80k’s career advising team.

Thanks to the following people for feedback: Aryan Bhatt, Michael Chen, Daniel Eth, Benjamin Hilton, Ole Jorgensen, Michel Justen, Thomas Larsen, Itamar Pres, Jacob Trachtman, Steve Zekany. I wrote this piece as part of the Distillation for Alignment Practicum [LW · GW].

0 comments

Comments sorted by top scores.