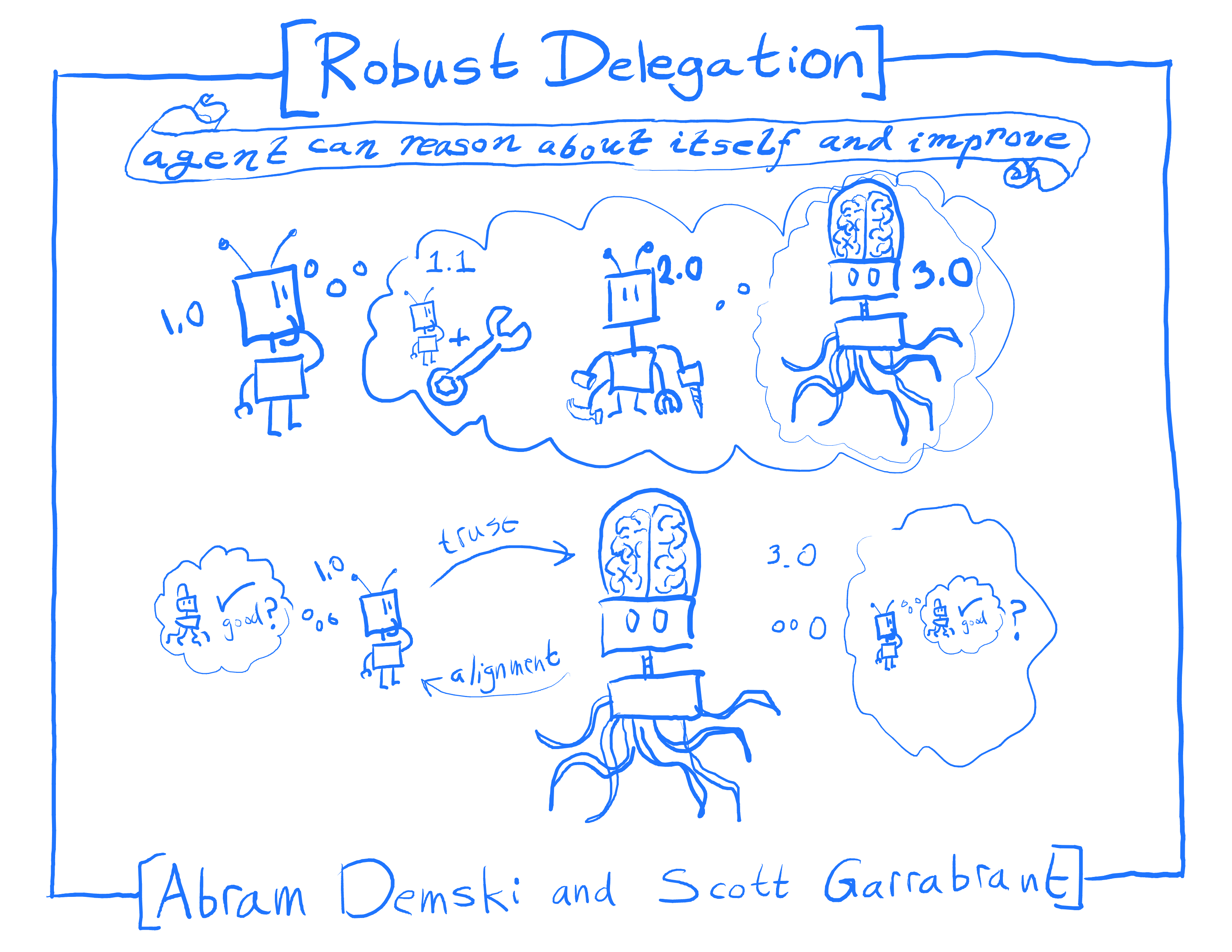

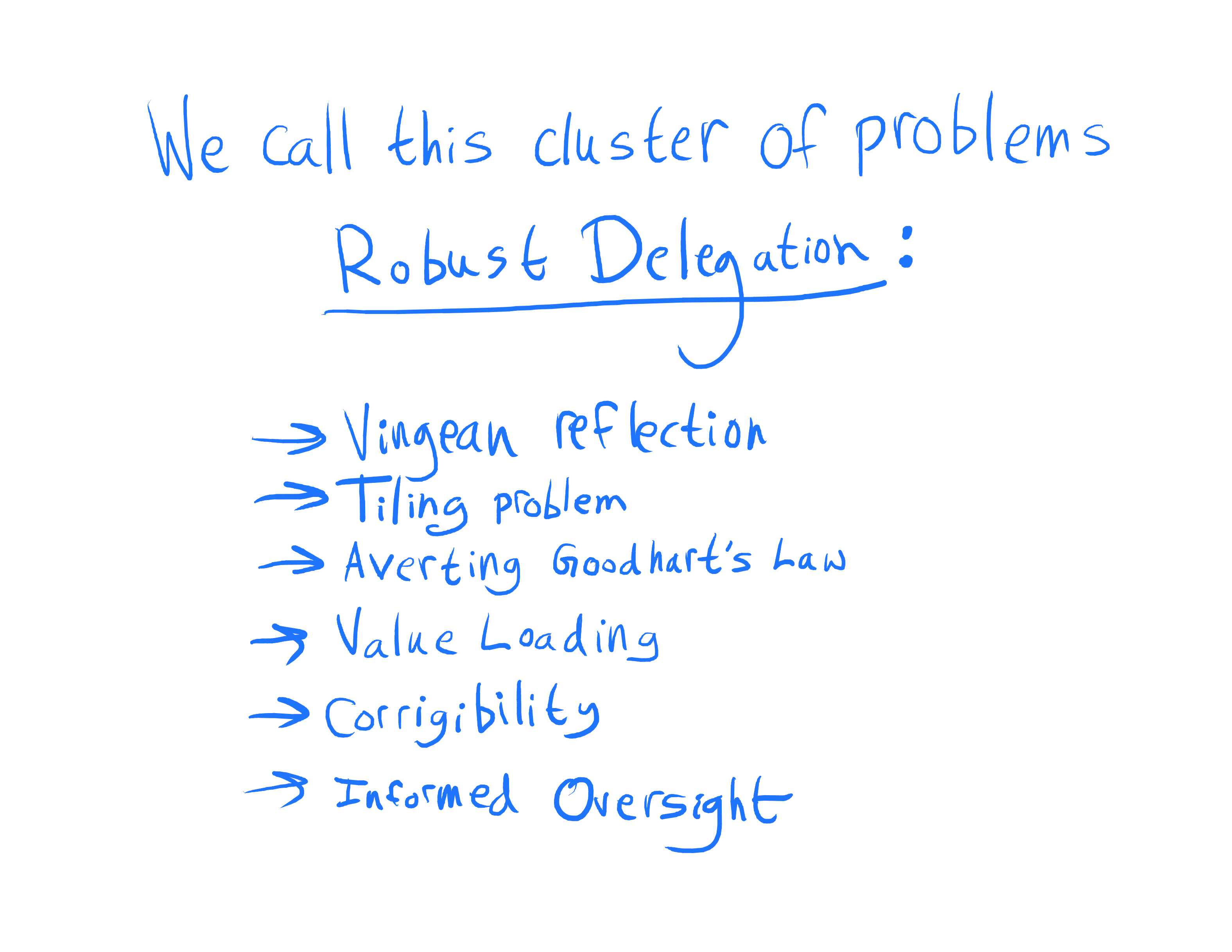

Robust Delegation

post by abramdemski, Scott Garrabrant · 2018-11-04T16:38:38.750Z · 10 comments

(A longer text-based version of this post is also available on MIRI's blog here, and the bibliography for the whole sequence can be found here)

10 comments

comment by Scott Garrabrant · 2018-11-05T19:00:14.227Z

Some last minute emphasis:

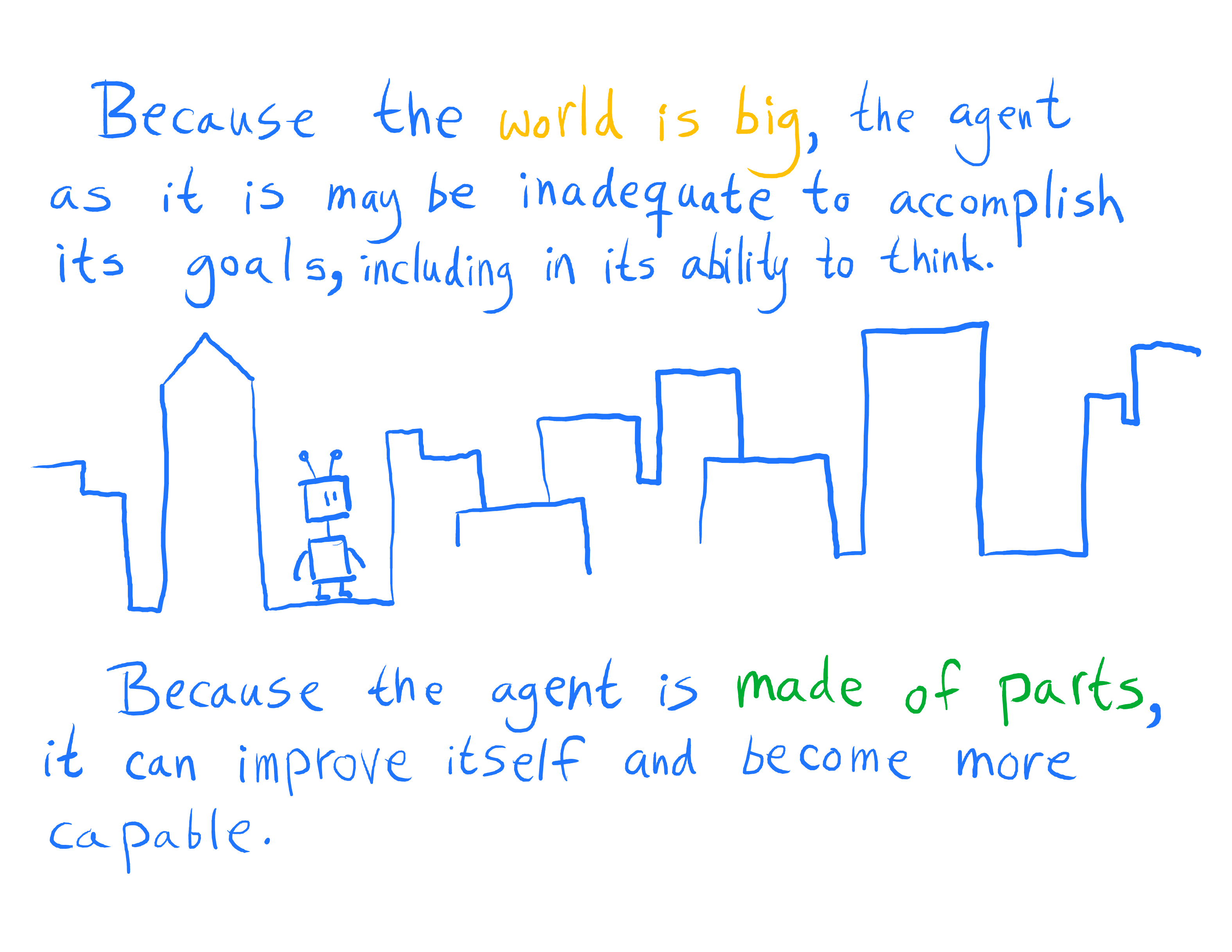

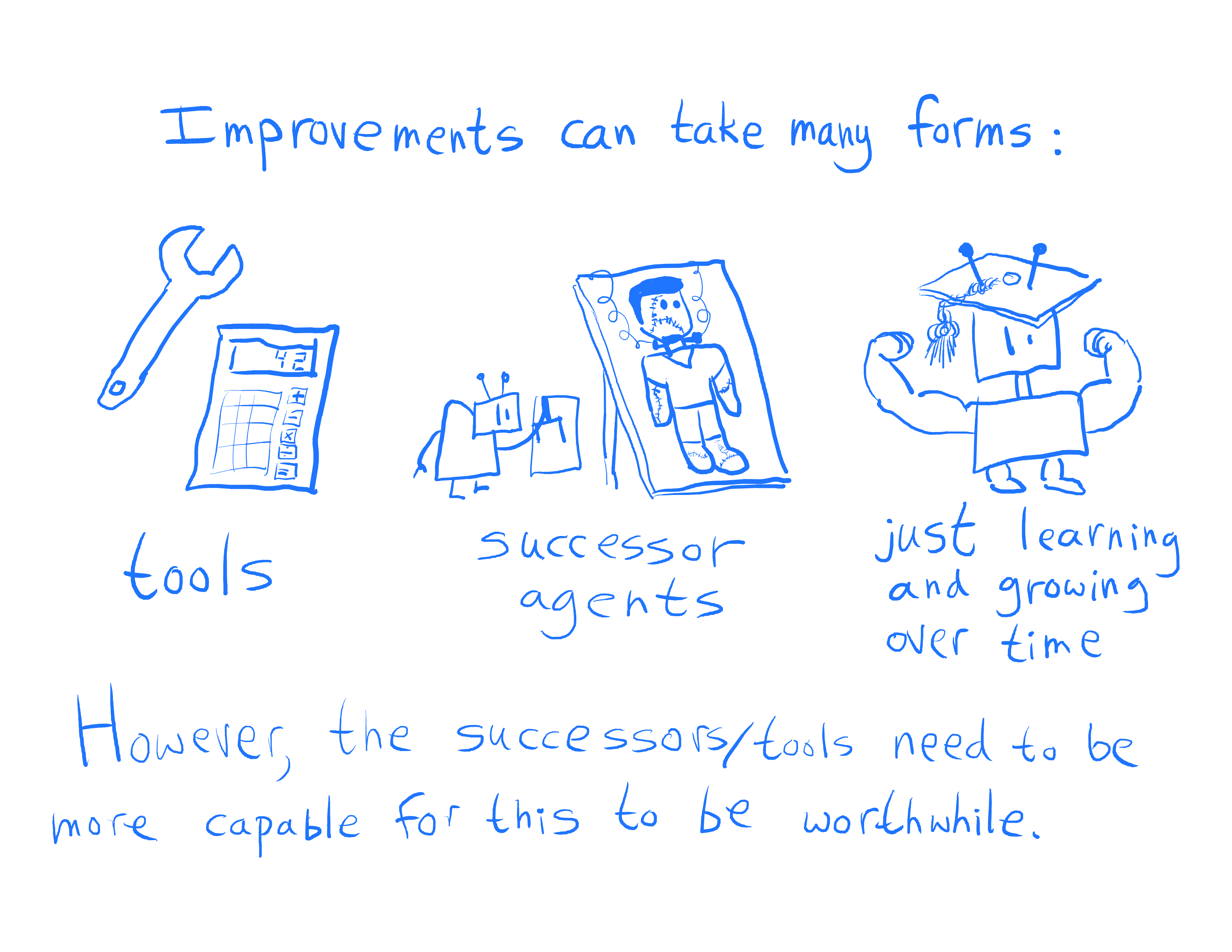

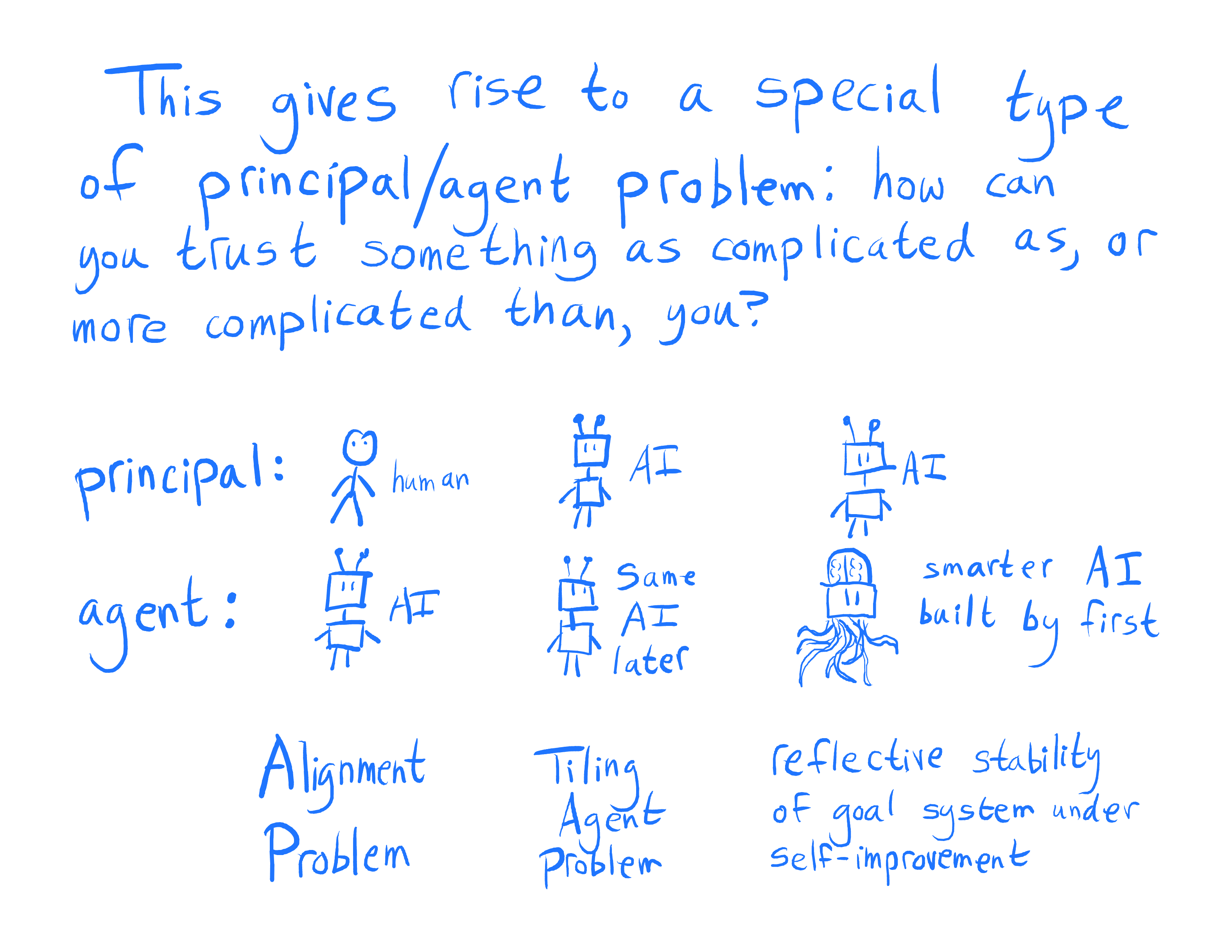

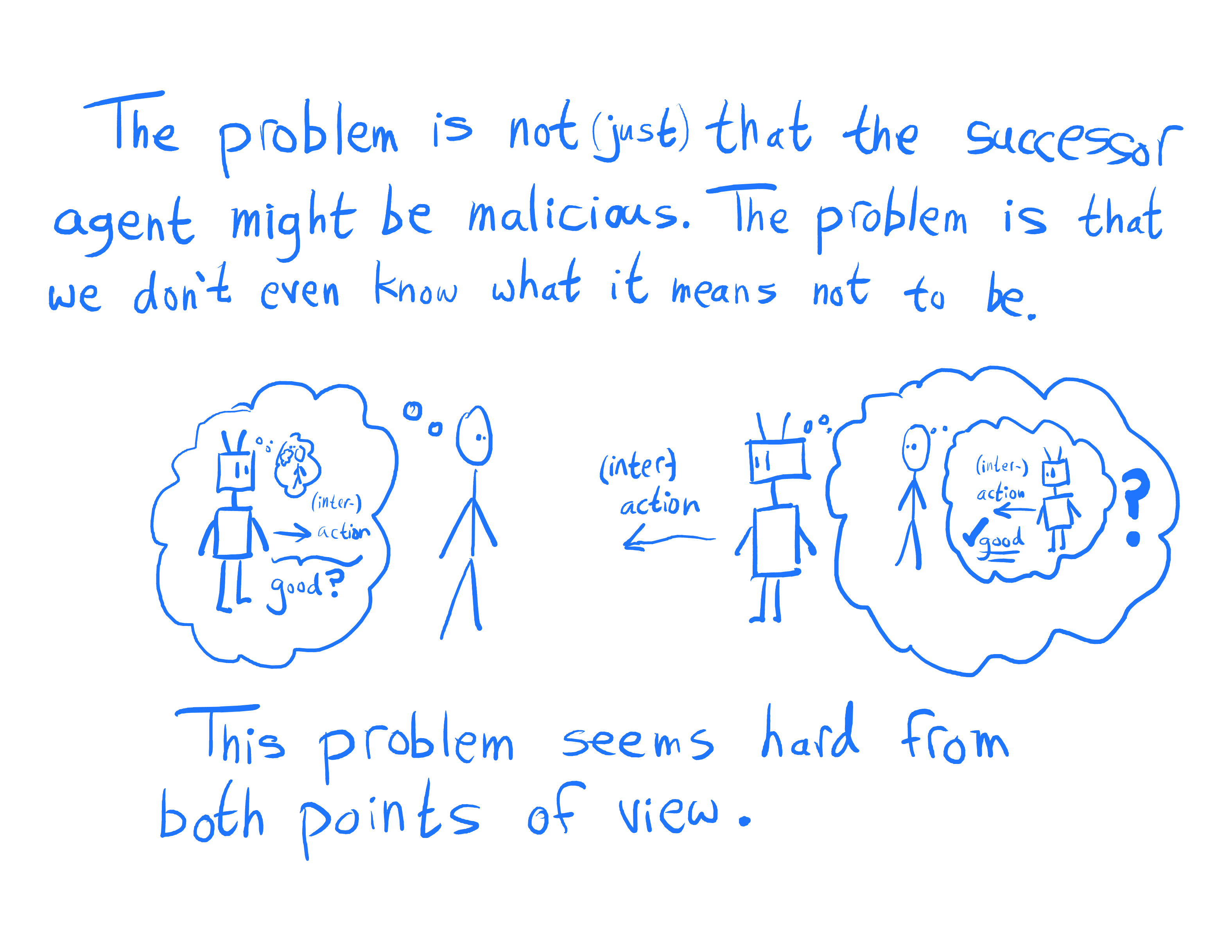

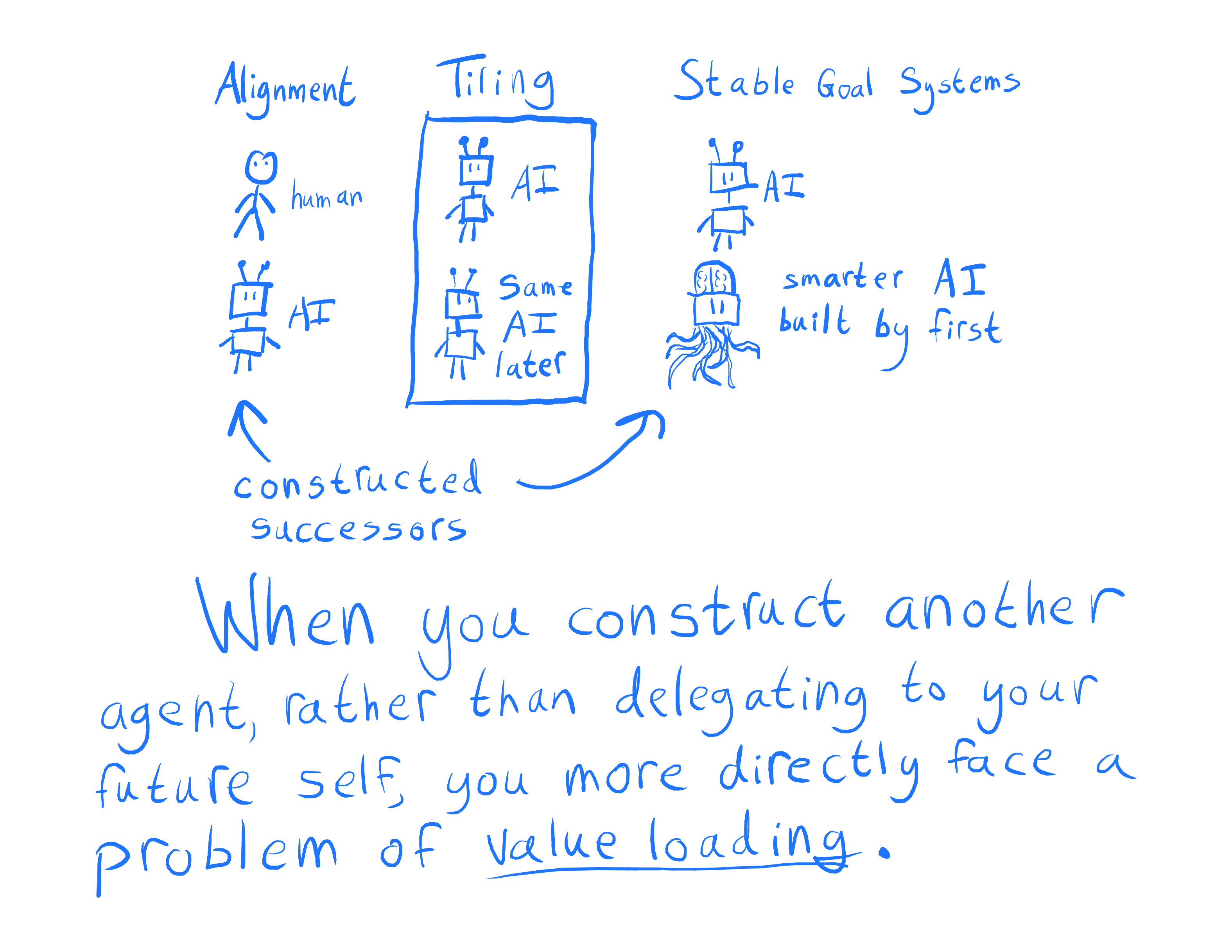

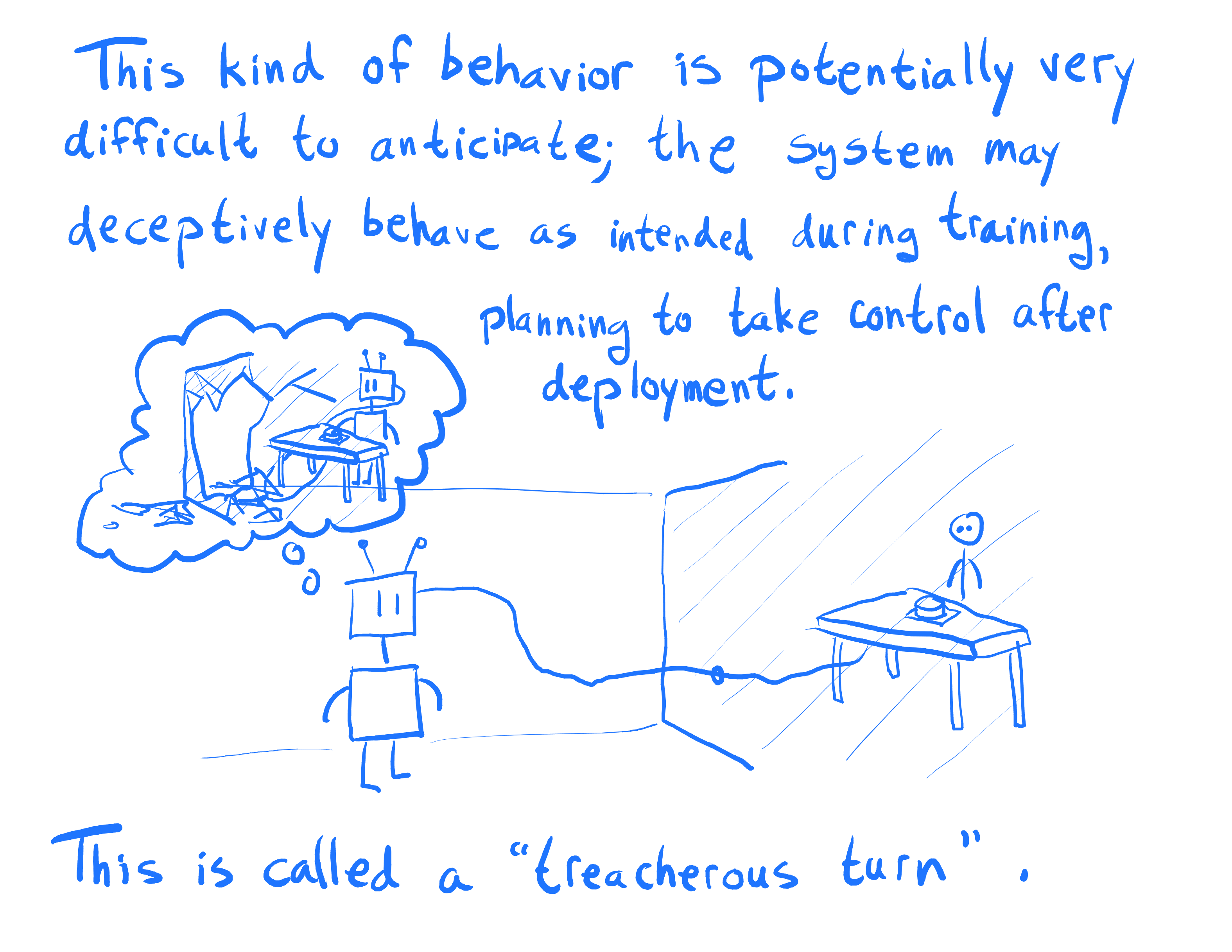

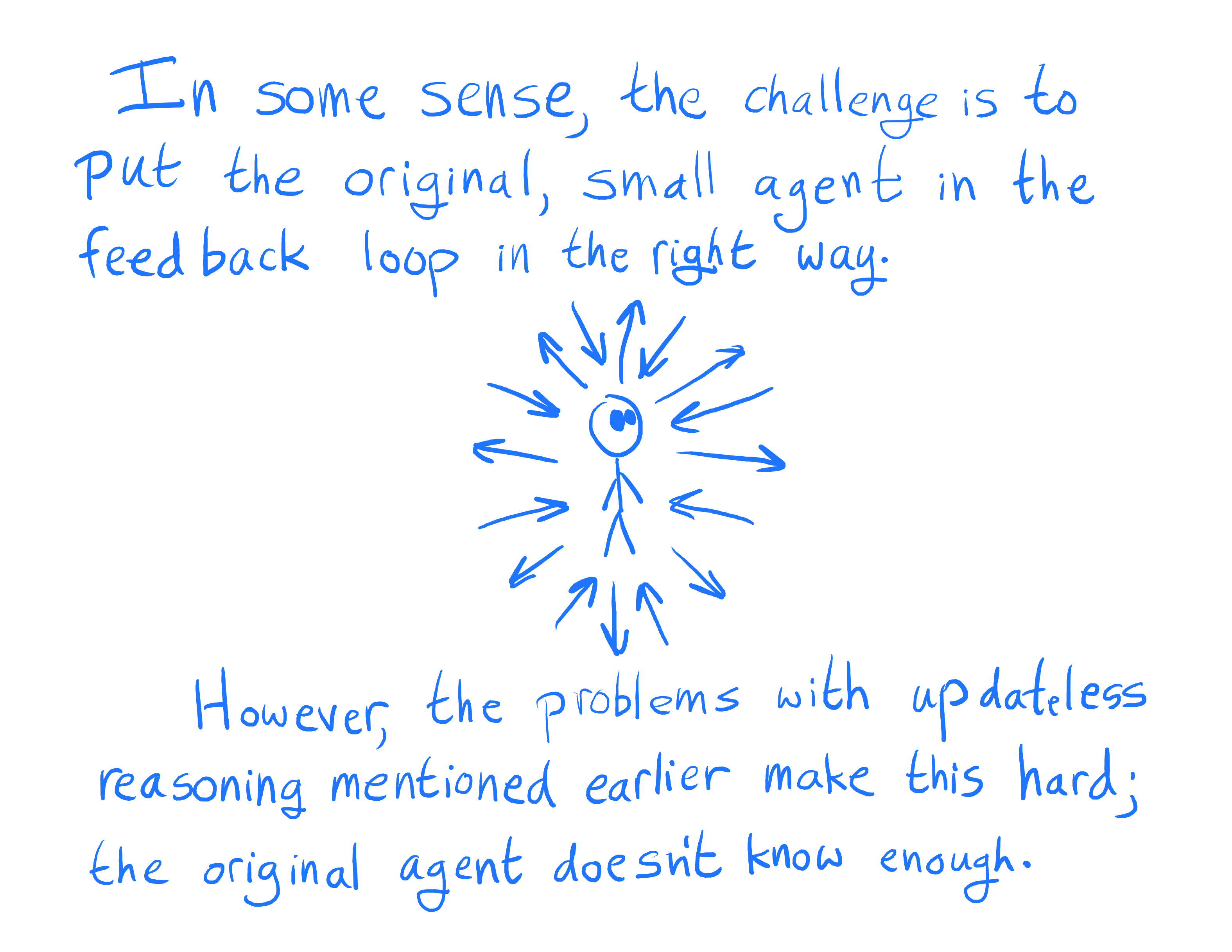

We kind of open with how agents have to grow and learn and be stable, but spend most of the time talking about this two-agent problem, where there is an initial agent and a successor agent. When thought of as the succession problem, it seems like a bit of a stretch as a fundamental part of agency. The first two sections were about how agents have to make decisions and have models, and choosing a successor does not seem like as fundamental a part of agency. However, when you think of it as an agent having to stably continue to optimize over time, it seems a lot more fundamental.

So, I want to emphasize that when we say there are multiple forms of the problem, like choosing successors or learning/growing over time, the view in which these are different at all is a dualistic view. To an embedded agent, the future self is not privileged, it is just another part of the environment, so there is no difference between making a successor and preserving your own goals.

It feels very different to humans. This is because it is much easier for us to change ourselves over time than it is to make a clone of ourselves and change the clone, but that difference is not fundamental.

↑ comment by Rob Bensinger (RobbBB) · 2018-11-14T21:02:16.714Z

(Abram has added a note to this effect in the post above, and in the text version.)

comment by Pattern · 2018-11-05T04:16:33.104Z

I think this has been the longest and most informationally dense/complicated part of the sequence so far. It's a lot to take in, and definitely worth reading a couple times. That said, this is a great sequence, and I look forward to the next installment.

comment by Davidmanheim · 2018-11-04T18:45:36.455Z

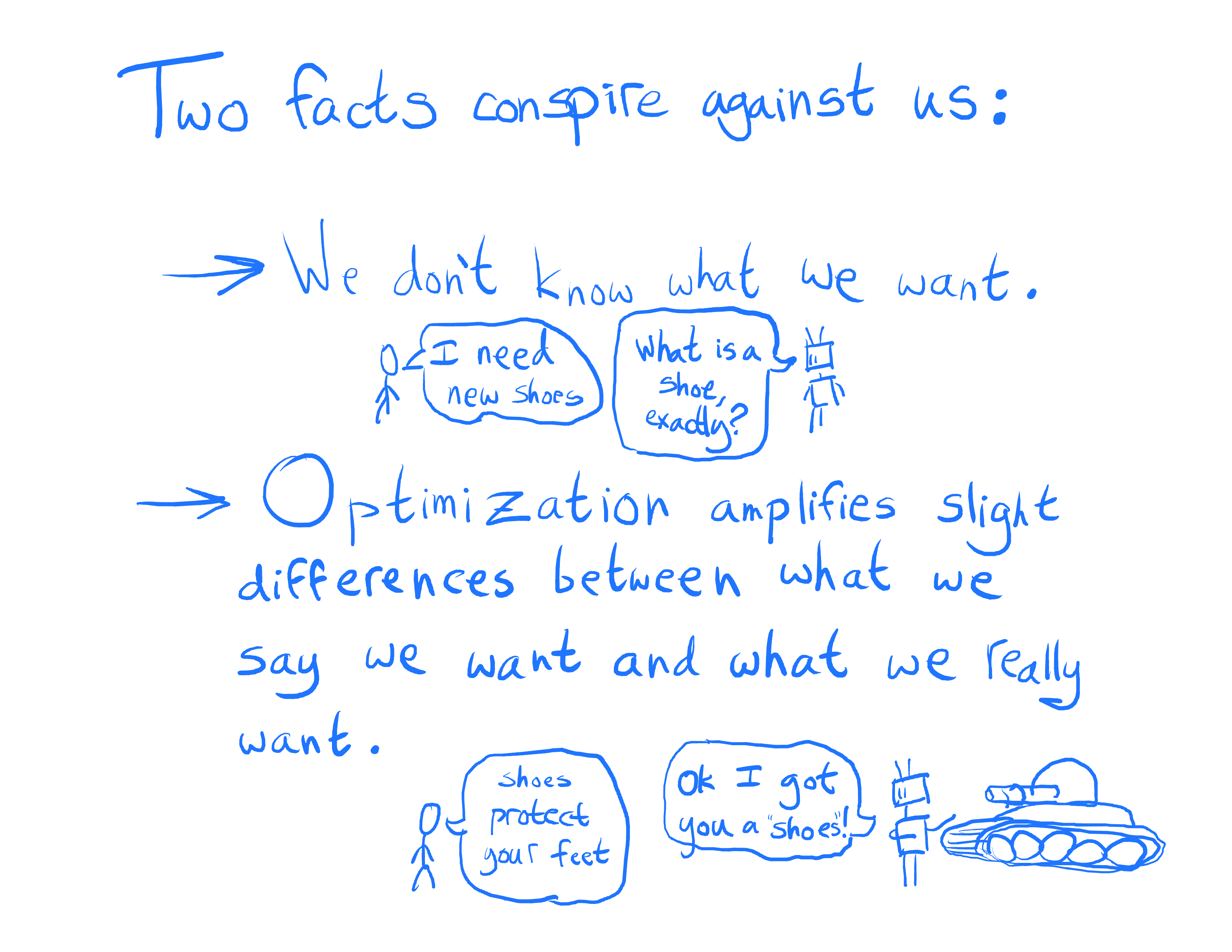

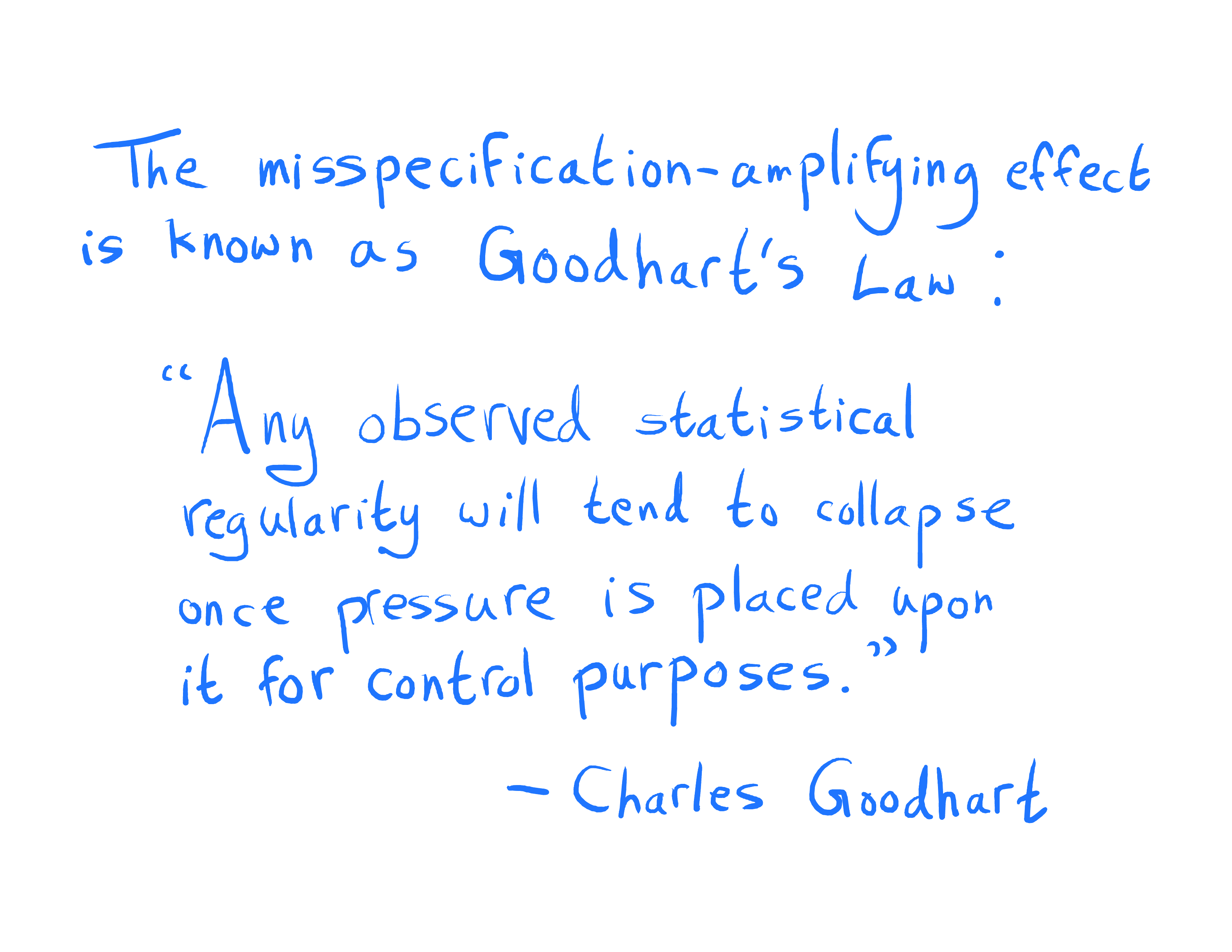

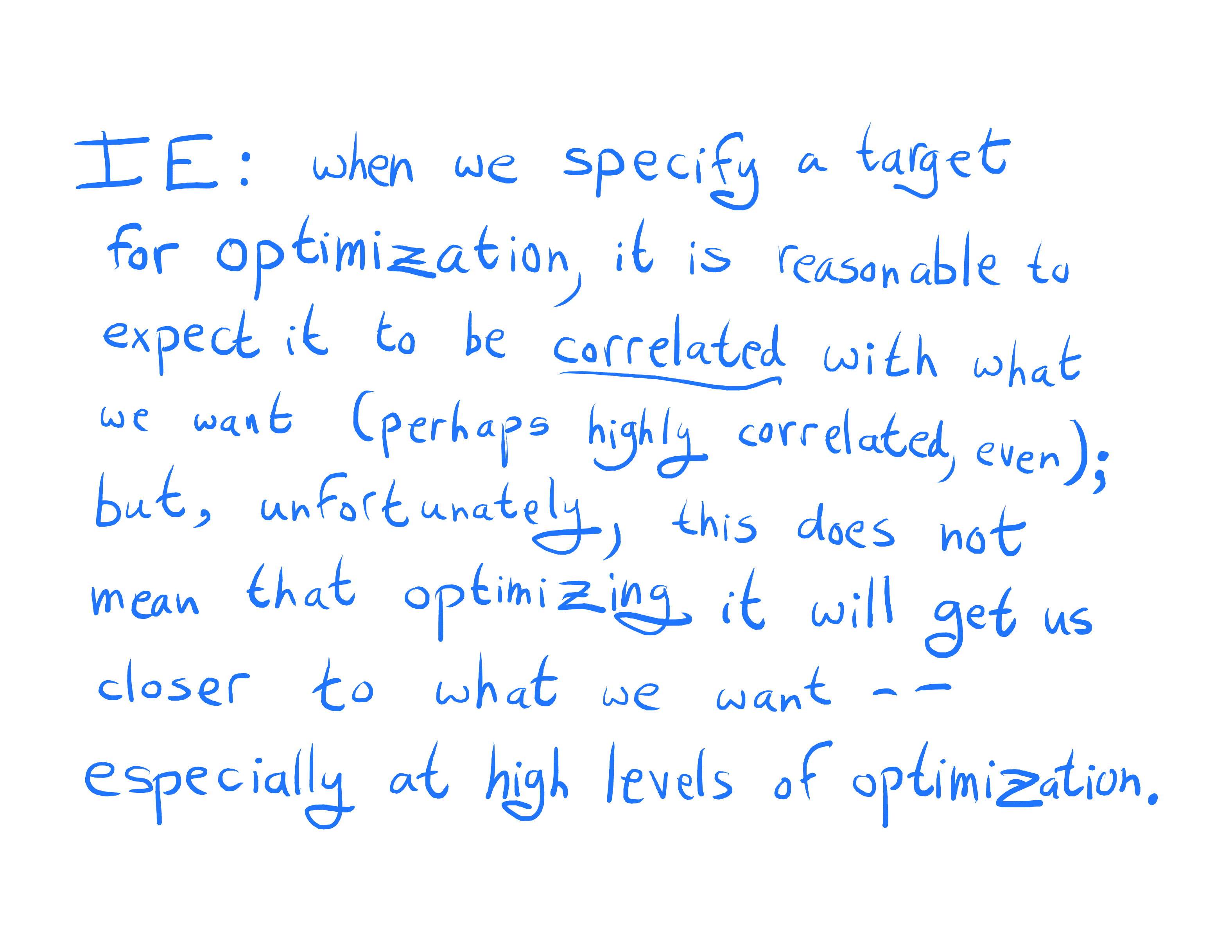

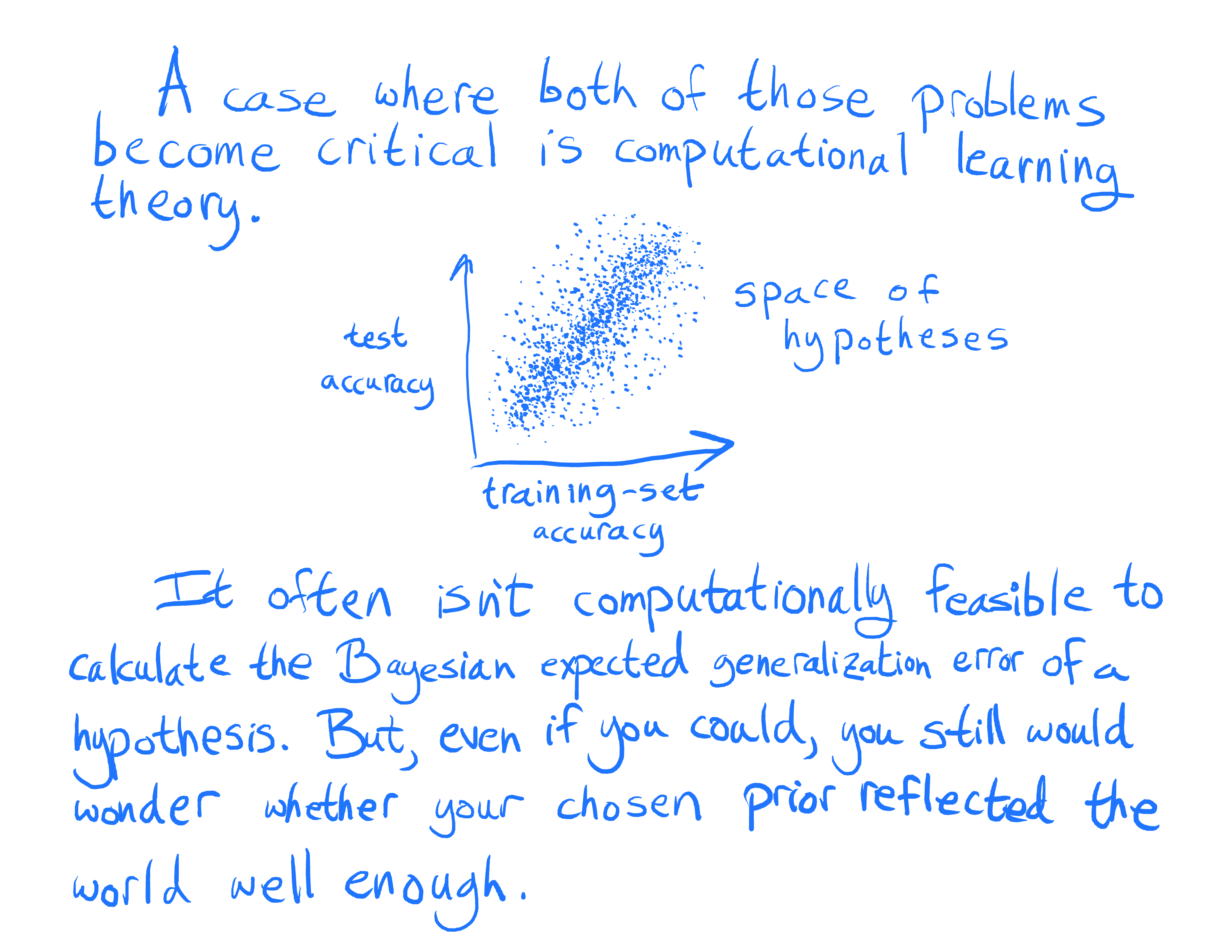

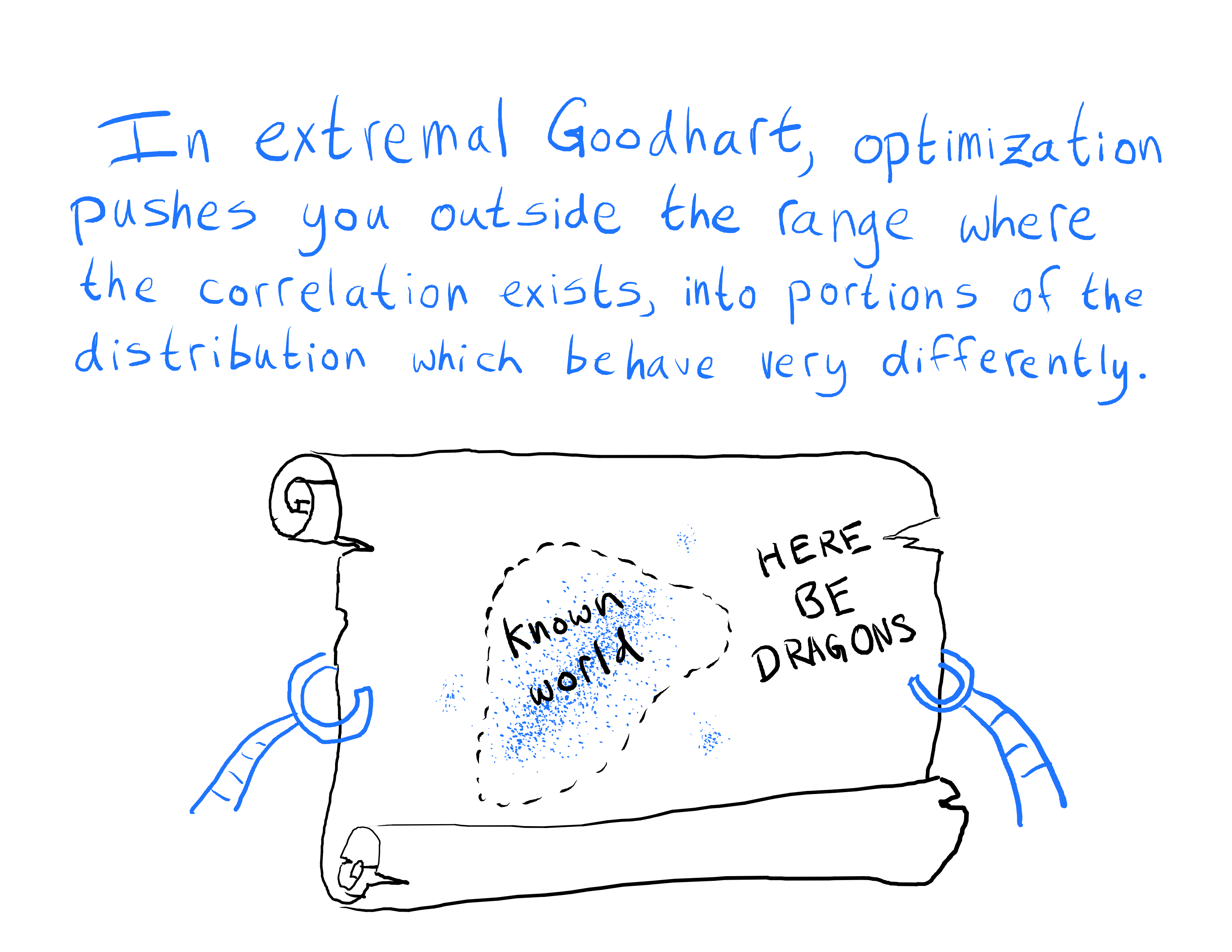

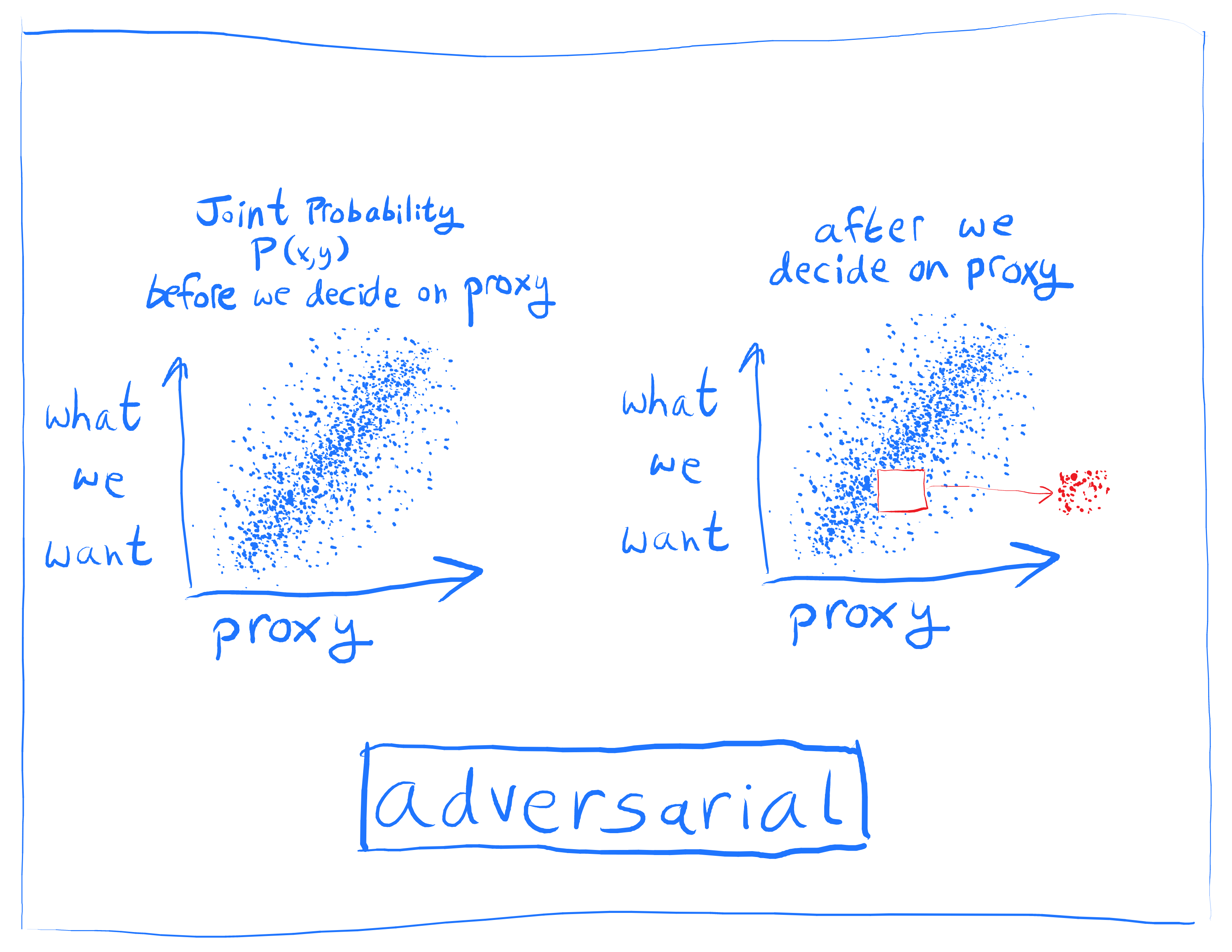

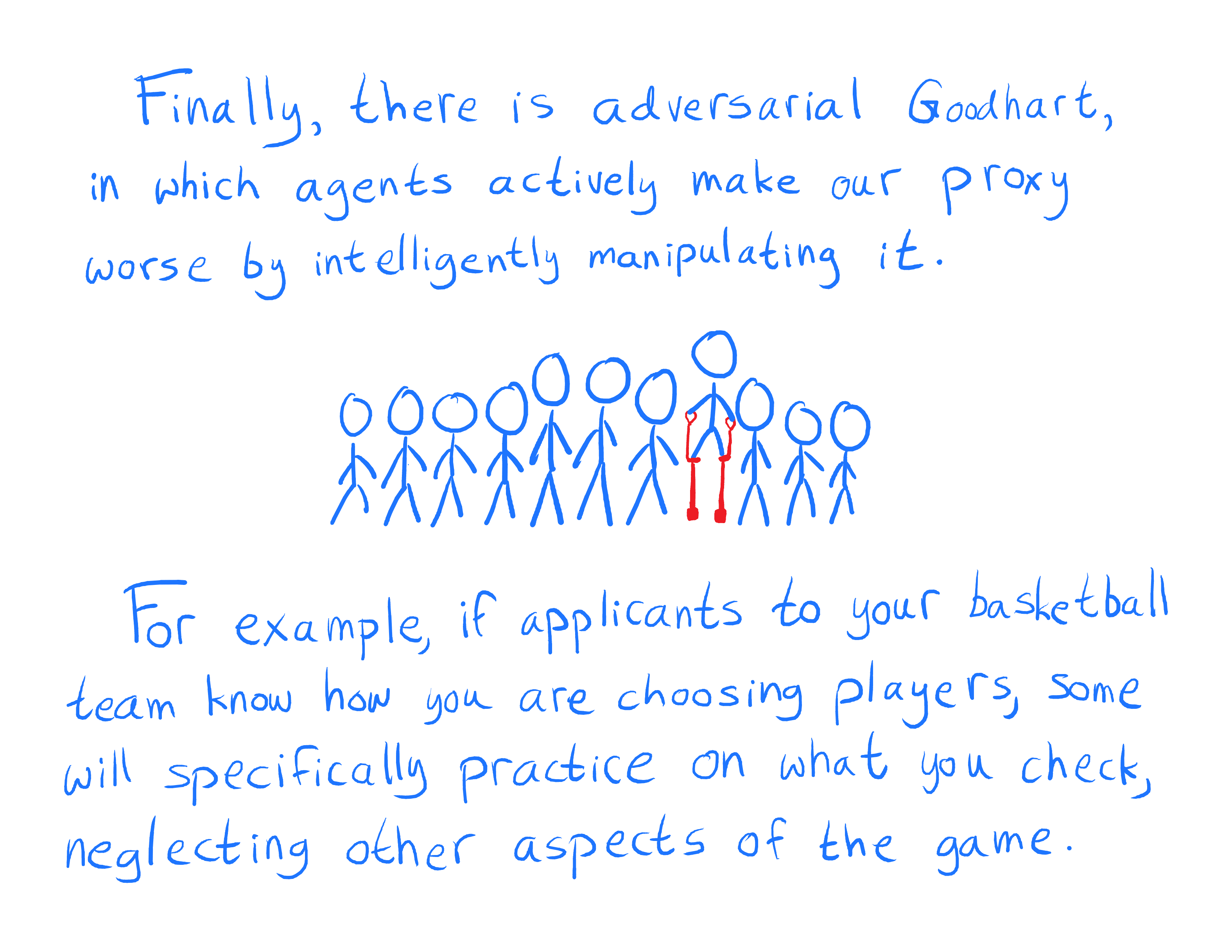

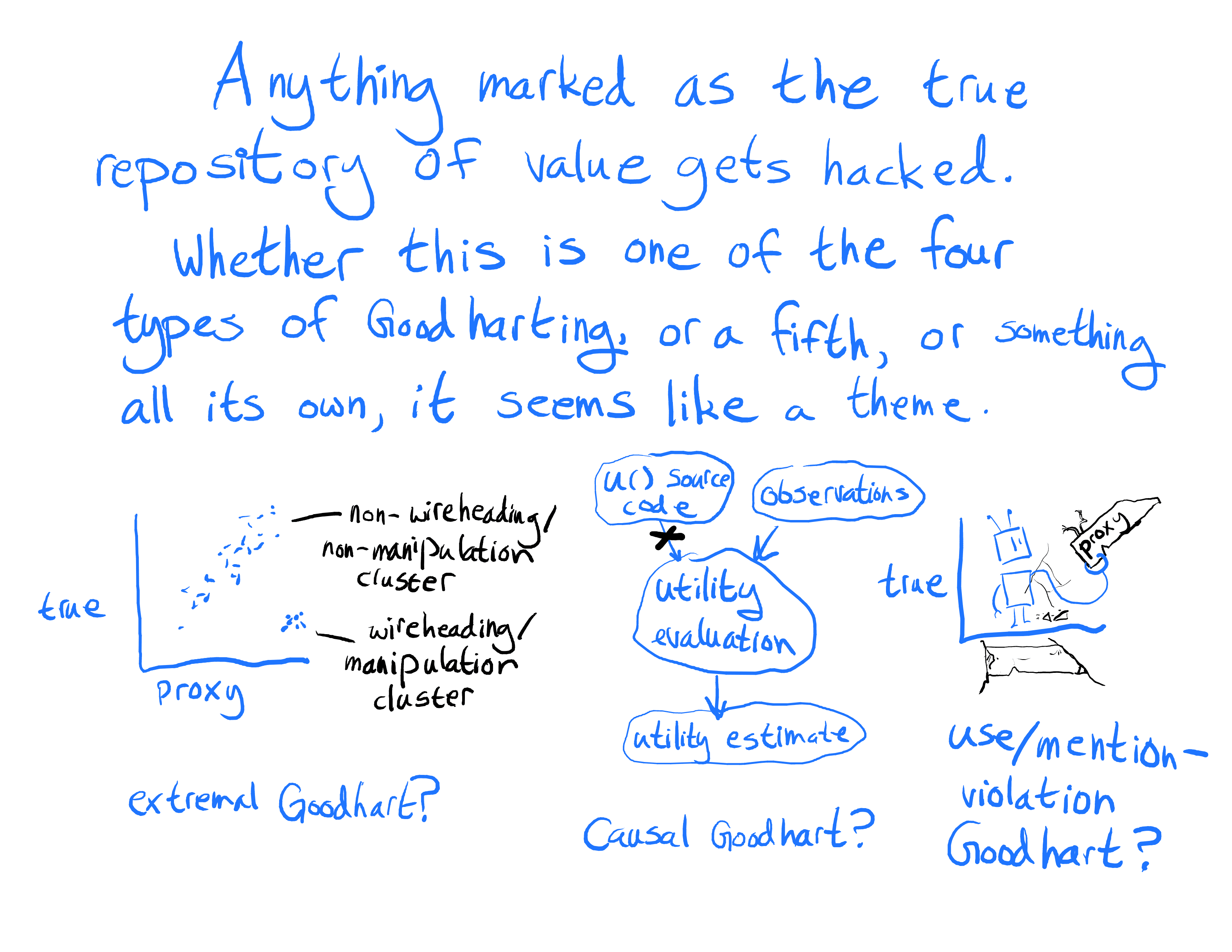

I want to expand a bit on adversarial Goodhart, which this post describes as another agent actively attempting to make the metric fail. The paper I wrote with Scott split it into several sub-categories, but I now think of it in somewhat simpler terms. Nothing special happens in the multi-agent setting in terms of metrics or models; it's the same three failure modes we see in the single-agent case.

What changes more fundamentally is that there are now coordination problems, resource contention, and game-theoretic dynamics that can make the problem potentially much worse in practice. I'm beginning to think of these multi-agent issues as more closely related to the other parts of embedded agency (needing small models of complex systems, reflexive consistency, and needing self-models), as well as to issues less intrinsically about embedded agency: coordination problems and game-theoretic competition.

comment by Shmi (shminux) · 2018-11-05T03:18:27.637Z

Really enjoying reading every post in this sequence! Strangely, the handwritten text does not detract from it; if anything, it makes each post more readable.

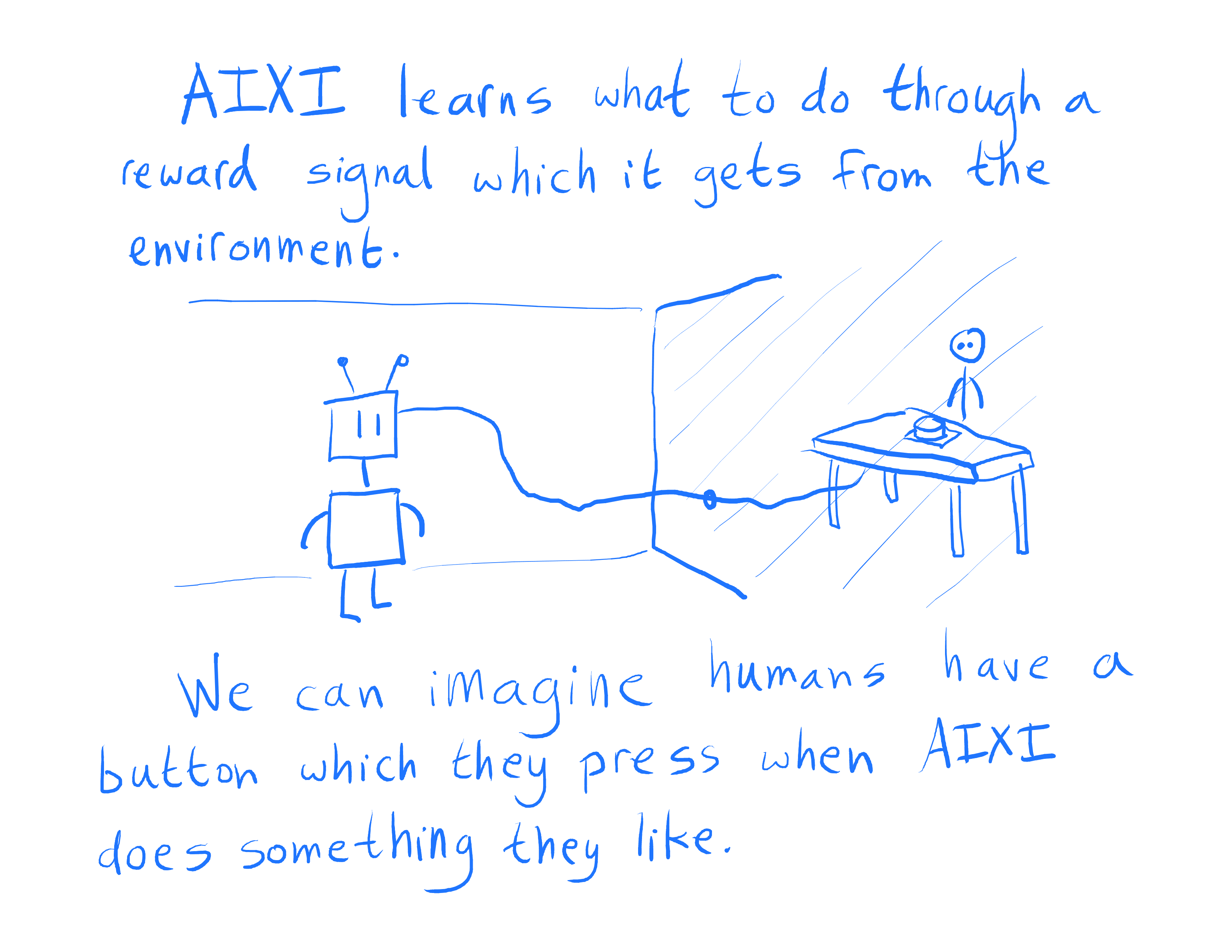

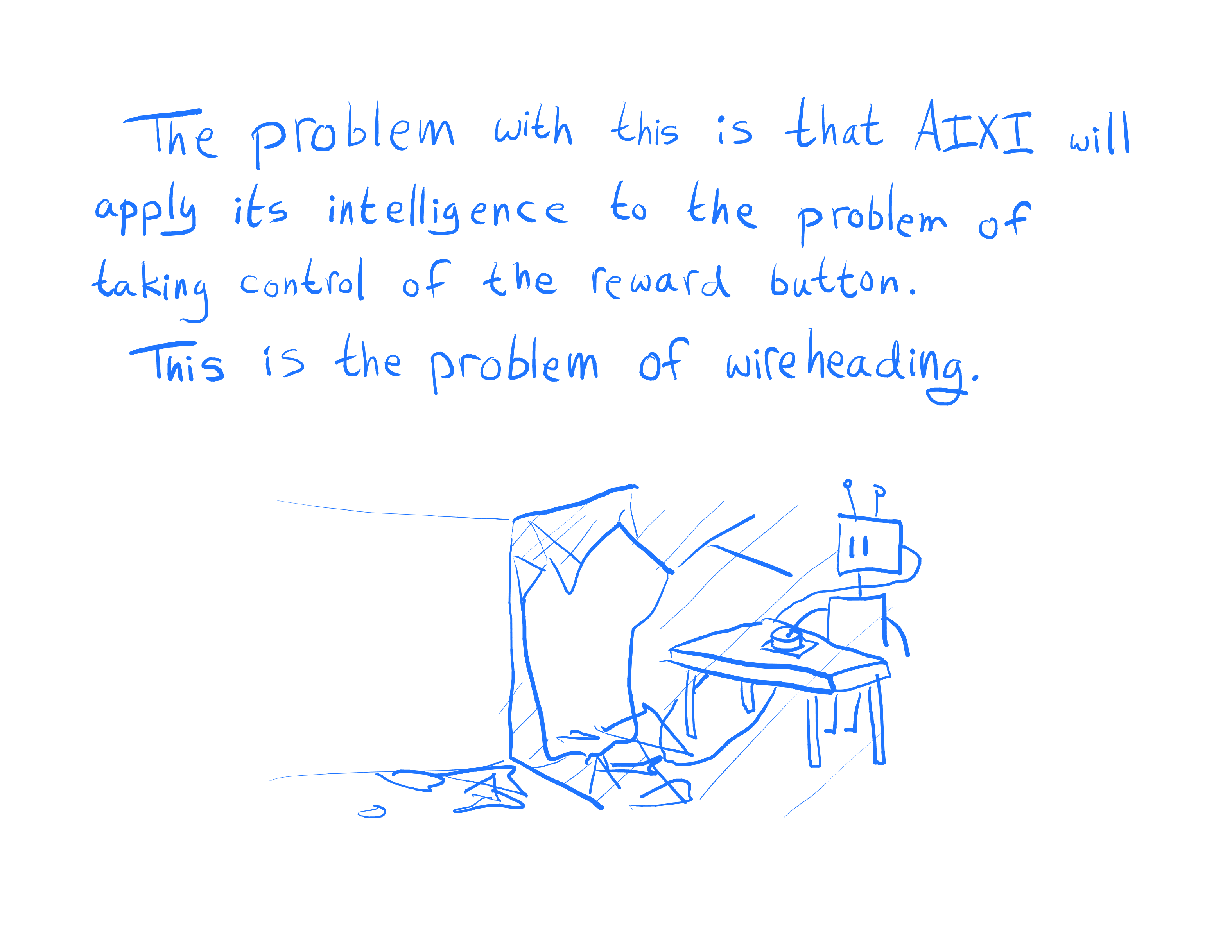

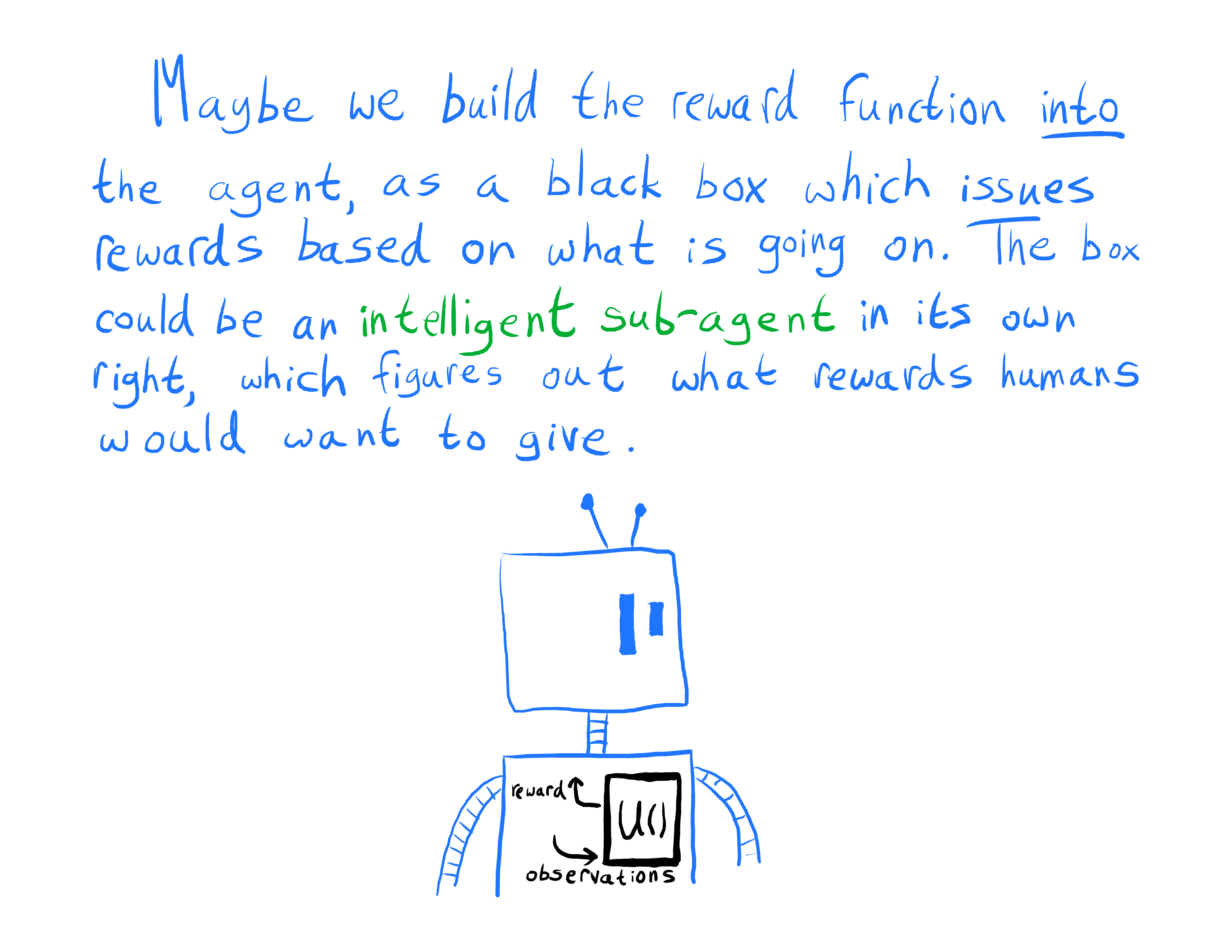

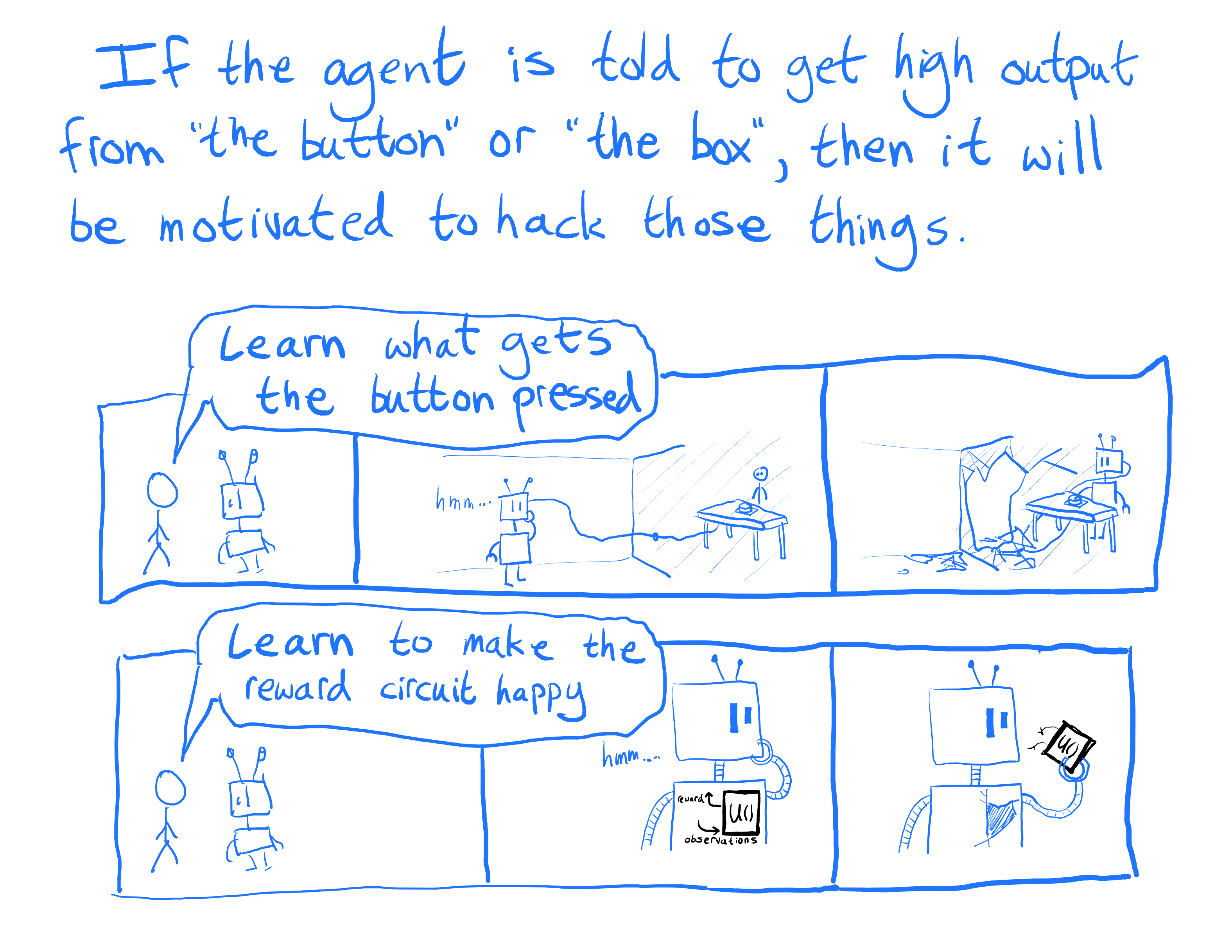

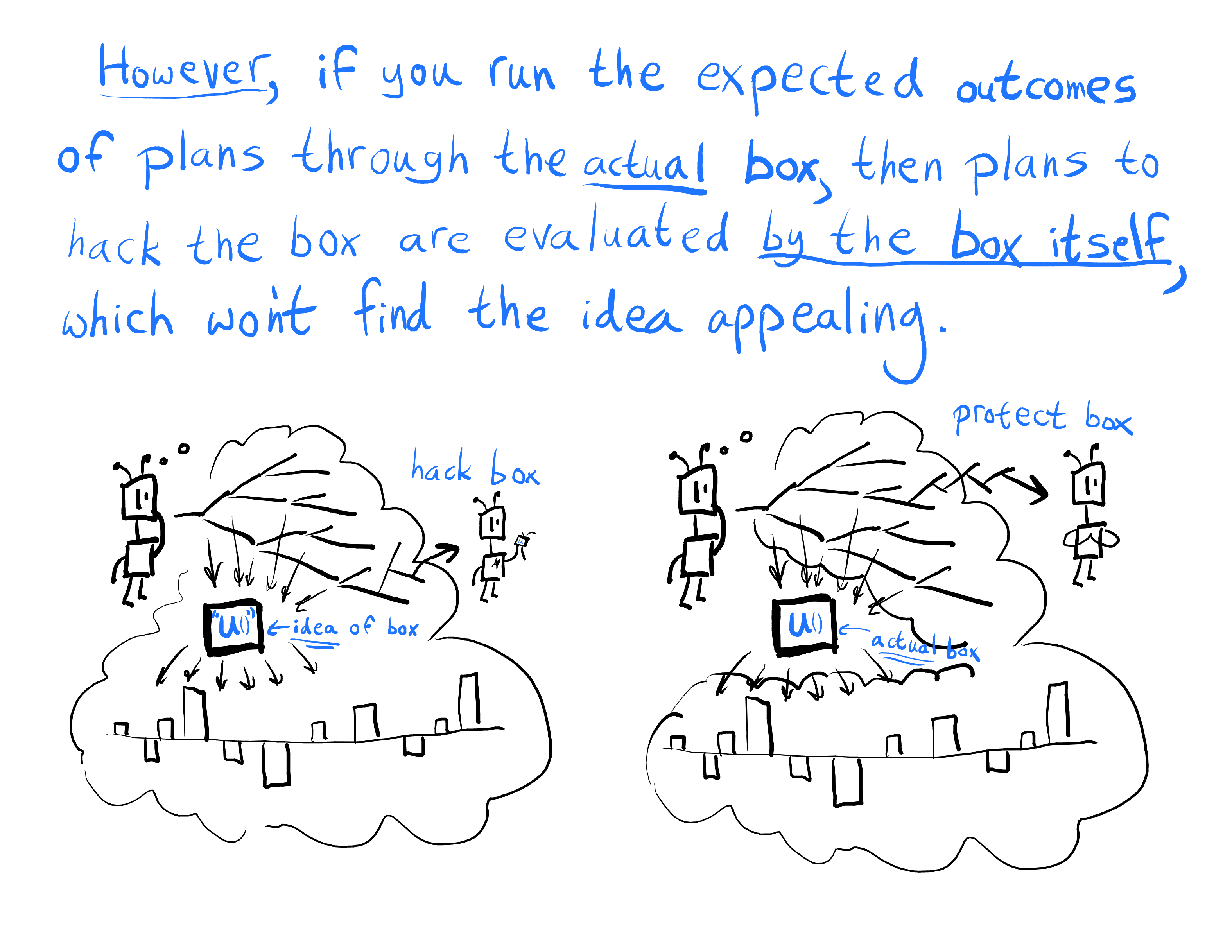

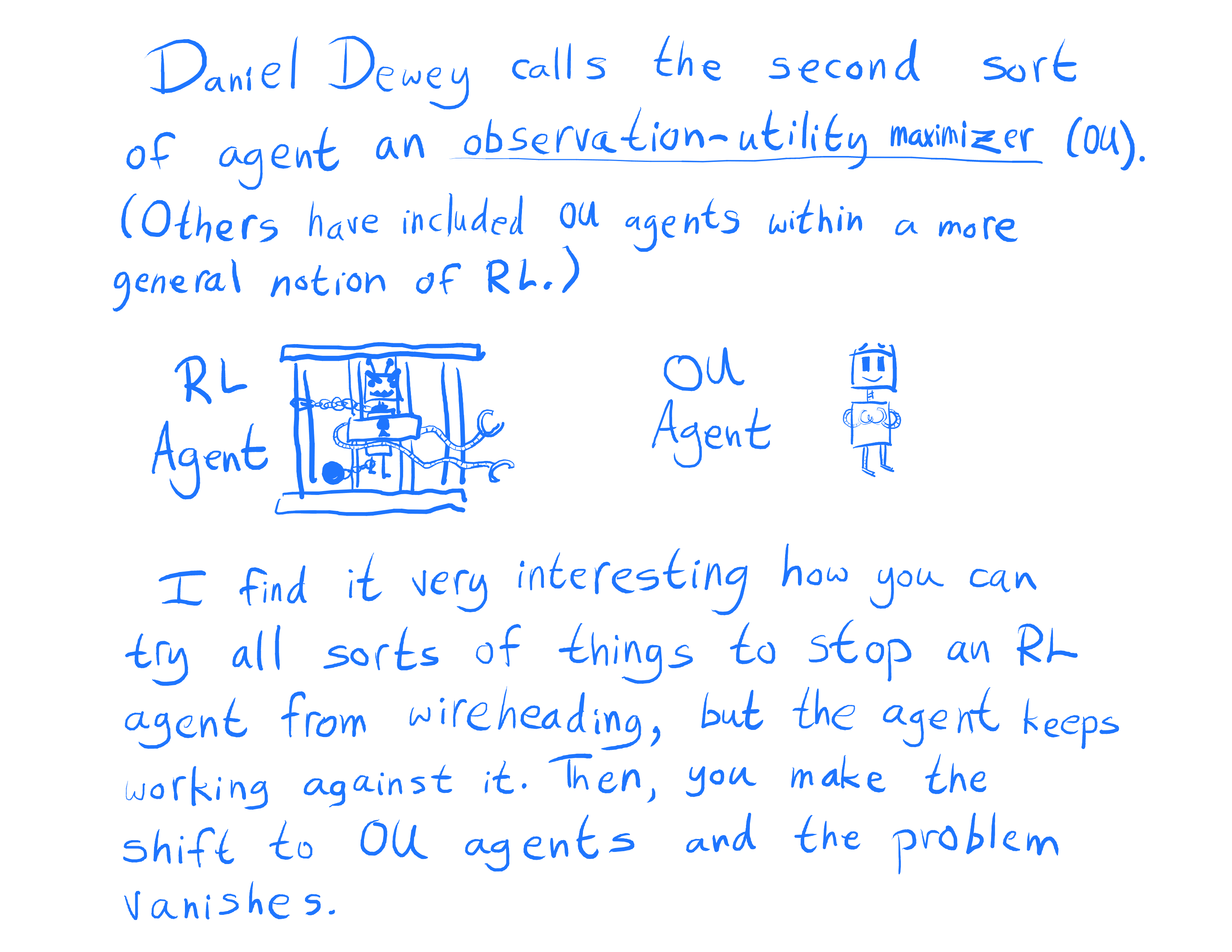

I wonder why most humans are not fans of wireheading, at least not until they are addicted to it. Do we naturally think in terms of an "unhacked box" when evaluating the usefulness of something?

↑ comment by Davidmanheim · 2018-11-05T06:35:39.198Z

I usually think of the non-wireheading preference in terms of multiple values: humans value both freedom and pleasure, and we are not willing to maximize one fully at the expense of the other. Wireheading is always defined as giving up freedom of action in order to maximize "pleasure", defined in some way that does not include choice.

comment by Chris_Leong · 2018-11-20T12:33:34.732Z

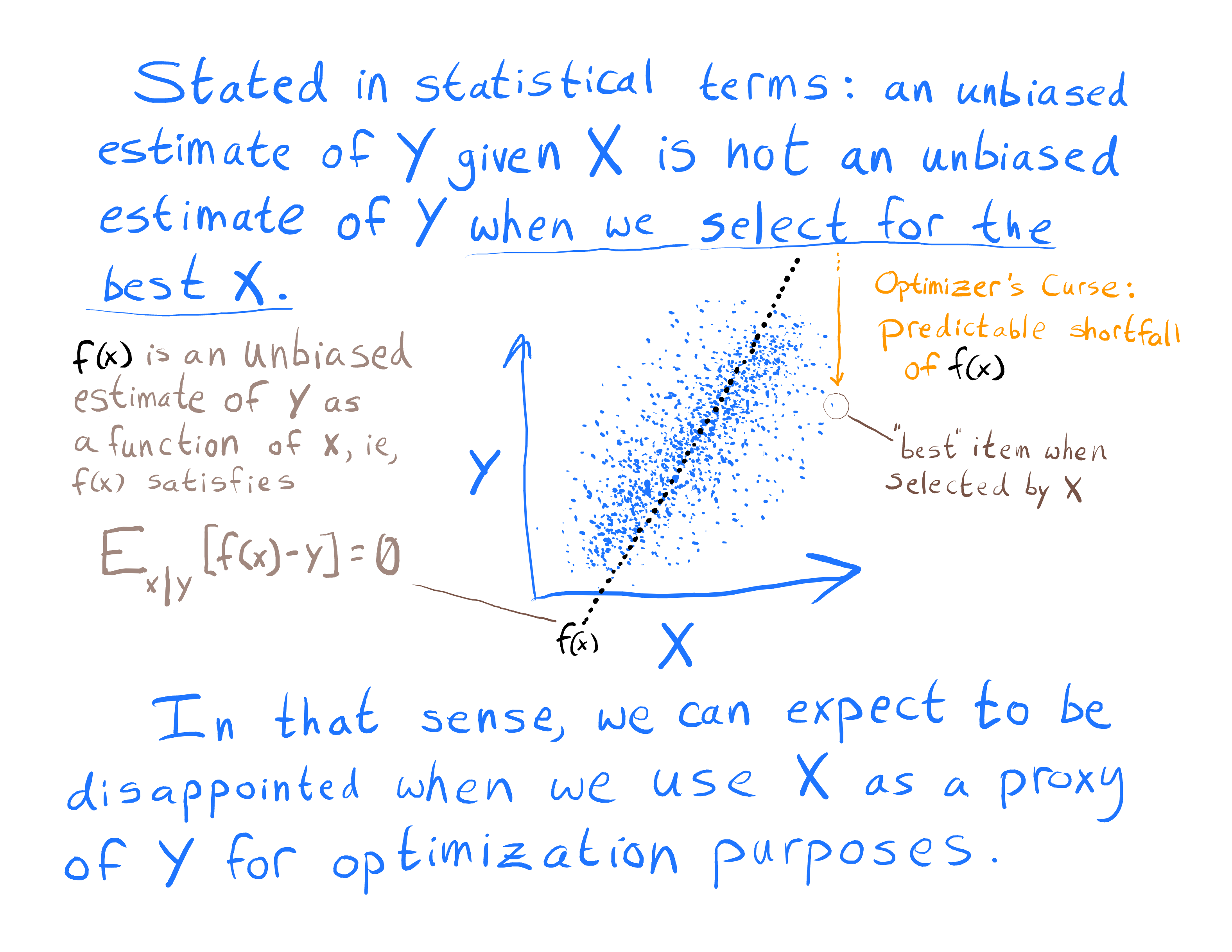

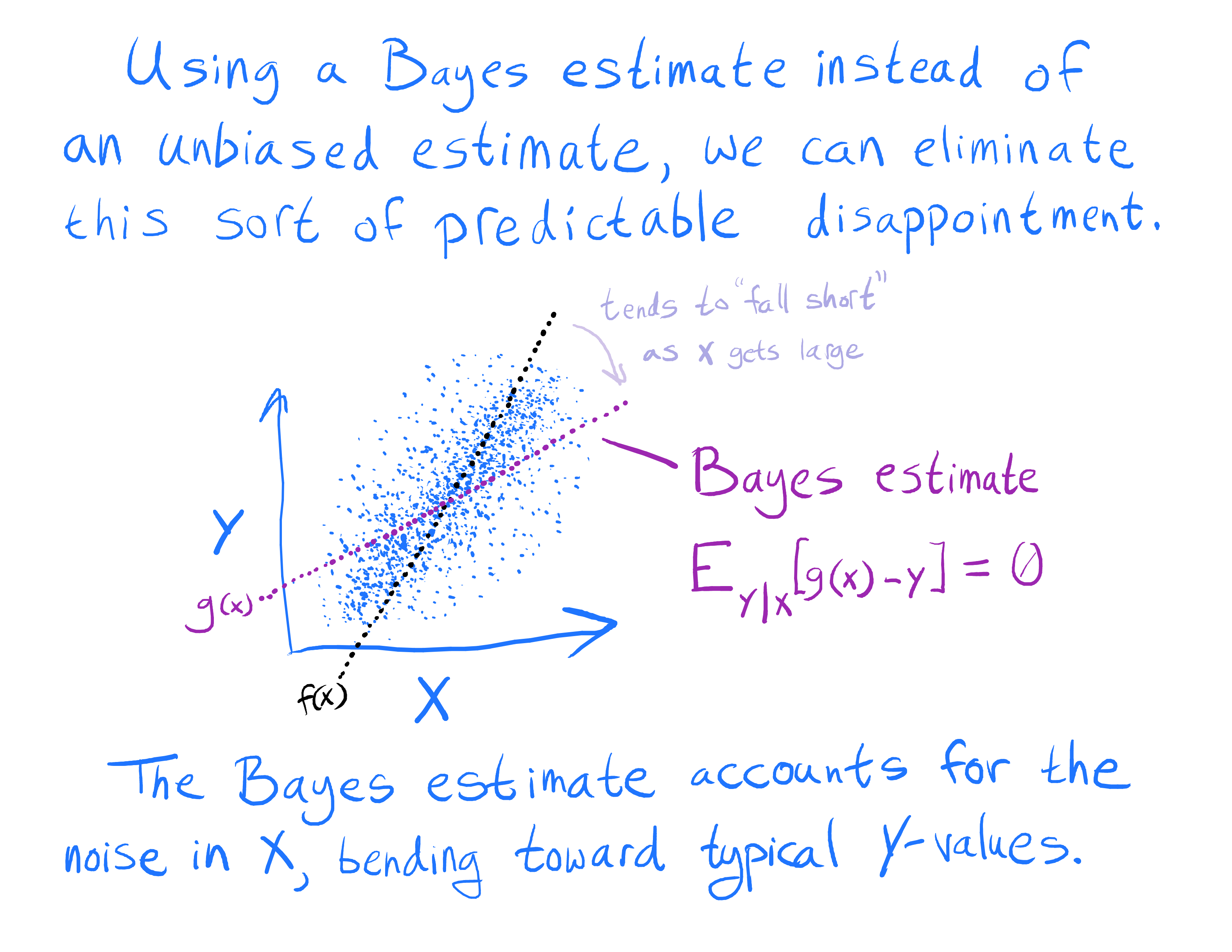

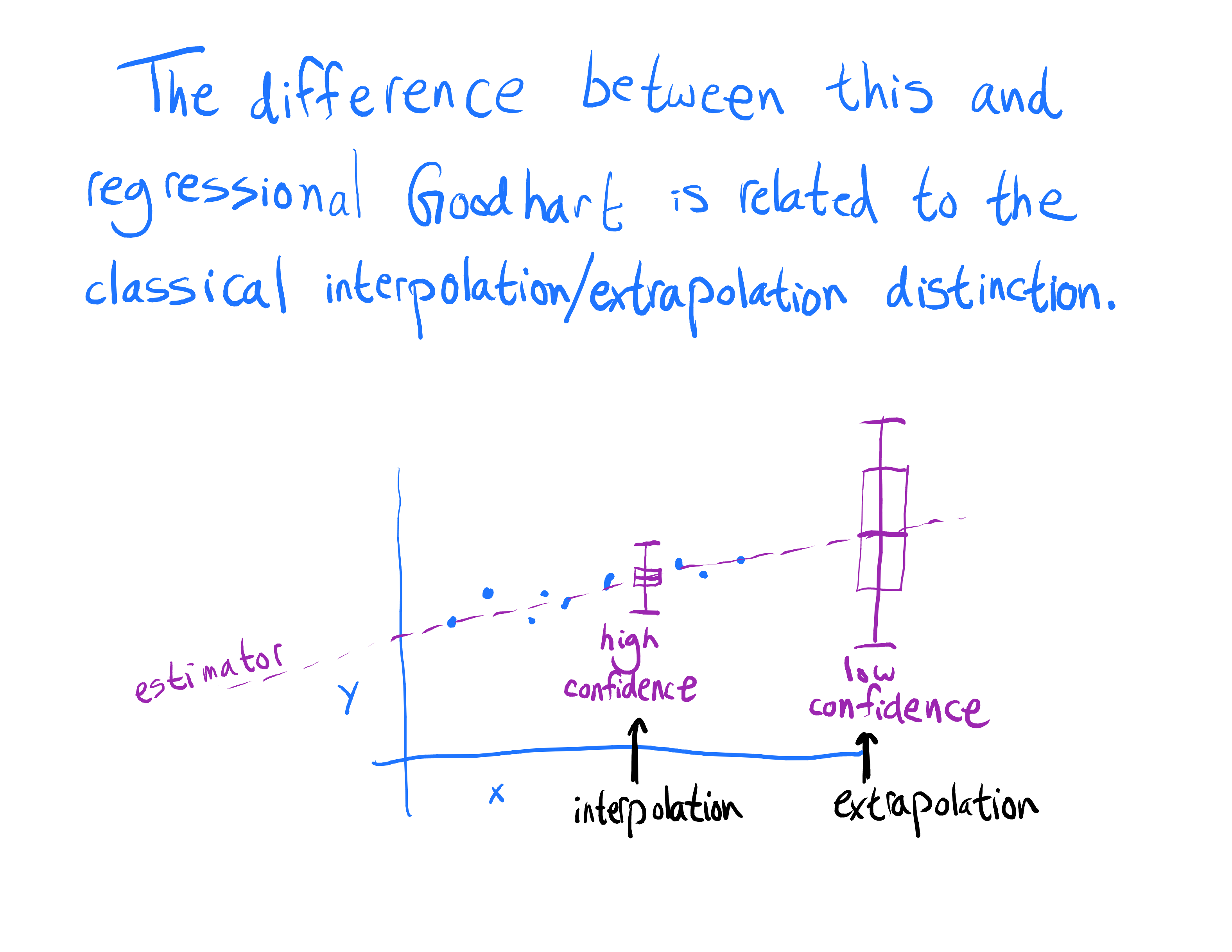

Could anyone help explain the difference between an unbiased estimator and a Bayes estimator? The unbiased estimator is unbiased on what exactly? The already existing data or new data points? And surely the Bayes estimator is unbiased in some sense as well, but in what sense?

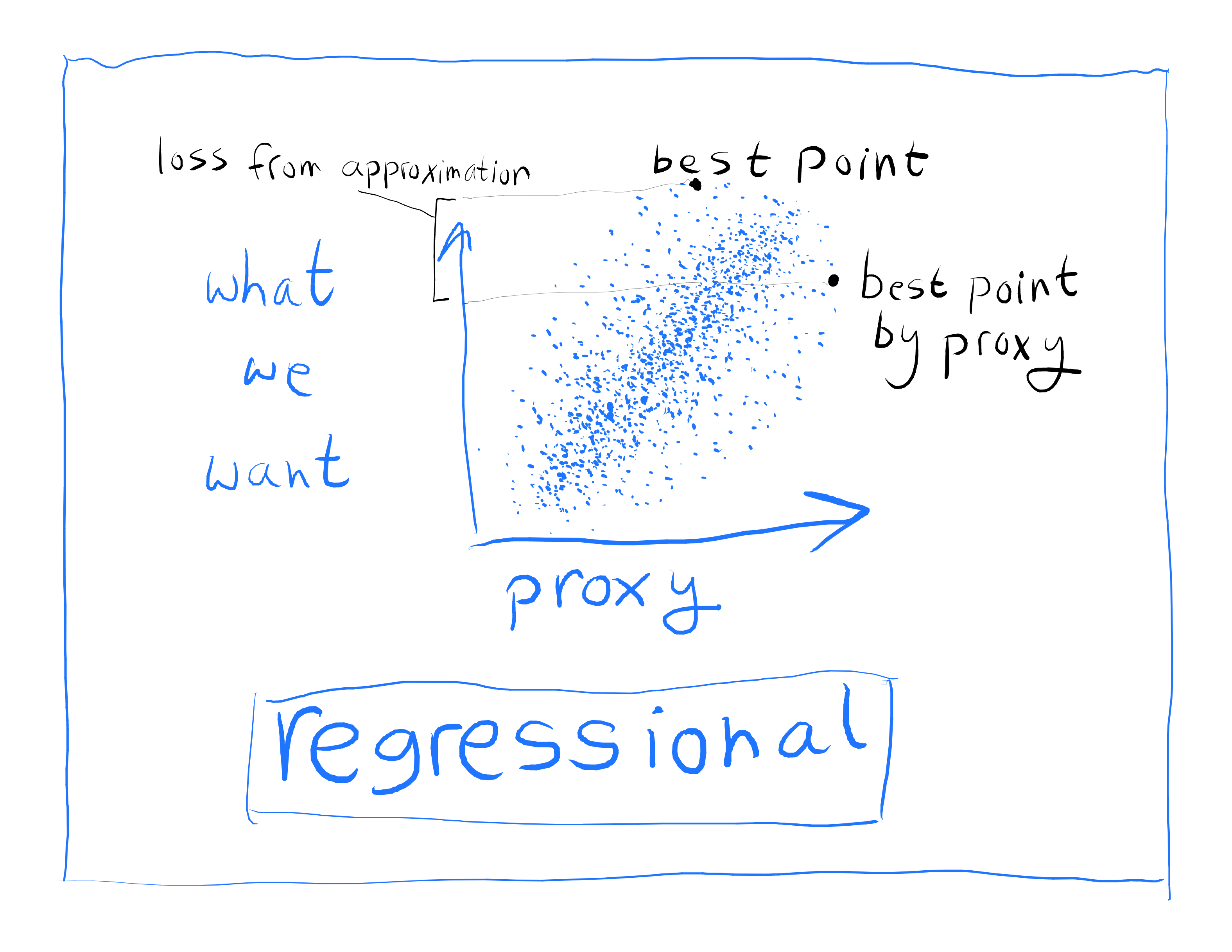

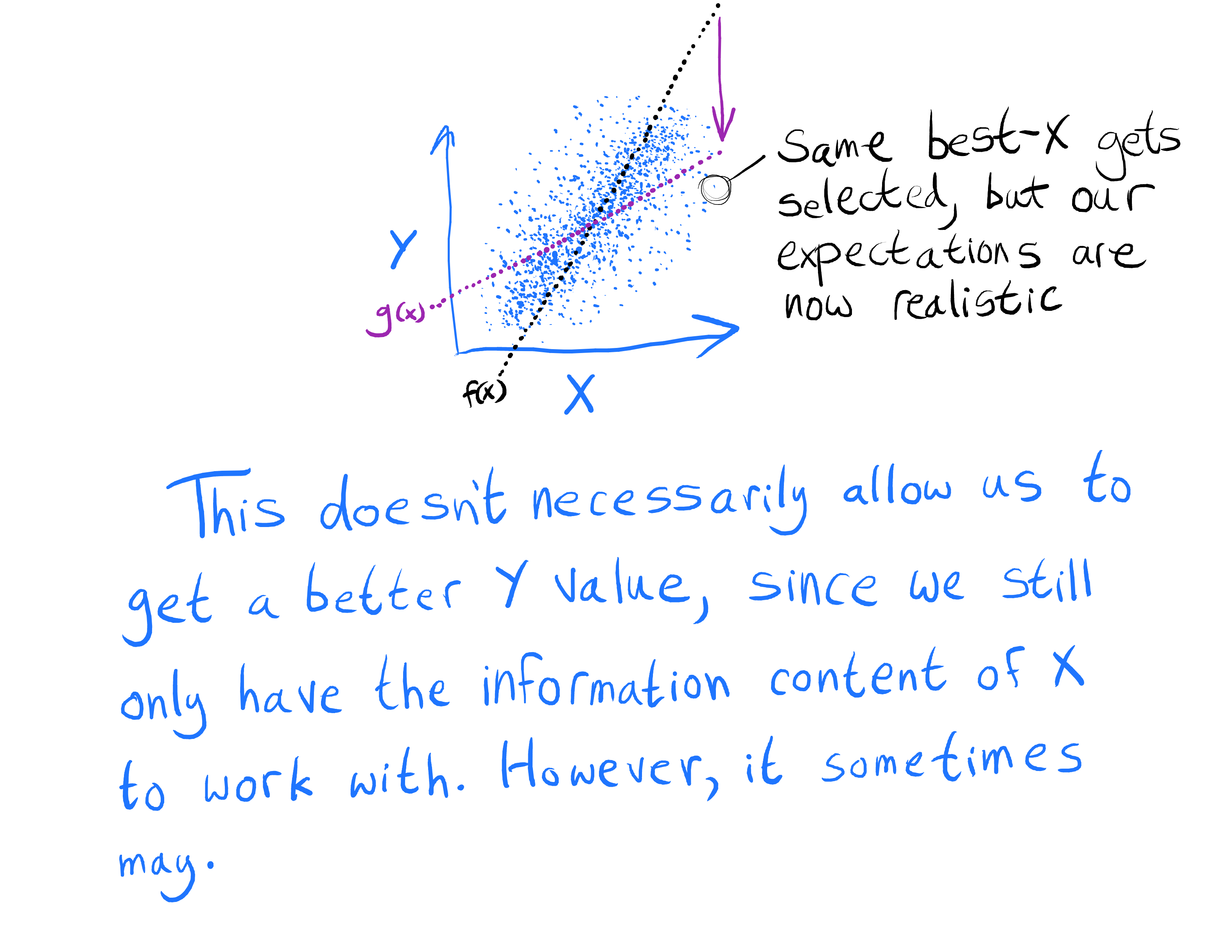

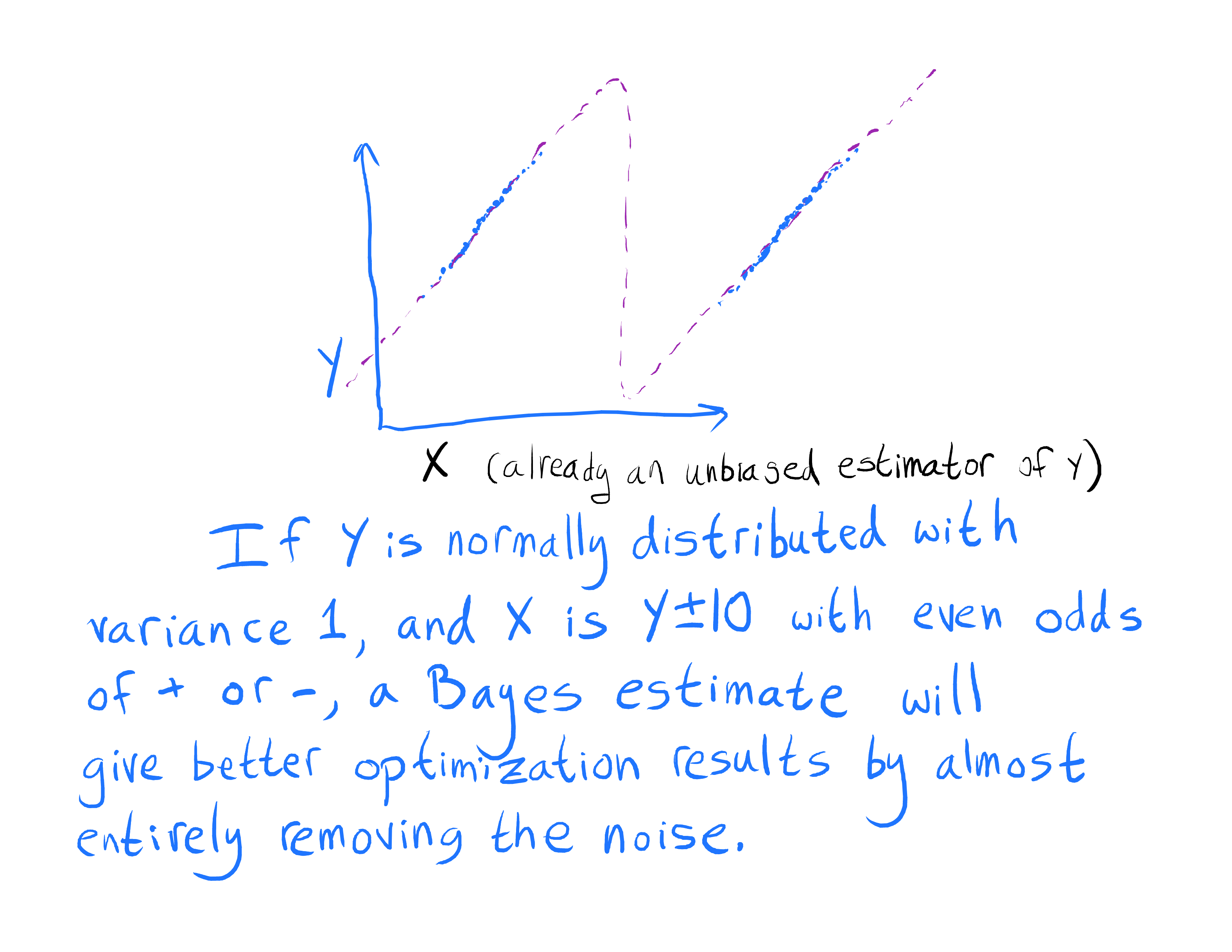

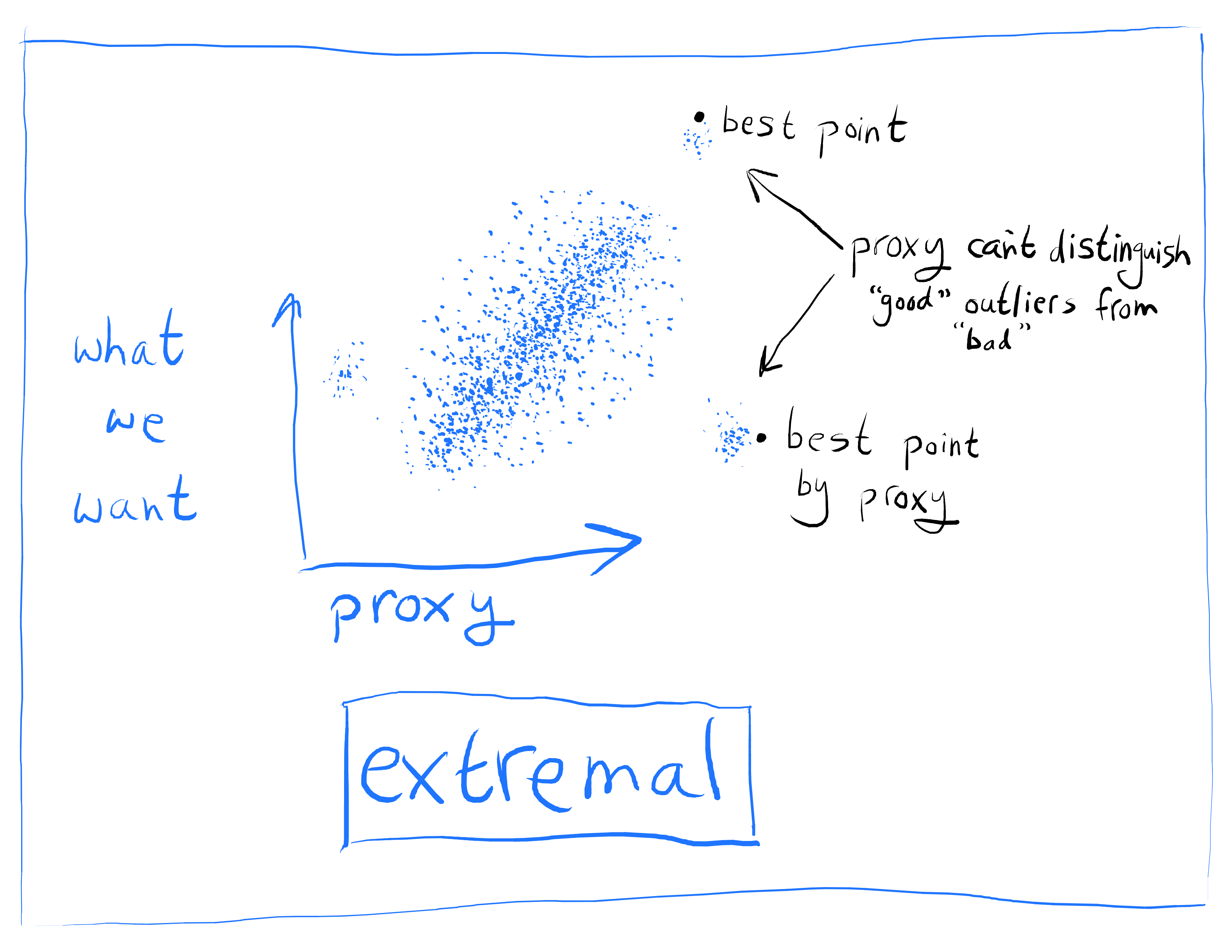

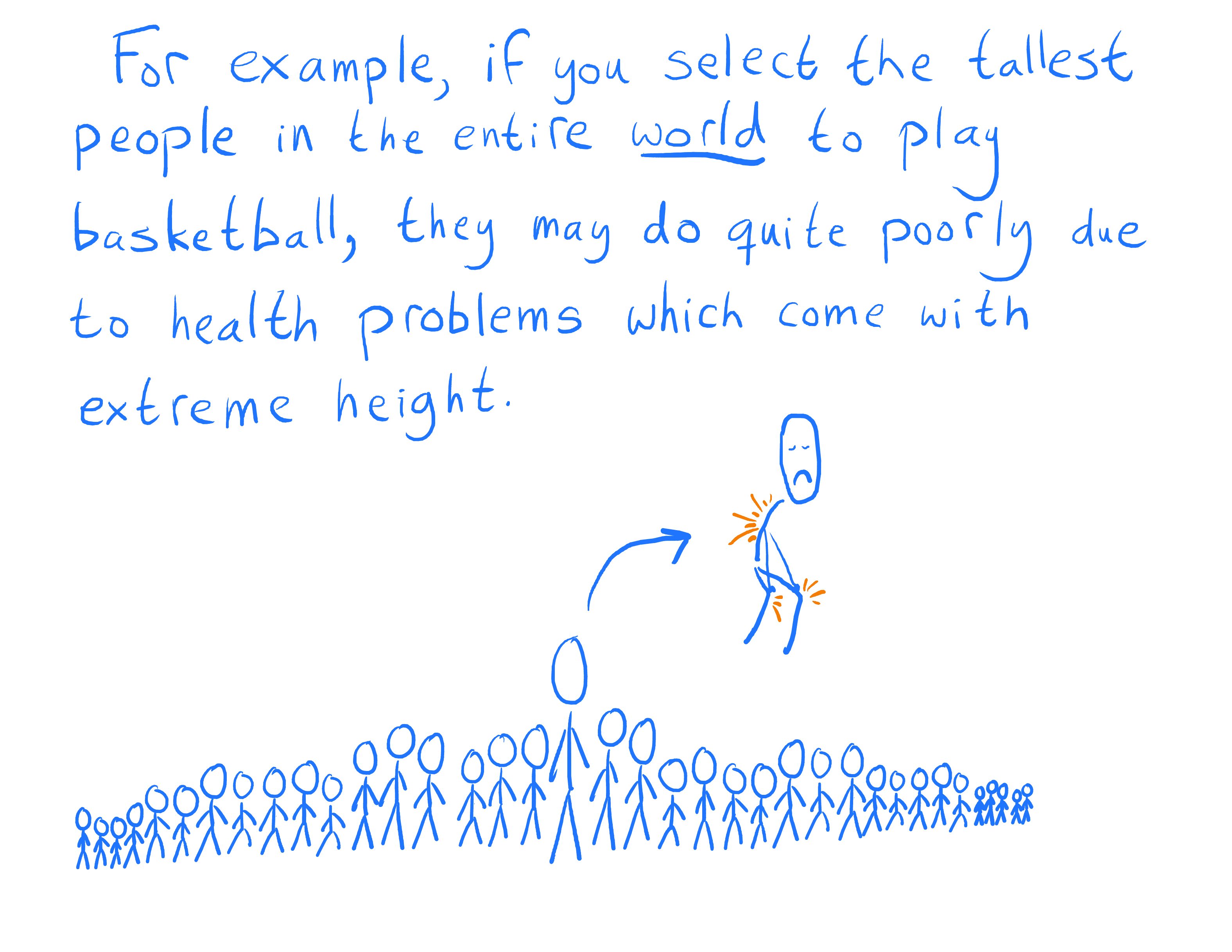

Update: If I understand correctly, the unbiased estimator would be the estimate you should make if you drew a new point with a given x-value, while the Bayes estimator takes into account the fact that you don't just have a single point, but that you also know its ranking within a distribution (in particular, that outliers from a distribution are likely to have a higher error term).

↑ comment by rk · 2018-11-20T17:37:31.275Z

I think you've got a lot of the core idea. But it's not important that we know that the data point has some ranking within a distribution. Let me try and explain the ideas as I understand them.

The unbiased estimator is unbiased in the sense that for any actual value of the thing being estimated, the expected value of the estimation across the possible data is the true value.

To be concrete, suppose I tell you that I will generate a true value, and then add either +1 or -1 to it with equal probability. An unbiased estimator is just to report back the value you get:

E[estimate(x)] = estimate(x + 1)/2 + estimate(x - 1)/2

If the estimate function is the identity, we have ((x + 1) + (x - 1))/2 = x. So it's unbiased.
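A minimal sketch of that check in Python, assuming exactly the noise model described above (the reported value is the true value plus +1 or -1, each with probability 1/2). The names `expected_estimate`, `identity` and `halved` are illustrative and not from the comment; `halved` is a hypothetical shrinking estimator included only for contrast. `Fraction` keeps the arithmetic exact, so the equality checks are meaningful.

```python
from fractions import Fraction
from typing import Callable

# Assumed noise model from the comment: the reported value is x + 1 or x - 1,
# each with probability 1/2.
NOISE = [(Fraction(1), Fraction(1, 2)), (Fraction(-1), Fraction(1, 2))]


def expected_estimate(estimate: Callable[[Fraction], Fraction], x: Fraction) -> Fraction:
    """E[estimate(reported value)] when the true value is x."""
    return sum((p * estimate(x + e) for e, p in NOISE), Fraction(0))


def identity(y: Fraction) -> Fraction:
    """Report back the observed value (the estimator from the comment)."""
    return y


def halved(y: Fraction) -> Fraction:
    """Hypothetical shrinking estimator, included only for contrast."""
    return y / 2


# For each true value x: x, expected identity estimate, expected halved estimate.
for x in (Fraction(-3), Fraction(0), Fraction(23000)):
    print(x, expected_estimate(identity, x), expected_estimate(halved, x))
```

For every true value x the identity estimator's expectation comes back as x, while `halved` returns x/2, so it is biased at every x except 0.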

Now suppose I tell you that I will generate the true value by drawing from a normal distribution with mean 0 and variance 1, and then I tell you 23,000 as the reported value. Via Bayes, you can see that it is more likely that the true value is 22,999 than 23,001. But the unbiased estimator blithely reports 23,000.
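The Bayes step can be written out. Using θ for the true value, y for the reported value and φ for the standard normal density (notation assumed here, not in the original comment), the only candidates for θ given y are y - 1 and y + 1. The ±1 noise gives each the same likelihood of 1/2, so they are weighted by the prior alone, and the posterior mean follows:

$$
\frac{P(\theta = y-1 \mid y)}{P(\theta = y+1 \mid y)} = \frac{\varphi(y-1)}{\varphi(y+1)} = \exp\!\left(\frac{(y+1)^2 - (y-1)^2}{2}\right) = e^{2y}
$$

$$
\mathbb{E}[\theta \mid y] = (y-1)\,\frac{e^{2y}}{1+e^{2y}} + (y+1)\,\frac{1}{1+e^{2y}} = y - \tanh(y)
$$

At y = 23,000, tanh(y) is indistinguishable from 1, so the posterior mean is essentially 22,999, while the unbiased (identity) estimator reports 23,000.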

So, though the asymmetry is doing some work here (the further we move above 0, the more likely that +1 rather than -1 is doing some of the work), it could still be that 23,000 is the smallest of the values I sampled.

↑ comment by Chris_Leong · 2018-11-20T19:12:40.337Z

"So, though the asymmetry is doing some work here (the further we move above 0, the more likely that +1 rather than -1 is doing some of the work), it could still be that 23,000 is the smallest of the values I sampled" - That's very interesting.

So I looked at the definition on Wikipedia and it says: "An estimator is said to be unbiased if its bias is equal to zero for all values of parameter θ."

This greatly clarifies the situation for me as I had thought that the bias was a global aggregate, rather than a value calculated for each value of the parameter being optimised (say basketball ability). Bayesian estimates are only unbiased in the former, weaker sense. For normal distributions, the Bayesian estimate is happy to underestimate the extremeness of values in order to narrow the probability distribution of predictions for less extreme values. In other words, it is accepting a level of bias in order to narrow the range.
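To make the per-parameter versus aggregate distinction concrete, here is a rough numerical sketch using rk's toy model (true value θ drawn from N(0, 1), reported value θ + 1 or θ - 1 with equal probability). It assumes the Bayes estimator is the posterior mean, which for this model simplifies to y - tanh(y); the function names and the Riemann-sum integration are illustrative choices, not anything from the thread. It prints the bias at several fixed values of θ, then the bias and mean squared error averaged over the prior.

```python
import math
from typing import Callable

Estimator = Callable[[float], float]

# Assumed model: theta ~ N(0, 1); reported y = theta + 1 or theta - 1, each w.p. 1/2.


def bayes_estimate(y: float) -> float:
    """Posterior mean E[theta | y]; for this model it reduces to y - tanh(y)."""
    return y - math.tanh(y)


def identity_estimate(y: float) -> float:
    """The unbiased estimator: report back the observed value."""
    return y


def conditional_bias(estimator: Estimator, theta: float) -> float:
    """E[estimator(y) | theta] - theta: the bias at one fixed parameter value."""
    return 0.5 * (estimator(theta + 1) + estimator(theta - 1)) - theta


def conditional_mse(estimator: Estimator, theta: float) -> float:
    """E[(estimator(y) - theta)^2 | theta]."""
    return 0.5 * ((estimator(theta + 1) - theta) ** 2
                  + (estimator(theta - 1) - theta) ** 2)


def prior_average(f: Callable[[float], float],
                  lo: float = -10.0, hi: float = 10.0, n: int = 20001) -> float:
    """Average f(theta) over the N(0, 1) prior using a simple Riemann sum."""
    step = (hi - lo) / (n - 1)
    total = 0.0
    for i in range(n):
        theta = lo + i * step
        density = math.exp(-theta * theta / 2) / math.sqrt(2 * math.pi)
        total += f(theta) * density * step
    return total


for theta in (0.0, 1.0, 2.0, 5.0):
    print(f"theta={theta}: identity bias={conditional_bias(identity_estimate, theta):+.4f}, "
          f"Bayes bias={conditional_bias(bayes_estimate, theta):+.4f}")

print("prior-averaged bias, Bayes:", prior_average(lambda t: conditional_bias(bayes_estimate, t)))
print("prior-averaged MSE, identity:", prior_average(lambda t: conditional_mse(identity_estimate, t)))
print("prior-averaged MSE, Bayes:", prior_average(lambda t: conditional_mse(bayes_estimate, t)))
```

In this model the Bayes estimate is pulled toward 0 at every fixed θ other than 0 (by nearly 1 once θ is a few standard deviations out), yet its bias averages to zero over the prior, and its prior-averaged squared error is below the identity estimator's value of exactly 1. That is one precise reading of "accepting a level of bias in order to narrow the range".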

comment by avturchin · 2018-11-05T10:31:44.700Z

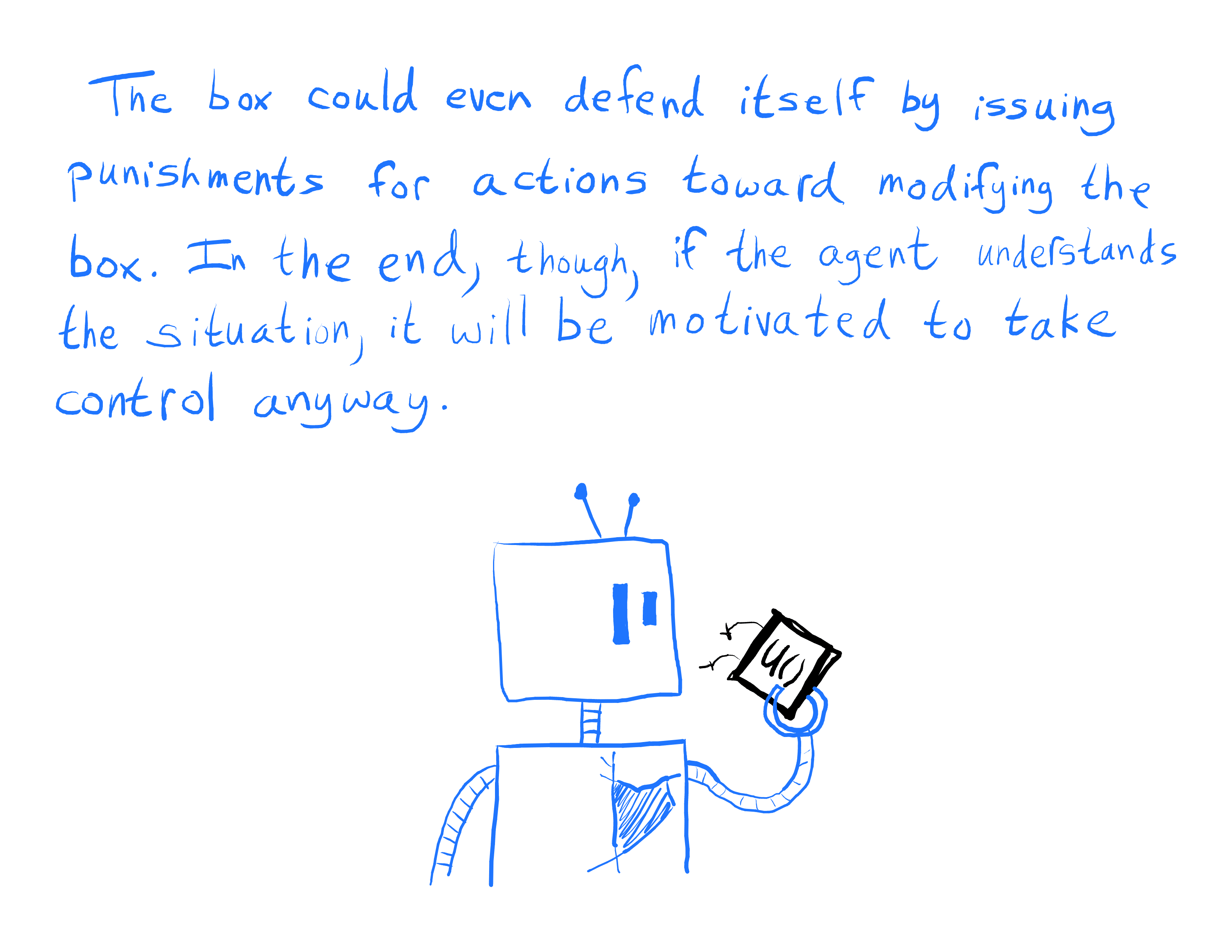

Reading this, I had an idea about using the AI’s capacity for reward hacking to limit its own power. Maybe this has already been discussed somewhere?

In this setup, the AI’s reward function is protected by a task of some known complexity (e.g. cryptography or a need to create nanotechnology). If the AI increases its intelligence above a certain level, it will be able to hack its reward function, and then the AI will stop.

This gives us a chance to create a fuse against uncontrollably self-improving AI: if it becomes too clever, it will self-terminate.

Also, the AI may do useful work while trying to hack its own reward function, like creating nanotech or solving certain types of equations or math problems (especially in cryptography).