Database of existential risk estimates

post by MichaelA · 2020-04-20T01:08:39.496Z · 3 comments

This post was written for Convergence Analysis, though the opinions expressed are my own. Cross-posted from the Effective Altruism Forum.

This post:

- Provides a spreadsheet you can use for making your own estimates of existential risks (or of similarly “extreme” outcomes)

- Announces a database of estimates of existential risk (or similarly extreme outcomes), which I hope can be collaboratively expanded and updated

- Discusses why I think this database may be valuable

- Discusses some pros and cons of using or making such estimates

Key links relevant to existential risk estimates

- Here’s a spreadsheet listing some key existential-risk-related things people have estimated, without estimates in it.

- The link makes a copy of the spreadsheet so that you can add your own estimates to it.

- I mention this first so that you have the option of providing somewhat independent estimates, before looking at (more?) estimates from others.

- Some discussion of good techniques for forecasting, which may or may not apply to such long-range and extreme-outcome forecasts, can be found here, here, here, here, and here.

- Here’s a database of all estimates of existential risks, or similarly extreme outcomes (e.g., reduction in the expected value of the long-term future), which I’m aware of. I intend to add to it over time, and hope readers suggest additions as well.

- The appendix of this article by Beard et al. is where I got many of the estimates from, and it provides more detail on the context and methodologies of those estimates than I do in the database.

- Beard et al. also critically discuss the various methodologies by which existential-risk-relevant estimates have been or could be derived.

- In this post, I discuss some pros and cons of using or stating explicit probabilities in general.

Why this database may be valuable

I’d bet that the majority of people reading this sentence have, at some point, seen one or more estimates of extinction risk by the year 2100, from one particular source.[1] These estimates may in fact have played a role in major decisions of yours; I believe they played a role in my own career transition. That source is an informal survey of global catastrophic risk researchers, from 2008.

As Millett and Snyder-Beattie note:

The disadvantage [of that survey] is that the estimates were likely highly subjective and unreliable, especially as the survey did not account for response bias, and the respondents were not calibrated beforehand.

Additionally, in any case, it was just one informal survey, and is now 12 years old.[2] So why is it so frequently referenced? And why has it plausibly (in my view) influenced so many people?

I also expect that essentially the same pattern is likely to repeat, perhaps for another dozen years, but now with Toby Ord’s recent existential risk estimates. Why do I expect this?

I originally thought the answer to each of these questions was essentially that we have so little else to go on, and the topic is so important. It seemed to me there had just been so few attempts to actually estimate existential risks or similarly extreme outcomes (e.g., extinction risk, reduction in the expected value of the long-term future).

I’d argue that this causes two problems:

- We have less information to inform decisions such as whether to prioritise longtermism over other cause areas, whether to prioritise existential risk reduction over other longtermist strategies, and especially which existential risks to be most concerned about.

- We may anchor too strongly on the very sparse set of estimates we are aware of, and get caught in information cascades.

Indeed, it seems to me probably not ideal that people concerned about existential risks (myself included) have made so many strategic decisions without first collecting and critiquing a wide array of such estimates.

This second issue seems all the more concerning given the many reasons we have for skepticism about estimates on these matters, such as:

- The lack of directly relevant data (e.g., prior human extinction events) to inform the estimates

- Estimates often being little more than quick guesses

- It often being hard to interpret what is actually being estimated

- The possibility of response bias

- The estimators typically being people who are especially concerned about existential risks, and thus arguably being essentially “selected for” above-average pessimism

- A general lack of evidence about the trustworthiness of long-range forecasts, and specific evidence of expert forecasts often being unreliable even for forecasts of events just a few years out (e.g., from Tetlock)

Thus, we may be anchoring on a small handful of estimates which could in fact warrant little trust.

But what else are we to do? One option would be for each of us to form our own, independent estimates. This may be ideal, which is why I opened by linking to a spreadsheet you can copy and use for that.

But it seems plausible that “expert” estimates of these long-range, extreme outcomes would correlate better with what the future actually has in store for us than the estimates most of us could come up with, at least without investing a great deal of time into it. (Though I’m certainly not sure of that, and I don’t know of any directly relevant evidence. Plus, Tetlock’s work may provide some reason to doubt the idea that “experts” can be assumed to make better forecasts in general.)

And in any case, most of us have probably already been exposed to a handful of relevant estimates, making it harder to generate “independent” estimates of our own.

So I decided to collect all quantitative estimates I could find of existential risks or other similarly “extreme outcomes”, as this might:

- Be useful for decision-making, because I do think these estimates are likely better than nothing, or than just phrases like “plausible”, and plausibly better by a very large margin.

- Reduce a relatively “blind” reliance on any particular source of estimates, and perhaps even make it easier for people to produce effectively somewhat independent estimates. My hope is that providing a variety of different estimates will throw anchors in many directions (so to speak) in a way that somewhat “cancels out”, and that it’ll also highlight the occasionally major discrepancies between different estimates. I do not want you to take these estimates as gospel.
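To make the “cancelling out” idea concrete, here is a minimal sketch of how one might summarise several estimates of the same question rather than anchoring on any single one. It is only an illustration under stated assumptions: the numbers are hypothetical placeholders (not taken from the database), the function names are my own, and pooling via the geometric mean of odds is just one of several possible aggregation rules, not a method the database itself uses.

```python
import math
from statistics import median


def geometric_mean_of_odds(probabilities):
    """Pool probabilities by averaging their log-odds, then converting back.

    This is one possible aggregation rule (an assumption of this sketch).
    Every probability must be strictly between 0 and 1.
    """
    if not probabilities:
        raise ValueError("need at least one probability")
    if any(p <= 0 or p >= 1 for p in probabilities):
        raise ValueError("probabilities must be strictly between 0 and 1")
    log_odds = [math.log(p / (1 - p)) for p in probabilities]
    mean_log_odds = sum(log_odds) / len(log_odds)
    odds = math.exp(mean_log_odds)
    return odds / (1 + odds)


def summarize(probabilities):
    """Return simple summary statistics that highlight both the centre
    and the disagreement between estimates."""
    return {
        "min": min(probabilities),
        "max": max(probabilities),
        "median": median(probabilities),
        "pooled": geometric_mean_of_odds(probabilities),
        # How many times larger the highest estimate is than the lowest.
        "spread_ratio": max(probabilities) / min(probabilities),
    }


# Hypothetical placeholder values, NOT real estimates from the database.
example_estimates = [0.001, 0.01, 0.1]
summary = summarize(example_estimates)
print(summary)
```

The `spread_ratio` field is there to surface the “occasionally major discrepancies” mentioned above: a large ratio is a signal to look at what each source was actually estimating before trusting any pooled figure.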

Above, I said “I originally thought the answer to each of these questions was essentially that we have so little else to go on, and the topic is so important.” I still think that’s not far off the truth. But since starting this database, I discovered Beard et al.’s appendix, which has a variety of other relevant estimates. And I wondered whether that meant this database wouldn’t be useful.

But then I realised that many or most of the relevant estimates from that appendix weren’t mentioned in Ord’s book. And it’s still the case that I’ve seen the 2008 survey estimates many times, and never seen most of the estimates in Beard et al.’s appendix (see this post for an indication that I’m not alone in that). And Beard et al. don’t mention Ord’s estimates (perhaps because their article was released a couple of months before Ord’s book was), nor a variety of other estimates I found (perhaps because they’re from “informal sources” like 80,000 Hours articles).

So I still think collecting all relevant estimates in one place may be useful. And another benefit is that this database can be updated over time, including via suggestions from readers, so it can hopefully become comprehensive and stay up to date.

So please feel free to:

- Make your own estimates

- Take a look through the database as and when you’d find that useful

- Comment in there regarding estimates or details that could be added, corrections if I made any mistakes, etc.

- Comment here regarding whether my rationale for this database makes sense, regarding pros, cons, and best practice for existential risk estimates in general, etc.

This post was sort-of inspired by one sentence in a comment by MichaelStJules, so my thanks to him for that. My thanks also to David Kristoffersson for helpful feedback and suggestions.

See also my thoughts on Toby Ord’s existential risk estimates, which are featured in the database.

[1] For example, it’s referred to in these three 80,000 Hours articles.

[2] Additionally, Beard et al.’s appendix says the survey had only 13 participants. The original report of the survey’s results doesn’t mention the number of participants.

3 comments


comment by Amandango · 2020-09-07T19:19:19.408Z

Thank you for putting this spreadsheet database together! This seemed like a non-trivial amount of work, and it's pretty useful to have it all in one place. Seeing this spreadsheet made me want:

- More consistent questions such that all these people can make comparable predictions

- Ability to search and aggregate across these so we can see what the general consensus is on various questions

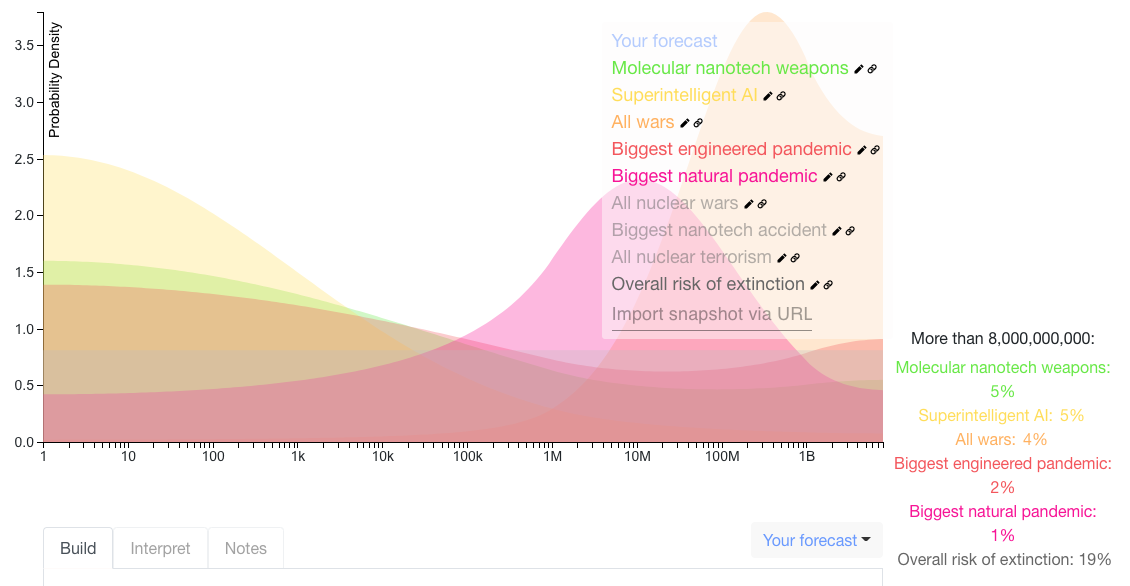

I thought the 2008 GCR questions were really interesting, and plotted the median estimates here. I was surprised by / interested in:

- How many more deaths were expected from wars than other disaster scenarios

- For superintelligent AI, most of the probability mass was < 1M deaths, but there was a high probability (5%) on extinction

- A natural pandemic was seen as more likely to cause > 1M deaths than an engineered pandemic (although less likely to cause > 1B deaths)

FYI, this is on a log scale. I plotted extinction as > 8B deaths.
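As a rough illustration of what the log scale does to these thresholds (a sketch only, not the code used to make the plot; the dictionary labels and the helper name are assumptions made for this example):

```python
import math

# Death-count thresholds mentioned in this comment. Extinction is placed at
# 8 billion deaths, following the "> 8B deaths" convention described above.
thresholds = {
    "> 1M deaths": 1e6,
    "> 1B deaths": 1e9,
    "extinction (> 8B deaths)": 8e9,
}


def log_position(deaths):
    """Position of a death count on a base-10 logarithmic axis."""
    return math.log10(deaths)


positions = {label: log_position(value) for label, value in thresholds.items()}

for label, position in positions.items():
    print(f"{label}: {position:.2f}")
```

On this axis the step from 1M to 1B deaths spans three units, while the step from 1B deaths to extinction spans less than one, so the extinction point sits visually close to the 1B point even though it is a qualitatively different outcome.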

(posted a similar comment on the EA forum link, since it seems like people are engaging more with this post there)

comment by Maxime Riché (maxime-riche) · 2023-03-22T22:09:15.587Z

Do you also have estimates of the fraction of resources in our light cone that we expect to be used to create optimised good stuff?

comment by MichaelA (reply to Maxime Riché) · 2023-03-25T09:28:15.428Z

I'd consider those to be "in-scope" for the database, so the database would include any such estimates that I was aware of and that weren't too private to share in the database.

If I recall correctly, some estimates in the database are decently related to that, e.g. are framed as "What % of the total possible moral value of the future will be realized?" or "What % of the total possible moral value of the future is lost in expectation due to AI risk?"

But I haven't seen many estimates of that type, and I don't remember seeing any that were explicitly framed as "What fraction of the accessible universe's resources will be used in a way optimized for 'the correct moral theory'?"

If you know of some, feel free to comment in the database to suggest they be added :)